CIS 6930 Chip Multiprocessor Parallel Architecture and Programming

- Slides: 17

CIS 6930: Chip Multiprocessor: Parallel Architecture and Programming Fall 2010 Jih-Kwon Peir Computer Information Science Engineering University of Florida 1

Chapter 6, Supplement 2: Threading Hardware 2

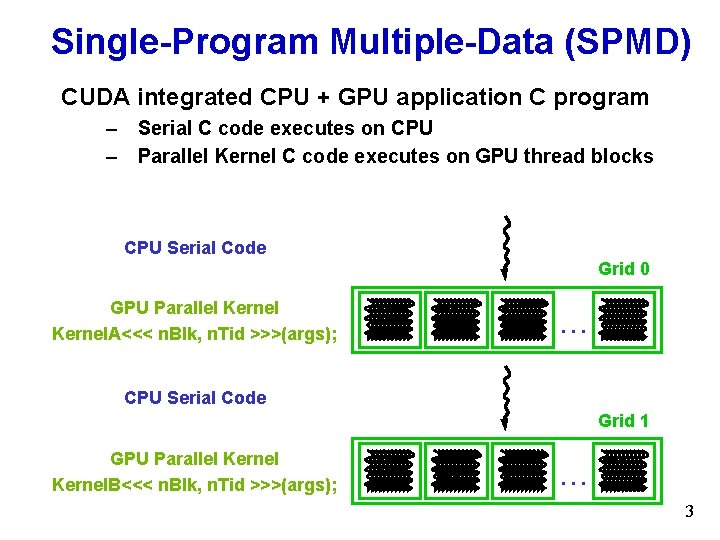

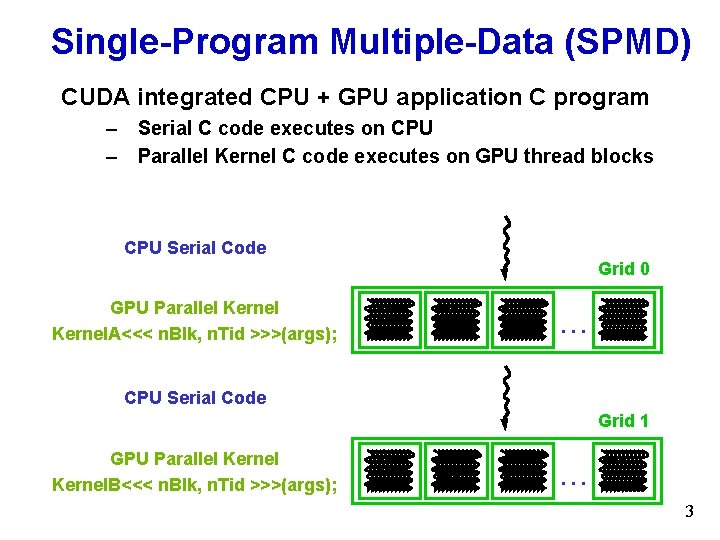

Single-Program Multiple-Data (SPMD) CUDA integrated CPU + GPU application C program – Serial C code executes on CPU – Parallel Kernel C code executes on GPU thread blocks CPU Serial Code Grid 0 GPU Parallel Kernel. A<<< n. Blk, n. Tid >>>(args); . . . CPU Serial Code Grid 1 GPU Parallel Kernel. B<<< n. Blk, n. Tid >>>(args); . . . 3

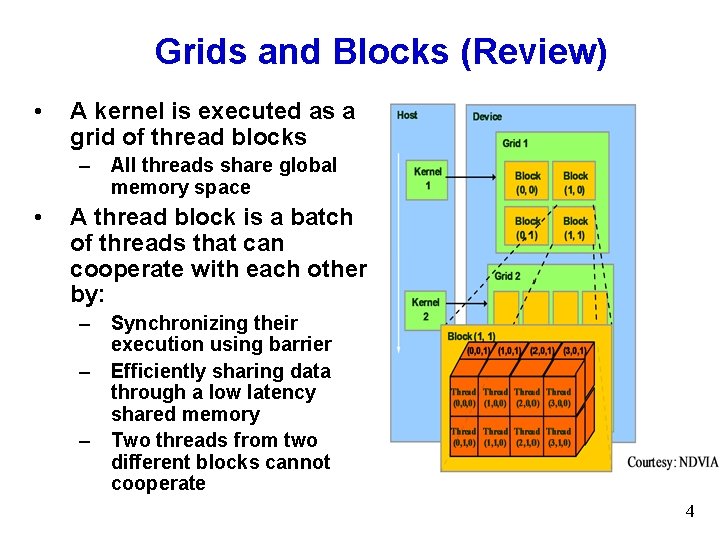

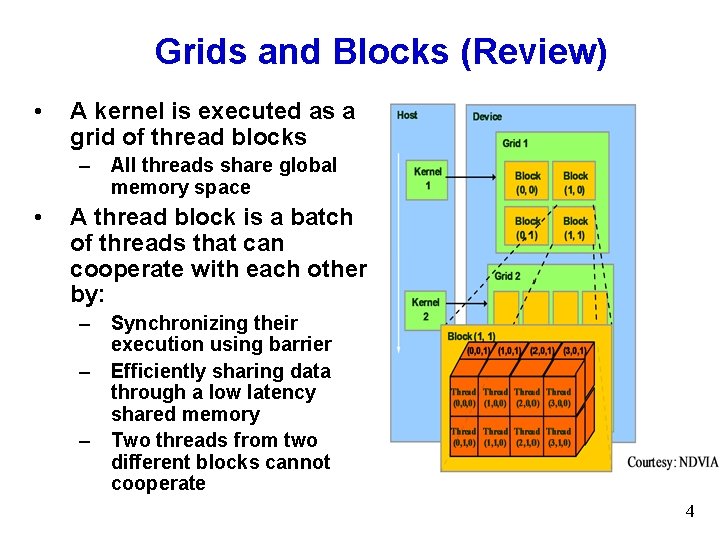

Grids and Blocks (Review) • A kernel is executed as a grid of thread blocks – All threads share global memory space • A thread block is a batch of threads that can cooperate with each other by: – Synchronizing their execution using barrier – Efficiently sharing data through a low latency shared memory – Two threads from two different blocks cannot cooperate 4

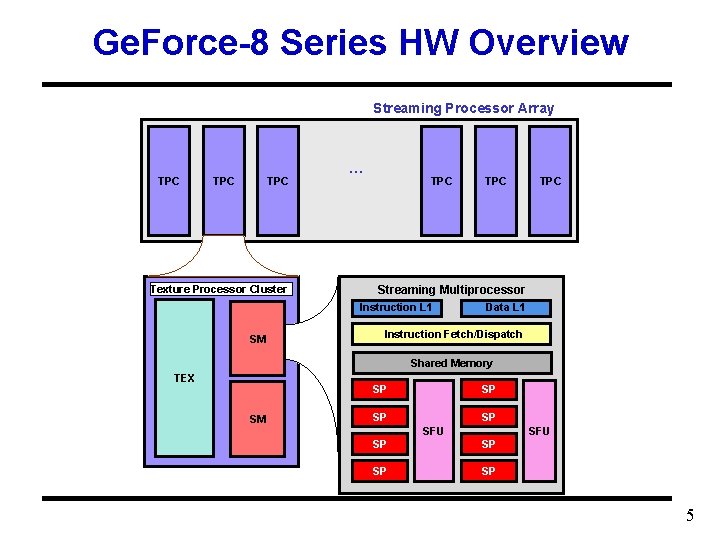

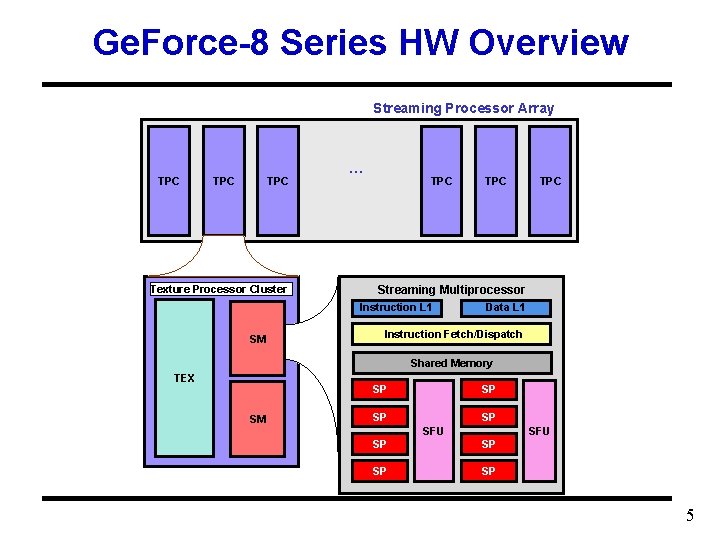

Ge. Force-8 Series HW Overview Streaming Processor Array TPC TPC Texture Processor Cluster … TPC Streaming Multiprocessor Instruction L 1 SM TPC Data L 1 Instruction Fetch/Dispatch Shared Memory TEX SP SM SP SP SP SFU SP SP 5

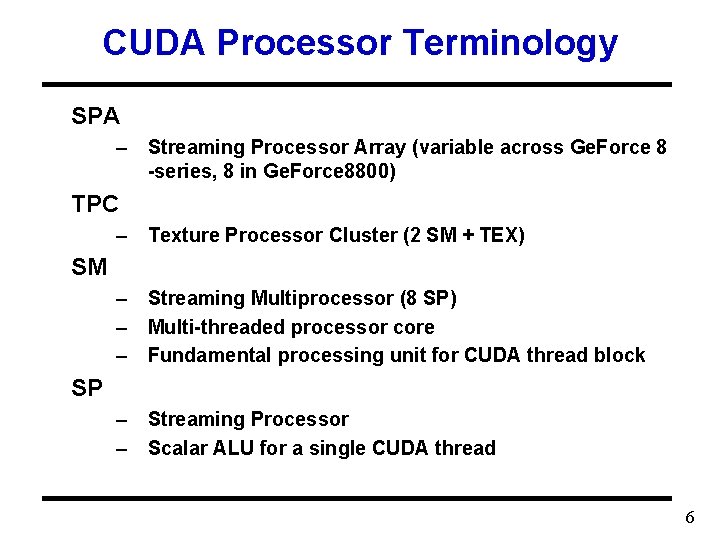

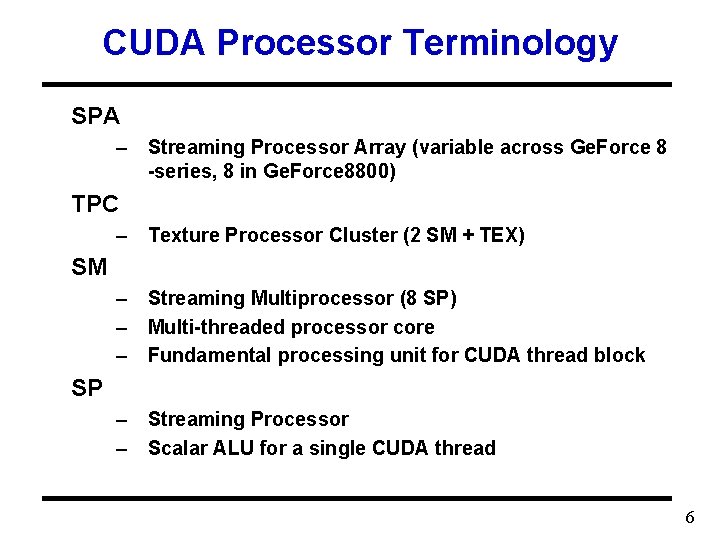

CUDA Processor Terminology SPA – Streaming Processor Array (variable across Ge. Force 8 -series, 8 in Ge. Force 8800) TPC – Texture Processor Cluster (2 SM + TEX) SM – Streaming Multiprocessor (8 SP) – Multi-threaded processor core – Fundamental processing unit for CUDA thread block SP – Streaming Processor – Scalar ALU for a single CUDA thread 6

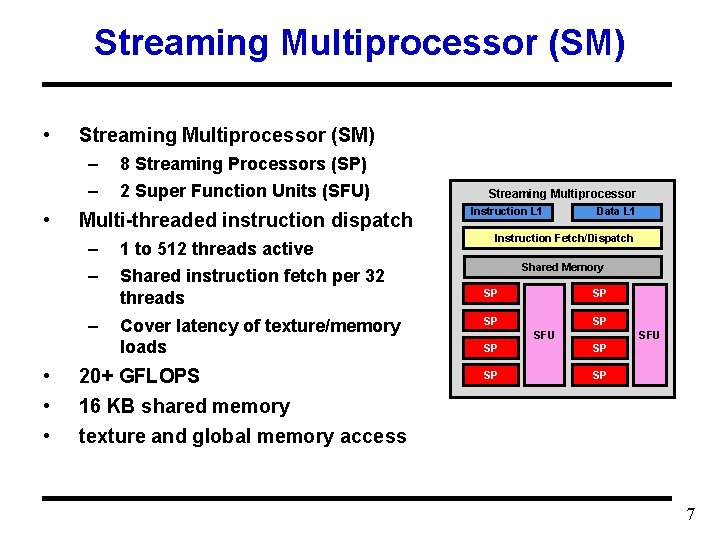

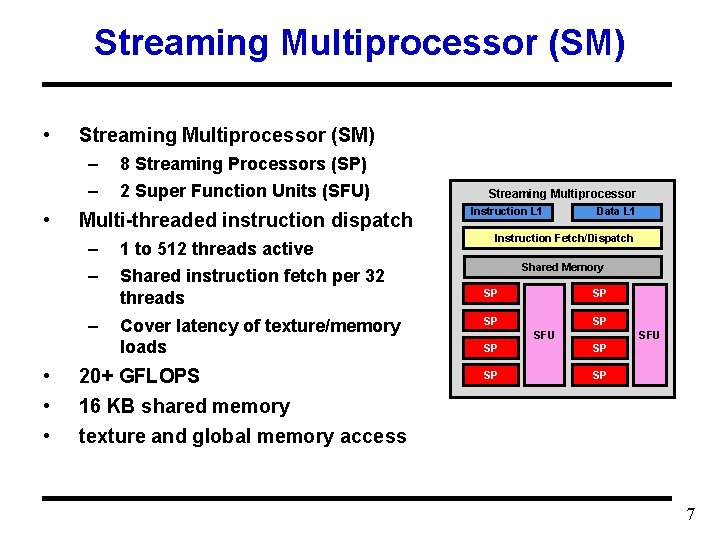

Streaming Multiprocessor (SM) • Streaming Multiprocessor (SM) – – • 2 Super Function Units (SFU) Multi-threaded instruction dispatch – – – • • • 8 Streaming Processors (SP) 1 to 512 threads active Shared instruction fetch per 32 threads Cover latency of texture/memory loads 20+ GFLOPS 16 KB shared memory texture and global memory access Streaming Multiprocessor Instruction L 1 Data L 1 Instruction Fetch/Dispatch Shared Memory SP SP SFU SP SP 7

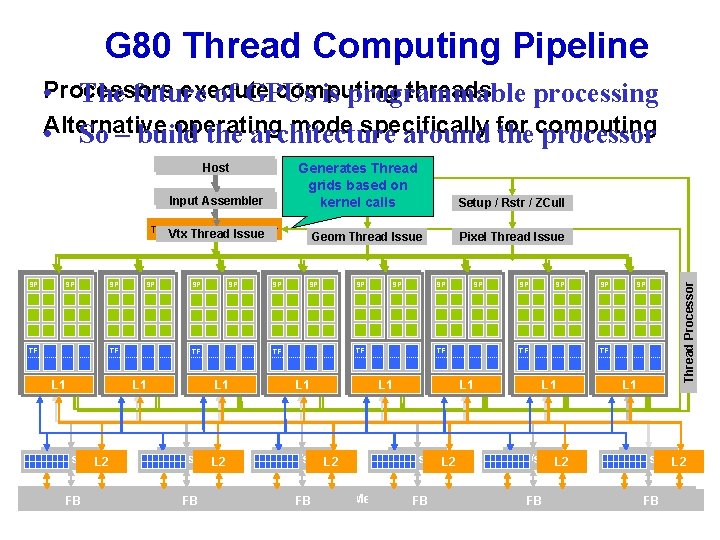

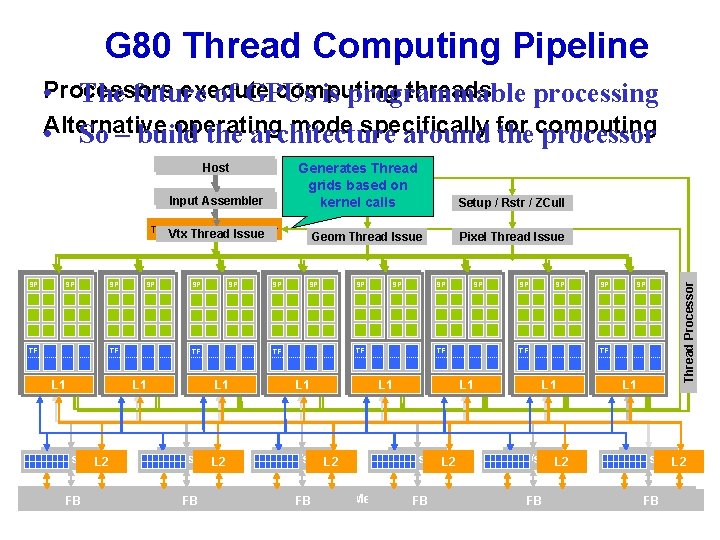

G 80 Thread Computing Pipeline execute computing threads • Processors The future of GPUs is programmable processing operating mode specifically • Alternative So – build the architecture around for thecomputing processor Generates Thread grids based on kernel calls Input Assembler Thread Execution Manager Vtx Thread Issue SP SP SP Setup / Rstr / ZCull Geom Thread Issue SP SP Pixel Thread Issue SP SP SP Parallel Data TF Cache Parallel Data TF Cache Texture L 1 Texture L 1 Texture L 1 Load/store L 2 Load/store. L 2 FB FB Load/store L 2 FB Global Memory FB Thread Processor Host Load/store L 2 FB FB

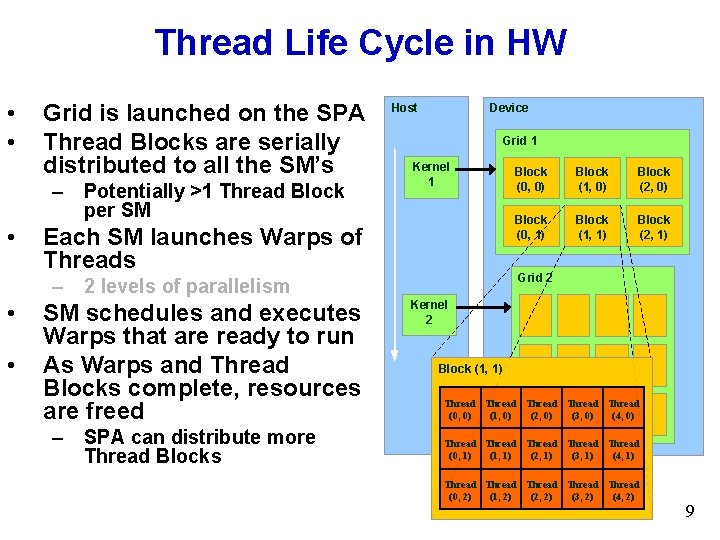

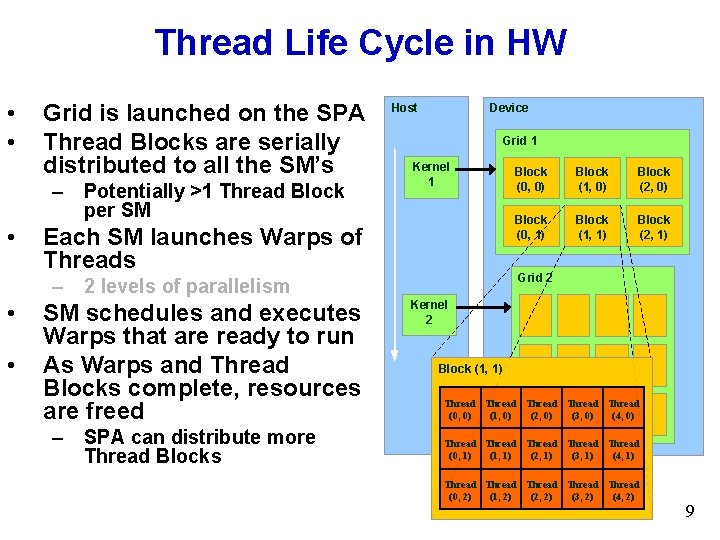

Thread Life Cycle in HW • • Grid is launched on the SPA Thread Blocks are serially distributed to all the SM’s – Potentially >1 Thread Block per SM • Host Device Grid 1 Kernel 1 Each SM launches Warps of Threads • SM schedules and executes Warps that are ready to run As Warps and Thread Blocks complete, resources are freed – SPA can distribute more Thread Blocks Block (1, 0) Block (2, 0) Block (0, 1) Block (1, 1) Block (2, 1) Grid 2 – 2 levels of parallelism • Block (0, 0) Kernel 2 Block (1, 1) Thread Thread (0, 0) (1, 0) (2, 0) (3, 0) (4, 0) Thread Thread (0, 1) (1, 1) (2, 1) (3, 1) (4, 1) Thread Thread (0, 2) (1, 2) (2, 2) (3, 2) (4, 2) 9

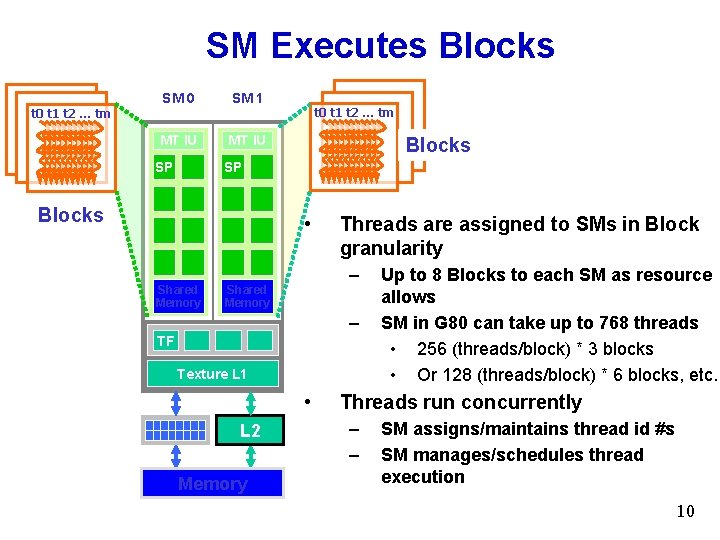

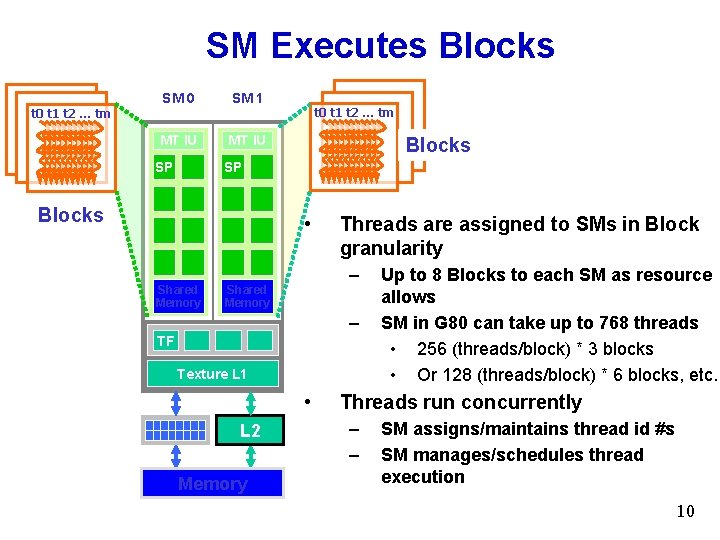

SM Executes Blocks t 0 t 1 t 2 … tm SM 0 SM 1 MT IU SP t 0 t 1 t 2 … tm Blocks SP Blocks • Shared Memory Threads are assigned to SMs in Block granularity – Shared Memory – TF Texture L 1 • L 2 Memory Up to 8 Blocks to each SM as resource allows SM in G 80 can take up to 768 threads • 256 (threads/block) * 3 blocks • Or 128 (threads/block) * 6 blocks, etc. Threads run concurrently – – SM assigns/maintains thread id #s SM manages/schedules thread execution 10

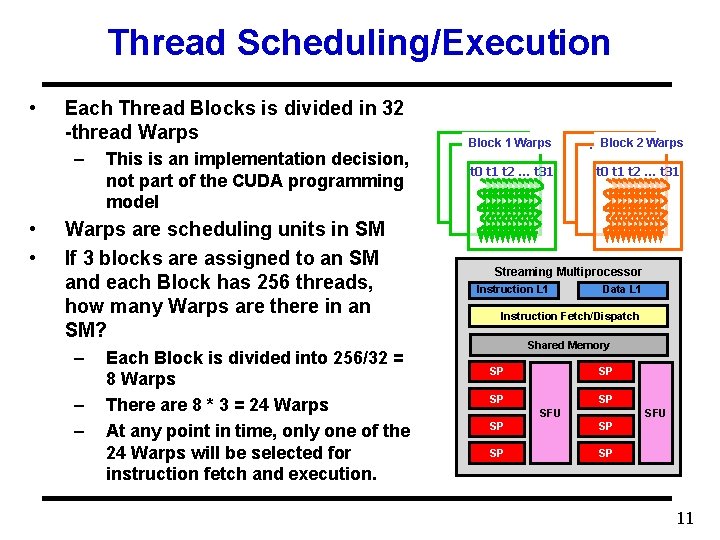

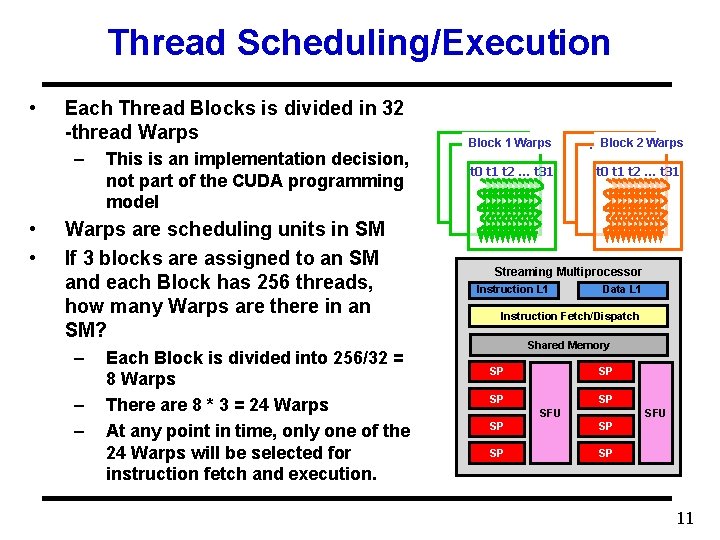

Thread Scheduling/Execution • Each Thread Blocks is divided in 32 -thread Warps – • • This is an implementation decision, not part of the CUDA programming model Warps are scheduling units in SM If 3 blocks are assigned to an SM and each Block has 256 threads, how many Warps are there in an SM? – – – Each Block is divided into 256/32 = 8 Warps There are 8 * 3 = 24 Warps At any point in time, only one of the 24 Warps will be selected for instruction fetch and execution. Block 1 Warps … t 0 t 1 t 2 … t 31 …Block 2 Warps t 0 t 1 t 2 … t 31 … … Streaming Multiprocessor Instruction L 1 Data L 1 Instruction Fetch/Dispatch Shared Memory SP SP SFU SP SP 11

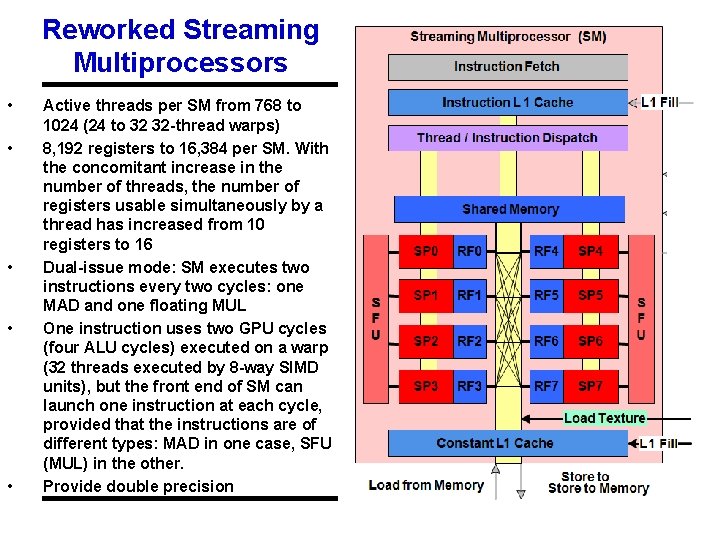

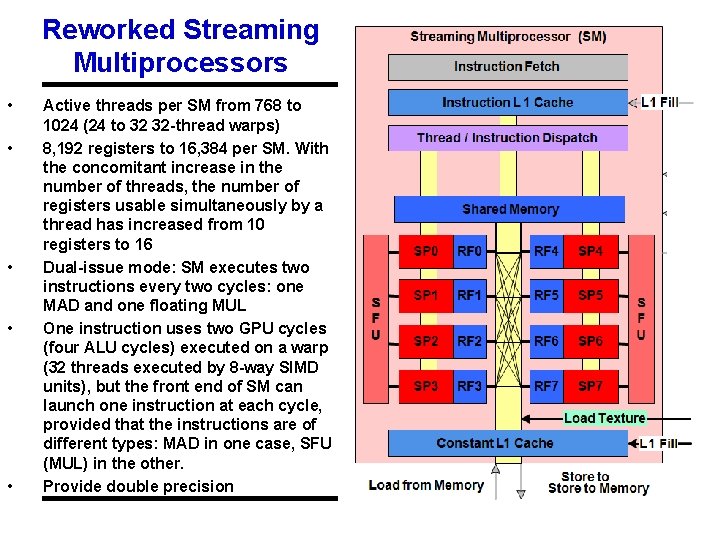

Reworked Streaming Multiprocessors • • • Active threads per SM from 768 to 1024 (24 to 32 32 -thread warps) 8, 192 registers to 16, 384 per SM. With the concomitant increase in the number of threads, the number of registers usable simultaneously by a thread has increased from 10 registers to 16 Dual-issue mode: SM executes two instructions every two cycles: one MAD and one floating MUL One instruction uses two GPU cycles (four ALU cycles) executed on a warp (32 threads executed by 8 -way SIMD units), but the front end of SM can launch one instruction at each cycle, provided that the instructions are of different types: MAD in one case, SFU (MUL) in the other. Provide double precision

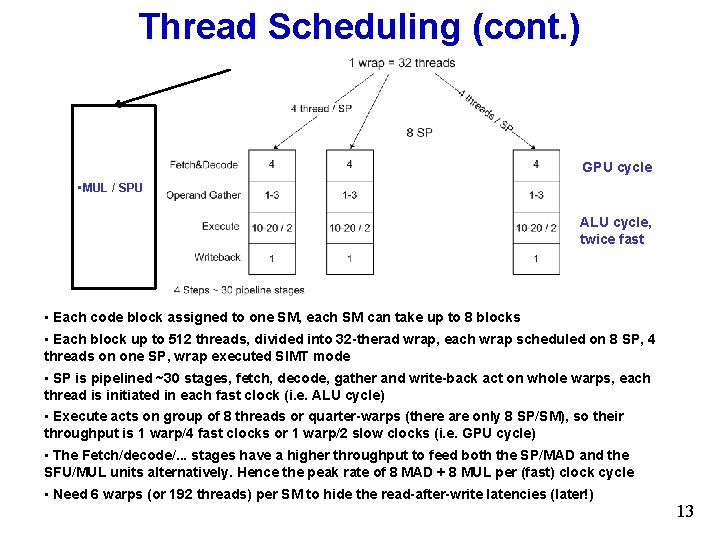

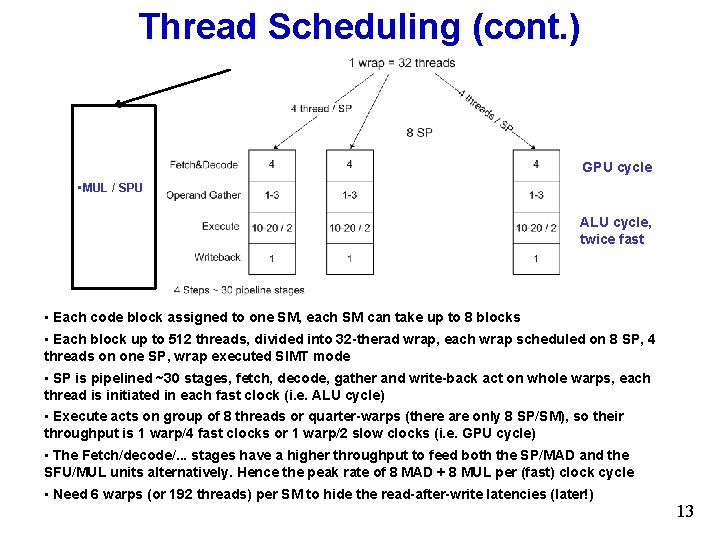

Thread Scheduling (cont. ) GPU cycle • MUL / SPU ALU cycle, twice fast • Each code block assigned to one SM, each SM can take up to 8 blocks • Each block up to 512 threads, divided into 32 -therad wrap, each wrap scheduled on 8 SP, 4 threads on one SP, wrap executed SIMT mode • SP is pipelined ~30 stages, fetch, decode, gather and write-back act on whole warps, each thread is initiated in each fast clock (i. e. ALU cycle) • Execute acts on group of 8 threads or quarter-warps (there are only 8 SP/SM), so their throughput is 1 warp/4 fast clocks or 1 warp/2 slow clocks (i. e. GPU cycle) • The Fetch/decode/. . . stages have a higher throughput to feed both the SP/MAD and the SFU/MUL units alternatively. Hence the peak rate of 8 MAD + 8 MUL per (fast) clock cycle • Need 6 warps (or 192 threads) per SM to hide the read-after-write latencies (later!) 13

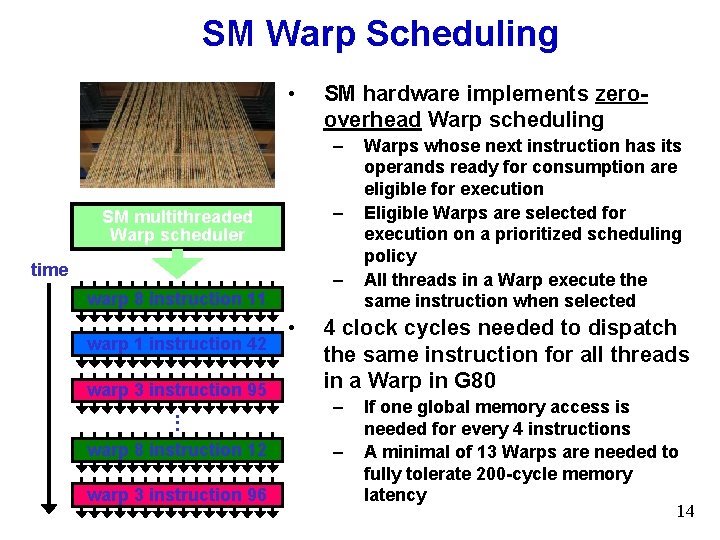

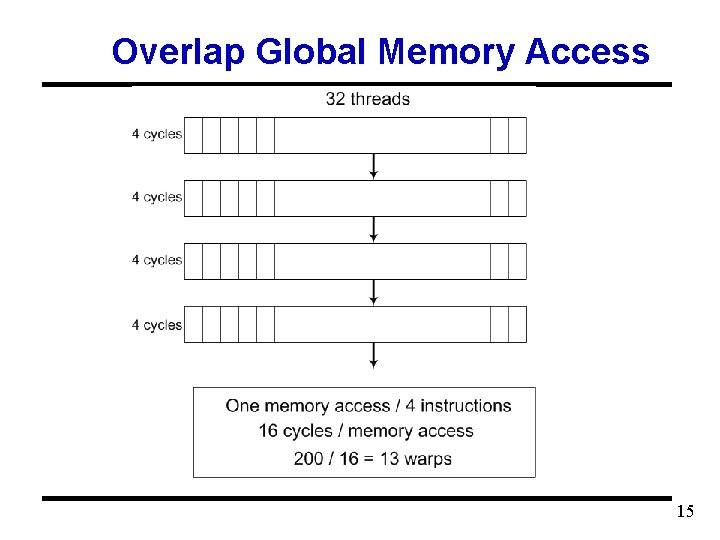

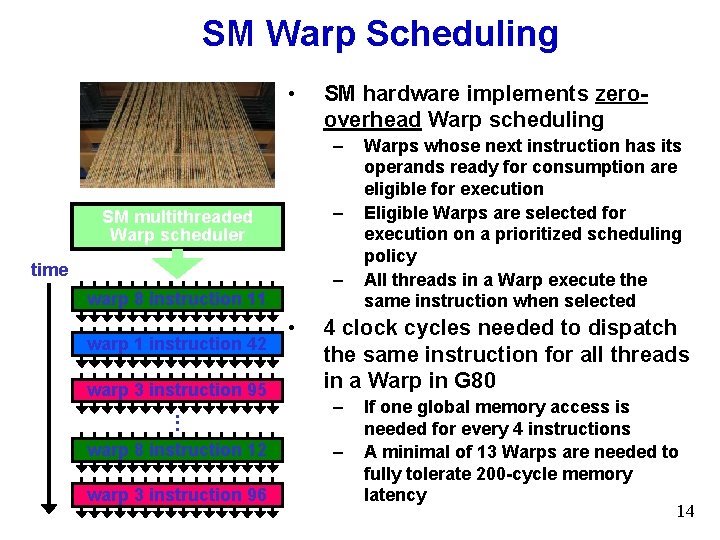

SM Warp Scheduling • SM hardware implements zerooverhead Warp scheduling – – SM multithreaded Warp scheduler time – warp 8 instruction 11 warp 1 instruction 42 warp 3 instruction 95. . . warp 8 instruction 12 warp 3 instruction 96 • Warps whose next instruction has its operands ready for consumption are eligible for execution Eligible Warps are selected for execution on a prioritized scheduling policy All threads in a Warp execute the same instruction when selected 4 clock cycles needed to dispatch the same instruction for all threads in a Warp in G 80 – – If one global memory access is needed for every 4 instructions A minimal of 13 Warps are needed to fully tolerate 200 -cycle memory latency 14

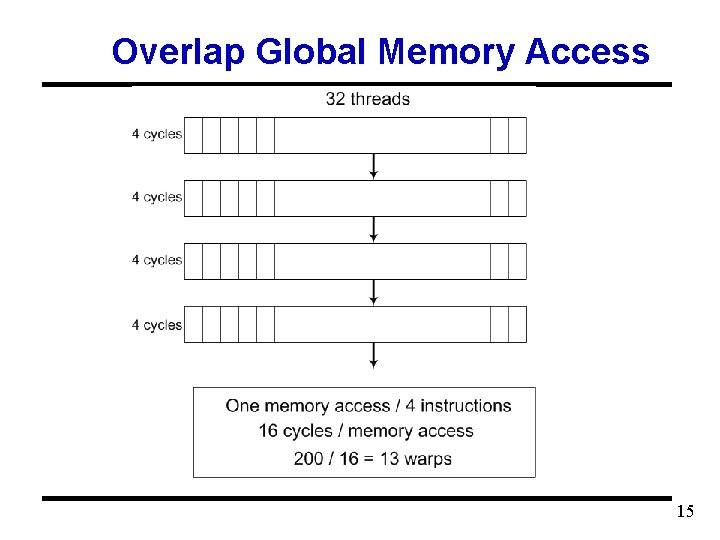

Overlap Global Memory Access 15

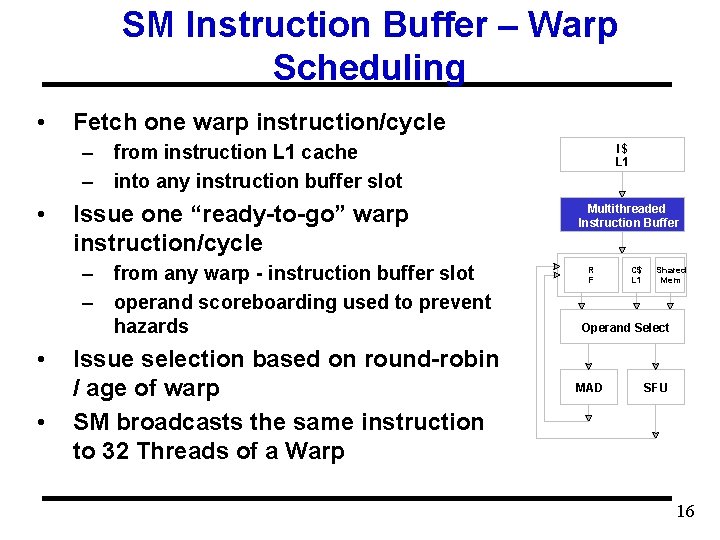

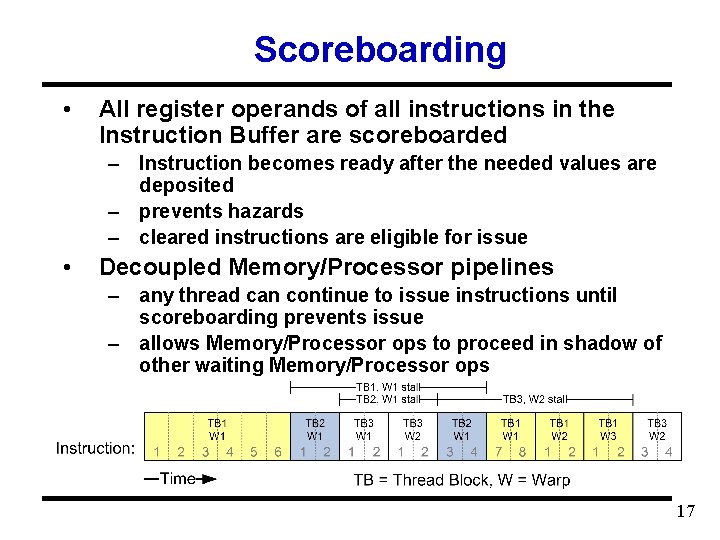

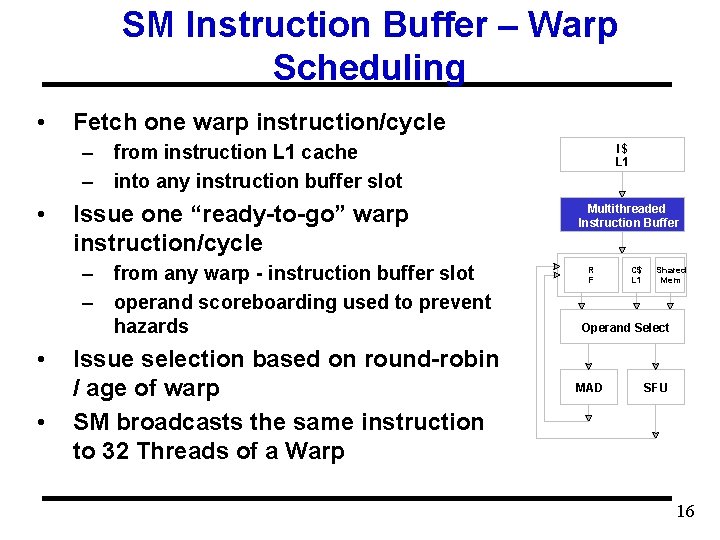

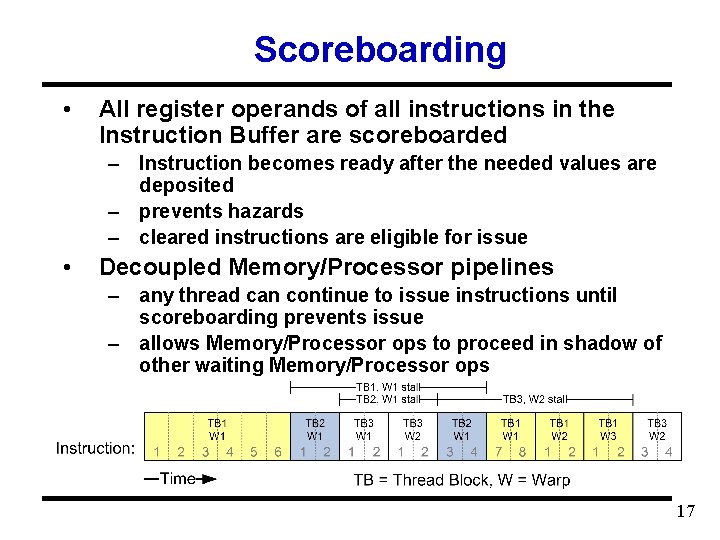

SM Instruction Buffer – Warp Scheduling • Fetch one warp instruction/cycle – from instruction L 1 cache – into any instruction buffer slot • Issue one “ready-to-go” warp instruction/cycle – from any warp - instruction buffer slot – operand scoreboarding used to prevent hazards • • Issue selection based on round-robin / age of warp SM broadcasts the same instruction to 32 Threads of a Warp I$ L 1 Multithreaded Instruction Buffer R F C$ L 1 Shared Mem Operand Select MAD SFU 16

Scoreboarding • All register operands of all instructions in the Instruction Buffer are scoreboarded – Instruction becomes ready after the needed values are deposited – prevents hazards – cleared instructions are eligible for issue • Decoupled Memory/Processor pipelines – any thread can continue to issue instructions until scoreboarding prevents issue – allows Memory/Processor ops to proceed in shadow of other waiting Memory/Processor ops 17