CAP 6938 Neuroevolution and Developmental Encoding: Approaches to Neuroevolution

- Slides: 13

CAP 6938 Neuroevolution and Developmental Encoding
Approaches to Neuroevolution
Dr. Kenneth Stanley
September 20, 2006

Many TWEANN Problems

- Competing conventions problem
  - Topology matching problem
- Initial population topology randomization
  - Defective starter genomes
  - Unnecessarily high-dimensional search space
- Loss of innovative structures
  - More complex structures can’t compete in the short run
  - Need to protect innovation
- How do researchers design NE methods?
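The competing conventions problem can be illustrated with a toy example. The sketch below assumes a hypothetical one-input, one-output network whose genome is a list of hidden neurons; the names `forward` and `one_point_crossover` and all weight values are made up for illustration. Two parents encode the same function with their hidden neurons in a different order, and naive positional crossover produces a child that duplicates one neuron and loses another.

```python
import math

def forward(hidden, x):
    """One input, one linear output; hidden is a list of (w_in, w_out) pairs."""
    return sum(w_out * math.tanh(w_in * x) for w_in, w_out in hidden)

def one_point_crossover(a, b, point):
    """Positional crossover that ignores what each gene actually encodes."""
    return a[:point] + b[point:]

# Three hidden neurons (illustrative weights).
parent_a = [(1.0, 2.0), (-3.0, 0.5), (0.2, -1.0)]
# The same three neurons in a different order: a competing convention.
parent_b = [parent_a[2], parent_a[0], parent_a[1]]

# Both parents compute the same function (up to floating-point rounding).
print(forward(parent_a, 0.7), forward(parent_b, 0.7))

# The child holds neuron 0 twice and has lost neuron 2.
child = one_point_crossover(parent_a, parent_b, 1)
print(child)
print(forward(child, 0.7))
```

With variable topologies the same mismatch appears as the topology matching problem: there is no obvious way to tell which genes in two differently shaped parents correspond.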

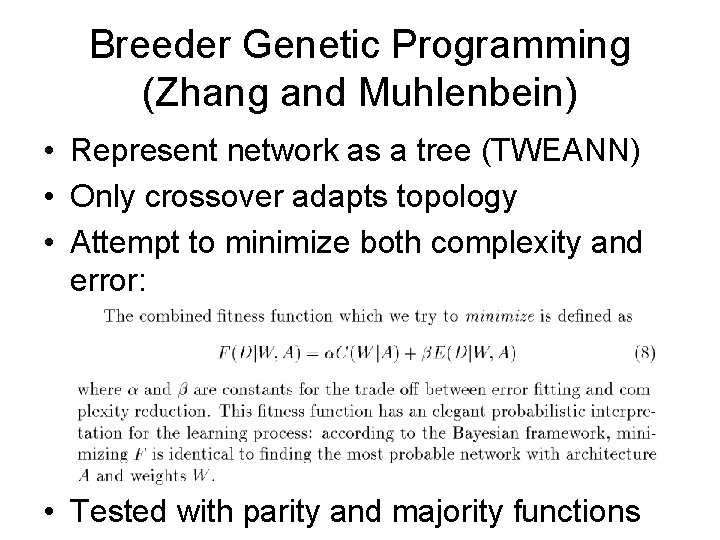

Breeder Genetic Programming (Zhang and Mühlenbein)

- Represent network as a tree (TWEANN)
- Only crossover adapts topology
- Attempt to minimize both complexity and error
- Tested with parity and majority functions
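One common way to minimize complexity and error together is a weighted sum of the two. The sketch below is an assumption for illustration, not a transcription of the formula on the slide: the function name `combined_fitness`, the fixed weight `alpha`, and the candidate values are all hypothetical.

```python
def combined_fitness(error, complexity, alpha=0.01):
    """Lower is better: training error plus a complexity penalty.

    alpha is a hypothetical fixed trade-off weight chosen for this example.
    """
    return error + alpha * complexity

# Hypothetical candidates: (error, number of nodes and links).
candidates = {
    "small": (0.10, 5),
    "large": (0.09, 40),
}

# Pick the candidate with the lowest combined score.
best = min(candidates, key=lambda name: combined_fitness(*candidates[name]))
print(best)
```

Under these made-up numbers the slightly less accurate but much smaller network wins, which is the kind of trade-off such a penalty is meant to express.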

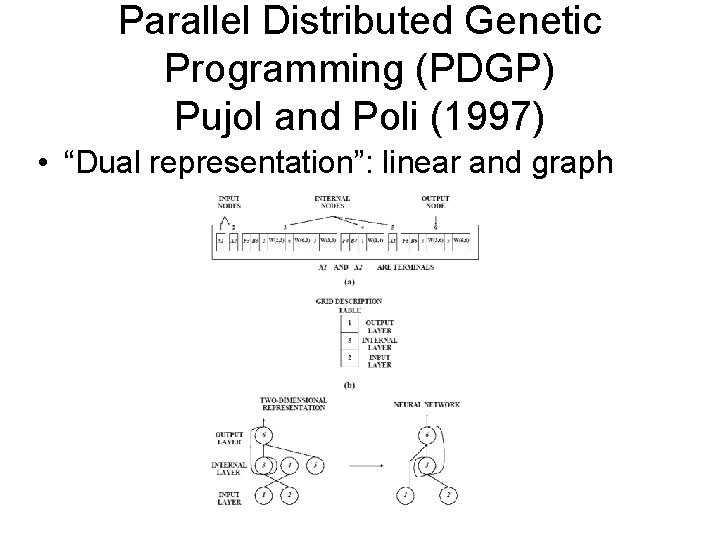

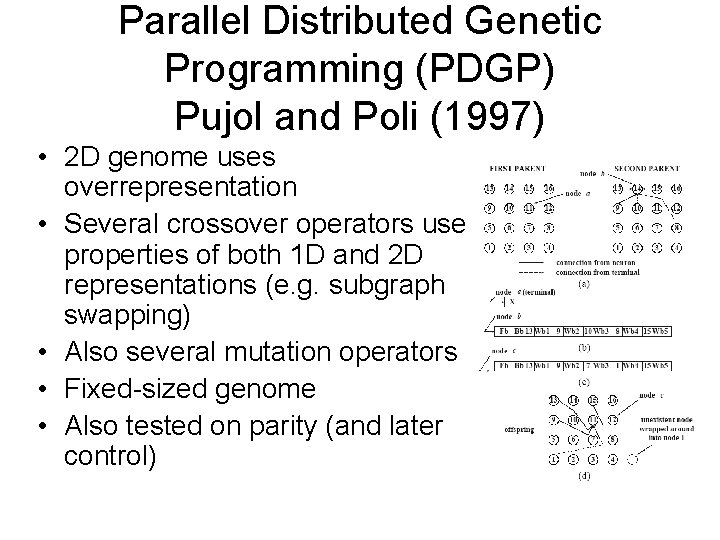

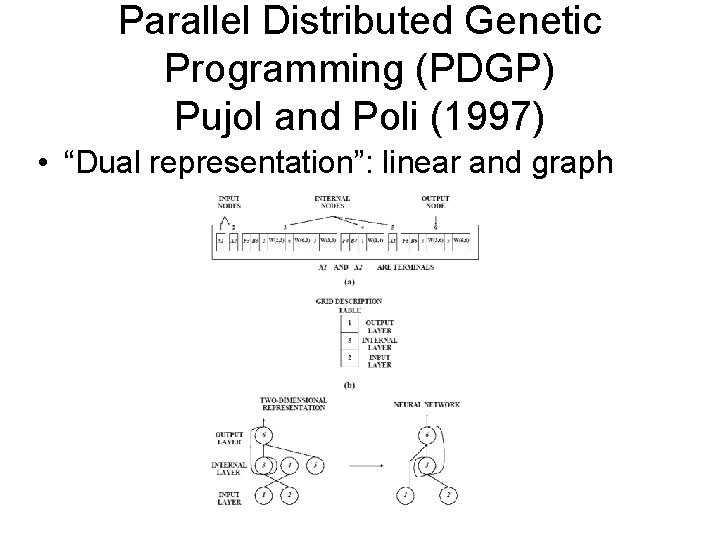

Parallel Distributed Genetic Programming (PDGP)
Pujol and Poli (1997)

- “Dual representation”: linear and graph

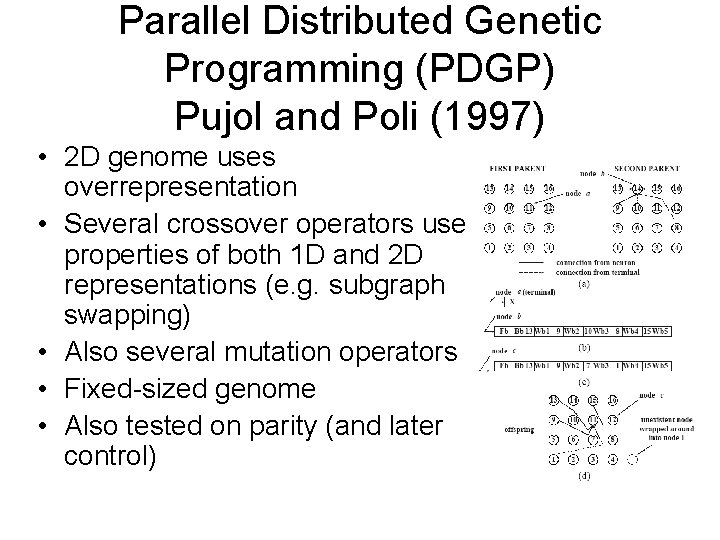

Parallel Distributed Genetic Programming (PDGP)
Pujol and Poli (1997)

- 2D genome uses overrepresentation
- Several crossover operators use properties of both 1D and 2D representations (e.g. subgraph swapping)
- Also several mutation operators
- Fixed-sized genome
- Also tested on parity (and later control)

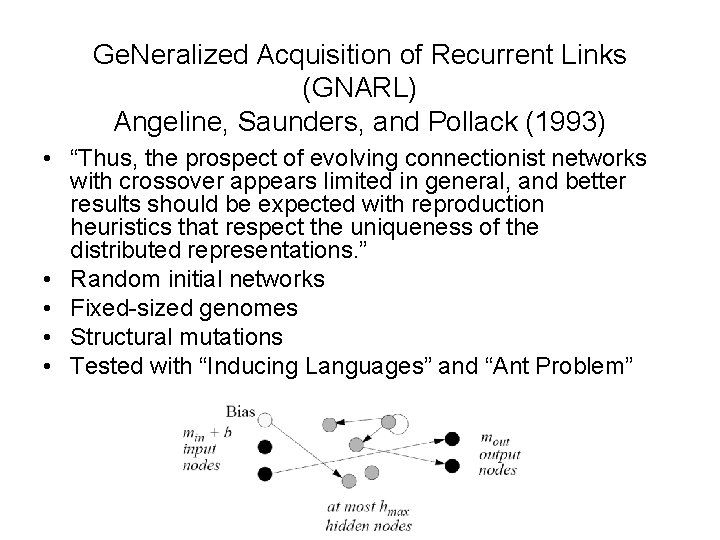

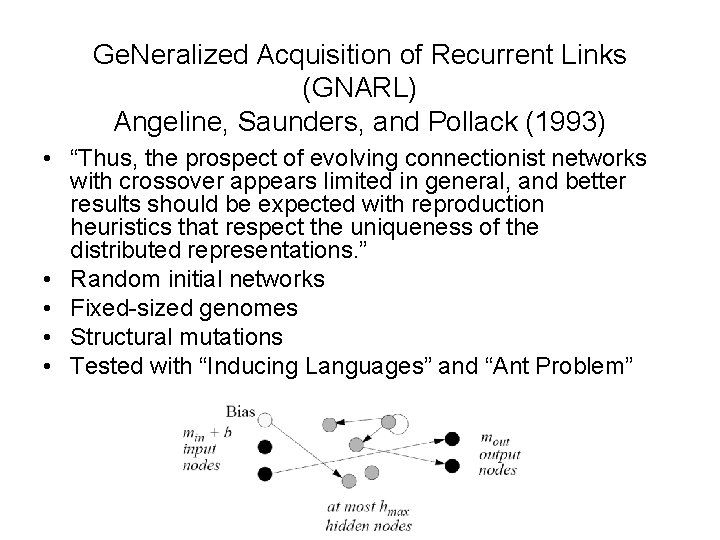

GeNeralized Acquisition of Recurrent Links (GNARL)
Angeline, Saunders, and Pollack (1993)

- “Thus, the prospect of evolving connectionist networks with crossover appears limited in general, and better results should be expected with reproduction heuristics that respect the uniqueness of the distributed representations.”
- Random initial networks
- Fixed-sized genomes
- Structural mutations
- Tested with “Inducing Languages” and “Ant Problem”
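A mutation-only approach to topology search can be sketched as follows. This is a minimal illustration of structural mutation without crossover, not GNARL's actual operators: the network layout, the function name `mutate_structure`, the choice of four operations, and the zero initial weight for new links are all assumptions, and input and output nodes are not distinguished from hidden nodes for brevity.

```python
import random

def mutate_structure(net, rng):
    """Return a structurally mutated copy of net.

    net is {"nodes": set of int ids, "links": {(src, dst): weight}}.
    """
    nodes = set(net["nodes"])
    links = dict(net["links"])
    op = rng.choice(["add_link", "remove_link", "add_node", "remove_node"])
    if op == "add_link":
        src = rng.choice(sorted(nodes))
        dst = rng.choice(sorted(nodes))      # src == dst gives a recurrent self-link
        links.setdefault((src, dst), 0.0)    # assumption: new links start at weight 0
    elif op == "remove_link" and links:
        del links[rng.choice(sorted(links))]
    elif op == "add_node":
        nodes.add(max(nodes) + 1)            # unconnected until a later add_link
    elif op == "remove_node" and len(nodes) > 1:
        victim = rng.choice(sorted(nodes))
        nodes.discard(victim)
        # Drop every link that touched the removed node.
        links = {k: w for k, w in links.items() if victim not in k}
    return {"nodes": nodes, "links": links}

rng = random.Random(0)
net = {"nodes": {0, 1, 2}, "links": {(0, 1): 0.5, (1, 2): -0.3}}
for _ in range(200):
    net = mutate_structure(net, rng)
print(len(net["nodes"]), len(net["links"]))
```

Because each offspring comes from a single parent, no alignment between two different topologies is ever needed.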

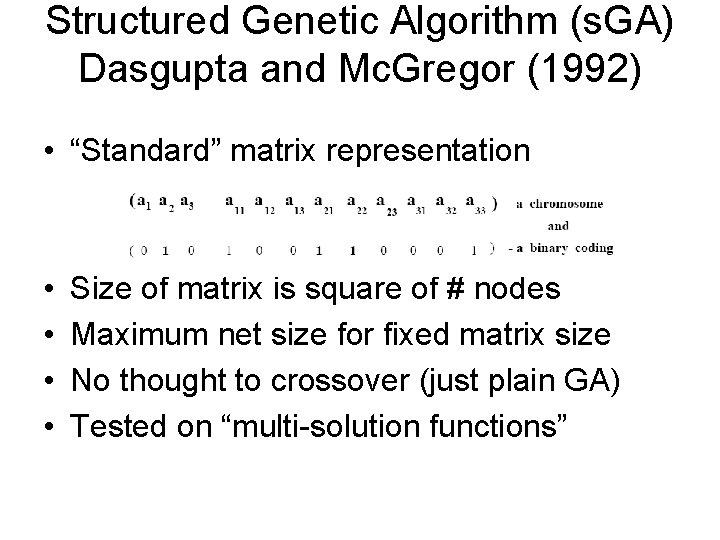

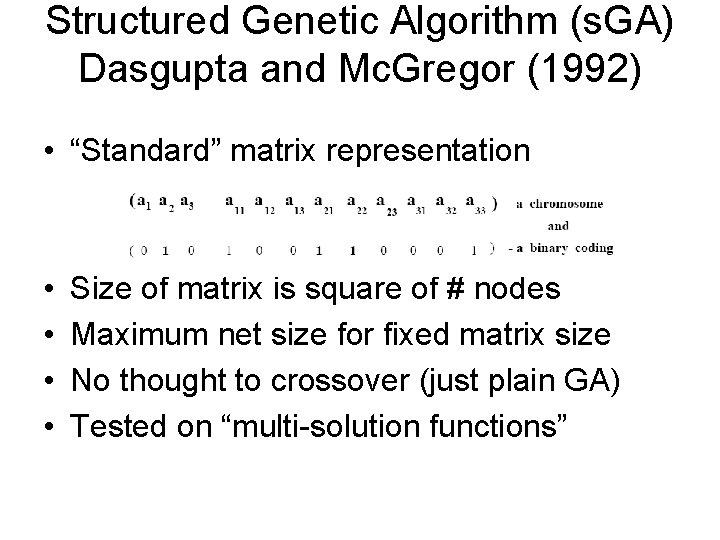

Structured Genetic Algorithm (sGA)
Dasgupta and McGregor (1992)

- “Standard” matrix representation
- Size of matrix is square of number of nodes
- Maximum net size for fixed matrix size
- No thought to crossover (just plain GA)
- Tested on “multi-solution functions”
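The matrix representation and its size limit can be sketched as below. The code illustrates only a generic connection-matrix genome, not other aspects of sGA; the function names `genome_length` and `decode` and the example weights are hypothetical.

```python
def genome_length(num_nodes):
    """A full connection matrix needs one entry per ordered pair of nodes."""
    return num_nodes ** 2

def decode(matrix):
    """List the links (src, dst, weight) encoded by nonzero matrix entries."""
    n = len(matrix)
    return [
        (i, j, matrix[i][j])
        for i in range(n)
        for j in range(n)
        if matrix[i][j] != 0.0
    ]

# A 3-node network: node 0 feeds nodes 1 and 2, node 1 feeds node 2.
matrix = [
    [0.0, 0.5, -1.2],
    [0.0, 0.0, 0.8],
    [0.0, 0.0, 0.0],
]
print(decode(matrix))
print(genome_length(3), genome_length(30))
```

A matrix of fixed size N by N can never describe a network with more than N nodes, and the genome grows quadratically with N.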

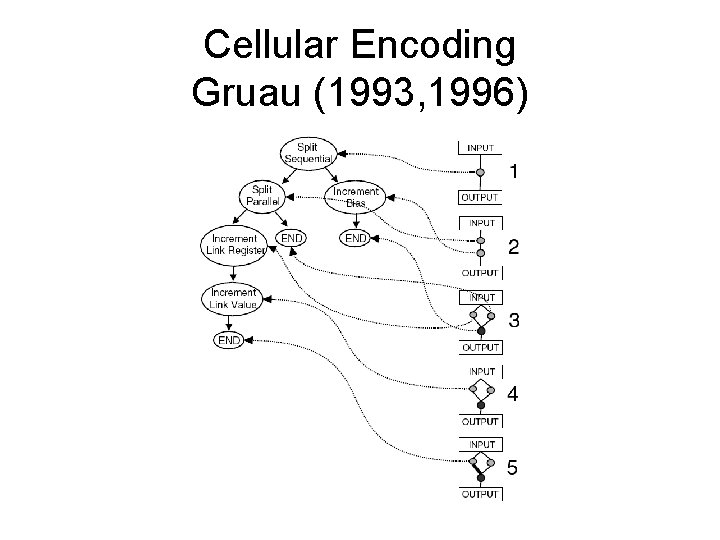

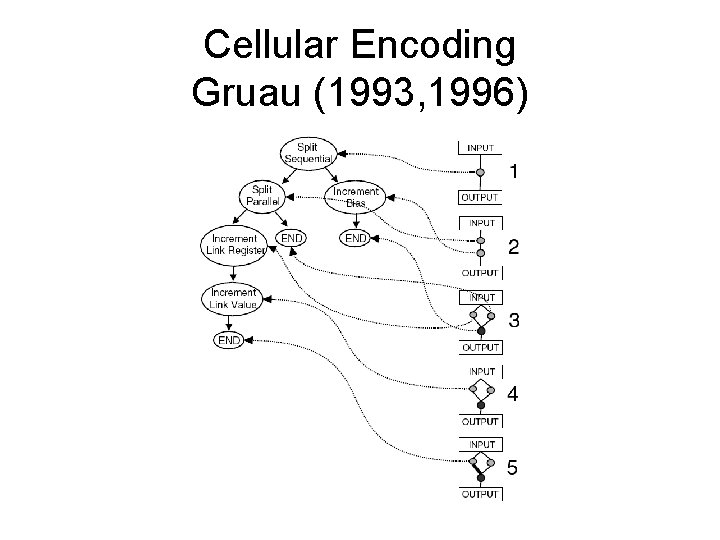

Cellular Encoding
Gruau (1993, 1996)

- Indirect encoding (developmental)
- First method to balance 2 poles without velocity inputs
- Biological motivation: grow from single cell
- Gruau proved CE can generate any graph
- Crossover swaps subtrees like GP
- Indirect encoding only makes competing conventions harder to comprehend
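Growing a network from a single cell can be sketched with a heavily simplified instruction set. The code below assumes only three instructions (sequential division, parallel division, and stop) and ignores weights, recurrence, and every other operator of the full method; the tuple-based tree format and the function name `develop` are hypothetical.

```python
from collections import deque

def develop(tree):
    """Grow a set of directed edges from one cell by following a program tree.

    tree is ("SEQ", left, right), ("PAR", left, right), or ("END",).
    "in" and "out" are fixed terminal nodes; cells are numbered from 0.
    """
    edges = {("in", 0), (0, "out")}
    pending = deque([(0, tree)])
    next_id = 1
    while pending:
        cell, node = pending.popleft()
        if node[0] == "END":
            continue
        child = next_id
        next_id += 1
        if node[0] == "SEQ":
            # The child takes over the cell's outputs; the cell feeds the child.
            for src, dst in [e for e in edges if e[0] == cell]:
                edges.remove((src, dst))
                edges.add((child, dst))
            edges.add((cell, child))
        elif node[0] == "PAR":
            # The child copies both the inputs and the outputs of the cell.
            for src, dst in list(edges):
                if src == cell:
                    edges.add((child, dst))
                if dst == cell:
                    edges.add((src, child))
        pending.append((cell, node[1]))
        pending.append((child, node[2]))
    return edges

program = ("SEQ", ("PAR", ("END",), ("END",)), ("END",))
network = develop(program)
print(network)
```

In this example the program yields two parallel cells fed by the input, both feeding a third cell that drives the output. Swapping subtrees of such programs is how GP-style crossover would apply.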

Cellular Encoding
Gruau (1993, 1996)

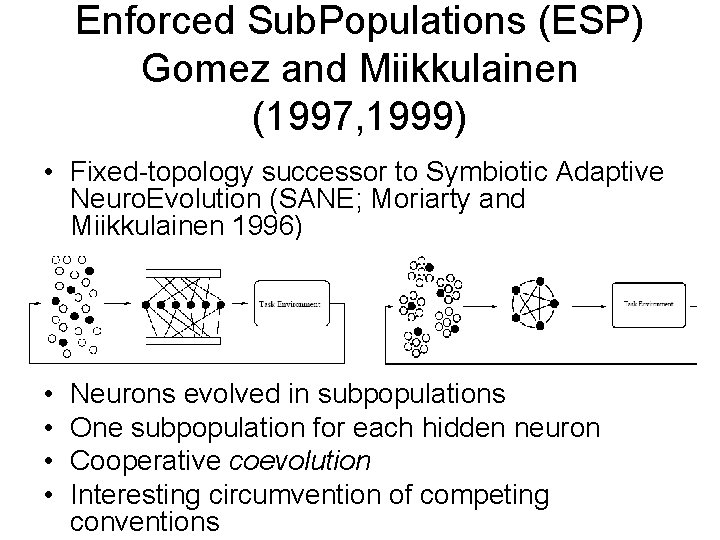

Enforced SubPopulations (ESP)
Gomez and Miikkulainen (1997, 1999)

- Fixed-topology successor to Symbiotic Adaptive Neuro-Evolution (SANE; Moriarty and Miikkulainen 1996)
- Neurons evolved in subpopulations
- One subpopulation for each hidden neuron
- Cooperative coevolution
- Interesting circumvention of competing conventions
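The evaluation step of neuron-level cooperative coevolution can be sketched as follows. This is a minimal illustration under stated assumptions, not the published algorithm: the function name `evaluate_subpopulations`, the averaging of scores over random trials, and the toy fitness function are all hypothetical.

```python
import random

def evaluate_subpopulations(subpops, fitness_fn, trials, rng):
    """Score each neuron by the average fitness of the networks it joined.

    subpops[h] is the list of candidate neurons (weight lists) for hidden slot h.
    """
    totals = [[0.0] * len(sp) for sp in subpops]
    counts = [[0] * len(sp) for sp in subpops]
    for _ in range(trials):
        # Build one network by drawing one neuron from every subpopulation.
        picks = [rng.randrange(len(sp)) for sp in subpops]
        network = [subpops[h][i] for h, i in enumerate(picks)]
        score = fitness_fn(network)
        # Credit the network's score to every neuron that took part.
        for h, i in enumerate(picks):
            totals[h][i] += score
            counts[h][i] += 1
    return [
        [t / c if c else 0.0 for t, c in zip(ts, cs)]
        for ts, cs in zip(totals, counts)
    ]

# Toy setup: two hidden slots, three candidate neurons each.
subpops = [
    [[10.0], [0.0], [0.0]],
    [[0.0], [0.0], [0.0]],
]
toy_fitness = lambda network: sum(sum(neuron) for neuron in network)
averages = evaluate_subpopulations(subpops, toy_fitness, 200, random.Random(0))
print(averages)
```

Since a neuron only competes and recombines with others in its own slot, each slot keeps a consistent role, which is one way to read the slide's remark about circumventing competing conventions.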

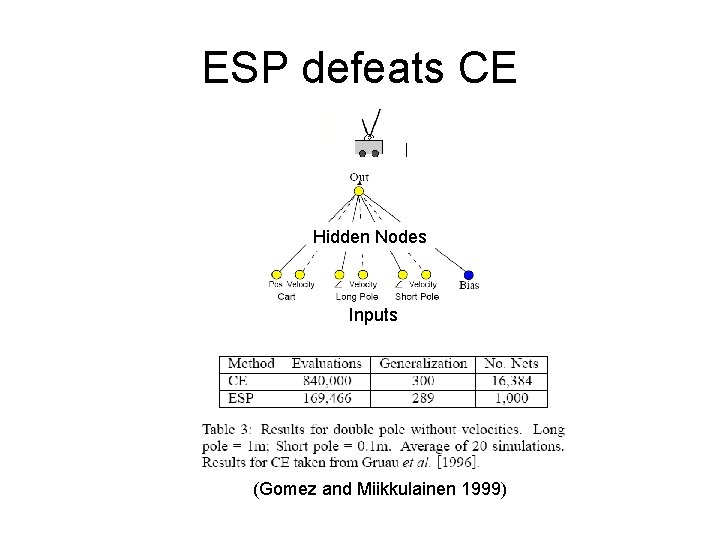

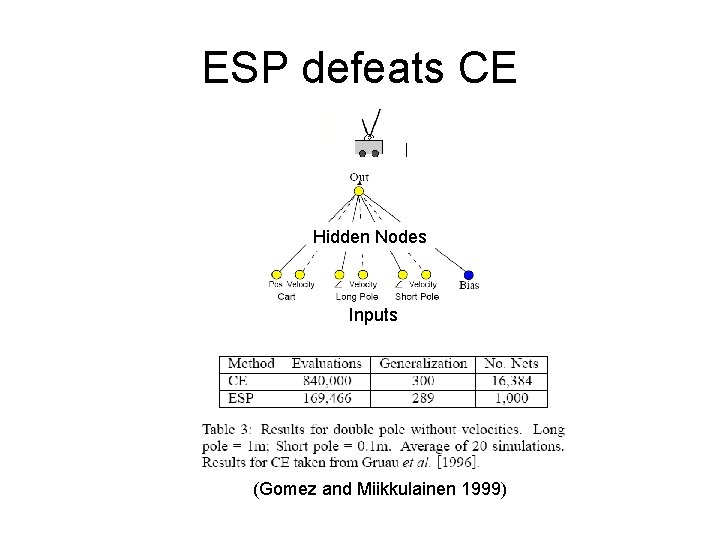

ESP defeats CE (Gomez and Miikkulainen 1999)

[Figure: labels “Hidden Nodes” and “Inputs”]

TWEANNs Need Principles

- Is there a principled method for evolving topologies that is not ad hoc?
- How can the TWEANN challenges be handled directly?
- Are all TWEANNs created equal?

Next Class: NeuroEvolution of Augmenting Topologies (NEAT)

- Directly addresses TWEANN challenges
- Turns topology into an advantage
- Applicable outside NNs
- Basis of class projects

Evolving Neural Networks Through Augmenting Topologies by Kenneth O. Stanley and Risto Miikkulainen (2002)