Assessment in Online Courses

- Slides: 11

Online Courseware

I see two fundamental aspects:
- Content
- Assessment (exercises and activities)

Assessment Example: Evaluating Student Essays
- Manual: Calibrated Peer Review (CPR): UCLA (Coursera)
- Automated: Automated Essay Scoring (AES): edX

Automated Grading: AES
- Long history
- There are formal competitions for automated essay scoring systems!
- Machine learning algorithms
- Indistinguishable in quality from human graders
- edX: Grade 100 sample essays
- Breaks down for longer assignments, things where style counts (poetry, humor), individual topics
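The "grade a sample, then score the rest" workflow above can be illustrated with a deliberately tiny stand-in. This is not how edX or any competition system works: it swaps in nearest-neighbor averaging over two crude surface features, and the names `features` and `predict_score` are hypothetical.

```python
def features(essay):
    """Two crude surface features: word count and mean word length."""
    words = essay.split()
    count = len(words)
    mean_len = sum(len(w) for w in words) / count if count else 0.0
    return (float(count), mean_len)


def predict_score(essay, graded, k=3):
    """Average the scores of the k instructor-graded essays nearest in feature space.

    `graded` is a list of (essay_text, score) pairs, i.e. the training pool.
    Features are not normalized here, so word count dominates the distance.
    """
    if not graded:
        raise ValueError("need at least one graded essay")
    target = features(essay)

    def distance(pair):
        return sum((a - b) ** 2 for a, b in zip(features(pair[0]), target))

    nearest = sorted(graded, key=distance)[:k]
    return sum(score for _, score in nearest) / len(nearest)
```

A real system would use far richer features and a trained model; the point here is only that instructor-graded samples drive the scores given to ungraded essays.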

Manual Grading: CPR
- Requires a strong rubric (multiple choice)
- Students must be “trained”:
  - Score 3+ essays, then get a competency rating
  - Competency rating affects weighting on reviews of others
- Your reviewer rating depends on how close you are to the weighted average
- Gives students experience with reviewing
- At end, students can self-rate own essays, and be graded on that exercise
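The weighting idea above can be sketched as follows. The slides do not give CPR's actual formulas, so this assumes a plain competency-weighted mean for the essay score and a linear "closeness" measure for the reviewer rating; both function names are hypothetical.

```python
def weighted_consensus(scores, competencies):
    """Essay score as the competency-weighted mean of the peer scores."""
    total = sum(competencies)
    if total == 0:
        raise ValueError("at least one reviewer needs a nonzero competency")
    return sum(s * c for s, c in zip(scores, competencies)) / total


def reviewer_ratings(scores, consensus, max_score):
    """Rate each reviewer in [0, 1]: 1.0 means the score matched the consensus."""
    return [max(0.0, 1.0 - abs(s - consensus) / max_score) for s in scores]
```

For example, peer scores of 8, 6, and 3 (out of 10) from reviewers with competencies 3, 2, and 1 give a consensus of 6.5, and the reviewer who scored 6 receives the highest rating.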

CPR Issues
- Some fraction of papers get all low-quality evaluators
- Only works for an assignment for which a rubric can be generated (not so individual)
- Length limits on what students can bear since they evaluate a number of essays
- “Synchronous” vs. “Asynchronous” courses
- Is there a peer group?

Comparison
- Type: CPR has a little more flexibility, greater length restrictions
- Consistency of scoring: AES more consistent
- Feedback: More consistent with AES, but CPR allows potentially better feedback from (good) human evaluators
- Instructor workload: AES needs a training pool (100 essays); CPR needs a rubric and intervention for some fraction of poorly evaluated essays (not scalable?)
- Student learning: AES has rapid feedback and is good for catching mechanical writing problems. CPR teaches evaluation skills (for a time cost)

Assessment: Automated
- Data interchange for those and custom exercises (all we really need is scoring and perhaps interaction data)
- Formats and systems for “standard” question types (MCQ, etc.)
- How rich can we make the activities?
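As a rough illustration of how little an exercise needs to report back, here is a sketch of a score-plus-interaction record serialized as JSON. The field names are assumptions made for this example, not part of any existing interchange standard.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ExerciseResult:
    """Hypothetical record an exercise sends back to the courseware."""
    student_id: str
    exercise_id: str
    score: float
    max_score: float
    # Optional interaction data, e.g. {"t": 12.5, "action": "hint"}
    events: list = field(default_factory=list)


def to_json(result):
    """Serialize a result so that any exercise type can report the same way."""
    return json.dumps(asdict(result), sort_keys=True)


def from_json(text):
    """Rebuild a result from its JSON form."""
    return ExerciseResult(**json.loads(text))
```

Keeping the record this small means a custom exercise and a standard multiple-choice question can share one reporting path.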

Automated Assessment: Learning to Program
- We will study this because it is a fairly well-developed area (many systems), and it provides an interesting example of a “non-trivial” interactive exercise type.
- Categories:
  - Programming exercises: Write actual code
  - Proficiency exercises of some sort

Problets
- Not programming exercises, but it has other interesting interactive exercises.
- Implementation: Java Applets
- http://www.problets.org/

OpenDSA Proficiency Exercises
- Algorithm “simulation”
- Manipulate a view of a data structure to reproduce the behavior of an algorithm.
- Model answer generated by an instrumented implementation of the algorithm: Store a series of states
- Each (appropriate) manipulation by the user also generates a state
- Simply verify that the states match (by some definition of match)
- Various behaviors possible when states do not match.
- http://algoviz.org/OpenDSA/Books/CS3114/html/BST.html
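The state-matching approach above can be sketched in a few lines. This is not OpenDSA's code: it assumes selection sort as the algorithm, exact equality as the definition of "match", and hypothetical function names.

```python
def model_states(values):
    """Instrumented selection sort: record the array after each pass."""
    a = list(values)
    states = []
    for i in range(len(a) - 1):
        smallest = min(range(i, len(a)), key=a.__getitem__)
        a[i], a[smallest] = a[smallest], a[i]
        states.append(tuple(a))  # snapshot forms one step of the model answer
    return states


def first_mismatch(model, user):
    """Return the index of the first step where the user diverges, or None."""
    for step, (expected, actual) in enumerate(zip(model, user)):
        if expected != actual:
            return step
    if len(model) != len(user):
        return min(len(model), len(user))  # user stopped early or went too far
    return None
```

What happens at a mismatch is a separate policy choice: the exercise could stop, undo the step, or simply deduct points and continue from the model state.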

2013 cci learning

2013 cci learning Myfloridareadytowork

Myfloridareadytowork Win career readiness assessment

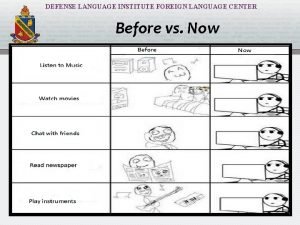

Win career readiness assessment Language now

Language now Pipeline engineering courses online

Pipeline engineering courses online Power bi mistakes to avoid online courses

Power bi mistakes to avoid online courses Ccri online courses

Ccri online courses Azure cosmos db: sql api deep dive online courses

Azure cosmos db: sql api deep dive online courses What is mooc

What is mooc Prince2 foundation elearning

Prince2 foundation elearning Process oriented learning competencies

Process oriented learning competencies Define dynamic assessment

Define dynamic assessment