University of Texas at Arlington: Scheduling and Load Balancing on the NASA Information Power Grid

- Slides: 11

Scheduling and Load Balancing on the NASA Information Power Grid

Sajal K. Das, Shailendra Kumar, Manish Arora
Department of Computer Science and Engineering
University of Texas at Arlington

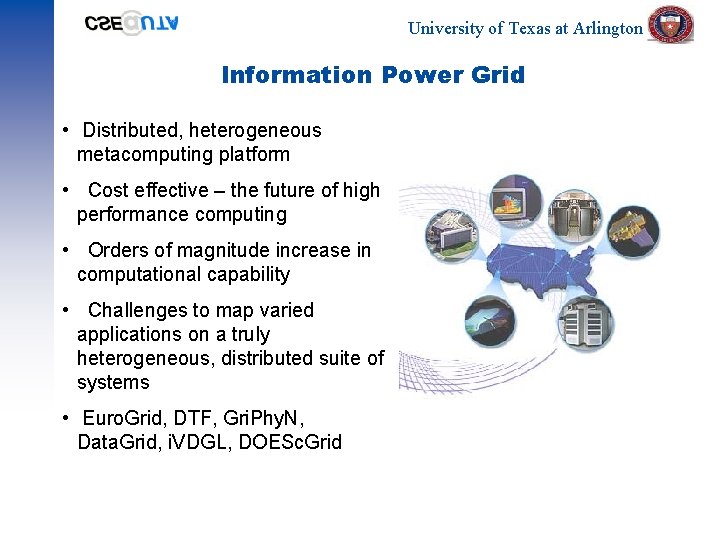

Information Power Grid

- Distributed, heterogeneous metacomputing platform
- Cost effective: the future of high performance computing
- Orders of magnitude increase in computational capability
- Challenge: mapping varied applications onto a truly heterogeneous, distributed suite of systems
- EuroGrid, DTF, GriPhyN, DataGrid, iVDGL, DOEScGrid

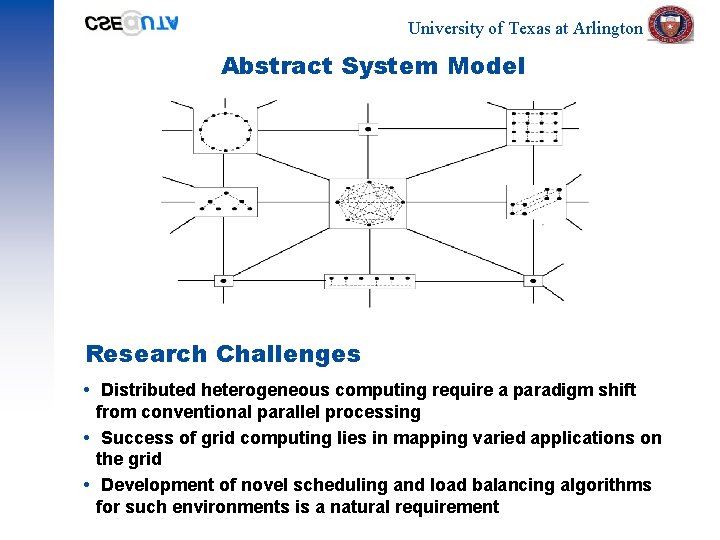

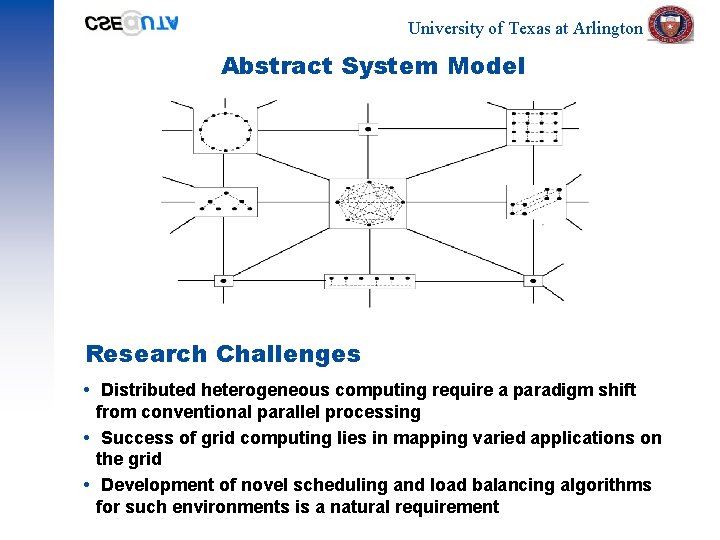

Abstract System Model

Research Challenges

- Distributed heterogeneous computing requires a paradigm shift from conventional parallel processing
- The success of grid computing lies in mapping varied applications onto the grid
- Developing novel scheduling and load balancing algorithms for such environments is a natural requirement

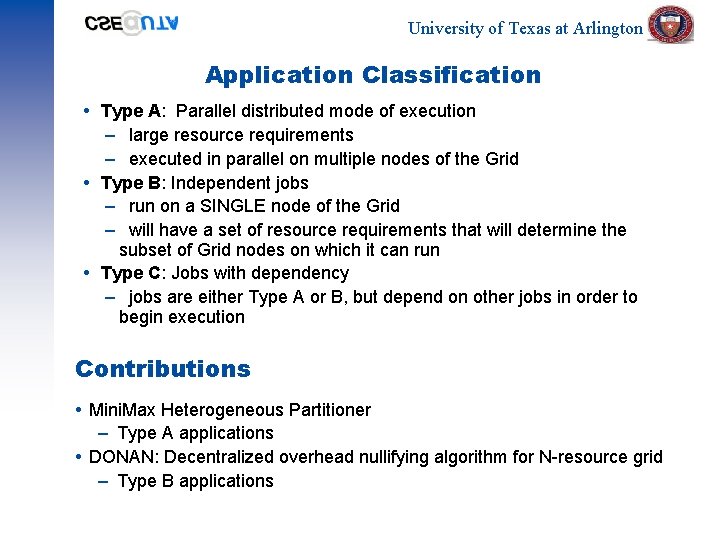

Application Classification

- Type A: Parallel distributed mode of execution
  - large resource requirements
  - executed in parallel on multiple nodes of the Grid
- Type B: Independent jobs
  - run on a single node of the Grid
  - each job has a set of resource requirements that determines the subset of Grid nodes on which it can run
- Type C: Jobs with dependency
  - jobs are either Type A or B, but depend on other jobs in order to begin execution

Contributions

- MiniMax Heterogeneous Partitioner: Type A applications
- DONAN (Decentralized Overhead Nullifying Algorithm for N-resource grid): Type B applications

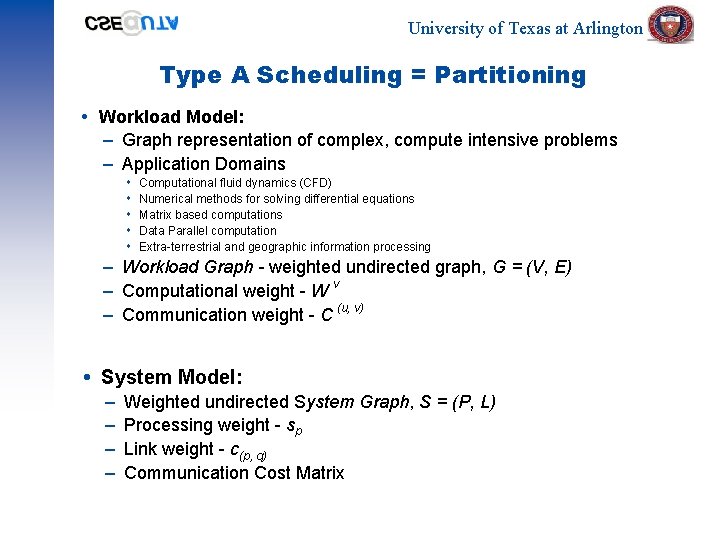

Type A Scheduling = Partitioning

Workload Model:

- Graph representation of complex, compute-intensive problems
- Application domains:
  - Computational fluid dynamics (CFD)
  - Numerical methods for solving differential equations
  - Matrix-based computations
  - Data-parallel computation
  - Extra-terrestrial and geographic information processing
- Workload graph: weighted undirected graph, $G = (V, E)$
- Computational weight: $W_v$
- Communication weight: $C(u, v)$

System Model:

- Weighted undirected system graph, $S = (P, L)$
- Processing weight: $s_p$
- Link weight: $c(p, q)$
- Communication cost matrix
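To make the two graphs concrete, here is a minimal Python sketch of how a partition could be scored against them. The slides do not give the cost formula, so the additive model below is an assumption: $s_p$ is read as time per unit of work on processor $p$, and each cut edge charges $C(u, v) \cdot c(p, q)$ to both endpoints. The function name `processor_times` and the sample data are hypothetical.

```python
def processor_times(W, C, s, c, assign):
    """Estimate per-processor time for a partition.

    W: vertex -> computational weight W_v
    C: (u, v) -> communication weight C(u, v)
    s: processor -> processing weight s_p (ASSUMED: time per unit of work)
    c: frozenset({p, q}) -> link weight c(p, q)
    assign: vertex -> processor
    The additive cost model is an assumption, not taken from the slides.
    """
    times = {p: 0.0 for p in s}
    # Computation: each vertex costs W_v * s_p on its assigned processor.
    for v, w in W.items():
        times[assign[v]] += w * s[assign[v]]
    # Communication: only edges cut by the partition incur a cost.
    for (u, v), cw in C.items():
        p, q = assign[u], assign[v]
        if p != q:
            cost = cw * c[frozenset((p, q))]
            times[p] += cost
            times[q] += cost
    return times


# Hypothetical three-vertex workload on a two-processor system.
W = {"a": 4, "b": 2, "c": 2}
C = {("a", "b"): 1, ("b", "c"): 3}
s = {"p0": 1.0, "p1": 2.0}
c = {frozenset(("p0", "p1")): 0.5}
assign = {"a": "p0", "b": "p1", "c": "p1"}
times = processor_times(W, C, s, c, assign)
```

Under this model the slower processor `p1` dominates, which is the kind of imbalance a heterogeneous partitioner would try to reduce.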

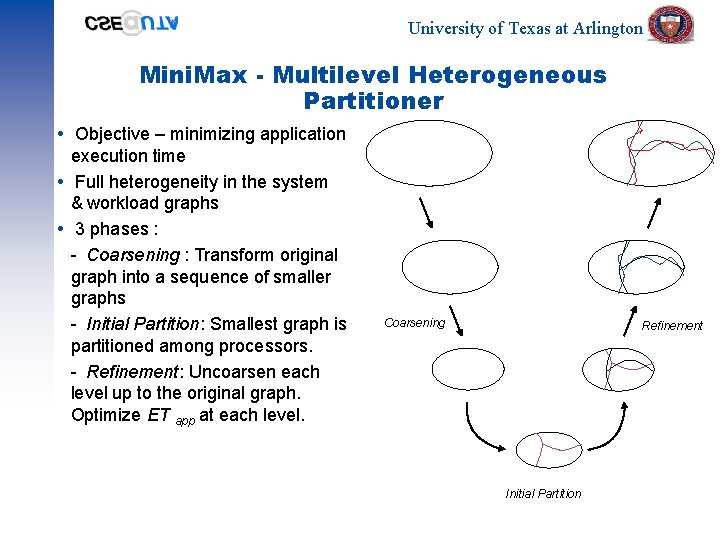

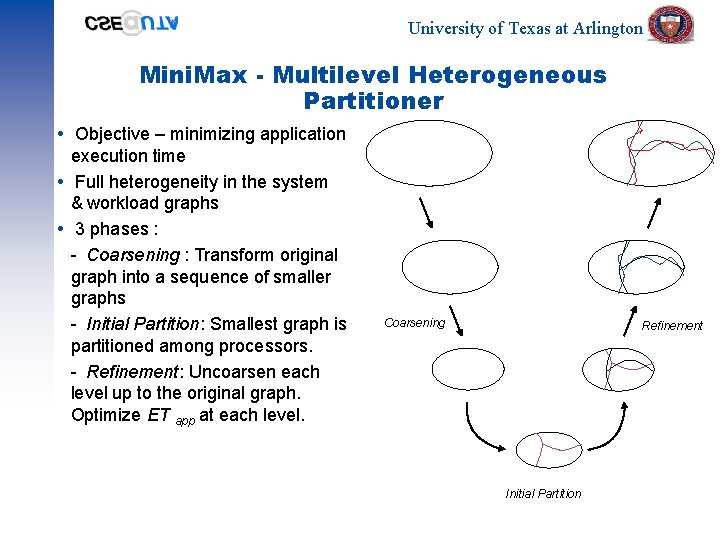

MiniMax: Multilevel Heterogeneous Partitioner

- Objective: minimize application execution time
- Full heterogeneity in the system and workload graphs
- 3 phases:
  - Coarsening: transform the original graph into a sequence of smaller graphs.
  - Initial Partition: the smallest graph is partitioned among the processors.
  - Refinement: uncoarsen each level up to the original graph, optimizing $ET_{app}$ at each level.

(Figure labels: Coarsening, Initial Partition, Refinement)
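The coarsening phase can be illustrated with a short Python sketch. The slides do not say which matching rule MiniMax uses, so this example substitutes heavy-edge matching, a heuristic common in multilevel partitioners; the function name `coarsen_once` and the sample graph are hypothetical.

```python
def coarsen_once(W, C):
    """One coarsening level using heavy-edge matching.

    ASSUMPTION: heavy-edge matching stands in for whatever rule
    MiniMax actually applies; the slides do not specify it.
    W: vertex -> computational weight, C: (u, v) -> communication weight.
    Returns (coarse_W, coarse_C, group), where group maps each
    original vertex to its coarse vertex (a tuple of merged names).
    """
    matched = {}
    # Visit edges from heaviest to lightest; merge unmatched endpoints.
    for (u, v), _ in sorted(C.items(), key=lambda kv: -kv[1]):
        if u != v and u not in matched and v not in matched:
            matched[u] = matched[v] = (u, v)
    # Unmatched vertices carry over as singletons.
    group = {v: matched.get(v, (v,)) for v in W}

    # Coarse vertex weight = sum of merged vertex weights.
    coarse_W = {}
    for v, w in W.items():
        coarse_W[group[v]] = coarse_W.get(group[v], 0) + w

    # Edges between different coarse vertices are summed; internal edges vanish.
    coarse_C = {}
    for (u, v), cw in C.items():
        gu, gv = group[u], group[v]
        if gu != gv:
            key = tuple(sorted((gu, gv)))
            coarse_C[key] = coarse_C.get(key, 0) + cw
    return coarse_W, coarse_C, group


# Hypothetical four-vertex ring.
W = {"a": 1, "b": 2, "c": 3, "d": 4}
C = {("a", "b"): 5, ("b", "c"): 1, ("c", "d"): 4, ("a", "d"): 2}
coarse_W, coarse_C, group = coarsen_once(W, C)
```

Applying the function repeatedly yields the sequence of smaller graphs; the returned `group` mapping is what a refinement phase would use to project a partition back to the finer level.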

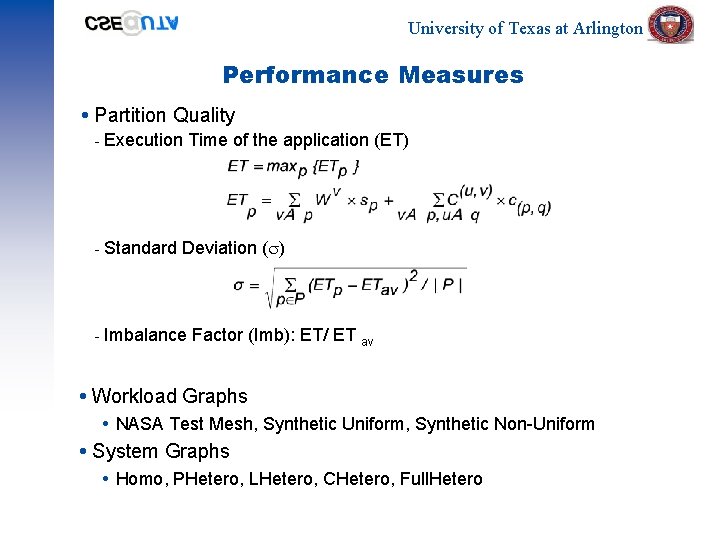

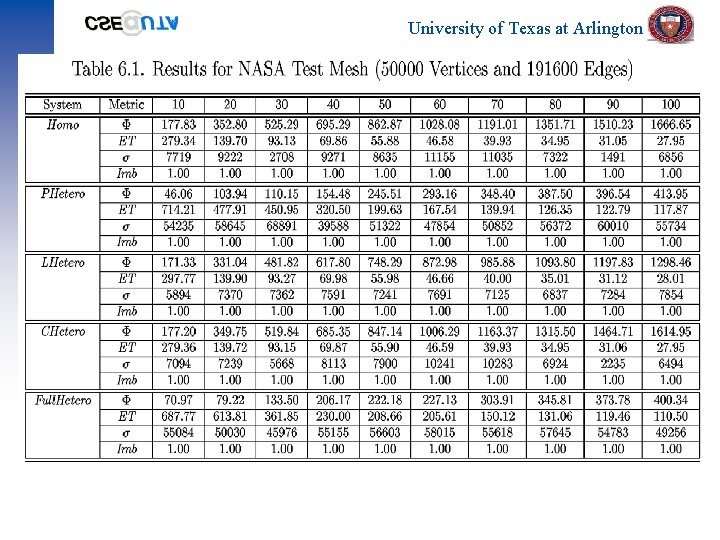

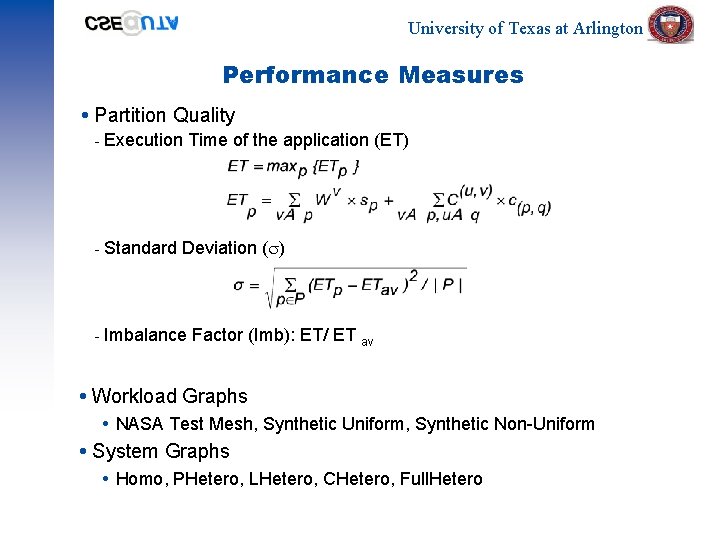

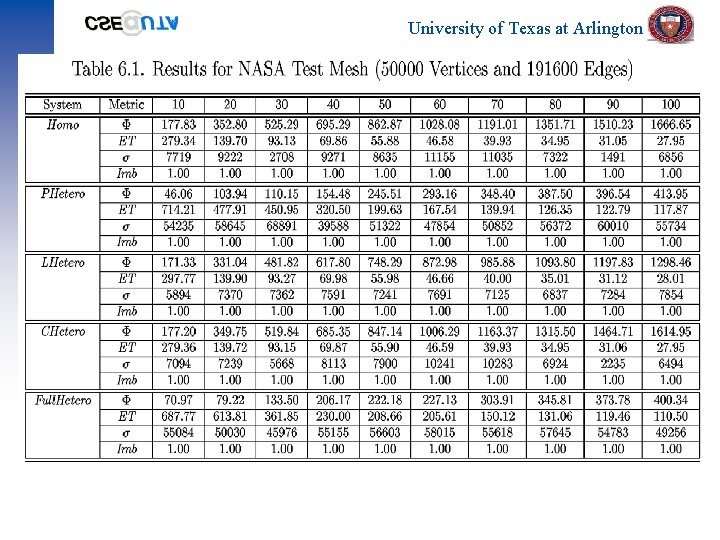

Performance Measures

- Partition quality:
  - Execution time of the application (ET)
  - Standard deviation ($\sigma$)
  - Imbalance factor (Imb): $ET / ET_{av}$
- Workload graphs: NASA Test Mesh, Synthetic Uniform, Synthetic Non-Uniform
- System graphs: Homo, PHetero, LHetero, CHetero, FullHetero
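The three partition-quality measures can be computed from per-processor times, as in the Python sketch below. Two readings are assumed here because the slides do not spell them out: ET is taken as the time of the slowest processor, and $ET_{av}$ as the mean over processors. The function name `partition_quality` and the sample times are hypothetical.

```python
import math
import statistics


def partition_quality(times):
    """Compute ET, sigma, and Imb from a list of per-processor times.

    ASSUMPTIONS: ET is the maximum per-processor time (the application
    finishes when the slowest processor does), ET_av is the mean, and
    sigma is the population standard deviation.
    """
    et = max(times)
    et_av = sum(times) / len(times)
    sigma = statistics.pstdev(times)
    return {"ET": et, "sigma": sigma, "Imb": et / et_av}


# Hypothetical times for a four-processor partition.
q = partition_quality([4.0, 6.0, 8.0, 6.0])
```

With these definitions a perfectly balanced partition has Imb equal to 1 and $\sigma$ equal to 0, and larger values indicate worse balance.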


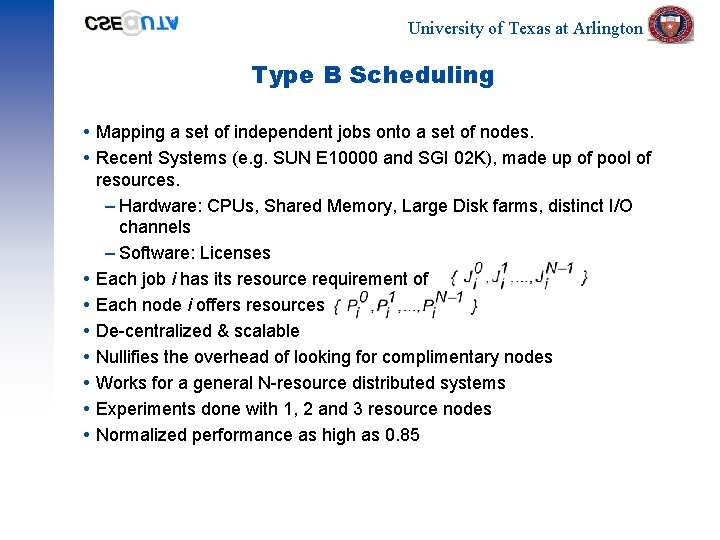

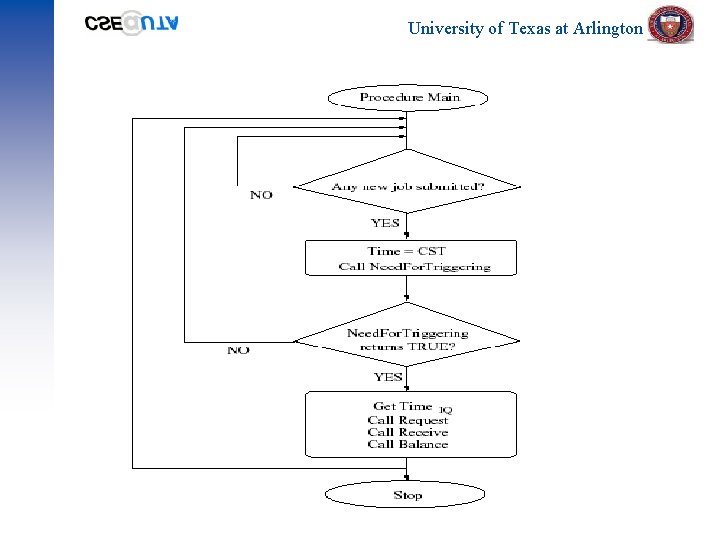

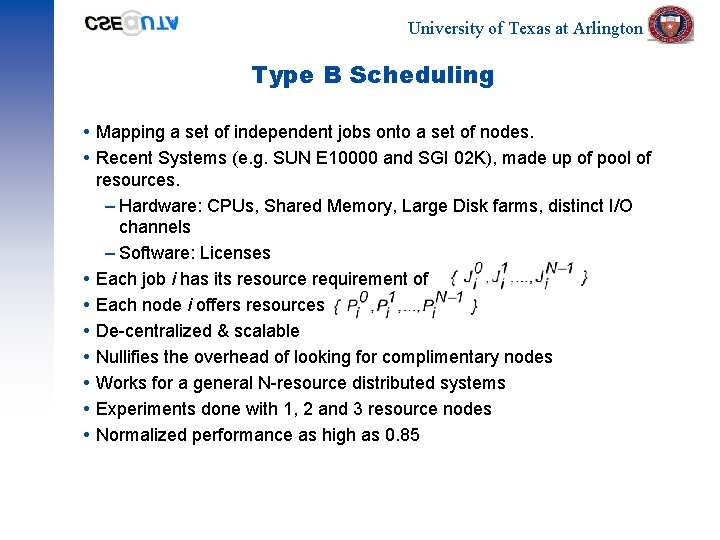

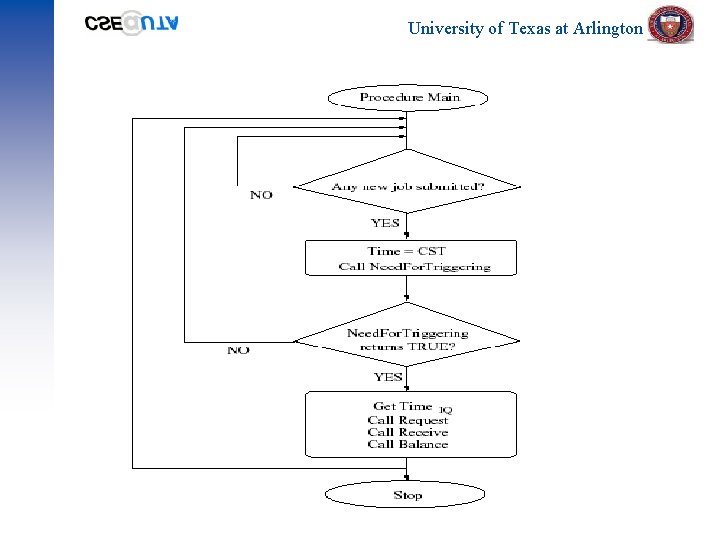

Type B Scheduling

- Mapping a set of independent jobs onto a set of nodes.
- Recent systems (e.g. Sun E10000 and SGI O2K) are made up of a pool of resources.
  - Hardware: CPUs, shared memory, large disk farms, distinct I/O channels
  - Software: licenses
- Each job i has its own resource requirements.
- Each node i offers resources.
- Decentralized and scalable
- Nullifies the overhead of looking for complementary nodes
- Works for general N-resource distributed systems
- Experiments were run with 1-, 2-, and 3-resource nodes
- Normalized performance as high as 0.85
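The matching of job requirements to node resources can be sketched in Python as a simple fit test. This is not the DONAN algorithm, whose details the slides do not give; it only shows how an N-resource requirement restricts the set of candidate nodes. The function name `feasible_nodes`, the resource names, and the sample values are hypothetical.

```python
def feasible_nodes(job_req, nodes):
    """Return the ids of nodes whose free resources cover every requirement.

    job_req: resource name -> amount the job needs
    nodes: node id -> {resource name -> amount currently free}
    ASSUMPTION: a resource missing from a node counts as zero. This is
    only the fit test, not the decentralized DONAN protocol itself.
    """
    return [
        nid
        for nid, free in nodes.items()
        if all(free.get(r, 0) >= need for r, need in job_req.items())
    ]


# Hypothetical 3-resource nodes (CPUs, memory, software licenses).
nodes = {
    "n0": {"cpu": 8, "mem_gb": 16, "licenses": 1},
    "n1": {"cpu": 4, "mem_gb": 64, "licenses": 0},
    "n2": {"cpu": 16, "mem_gb": 32, "licenses": 2},
}
two_resource_job = {"cpu": 4, "mem_gb": 24}
three_resource_job = {"cpu": 4, "mem_gb": 24, "licenses": 1}
fits_two = feasible_nodes(two_resource_job, nodes)
fits_three = feasible_nodes(three_resource_job, nodes)
```

Adding a third resource to the job shrinks the feasible set, which is why a scheduler for N-resource systems has to consider all resource dimensions at once.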


Conclusion & Future Work

- Developed the MiniMax and DONAN scheduling algorithms
- Scheduler for Type C applications
- Integration with Globus
- Test functionality on a real Grid

University of texas at arlington school of social work

University of texas at arlington school of social work Arlington texas university ranking

Arlington texas university ranking Uta disciplinary probation

Uta disciplinary probation Ut arlington demographics

Ut arlington demographics Taper roller bearing advantages and disadvantages

Taper roller bearing advantages and disadvantages Red dot

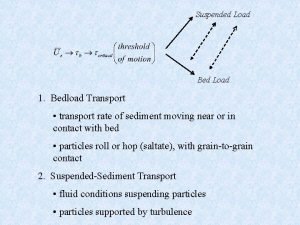

Red dot Bed load and suspended load transport

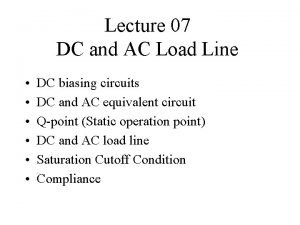

Bed load and suspended load transport Difference between ac and dc load line

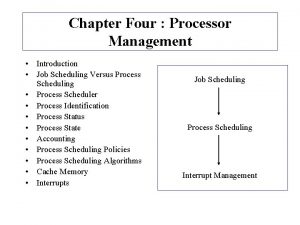

Difference between ac and dc load line Job scheduling vs process scheduling

Job scheduling vs process scheduling Evan stiles swimming

Evan stiles swimming St ann catholic church

St ann catholic church Missed connections arlington va

Missed connections arlington va