Targeted Marketing, KDD Cup and Customer Modeling

- Slides: 40


Outline § Direct Marketing § Review: Evaluation: Lift, Gains § KDD Cup 1997 § Lift and Benefit estimation § Privacy and Data Mining

Direct Marketing Paradigm § Find most likely prospects to contact § Not everybody needs to be contacted § Number of targets is usually much smaller than number of prospects § Typical Applications § retailers, catalogues, direct mail (and e-mail) § customer acquisition, cross-sell, attrition § ...

Direct Marketing Evaluation § Accuracy on the entire dataset is not the right measure § Approach § develop a target model § score all prospects and rank them by decreasing score § select top P% of prospects for action § Evaluate performance on top P% using Gains and Lift

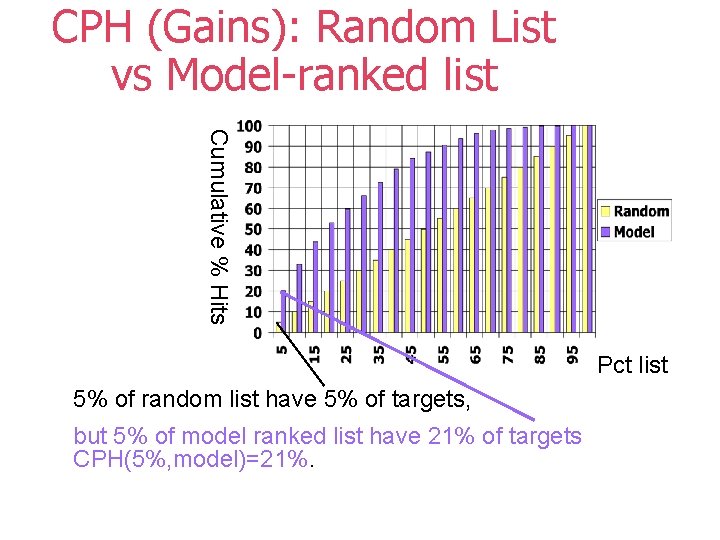

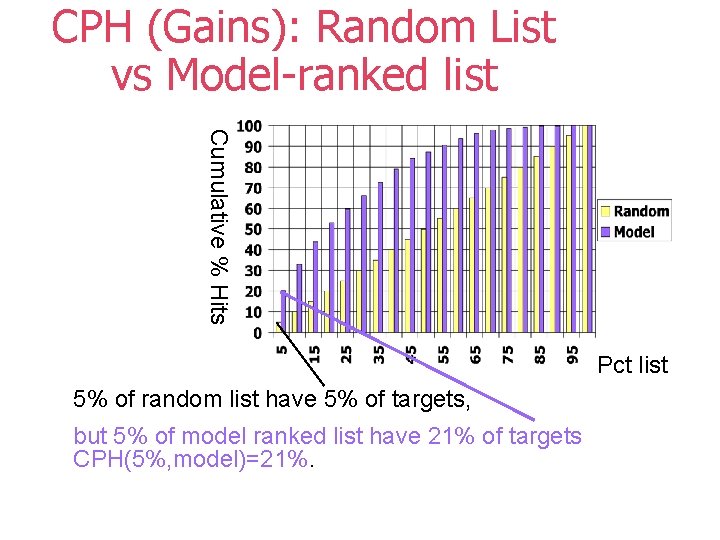

CPH (Gains): Random List vs Model-ranked List § Chart: Cumulative % Hits (CPH) vs Pct list § 5% of a random list has 5% of the targets, but 5% of the model-ranked list has 21% of the targets § CPH(5%, model) = 21%

Lift Curve § Lift(P) = CPH(P) / P, where P is the percent of the list § Lift (at 5%) = 21% / 5% = 4.2, i.e. 4.2 times better than random
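
The definitions of CPH and Lift above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the toy scores, and the 0/1 labels are hypothetical and do not come from the KDD Cup data.

```python
def cumulative_pct_hits(scores, labels, p):
    """CPH(p): fraction of all targets found in the top p of the list
    after ranking prospects by decreasing score (p is a fraction, 0 < p <= 1)."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    cutoff = max(1, round(p * len(ranked)))  # size of the top-p selection
    hits = sum(label for _, label in ranked[:cutoff])
    return hits / sum(labels)


def lift(scores, labels, p):
    """Lift(p) = CPH(p) / p."""
    return cumulative_pct_hits(scores, labels, p) / p


# Toy list of 20 prospects (hypothetical): 4 targets, 3 of them in the top 5.
toy_scores = list(range(20, 0, -1))
toy_labels = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

print(cumulative_pct_hits(toy_scores, toy_labels, 0.25))  # 0.75
print(lift(toy_scores, toy_labels, 0.25))                 # 3.0
```

A random ordering would give CPH(p) close to p and therefore a lift close to 1.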

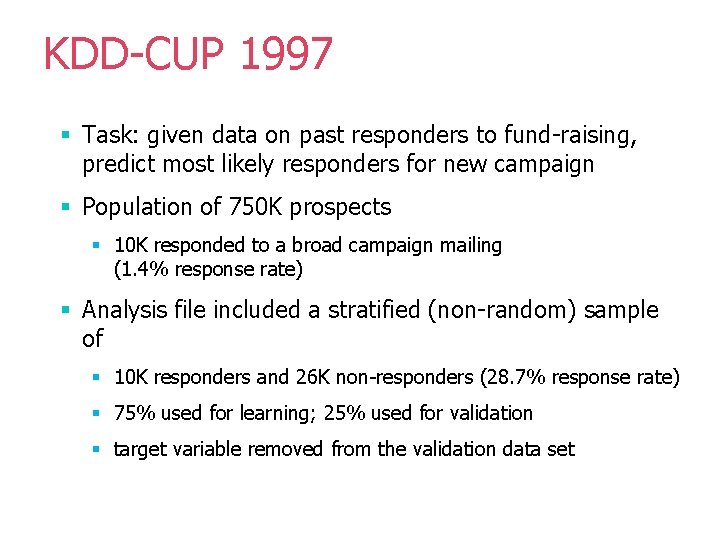

KDD-CUP 1997 § Task: given data on past responders to fund-raising, predict most likely responders for new campaign § Population of 750K prospects § 10K responded to a broad campaign mailing (1.4% response rate) § Analysis file included a stratified (non-random) sample of § 10K responders and 26K non-responders (28.7% response rate) § 75% used for learning; 25% used for validation § target variable removed from the validation data set

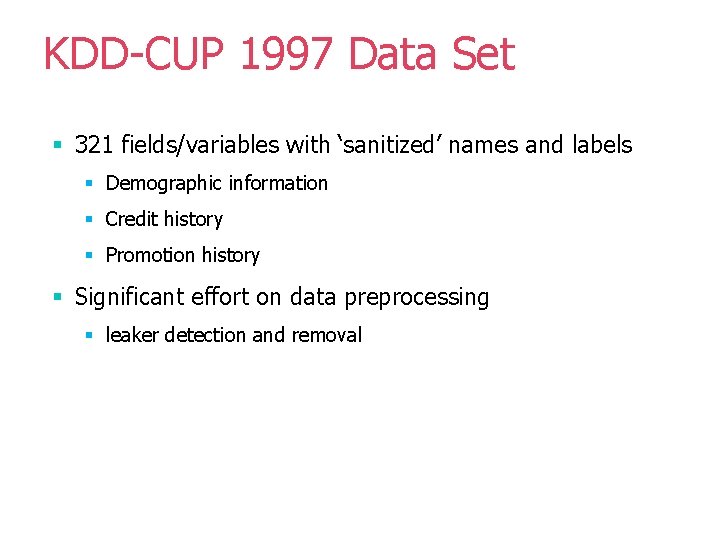

KDD-CUP 1997 Data Set § 321 fields/variables with ‘sanitized’ names and labels § Demographic information § Credit history § Promotion history § Significant effort on data preprocessing § leaker detection and removal

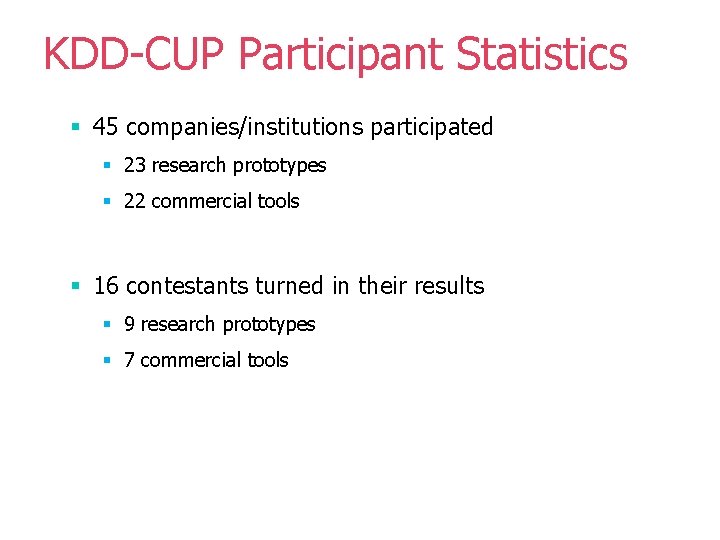

KDD-CUP Participant Statistics § 45 companies/institutions participated § 23 research prototypes § 22 commercial tools § 16 contestants turned in their results § 9 research prototypes § 7 commercial tools

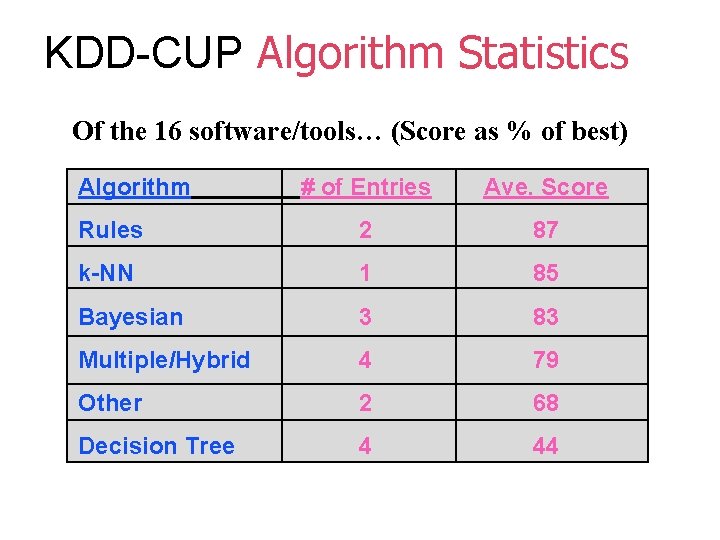

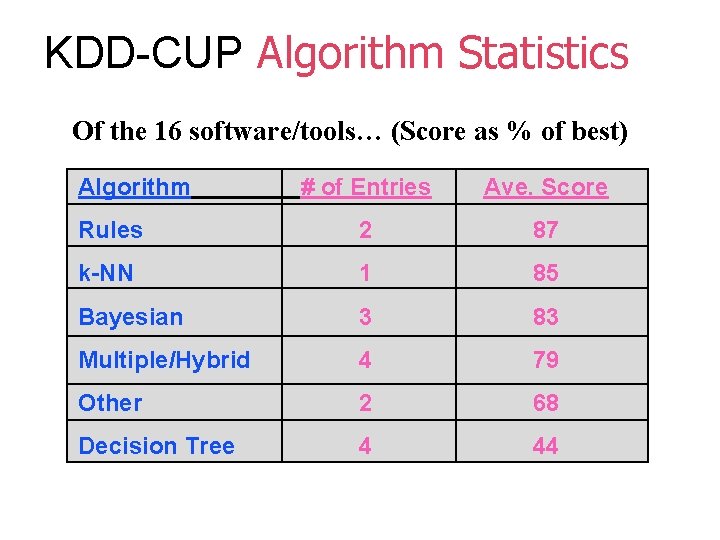

KDD-CUP Algorithm Statistics § Of the 16 software tools (score as % of best):

| Algorithm | # of Entries | Avg. Score |
|---|---|---|
| Rules | 2 | 87 |
| k-NN | 1 | 85 |
| Bayesian | 3 | 83 |
| Multiple/Hybrid | 4 | 79 |
| Other | 2 | 68 |
| Decision Tree | 4 | 44 |

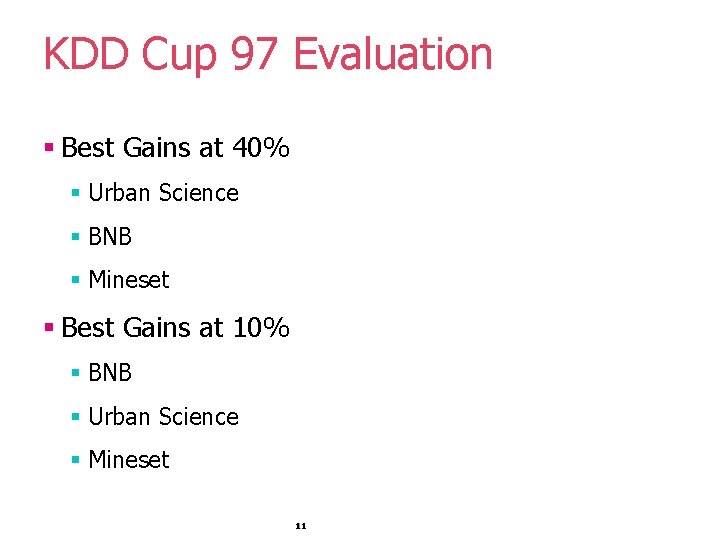

KDD Cup 97 Evaluation § Best Gains at 40% § Urban Science § BNB § MineSet § Best Gains at 10% § BNB § Urban Science § MineSet

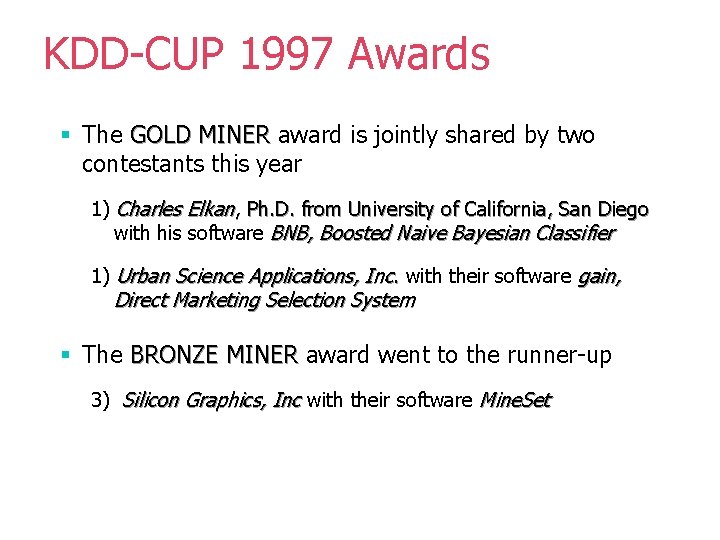

KDD-CUP 1997 Awards § The GOLD MINER award is jointly shared by two contestants this year § 1) Charles Elkan, Ph.D., from University of California, San Diego, with his software BNB, Boosted Naive Bayesian Classifier § 1) Urban Science Applications, Inc., with their software gain, Direct Marketing Selection System § The BRONZE MINER award went to the runner-up § 3) Silicon Graphics, Inc., with their software MineSet

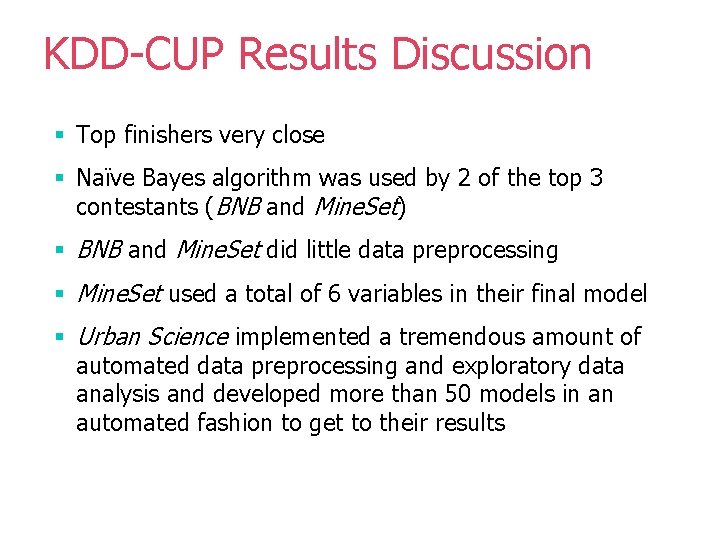

KDD-CUP Results Discussion § Top finishers very close § Naïve Bayes algorithm was used by 2 of the top 3 contestants (BNB and MineSet) § BNB and MineSet did little data preprocessing § MineSet used a total of 6 variables in their final model § Urban Science implemented a tremendous amount of automated data preprocessing and exploratory data analysis and developed more than 50 models in an automated fashion to get to their results

KDD Cup 1997: Top 3 results § Top 3 finishers are very close

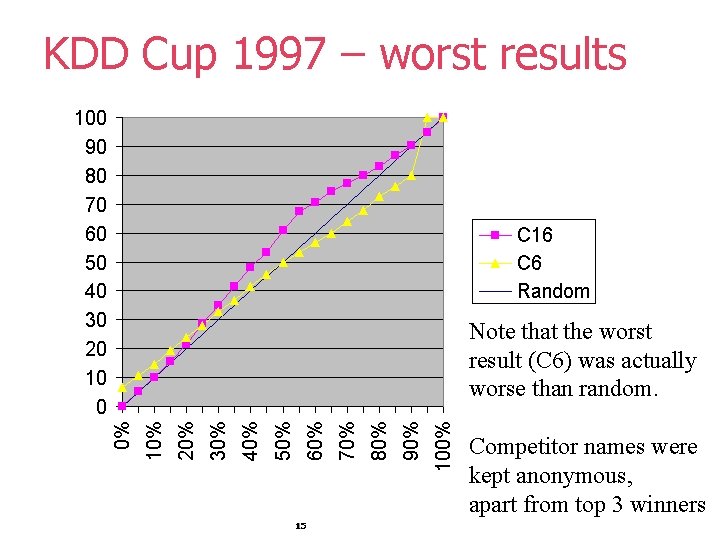

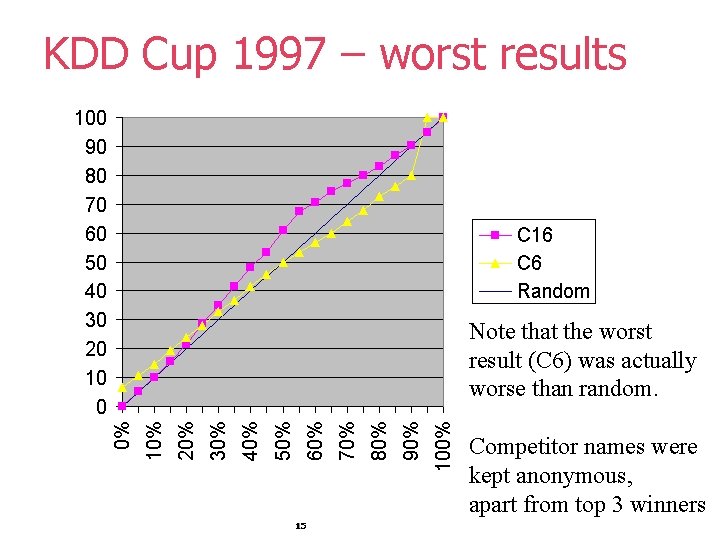

KDD Cup 1997: worst results § Note that the worst result (C6) was actually worse than random § Competitor names were kept anonymous, apart from the top 3 winners

Better Model Evaluation? § Comparing Gains at 10% and 40% is ad hoc § Are there more principled methods? § Area Under the Curve (AUC) of Gains Chart § Lift Quality § Ultimately, financial measures: Campaign Benefits

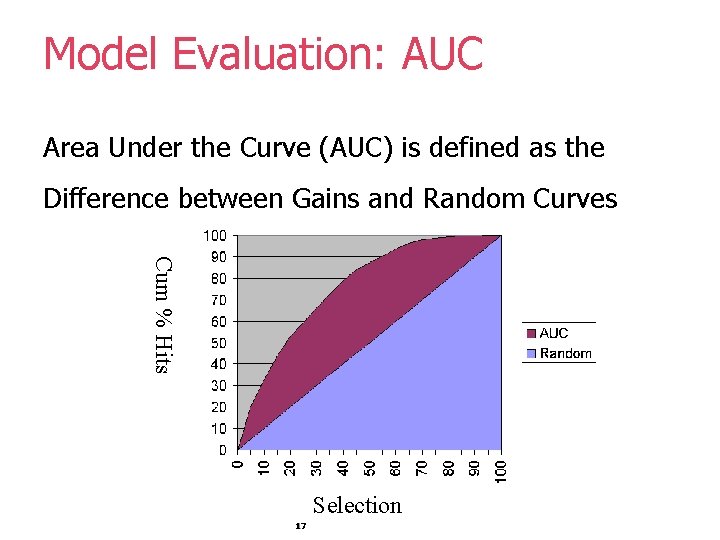

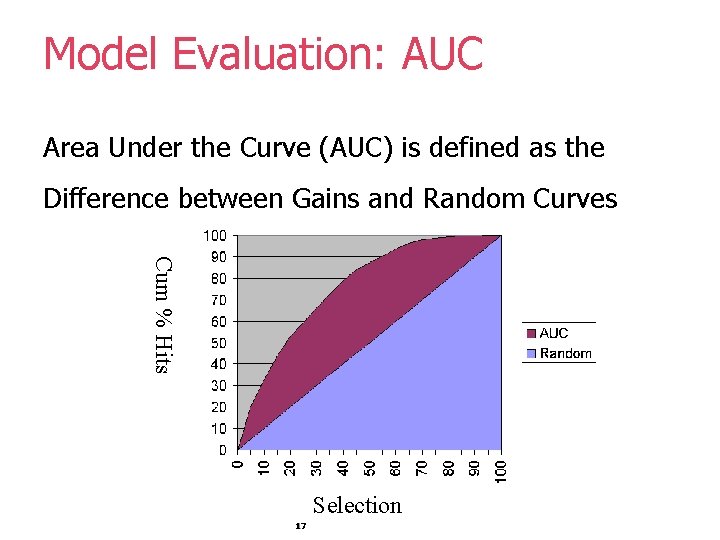

Model Evaluation: AUC § Area Under the Curve (AUC) is defined as the difference between the Gains and Random curves § Chart: Cum % Hits vs Selection

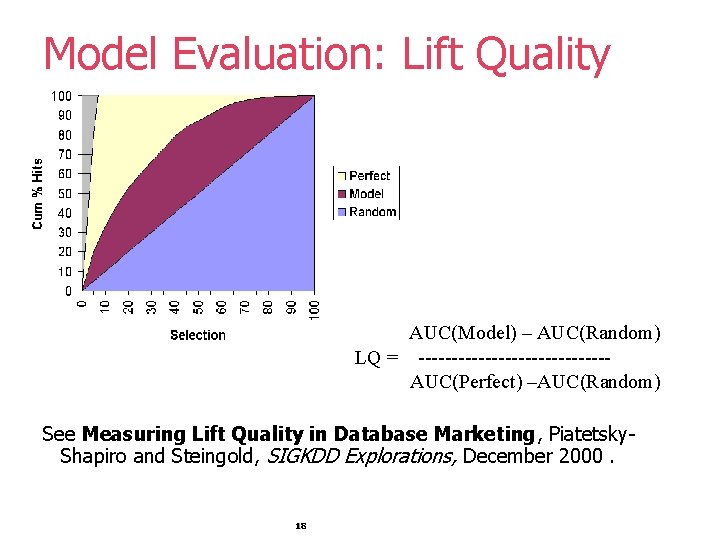

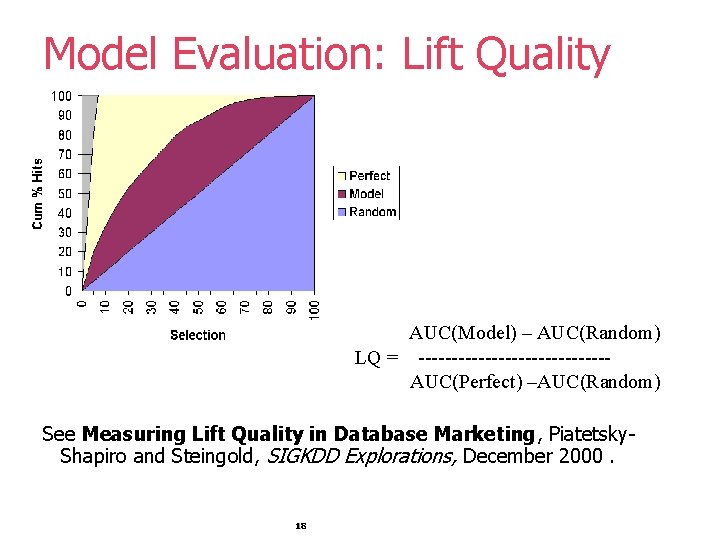

Model Evaluation: Lift Quality

$$LQ = \frac{\mathrm{AUC}(\text{Model}) - \mathrm{AUC}(\text{Random})}{\mathrm{AUC}(\text{Perfect}) - \mathrm{AUC}(\text{Random})}$$

See Measuring Lift Quality in Database Marketing, Piatetsky-Shapiro and Steingold, SIGKDD Explorations, December 2000.
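
The LQ ratio can be illustrated with a short Python sketch. It rests on several assumptions that are not in the slides: AUC is taken as the trapezoidal area under each gains curve, the random curve is the diagonal CPH(P) = P, and the perfect curve rises linearly to 100% at P = T and stays flat afterwards. The function names are hypothetical, and the cited paper may use a different discretization.

```python
def area_under_gains(points):
    """Trapezoidal area under a gains curve given as (P, CPH) pairs,
    with both values expressed as fractions in [0, 1]."""
    pts = sorted(points)
    area = 0.0
    for (p0, c0), (p1, c1) in zip(pts, pts[1:]):
        area += (p1 - p0) * (c0 + c1) / 2
    return area


def lift_quality(points, target_rate):
    """LQ = (AUC(Model) - AUC(Random)) / (AUC(Perfect) - AUC(Random))."""
    auc_model = area_under_gains(points)
    auc_random = 0.5                     # area under the diagonal CPH(P) = P
    auc_perfect = 1.0 - target_rate / 2  # all targets found within the first T
    return (auc_model - auc_random) / (auc_perfect - auc_random)


# Sanity checks on two reference curves (target rate 20%, chosen for illustration).
random_curve = [(0.0, 0.0), (1.0, 1.0)]
perfect_curve = [(0.0, 0.0), (0.2, 1.0), (1.0, 1.0)]
print(lift_quality(random_curve, 0.2))
print(lift_quality(perfect_curve, 0.2))
```

Under these assumptions a random ordering scores 0 and a perfect ordering scores 1 (100%), which matches the two reference points the measure is built around.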

Lift Quality (Lquality) § For a perfect model, Lquality = 100% § For a random model, Lquality = 0 § For KDD Cup 97, § Lquality(Urban Science) = 43.3% § Lquality(Elkan) = 42.7% § However, small differences in Lquality are not significant

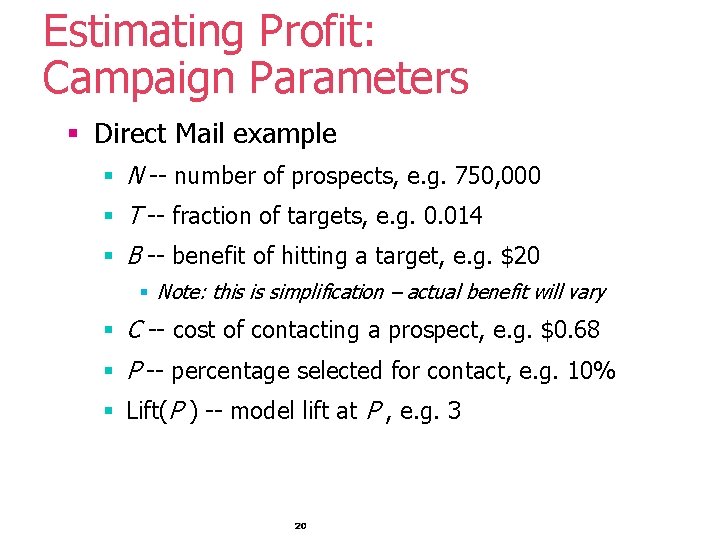

Estimating Profit: Campaign Parameters § Direct Mail example § N -- number of prospects, e.g. 750,000 § T -- fraction of targets, e.g. 0.014 § B -- benefit of hitting a target, e.g. $20 § Note: this is a simplification; actual benefit will vary § C -- cost of contacting a prospect, e.g. $0.68 § P -- percentage selected for contact, e.g. 10% § Lift(P) -- model lift at P, e.g. 3

Contacting Top P of Model-Sorted List § Using previous example, let selection be P = 10% and Lift(P) = 3 § Selection size = N P, e.g. 75,000 § Random has N P T targets in first P of the list, e.g. 1,050 § Q: How many targets are in the model P-selection? § Model has more by a factor Lift(P), or N P T Lift(P) targets in the selection, e.g. 3,150 § Benefit of contacting the selection is N P T Lift(P) B, e.g. $63,000 § Cost of contacting N P is N P C, e.g. $51,000

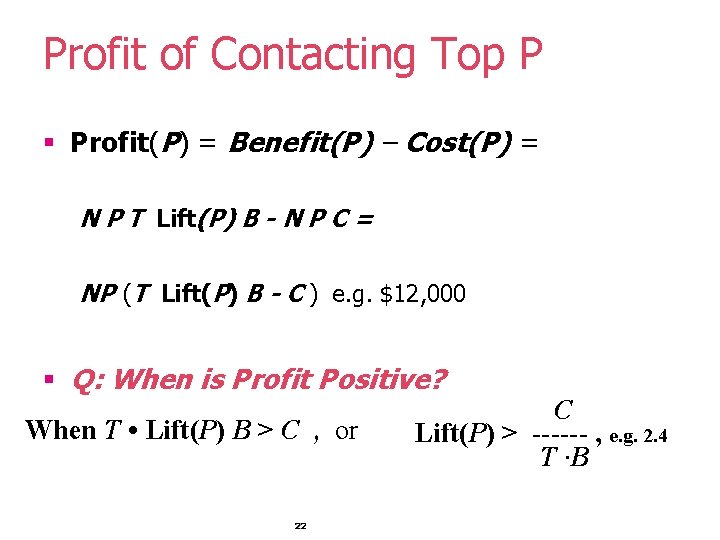

Profit of Contacting Top P

$$\mathrm{Profit}(P) = \mathrm{Benefit}(P) - \mathrm{Cost}(P) = N P\,T\,\mathrm{Lift}(P)\,B - N P\,C = N P\,\bigl(T\,\mathrm{Lift}(P)\,B - C\bigr)$$

e.g. \$12,000 § Q: When is profit positive? When $T \cdot \mathrm{Lift}(P) \cdot B > C$, or

$$\mathrm{Lift}(P) > \frac{C}{T \cdot B}$$

e.g. 2.4
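
The direct-mail arithmetic can be reproduced with a small Python sketch. The parameter values are the ones given in the example (N = 750,000, T = 0.014, B = $20, C = $0.68, P = 10%, Lift(P) = 3); the function names are hypothetical.

```python
def campaign_profit(n, t, benefit, cost, p, lift_p):
    """Profit(P) = N*P*T*Lift(P)*B - N*P*C."""
    selected = n * p                     # selection size N*P
    targets_hit = selected * t * lift_p  # targets in the model's top P
    return targets_hit * benefit - selected * cost


def break_even_lift(t, benefit, cost):
    """Smallest lift at which the campaign stops losing money: C / (T*B)."""
    return cost / (t * benefit)


profit = campaign_profit(n=750_000, t=0.014, benefit=20.0, cost=0.68, p=0.10, lift_p=3.0)
print(round(profit))                                   # 12000
print(round(break_even_lift(0.014, 20.0, 0.68), 2))    # 2.43
```

The break-even value of about 2.43 is what the example rounds to 2.4.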

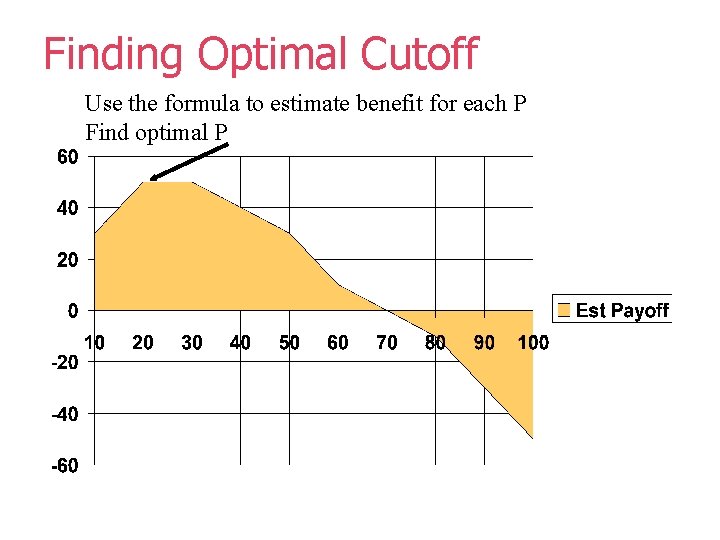

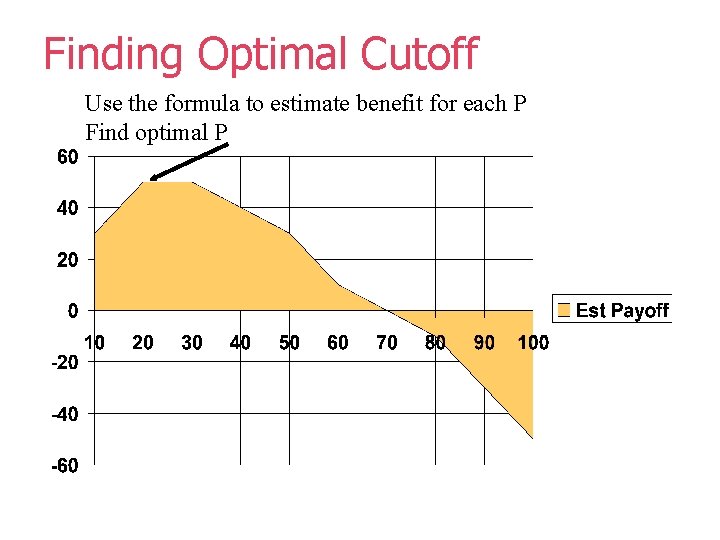

Finding Optimal Cutoff § Use the formula to estimate benefit for each P § Find optimal P
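
A minimal sketch of this search, assuming the lift curve is available as a list of (P, Lift(P)) pairs. The lift values below are hypothetical and chosen only for illustration; the campaign parameters reuse the direct-mail example. The function names are not from the slides.

```python
def profit(n, t, benefit, cost, p, lift_p):
    """Profit(P) = N*P*(T*Lift(P)*B - C)."""
    return n * p * (t * lift_p * benefit - cost)


def best_cutoff(lift_curve, n, t, benefit, cost):
    """Return (P, profit) for the most profitable cutoff in lift_curve."""
    return max(
        ((p, profit(n, t, benefit, cost, p, lift_p)) for p, lift_p in lift_curve),
        key=lambda pair: pair[1],
    )


# Hypothetical lift curve: (P, Lift(P)) pairs, decreasing in P.
illustrative_curve = [(0.01, 6.0), (0.02, 5.5), (0.05, 4.2), (0.10, 3.0), (0.20, 2.2)]

best_p, best_profit = best_cutoff(illustrative_curve, n=750_000, t=0.014, benefit=20.0, cost=0.68)
print(best_p, round(best_profit))  # 0.05 18600
```

With these made-up lift values the profit peaks at P = 5%: smaller selections leave profitable prospects uncontacted, while larger ones add prospects whose expected benefit no longer covers the contact cost.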

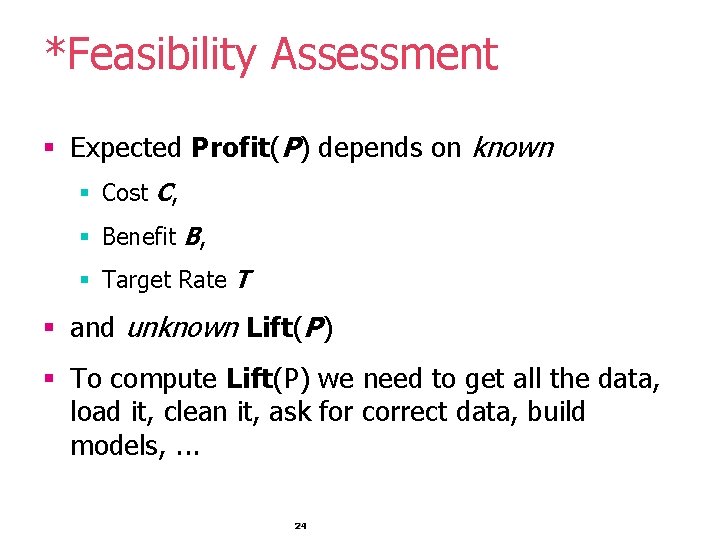

*Feasibility Assessment § Expected Profit(P) depends on known § Cost C, § Benefit B, § Target Rate T § and unknown Lift(P) § To compute Lift(P) we need to get all the data, load it, clean it, ask for correct data, build models, ...

*Can Expected Lift Be Estimated? § Only from N and T? § In theory, no; but in many practical applications, surprisingly, yes

*Empirical Observations about Lift § For good models, Lift(P) is usually monotonically decreasing with P § Lift at fixed P (e.g. 0.05) is usually higher for lower T § Special point P = T § for a perfect predictor, all targets are in the first T of the list, for a maximum lift of 1/T § What can we expect compared to 1/T?

*Meta Analysis of Lift § 26 attrition & cross-sell problems from finance and telecom domains § N ranges from 1,000 to 150,000 § T ranges from 1% to 22% § No clear relation to N, but there is dependence on T

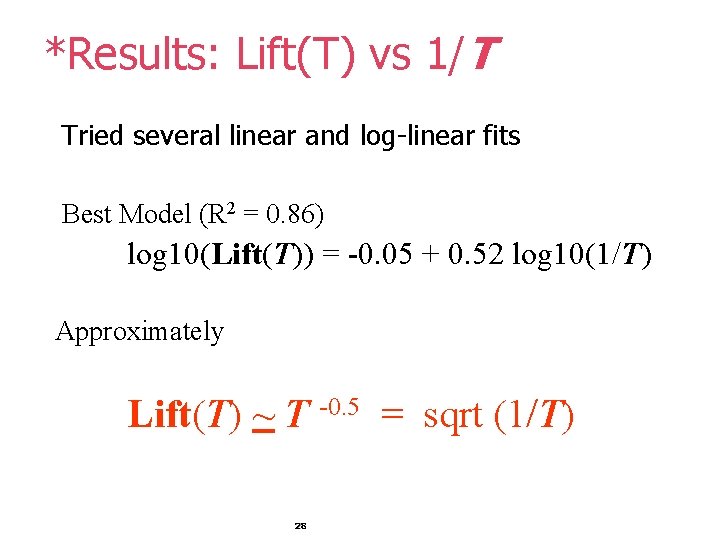

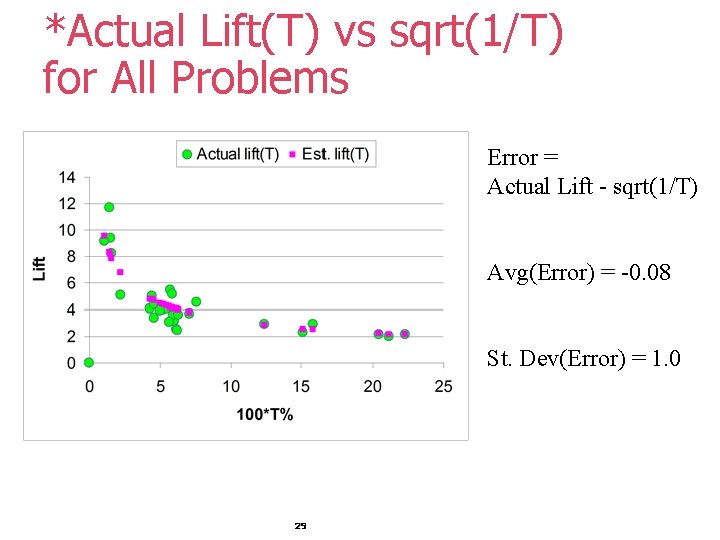

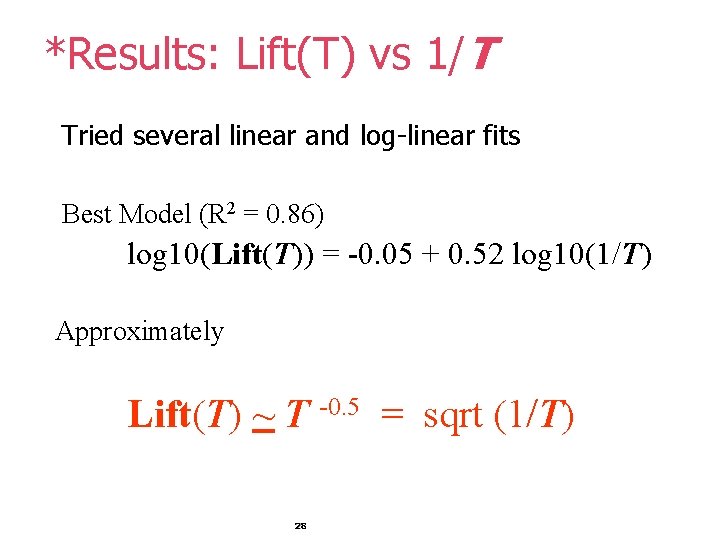

*Results: Lift(T) vs 1/T § Tried several linear and log-linear fits § Best model ($R^2 = 0.86$): $\log_{10}(\mathrm{Lift}(T)) = -0.05 + 0.52 \log_{10}(1/T)$ § Approximately $\mathrm{Lift}(T) \sim T^{-0.5} = \sqrt{1/T}$
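
To see how close the fitted regression and the square-root approximation are, the two formulas can be evaluated side by side. This is a small sketch; the function names are hypothetical and the target rates are picked only for illustration.

```python
import math


def lift_fitted(t):
    """Fitted model: log10(Lift(T)) = -0.05 + 0.52 * log10(1/T)."""
    return 10 ** (-0.05 + 0.52 * math.log10(1 / t))


def lift_sqrt(t):
    """Approximation: Lift(T) ~ sqrt(1/T)."""
    return math.sqrt(1 / t)


for t in (0.01, 0.05, 0.10, 0.20):
    print(f"T={t:.2f}  fitted={lift_fitted(t):.2f}  sqrt(1/T)={lift_sqrt(t):.2f}")
```

Over this range of target rates the two curves stay within a few tenths of a lift unit of each other, which is why the simpler square-root form is a convenient stand-in.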

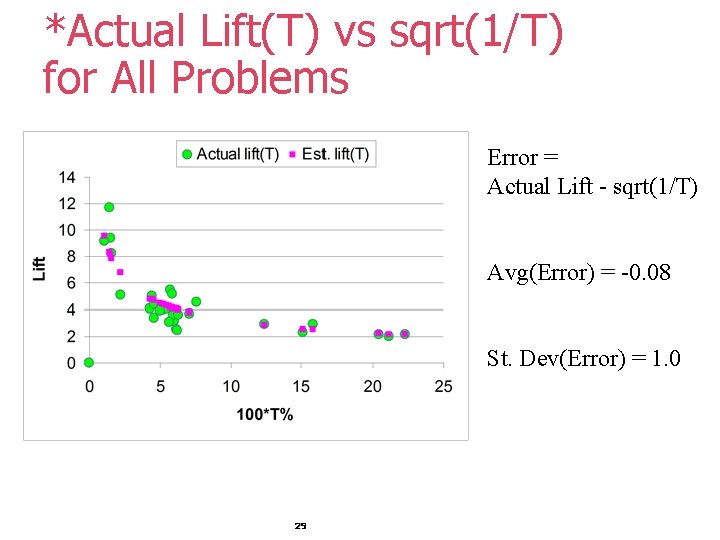

*Actual Lift(T) vs sqrt(1/T) for All Problems § Error = Actual Lift - sqrt(1/T) § Avg(Error) = -0.08 § St. Dev(Error) = 1.0

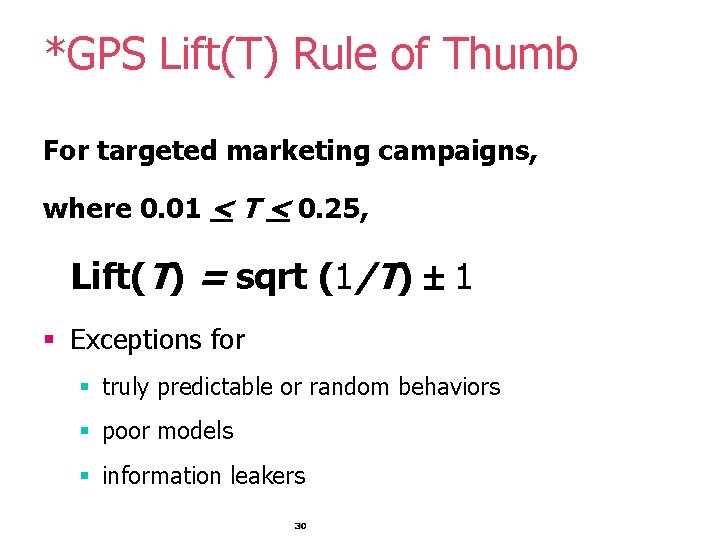

*GPS Lift(T) Rule of Thumb § For targeted marketing campaigns, where $0.01 < T < 0.25$, $\mathrm{Lift}(T) = \sqrt{1/T} \pm 1$ § Exceptions for § truly predictable or random behaviors § poor models § information leakers

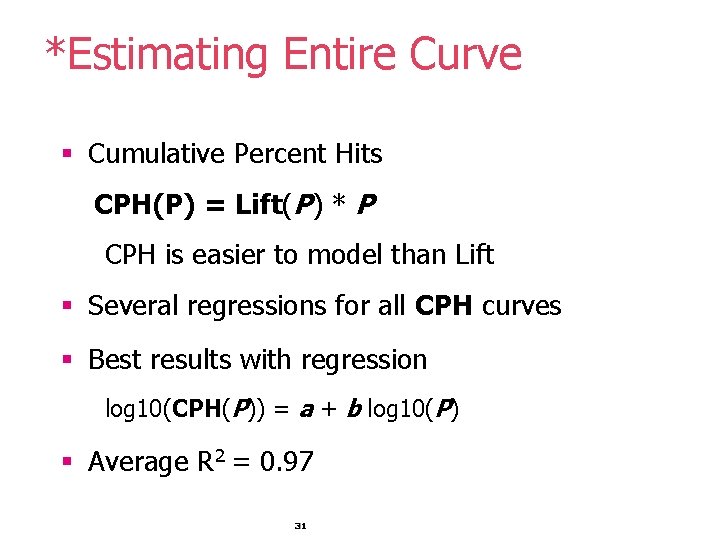

*Estimating Entire Curve § Cumulative Percent Hits: CPH(P) = Lift(P) * P § CPH is easier to model than Lift § Several regressions for all CPH curves § Best results with regression $\log_{10}(\mathrm{CPH}(P)) = a + b \log_{10}(P)$ § Average $R^2 = 0.97$
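
A regression of this form can be fitted with ordinary least squares in log-log space. The sketch below uses only the standard library; the function name is hypothetical, and the sample points are synthetic (generated from CPH(P) = sqrt(P)), not data from the study.

```python
import math


def fit_loglog(points):
    """Least-squares fit of log10(CPH) = a + b * log10(P).
    points: (P, CPH) pairs with P > 0 and CPH > 0. Returns (a, b)."""
    xs = [math.log10(p) for p, _ in points]
    ys = [math.log10(c) for _, c in points]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


# Synthetic gains curve following CPH(P) = sqrt(P) exactly.
synthetic = [(p, math.sqrt(p)) for p in (0.05, 0.10, 0.20, 0.50, 1.00)]
a, b = fit_loglog(synthetic)
print(round(a, 6), round(b, 6))
```

On the synthetic curve the fit recovers an intercept near 0 and a slope near 0.5; on real gains curves the slope b plays the role of the exponent in CPH(P) ~ P^b.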

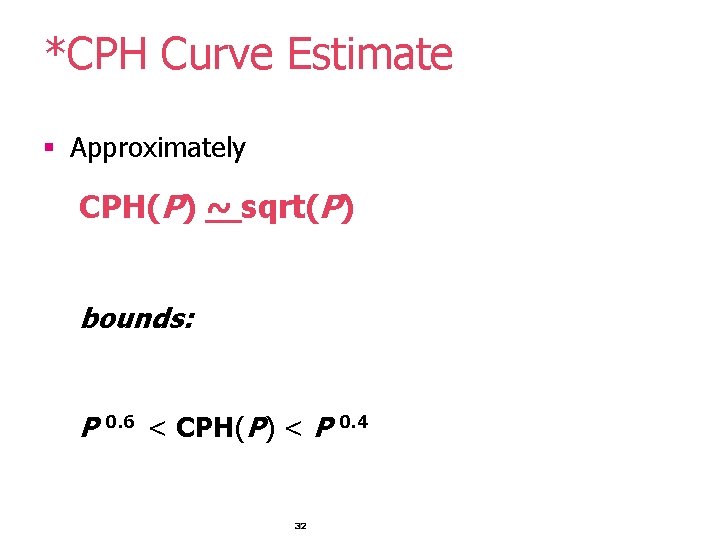

*CPH Curve Estimate § Approximately $\mathrm{CPH}(P) \sim \sqrt{P}$ § bounds: $P^{0.6} < \mathrm{CPH}(P) < P^{0.4}$

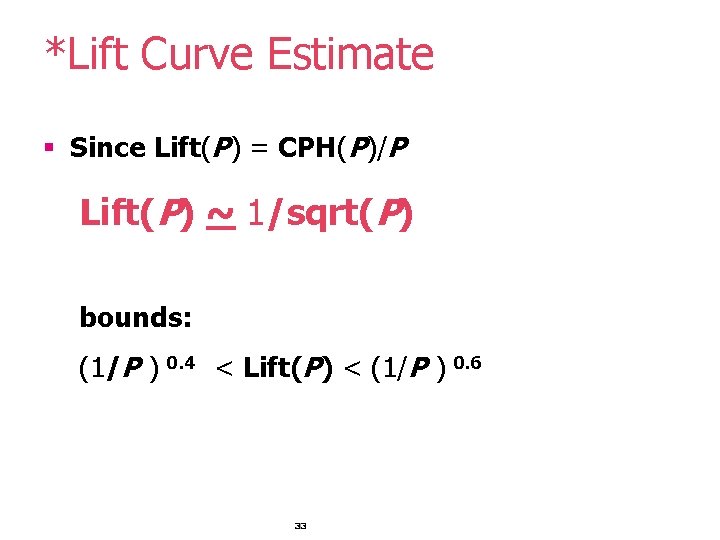

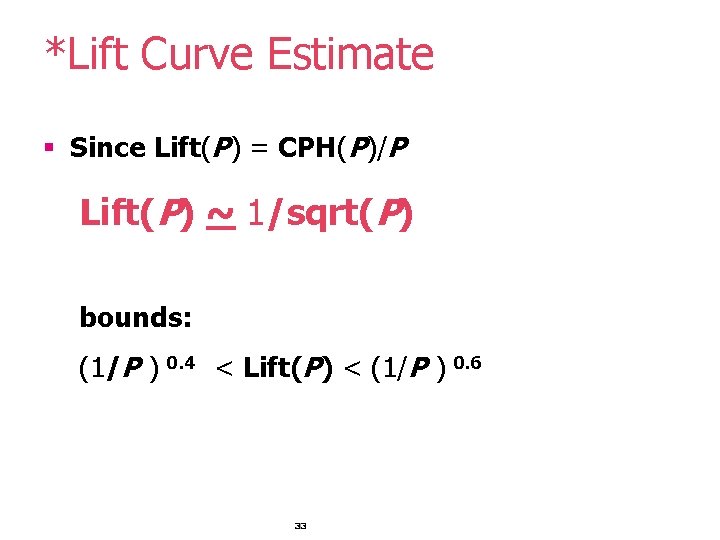

*Lift Curve Estimate § Since $\mathrm{Lift}(P) = \mathrm{CPH}(P)/P$, $\mathrm{Lift}(P) \sim 1/\sqrt{P}$ § bounds: $(1/P)^{0.4} < \mathrm{Lift}(P) < (1/P)^{0.6}$
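
The estimate and its bounds are easy to tabulate for a given cutoff. The following sketch uses hypothetical function names and simply evaluates the three exponents (0.6, 0.5, 0.4) for CPH, then divides by P to get the corresponding lift band.

```python
def cph_band(p):
    """Lower bound, central estimate, and upper bound for CPH(P), 0 < P < 1."""
    return {"low": p ** 0.6, "mid": p ** 0.5, "high": p ** 0.4}


def lift_band(p):
    """Corresponding band for Lift(P) = CPH(P) / P."""
    return {name: value / p for name, value in cph_band(p).items()}


band = lift_band(0.10)
print({name: round(value, 2) for name, value in band.items()})
```

At P = 10% this gives a central lift estimate of about 3.16, with bounds of roughly 2.5 and 4.0.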

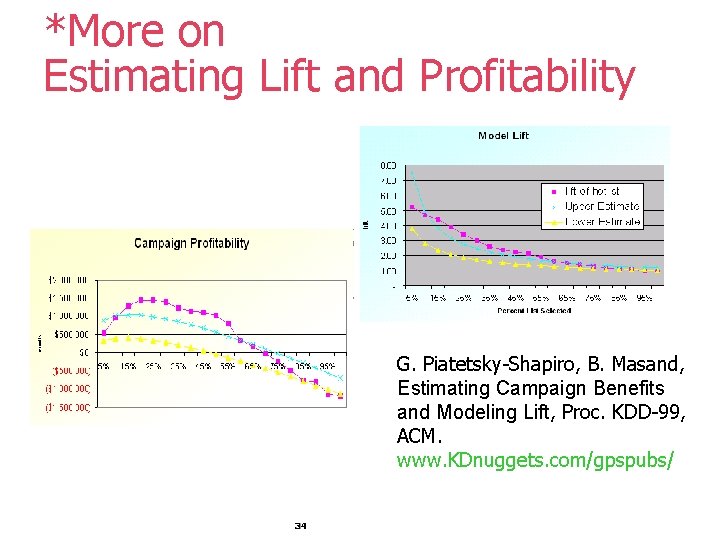

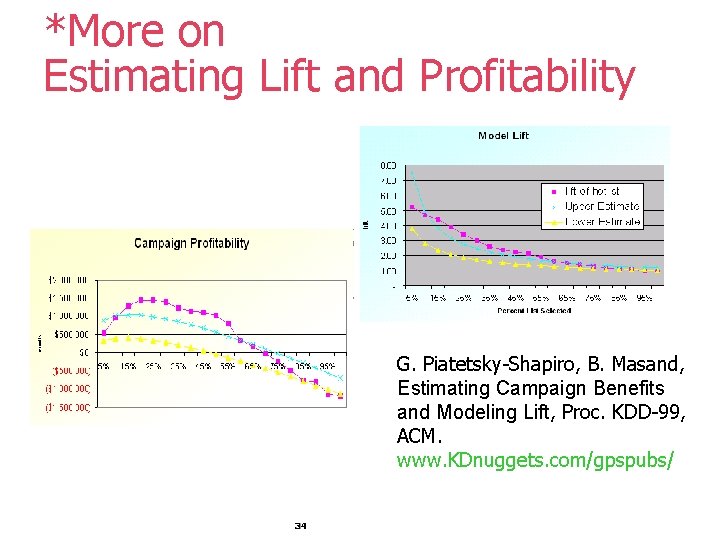

*More on Estimating Lift and Profitability § G. Piatetsky-Shapiro, B. Masand, Estimating Campaign Benefits and Modeling Lift, Proc. KDD-99, ACM. § www.KDnuggets.com/gpspubs/

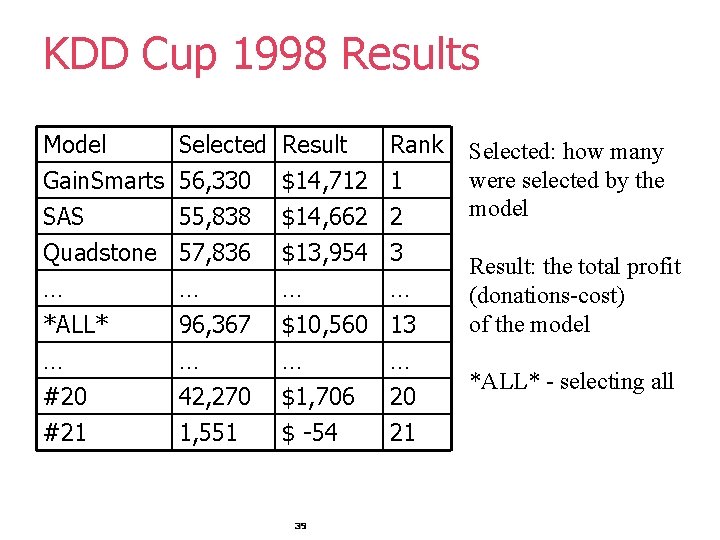

KDD Cup 1998 § Data from Paralyzed Veterans of America (charity) § Goal: select mailing with the highest profit § Winners: Urban Science, SAS, Quadstone § see full results and winners' presentations at www.kdnuggets.com/meetings/kdd98

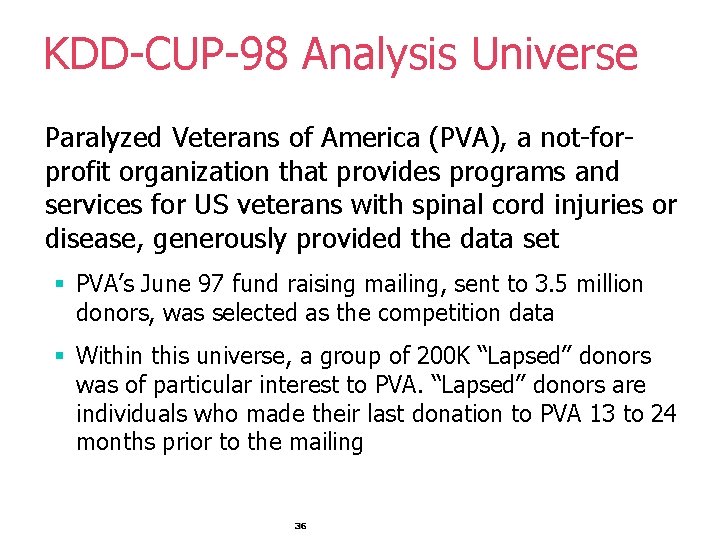

KDD-CUP-98 Analysis Universe § Paralyzed Veterans of America (PVA), a not-for-profit organization that provides programs and services for US veterans with spinal cord injuries or disease, generously provided the data set § PVA's June 97 fund raising mailing, sent to 3.5 million donors, was selected as the competition data § Within this universe, a group of 200K "Lapsed" donors was of particular interest to PVA. "Lapsed" donors are individuals who made their last donation to PVA 13 to 24 months prior to the mailing

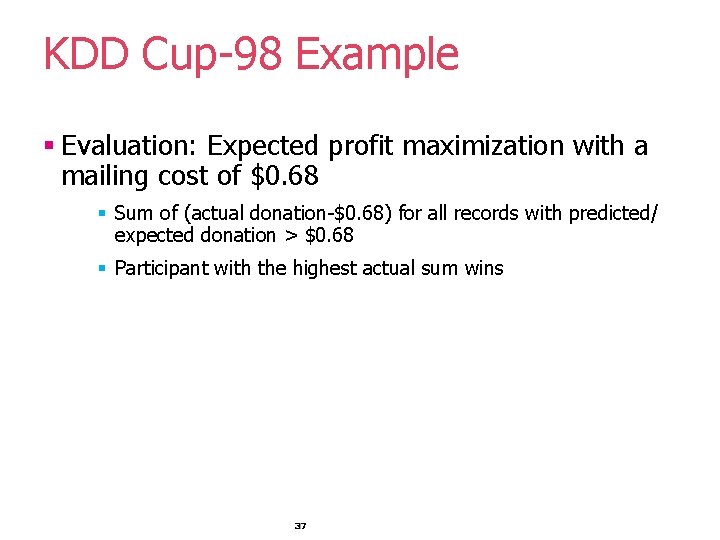

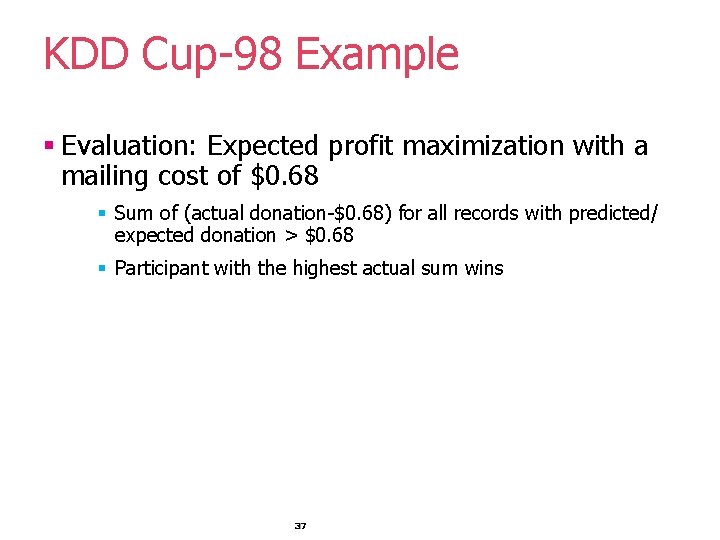

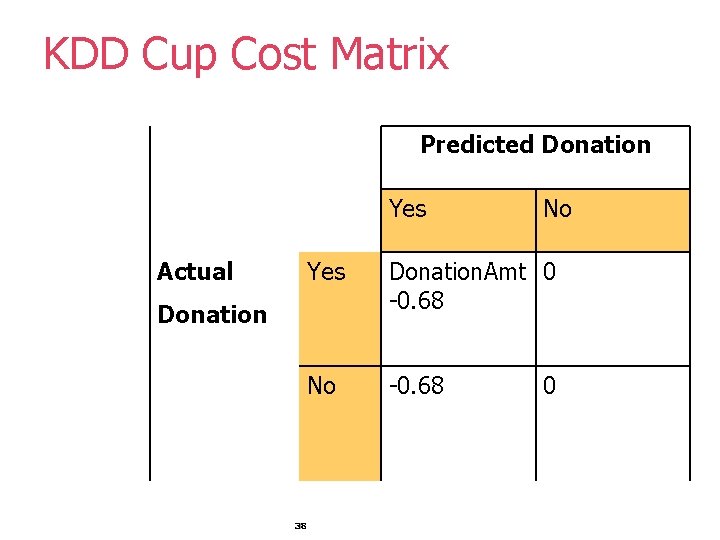

KDD Cup-98 Example § Evaluation: Expected profit maximization with a mailing cost of $0.68 § Sum of (actual donation - $0.68) for all records with predicted/expected donation > $0.68 § Participant with the highest actual sum wins

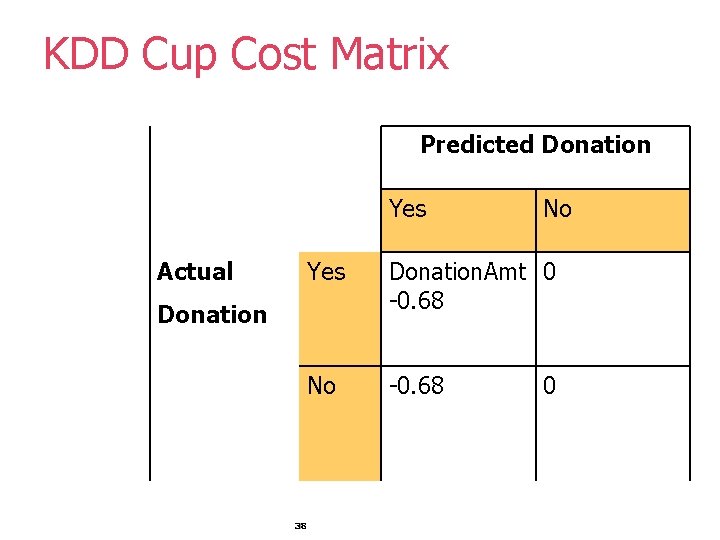

KDD Cup Cost Matrix

| | Predicted Donation: Yes | Predicted Donation: No |
|---|---|---|
| Actual Donation: Yes | DonationAmt - 0.68 | 0 |
| Actual Donation: No | -0.68 | 0 |
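
The KDD Cup 98 scoring rule (mail every record whose predicted donation exceeds the $0.68 mailing cost, then sum actual donation minus cost over the mailed records) can be sketched as follows. The function name and the four-record example are hypothetical.

```python
MAILING_COST = 0.68  # cost per mailed piece, in dollars


def campaign_score(predicted, actual, cost=MAILING_COST):
    """Sum of (actual donation - cost) over records whose predicted
    donation exceeds the mailing cost; unmailed records contribute 0."""
    return sum(a - cost for pred, a in zip(predicted, actual) if pred > cost)


# Four hypothetical records: three are mailed, only the first one donates.
predicted = [1.00, 0.50, 2.00, 0.90]
actual = [10.00, 5.00, 0.00, 0.00]
print(round(campaign_score(predicted, actual), 2))  # 7.96
```

The second record shows the cost of a missed donor: its $5 donation is not counted because the model's prediction fell below the mailing cost, which corresponds to the 0 in the "Predicted: No" column.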

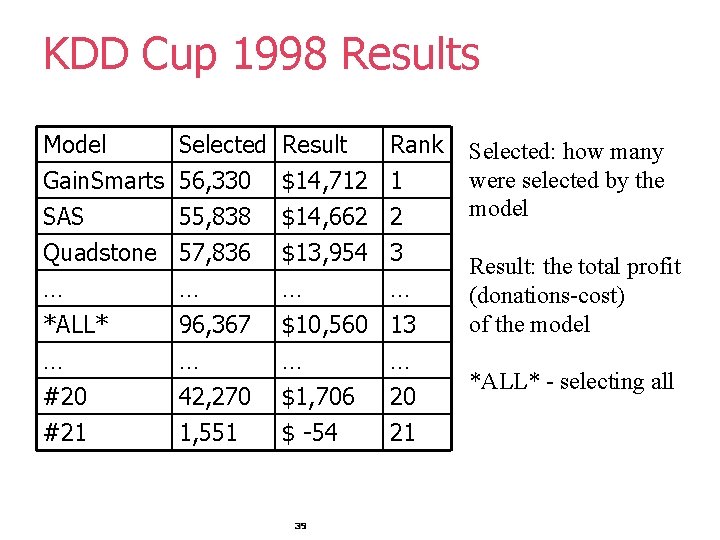

KDD Cup 1998 Results

| Model | Selected | Result | Rank |
|---|---|---|---|
| GainSmarts | 56,330 | $14,712 | 1 |
| SAS | 55,838 | $14,662 | 2 |
| Quadstone | 57,836 | $13,954 | 3 |
| … | … | … | … |
| \*ALL\* | 96,367 | $10,560 | 13 |
| … | … | … | … |
| #20 | 42,270 | $1,706 | 20 |
| #21 | 1,551 | $-54 | 21 |

Selected: how many were selected by the model § Result: the total profit (donations minus cost) of the model § \*ALL\*: selecting all

Summary § KDD Cup 1997 case study § Model Evaluation: AUC and Lift Quality § Estimating Campaign Profit § *Feasibility Assessment § GPS Rule of Thumb for Typical Lift Curve § KDD Cup 1998
