Recognition with Expression Variations Pattern Recognition Theory Spring

- Slides: 16

Recognition with Expression Variations

Pattern Recognition Theory – Spring 2003

Prof. Vijayakumar Bhagavatula

Derek Hoiem, Tal Blum

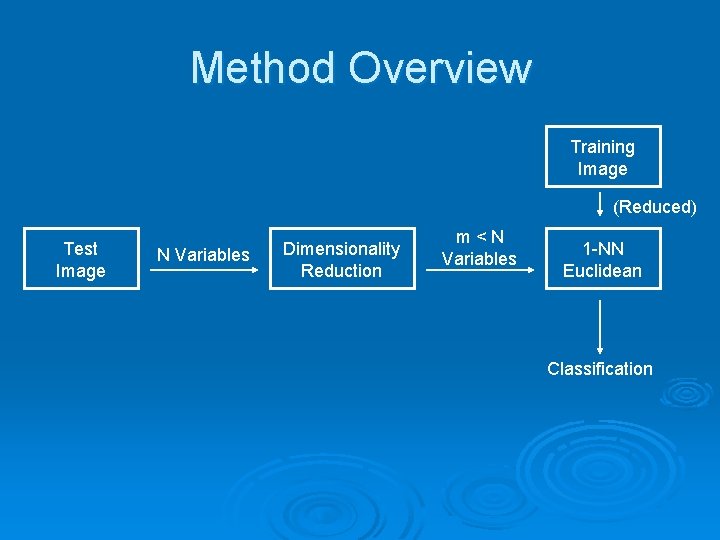

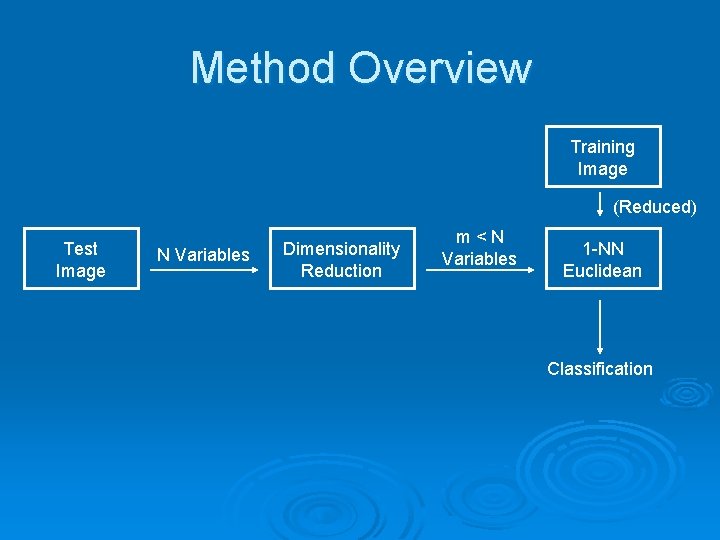

Method Overview

- Training image (stored in reduced form) and test image: N variables each
- Dimensionality reduction: m < N variables
- Classification: 1-NN with Euclidean distance
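The final classification stage can be sketched in a few lines. This is a minimal illustration, assuming the images have already been reduced to m-dimensional feature vectors; the function name `nearest_neighbor_label` and the toy data are hypothetical and not taken from the slides.

```python
import math

def nearest_neighbor_label(train_vectors, train_labels, query):
    """Return the label of the training vector closest to `query` (1-NN, Euclidean)."""
    best_label, best_dist = None, math.inf
    for vec, label in zip(train_vectors, train_labels):
        dist = math.dist(vec, query)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label

# Toy reduced features (m = 2) for two hypothetical subjects.
train = [(0.0, 0.1), (0.2, -0.1), (3.0, 3.1), (2.9, 2.8)]
labels = ["subject_a", "subject_a", "subject_b", "subject_b"]
print(nearest_neighbor_label(train, labels, (2.5, 2.7)))
```

Because the comparison happens in the reduced space, the cost per test image depends on m rather than on the original number of pixels.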

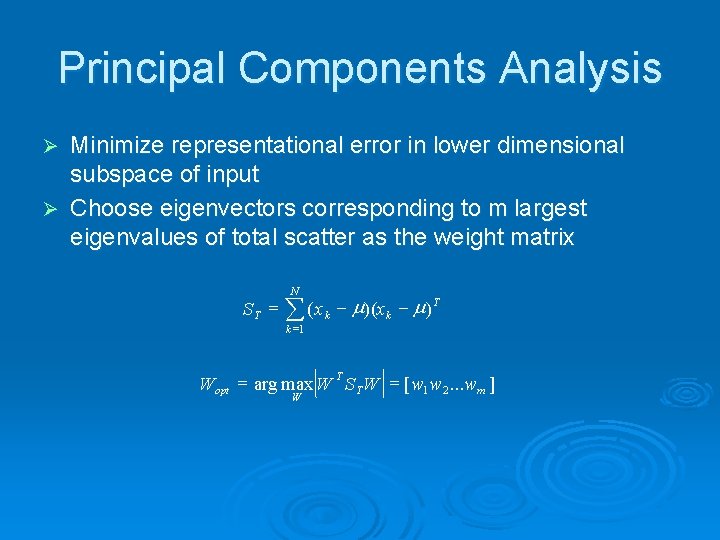

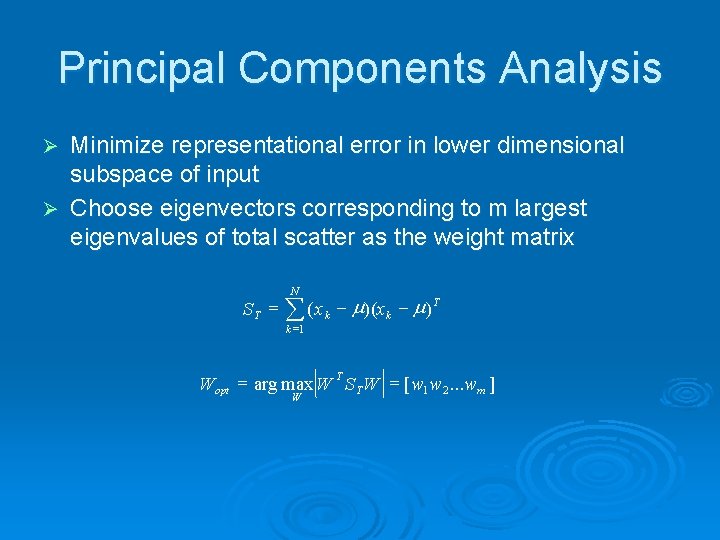

Principal Components Analysis

- Minimize representational error in a lower-dimensional subspace of the input
- Choose the eigenvectors corresponding to the m largest eigenvalues of the total scatter as the weight matrix

$$S_T = \sum_{k=1}^{N} (x_k - \mu)(x_k - \mu)^T$$

$$W_{opt} = \arg\max_W \left| W^T S_T W \right| = [\, w_1 \;\; w_2 \;\; \ldots \;\; w_m \,]$$
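The eigenvector selection can be sketched with NumPy as follows. This is an illustrative sketch, not the authors' Matlab code: the helper name `pca_weights`, the row-per-sample layout, and the synthetic data are all assumptions.

```python
import numpy as np

def pca_weights(X, m):
    """Rows of X are samples. Returns (mean, W) where W has shape (m, D) and
    holds, as rows, the eigenvectors of the total scatter with the m largest
    eigenvalues."""
    mu = X.mean(axis=0)
    centered = X - mu
    scatter = centered.T @ centered              # S_T = sum_k (x_k - mu)(x_k - mu)^T
    eigvals, eigvecs = np.linalg.eigh(scatter)   # symmetric input, ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:m]        # indices of the m largest eigenvalues
    return mu, eigvecs[:, order].T

# Synthetic data with most of its variance along the first axis.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3)) * np.array([5.0, 1.0, 0.2])
mu, W = pca_weights(X, m=2)
Y = (X - mu) @ W.T                               # projected samples, shape (200, 2)
print(W.shape, Y.shape)
```

For real images the dimensionality usually far exceeds the number of samples, so forming the full scatter matrix as done here may be impractical; the sketch only illustrates the selection rule.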

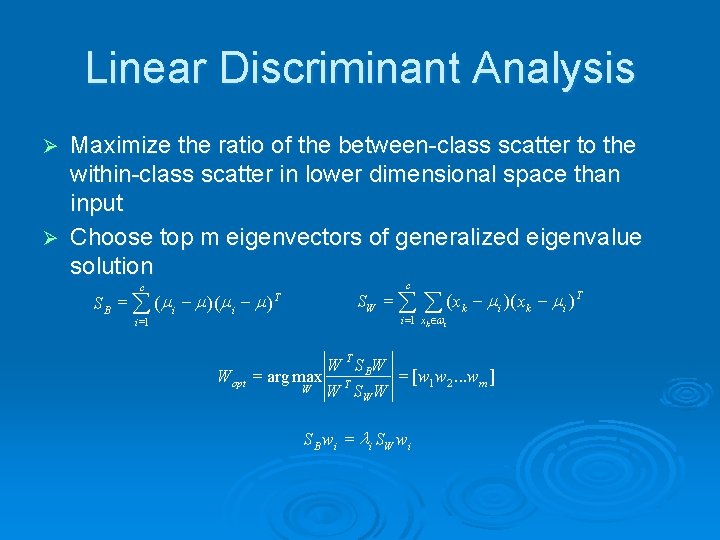

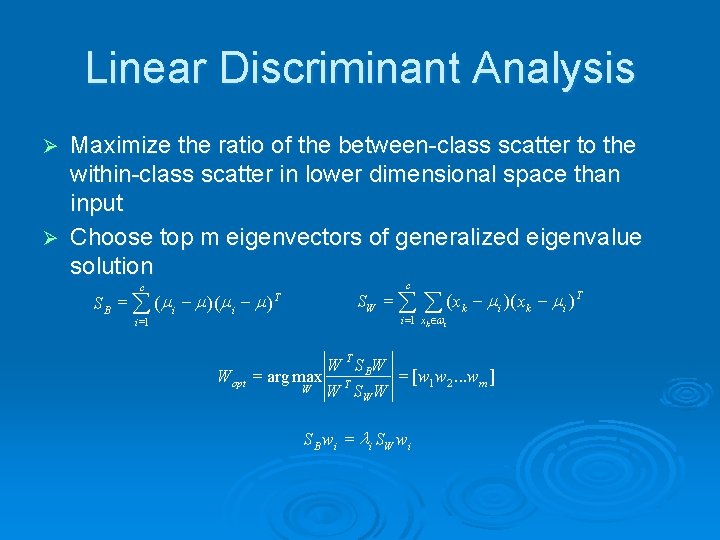

Linear Discriminant Analysis

- Maximize the ratio of the between-class scatter to the within-class scatter in a lower-dimensional space than the input
- Choose the top m eigenvectors of the generalized eigenvalue solution

$$S_B = \sum_{i=1}^{c} (\mu_i - \mu)(\mu_i - \mu)^T$$

$$S_W = \sum_{i=1}^{c} \sum_{x_k \in \omega_i} (x_k - \mu_i)(x_k - \mu_i)^T$$

$$W_{opt} = \arg\max_W \frac{\left| W^T S_B W \right|}{\left| W^T S_W W \right|} = [\, w_1 \;\; w_2 \;\; \ldots \;\; w_m \,]$$

$$S_B w_i = \lambda_i S_W w_i$$
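One way to solve the generalized eigenproblem numerically is to whiten the within-class scatter first and then solve an ordinary symmetric eigenproblem. The sketch below assumes a nonsingular within-class scatter and an unweighted between-class scatter (some formulations weight each class by its sample count); the helper name `lda_weights` and the toy data are hypothetical.

```python
import numpy as np

def lda_weights(X, y, m):
    """Rows of X are samples, y holds class labels. Returns W of shape (m, D)
    whose rows satisfy S_B w = lambda * S_W w for the m largest lambda."""
    mu = X.mean(axis=0)
    D = X.shape[1]
    S_B = np.zeros((D, D))
    S_W = np.zeros((D, D))
    for label in np.unique(y):
        Xc = X[y == label]
        mu_c = Xc.mean(axis=0)
        S_B += np.outer(mu_c - mu, mu_c - mu)    # between-class scatter term
        S_W += (Xc - mu_c).T @ (Xc - mu_c)       # within-class scatter term
    # Whitening: A.T @ S_W @ A = I (requires S_W to be nonsingular).
    w_vals, w_vecs = np.linalg.eigh(S_W)
    A = w_vecs / np.sqrt(w_vals)
    vals, vecs = np.linalg.eigh(A.T @ S_B @ A)
    order = np.argsort(vals)[::-1][:m]
    return (A @ vecs[:, order]).T

# Two classes separated along the second axis, with large spread along the first.
rng = np.random.default_rng(1)
spread = np.array([4.0, 0.3])
X = np.vstack([rng.normal(size=(100, 2)) * spread + [0.0, -1.0],
               rng.normal(size=(100, 2)) * spread + [0.0, 1.0]])
y = np.repeat([0, 1], 100)
W = lda_weights(X, y, m=1)
direction = W[0] / np.linalg.norm(W[0])
print(direction)
```

On this toy data the recovered direction points almost entirely along the second axis, whereas the largest-variance direction lies along the first, which is the contrast with PCA that the slide describes.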

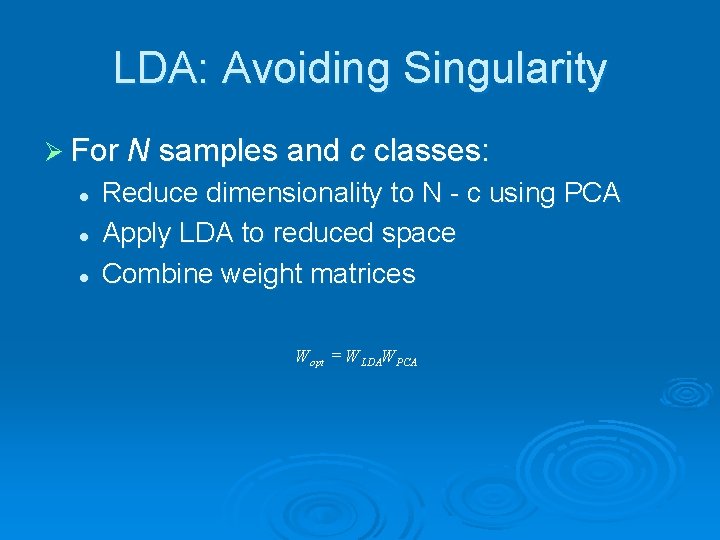

LDA: Avoiding Singularity

- For N samples and c classes:
  - Reduce dimensionality to N - c using PCA
  - Apply LDA to the reduced space
  - Combine weight matrices: $W_{opt} = W_{LDA} W_{PCA}$
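The choice of N - c follows from a rank argument, sketched here under the assumption that class i contributes $N_i$ samples and that the input dimensionality D exceeds N - c. Within each class the centered samples sum to zero, so they span at most $N_i - 1$ directions:

$$\operatorname{rank}(S_W) \le \sum_{i=1}^{c} (N_i - 1) = N - c$$

A D × D within-class scatter matrix with D > N - c is therefore singular, and the generalized eigenproblem cannot be solved by inverting it. After projecting onto N - c principal components, the within-class scatter is an (N - c) × (N - c) matrix that can have full rank.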

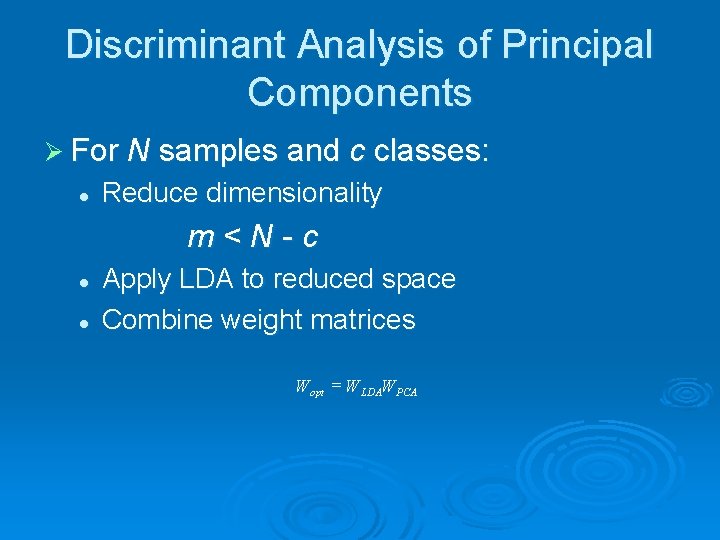

Discriminant Analysis of Principal Components

- For N samples and c classes:
  - Reduce dimensionality to m < N - c
  - Apply LDA to the reduced space
  - Combine weight matrices: $W_{opt} = W_{LDA} W_{PCA}$
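The three steps can be sketched end to end as follows. This is a minimal sketch on synthetic data, not the authors' implementation: the names `top_eigvecs` and `pca_lda_weights` are hypothetical, the between-class scatter is unweighted, and both weight matrices store basis vectors as rows so that the product `W_lda @ W_pca` matches the ordering written on the slide (that convention is an assumption).

```python
import numpy as np

def top_eigvecs(S, k):
    """Columns are the eigenvectors of symmetric S with the k largest eigenvalues."""
    vals, vecs = np.linalg.eigh(S)
    return vecs[:, np.argsort(vals)[::-1][:k]]

def pca_lda_weights(X, y, m, k):
    """X: (N, D) samples as rows; y: (N,) labels; m: PCA dimensions (m <= N - c);
    k: final LDA dimensions (k <= c - 1). Returns (mu, W_opt), W_opt of shape (k, D)."""
    mu = X.mean(axis=0)
    Xc = X - mu
    W_pca = top_eigvecs(Xc.T @ Xc, m).T            # step 1: PCA, shape (m, D)
    Z = Xc @ W_pca.T                               # reduced samples, shape (N, m)
    S_B = np.zeros((m, m))
    S_W = np.zeros((m, m))
    for label in np.unique(y):
        Zc = Z[y == label]
        mean_c = Zc.mean(axis=0)
        S_B += np.outer(mean_c, mean_c)            # overall mean of Z is zero
        S_W += (Zc - mean_c).T @ (Zc - mean_c)
    w_vals, w_vecs = np.linalg.eigh(S_W)           # step 2: LDA in the reduced space
    A = w_vecs / np.sqrt(w_vals)                   # whitening: A.T @ S_W @ A = I
    W_lda = (A @ top_eigvecs(A.T @ S_B @ A, k)).T  # shape (k, m)
    return mu, W_lda @ W_pca                       # step 3: combined weights

# Synthetic stand-in for image data: c = 3 classes, 10 samples each, D = 50.
rng = np.random.default_rng(2)
c, per_class, D = 3, 10, 50
centers = rng.normal(scale=3.0, size=(c, D))
y = np.repeat(np.arange(c), per_class)
X = centers[y] + rng.normal(size=(c * per_class, D))
mu, W_opt = pca_lda_weights(X, y, m=10, k=2)
features = (X - mu) @ W_opt.T
print(W_opt.shape, features.shape)
```

Here N - c = 27, so m = 10 keeps the reduced within-class scatter well conditioned while discarding the lowest-ranked principal components.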

When PCA+LDA Can Help

- Test includes subjects not present in the training set
- Very few (1-3) examples available per class
- Test samples vary significantly from training samples

Why Would PCA+LDA Help?

- Allows more freedom of movement for maximizing between-class scatter
- Removes potentially noisy low-ranked principal components in determining the LDA projection
- Goal is improved generalization to non-training samples

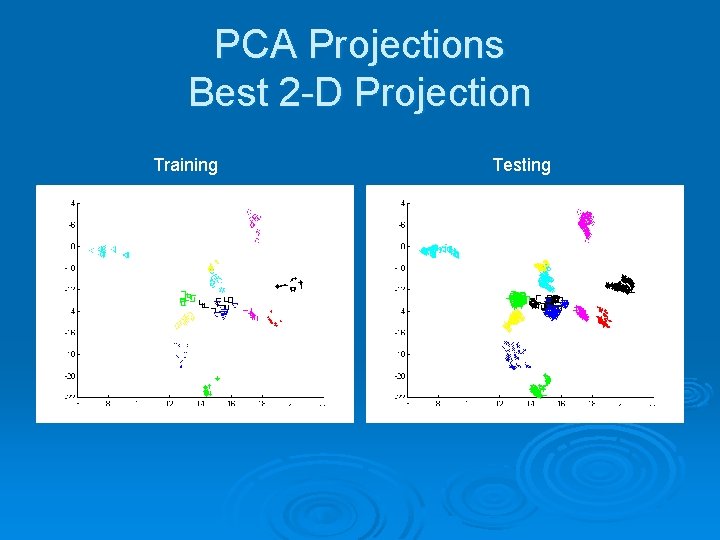

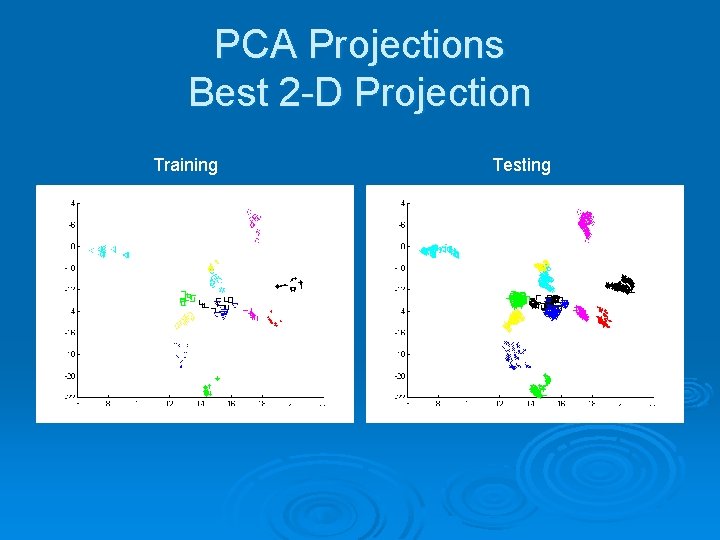

PCA Projections: best 2-D projection (panels: Training, Testing)

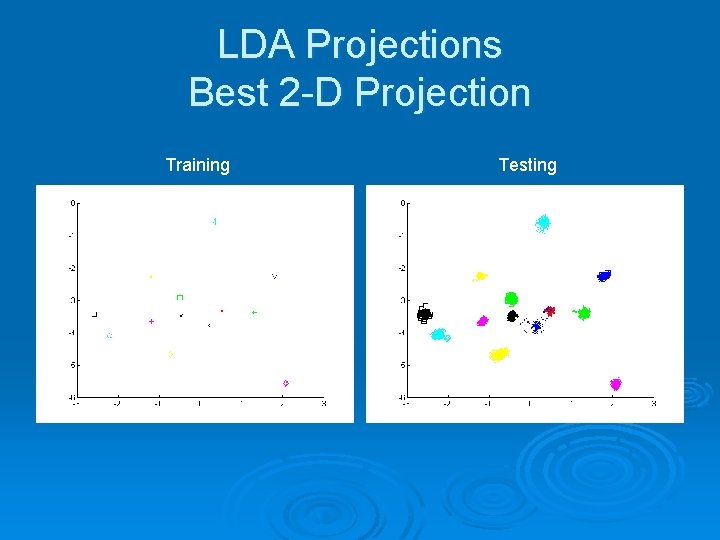

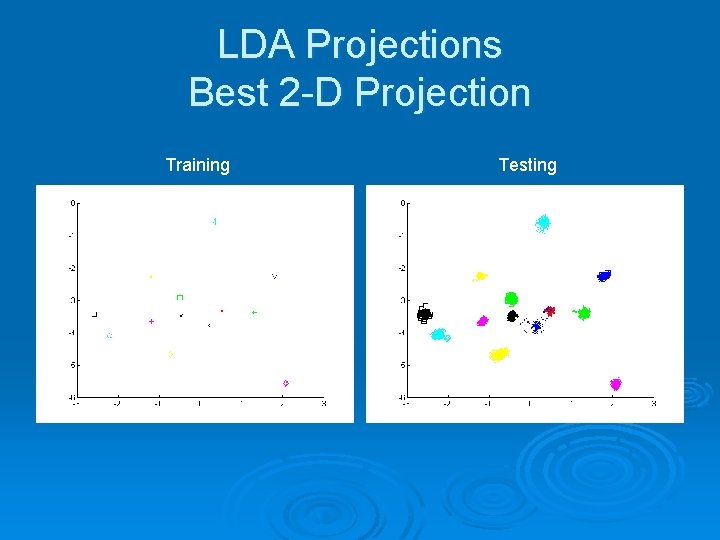

LDA Projections: best 2-D projection (panels: Training, Testing)

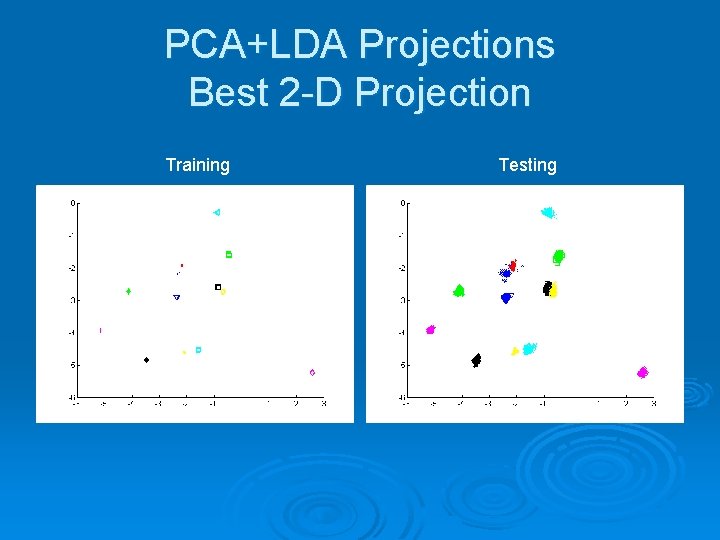

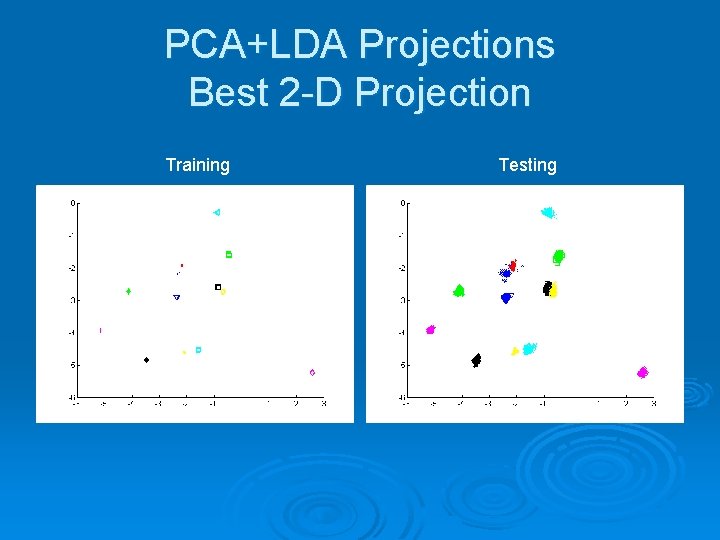

PCA+LDA Projections: best 2-D projection (panels: Training, Testing)

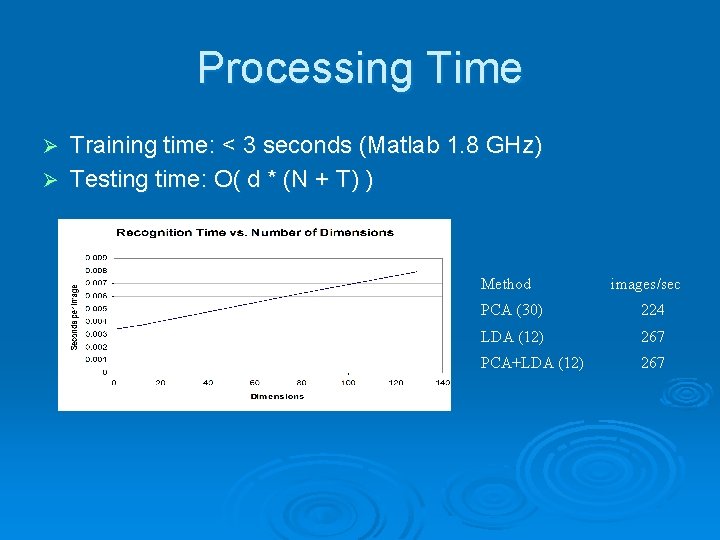

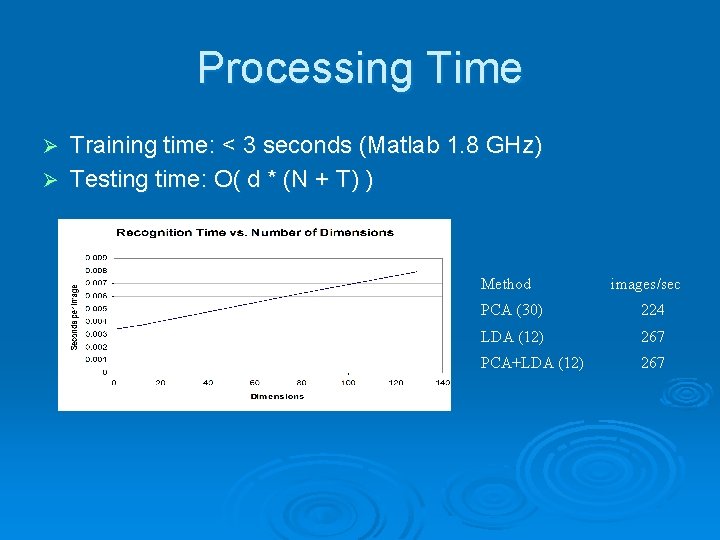

Processing Time

- Training time: < 3 seconds (Matlab, 1.8 GHz)
- Testing time: O(d * (N + T))

| Method | Images/sec |
| --- | --- |
| PCA (30) | 224 |
| LDA (12) | 267 |
| PCA+LDA (12) | 267 |

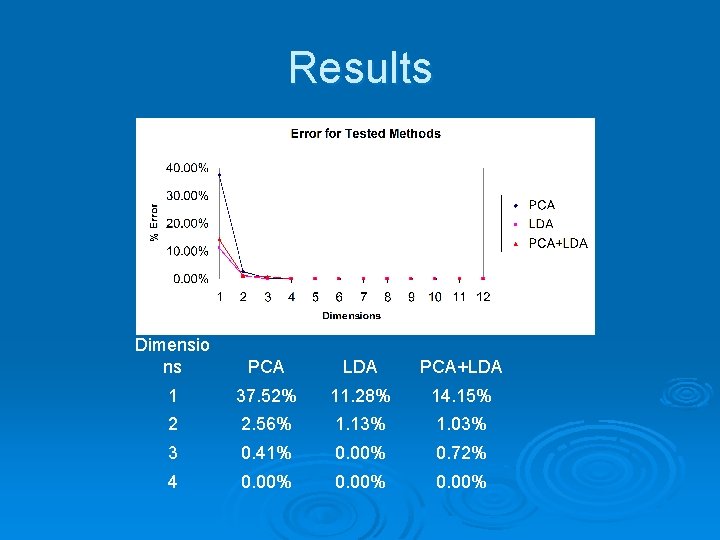

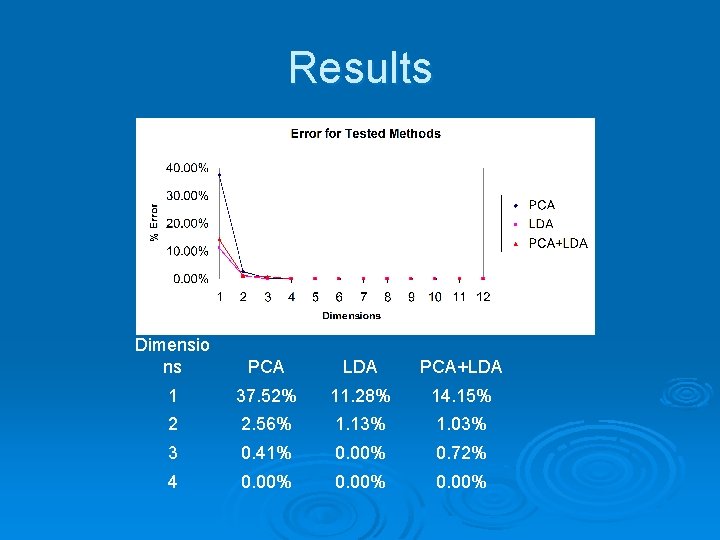

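Results

| Dimensions | PCA | LDA | PCA+LDA |
| --- | --- | --- | --- |
| 1 | 37.52% | 11.28% | 14.15% |
| 2 | 2.56% | 1.13% | 1.03% |
| 3 | 0.41% | 0.00% | 0.72% |

4 dimensions: 0.00% (the source does not indicate which method this value belongs to)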

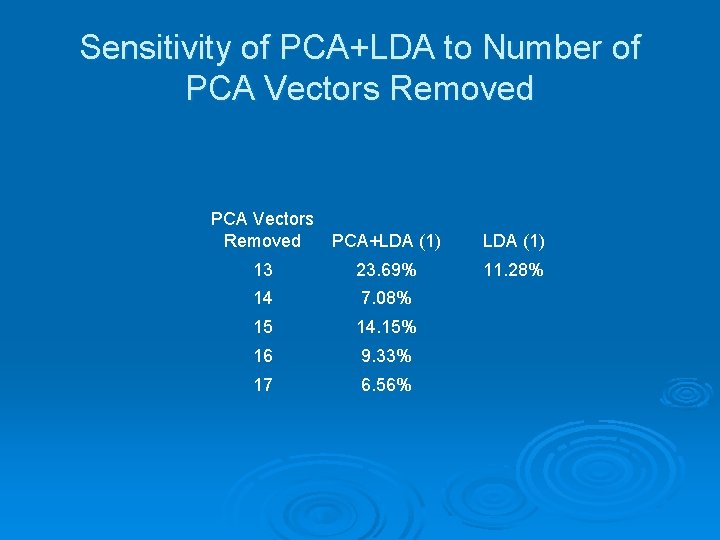

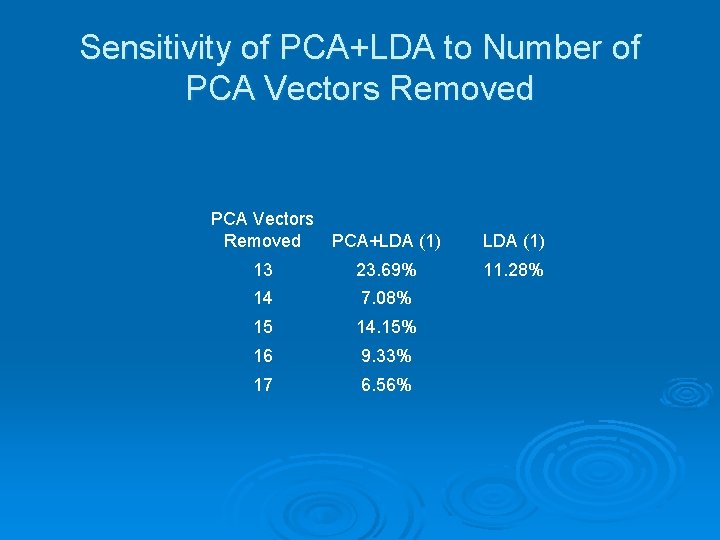

Sensitivity of PCA+LDA to Number of PCA Vectors Removed

| PCA vectors removed | PCA+LDA (1) |
| --- | --- |
| 13 | 23.69% |
| 14 | 7.08% |
| 15 | 14.15% |
| 16 | 9.33% |
| 17 | 6.56% |

LDA (1): 11.28%

Conclusions

- Recognition under varying expressions is an easy problem
- LDA and PCA+LDA produce better subspaces for discrimination than PCA
- Simply removing the lowest-ranked PCA vectors may not be a good strategy for PCA+LDA
- Maximizing the minimum between-class distance may be a better strategy than maximizing the Fisher ratio

References

- M. Turk and A. Pentland, “Face recognition using eigenfaces,” in Proc. IEEE Conf. on Comp. Vision and Patt. Recog., pp. 586-591, 1991.
- P. N. Belhumeur, J. P. Hespanha, and D. J. Kriegman, “Eigenfaces vs. Fisherfaces: Recognition Using Class Specific Linear Projection,” in Proc. European Conf. on Computer Vision, April 1996.
- W. Zhao, R. Chellappa, and P. J. Phillips, “Discriminant Analysis of Principal Components for Face Recognition,” in Proceedings, International Conference on Automatic Face and Gesture Recognition, pp. 336-341, 1998.