Fuzzy Logic in Pattern Recognition (Chapter 13)

- Slides: 20

Introduction
• Pattern recognition techniques can be classified into two broad categories:
  – Unsupervised techniques: clustering.
  – Supervised techniques: classification.
• An unsupervised technique works on a given set of unclassified data points, whereas a supervised technique uses a dataset with known classifications.

Unsupervised Clustering
• Unsupervised clustering is motivated by the need to find interesting patterns or groupings in a given set of data.
  – For example, it is used for image segmentation, i.e., partitioning the pixels of an image into regions that correspond to different objects in the image.

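Unsupervised Clustering
• Let $X$ be a set of data, and $x_i$ be an element of $X$. A partition $P = \{c_1, c_2, \ldots, c_l\}$ of $X$ is "hard" if and only if
  – $\forall x_i \in X,\ \exists c_j \in P$ such that $x_i \in c_j$ (the partition covers all data points in $X$)
  – $\forall x_i \in X,\ x_i \in c_j \Rightarrow x_i \notin c_k$ for every $c_k \in P$ with $k \neq j$ (all clusters in the partition are mutually exclusive)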

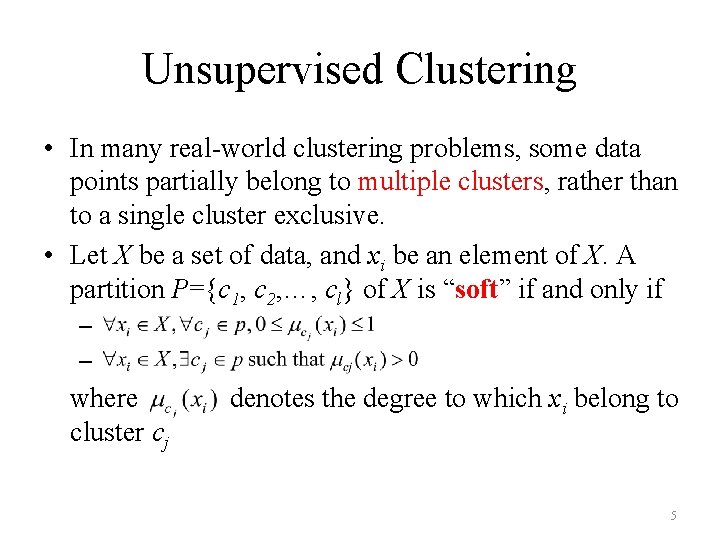

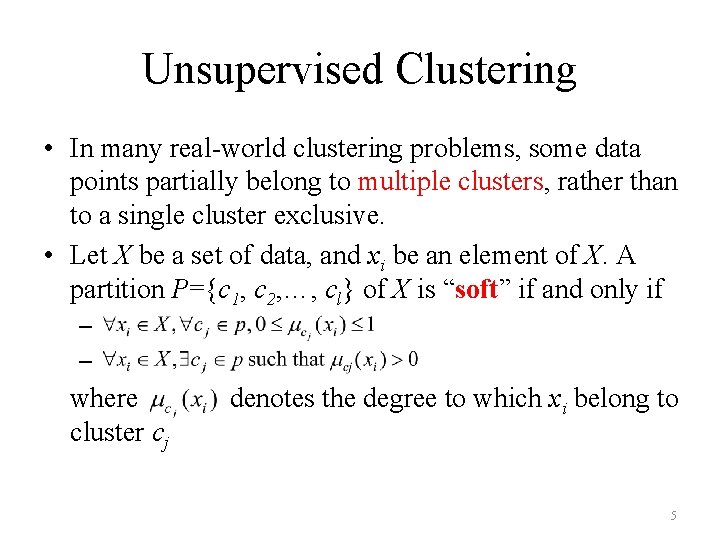
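
The two hard-partition conditions can be checked mechanically. The following is a minimal sketch, assuming clusters are represented as Python sets of hashable points; the function name `is_hard_partition` is hypothetical and does not come from the slides.

```python
def is_hard_partition(data, clusters):
    """Return True if `clusters` (a list of sets) is a hard partition of `data`."""
    data_set = set(data)
    # Condition 1: the clusters together cover exactly the points of X.
    covered = set().union(*clusters) if clusters else set()
    if covered != data_set:
        return False
    # Condition 2: the clusters are mutually exclusive. If no point is
    # shared, the cluster sizes add up to the number of covered points.
    return sum(len(c) for c in clusters) == len(covered)


X = [1, 2, 3, 4, 5]
print(is_hard_partition(X, [{1, 2}, {3, 4, 5}]))     # True
print(is_hard_partition(X, [{1, 2, 3}, {3, 4, 5}]))  # False: 3 is in two clusters
print(is_hard_partition(X, [{1, 2}, {4, 5}]))        # False: 3 is not covered
```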

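Unsupervised Clustering
• In many real-world clustering problems, some data points partially belong to multiple clusters rather than exclusively to a single cluster.
• Let $X$ be a set of data, and $x_i$ be an element of $X$. A partition $P = \{c_1, c_2, \ldots, c_l\}$ of $X$ is "soft" if and only if
  – $\forall x_i \in X,\ \forall c_j \in P:\ 0 \le \mu_{c_j}(x_i) \le 1$
  – $\forall x_i \in X,\ \exists c_j \in P$ such that $\mu_{c_j}(x_i) > 0$
  where $\mu_{c_j}(x_i)$ denotes the degree to which $x_i$ belongs to cluster $c_j$.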

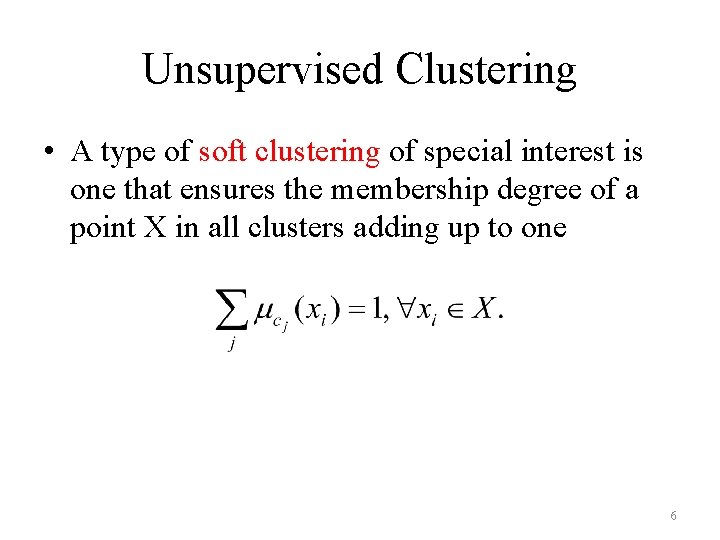

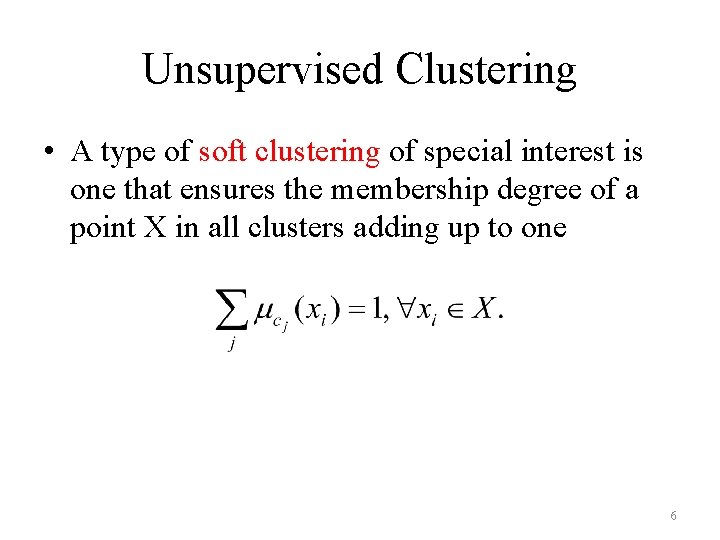

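Unsupervised Clustering
• A type of soft clustering of special interest is one that ensures that the membership degrees of a point $x_i$ in all clusters add up to one, i.e., $\sum_{j} \mu_{c_j}(x_i) = 1$ for every $x_i \in X$, where $\mu_{c_j}(x_i)$ is the membership degree of $x_i$ in cluster $c_j$.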

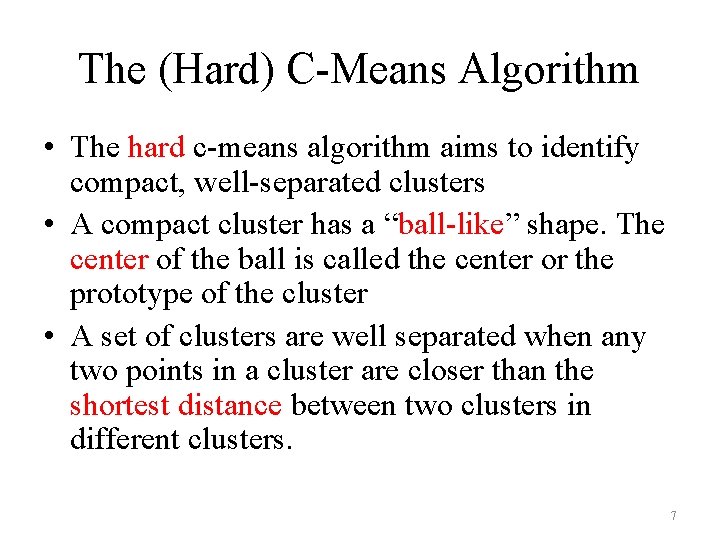

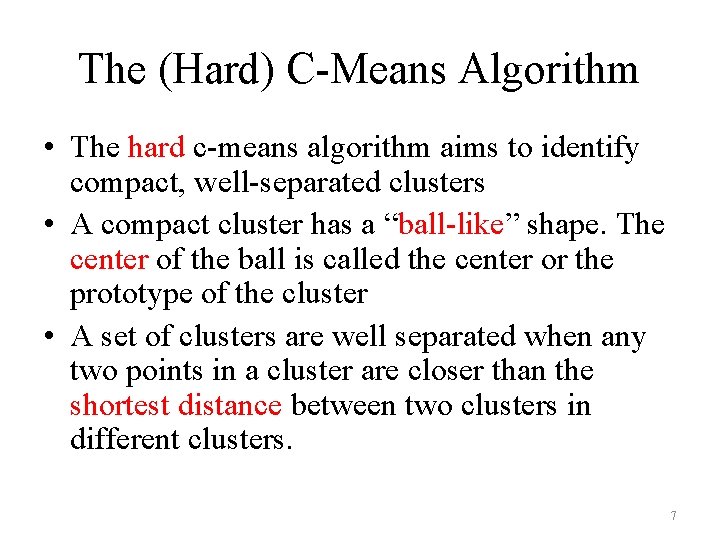
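
A small sketch of how these properties might be verified on a membership matrix. It assumes the usual soft-partition conditions (every degree lies in [0, 1], and every point has nonzero membership in at least one cluster), since the slide equations are only available as images. The matrix layout `U[i][j]` (point `i`, cluster `j`) and both function names are illustrative choices.

```python
import math


def is_soft_partition(U):
    """U[i][j] is the membership degree of point i in cluster j."""
    for row in U:
        # Every membership degree must lie in [0, 1].
        if any(mu < 0.0 or mu > 1.0 for mu in row):
            return False
        # Every point must belong to at least one cluster to a nonzero degree.
        if not any(mu > 0.0 for mu in row):
            return False
    return True


def is_constrained_soft_partition(U, tol=1e-9):
    """Soft partition whose memberships sum to one for every point."""
    return is_soft_partition(U) and all(
        math.isclose(sum(row), 1.0, abs_tol=tol) for row in U
    )


U_constrained = [[0.7, 0.3], [0.2, 0.8]]
U_soft_only = [[0.9, 0.6], [0.2, 0.8]]   # first row sums to 1.5
print(is_constrained_soft_partition(U_constrained))  # True
print(is_soft_partition(U_soft_only))                # True
print(is_constrained_soft_partition(U_soft_only))    # False
```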

The (Hard) C-Means Algorithm
• The hard c-means algorithm aims to identify compact, well-separated clusters.
• A compact cluster has a "ball-like" shape. The center of the ball is called the center or the prototype of the cluster.
• A set of clusters is well separated when any two points in the same cluster are closer to each other than the shortest distance between two points in different clusters.

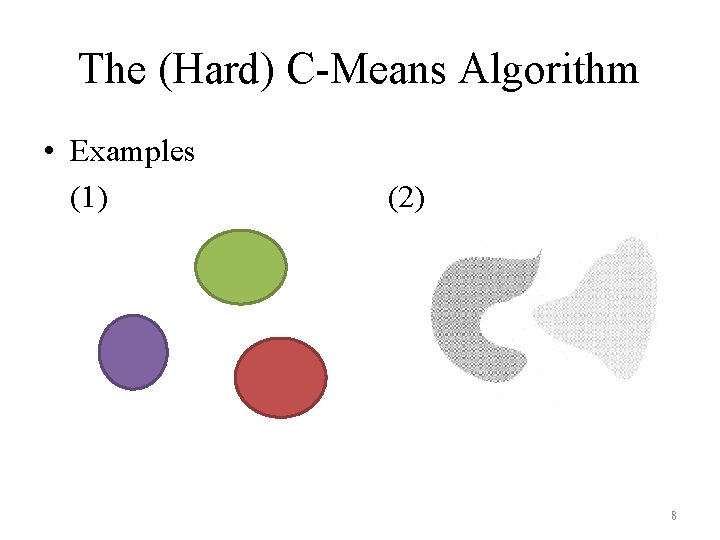

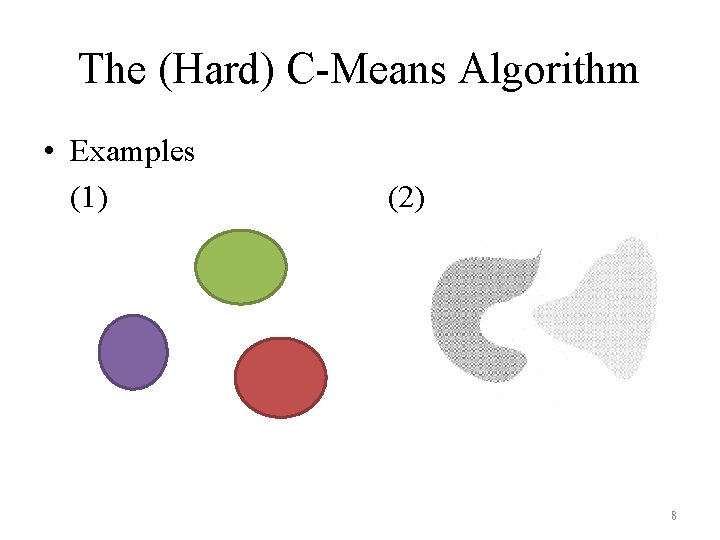

The (Hard) C-Means Algorithm
• Examples (1) and (2)

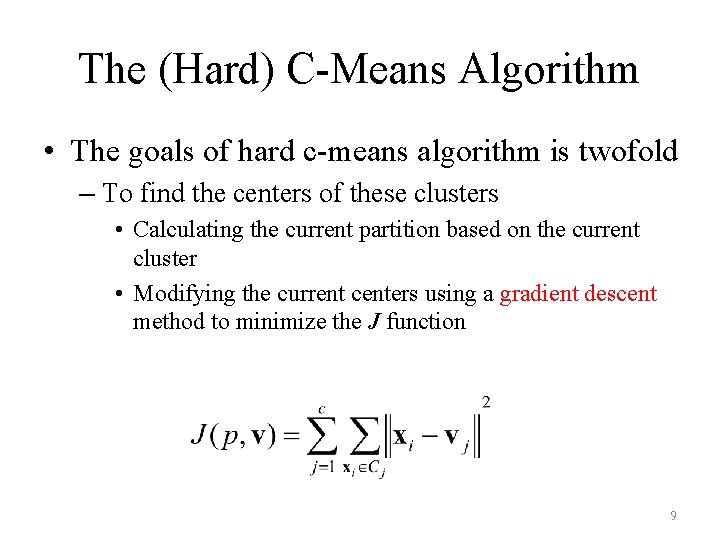

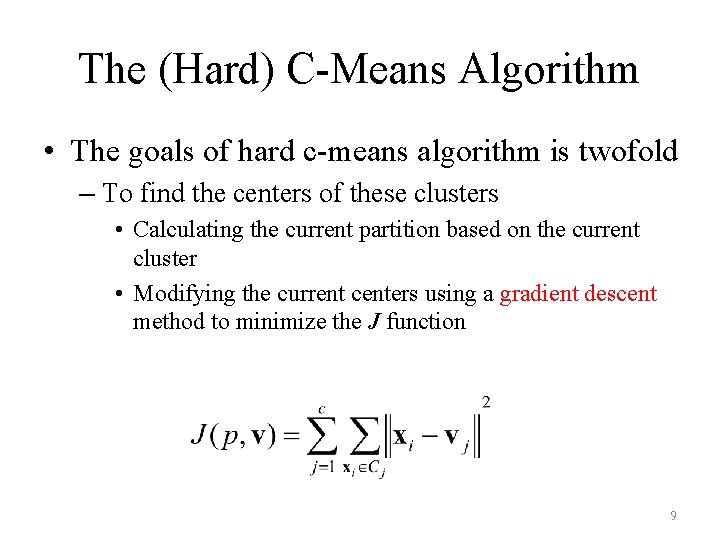

The (Hard) C-Means Algorithm
• The goal of the hard c-means algorithm is twofold:
  – To find the centers of these clusters:
    • Calculating the current partition based on the current cluster centers.
    • Modifying the current cluster centers using a gradient descent method to minimize the J function.

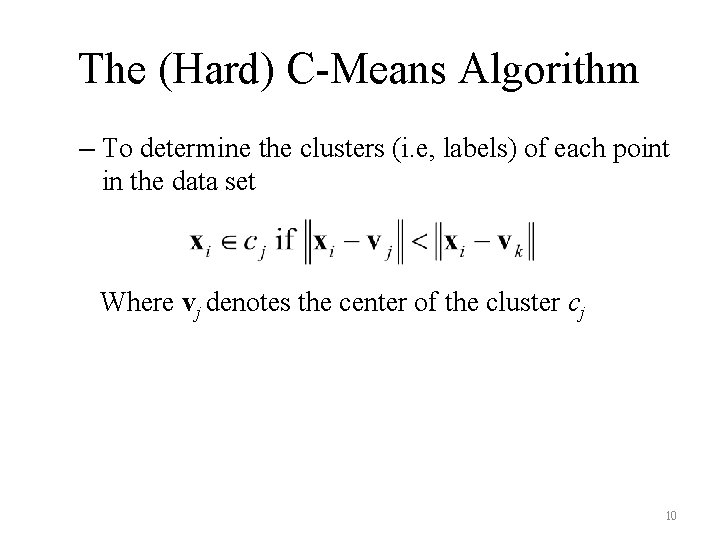

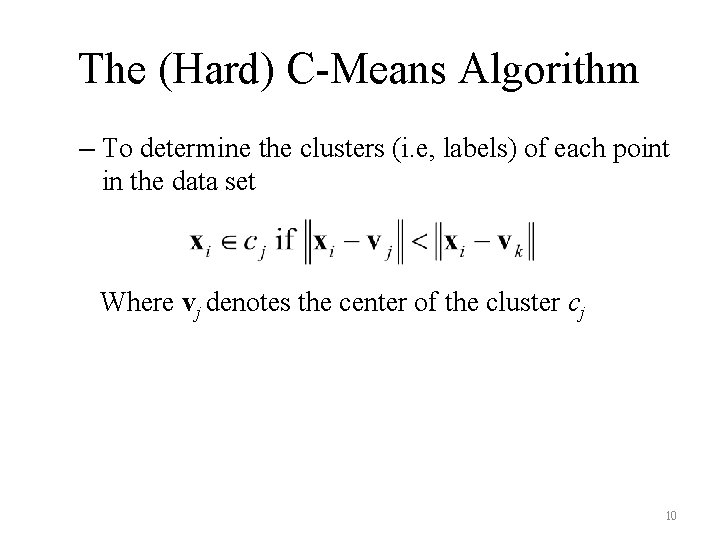

The (Hard) C-Means Algorithm
  – To determine the cluster (i.e., the label) of each point in the data set; here $v_j$ denotes the center of the cluster $c_j$.

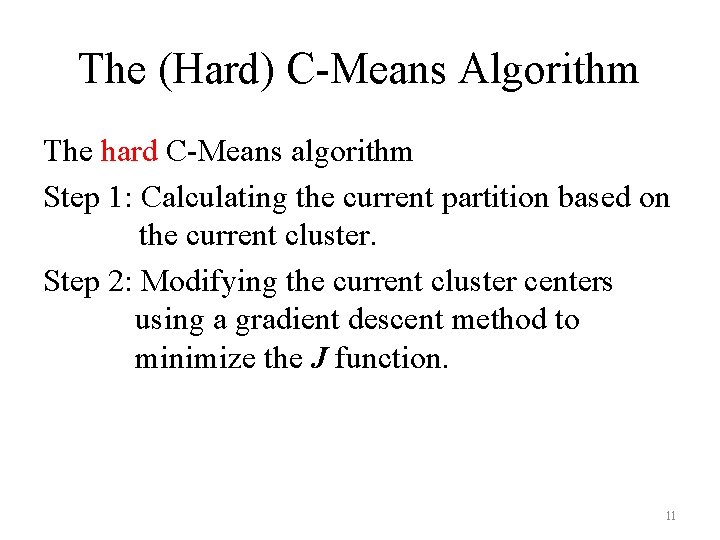

The (Hard) C-Means Algorithm
The hard c-means algorithm repeats two steps:
Step 1: Calculate the current partition based on the current cluster centers.
Step 2: Modify the current cluster centers using a gradient descent method to minimize the J function.
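
The two steps can be sketched as follows. This is a minimal illustration, not the slides' exact procedure. It assumes J is the usual sum of squared distances from each point to its cluster center. In place of an explicit gradient descent step it moves each center to the mean of its members, which is the point where the gradient of that J with respect to the center vanishes. The names `sq_dist`, `hard_c_means`, and `max_iter` are illustrative.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def hard_c_means(points, centers, max_iter=100):
    centers = [tuple(c) for c in centers]
    labels = []
    for _ in range(max_iter):
        # Step 1: compute the partition by assigning each point
        # to its nearest current center.
        labels = [
            min(range(len(centers)), key=lambda j: sq_dist(p, centers[j]))
            for p in points
        ]
        # Step 2: move each center to the mean of its members
        # (assumed closed-form minimizer of J for a fixed partition).
        new_centers = []
        for j, old in enumerate(centers):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if not members:
                new_centers.append(old)  # keep the center of an empty cluster
                continue
            new_centers.append(tuple(
                sum(p[d] for p in members) / len(members)
                for d in range(len(old))
            ))
        if new_centers == centers:  # no center moved: converged
            break
        centers = new_centers
    return centers, labels


points = [(0, 0), (0, 1), (1, 0), (9, 9), (9, 10), (10, 9)]
centers, labels = hard_c_means(points, [(0, 0), (10, 10)])
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The result depends on the initial centers; a poor initialization can leave a cluster empty or converge to a different partition.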

Fuzzy C-means Algorithm
• The fuzzy c-means algorithm (FCM) generalizes the hard c-means algorithm to allow a point to partially belong to multiple clusters.

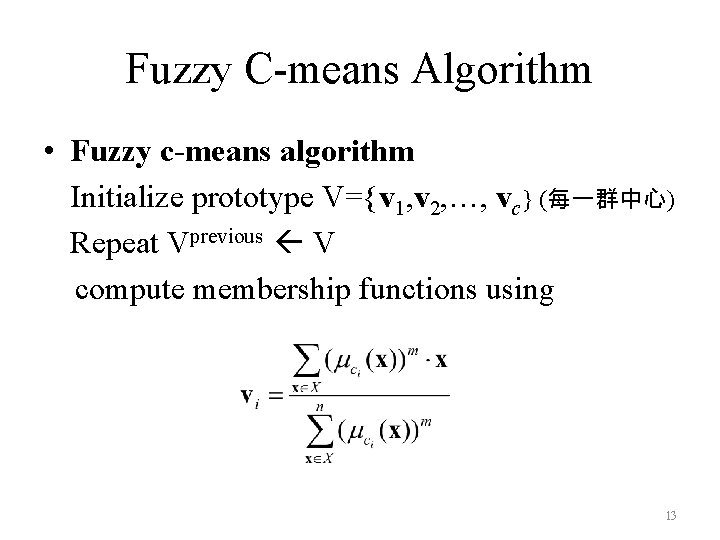

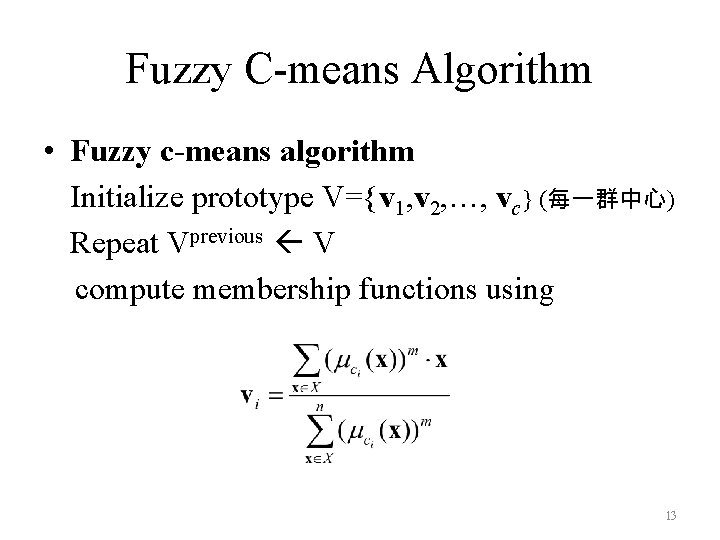

Fuzzy C-means Algorithm
• Fuzzy c-means algorithm:
  Initialize the prototypes $V = \{v_1, v_2, \ldots, v_c\}$ (the center of each cluster)
  Repeat
    $V^{previous} \leftarrow V$
    Compute the membership functions using

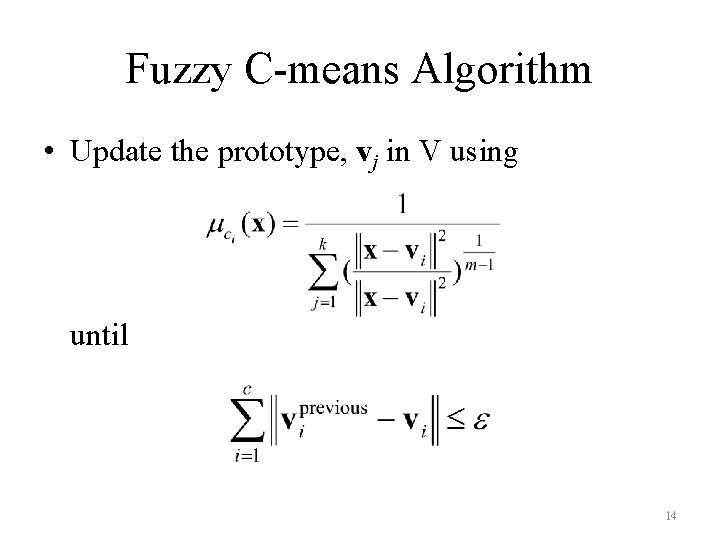

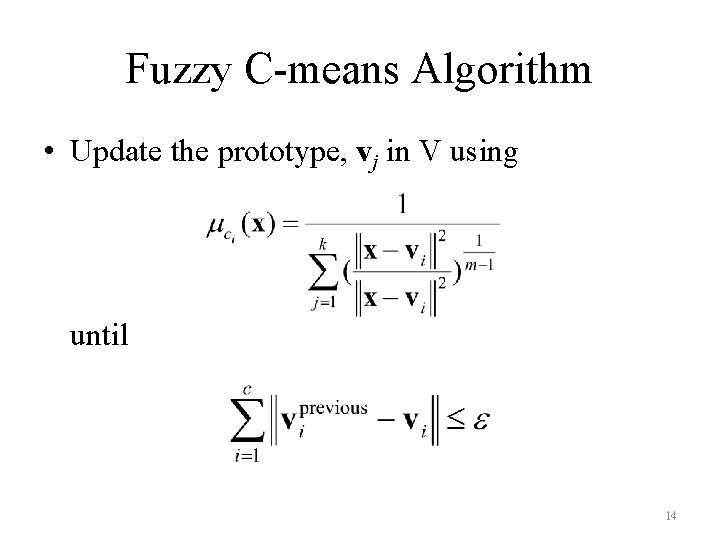

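Fuzzy C-means Algorithm
• Update each prototype $v_j$ in $V$ using the prototype update formula, and repeat until the stopping condition holds (the formula and the condition appear in the images that follow).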

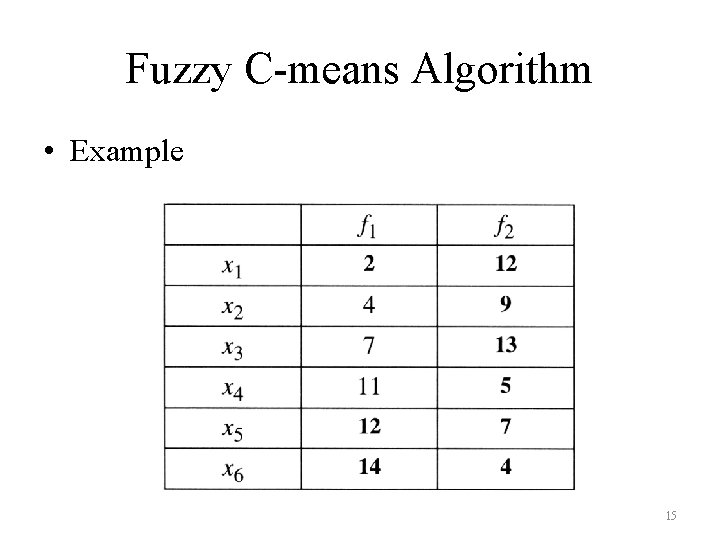

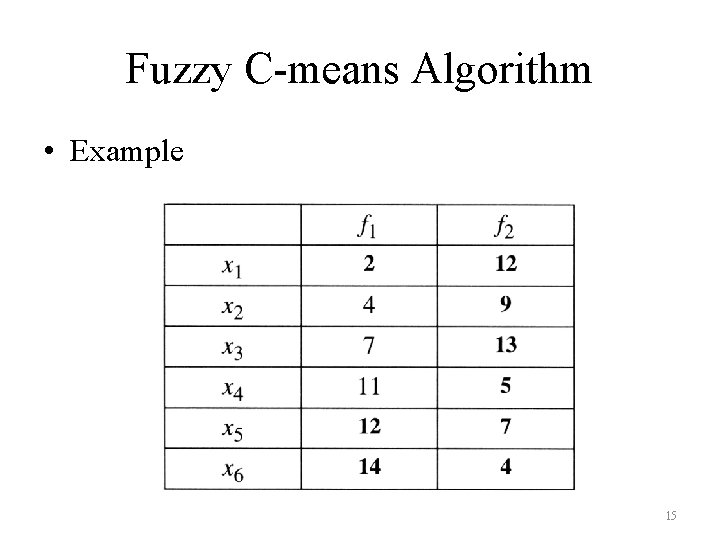

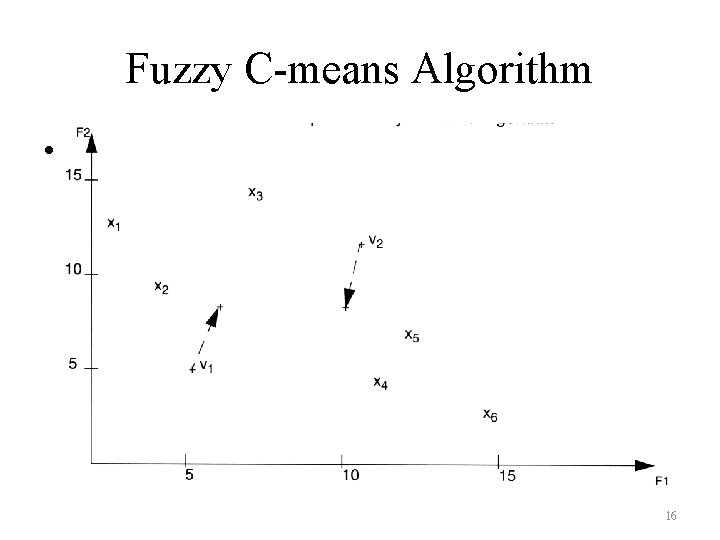
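
The loop above can be sketched in code. Because the slide formulas are only available as images, this sketch assumes the standard FCM equations: membership $\mu_{c_j}(x_i) = 1 / \sum_k (\lVert x_i - v_j \rVert / \lVert x_i - v_k \rVert)^{2/(m-1)}$, prototype $v_j = \sum_i \mu_{c_j}(x_i)^m x_i / \sum_i \mu_{c_j}(x_i)^m$, and termination when the total prototype movement is at most a threshold. The fuzzifier `m`, the threshold `eps`, and all function names are assumptions for illustration.

```python
import math


def dist(a, b):
    """Euclidean distance between two points given as tuples."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def memberships(points, centers, m=2.0):
    """Return U with U[i][j] = membership of point i in cluster j."""
    exponent = 2.0 / (m - 1.0)
    U = []
    for p in points:
        d = [dist(p, v) for v in centers]
        zeros = [dj == 0.0 for dj in d]
        if any(zeros):
            # The point coincides with a prototype: give it full membership
            # there (shared equally if several prototypes coincide).
            U.append([1.0 / sum(zeros) if z else 0.0 for z in zeros])
        else:
            U.append([1.0 / sum((dj / dk) ** exponent for dk in d) for dj in d])
    return U


def fuzzy_c_means(points, centers, m=2.0, eps=1e-6, max_iter=100):
    centers = [tuple(c) for c in centers]
    for _ in range(max_iter):
        previous = centers                      # V_previous <- V
        U = memberships(points, previous, m)    # compute memberships
        centers = []
        for j, old in enumerate(previous):      # update each prototype v_j
            w = [U[i][j] ** m for i in range(len(points))]
            total = sum(w)
            if total == 0.0:
                centers.append(old)             # no weight: keep the prototype
                continue
            centers.append(tuple(
                sum(wi * p[d] for wi, p in zip(w, points)) / total
                for d in range(len(old))
            ))
        # Stop when the prototypes have (almost) stopped moving.
        if sum(dist(a, b) for a, b in zip(previous, centers)) <= eps:
            break
    return centers, memberships(points, centers, m)


points = [(0.0,), (1.0,), (9.0,), (10.0,)]
centers, U = fuzzy_c_means(points, [(0.0,), (10.0,)])
for p, row in zip(points, U):
    print(p, [round(mu, 3) for mu in row])
```

Each row of `U` sums to one, so the result is a constrained soft partition; a hard labeling can be recovered by taking the cluster with the largest membership for each point.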

Fuzzy C-means Algorithm
• Example

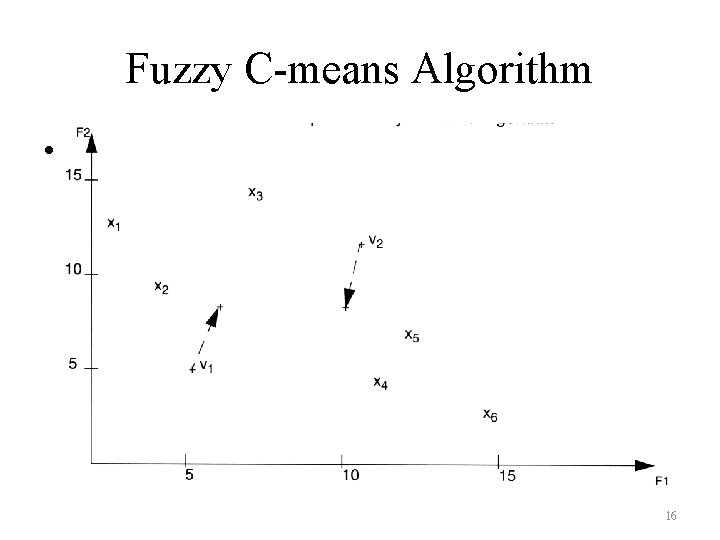

Fuzzy C-means Algorithm
• The dataset is partitioned into two clusters.

Classifier Design and Supervised Pattern Recognition
• Supervised pattern recognition uses data with known classifications, also called labeled data, to determine the classification of new data.
• The main benefit of unsupervised pattern recognition techniques is that they do not require training data; however, their computation time is relatively high because many iterations are needed before the algorithm converges.

Classifier Design and Supervised Pattern Recognition
• A supervised pattern recognition technique is relatively fast because it does not need to iterate; however, it cannot be applied to a problem unless training data or relevant knowledge is available.

K-Nearest Neighborhood (K-NN)
• The main advantage of K-NN is its computational simplicity.
• It has two major problems:
  – Each neighbor is considered equally important in determining the classification of the input data: a far neighbor is given the same weight as a close neighbor of the input.
  – The algorithm only assigns a class to the input data; it does not determine the "strength" of membership in the class.

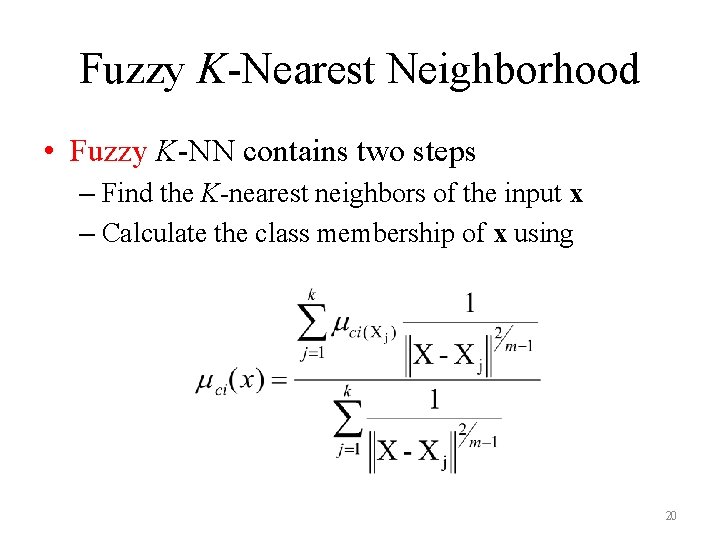

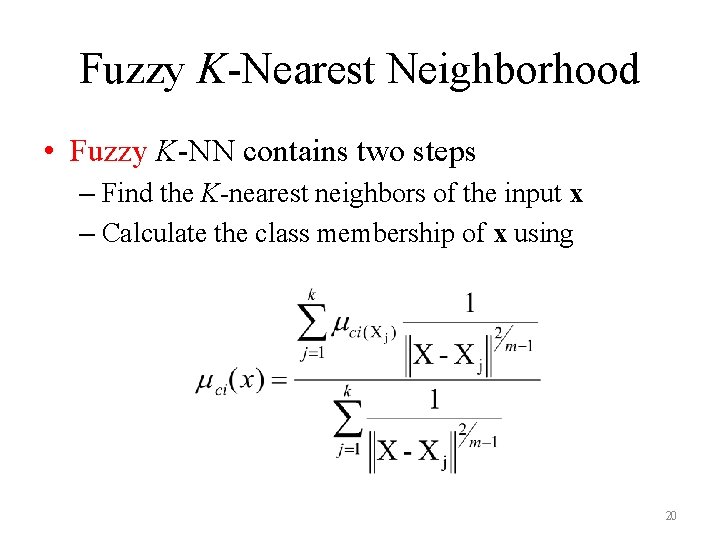
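
A minimal sketch of crisp K-NN with majority voting illustrates the first problem. The training data, the labels "A" and "B", and the function name `knn_classify` are made up for this example.

```python
import math
from collections import Counter


def knn_classify(train, x, k=3):
    """train is a list of (point, label) pairs; returns the majority label."""
    # Take the k training samples closest to x.
    neighbors = sorted(train, key=lambda item: math.dist(item[0], x))[:k]
    # Every neighbor casts one vote, regardless of its distance to x.
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


train = [((0.0, 0.0), "A"), ((5.0, 5.0), "B"), ((5.0, 6.0), "B")]
x = (0.5, 0.5)
print(knn_classify(train, x, k=3))  # B
```

Here the input lies right next to the only "A" sample, yet the two distant "B" samples outvote it, and the returned label says nothing about how strongly the input belongs to "B".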

Fuzzy K-Nearest Neighborhood
• Fuzzy K-NN consists of two steps:
  – Find the K nearest neighbors of the input $x$.
  – Calculate the class membership of $x$ from these K neighbors.
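
The membership formula itself is not reproduced in the text, so the sketch below assumes the commonly used inverse-distance weighting: $\mu_c(x) = \sum_{j=1}^{K} \mu_c(x_j)\, w_j / \sum_{j=1}^{K} w_j$ with $w_j = 1 / \lVert x - x_j \rVert^{2/(m-1)}$. It further assumes crisp training labels, so $\mu_c(x_j)$ is 1 for the neighbor's own class and 0 otherwise. The fuzzifier `m`, the sample data, and the function name `fuzzy_knn` are illustrative.

```python
import math


def fuzzy_knn(train, x, k=3, m=2.0):
    """train is a list of (point, label) pairs; returns {label: membership}."""
    classes = sorted({label for _, label in train})
    # Step 1: find the K nearest neighbors of the input x.
    neighbors = sorted(train, key=lambda item: math.dist(item[0], x))[:k]
    # Step 2: weight each neighbor by inverse distance (assumed formula).
    weights = []
    for point, label in neighbors:
        d = math.dist(point, x)
        if d == 0.0:
            # x coincides with a training sample: take its label outright.
            return {c: (1.0 if c == label else 0.0) for c in classes}
        weights.append(1.0 / d ** (2.0 / (m - 1.0)))
    total = sum(weights)
    return {
        c: sum(w for w, (_, label) in zip(weights, neighbors) if label == c) / total
        for c in classes
    }


train = [((0.0, 0.0), "A"), ((5.0, 5.0), "B"), ((5.0, 6.0), "B")]
result = fuzzy_knn(train, (0.5, 0.5), k=3)
print({c: round(mu, 2) for c, mu in result.items()})  # {'A': 0.98, 'B': 0.02}
```

With this weighting the close "A" sample dominates the two distant "B" samples, and the returned degrees express the strength of membership in each class, which addresses both problems listed for crisp K-NN.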