QoS Support in High-Speed Wormhole Routing Networks

- Slides: 19

QoS Support in High-Speed, Wormhole Routing Networks
Mario Gerla, B. Kannan, Bruce Kwan, Prasasth Palanti, Simon Walton

Overview • Introduction • QoS via separate subnets • QoS via synchronous framework • QoS via virtual channels • Conclusions

Introduction • Wormhole routing offers low-latency, high-speed interconnection for supercomputers and clusters. • It is a modification of virtual cut-through: -In virtual cut-through, a packet is forwarded to the output port as soon as its head is received at the switch -If the output channel is busy, the whole packet is buffered at the input port -In wormhole routing, a packet is made of several flits; when the head is blocked, the flits stay in place, stored across several switches • Used in high-speed LANs such as Myrinet: -an asynchronous LAN -uses wormhole routing, source routing, and backpressure flow control to achieve low latency and high bandwidth
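To make the latency advantage concrete, here is a minimal sketch of the usual contention-free latency model. It assumes one flit crosses one link per time unit, no routing-decision delay, and no blocking. The function names are hypothetical. Under store-and-forward every switch waits for the whole packet; under cut-through and wormhole the flits pipeline behind the head.

```python
def store_and_forward_latency(hops: int, packet_flits: int) -> int:
    """Flit times to deliver a packet when every hop waits for the whole packet."""
    return hops * packet_flits


def wormhole_latency(hops: int, packet_flits: int) -> int:
    """Flit times when flits pipeline behind the head (cut-through or wormhole, no contention)."""
    # The head needs `hops` flit times; the remaining flits follow one per flit time.
    return hops + packet_flits - 1


for hops in (1, 3, 6):
    print(hops, store_and_forward_latency(hops, 100), wormhole_latency(hops, 100))
# 1 100 100
# 3 300 102
# 6 600 105
```

Without contention, cut-through and wormhole have the same latency; they differ only in where a blocked packet is buffered (whole packet at one input port versus flits spread across switches).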

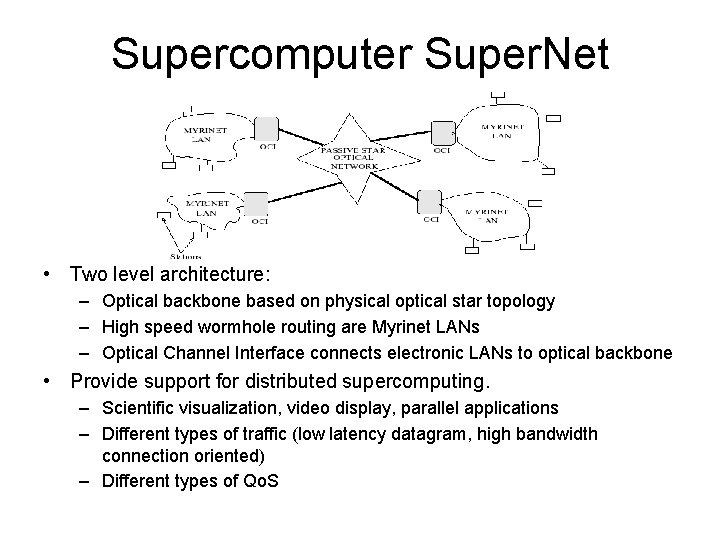

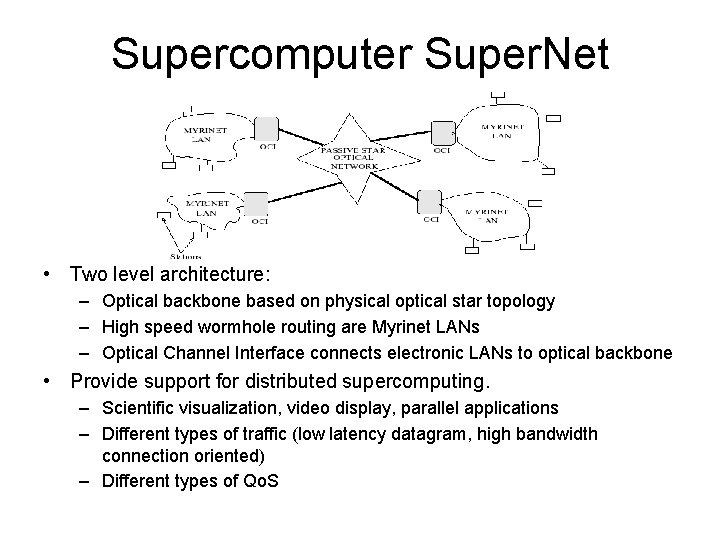

Supercomputer SuperNet • Two-level architecture: – Optical backbone based on a physical optical star topology – The local networks are high-speed wormhole routing Myrinet LANs – An Optical Channel Interface connects the electronic LANs to the optical backbone • Provides support for distributed supercomputing: – Scientific visualization, video display, parallel applications – Different types of traffic (low-latency datagram, high-bandwidth connection-oriented) – Different types of QoS

Objective • Provide connection-oriented traffic with QoS guarantees, while keeping: -reliable delivery: no worm loss -a scalable, deadlock-free network • Assumptions: -Traffic with QoS is connection oriented -QoS parameters are specified at connection setup -A connection can be refused if its QoS parameters cannot be guaranteed -QoS parameters: average bandwidth, end-to-end delay, or jitter

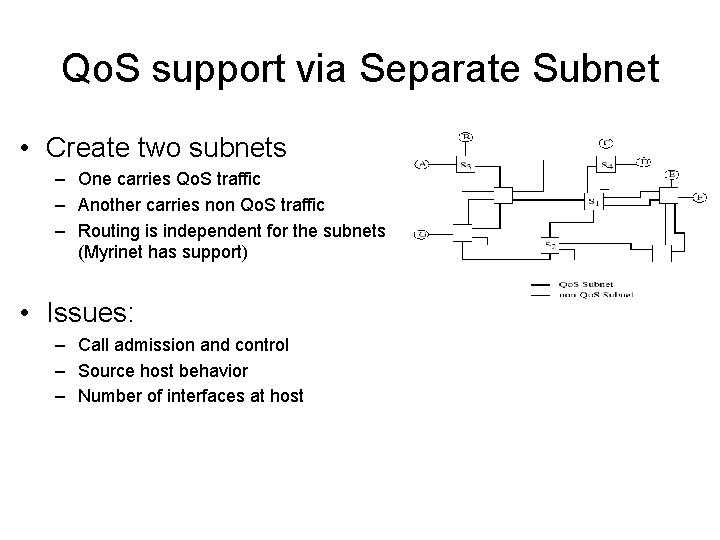

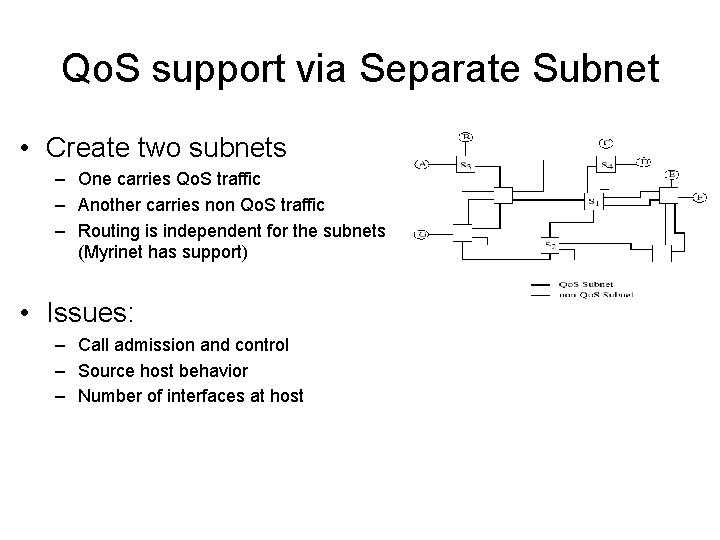

QoS Support via Separate Subnets • Create two subnets: – One carries QoS traffic – The other carries non-QoS traffic – Routing is independent in each subnet (Myrinet supports this) • Issues: – Call admission and control – Source host behavior – Number of interfaces at each host

Call Admission & Control • An admission agent maintains the state of the QoS subnet • A request for a QoS connection arrives • On receiving the request, the agent looks for a suitable route • If no route is available, the host can retry or use the other subnet • If a route exists, the connection is accepted and the host can send • When the transfer completes, the host informs the admission agent • The admission agent updates its view of the subnet state
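The admission steps above can be sketched as a small bandwidth-reservation table. This is an illustration under assumptions, not the authors' agent: the class `AdmissionAgent`, its methods, the link capacities, and the idea that the host supplies a list of candidate source routes are all hypothetical. A request is accepted on the first candidate path whose every link still has enough residual bandwidth; otherwise it is refused so the host can retry or fall back to the other subnet.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Link = Tuple[str, str]  # directed link (from_node, to_node)


@dataclass
class AdmissionAgent:
    """Hypothetical admission agent that tracks reserved bandwidth on QoS-subnet links."""
    capacity: Dict[Link, float]  # link -> capacity (e.g. Mb/s)
    reserved: Dict[Link, float] = field(default_factory=dict)
    routes: Dict[str, List[Link]] = field(default_factory=dict)
    demand: Dict[str, float] = field(default_factory=dict)

    def residual(self, link: Link) -> float:
        return self.capacity[link] - self.reserved.get(link, 0.0)

    def request(self, conn_id: str, candidate_paths: List[List[str]],
                bandwidth: float) -> Optional[List[str]]:
        """Accept on the first candidate path with enough residual bandwidth on every link."""
        for path in candidate_paths:
            links = list(zip(path, path[1:]))
            if all(self.residual(link) >= bandwidth for link in links):
                for link in links:
                    self.reserved[link] = self.reserved.get(link, 0.0) + bandwidth
                self.routes[conn_id] = links
                self.demand[conn_id] = bandwidth
                return path  # accepted: the host may start sending
        return None  # refused: the host may retry later or use the other subnet

    def release(self, conn_id: str) -> None:
        """Called when the host reports that the connection has completed."""
        bandwidth = self.demand.pop(conn_id)
        for link in self.routes.pop(conn_id):
            self.reserved[link] -= bandwidth


caps = {("H1", "S1"): 100.0, ("S1", "S2"): 100.0, ("S2", "H2"): 100.0,
        ("S1", "S3"): 100.0, ("S3", "H2"): 100.0}
agent = AdmissionAgent(caps)
paths = [["H1", "S1", "S2", "H2"], ["H1", "S1", "S3", "H2"]]
print(agent.request("c1", paths, 60.0))  # ['H1', 'S1', 'S2', 'H2']
print(agent.request("c2", paths, 60.0))  # None: only 40 left on H1->S1
agent.release("c1")
print(agent.request("c2", paths, 60.0))  # ['H1', 'S1', 'S2', 'H2']
```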

Host Behavior • The host is responsible for limiting the traffic it injects into the subnet to the QoS parameters it requested • Solution: the host uses a pacing mechanism – Only a predetermined number of flits may be transmitted per time period
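A pacing mechanism of this kind can be sketched as a fixed-window flit budget. The class `FlitPacer`, the window semantics (fixed windows of `period` time units, aligned to time 0), and the numbers below are assumptions for illustration; the slide only states that a predetermined number of flits may be sent per time period.

```python
class FlitPacer:
    """Hypothetical per-connection pacer: at most `flits_per_period` flits per window of `period`."""

    def __init__(self, flits_per_period: int, period: float):
        self.flits_per_period = flits_per_period
        self.period = period
        self.window_start = 0.0
        self.sent_in_window = 0

    def try_send(self, now: float, flits: int) -> int:
        """Return how many of `flits` may be injected at time `now`."""
        # Advance to the window containing `now` and reset the budget if it has expired.
        if now >= self.window_start + self.period:
            elapsed_windows = int((now - self.window_start) // self.period)
            self.window_start += elapsed_windows * self.period
            self.sent_in_window = 0
        allowed = min(self.flits_per_period - self.sent_in_window, flits)
        self.sent_in_window += allowed
        return allowed


pacer = FlitPacer(flits_per_period=4, period=10)
print(pacer.try_send(0, 10))  # 4: budget for window [0, 10) is used up
print(pacer.try_send(5, 6))   # 0: still in the same window
print(pacer.try_send(10, 6))  # 4: new window
print(pacer.try_send(20, 2))  # 2
```

A token bucket would allow short bursts while keeping the same long-term rate; the fixed window is used here only because it matches the "flits per time period" wording most directly.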

Number of Interfaces • Suppose a host has only one interface • Sender side: – The host can schedule its own transmissions into the network • Receiver side: – A non-QoS worm may block a QoS worm – The QoS worm is delayed, and the delay grows with the length of the non-QoS worm • Solutions: – Two interfaces: this doubles the network interface cost – With a single interface, account for the worst-case delay caused by non-QoS traffic at call setup time
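The single-interface option can be illustrated with a worst-case blocking term added at call setup. This sketch assumes that non-QoS worms have a known maximum length and that a QoS worm arriving at a busy receive interface waits for the whole non-QoS worm to drain; the function names, units, and numbers are hypothetical and only illustrate the arithmetic.

```python
def worst_case_qos_delay(path_delay_us: float, max_nonqos_worm_bytes: int,
                         link_rate_bytes_per_us: float) -> float:
    # Worst case: the QoS worm reaches the single receive interface just after a
    # maximum-size non-QoS worm has started arriving, and must wait for it to drain.
    blocking_us = max_nonqos_worm_bytes / link_rate_bytes_per_us
    return path_delay_us + blocking_us


def admit_on_single_interface(delay_bound_us: float, path_delay_us: float,
                              max_nonqos_worm_bytes: int,
                              link_rate_bytes_per_us: float) -> bool:
    """Accept the connection only if the bound holds even with worst-case blocking."""
    return worst_case_qos_delay(path_delay_us, max_nonqos_worm_bytes,
                                link_rate_bytes_per_us) <= delay_bound_us


# Assumed numbers: 160 bytes/us link, 8000-byte maximum non-QoS worm, 5 us path delay.
print(worst_case_qos_delay(5.0, 8000, 160.0))              # 55.0
print(admit_on_single_interface(100.0, 5.0, 8000, 160.0))  # True
print(admit_on_single_interface(40.0, 5.0, 8000, 160.0))   # False
```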

Alternative • Subnets: – It is difficult to provide delay bounds because of the dynamics of blocking at different crosspoints • Alternative: – Impose a synchronous structure on top of the asynchronous network – This gives control over blocking – Delay bounds and message priorities can then be implemented • Trade-off: – The network is no longer asynchronous – Under low load, messages suffer extra delay from the synchronous protocol overhead

QoS Support via Synchronous Framework • Similar to dedicated traffic channels • A timed token controls the traffic streams • Provides tighter delay bounds and bandwidth guarantees • The Target Token Rotation Time (TTRT) limits how long each node may transmit per token visit • Average delay = TTRT • Worst-case delay = 2 × TTRT
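The timed-token rule can be sketched as a small simulation. This is a minimal model under stated assumptions, not the authors' implementation: every node always has non-QoS (asynchronous) traffic waiting, the ring latency is split evenly across hops, each node always sends its full synchronous (QoS) allocation, and a node may send asynchronous traffic only for the time by which the token arrived early. The function `simulate_timed_token` and its parameters are hypothetical. It returns the longest interval between consecutive token visits at any node, which should stay within the 2 × TTRT worst case when the synchronous allocations plus ring latency fit within TTRT.

```python
def simulate_timed_token(sync_alloc, ttrt, ring_latency, rotations):
    """Return the longest token rotation time observed by any node (hypothetical model)."""
    n = len(sync_alloc)
    # Timed-token precondition: synchronous allocations plus ring latency fit in TTRT.
    if sum(sync_alloc) + ring_latency > ttrt:
        raise ValueError("sum(sync_alloc) + ring_latency must not exceed TTRT")
    hop = ring_latency / n  # token propagation time between neighboring nodes
    now = 0.0
    last_arrival = [None] * n
    max_rotation = 0.0
    for _ in range(rotations):
        for i in range(n):
            if last_arrival[i] is None:
                async_time = 0.0  # first visit: no rotation time measured yet
            else:
                rotation = now - last_arrival[i]
                max_rotation = max(max_rotation, rotation)
                # An early token leaves (ttrt - rotation) for non-QoS traffic;
                # a late token leaves none.
                async_time = max(0.0, ttrt - rotation)
            last_arrival[i] = now
            # QoS traffic up to the node's allocation, then non-QoS traffic.
            now += sync_alloc[i] + async_time
            now += hop  # pass the token to the next node on the ring
    return max_rotation


max_rot = simulate_timed_token([1.0, 2.0, 1.5, 0.5], ttrt=10.0, ring_latency=2.0,
                               rotations=200)
print(max_rot, max_rot <= 2 * 10.0)  # second value: True
```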

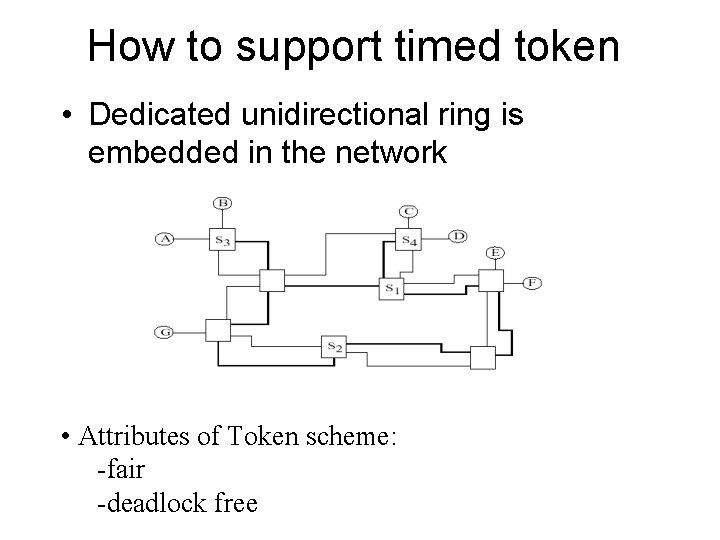

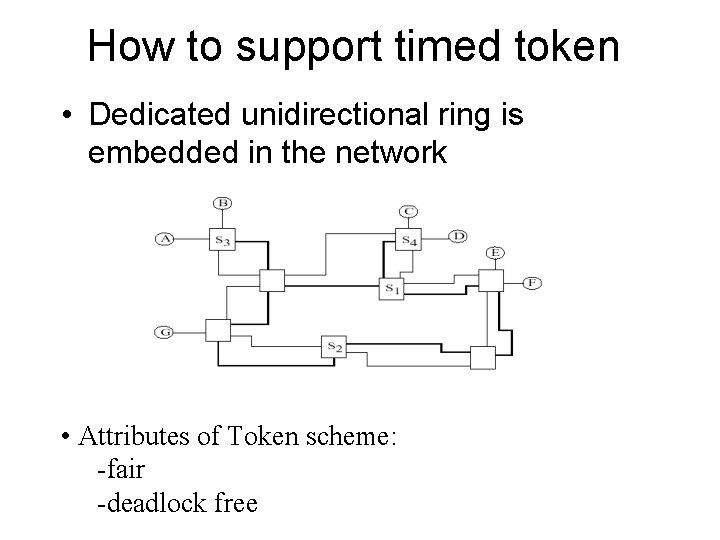

How to Support the Timed Token • A dedicated unidirectional ring is embedded in the network • Attributes of the token scheme: -fair -deadlock free

Issues • Number of host interfaces – Caused by the interaction of QoS and non-QoS traffic – QoS traffic travels on the core ring, while non-QoS traffic travels on the links not on the ring – If a host with one interface is busy receiving a non-QoS message, a QoS message will be delayed – QoS messages must therefore have preemptive priority • To increase non-QoS throughput, the embedded ring may also carry non-QoS traffic when QoS traffic does not use all of its bandwidth

Issues (continued) • Scalability: – Throughput is maintained by increasing the TTRT parameter – This allows nodes to transmit longer each time they hold the token – The cost is a reduced ability to provide tight delay bounds
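The scalability trade-off can be written with the standard timed-token constraints, assuming the embedded ring behaves like an FDDI-style timed-token ring. With N nodes on the ring, H_i the synchronous (QoS) transmission time allotted to node i per token visit, and τ the ring latency, allocations must satisfy the first inequality, and the time between consecutive token visits at a node is then bounded by the second:

```latex
\sum_{i=1}^{N} H_i + \tau \le \mathrm{TTRT},
\qquad
T_{\mathrm{rot}} \le \tau + \sum_{i=1}^{N} H_i + \mathrm{TTRT} \le 2\,\mathrm{TTRT}.
```

Raising TTRT leaves more room for the allocations H_i, so each node transmits longer per token visit and the fixed ring latency τ becomes a smaller share of each rotation; but the worst-case rotation time, and with it the delay bound, grows as 2·TTRT.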

Virtual Channel Based QoS • Each link is split into two sets of virtual channels, one for datagram traffic and one for QoS traffic • Each input port buffer of a switch is split into several disjoint buffers • The link between a node and a switch input port is a collection of virtual channels • This allows worms to be interleaved • QoS traffic is given priority in the network

Non-preemptive Priority • A worm arriving at a QoS virtual channel is not transmitted right away • The worm currently being transmitted on the outgoing link (datagram or QoS) must either complete or become blocked • Then the QoS virtual channels are scanned before the datagram channels to select the next worm for the outgoing link • Preemptive priority is easy to add: a worm arriving at a QoS virtual channel preempts the datagram worm being transmitted
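Both priority modes can be sketched as a slot-by-slot scheduler for one output link. This assumes one flit per time slot, a single FIFO standing in for each class's set of virtual channels, and no downstream blocking; `schedule_output_link` and its arrival format are hypothetical. With `preemptive=False` a QoS worm waits for the current worm to finish, after which QoS channels are scanned first. With `preemptive=True` the switch checks for waiting QoS flits before sending each datagram flit, and the preempted datagram worm's remaining flits stay at the head of its virtual channel.

```python
from collections import deque


def schedule_output_link(arrivals, preemptive, horizon):
    """arrivals: list of (slot, cls, worm_id, n_flits) with cls in {"qos", "dgram"}.

    Returns the worm id sent on the output link in each slot (None = idle).
    """
    vcs = {"qos": deque(), "dgram": deque()}  # one FIFO per traffic class
    current = None  # (cls, worm_id, flits_left) of the worm holding the link
    sent = []
    for slot in range(horizon):
        for arr_slot, cls, worm_id, n_flits in arrivals:
            if arr_slot == slot:
                vcs[cls].append((worm_id, n_flits))
        # Preemptive mode: a waiting QoS worm takes the link from a datagram worm;
        # the datagram worm's remaining flits stay at the head of its channel.
        if preemptive and current is not None and current[0] == "dgram" and vcs["qos"]:
            vcs["dgram"].appendleft(current[1:])
            current = None
        if current is None:
            for cls in ("qos", "dgram"):  # scan QoS channels before datagram channels
                if vcs[cls]:
                    worm_id, left = vcs[cls].popleft()
                    current = (cls, worm_id, left)
                    break
        if current is None:
            sent.append(None)
            continue
        cls, worm_id, left = current
        sent.append(worm_id)
        current = (cls, worm_id, left - 1) if left > 1 else None
    return sent


arrivals = [(0, "dgram", "D", 5), (1, "qos", "Q", 2)]
print(schedule_output_link(arrivals, preemptive=False, horizon=8))
# ['D', 'D', 'D', 'D', 'D', 'Q', 'Q', None]
print(schedule_output_link(arrivals, preemptive=True, horizon=8))
# ['D', 'Q', 'Q', 'D', 'D', 'D', 'D', None]
```

In this example the QoS worm finishes in slot 2 instead of slot 6 under preemption, at the cost of the per-flit check for QoS arrivals.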

Implementation • Both preemptive and non-preemptive implementations require intelligent switches • At a switch: – monitor all passing traffic – schedule QoS and non-QoS traffic according to the protocol • Preemptive priority is harder to implement: – before transmitting each non-QoS flit from an output port, the switch must check whether QoS traffic has arrived at any input port

Advantages of Virtual Channels • The network appears the same to both traffic classes • Intelligent switches allocate bandwidth as required to support QoS • Delay jitter bounds can be provided • Bandwidth guarantees are provided by a call admission agent

Conclusions • Wormhole routing networks provide low-latency, high-bandwidth support for datagram traffic • Supporting QoS traffic is a challenge • A dedicated QoS subnet with pacing and call admission control can support QoS • A synchronous framework on top of the asynchronous network provides guaranteed bandwidth and delay • Virtual channels with a priority mechanism are also an effective way to support QoS

Highspeed test

Highspeed test Dsl highspeed

Dsl highspeed Static routing and dynamic routing

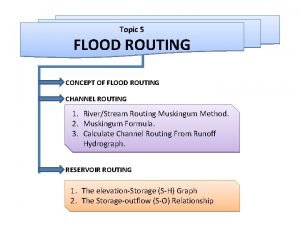

Static routing and dynamic routing Hydrologic continuity equation

Hydrologic continuity equation Difference between clock routing and power routing

Difference between clock routing and power routing Flood routing example

Flood routing example Schwartz child wormhole

Schwartz child wormhole Wormhole teleportation

Wormhole teleportation Wormhole 10gb

Wormhole 10gb Jonkers

Jonkers Badrinath

Badrinath Broadcast routing in computer networks

Broadcast routing in computer networks Backbone networks in computer networks

Backbone networks in computer networks Difference between virtual circuit and datagram

Difference between virtual circuit and datagram Minor and major supporting details

Minor and major supporting details Traditional inter vlan routing

Traditional inter vlan routing Dragonfly network topology

Dragonfly network topology Diverse routing

Diverse routing Masking and data routing mechanism

Masking and data routing mechanism Hydrologic routing

Hydrologic routing