Modeling Long Term Care and Supportive Housing

- Slides: 23

Modeling Long Term Care and Supportive Housing
Marisela Mainegra Hing
Telfer School of Management, University of Ottawa
Canadian Operational Research Society, May 18, 2011

Outline
- Long Term Care and Supportive Housing
- Queueing Models
- Dynamic Programming Model
- Approximate Dynamic Programming

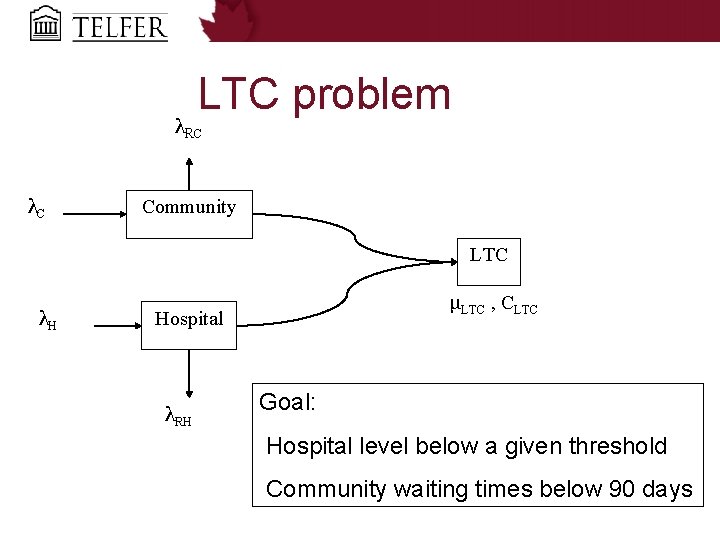

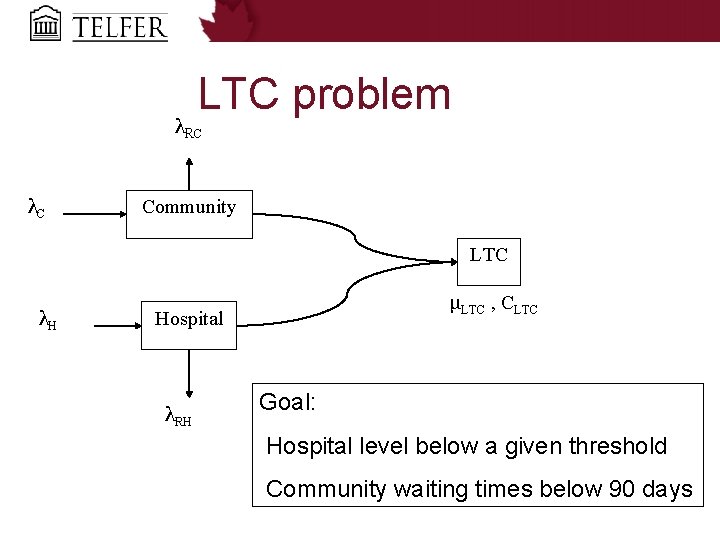

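LTC problem
[Diagram: Community patients (arrival rate $\lambda_C$, reneging rate $\lambda_{RC}$) and Hospital patients (arrival rate $\lambda_H$, reneging rate $\lambda_{RH}$) wait for LTC (service rate $\mu_{LTC}$, capacity $C_{LTC}$).]
Goal:
- Keep the Hospital level (Hospital patients waiting) below a given threshold.
- Keep Community waiting times below 90 days.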

LTC previous results
- An MDP model determined a threshold policy for the Hospital, but it did not take Community demand into account.
- A simulation model determined that the current capacity is insufficient to achieve the goal.

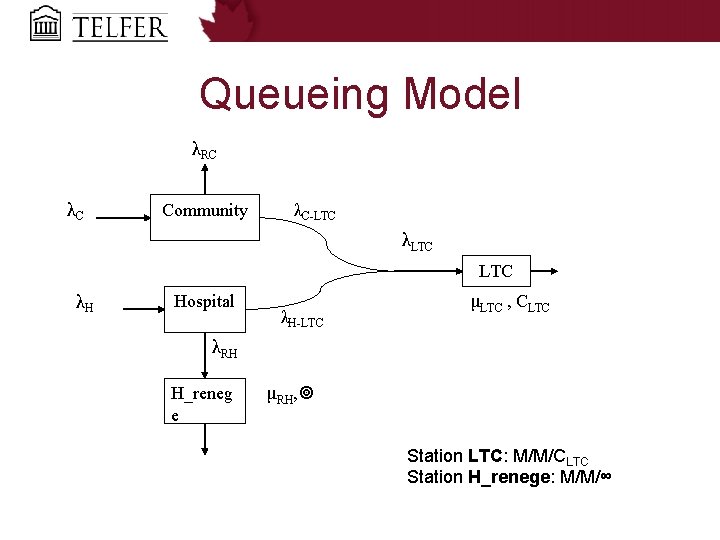

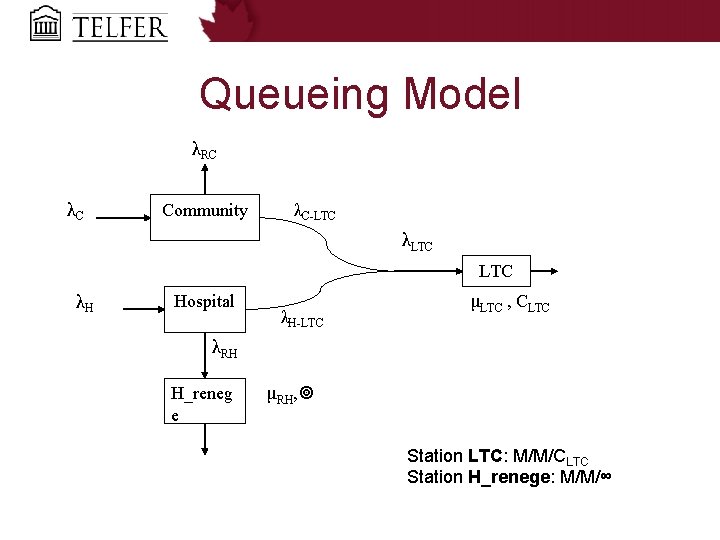

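Queueing Model
[Diagram: Community arrivals ($\lambda_C$, reneging $\lambda_{RC}$) and Hospital arrivals ($\lambda_H$) enter station LTC at rates $\lambda_C^{LTC}$ and $\lambda_H^{LTC}$ (combined rate $\lambda_{LTC}$; service rate $\mu_{LTC}$, capacity $C_{LTC}$); Hospital patients who renege enter station H_renege at rate $\lambda_{RH}$ (service rate $\mu_{RH}$).]
- Station LTC: M/M/$C_{LTC}$
- Station H_renege: M/M/∞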

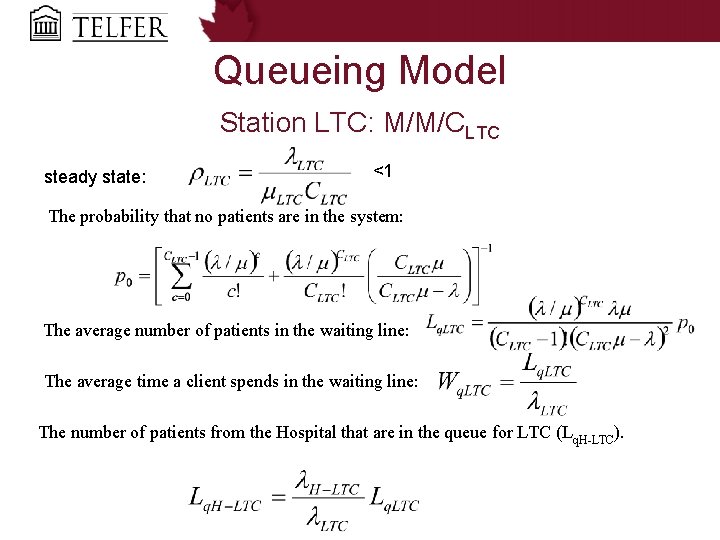

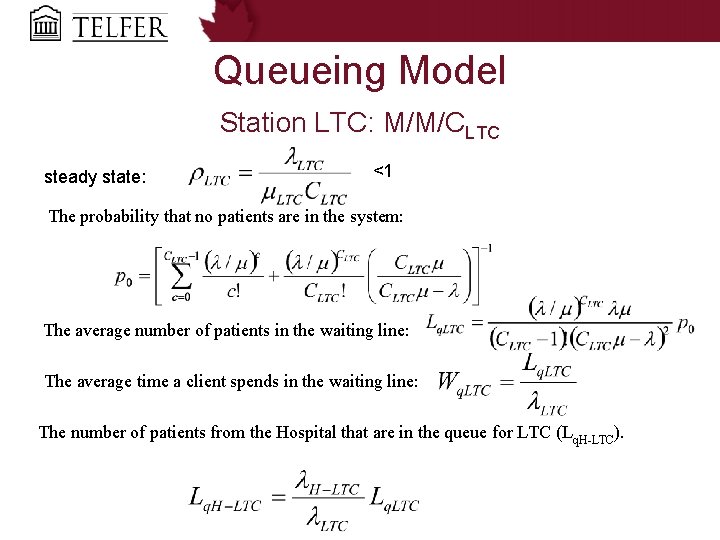

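Queueing Model
Station LTC: M/M/$C_{LTC}$, which has a steady state when $\rho_{LTC} = \lambda_{LTC}/(C_{LTC}\,\mu_{LTC}) < 1$.
- Probability that no patients are in the system: $P_0 = \left[\sum_{n=0}^{C_{LTC}-1} \frac{(\lambda_{LTC}/\mu_{LTC})^n}{n!} + \frac{(\lambda_{LTC}/\mu_{LTC})^{C_{LTC}}}{C_{LTC}!\,(1-\rho_{LTC})}\right]^{-1}$
- Average number of patients in the waiting line: $L_q^{LTC} = \frac{P_0\,(\lambda_{LTC}/\mu_{LTC})^{C_{LTC}}\,\rho_{LTC}}{C_{LTC}!\,(1-\rho_{LTC})^2}$
- Average time a client spends in the waiting line: $W_q^{LTC} = L_q^{LTC}/\lambda_{LTC}$
- Number of patients from the Hospital that are in the queue for LTC: $L_q^{H,LTC}$.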
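The station LTC quantities follow from the textbook M/M/c expressions for $P_0$, $L_q$ and $W_q$. Below is a minimal Python sketch, assuming only those standard formulas; the function name `mmc_metrics` is hypothetical. Working in log space (via `math.lgamma`) is a choice made here so that capacities in the thousands, such as $C_{LTC} = 4530$, do not overflow a float. The slides do not show how $L_q^{H,LTC}$ is derived, so the sketch stops at the totals.

```python
import math

def mmc_metrics(lam, mu, c):
    """Steady-state metrics of an M/M/c queue: (rho, P0, Lq, Wq).

    Computed in log space so that c in the thousands does not overflow.
    """
    rho = lam / (c * mu)                     # server utilization
    if rho >= 1:
        raise ValueError("no steady state: need rho < 1")
    a = lam / mu                             # offered load
    log_a = math.log(a)
    # Logs of the terms a^n / n!, n = 0..c-1, of the P0 normalizing sum
    logs = [n * log_a - math.lgamma(n + 1) for n in range(c)]
    # Log of the last term a^c / (c! (1 - rho))
    log_tail = c * log_a - math.lgamma(c + 1) - math.log(1 - rho)
    logs.append(log_tail)
    m = max(logs)                            # log-sum-exp for stability
    log_norm = m + math.log(sum(math.exp(x - m) for x in logs))
    p0 = math.exp(-log_norm)                 # may underflow to 0.0 for large loads
    # Lq = P0 a^c rho / (c! (1 - rho)^2), taken from logs rather than from p0
    log_lq = log_tail - log_norm + math.log(rho) - math.log(1 - rho)
    lq = math.exp(log_lq)
    wq = lq / lam                            # Little's law
    return rho, p0, lq, wq
```

For example, `mmc_metrics(1.0, 1.0, 2)` gives $P_0 = L_q = 1/3$. For offered loads in the thousands $P_0$ itself underflows to `0.0`, which is why $L_q$ is assembled from logarithms instead of from `p0`.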

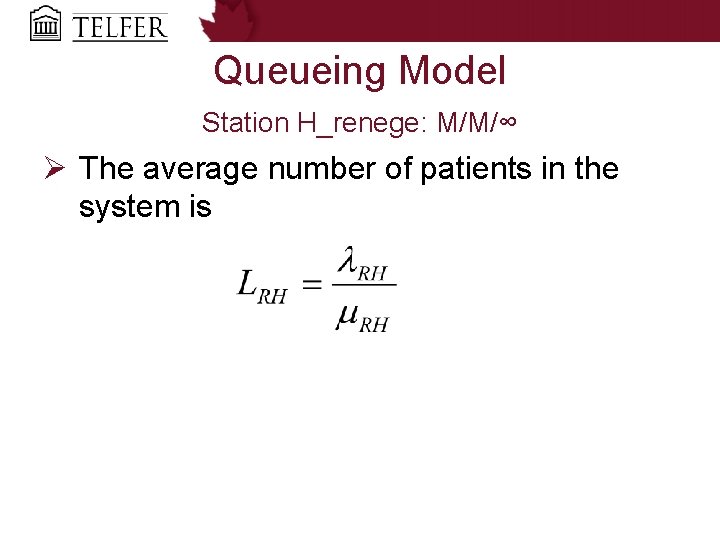

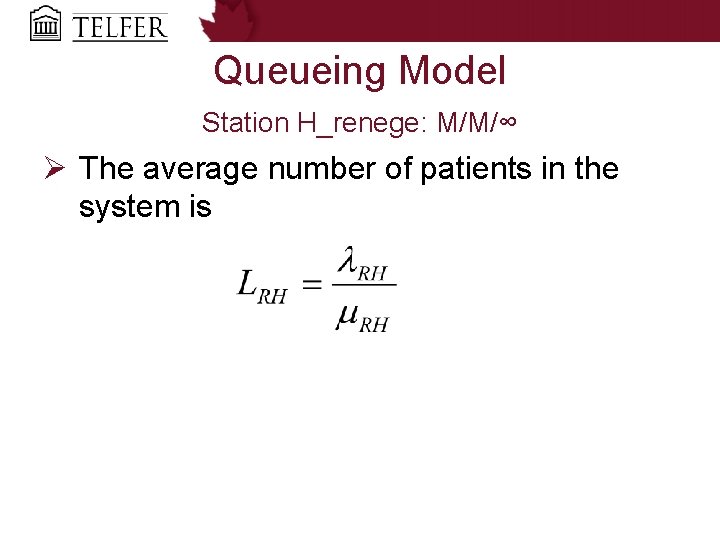

Queueing Model
Station H_renege: M/M/∞
- The average number of patients in the system is $L_{H\_renege} = \lambda_{RH}/\mu_{RH}$.
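For an M/M/∞ station the mean number in system is the arrival rate divided by the service rate, and this is easy to sanity-check by simulation. A minimal sketch, assuming Poisson arrivals and exponential sojourns; the function name and the rates in the example are illustrative, not data from the study. Because nobody ever queues at an M/M/∞ station, the time-average number in system is simply the total time patients spend there divided by the horizon.

```python
import random

def mm_inf_mean_in_system(lam, mu, horizon, seed=0):
    """Time-average number in an M/M/inf station, estimated by simulation.

    Arrivals are Poisson(lam); each patient stays an Exp(mu) time and never
    waits, so the integral of N(t) over [0, horizon] is the sum of each
    patient's time in the system, clipped to the horizon.
    """
    rng = random.Random(seed)
    t, area = 0.0, 0.0
    while True:
        t += rng.expovariate(lam)             # next arrival time
        if t >= horizon:
            break
        departure = t + rng.expovariate(mu)   # no queue: service starts at once
        area += min(departure, horizon) - t
    return area / horizon

lam_rh, mu_rh = 2.0, 0.5                      # illustrative rates, not study data
estimate = mm_inf_mean_in_system(lam_rh, mu_rh, horizon=20_000.0, seed=1)
print(estimate, lam_rh / mu_rh)               # the two should be close
```

The simulation starts empty, which biases the estimate slightly downward; over a long horizon that warm-up effect is negligible.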

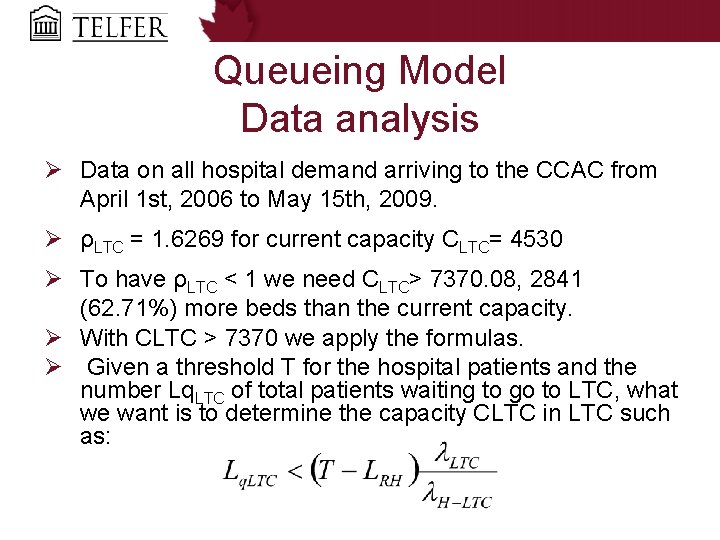

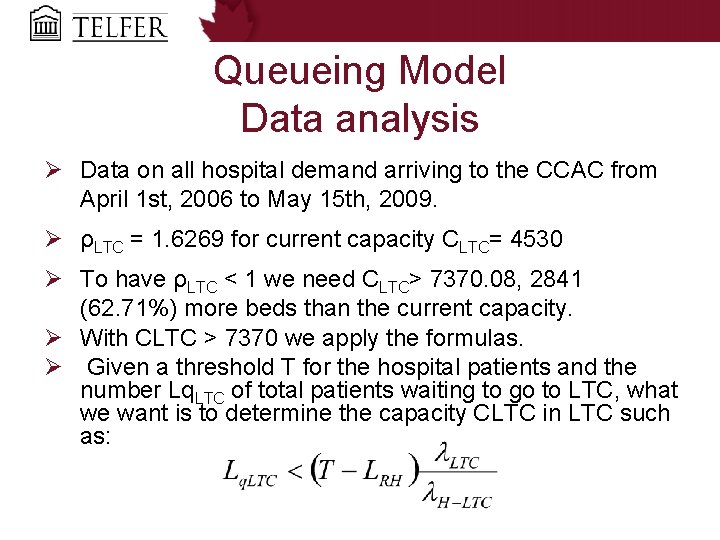

Queueing Model: data analysis
- Data: all Hospital demand arriving to the CCAC from April 1st, 2006 to May 15th, 2009.
- $\rho_{LTC} = 1.6269$ for the current capacity $C_{LTC} = 4530$.
- To have $\rho_{LTC} < 1$ we need $C_{LTC} > 7370.08$, i.e., at least 7371 beds, 2841 (62.71%) more than the current capacity.
- With $C_{LTC} > 7370$ the steady-state formulas apply.
- Given a threshold $T$ for the Hospital patients and the number $L_q^{LTC}$ of all patients waiting to go to LTC, we want to determine the capacity $C_{LTC}$ such that:
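The stability threshold on this slide is plain arithmetic: with offered load $a = \lambda_{LTC}/\mu_{LTC}$, the condition $\rho_{LTC} = a / C_{LTC} < 1$ requires $C_{LTC} > a$. A small sketch that reproduces the figures, taking $a = 7370.08$ from the slide's threshold; the helper name `stability_gap` is hypothetical.

```python
import math

def stability_gap(offered_load, current_capacity):
    """Return (rho at current capacity, smallest stable capacity,
    extra beds needed, extra beds as % of current capacity)."""
    rho_now = offered_load / current_capacity
    c_min = math.floor(offered_load) + 1      # smallest integer C with load / C < 1
    extra = c_min - current_capacity
    return rho_now, c_min, extra, 100.0 * extra / current_capacity

# Offered load lambda_LTC / mu_LTC implied by the slide's threshold of 7370.08.
rho_now, c_min, extra, pct = stability_gap(7370.08, 4530)
```

This gives $\rho_{LTC} \approx 1.6269$, a minimum of 7371 beds and 2841 extra beds, about 62.7% of the current 4530.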

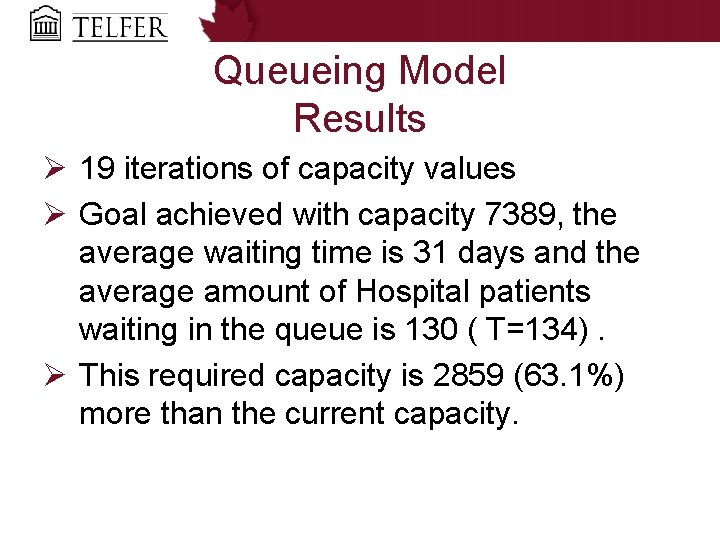

Queueing Model Results
- 19 iterations over capacity values.
- The goal is achieved with capacity 7389: the average waiting time is 31 days and the average number of Hospital patients waiting in the queue is 130 ($T = 134$).
- This required capacity is 2859 beds (63.1%) more than the current capacity.
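The slide does not say how the 19 capacity values were chosen. One way to run such a search, assuming the goal is checked as (Hospital share of $L_q^{LTC}$) at most $T$ and $W_q^{LTC}$ at most 90 days, is bisection over $C_{LTC}$, which works because both quantities decrease as capacity grows. Everything here is a hypothetical sketch: the function names, the proportional Hospital share and the rates in the example are illustrative, not the study's data. $L_q$ is computed with the Erlang B recursion, which stays stable for large $C_{LTC}$.

```python
import math

def mmc_lq_wq(lam, mu, c):
    """Mean queue length Lq and mean wait Wq of an M/M/c queue.

    Uses the Erlang B recursion, then converts to Erlang C; this stays
    numerically stable for c in the thousands. Returns (inf, inf) if unstable.
    """
    a = lam / mu                        # offered load
    rho = a / c                         # utilization
    if rho >= 1:
        return math.inf, math.inf
    b = 1.0
    for k in range(1, c + 1):
        b = a * b / (k + a * b)         # Erlang B with k servers
    p_wait = b / (1 - rho * (1 - b))    # Erlang C: probability of waiting
    lq = p_wait * rho / (1 - rho)
    return lq, lq / lam                 # Little's law: Wq = Lq / lambda

def meets_goal(c, lam, mu, hospital_share, threshold, max_wait):
    """Hypothetical goal check: Hospital patients in queue <= threshold and
    mean wait <= max_wait (same time unit as 1 / lam)."""
    lq, wq = mmc_lq_wq(lam, mu, c)
    return hospital_share * lq <= threshold and wq <= max_wait

def smallest_capacity(lam, mu, hospital_share, threshold, max_wait, c_hi):
    """Bisection for the smallest stable capacity meeting the goal.
    Returns (capacity, number of bisection iterations)."""
    args = (lam, mu, hospital_share, threshold, max_wait)
    lo, hi = math.floor(lam / mu) + 1, c_hi
    if not meets_goal(hi, *args):
        raise ValueError("c_hi does not meet the goal; raise it")
    iterations = 0
    while lo < hi:                      # invariant: meets_goal(hi) is True
        mid = (lo + hi) // 2
        iterations += 1
        if meets_goal(mid, *args):
            hi = mid
        else:
            lo = mid + 1
    return lo, iterations

# Illustrative numbers only: 50 arrivals/day, mean stay 64 days, half from Hospital.
capacity, iterations = smallest_capacity(lam=50.0, mu=1 / 64, hospital_share=0.5,
                                         threshold=134, max_wait=90, c_hi=5_000)
```

Bisection needs only about $\log_2$ of the search range in goal checks, so a range of a few thousand beds is covered in a dozen or so evaluations.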

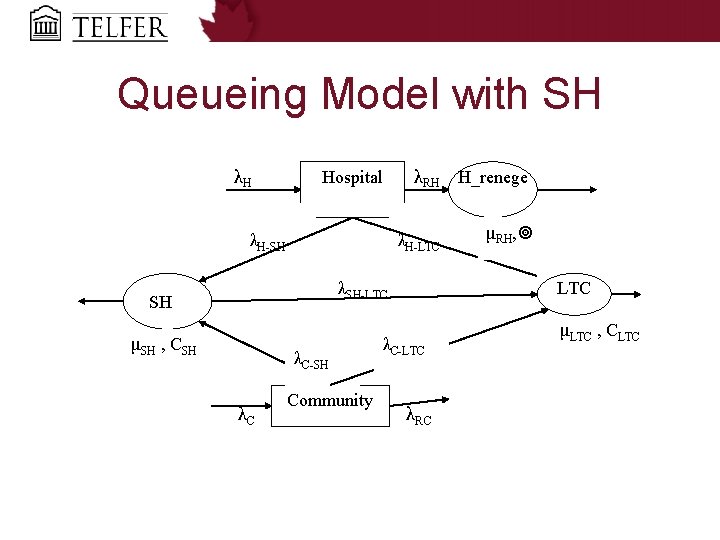

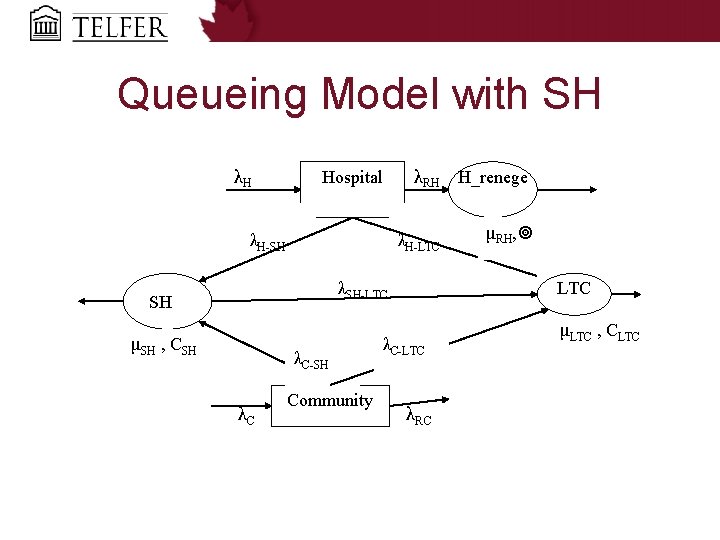

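Queueing Model with SH
[Diagram: Hospital arrivals ($\lambda_H$) go to SH ($\lambda_H^{SH}$), to LTC ($\lambda_H^{LTC}$), or renege to station H_renege ($\lambda_{RH}$, service rate $\mu_{RH}$); SH (service rate $\mu_{SH}$, capacity $C_{SH}$) sends patients to LTC ($\lambda_{SH}^{LTC}$); Community arrivals ($\lambda_C$, reneging $\lambda_{RC}$) go to LTC ($\lambda_C^{LTC}$); LTC has service rate $\mu_{LTC}$ and capacity $C_{LTC}$.]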

Queueing Model with SH Results
- Required capacity in LTC is 6835, 2305 (50.883%) more beds than the current capacity (4530).
- Required capacity in SH is 1169.
- With capacities of 6835 at LTC and 1169 at SH, there are on average 133.9943 Hospital patients waiting for care ($T = 134$): 110.3546 for LTC, 22.7475 reneging and 0.89229 for SH. Community patients wait on average 34.8799 days for LTC and 3.2433 days for SH.
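The Hospital total on this slide is the sum of its three components, and the LTC increase follows from the current capacity. A quick check of that arithmetic, using only the numbers reported on the slide (variable names are illustrative):

```python
# Figures copied from the slide (averages from the queueing model with SH).
hospital_waiting = {"LTC": 110.3546, "reneging": 22.7475, "SH": 0.89229}
threshold = 134
current_ltc, required_ltc = 4530, 6835

total_waiting = sum(hospital_waiting.values())     # Hospital level
extra_beds = required_ltc - current_ltc
extra_pct = 100.0 * extra_beds / current_ltc

print(f"Hospital level {total_waiting:.4f} (T = {threshold}), "
      f"{extra_beds} extra LTC beds ({extra_pct:.3f}%)")
```

The components sum to about 133.994, just under $T = 134$, which is what makes 6835 and 1169 the binding capacities.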

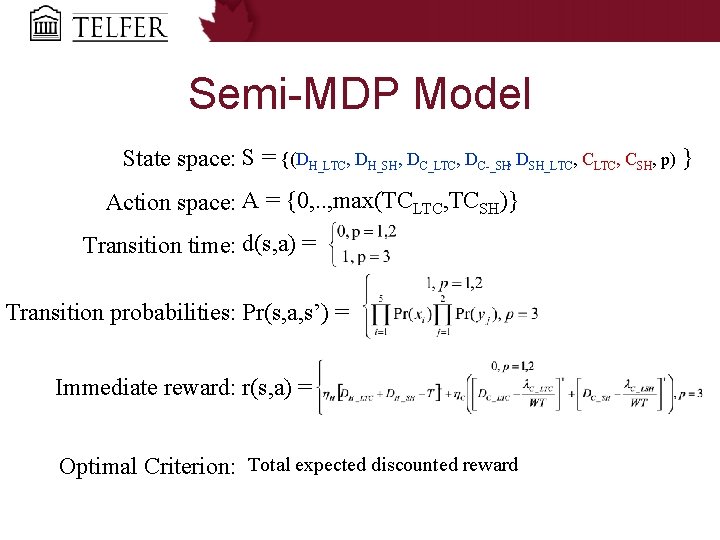

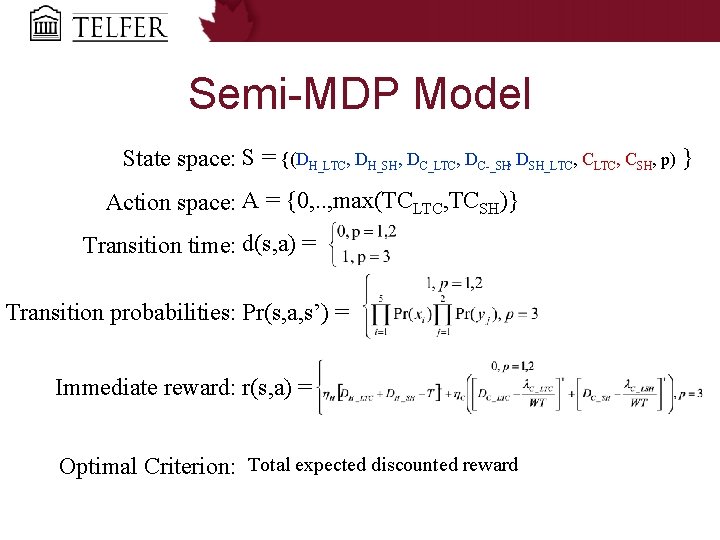

Semi-MDP Model
- State space: S = {(DH_LTC, DH_SH, DC_LTC, DC_SH, DSH_LTC, CSH, p)}
- Action space: A = {0, ..., max(TCLTC, TCSH)}
- Transition time: d(s, a)
- Transition probabilities: Pr(s, a, s')
- Immediate reward: r(s, a)
- Optimality criterion: total expected discounted reward

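Approximate Dynamic Programming
- Goal: find a policy $\pi: S \to A$ that maximizes the state-action value function $Q^{\pi}(s, a)$, where $\gamma$ is the discount factor.
- Bellman: there exists an optimal $Q^*$, with $Q^*(s, a) = \max_{\pi} Q^{\pi}(s, a)$, and the optimal policy is $\pi^*(s) = \arg\max_{a} Q^*(s, a)$.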
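To make the Bellman statement concrete, here is a minimal Q-value iteration on a hypothetical two-state, two-action MDP (states, rewards and names are illustrative, not the LTC model). It repeatedly applies $Q(s,a) \leftarrow r(s,a) + \gamma \sum_{s'} \Pr(s,a,s') \max_{a'} Q(s',a')$ until the values stop changing, then reads off the greedy policy $\pi^*(s) = \arg\max_a Q^*(s,a)$. In the SMDP the discount would also depend on the transition time $d(s, a)$; this sketch uses a plain discrete-time MDP for brevity.

```python
def q_value_iteration(states, actions, P, R, gamma, tol=1e-10):
    """Iterate the Bellman optimality equation for Q until convergence.

    P[s][a] maps next states to probabilities; R[s][a] is the immediate reward.
    Returns the Q table and the greedy (optimal) policy.
    """
    Q = {(s, a): 0.0 for s in states for a in actions}
    while True:
        delta = 0.0
        for s in states:
            for a in actions:
                backup = R[s][a] + gamma * sum(
                    p * max(Q[(s2, b)] for b in actions)
                    for s2, p in P[s][a].items())
                delta = max(delta, abs(backup - Q[(s, a)]))
                Q[(s, a)] = backup        # in-place (Gauss-Seidel) update
        if delta < tol:
            break
    policy = {s: max(actions, key=lambda a: Q[(s, a)]) for s in states}
    return Q, policy

# Hypothetical toy MDP: action 1 switches state, action 0 stays.
states, actions = [0, 1], [0, 1]
P = {0: {0: {0: 1.0}, 1: {1: 1.0}}, 1: {0: {1: 1.0}, 1: {0: 1.0}}}
R = {0: {0: 0.0, 1: 1.0}, 1: {0: 2.0, 1: 0.0}}
Q, policy = q_value_iteration(states, actions, P, R, gamma=0.9)
```

In this toy model staying in state 1 earns 2 per step, so $Q^*(1, 0) = 2/(1-0.9) = 20$, and the best move from state 0 is to switch, with $Q^*(0, 1) = 1 + 0.9 \cdot 20 = 19$.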

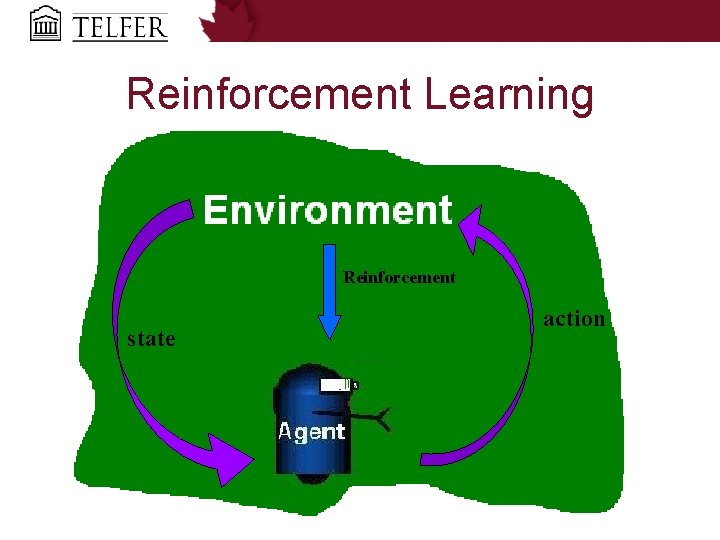

Reinforcement Learning
[Diagram: the agent observes a state, takes an action and receives a reinforcement.]

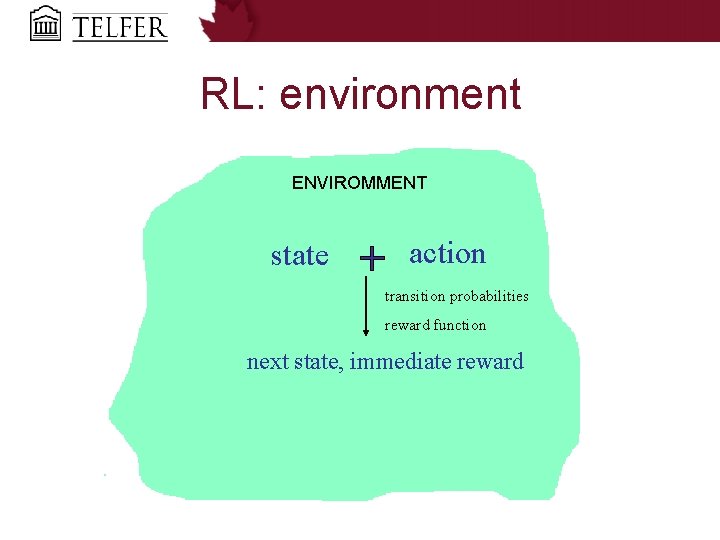

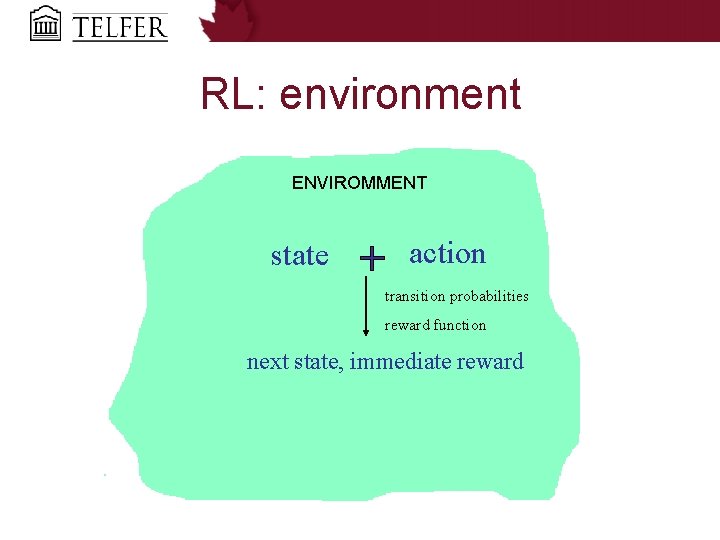

RL: environment
[Diagram: the environment takes the current state and the agent's action and, through its transition probabilities and reward function, returns the next state and an immediate reward.]
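The environment box can be written as a small class: given the current state and action, it samples the next state from the transition probabilities and returns the immediate reward. A minimal sketch; the class name and the dictionary layout of `P` and `R` are assumptions made for illustration.

```python
import random

class Environment:
    """Toy RL environment: samples transitions and returns rewards.

    P[s][a] maps next states to probabilities; R[s][a] is the immediate reward.
    """

    def __init__(self, P, R, seed=0):
        self.P = P
        self.R = R
        self.rng = random.Random(seed)

    def step(self, state, action):
        """Return (next_state, immediate_reward) for taking action in state."""
        dist = self.P[state][action]
        next_state = self.rng.choices(list(dist), weights=list(dist.values()))[0]
        return next_state, self.R[state][action]
```

Usage: `Environment(P, R).step(state, action)` returns the pair that the agent uses to update its Q values.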

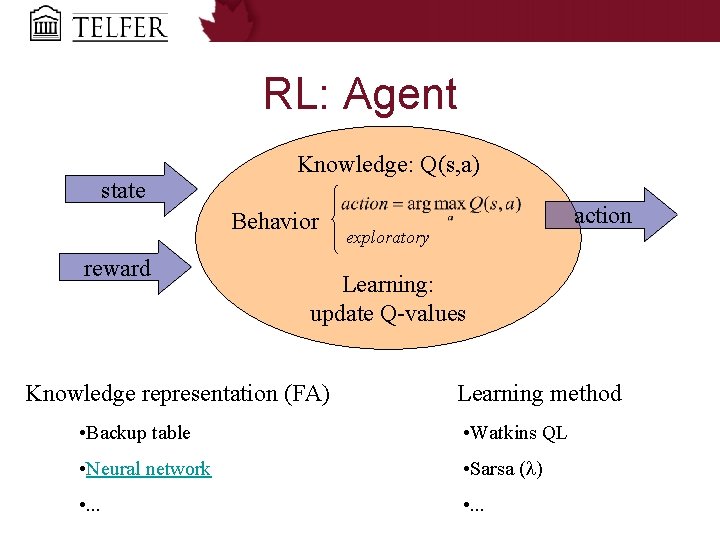

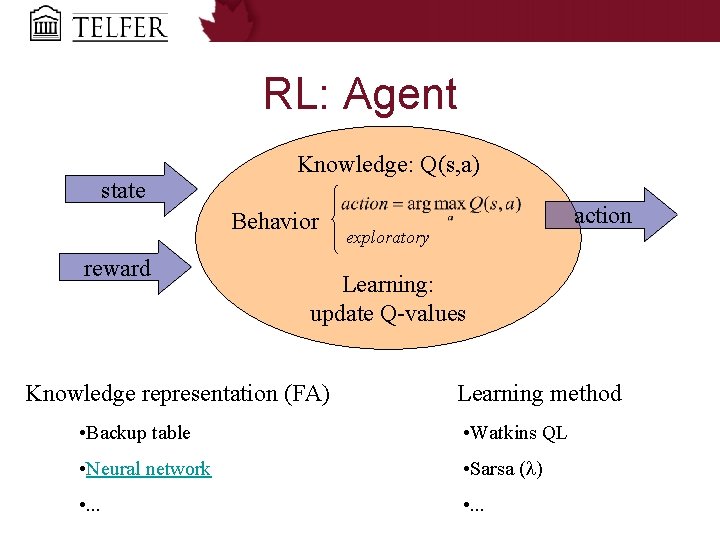

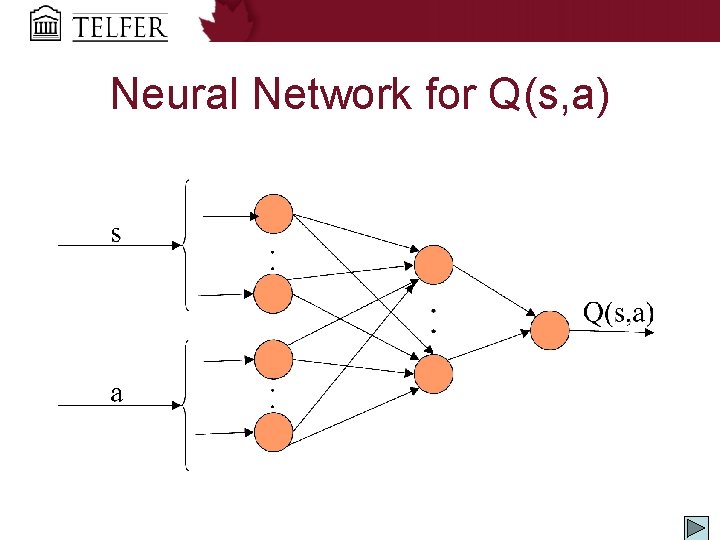

RL: Agent
[Diagram: the agent receives the state and reward, keeps its knowledge in $Q(s, a)$, chooses actions through an exploratory behavior, and learns by updating the Q values.]
- Knowledge representation (FA): backup table, neural network, ...
- Learning method: Watkins QL, Sarsa($\lambda$)
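The two learning methods named on the slide differ only in the target of the update. A minimal tabular sketch (function names are illustrative): Watkins Q-learning bootstraps from the best next action, whereas Sarsa bootstraps from the action the agent actually takes next. This shows one-step Sarsa; the Sarsa($\lambda$) variant adds eligibility traces, which are omitted here.

```python
def q_learning_update(Q, s, a, r, s_next, actions, alpha, gamma):
    """Watkins Q-learning: off-policy target uses the greedy next action."""
    target = r + gamma * max(Q[(s_next, b)] for b in actions)
    Q[(s, a)] += alpha * (target - Q[(s, a)])

def sarsa_update(Q, s, a, r, s_next, a_next, alpha, gamma):
    """One-step Sarsa: on-policy target uses the action actually taken next."""
    target = r + gamma * Q[(s_next, a_next)]
    Q[(s, a)] += alpha * (target - Q[(s, a)])
```

For instance, with $Q(1, \cdot) = (10, 2)$, reward 1, $\alpha = 0.5$ and $\gamma = 0.9$, Q-learning moves $Q(0, 0)$ from 0 to 5, while Sarsa, after exploring action 1, moves it only to 1.4.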

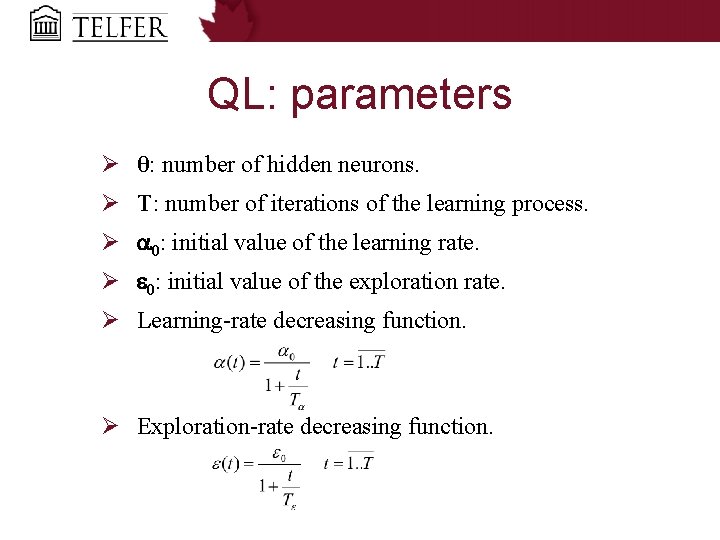

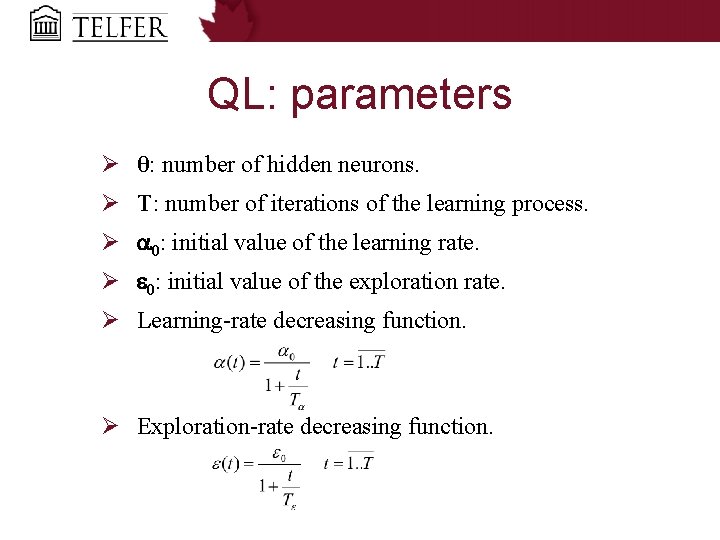

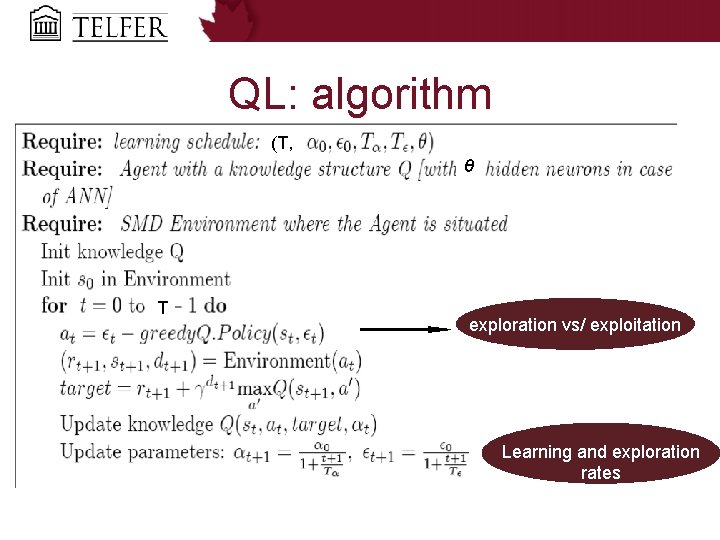

QL: parameters
- θ: number of hidden neurons.
- T: number of iterations of the learning process.
- Initial value of the learning rate.
- Initial value of the exploration rate.
- Learning rate decreasing function.
- Exploration rate decreasing function.
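The slide names the parameters but not the form of the decreasing functions. A minimal sketch that groups the parameters, assuming (hypothetically) a harmonic decay $x_t = x_0 \, \tau / (\tau + t)$ for both rates; the field names and the time constants `lr_scale` and `eps_scale` are illustrative. The example values $T = 10^4$, initial learning rate $10^{-3}$ and initial exploration rate 1 are the ones quoted in the tuning slides; θ = 50 is illustrative.

```python
from dataclasses import dataclass

@dataclass
class QLParams:
    hidden_neurons: int      # theta: hidden neurons of the Q network
    iterations: int          # T: iterations of the learning process
    lr0: float               # initial learning rate
    eps0: float              # initial exploration rate
    lr_scale: float          # hypothetical decay time constant (learning rate)
    eps_scale: float         # hypothetical decay time constant (exploration)

    def learning_rate(self, t: int) -> float:
        # Hypothetical harmonic decay: lr0 at t = 0, lr0 / 2 at t = lr_scale.
        return self.lr0 * self.lr_scale / (self.lr_scale + t)

    def exploration_rate(self, t: int) -> float:
        # Same hypothetical decay shape for the exploration rate.
        return self.eps0 * self.eps_scale / (self.eps_scale + t)

params = QLParams(hidden_neurons=50, iterations=10**4, lr0=1e-3, eps0=1.0,
                  lr_scale=1e3, eps_scale=1e3)
```

A harmonic decay keeps the sum of learning rates unbounded, a common condition for Q-learning convergence, while still shrinking steadily; other decreasing shapes would fit the slide equally well.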

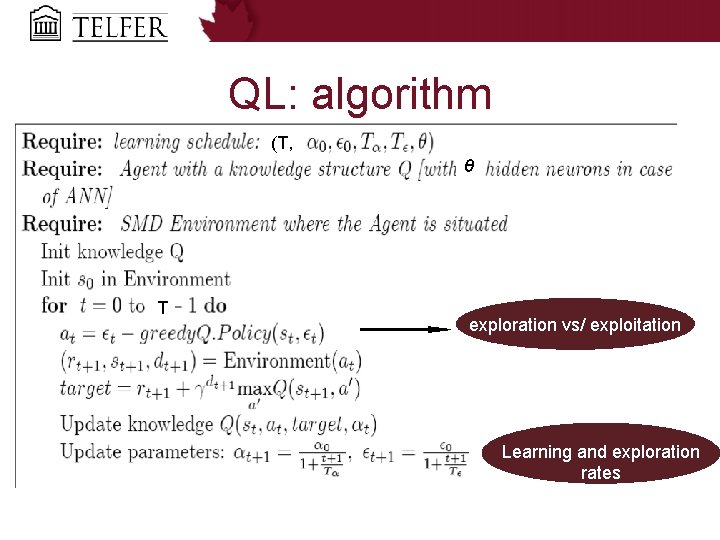

QL: algorithm
[Diagram: the Q-learning algorithm with parameters T and θ, balancing exploration vs. exploitation through the learning and exploration rates.]
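Putting the pieces together, here is a minimal tabular Q-learning loop with ε-greedy action selection (exploration vs. exploitation) and decreasing learning and exploration rates. It is a sketch under stated assumptions: it uses a lookup table rather than the neural network of the slides, the harmonic decay and all parameter values are hypothetical, and the example is a toy two-state MDP rather than the LTC model.

```python
import random

def train_q_learning(P, R, states, actions, steps, gamma, lr0, eps0, scale, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration and decaying rates.

    P[s][a] maps next states to probabilities; R[s][a] is the immediate reward.
    Returns the Q table and its greedy policy.
    """
    rng = random.Random(seed)
    Q = {(s, a): 0.0 for s in states for a in actions}
    s = states[0]
    for t in range(steps):
        lr = lr0 * scale / (scale + t)              # hypothetical learning-rate decay
        eps = eps0 * scale / (scale + t)            # hypothetical exploration decay
        if rng.random() < eps:
            a = rng.choice(actions)                 # explore
        else:
            a = max(actions, key=lambda b: Q[(s, b)])   # exploit
        dist = P[s][a]
        s2 = rng.choices(list(dist), weights=list(dist.values()))[0]
        target = R[s][a] + gamma * max(Q[(s2, b)] for b in actions)
        Q[(s, a)] += lr * (target - Q[(s, a)])      # Watkins Q-learning update
        s = s2
    policy = {st: max(actions, key=lambda b: Q[(st, b)]) for st in states}
    return Q, policy

# Hypothetical toy MDP: action 1 switches state, action 0 stays.
P = {0: {0: {0: 1.0}, 1: {1: 1.0}}, 1: {0: {1: 1.0}, 1: {0: 1.0}}}
R = {0: {0: 0.0, 1: 1.0}, 1: {0: 2.0, 1: 0.0}}
Q, policy = train_q_learning(P, R, states=[0, 1], actions=[0, 1], steps=20_000,
                             gamma=0.9, lr0=0.5, eps0=1.0, scale=1_000.0, seed=1)
```

Early on the agent explores almost every step; as ε decays it mostly exploits, and the learned greedy policy should match the optimal one (switch from state 0, stay in state 1).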

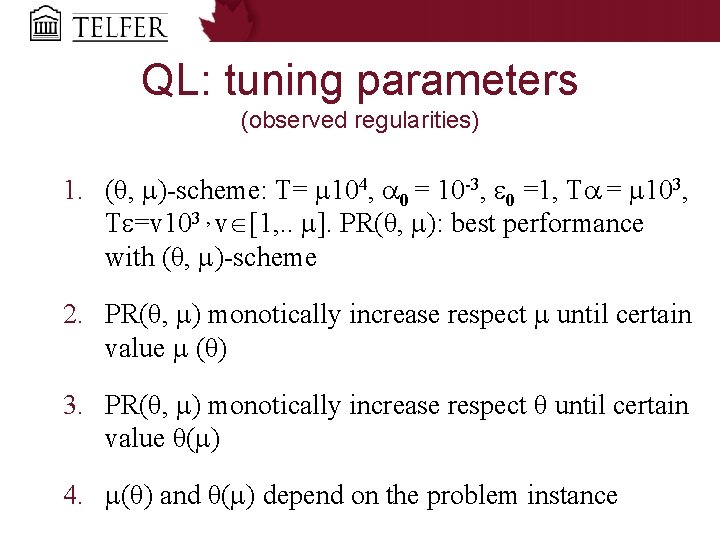

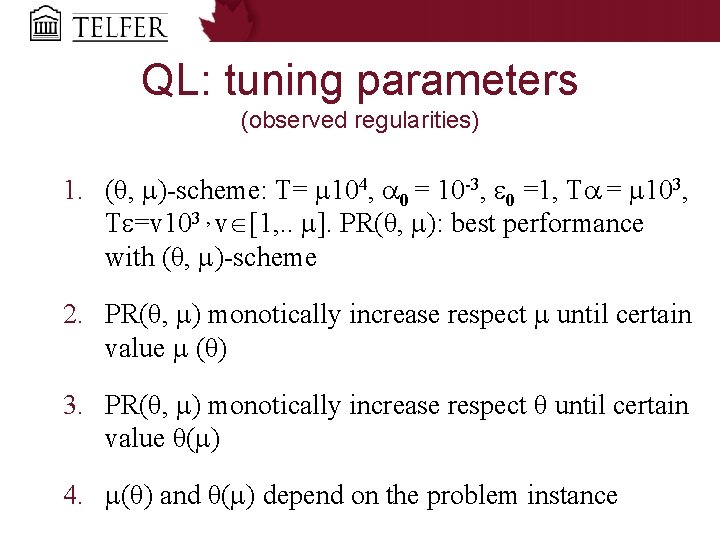

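QL: tuning parameters (observed regularities)
1. Scheme (θ, second parameter): $T = 10^4$, initial learning rate $10^{-3}$, initial exploration rate 1, $T = 10^3$, $T = v \cdot 10^3$, $v \in \{1, 2, \dots\}$. PR(θ, ·) is the best performance obtained with a given scheme.
2. PR increases monotonically with respect to the second parameter up to a certain value that depends on θ.
3. PR increases monotonically with respect to θ up to a certain value that depends on the second parameter.
4. Both of these values depend on the problem instance.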

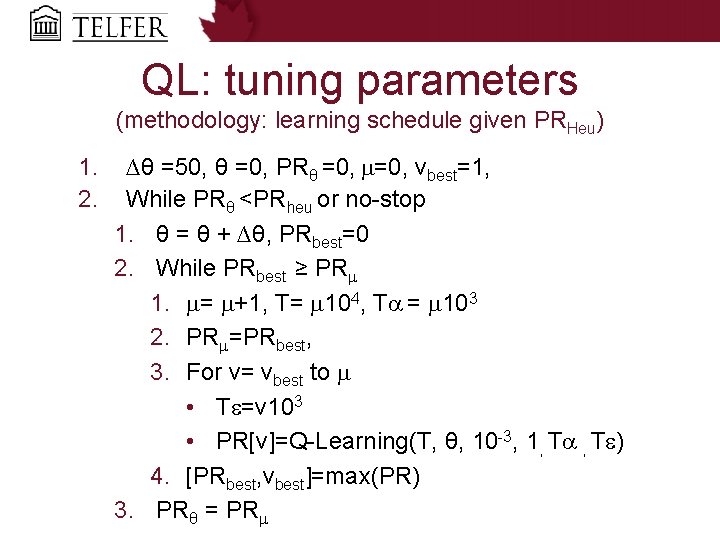

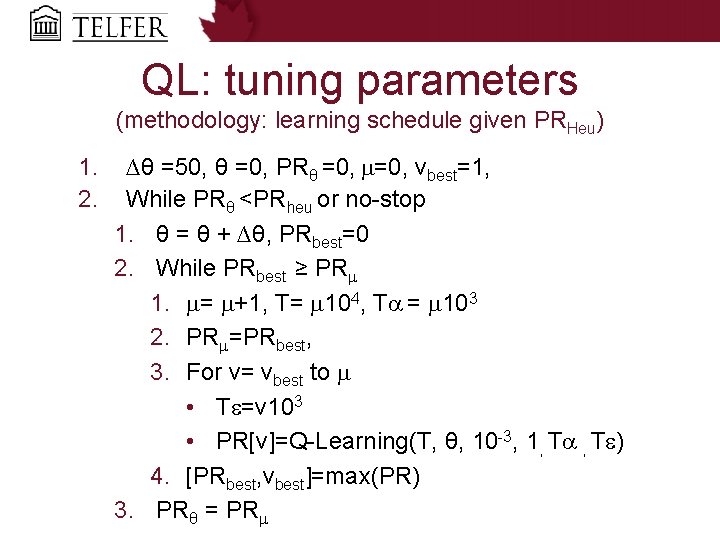

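QL: tuning parameters (methodology: learning schedule given $PR_{heu}$)
1. Set $\Delta\theta = 50$, $\theta = 0$, $PR_\theta = 0$, $v_{best} = 1$.
2. While $PR_\theta < PR_{heu}$ or no-stop:
   1. $\theta = \theta + \Delta\theta$, $PR_{best} = 0$
   2. While $PR_{best} \ge PR$:
      1. Increase the second scheme parameter by 1; $T = 10^4$, $T = 10^3$
      2. $PR = PR_{best}$
      3. For $v = v_{best}, v_{best} + 1, \dots$:
         - $T = v \cdot 10^3$
         - $PR[v] = \text{Q-Learning}(T, \theta, 10^{-3}, 1, T)$
      4. $[PR_{best}, v_{best}] = \max(PR)$
   3. $PR_\theta = PR$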
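Read as nested loops, the methodology grows θ in steps of Δθ = 50 until performance reaches the heuristic's PR; for each θ it increases the second scheme parameter while the best performance keeps improving, and for each setting it scans the multiplier v starting from the best value found so far. A minimal sketch of that reading, not the author's code: `evaluate` stands in for a full Q-learning run, and the caps `theta_max`, `psi_max` and `v_max` (plus the name `psi` for the unnamed second parameter) are hypothetical additions that guarantee termination.

```python
def tune_schedule(evaluate, pr_heu, d_theta=50, theta_max=1000, psi_max=50, v_max=10):
    """Hypothetical reading of the tuning loop; returns (theta, PR_theta)."""
    theta, pr_theta, v_best = 0, 0.0, 1
    while pr_theta < pr_heu and theta < theta_max:
        theta += d_theta                         # more hidden neurons
        psi, pr_psi, pr_best = 0, 0.0, 0.0
        # Increase psi while the best PR over v does not get worse.
        while pr_best >= pr_psi and psi < psi_max:
            psi += 1
            pr_psi = pr_best                     # best PR of the previous psi
            scores = {v: evaluate(theta, psi, v) for v in range(v_best, v_max + 1)}
            v_best = max(scores, key=scores.get)
            pr_best = scores[v_best]
        pr_theta = max(pr_psi, pr_best)          # best PR seen for this theta
    return theta, pr_theta

def toy_evaluate(theta, psi, v):
    """Illustrative performance surface (not study data): improves with
    theta up to 200 neurons, peaks at psi = 3 and v = 2."""
    return min(theta, 200) / 200 - 0.01 * (psi - 3) ** 2 - 0.001 * (v - 2) ** 2
```

On the toy surface, `tune_schedule(toy_evaluate, pr_heu=0.9)` stops at θ = 200 with PR = 1.0. In practice each `evaluate` call is a full learning run, so the early exits on declining PR are what keep the search affordable.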

Discussion
- For given capacities, solve the SMDP with QL.
- Model other LTC complexities:
  - different facilities and room accommodations,
  - client choice, and
  - level of care.

Thank you for your attention. Questions?

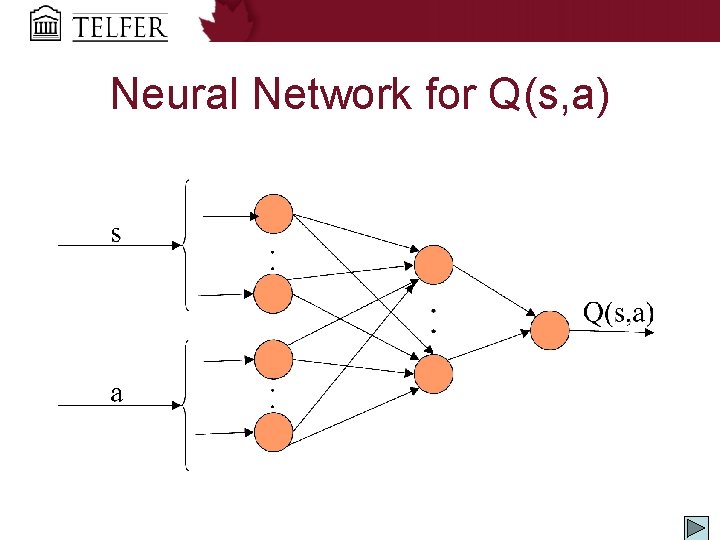

Neural Network for Q(s, a)
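The slide title only says that Q(s, a) is represented by a neural network, with θ hidden neurons according to the parameter list. A minimal pure-Python sketch of one possible design, assuming a single tanh hidden layer, an input made of state features followed by a one-hot encoding of the action, and a plain gradient step on the squared error to a Q-learning target; the class name, layout and learning rate are illustrative.

```python
import math
import random

class QNetwork:
    """One-hidden-layer network approximating Q(s, a).

    Input: state features followed by a one-hot action encoding (an assumption).
    Hidden layer: n_hidden tanh units (theta on the parameter slide).
    Output: a single linear unit, the estimate of Q(s, a).
    """

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def _hidden(self, x):
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.w1, self.b1)]

    def predict(self, x):
        h = self._hidden(x)
        return sum(w * hj for w, hj in zip(self.w2, h)) + self.b2

    def update(self, x, target, lr):
        """One gradient step on 0.5 * (target - Q)^2, e.g. a Q-learning target."""
        h = self._hidden(x)
        err = target - (sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        for j, hj in enumerate(h):
            # Backpropagate through tanh using the output weight before it changes.
            g = err * self.w2[j] * (1.0 - hj * hj)
            self.w2[j] += lr * err * hj
            self.b1[j] += lr * g
            self.w1[j] = [w + lr * g * xi for w, xi in zip(self.w1[j], x)]
        self.b2 += lr * err
```

In a Q-learning loop the target passed to `update` would be $r + \gamma \max_{a'} Q(s', a')$, with each $Q(s', a')$ obtained from `predict` on the next state's features and the one-hot code of $a'$.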