Machine Learning CPSC 315 Programming Studio Spring 2009

- Slides: 14

Machine Learning CPSC 315 – Programming Studio Spring 2009 Project 2, Lecture 5

Forms of Learning l Supervised l l Unsupervised l l Learns from examples which provide desired outputs for given inputs Learns patterns in input data when no specific output values are given Reinforcement l Learns by an indication of correctness at end of some reasoning

Supervised Learning l Must have training data including l l l Inputs (features) to be considered in decision Outputs (correct decisions) for those inputs Inductive reasoning l Given a collection of examples of function f, return a function h that approximates f l l l Difficulty: many functions h may be possible Hope to pick function h that generalizes well Tradeoff between the complexity of the hypothesis and the degree of fit to the data l Consider data modeling

Evaluating Supervised Learning Algorithms l l Collect a large set of examples (input/output pairs) Divide into two disjoint sets l l l Training data Testing data Apply learning algorithm to training data, generating a hypothesis h Measure % of examples in the testing data that are successfully classified by h (or amount of error for continuously valued outputs) Repeat above steps for different sizes of training sets and different randomly selected training sets

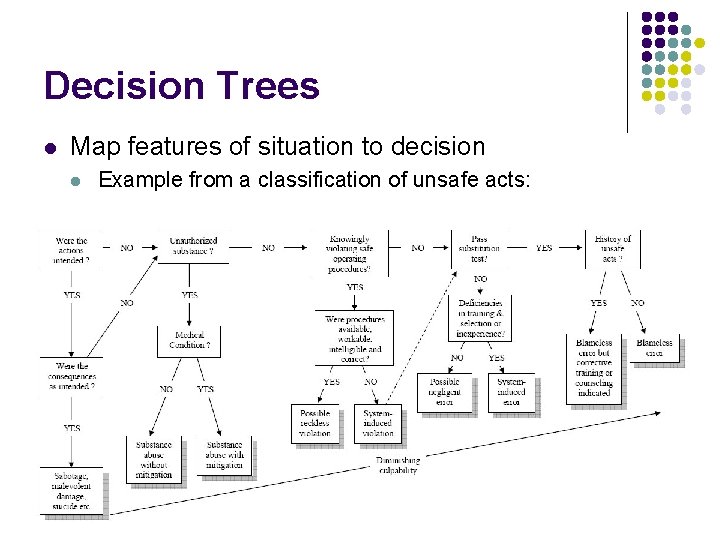

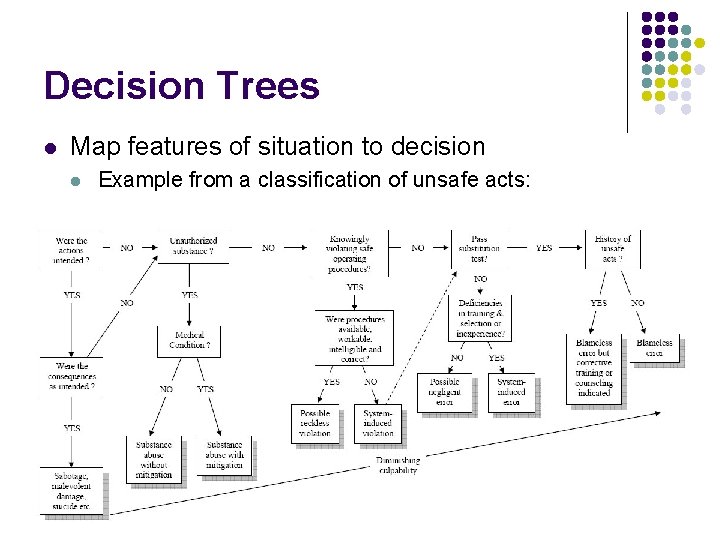

Decision Trees l Map features of situation to decision l Example from a classification of unsafe acts:

Decision Trees l Relation to rule-based reasoning l l l Features of element used to classify element Features of situation used to select action Used as the basis for many “how to” books l How to identify type of snake? l l How to fix an automobile? l l Observable features of snake Features related to problem and state of automobile If features are understandable, the decision tree can be used to explain decision

Learning Decision Trees l Types of Decision Trees l l l Learning a discrete-valued function is classification learning Learning a continuous-valued function is regression Assumption: use of Ockham’s razor will result in more general function l l Want the smallest decision tree, but that is not tractable Will be satisfied with smallish tree

Algorithm for Decision Tree Learning l Basic idea l l l Recursively select feature that splits data (most) unevenly No need to use all features Heuristic approach l Compare features for their ability to meaningfully split data l l Feature-value = greatest difference in average output value(s) * size of smaller subset Avoids splitting out individuals too early

Unsupervised Learning l Used to characterize/explain the key features of a set of data l l l Techniques l l No notion of desired output Example: identifying fast-food vs. fine-dining restaurants when classes are not known ahead of time Clustering (k means, HAC) Self-Organizing Maps Gaussian Mixture Models More on this topic in Project 3

Reinforcement Learning l l Many large problems do not have desired outputs that can be used as training data Process l l l Agent (system) performs a set of actions Agent occasionally receives a reward to indicate something went right or penalty to indicate something went wrong Agent has to learn relationship between the model of the situation, the chosen actions, and the rewards/penalties

Analogy to Animal Training l l l We cannot tell our pets what is right and wrong in (preconditions, action) pairs Instead we reward good behavior (giving treats) and penalize bad behavior (spraying water or loud noise) Pet has to learn when and where what is appropriate l l Can result in incorrect interpretations (go in corner vs. go outside) Difficulty: what of the prior/recent actions caused the positive/negative outcome l Clicker training for animals is meant to help this

Reinforcement Learning in Games l Simplest reinforcements l l l Winning or losing Requires lots of games/time to learn Other potential reinforcements l Opponent’s action selection l l Did they minimize your goodness value Modify goodness function to better match their moves Potential to learn an individual’s values/strategy Predicted goodness value vs. observed goodness value l l l This can be used in small (a few moves) or large (a game) time scales Similar to person reflecting on when things went wrong Need to be careful in implementation or else goodness function will return a constant (thus being totally consistent)

Modifying a Goodness Function l l Consider the game of chess Presume goodness function has three linear components l l l Board. Control l the difference between the number of board positions that Player 1 and Player 2 can get a piece to in one move Threatened l the difference between the number of opponents pieces threatened (can be taken in one move) between Player 1 and Player 2 Pieces l the difference in the sum of the values of pieces left for Player 1 and Player 2 where Queen = 10, Rook = 6, Bishop = 3, Knight = 3, Pawn = 1

Modifying a Goodness Function l l G(s) = a*Board. Control + b*Pieces + c*Threatened Modify coefficients to learn appropriate weighting of terms l l Quantity of overall modification should relate to difference between predicted goodness and observed goodness Direction of modification to each linear component should be related to whether they are consistent with or disagree with outcome Could modify coefficients using fixed values (e. g. +/-. 1) or with values a function of their effect on overall G for the state being considered In theory, such a computer player could recognize that Board. Control is more important early in a game, Pieces is more important mid-game, and Threatened is more important for the end game.