Implementing Processes and Threads CS 550 Operating Systems

- Slides: 16


pthread_create • See last class's notes and check the man page. • Recall: there are four parameters that you pass in. • Each has a different meaning – A pointer to the thread identifier (a pthread_t declared in the function where the thread is created) – The thread attributes (or NULL for the defaults) – The function the thread runs (its name is passed as a function pointer), and – The parameter passed to that function.

pthread_exit • The calling thread exits, and the value passed into this function becomes its return value, which another thread can collect with pthread_join.

pthread_join • Makes the calling thread wait until the specified thread completes. • This should be called for every thread that is created aside from the main thread.

Thread Memory • Remember that threads share the same process memory. • Variables declared outside the scope of the thread (outside the thread function's curly brackets) are accessible to, and shared between, all threads. • Variables declared inside the scope of the thread (within the curly brackets of the function representing the thread) are local to the thread.

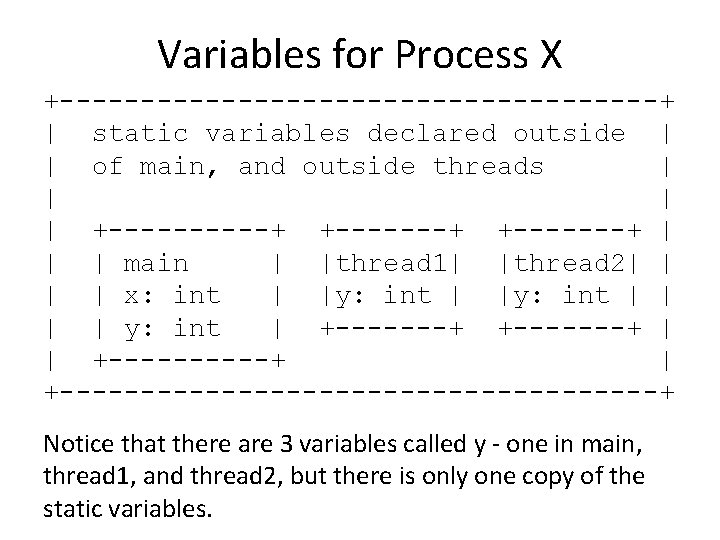

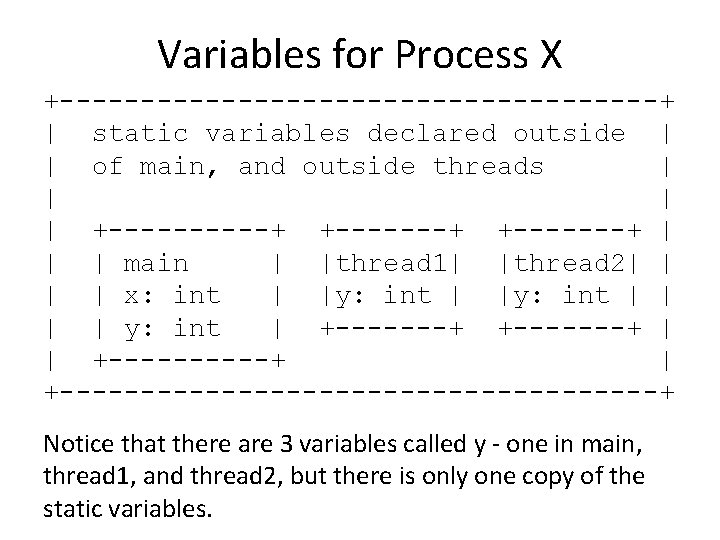

Variables for Process X • The figure shows one outer box for process X holding the static variables declared outside of main and outside the threads. • Inside it are three smaller boxes, one each for main, thread 1, and thread 2, holding their local variables (labeled x: int and y: int in the figure). • Notice that there are 3 variables called y - one each in main, thread 1, and thread 2 - but there is only one copy of the static variables.

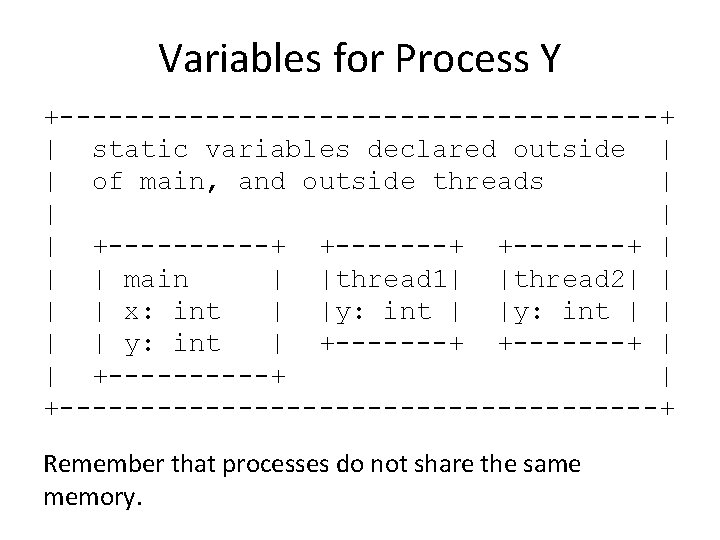

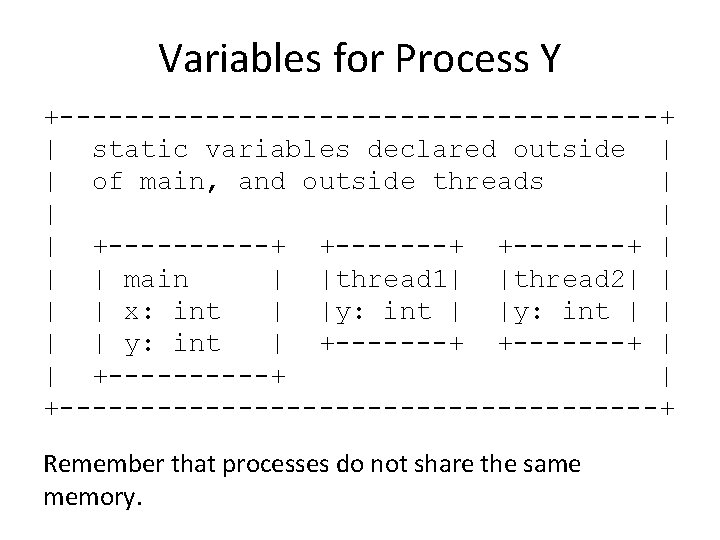

Variables for Process Y • The figure shows one outer box for process Y holding the static variables declared outside of main and outside the threads. • Inside it are three smaller boxes, one each for main, thread 1, and thread 2, holding their local variables (labeled x: int and y: int in the figure). • Remember that processes do not share the same memory.

Processes X and Y • The static variables for process X and process Y are different even if they have the same names. • Processes do not share static or local variables, even if they were created using the same set of code.

Interprocess Communication • As we mentioned before, interprocess communication can occur by many different means: shared memory, pipes, sockets, etc. • These tools allow for data transfer between running programs (processes), but they are often not portable from one system to another. • Shared memory, for example, acts almost like a shared file. • Processes can read data from it and write data to it.

Interprocess Communication • Pipes allow for communication between processes on the same machine. A single pipe typically carries data in one direction, so two-way communication usually uses a pair of pipes. • Sockets may allow for multidirectional communication between processes on the same or networked machines. • We will investigate these in lab assignments, but to reduce some of the complexity, we will look at MPI in depth. • MPI allows us to abstract away from reliance upon specific systems for shared memory and socket toolkits.

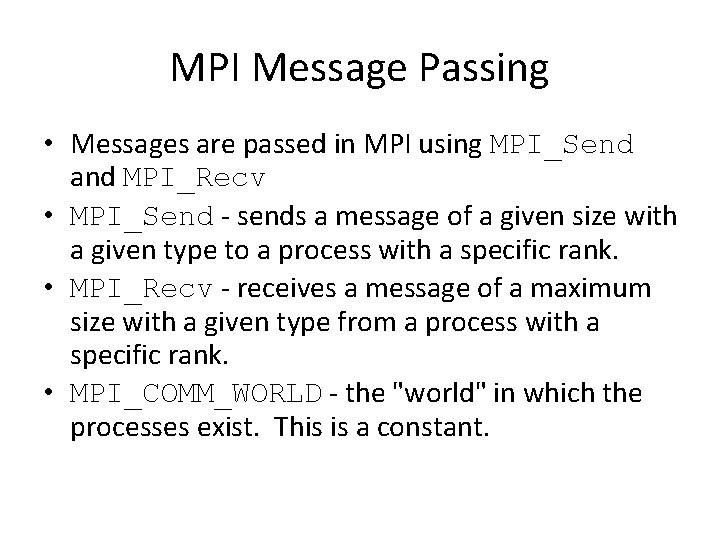

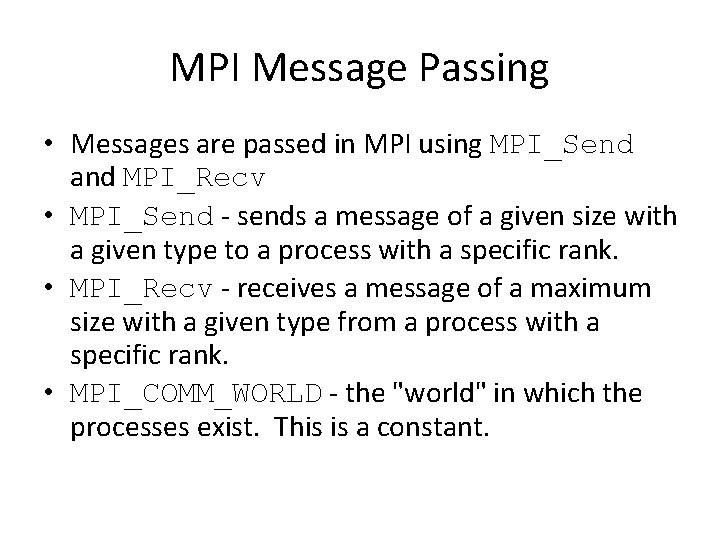

MPI Message Passing • MPI, the Message Passing Interface, allows for communication (IPC) between running processes, even those using the same source code.

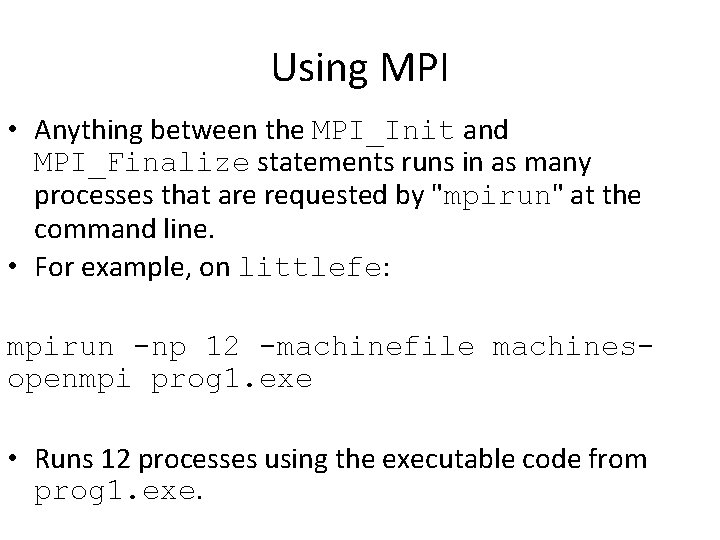

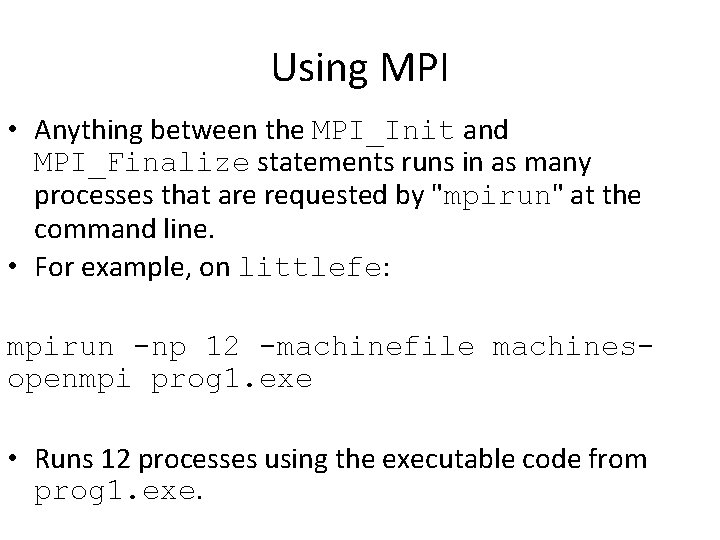

Using MPI • Processes use MPI by using #include "mpi.h" or <mpi.h> depending upon the system and MPI stack. • MPI is started in a program using: MPI_Init(&argc, &argv); • and ended with: MPI_Finalize(); • These two calls function almost like curly brackets that open and close the MPI portion of the program.

Using MPI • Anything between the MPI_Init and MPI_Finalize statements runs in as many processes as are requested by "mpirun" at the command line. • For example, on littlefe: mpirun -np 12 -machinefile machinesopenmpi prog1.exe • Runs 12 processes using the executable code from prog1.exe.

Identifying Processes in MPI • The MPI_Comm_rank and MPI_Comm_size functions get the rank (process identifier) and number of processes (the value 12 after -np, on the previous slide). • These were previously reviewed in class.

MPI Message Passing • Messages are passed in MPI using MPI_Send and MPI_Recv. • MPI_Send - sends a message of a given size with a given type to a process with a specific rank. • MPI_Recv - receives a message of a maximum size with a given type from a process with a specific rank. • MPI_COMM_WORLD - the "world" in which the processes exist. This is a predefined constant: the communicator that contains every process started by mpirun.

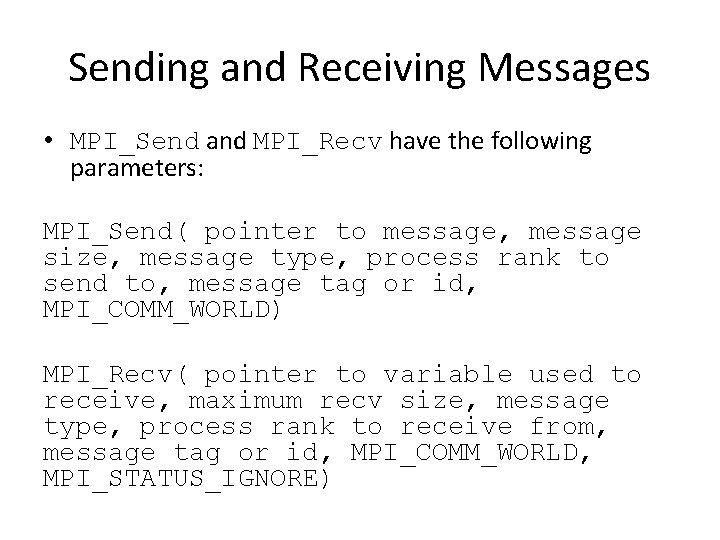

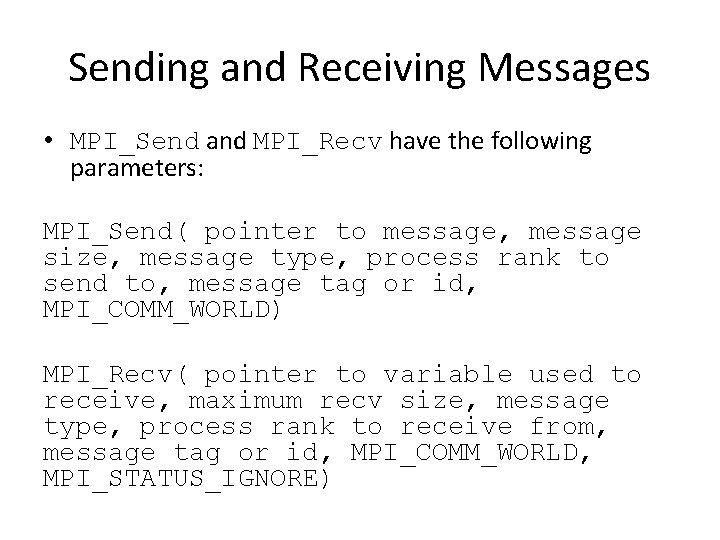

Sending and Receiving Messages • MPI_Send and MPI_Recv have the following parameters: • MPI_Send( pointer to message, message size (number of elements, not bytes), message type, process rank to send to, message tag or id, MPI_COMM_WORLD ) • MPI_Recv( pointer to variable used to receive, maximum recv size (number of elements), message type, process rank to receive from, message tag or id, MPI_COMM_WORLD, MPI_STATUS_IGNORE )