Efficiently Sharing Common Data
HTCondor Week 2015
Zach Miller (zmiller@cs.wisc.edu)
Center for High Throughput Computing
Department of Computer Sciences
University of Wisconsin-Madison

“Problems” with HTCondor
› Input files are never reused
  › From one job to the next
  › Across multiple slots on the same machine
› Input files are transferred serially from the machine where the job was submitted
This results in the submit machine often transferring multiple copies of the same file simultaneously (bad!), sometimes to the same machine (even worse!).

HTCache
› Enter the HTCache!
› Runs on the execute machine
› Runs under the condor_master just like any other daemon
› One daemon serves all users of that machine
› Runs with the same privilege as the startd

HTCache
› Cache is on disk
› Persists across restarts
› Configurable size
› Configurable cache replacement policy

HTCache
› The cache is shared
› All slots use the same local cache
› Even if the user is different (data is data!)
› Thus, the HTCache needs the ability to write files into a job’s sandbox as the user that will run the job

Preparing Job Sandbox
› Instead of fetching files from the shadow, the job instructs the HTCache to put specific files into the sandbox
› If the file is in the cache, the HTCache COPIES the file into the sandbox
› Each slot gets its own copy, in case the job decides to modify it (as opposed to hard- or soft-linking the file into the sandbox)
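
The cache-hit path above can be sketched as a plain byte-for-byte copy. This is only an illustration, not the HTCache source: the function name `copy_into_sandbox` is hypothetical, and the sketch leaves out the step of writing the file as the user that will run the job.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical helper (not from the HTCache code): copy a cached file
 * into a job sandbox so the job gets a private, writable copy instead
 * of a hard or soft link. Returns 0 on success, -1 on any I/O error.
 * File ownership is NOT handled here. */
int copy_into_sandbox(const char *cached_path, const char *sandbox_path)
{
    assert(cached_path != NULL && sandbox_path != NULL);

    FILE *in = fopen(cached_path, "rb");
    if (in == NULL)
        return -1;

    FILE *out = fopen(sandbox_path, "wb");
    if (out == NULL) {
        fclose(in);
        return -1;
    }

    char buf[65536];
    size_t n;
    int rc = 0;

    /* Stream the file through a fixed-size buffer. */
    while ((n = fread(buf, 1, sizeof buf, in)) > 0) {
        if (fwrite(buf, 1, n, out) != n) {
            rc = -1;
            break;
        }
    }
    if (ferror(in))
        rc = -1;
    if (fclose(out) != 0)   /* a failed close can mean a lost write */
        rc = -1;
    fclose(in);
    return rc;
}
```

Because every slot ends up with its own copy, a job that modifies its input cannot corrupt the cached file or another slot's sandbox. The price is extra local disk I/O and space per slot.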

Preparing Job Sandbox
› If the file is not in the cache, the HTCache fetches the file directly into the sandbox and then possibly adds it to the cache
› Wait… possibly?

Cache Policy
› Yes, possibly.
› Obvious case: the file is larger than the cache
› Larger question: which files are the best to keep?
› Cache policy is one of those things where one solution rarely works best in all cases

Cache Policy
› There are 10 problems in Computer Science:
  › Caching
  › Levels of Indirection
  › Off-by-one errors
› Allow flexible caching by adding a level of indirection. Don’t use size, time, etc., but rather the “value” of a file.

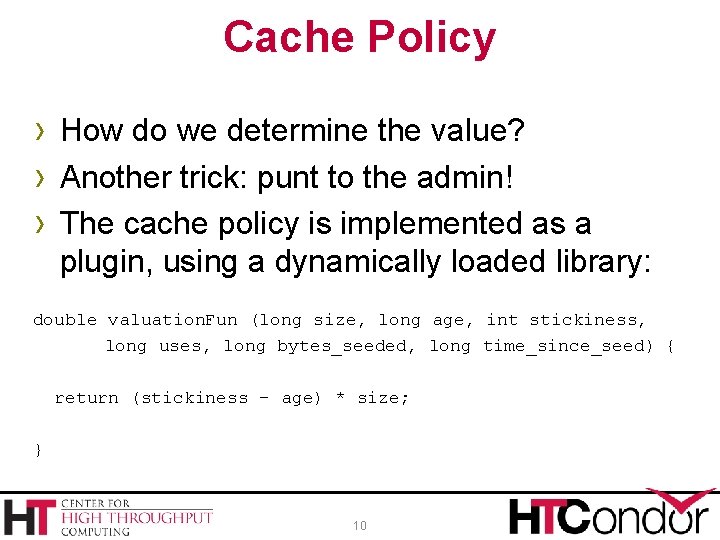

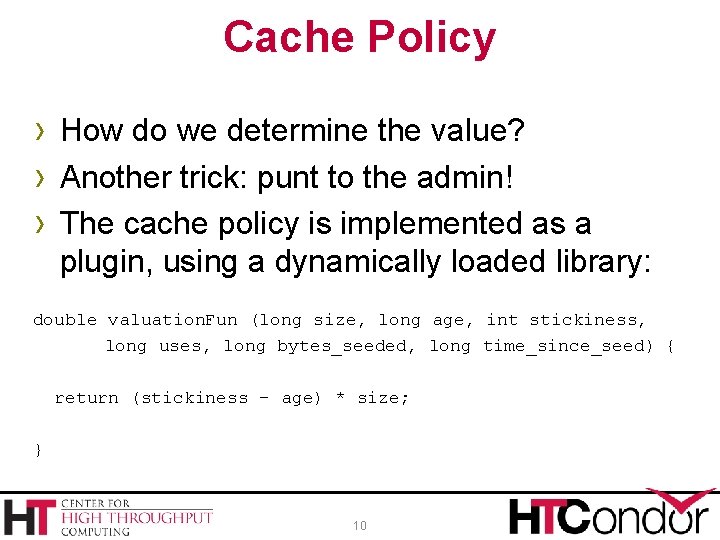

Cache Policy
› How do we determine the value?
› Another trick: punt to the admin!
› The cache policy is implemented as a plugin, using a dynamically loaded library:

    double valuationFun(long size, long age, int stickiness, long uses,
                        long bytes_seeded, long time_since_seed)
    {
        return (stickiness - age) * size;
    }

Cache Policy
› The plugin determines the “value” of a file using the input parameters:
  › File size
  › Time file entered cache
  › Time last accessed
  › Number of hits
  › “Stickiness” (This is a hint provided by the submit node… more on that later)
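
To show how an admin might combine these inputs differently, here is one possible alternative policy. It reuses the `valuationFun` signature from the previous slide. The weighting is invented for illustration, and the units of `age` are an assumption, since the slides do not state them.

```c
#include <assert.h>

/* Hypothetical policy, not one shipped with HTCache: favor files that
 * many queued jobs still need (stickiness) or that have been hit often
 * (uses), scaled by size, and discounted as the file gets older.
 * The units of age are assumed; the slides do not specify them. */
double valuationFun(long size, long age, int stickiness, long uses,
                    long bytes_seeded, long time_since_seed)
{
    assert(size >= 0 && age >= 0);

    /* This sketch ignores the seeding statistics. */
    (void)bytes_seeded;
    (void)time_since_seed;

    double demand = (double)stickiness + (double)uses;
    return demand * (double)size / (1.0 + (double)age);
}
```

Dividing by `1.0 + age` keeps the value non-negative, whereas the subtraction in the slide's example can go negative for old files. Which behavior is preferable depends on the workload.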

Cache Policy
› When deciding whether or not to cache a file, the HTCache considers all files currently in the cache, plus the file under consideration
› Computes the “value” of each file
› Finds the “maximum value cache” that fits in the allocated size
› May or may NOT include the file just fetched
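
Finding the “maximum value cache” is a knapsack-style selection. The slides do not say which algorithm the HTCache uses, so the sketch below simply tries every subset, which is only practical for a small number of files. The names `struct candidate` and `best_cache` are hypothetical.

```c
#include <assert.h>

/* One candidate: a file already in the cache, or the file just fetched. */
struct candidate {
    long   size;   /* bytes */
    double value;  /* result of the valuation plugin */
};

/* Exhaustive search over all subsets of n candidates (n <= 20).
 * Returns a bitmask of the subset with the highest total value whose
 * total size fits in capacity; bit i set means candidate i is kept.
 * Subsets with a total value of zero or less never beat the empty cache. */
unsigned long best_cache(const struct candidate *c, int n, long capacity)
{
    assert(n >= 0 && n <= 20);

    unsigned long best_mask = 0;
    double best_value = 0.0;

    for (unsigned long mask = 0; mask < (1UL << n); mask++) {
        long size = 0;
        double value = 0.0;
        for (int i = 0; i < n; i++) {
            if (mask & (1UL << i)) {
                size += c[i].size;
                value += c[i].value;
            }
        }
        if (size <= capacity && value > best_value) {
            best_value = value;
            best_mask = mask;
        }
    }
    return best_mask;
}
```

If the newly fetched file is passed in as the last candidate and its bit is clear in the result, the file stays out of the cache. That is the "may or may NOT" case.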

Submit Node HTCache
› There is a submit-side component as well, although it has a slightly different role
  › Does not have a dedicated disk cache
  › Instead, serves all files requested by jobs
  › Periodically scans the queue, counts the number of jobs that use each input file, and broadcasts this “stickiness” value to all HTCache daemons
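
The queue scan amounts to a per-file tally. Below is a minimal sketch that assumes each queued job names exactly one input file. The real queue scan and the broadcast are not shown in the slides, and `struct stickiness` and `count_stickiness` are hypothetical names.

```c
#include <assert.h>
#include <string.h>

/* Tally for one input file. */
struct stickiness {
    const char *path;  /* input file name as listed by the job */
    int         jobs;  /* number of queued jobs that use it */
};

/* Count how many queued jobs use each input file. job_inputs[i] is the
 * input file of job i (one file per job, to keep the sketch small).
 * Fills out[] with up to max_out distinct files and returns how many
 * were recorded; files beyond max_out are silently skipped. */
int count_stickiness(const char *const *job_inputs, int njobs,
                     struct stickiness *out, int max_out)
{
    assert(njobs >= 0 && max_out >= 0);

    int nfiles = 0;
    for (int i = 0; i < njobs; i++) {
        int j;
        for (j = 0; j < nfiles; j++) {
            if (strcmp(out[j].path, job_inputs[i]) == 0) {
                out[j].jobs++;
                break;
            }
        }
        if (j == nfiles && nfiles < max_out) {
            out[nfiles].path = job_inputs[i];
            out[nfiles].jobs = 1;
            nfiles++;
        }
    }
    return nfiles;
}
```

The linear search is fine for a sketch. A real scan over a large queue would more likely use a hash table keyed on the file path.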

Example
› Suppose I have a cluster of 25 eight-core machines
› I have a 1 GB input file common to all my jobs (a common scenario for, say, BLAST)
› I submit 1000 jobs
› Old way: Each time a job starts up it transfers the 1 GB file to the sandbox (1 TB)

Example
› New way: Each of the 25 machines gets the file once, shares it among all 8 slots, and it persists across jobs
› Naïve calculation: 25 GB transfer (as opposed to 1 TB). Of course, this ignores competition for the cache.
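
The naïve calculation can be written out as two one-line functions. The function names are made up for this sketch, and the numbers are the ones from the example: 1000 jobs, 25 machines, a 1 GB file.

```c
#include <assert.h>

/* Old way: every job pulls its own copy from the submit machine. */
long per_job_transfer_gb(long jobs, long file_gb)
{
    assert(jobs >= 0 && file_gb >= 0);
    return jobs * file_gb;
}

/* New way (naive): each machine fetches the file once and all of its
 * slots share the cached copy. Ignores competition for the cache. */
long per_machine_transfer_gb(long machines, long file_gb)
{
    assert(machines >= 0 && file_gb >= 0);
    return machines * file_gb;
}
```

With the example's numbers, `per_job_transfer_gb(1000, 1)` gives 1000 GB (about 1 TB) and `per_machine_transfer_gb(25, 1)` gives 25 GB, a 40-fold reduction in data sent from the submit machine.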

Example
› This is where “stickiness” helps
› If I submit a separate batch of 50 jobs using a different 1 GB input, the HTCache can look at the stickiness and decide not to evict the first 1 GB file, since 1000 jobs are scheduled to use it as opposed to 50
› It’s possible to write a cache policy tailored to your cluster’s particular workload
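
To make the stickiness comparison concrete, the sketch below applies the example formula from the Cache Policy slide, `(stickiness - age) * size`, to a list of files and picks the lowest-valued one as the first eviction candidate. `struct cached_file`, `slide_value` and `eviction_candidate` are hypothetical names, and the units of `age` are assumed.

```c
#include <assert.h>

struct cached_file {
    long size;        /* bytes */
    long age;         /* time in cache; units assumed, not given in the slides */
    int  stickiness;  /* queued jobs that list this file as input */
};

/* The example policy from the Cache Policy slide. */
static double slide_value(const struct cached_file *f)
{
    return (double)(f->stickiness - f->age) * (double)f->size;
}

/* Index of the lowest-valued file, i.e. the first eviction candidate.
 * Ties go to the earlier entry. */
int eviction_candidate(const struct cached_file *files, int n)
{
    assert(n > 0);

    int worst = 0;
    for (int i = 1; i < n; i++) {
        if (slide_value(&files[i]) < slide_value(&files[worst]))
            worst = i;
    }
    return worst;
}
```

For two 1 GB files of equal age, one with stickiness 1000 and one with stickiness 50, the second file has the lower value under this policy, so the first batch's input is not the one chosen for eviction.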

Success!
› This already has huge advantages.
› Even if the cache does nothing useful and makes all the wrong choices, it can do NO WORSE than the existing method of transferring the file every time.
› A huge advantage: Multiple slots share the same cache! (And this advantage grows as the number of cores grows)
› Massively reduces network load on the Schedd

HTCache Results

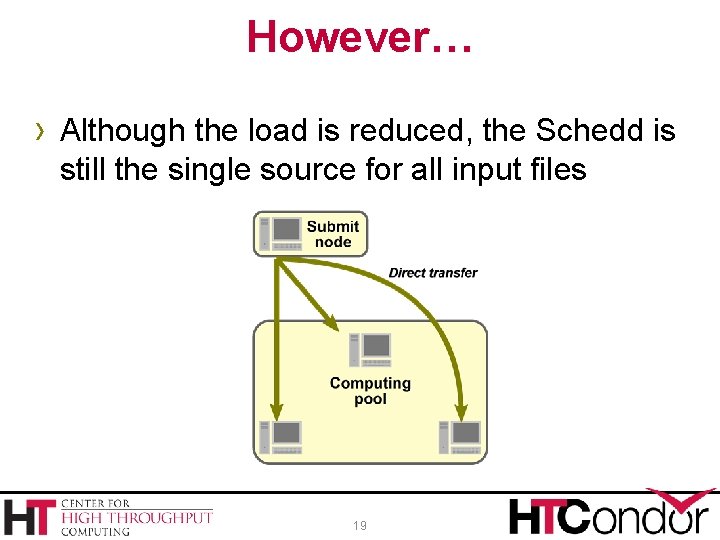

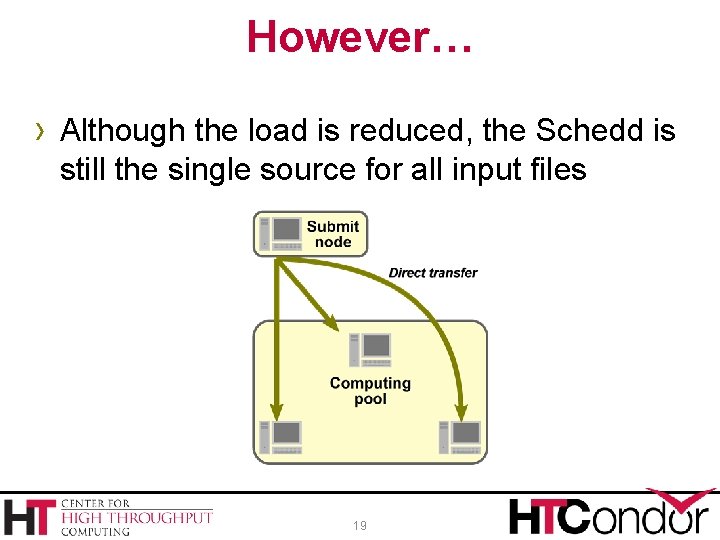

However…
› Although the load is reduced, the Schedd is still the single source for all input files

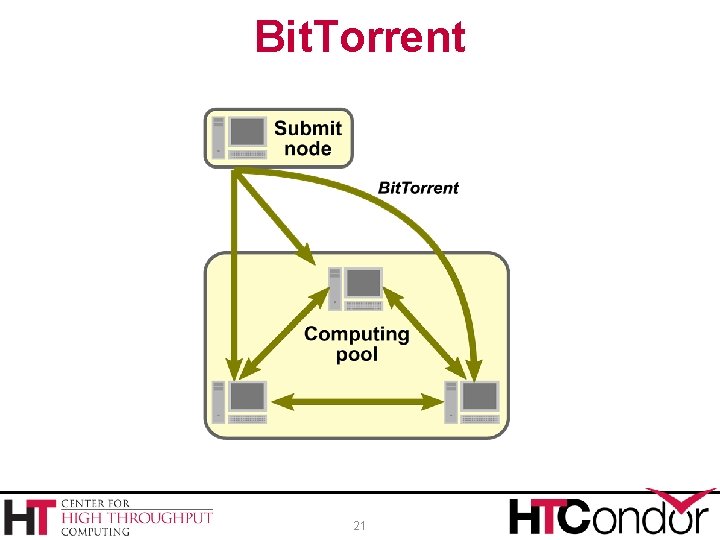

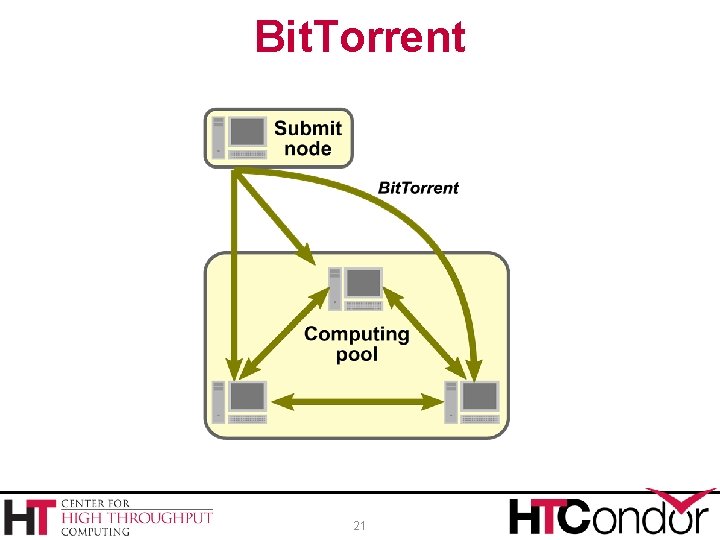

However…
› What if there was a way to get the files from somewhere else?
› Maybe even bits of the files from multiple different sources?
› Peer-to-peer?
› We already have an HTCache deployed on all the execute nodes…

BitTorrent

Submit Node w/ BitTorrent
› The HTCache running on the submit node acts as a SeedServer
› It always has all pieces of files that may be read. If you recall, it is not managing a cache, only serving the already existing files in place.
› When a job is submitted, input files are then automatically added to the seed server

Execute Node w/ BitTorrent
› The HTCache uses BitTorrent to retrieve the file directly into the sandbox first.
› Optionally adds the file to its own cache
› Thus, BitTorrent is used to transfer files even if they won't end up in the cache

Putting It All Together

Project Status
› “GradStudent-ware”
  › Was done as a class project
  › Doesn’t yet meet the exceedingly high standards for committing into our main code repository.
› BitTorrent traffic is completely independent from HTCondor. As such, it doesn’t work with the shared_port daemon

Conclusion
› Obvious statement of the year: Caching is good!
› Runner-up: Using peer-to-peer file transfer can be faster than one-to-many file transfer!
› However, the nature of scientific workloads and multi-core machines creates an environment where these are especially advantageous

Conclusion
› Thank you!
› Questions? Comments?
› Ask now, talk to me at lunch, or email me at zmiller@cs.wisc.edu