CS 621 Artificial Intelligence, Lecture 22

- Slides: 16

CS 621 Artificial Intelligence, Lecture 22 (07/10/05): Perceptron Training & Convergence
Prof. Pushpak Bhattacharyya, IIT Bombay

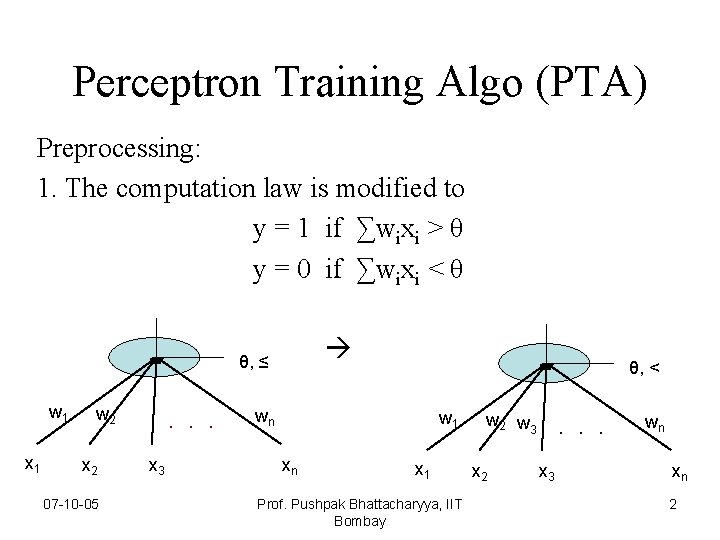

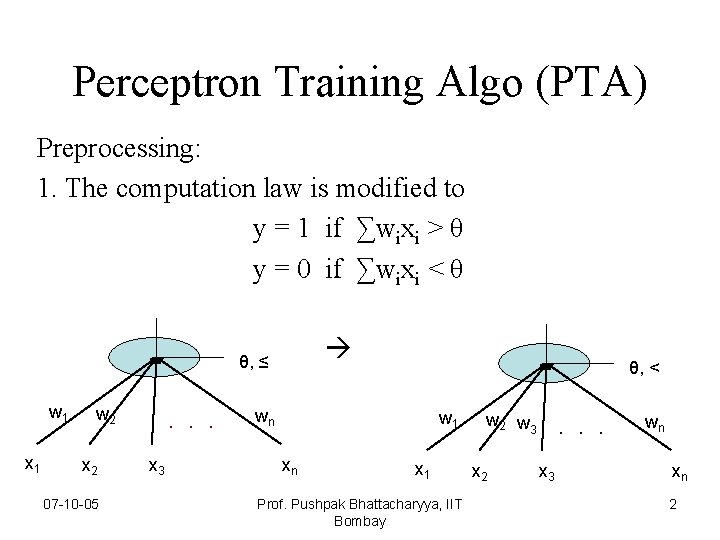

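Perceptron Training Algo (PTA)

Preprocessing:

1. The computation law is modified to $y = 1$ if $\sum_i w_i x_i > \theta$ and $y = 0$ if $\sum_i w_i x_i < \theta$. Both inequalities are strict, so an input with $\sum_i w_i x_i = \theta$ gets neither output; the training test $w \cdot x > 0$ used in the algorithm treats such an input as misclassified.

[Figure: two perceptrons with inputs $x_1, x_2, x_3, \dots, x_n$ and weights $w_1, w_2, w_3, \dots, w_n$, one labelled "θ, ≤" and the other "θ, <".]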
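A minimal Python sketch of the modified computation law, to make the strict inequalities concrete. The function name `modified_output` is hypothetical, and returning `None` when $\sum_i w_i x_i = \theta$ is our own assumption for the one case the law does not cover.

```python
from typing import Optional, Sequence


def modified_output(w: Sequence[float], x: Sequence[float], theta: float) -> Optional[int]:
    """Hypothetical helper: the modified perceptron law with strict inequalities."""
    s = sum(wi * xi for wi, xi in zip(w, x))
    if s > theta:
        return 1
    if s < theta:
        return 0
    # s == theta: neither rule applies; returning None is our assumption
    return None


or_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]

# OR with w = (1, 1) and theta = 0.5: no input lands on the boundary
print([modified_output((1, 1), x, 0.5) for x in or_inputs])  # [0, 1, 1, 1]

# With theta = 1, the inputs (0, 1) and (1, 0) sit exactly on the boundary
print([modified_output((1, 1), x, 1.0) for x in or_inputs])  # [0, None, None, 1]
```

The second call shows the situation the strict test is designed to reject: with θ = 1, two of the OR inputs receive no output at all.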

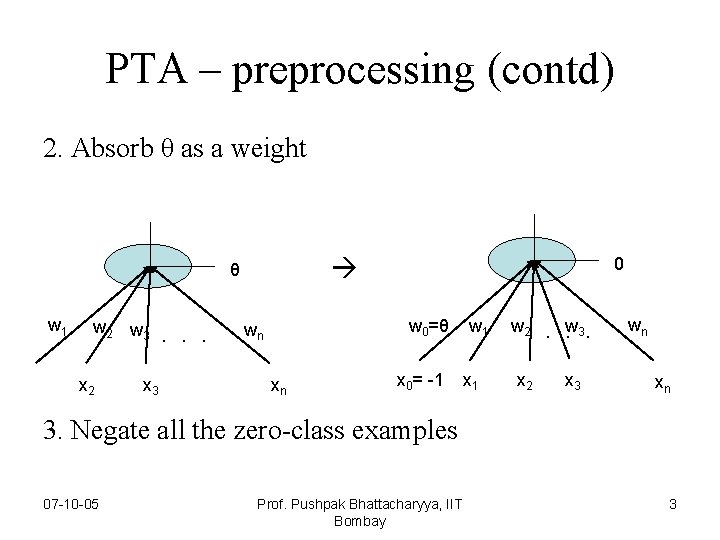

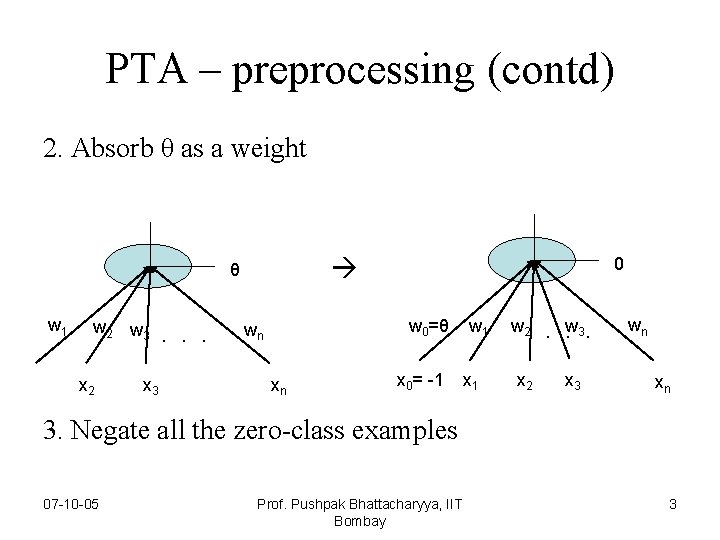

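PTA – preprocessing (contd)

2. Absorb θ as a weight: add an input $x_0 = -1$ with weight $w_0 = \theta$. Then $\sum_{i=1}^{n} w_i x_i > \theta$ becomes $\sum_{i=0}^{n} w_i x_i > 0$, i.e. the threshold is now 0.

[Figure: the perceptron with threshold θ and the equivalent perceptron with threshold 0, extra input $x_0 = -1$ and weight $w_0 = \theta$.]

3. Negate all the zero-class examples. A zero-class input must satisfy $w \cdot x < 0$, which is the same as $w \cdot (-x) > 0$, so after negation every vector has to pass the single test $w \cdot x > 0$.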
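The two preprocessing steps can be sketched in Python as follows. The helper names `augment`, `preprocess` and `dot` are hypothetical; the code prepends $x_0 = -1$ to every input and then negates the augmented zero-class vectors, and the inline check confirms that $w \cdot \text{augment}(x) = \sum_i w_i x_i - \theta$ when $w_0 = \theta$.

```python
from typing import List, Sequence


def augment(x: Sequence[float]) -> List[float]:
    """Hypothetical helper: prepend x0 = -1 so that w0 can play the role of theta."""
    return [-1, *x]


def preprocess(one_class: Sequence[Sequence[float]],
               zero_class: Sequence[Sequence[float]]) -> List[List[float]]:
    """Hypothetical helper: augment every example, then negate the zero-class ones."""
    positives = [augment(x) for x in one_class]
    negated = [[-v for v in augment(x)] for x in zero_class]
    return positives + negated


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


# Absorbing theta: with w = <theta, w1, w2>, w . augment(x) = w1*x1 + w2*x2 - theta
theta, w1, w2 = 0.5, 1.0, 1.0
x = (1, 0)
assert dot([theta, w1, w2], augment(x)) == w1 * x[0] + w2 * x[1] - theta

# The OR example: three 1-class inputs and one 0-class input
print(preprocess([(1, 1), (1, 0), (0, 1)], [(0, 0)]))
# [[-1, 1, 1], [-1, 1, 0], [-1, 0, 1], [1, 0, 0]]
```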

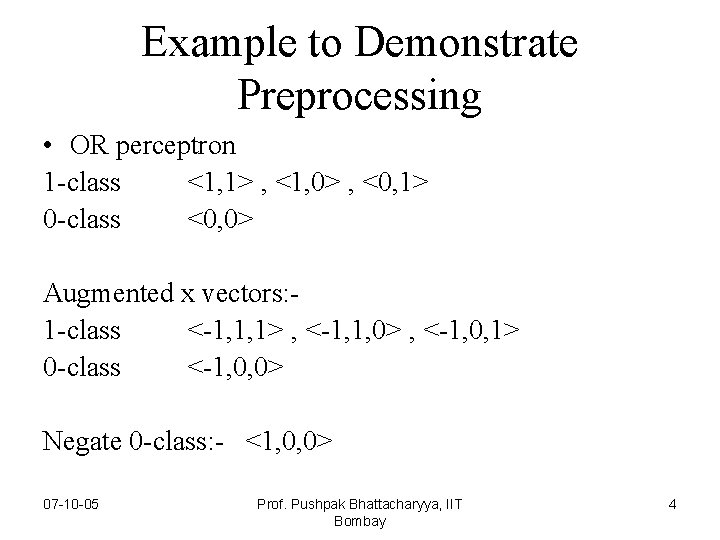

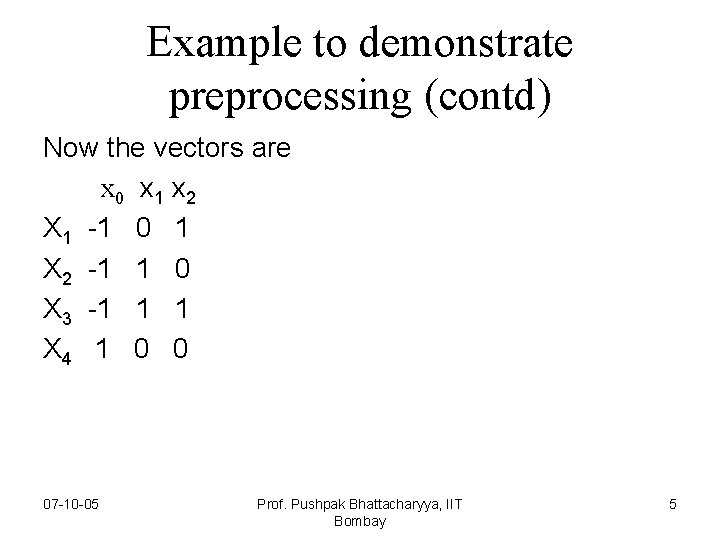

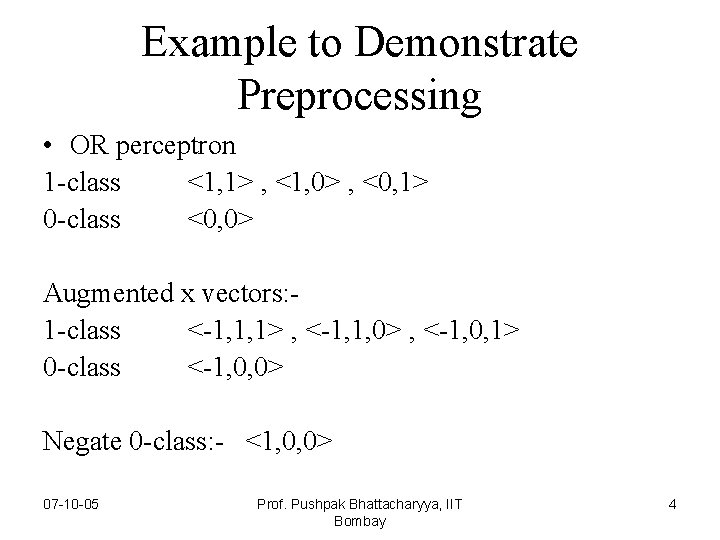

Example to Demonstrate Preprocessing

- OR perceptron
  - 1-class: <1, 1>, <1, 0>, <0, 1>
  - 0-class: <0, 0>
- Augmented x vectors:
  - 1-class: <-1, 1, 1>, <-1, 1, 0>, <-1, 0, 1>
  - 0-class: <-1, 0, 0>
- Negate the 0-class: <1, 0, 0>

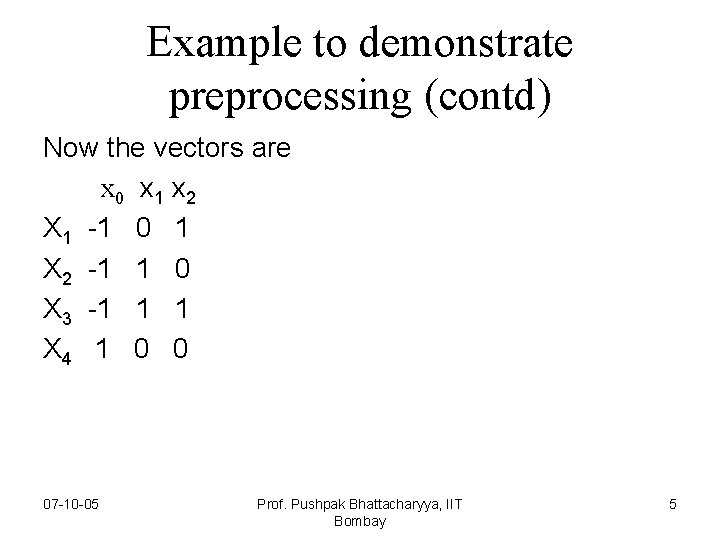

Example to demonstrate preprocessing (contd)

Now the vectors are:

|    | x0 | x1 | x2 |
|----|----|----|----|
| X1 | -1 | 0  | 1  |
| X2 | -1 | 1  | 0  |
| X3 | -1 | 1  | 1  |
| X4 | 1  | 0  | 0  |
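After preprocessing, a weight vector computes OR under the modified law exactly when it passes the single test $w \cdot X_i > 0$ for all four rows of the table. A small Python check, with two weight vectors picked by hand for illustration (our assumption): $w = \langle 0.5, 1, 1 \rangle$, i.e. $\theta = 0.5$, which computes OR, and $w = \langle 1.5, 1, 1 \rangle$, i.e. $\theta = 1.5$, which computes AND instead.

```python
from typing import Dict, List, Sequence

# Preprocessed OR vectors from the table (x0, x1, x2)
X: Dict[str, List[int]] = {
    "X1": [-1, 0, 1],
    "X2": [-1, 1, 0],
    "X3": [-1, 1, 1],
    "X4": [1, 0, 0],
}


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def failing(w: Sequence[float]) -> List[str]:
    """Names of the vectors that do not satisfy w . X > 0."""
    return [name for name, x in X.items() if dot(w, x) <= 0]


# Hand-picked weights (assumption): <theta, w1, w2>
print(failing([0.5, 1, 1]))  # [] : theta = 0.5, w1 = w2 = 1 computes OR
print(failing([1.5, 1, 1]))  # ['X1', 'X2'] : AND-like weights reject <0, 1> and <1, 0>
```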

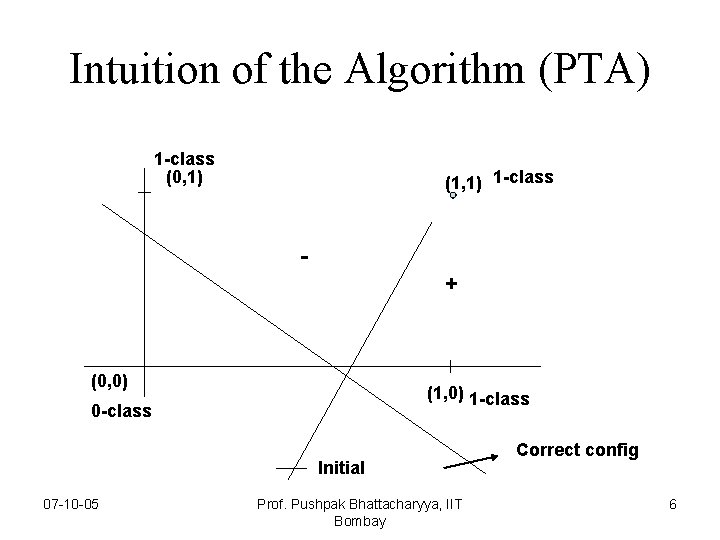

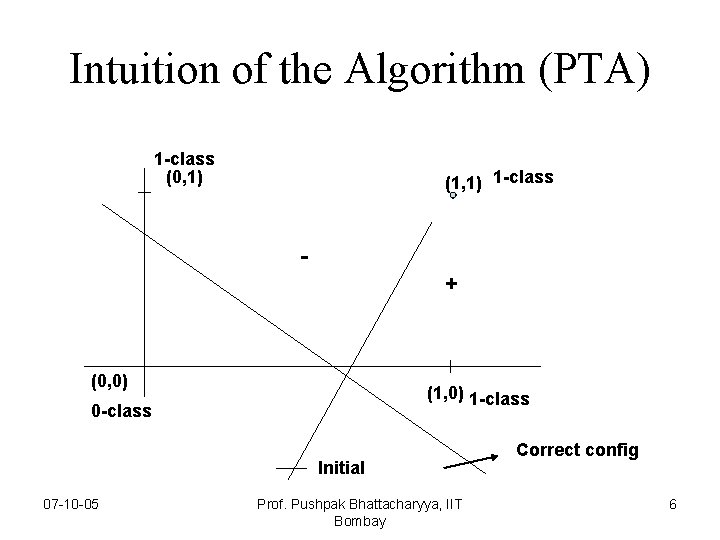

Intuition of the Algorithm (PTA)

[Figure: the OR inputs (0, 0), (1, 0), (0, 1), (1, 1) in the plane, with (0, 0) in the 0-class and the other three in the 1-class; two panels labelled "Initial" and "Correct config".]

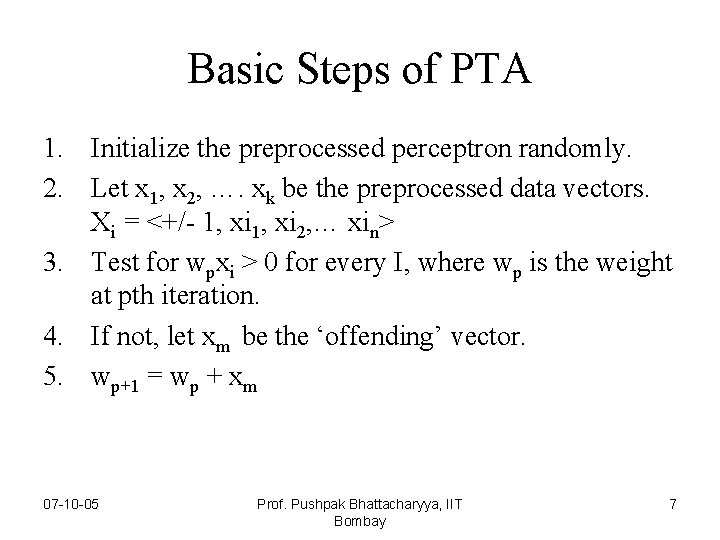

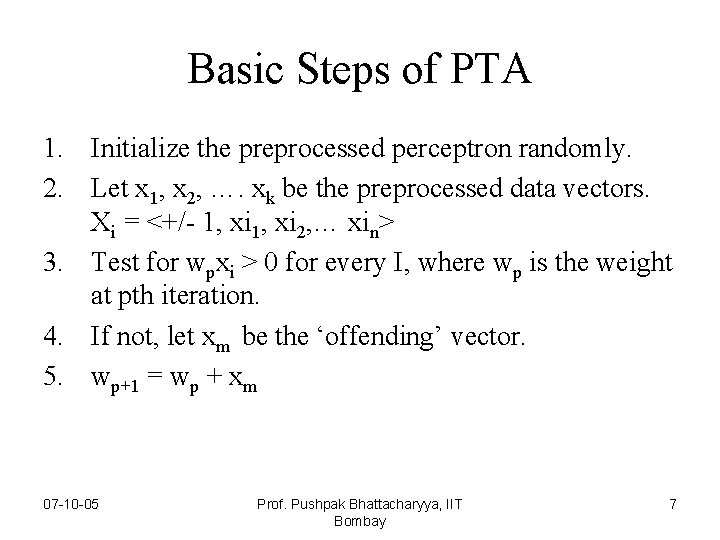

Basic Steps of PTA

1. Initialize the weights of the preprocessed perceptron randomly.
2. Let $x_1, x_2, \dots, x_k$ be the preprocessed data vectors, $x_i = \langle \pm 1, x_{i1}, \dots, x_{in} \rangle$.
3. Test whether $w_p \cdot x_i > 0$ for every $i$, where $w_p$ is the weight vector at the $p$th iteration.
4. If not, let $x_m$ be an 'offending' vector, i.e. one with $w_p \cdot x_m \le 0$.
5. Update $w_{p+1} = w_p + x_m$.

Basic Steps of PTA (contd)

6. Repeat from step 3.
7. If $w_q \cdot x_i > 0$ for all $i$, exit with success: $w_q$ is the required weight vector.
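Steps 1 to 7 can be sketched in Python as below. This is a minimal version under assumptions not in the slides: the offending vector is the first failing one in list order, the random start of step 1 is replaced by a caller-supplied `w0`, and a cap `max_updates` (our own parameter) stops the loop, since on non-separable data step 6 would repeat forever. XOR, which is not linearly separable, appears only to show the cap at work.

```python
from typing import List, Optional, Sequence


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def pta(vectors: Sequence[Sequence[float]], w0: Sequence[float],
        max_updates: int = 10_000) -> Optional[List[float]]:
    """Perceptron Training Algorithm on preprocessed vectors (sketch).

    Assumptions (not in the slides): the first failing vector is used,
    and training gives up after max_updates updates.
    """
    w = list(w0)
    for _ in range(max_updates):
        # Step 3: look for an offending vector, i.e. one with w . x <= 0
        offending = next((x for x in vectors if dot(w, x) <= 0), None)
        if offending is None:
            return w  # Step 7: w . x > 0 for all x
        # Step 5: w_{p+1} = w_p + x_m
        w = [wi + xi for wi, xi in zip(w, offending)]
    # Cap reached: accept w only if the last update happened to fix everything
    return w if all(dot(w, x) > 0 for x in vectors) else None


or_vectors = [[-1, 0, 1], [-1, 1, 0], [-1, 1, 1], [1, 0, 0]]
print(pta(or_vectors, [0, 0, 0]))  # [1, 2, 2]

# XOR preprocessed the same way has no separating w, so the cap is hit
xor_vectors = [[-1, 0, 1], [-1, 1, 0], [1, 0, 0], [1, -1, -1]]
print(pta(xor_vectors, [0, 0, 0], max_updates=1000))  # None
```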

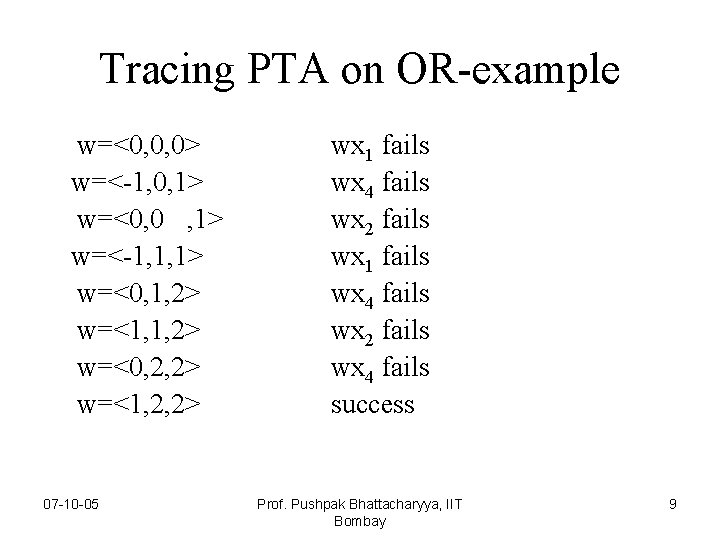

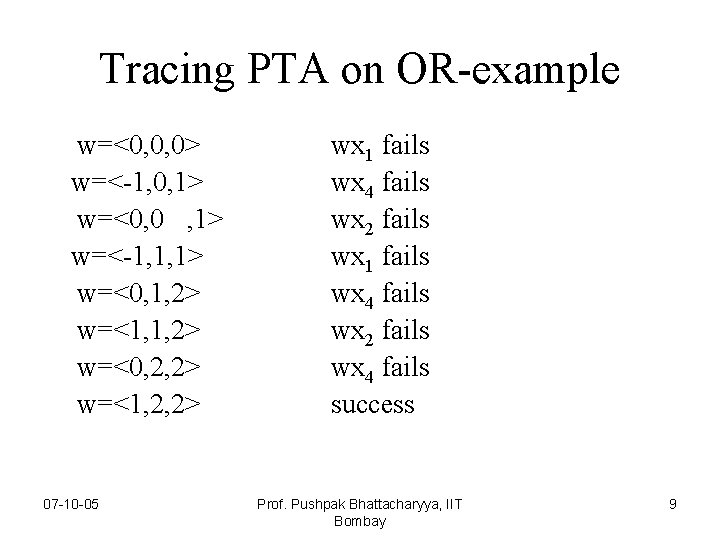

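Tracing PTA on OR-example (at each iteration the vectors are tested in the order X1, X2, X3, X4, and the first one that fails is added to w)

- w = <0, 0, 0>: w·X1 = 0, fails
- w = <-1, 0, 1>: w·X4 = -1, fails
- w = <0, 0, 1>: w·X2 = 0, fails
- w = <-1, 1, 1>: w·X4 = -1, fails
- w = <0, 1, 1>: w·X4 = 0, fails
- w = <1, 1, 1>: w·X1 = 0, fails
- w = <0, 1, 2>: w·X4 = 0, fails
- w = <1, 1, 2>: w·X2 = 0, fails
- w = <0, 2, 2>: w·X4 = 0, fails
- w = <1, 2, 2>: success (w·X1 = 1, w·X2 = 1, w·X3 = 3, w·X4 = 1)

The final weights θ = w0 = 1, w1 = w2 = 2 compute OR.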
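The trace can be reproduced with a short Python loop that records, at each iteration, the current weight vector and the index of the first vector that fails. The helper `pta_trace` is hypothetical; it assumes the in-order choice X1, X2, X3, X4 of the offending vector and linearly separable data (otherwise it would not stop).

```python
from typing import List, Optional, Sequence, Tuple


def dot(w: Sequence[int], x: Sequence[int]) -> int:
    return sum(wi * xi for wi, xi in zip(w, x))


def pta_trace(vectors: Sequence[Sequence[int]],
              w0: Sequence[int]) -> List[Tuple[List[int], Optional[int]]]:
    """Return (w, 1-based index of first failing vector) per iteration; None marks success.

    Assumption: the offending vector is the first failing one in list order.
    Loops forever on non-separable data, so only use it on separable examples.
    """
    w = list(w0)
    trace = []
    while True:
        failing = [i for i, x in enumerate(vectors, start=1) if dot(w, x) <= 0]
        if not failing:
            trace.append((w, None))
            return trace
        m = failing[0]
        trace.append((w, m))
        w = [wi + xi for wi, xi in zip(w, vectors[m - 1])]


X = [[-1, 0, 1], [-1, 1, 0], [-1, 1, 1], [1, 0, 0]]  # X1..X4 from the table
for w, m in pta_trace(X, [0, 0, 0]):
    print(w, f"w.X{m} fails" if m is not None else "success")
```

The printed lines match the list above, ending with `[1, 2, 2] success` after nine updates.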

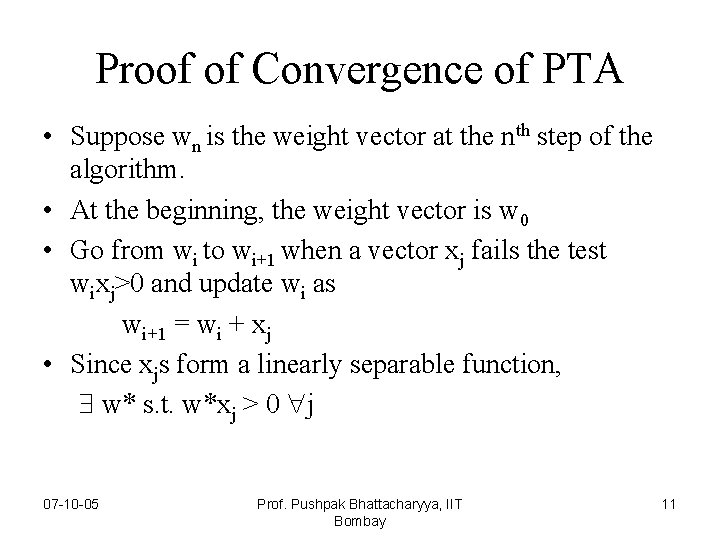

Convergence of PTA

- Perceptron Training Algorithm (PTA)
- Statement: whatever the initial choice of weights, and whatever vector is chosen for testing at each step, PTA converges if the vectors come from a linearly separable function.
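A small experiment illustrating the statement (an illustration, not a proof): random initial weights and a randomly chosen offending vector at every step, on the preprocessed OR vectors. The function `pta_random`, the range $[-5, 5]$ for the initial weights, and the safety cap `max_updates` are our own choices.

```python
import random
from typing import List, Sequence, Tuple


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def pta_random(vectors: Sequence[Sequence[float]], rng: random.Random,
               max_updates: int = 100_000) -> Tuple[List[float], int]:
    """PTA with a random start and a random choice among the failing vectors.

    Assumptions: initial weights uniform in [-5, 5]; max_updates is a safety cap.
    """
    w = [rng.uniform(-5.0, 5.0) for _ in vectors[0]]
    updates = 0
    while True:
        failing = [x for x in vectors if dot(w, x) <= 0]
        if not failing:
            return w, updates
        if updates == max_updates:
            raise RuntimeError("no convergence within max_updates")
        x = rng.choice(failing)  # any failing vector may be chosen
        w = [wi + xi for wi, xi in zip(w, x)]
        updates += 1


X = [[-1, 0, 1], [-1, 1, 0], [-1, 1, 1], [1, 0, 0]]  # preprocessed OR vectors
for seed in range(200):
    w, n = pta_random(X, random.Random(seed))
    assert all(dot(w, x) > 0 for x in X)  # every run ends with a separating w
print("all 200 runs converged")
```

The number of updates varies from run to run, but every run stops with a weight vector that passes the test for all four vectors.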

Proof of Convergence of PTA

- Suppose $w_n$ is the weight vector at the $n$th step of the algorithm.
- At the beginning, the weight vector is $w_0$.
- Go from $w_i$ to $w_{i+1}$ when a vector $x_j$ fails the test $w_i \cdot x_j > 0$, and update $w_{i+1} = w_i + x_j$.
- Since the $x_j$ form a linearly separable function, there exists $w^*$ such that $w^* \cdot x_j > 0$ for all $j$.

Proof of Convergence of PTA (contd)

- Consider $G(w_n) = \dfrac{w_n \cdot w^*}{|w_n|}$.
- By the Cauchy–Schwarz inequality, $G(w_n) \le |w^*|$ for every $n$.
- The proof follows how the numerator $w_n \cdot w^*$ and the denominator $|w_n|$ of $G$ change with $n$.

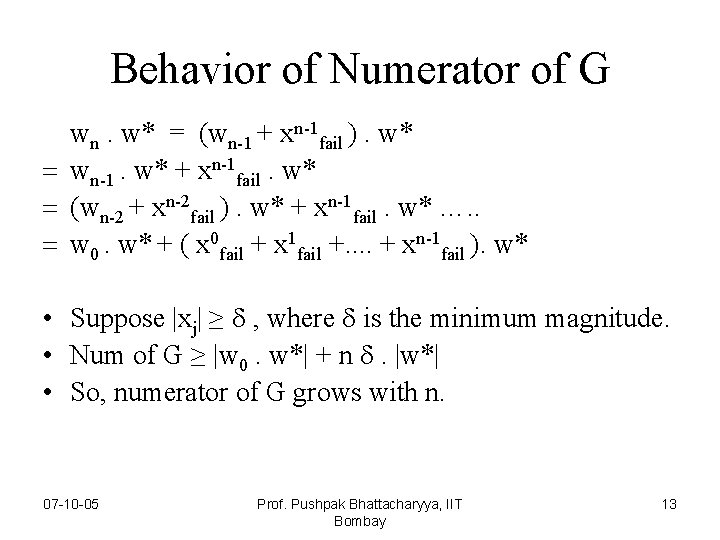

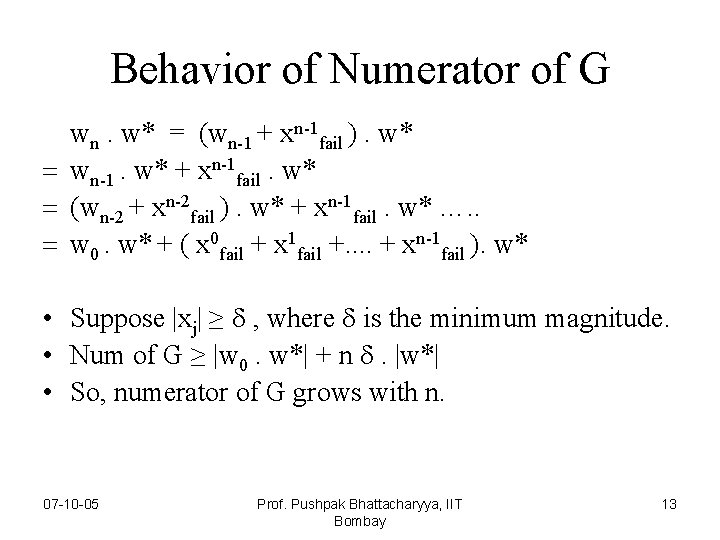

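Behavior of Numerator of G

Here $x_k^{\text{fail}}$ is the vector that failed the test at step $k$, so $w_{k+1} = w_k + x_k^{\text{fail}}$:

$$
\begin{aligned}
w_n \cdot w^* &= (w_{n-1} + x_{n-1}^{\text{fail}}) \cdot w^* \\
&= w_{n-1} \cdot w^* + x_{n-1}^{\text{fail}} \cdot w^* \\
&= (w_{n-2} + x_{n-2}^{\text{fail}}) \cdot w^* + x_{n-1}^{\text{fail}} \cdot w^* \\
&\;\;\vdots \\
&= w_0 \cdot w^* + (x_0^{\text{fail}} + x_1^{\text{fail}} + \dots + x_{n-1}^{\text{fail}}) \cdot w^*
\end{aligned}
$$

- Let $\delta = \min_j w^* \cdot x_j$. Since there are finitely many $x_j$ and each satisfies $w^* \cdot x_j > 0$, we have $\delta > 0$.
- Each of the $n$ terms $x_k^{\text{fail}} \cdot w^*$ is at least $\delta$, so Num of G $= w_n \cdot w^* \ge w_0 \cdot w^* + n\delta$.
- So, the numerator of G grows at least linearly with n.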
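A numerical check of the lower bound $w_n \cdot w^* \ge w_0 \cdot w^* + n\delta$, with $\delta = \min_j w^* \cdot X_j$, on the OR vectors. This sketch assumes the first failing vector is chosen in the order X1, ..., X4 and uses the hand-picked separating vector $w^* = \langle 1, 3, 3 \rangle$ (any vector with $w^* \cdot X_j > 0$ for all $j$ would do); `pta_weights` is a hypothetical helper.

```python
from typing import List, Sequence


def dot(w: Sequence[int], x: Sequence[int]) -> int:
    return sum(wi * xi for wi, xi in zip(w, x))


def pta_weights(vectors: Sequence[Sequence[int]], w0: Sequence[int]) -> List[List[int]]:
    """All weight vectors w_0, w_1, ..., w_N visited by PTA.

    Assumption: the first failing vector in list order is used at each step.
    """
    ws = [list(w0)]
    while True:
        w = ws[-1]
        offending = next((x for x in vectors if dot(w, x) <= 0), None)
        if offending is None:
            return ws
        ws.append([wi + xi for wi, xi in zip(w, offending)])


X = [[-1, 0, 1], [-1, 1, 0], [-1, 1, 1], [1, 0, 0]]
w_star = [1, 3, 3]                      # hand-picked separating vector (assumption)
delta = min(dot(w_star, x) for x in X)  # = 1, attained by X4

ws = pta_weights(X, [0, 0, 0])
numerators = [dot(w, w_star) for w in ws]
print(numerators)  # [0, 2, 3, 5, 6, 7, 9, 10, 12, 13]
assert all(num >= dot(ws[0], w_star) + n * delta for n, num in enumerate(numerators))
```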

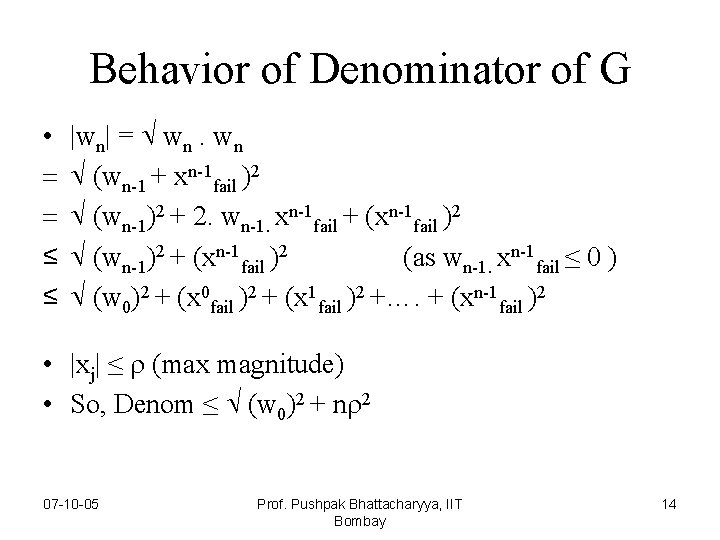

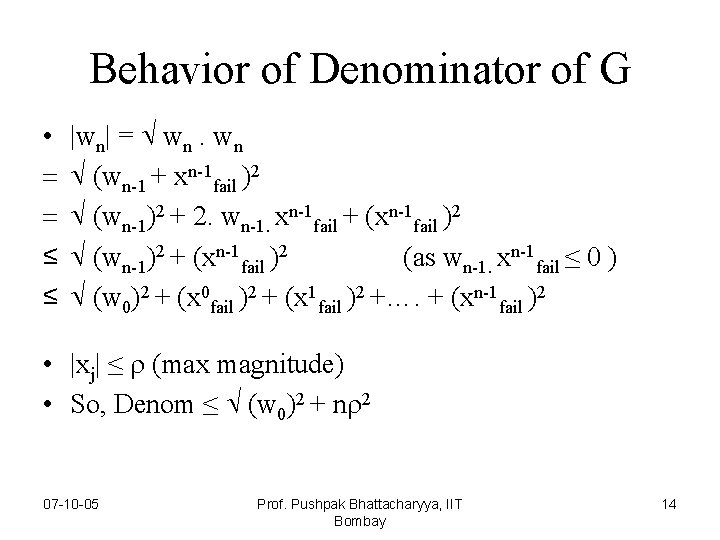

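Behavior of Denominator of G

$$
\begin{aligned}
|w_n|^2 &= w_n \cdot w_n = (w_{n-1} + x_{n-1}^{\text{fail}})^2 \\
&= |w_{n-1}|^2 + 2\, w_{n-1} \cdot x_{n-1}^{\text{fail}} + |x_{n-1}^{\text{fail}}|^2 \\
&\le |w_{n-1}|^2 + |x_{n-1}^{\text{fail}}|^2 \qquad (\text{as } w_{n-1} \cdot x_{n-1}^{\text{fail}} \le 0) \\
&\le |w_0|^2 + |x_0^{\text{fail}}|^2 + |x_1^{\text{fail}}|^2 + \dots + |x_{n-1}^{\text{fail}}|^2
\end{aligned}
$$

- The inequality $w_{n-1} \cdot x_{n-1}^{\text{fail}} \le 0$ holds because $x_{n-1}^{\text{fail}}$ failed the test $w_{n-1} \cdot x > 0$.
- Let $|x_j| \le \Delta$ for all $j$ ($\Delta$ is the maximum magnitude).
- So $|w_n|^2 \le |w_0|^2 + n\Delta^2$, i.e. Denom of G $= |w_n| \le \sqrt{|w_0|^2 + n\Delta^2}$.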
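The same kind of check for the denominator, again a sketch on the OR vectors with the first failing vector chosen in the order X1, ..., X4 (an assumption): it compares $|w_n|^2$ with $|w_0|^2 + n\Delta^2$, where $\Delta^2 = \max_j |X_j|^2$. The helper `pta_weights` is hypothetical.

```python
from typing import List, Sequence


def dot(w: Sequence[int], x: Sequence[int]) -> int:
    return sum(wi * xi for wi, xi in zip(w, x))


def pta_weights(vectors: Sequence[Sequence[int]], w0: Sequence[int]) -> List[List[int]]:
    """All weight vectors w_0, w_1, ..., w_N visited by PTA.

    Assumption: the first failing vector in list order is used at each step.
    """
    ws = [list(w0)]
    while True:
        w = ws[-1]
        offending = next((x for x in vectors if dot(w, x) <= 0), None)
        if offending is None:
            return ws
        ws.append([wi + xi for wi, xi in zip(w, offending)])


X = [[-1, 0, 1], [-1, 1, 0], [-1, 1, 1], [1, 0, 0]]
big_delta_sq = max(dot(x, x) for x in X)  # Delta^2 = |X3|^2 = 3

ws = pta_weights(X, [0, 0, 0])
sq_norms = [dot(w, w) for w in ws]
bounds = [dot(ws[0], ws[0]) + n * big_delta_sq for n in range(len(ws))]
print(sq_norms)  # [0, 2, 1, 3, 2, 3, 5, 6, 8, 9]
print(bounds)    # [0, 3, 6, 9, 12, 15, 18, 21, 24, 27]
assert all(s <= b for s, b in zip(sq_norms, bounds))
```

The squared norm can even shrink (from 2 to 1, and from 3 to 2), because the cross term $2\, w_{n-1} \cdot x_{n-1}^{\text{fail}}$ is never positive.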

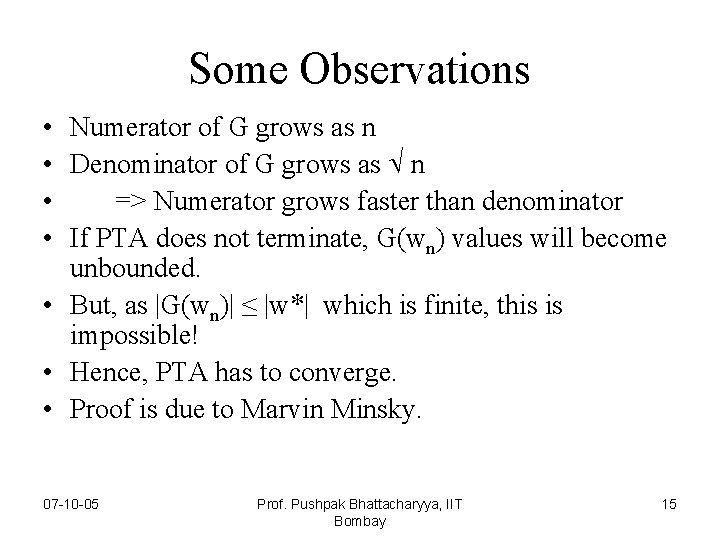

Some Observations

- Numerator of G grows at least as fast as n.
- Denominator of G grows at most as fast as √n.
- ⇒ The numerator grows faster than the denominator.
- If PTA does not terminate, the values $G(w_n)$ become unbounded.
- But $G(w_n) \le |w^*|$ by the Cauchy–Schwarz inequality, and $|w^*|$ is finite, so this is impossible.
- Hence, PTA has to converge.
- Proof is due to Marvin Minsky.
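The contradiction can also be made quantitative. Assuming $w_0 = 0$ (the start used in the OR trace), writing $\delta = \min_j w^* \cdot x_j > 0$ and $\Delta = \max_j |x_j|$, and using $G(w_n) = (w_n \cdot w^*)/|w_n| \le |w^*|$, the numerator and denominator bounds limit the number of updates $n$:

$$
n\,\delta \;\le\; w_n \cdot w^* \;\le\; |w_n|\,|w^*| \;\le\; \sqrt{n}\,\Delta\,|w^*|
\quad\Longrightarrow\quad
n \;\le\; \frac{\Delta^2\,|w^*|^2}{\delta^2}.
$$

For the OR vectors with $w^* = \langle 1, 2, 2 \rangle$: $\delta = 1$, $\Delta^2 = 3$ and $|w^*|^2 = 9$, so PTA started from $\langle 0, 0, 0 \rangle$ must succeed within 27 updates.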

Exercise: Look at the geometric proof of convergence of PTA in M. L. Minsky and S. A. Papert, *Perceptrons*, Expanded Edition, MIT Press, 1988.