Co-registration of Human Hand and Non-Human Primate Hand Kinematics

- Slides: 4

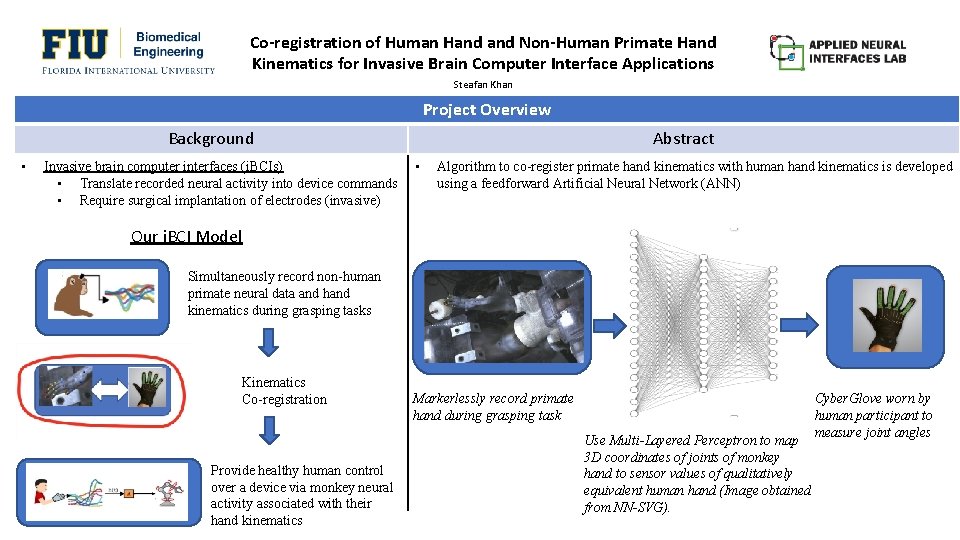

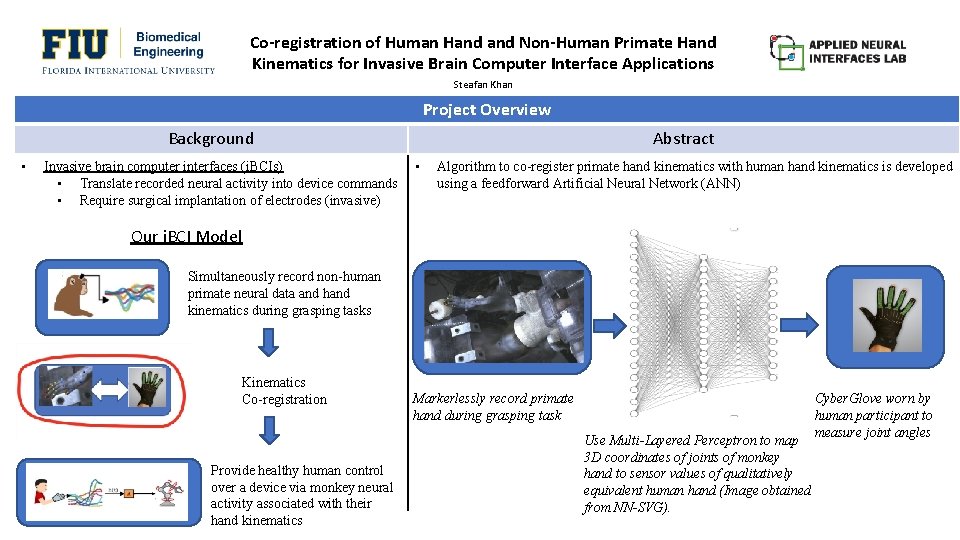

Co-registration of Human Hand and Non-Human Primate Hand Kinematics for Invasive Brain Computer Interface Applications

Steafan Khan

Project Overview

Background

- Invasive brain computer interfaces (iBCIs)
  - Translate recorded neural activity into device commands
  - Require surgical implantation of electrodes (invasive)

Abstract

- An algorithm to co-register primate hand kinematics with human hand kinematics is developed using a feedforward Artificial Neural Network (ANN)

Our iBCI Model

- Simultaneously record non-human primate neural data and hand kinematics during grasping tasks

Kinematics Co-registration

- Provide healthy human control over a device via monkey neural activity associated with their hand kinematics
- Markerlessly record the primate hand during a grasping task
- Use a Multi-Layered Perceptron to map 3D coordinates of the joints of the monkey hand to sensor values of the qualitatively equivalent human hand (image obtained from NN-SVG)
- CyberGlove worn by a human participant to measure joint angles
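The mapping from 3D joint coordinates to glove sensor values can be illustrated with a small feedforward network. The following is a minimal sketch under stated assumptions, not the author's implementation: the input size (3 coordinates per tracked joint), the output size (one value per glove sensor), the linear output layer, and the weight initialization are all hypothetical. The hidden-layer size is left as a parameter; the results slide reports a single hidden layer of 19 sigmoid nodes and a mean-squared error cost.

```python
import numpy as np

def sigmoid(z):
    """Logistic transfer function used in the hidden layer."""
    return 1.0 / (1.0 + np.exp(-z))

def init_mlp(n_in, n_hidden, n_out, seed=0):
    """Create small random weights for a one-hidden-layer network.

    n_in, n_out and the 0.1 weight scale are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.1, size=(n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.1, size=(n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }

def forward(params, x):
    """Map a batch of flattened joint coordinates (N, n_in) to sensor values (N, n_out)."""
    hidden = sigmoid(x @ params["W1"] + params["b1"])
    # Linear output layer: an assumption, the slides do not state the output transfer function.
    return hidden @ params["W2"] + params["b2"]

def mse(pred, target):
    """Mean-squared error averaged over all samples and outputs."""
    return float(np.mean((pred - target) ** 2))
```

For example, `forward(init_mlp(63, 19, 22), x)` would map a batch `x` of shape `(N, 63)` to predictions of shape `(N, 22)`; the values 63 and 22 are placeholders, since the slides do not give the number of tracked joints or glove sensors.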

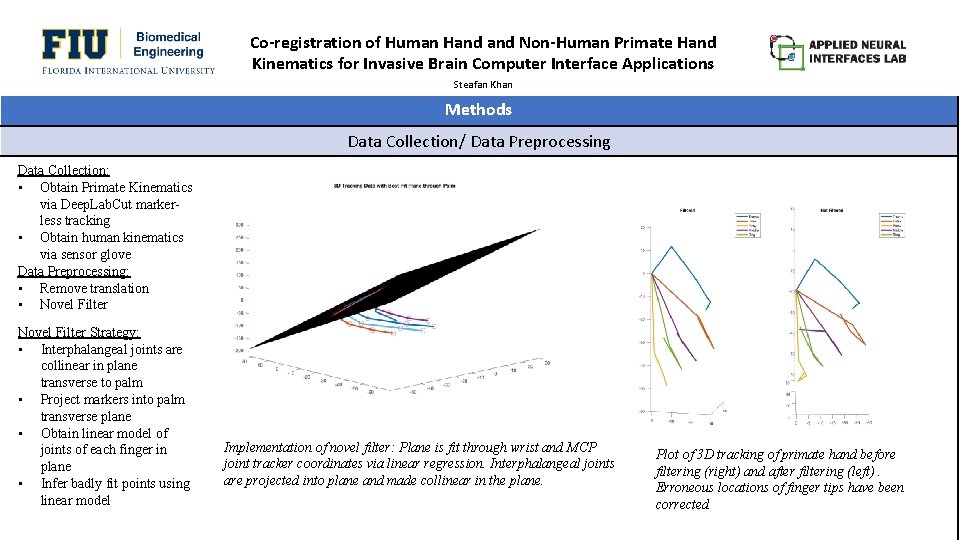

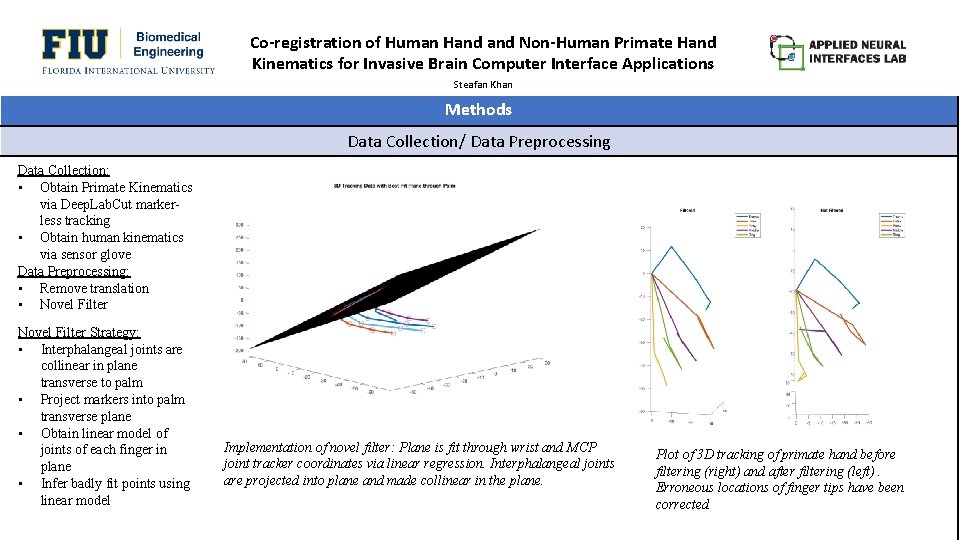

Methods: Data Collection / Data Preprocessing

Data Collection:

- Obtain primate kinematics via DeepLabCut markerless tracking
- Obtain human kinematics via sensor glove

Data Preprocessing:

- Remove translation
- Novel filter strategy:
  - Interphalangeal joints are collinear in the plane transverse to the palm
  - Project markers into the palm transverse plane
  - Obtain a linear model of the joints of each finger in the plane
  - Infer badly fit points using the linear model

Implementation of novel filter: A plane is fit through the wrist and MCP joint tracker coordinates via linear regression. Interphalangeal joints are projected into the plane and made collinear in the plane.

Plot of 3D tracking of primate hand before filtering (right) and after filtering (left). Erroneous locations of fingertips have been corrected.
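One possible reading of the preprocessing and filter steps is sketched below. It is an interpretation, not the author's code: it assumes the plane is regressed as z = a·x + b·y + c through the wrist and MCP markers, that each finger's joints are split into an in-plane part and a height above the plane, and that the in-plane parts are snapped onto a best-fit line. The line fit uses the principal direction from an SVD, which is a substitute for whatever linear model the author used. All function names and the marker layout are hypothetical.

```python
import numpy as np

def remove_translation(hand, wrist_index=0):
    """Express all markers (N, 3) relative to the wrist marker."""
    return hand - hand[wrist_index]

def fit_plane(points):
    """Least-squares plane z = a*x + b*y + c through (N, 3) points.

    Returns a point on the plane and the unit normal. Regressing z on
    (x, y) breaks down if the palm is nearly vertical in the camera frame.
    """
    A = np.column_stack([points[:, 0], points[:, 1], np.ones(len(points))])
    (a, b, c), *_ = np.linalg.lstsq(A, points[:, 2], rcond=None)
    normal = np.array([-a, -b, 1.0])
    normal /= np.linalg.norm(normal)
    return np.array([0.0, 0.0, c]), normal

def make_collinear(finger, origin, normal):
    """Snap the in-plane projection of one finger's joints onto a line.

    The height of each joint above the plane is kept, so finger flexion
    out of the palm plane is preserved.
    """
    height = (finger - origin) @ normal              # signed distance to plane
    in_plane = finger - np.outer(height, normal)     # projection into the plane
    centroid = in_plane.mean(axis=0)
    _, _, vt = np.linalg.svd(in_plane - centroid)
    direction = vt[0]                                # best-fit line direction
    t = (in_plane - centroid) @ direction
    on_line = centroid + np.outer(t, direction)
    return on_line + np.outer(height, normal)
```

In use, `fit_plane` would be called on the wrist and MCP rows of a frame and `make_collinear` on the joint rows of each finger in turn. A frame-by-frame outlier rule (for example, correcting only joints whose distance from the fitted line exceeds a threshold) is not shown because the slides do not specify one.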

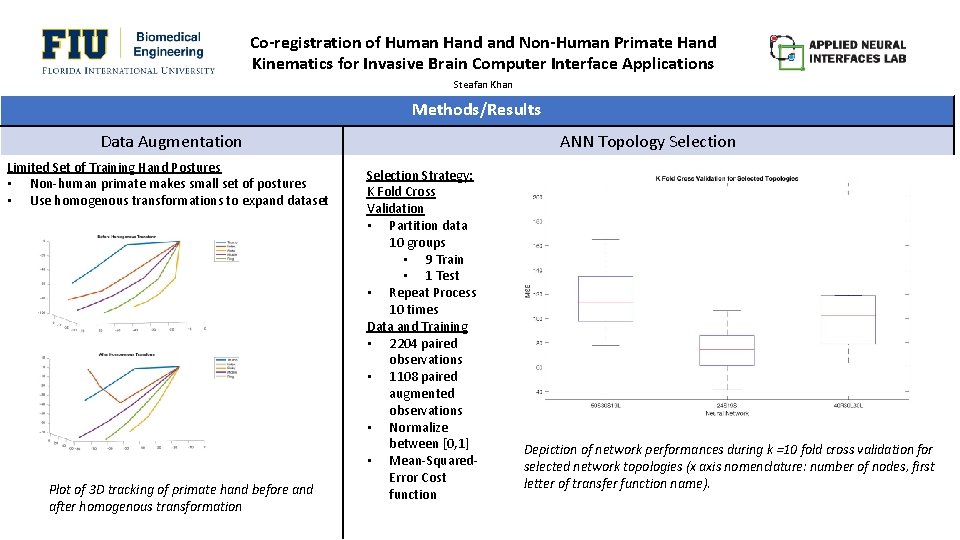

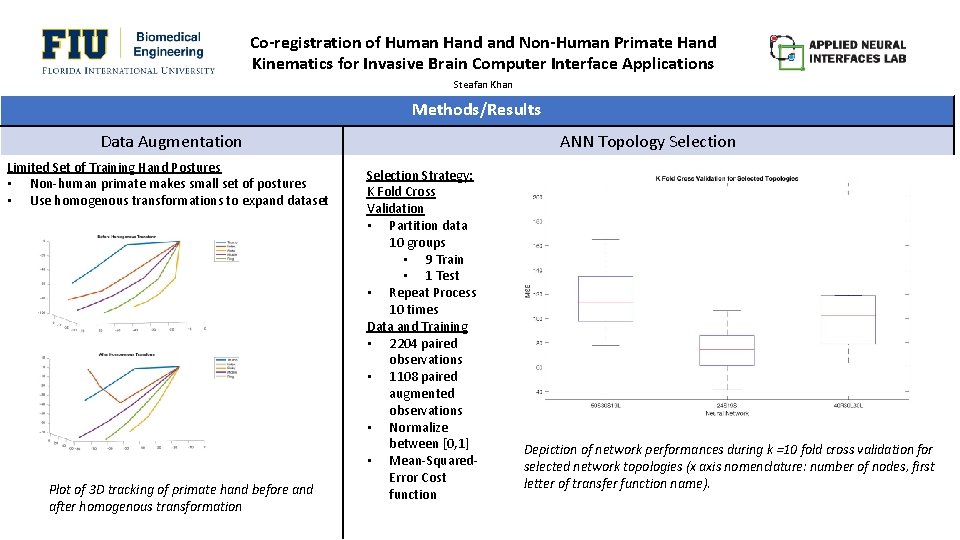

Methods/Results

Data Augmentation

Limited set of training hand postures:

- Non-human primate makes a small set of postures
- Use homogeneous transformations to expand the dataset

Plot of 3D tracking of primate hand before and after homogeneous transformation.

ANN Topology Selection

Strategy: K-Fold Cross Validation

- Partition data into 10 groups
  - 9 train
  - 1 test
- Repeat process 10 times

Data and Training

- 2204 paired observations
- 1108 paired augmented observations
- Normalize between [0, 1]
- Mean-squared error cost function

Depiction of network performances during k = 10 fold cross validation for selected network topologies (x-axis nomenclature: number of nodes, first letter of transfer function name).
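The augmentation and cross-validation steps can be sketched as follows. This is a minimal illustration under assumptions: the slides do not say which rotations or translations were used, how the paired glove readings were handled for augmented postures, or how folds were drawn, so the rotation about the z axis, the random shuffling, and all function names here are hypothetical.

```python
import numpy as np

def homogeneous_transform(rotation_deg_z, translation):
    """Build a 4x4 homogeneous matrix: rotation about z, then translation."""
    theta = np.deg2rad(rotation_deg_z)
    c, s = np.cos(theta), np.sin(theta)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T

def apply_transform(T, points):
    """Apply a 4x4 homogeneous transform to (N, 3) marker coordinates."""
    homog = np.column_stack([points, np.ones(len(points))])
    return (homog @ T.T)[:, :3]

def min_max_normalize(x):
    """Scale each column to [0, 1]; constant columns map to 0."""
    lo, hi = x.min(axis=0), x.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)
    return (x - lo) / span

def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train, test) index arrays: k-1 groups train, 1 group tests."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]
```

With the 2204 paired observations reported above, `k_fold_indices(2204, k=10)` yields ten splits whose test groups hold 220 or 221 samples each. Each candidate topology would be trained on the nine training groups and scored by mean-squared error on the held-out group.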

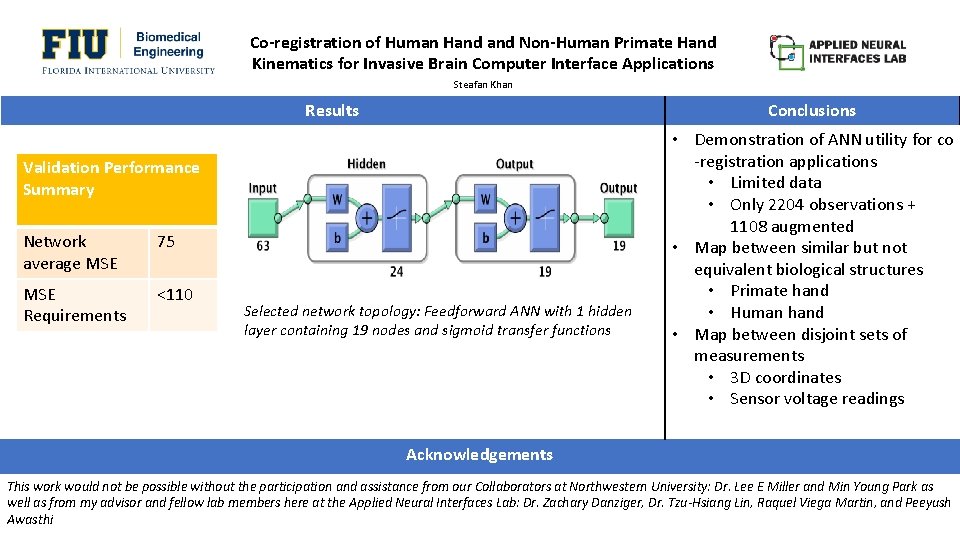

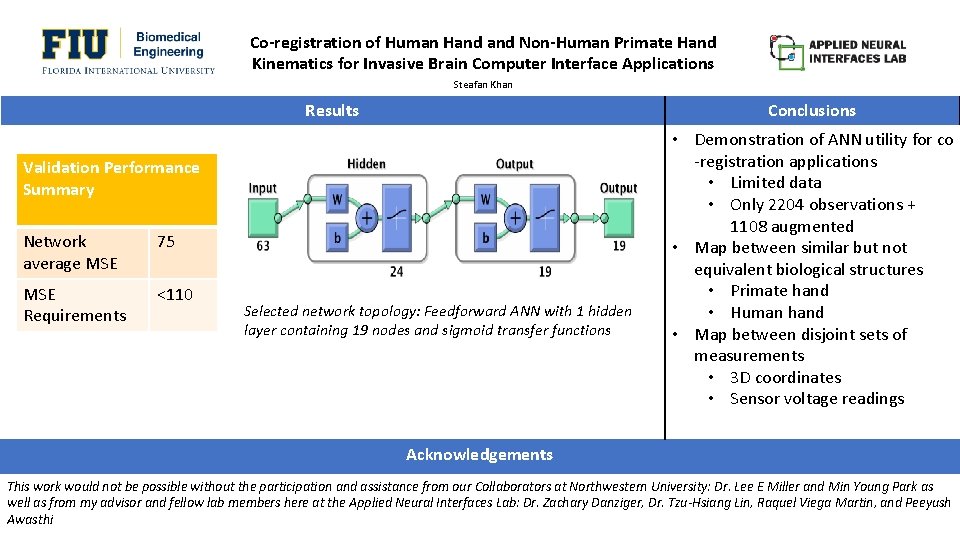

Results/Conclusions

Validation Performance Summary

- Network average MSE: 75
- MSE requirement: < 110

Selected network topology: feedforward ANN with 1 hidden layer containing 19 nodes and sigmoid transfer functions

- Demonstration of ANN utility for co-registration applications
  - Limited data
    - Only 2204 observations + 1108 augmented
  - Map between similar but not equivalent biological structures
    - Primate hand
    - Human hand
  - Map between disjoint sets of measurements
    - 3D coordinates
    - Sensor voltage readings

Acknowledgements

This work would not be possible without the participation and assistance of our collaborators at Northwestern University, Dr. Lee E Miller and Min Young Park, as well as my advisor and fellow lab members here at the Applied Neural Interfaces Lab: Dr. Zachary Danziger, Dr. Tzu-Hsiang Lin, Raquel Viega Martin, and Peeyush Awasthi.