
- Slides: 12

COMPRESSION

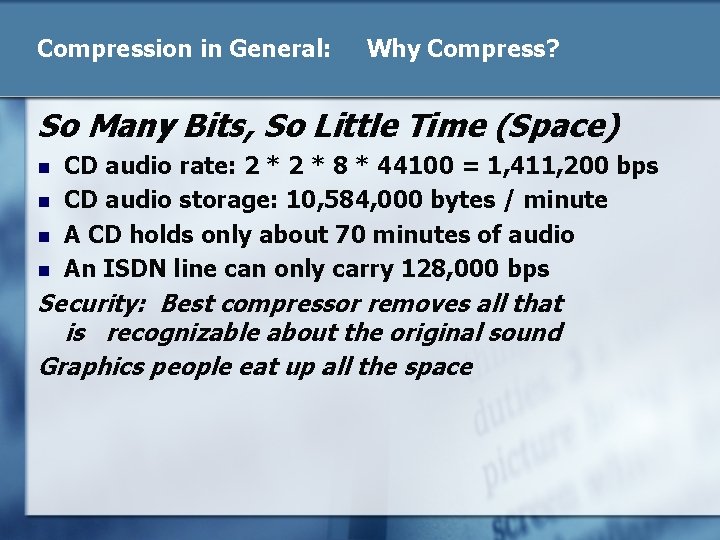

Compression in General: Why Compress?

So Many Bits, So Little Time (Space)

- CD audio rate: 2 channels × 16 bits × 44,100 samples/s = 1,411,200 bps
- CD audio storage: 10,584,000 bytes/minute
- A CD holds only about 70 minutes of audio
- An ISDN line can carry only 128,000 bps
- Security: the best compressor removes everything recognizable about the original sound
- Graphics people eat up all the space
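The figures on this slide can be checked with a few lines of arithmetic. This is a minimal sketch that assumes standard CD audio parameters (2 channels, 16-bit samples, 44,100 samples per second); the constant and function names are illustrative only.

```python
# Standard CD audio parameters (assumed; names are illustrative).
CHANNELS = 2
BITS_PER_SAMPLE = 16
SAMPLE_RATE_HZ = 44_100
ISDN_BPS = 128_000  # ISDN capacity quoted on the slide


def cd_bit_rate() -> int:
    """Uncompressed CD audio bit rate in bits per second."""
    return CHANNELS * BITS_PER_SAMPLE * SAMPLE_RATE_HZ


def bytes_per_minute(bit_rate: int) -> int:
    """Storage needed for one minute of audio at the given bit rate."""
    return bit_rate // 8 * 60


rate = cd_bit_rate()
print(rate)                       # 1411200
print(bytes_per_minute(rate))     # 10584000
print(round(rate / ISDN_BPS, 1))  # 11.0
```

The last line suggests that raw CD audio needs roughly 11 times the capacity of an ISDN line, which is the motivation for compressing it.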

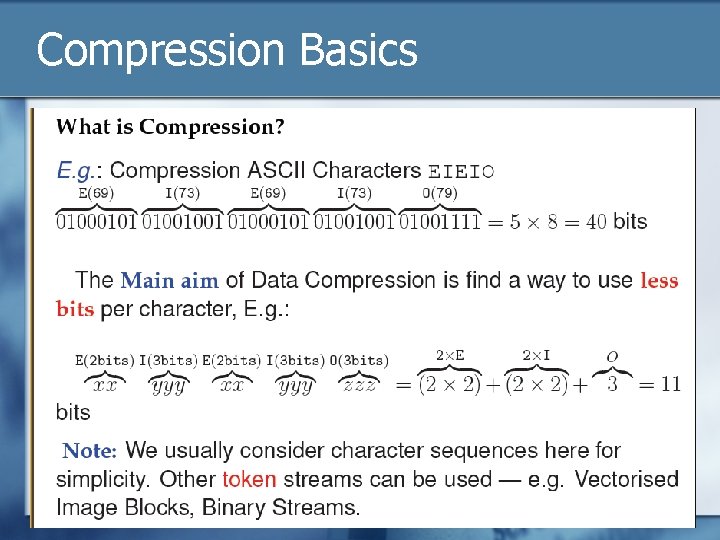

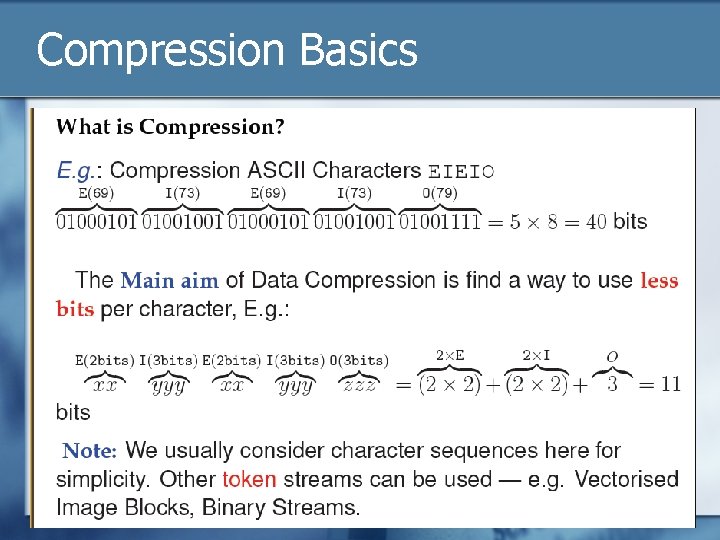

Compression Basics

Lossless Compression

- Lossless audio compression preserves an exact copy of one's audio files, in contrast to the irreversible changes made by lossy compression techniques.
- Compressed files are about as large as with generic lossless data compression (around 50–60% of the original size), which is substantially larger than with lossy compression (typically 5–20% of the original size).
- Lossless compression suits users who wish to maximize quality.
- Editing lossily compressed data leads to digital generation loss, since decoding and re-encoding introduce artifacts at each generation.
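The defining property of lossless compression, an exact copy after decompression, can be illustrated with a generic lossless compressor. The sketch below uses Python's `zlib` module as a stand-in; it is not an audio codec, and the helper name `round_trip` and the sample data are assumptions made for illustration.

```python
import zlib


def round_trip(data: bytes) -> tuple[bytes, float]:
    """Compress and decompress data; return the restored bytes and the size ratio."""
    compressed = zlib.compress(data, 9)
    restored = zlib.decompress(compressed)
    ratio = len(compressed) / len(data)
    return restored, ratio


# Made-up sample data: a repetitive part followed by a non-repetitive part.
sample = b"silence " * 500 + bytes(range(256))
restored, ratio = round_trip(sample)

print(restored == sample)  # True: every byte is recovered
print(f"compressed size is {ratio:.0%} of the original")
```

The ratio printed here depends heavily on how repetitive the input is, so it should not be read as typical for real audio.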

Lossy Compression

- Achieves greater compression than lossless compression (5–20% of the original stream, rather than 50–60%) by discarding less-critical data.
- Uses psychoacoustics to exploit the fact that not all data in an audio stream can be perceived by the human auditory system.
- Data removed during lossy compression cannot be recovered by decompression.

Audio Compression

Simple Repetition Suppression: How Much Compression?

- Compression savings depend on the content of the data.
- Applications of this simple compression technique include:
  - Suppression of zeros in a file (zero-length suppression)
  - Silence in audio data, pauses in conversation, etc.
  - Bitmaps
  - Blanks in text or program source files
  - Backgrounds in simple images
  - Other regular image or data tokens

Entropy Coding

- Entropy encoding (no loss):
  - Ignores the semantics of the input data and compresses media streams by treating them as sequences of digits or symbols
  - Examples: run-length encoding, Huffman encoding, ...
- Run-length encoding:
  - A compression technique that replaces consecutive occurrences of a symbol with the symbol followed by the number of times it is repeated
  - aaaaa => a5
  - 00000000001111111 => 0 × 10, 1 × 7
  - Most useful where symbols appear in long runs, e.g. images with large areas of identical pixel values, such as faxes and cartoons
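Run-length encoding as described on this slide can be sketched in a few lines. This is one possible formulation, not a reference implementation: the function names are illustrative, and each run is represented as a (symbol, count) pair rather than any particular on-disk format.

```python
from itertools import groupby


def rle_encode(text: str) -> list[tuple[str, int]]:
    """Replace each run of identical symbols with a (symbol, run length) pair."""
    return [(symbol, len(list(run))) for symbol, run in groupby(text)]


def rle_decode(pairs: list[tuple[str, int]]) -> str:
    """Expand (symbol, run length) pairs back into the original string."""
    return "".join(symbol * count for symbol, count in pairs)


print(rle_encode("aaaaa"))              # [('a', 5)]
print(rle_encode("00000000001111111"))  # [('0', 10), ('1', 7)]
print(rle_decode(rle_encode("00000000001111111")) == "00000000001111111")  # True
```

On input without long runs, such as "abcabc", every symbol becomes its own pair and the encoded form is larger than the input, which is why the technique pays off mainly on data with long runs.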

Huffman Coding (Lossless)

- Huffman coding has been shown to be one of the most efficient and simple variable-length coding techniques used in high-speed data compression applications.
- Two important principles of Huffman coding are that no code is a prefix of another code, which allows each word to be decoded uniquely, and that no delimiter is needed between codes.

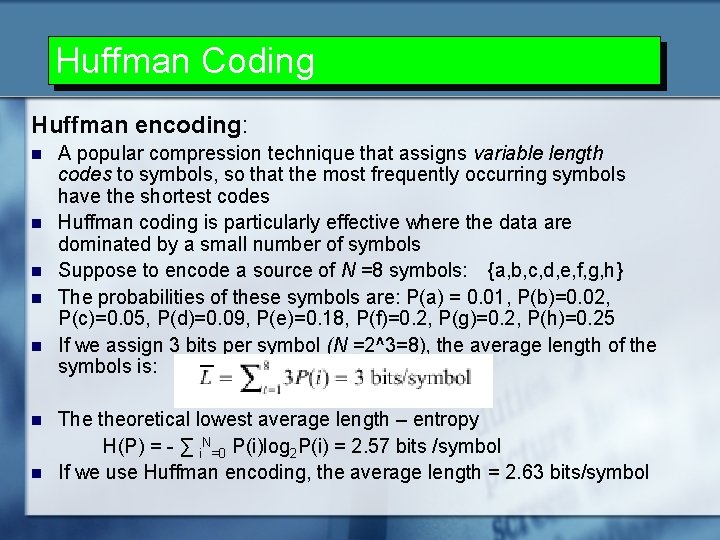

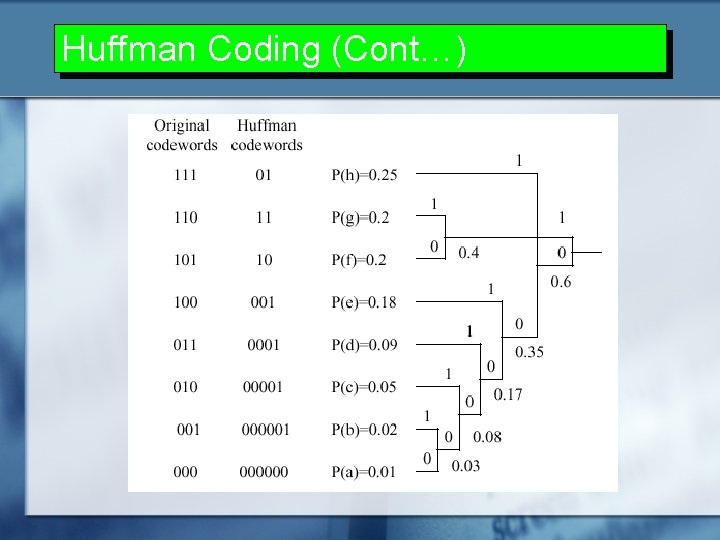

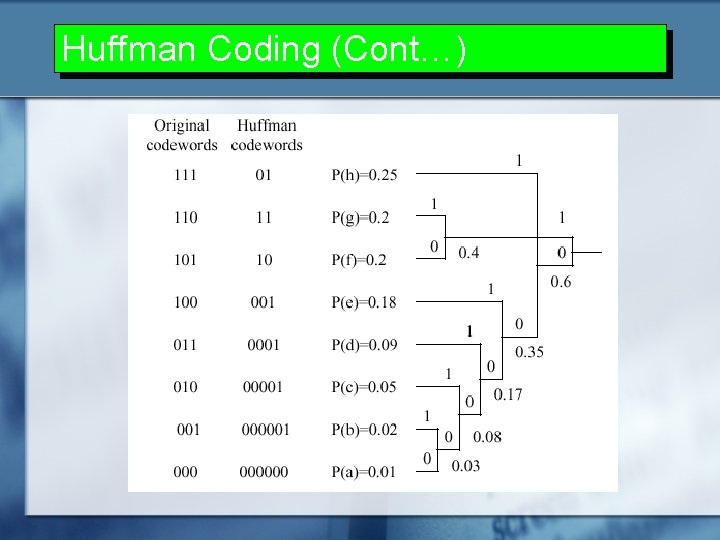

Huffman Coding

Huffman encoding:

- A popular compression technique that assigns variable-length codes to symbols, so that the most frequently occurring symbols have the shortest codes
- Particularly effective where the data are dominated by a small number of symbols
- Suppose we want to encode a source of N = 8 symbols: {a, b, c, d, e, f, g, h}
- The probabilities of these symbols are: P(a) = 0.01, P(b) = 0.02, P(c) = 0.05, P(d) = 0.09, P(e) = 0.18, P(f) = 0.2, P(g) = 0.2, P(h) = 0.25
- If we assign 3 bits per symbol (N = 2^3 = 8), the average length of the symbols is $\bar{L} = \sum_{i=1}^{N} 3\,P(i) = 3$ bits/symbol
- The theoretical lowest average length is the entropy: $H(P) = -\sum_{i=1}^{N} P(i) \log_2 P(i) \approx 2.58$ bits/symbol
- If we use Huffman encoding, the average length is 2.63 bits/symbol
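The entropy and the fixed-length average can be recomputed directly from the probabilities on this slide. The sketch below assumes only the standard definitions of Shannon entropy and expected codeword length; the function and variable names are illustrative.

```python
from math import log2

# Symbol probabilities from the slide.
PROBS = {"a": 0.01, "b": 0.02, "c": 0.05, "d": 0.09,
         "e": 0.18, "f": 0.20, "g": 0.20, "h": 0.25}


def entropy(probs: dict[str, float]) -> float:
    """Shannon entropy in bits/symbol: the negated sum of P(i) * log2 P(i)."""
    return -sum(p * log2(p) for p in probs.values() if p > 0)


def average_length(probs: dict[str, float], lengths: dict[str, int]) -> float:
    """Expected codeword length in bits/symbol for the given code lengths."""
    return sum(probs[s] * lengths[s] for s in probs)


fixed_lengths = {s: 3 for s in PROBS}  # 3 bits for each of the 8 symbols
print(round(average_length(PROBS, fixed_lengths), 2))  # 3.0
print(round(entropy(PROBS), 2))  # entropy in bits/symbol
```

The gap between the two printed values is the saving that a variable-length code such as Huffman coding can try to capture.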

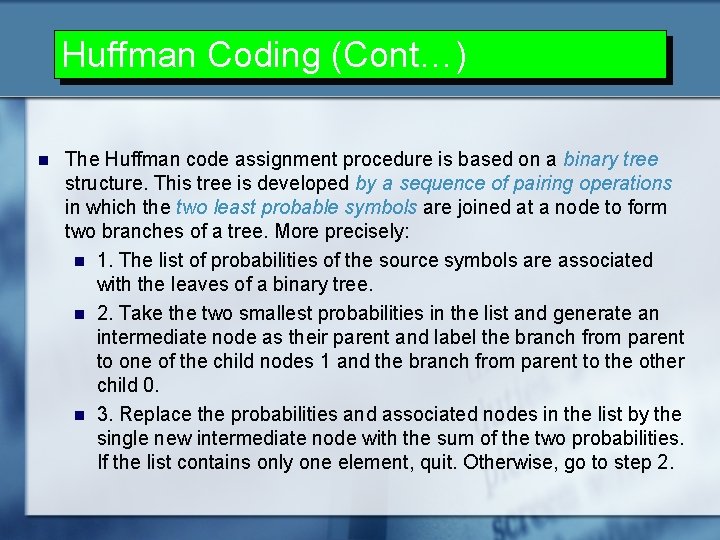

Huffman Coding (Cont…)

The Huffman code assignment procedure is based on a binary tree structure. The tree is built by a sequence of pairing operations in which the two least probable symbols are joined at a node to form two branches of the tree. More precisely:

1. Associate the list of source-symbol probabilities with the leaves of a binary tree.
2. Take the two smallest probabilities in the list and generate an intermediate node as their parent. Label the branch from the parent to one child 1 and the branch to the other child 0.
3. Replace the two probabilities and their nodes in the list with the single new intermediate node, whose probability is the sum of the two. If the list now contains only one element, quit. Otherwise, go to step 2.
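The pairing procedure above can be sketched with a priority queue. This is a minimal illustration, not the slide author's implementation: the name `huffman_code` is an assumption, and the choice of which child receives 0 and which receives 1 is arbitrary, so the exact bit patterns may differ from the table in the slide image even though the code lengths agree.

```python
import heapq
from itertools import count


def huffman_code(probs: dict[str, float]) -> dict[str, str]:
    """Build a Huffman code by repeatedly merging the two least probable nodes."""
    tiebreak = count()  # unique counter so equal probabilities never compare dicts
    # Each heap entry: (probability, tiebreaker, {symbol: codeword built so far}).
    heap = [(p, next(tiebreak), {s: ""}) for s, p in probs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        p0, _, low = heapq.heappop(heap)   # smallest probability
        p1, _, high = heapq.heappop(heap)  # second smallest
        # Prefix one branch with 0 and the other with 1 (arbitrary assignment).
        merged = {s: "0" + c for s, c in low.items()}
        merged.update({s: "1" + c for s, c in high.items()})
        heapq.heappush(heap, (p0 + p1, next(tiebreak), merged))
    return heap[0][2]


PROBS = {"a": 0.01, "b": 0.02, "c": 0.05, "d": 0.09,
         "e": 0.18, "f": 0.20, "g": 0.20, "h": 0.25}
code = huffman_code(PROBS)
print({s: len(code[s]) for s in sorted(code)})
# {'a': 6, 'b': 6, 'c': 5, 'd': 4, 'e': 3, 'f': 2, 'g': 2, 'h': 2}
print(round(sum(PROBS[s] * len(code[s]) for s in PROBS), 2))  # 2.63
```

A source with a single symbol would receive an empty codeword in this sketch; a practical encoder would need to handle that case separately.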

Huffman Coding (Cont…)

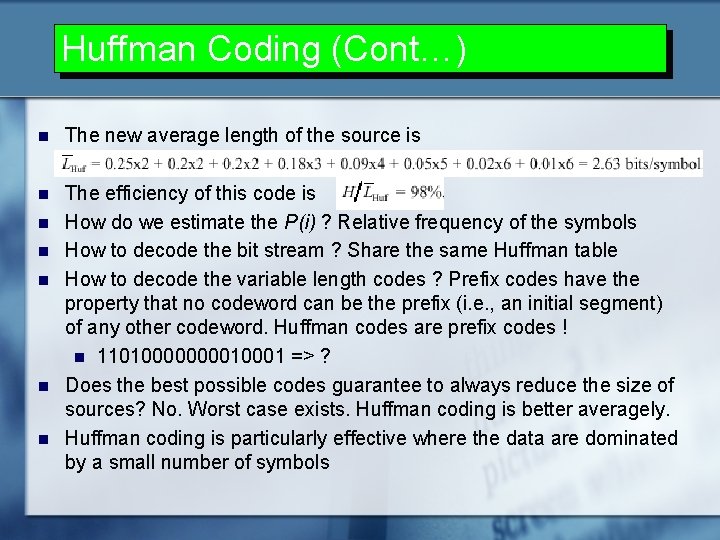

Huffman Coding (Cont…)

- The new average length of the source is $\bar{L} = \sum_{i=1}^{N} P(i)\,l(i) = 2.63$ bits/symbol, where $l(i)$ is the codeword length of symbol $i$.
- The efficiency of this code is $\eta = H(P)/\bar{L}$, about 98%.
- How do we estimate the P(i)? By the relative frequency of the symbols.
- How is the bit stream decoded? The encoder and decoder share the same Huffman table.
- How are the variable-length codes decoded? Prefix codes have the property that no codeword is the prefix (i.e., an initial segment) of any other codeword, and Huffman codes are prefix codes.
  - 1101000010001 => ?
- Is the best possible code guaranteed to always reduce the size of a source? No. Worst cases exist; Huffman coding is better on average.
- Huffman coding is particularly effective where the data are dominated by a small number of symbols.
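Because no codeword is a prefix of another, a decoder can read the stream bit by bit and emit a symbol as soon as the bits collected so far match a table entry. The sketch below uses a small hypothetical table, not the one from the slide image, so it does not answer the exercise above; the names `decode` and `TABLE` are illustrative.

```python
def decode(bits: str, table: dict[str, str]) -> str:
    """Decode a bit string using a prefix-code table mapping codeword -> symbol."""
    symbols = []
    current = ""
    for bit in bits:
        current += bit
        if current in table:  # a complete codeword has been read
            symbols.append(table[current])
            current = ""
    if current:
        raise ValueError("bit stream ends in the middle of a codeword")
    return "".join(symbols)


# Hypothetical prefix code for four symbols (an assumption for illustration).
TABLE = {"0": "h", "10": "g", "110": "f", "111": "e"}

print(decode("0101100111", TABLE))  # hgfhe
```

No delimiter between codewords is needed: the stream splits uniquely as 0 | 10 | 110 | 0 | 111 because of the prefix property.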