WLCG Demonstrator: WLCG storage, Cloud Resources and volatile storage into HTTP/WebDAV-based regional federations

- Slides: 9

WLCG Demonstrator: WLCG storage, Cloud Resources and volatile storage into HTTP/WebDAV-based regional federations

S. Pardi (INFN – Belle-II), GDB, 09 November 2016

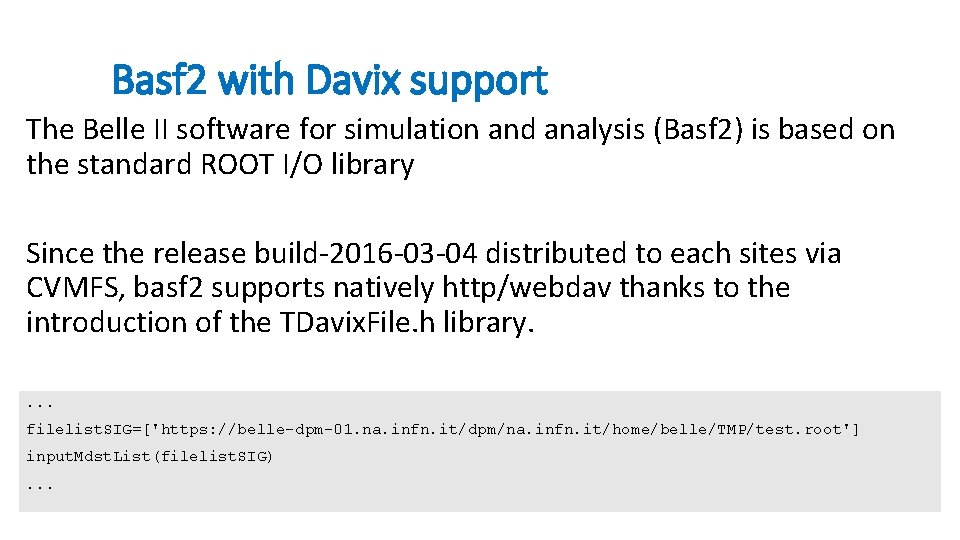

basf2 with Davix support

The Belle II software for simulation and analysis (basf2) is based on the standard ROOT I/O library. Since release build-2016-03-04, distributed to every site via CVMFS, basf2 natively supports HTTP/WebDAV thanks to the introduction of the TDavixFile.h library. An HTTPS URL can therefore be passed directly in the input file list of a steering file:

```python
from modularAnalysis import inputMdstList  # basf2 analysis helpers

# ...
filelistSIG = ['https://belle-dpm-01.na.infn.it/dpm/na.infn.it/home/belle/TMP/test.root']
inputMdstList(filelistSIG)
# ...
```
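The `filelistSIG` entry in the steering snippet is an ordinary Python list of HTTPS URLs. A minimal sketch, assuming the files live under the Napoli DPM namespace used in that example, of generating such a list from relative file names; the helper `https_file_list` is hypothetical and not part of basf2.

```python
from urllib.parse import quote


def https_file_list(host, namespace, relative_paths):
    """Build HTTPS URLs for files under a storage namespace.

    Hypothetical helper for illustration; it only does string handling.
    """
    base = f"https://{host}{namespace.rstrip('/')}"
    # quote() leaves '/' unescaped by default, so directory separators survive
    # while characters such as spaces are percent-encoded.
    return [f"{base}/{quote(p.lstrip('/'))}" for p in relative_paths]


filelistSIG = https_file_list(
    "belle-dpm-01.na.infn.it",
    "/dpm/na.infn.it/home/belle",
    ["TMP/test.root"],
)
print(filelistSIG)
```

The resulting list can be handed to the input module exactly like the hand-written one.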

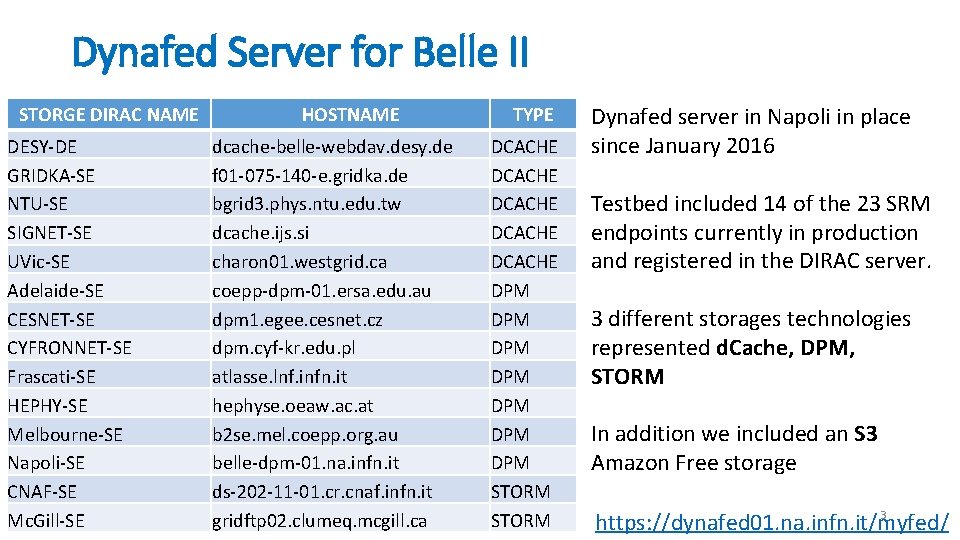

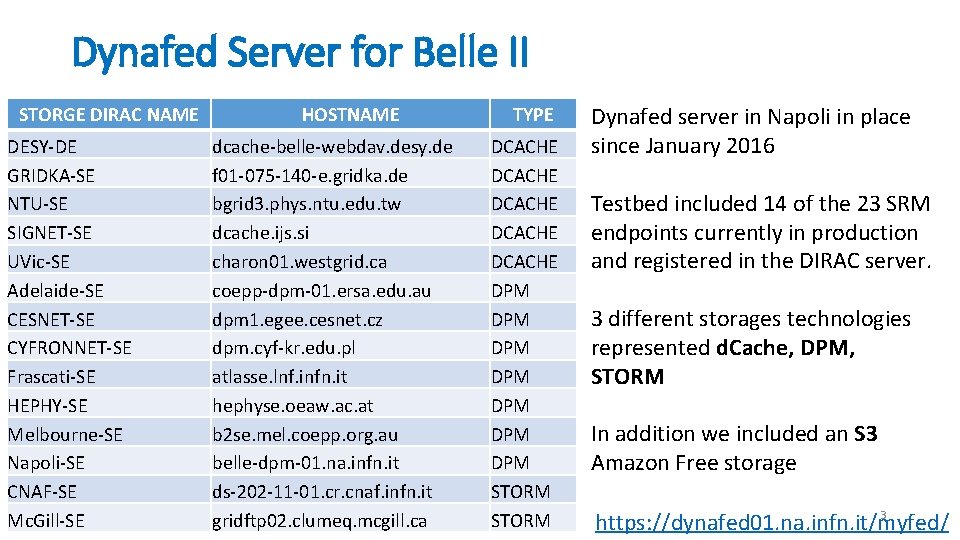

Dynafed Server for Belle II

A Dynafed server has been in place in Napoli since January 2016. The testbed includes 14 of the 23 SRM endpoints currently in production and registered in the DIRAC server. Three different storage technologies are represented: dCache, DPM and StoRM. In addition, we included a free Amazon S3 storage area.

Federation endpoint: https://dynafed01.na.infn.it/myfed/

| Storage (DIRAC name) | Hostname |
|---|---|
| DESY-DE | dcache-belle-webdav.desy.de |
| GRIDKA-SE | f01-075-140-e.gridka.de |
| NTU-SE | bgrid3.phys.ntu.edu.tw |
| SIGNET-SE | dcache.ijs.si |
| UVic-SE | charon01.westgrid.ca |
| Adelaide-SE | coepp-dpm-01.ersa.edu.au |
| CESNET-SE | dpm1.egee.cesnet.cz |
| CYFRONNET-SE | dpm.cyf-kr.edu.pl |
| Frascati-SE | atlasse.lnf.infn.it |
| HEPHY-SE | hephyse.oeaw.ac.at |
| Melbourne-SE | b2se.mel.coepp.org.au |
| Napoli-SE | belle-dpm-01.na.infn.it |
| CNAF-SE | ds-202-11-01.cr.cnaf.infn.it |
| McGill-SE | gridftp02.clumeq.mcgill.ca |
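Because the federation is exposed over plain HTTP/WebDAV, a directory such as the federation root can be listed with a standard WebDAV PROPFIND request (RFC 4918). The sketch below only builds the request and parses a Multi-Status reply; the sample reply is made up for illustration, and any authentication a production endpoint may require is not handled.

```python
import urllib.request
import xml.etree.ElementTree as ET

DAV_NS = "{DAV:}"  # WebDAV XML namespace in ElementTree notation


def propfind_request(url, depth="1"):
    """Build (but do not send) a PROPFIND request listing one collection level."""
    return urllib.request.Request(url, method="PROPFIND", headers={"Depth": depth})


def hrefs_from_multistatus(xml_text):
    """Return the href of every <D:response> in a 207 Multi-Status body."""
    root = ET.fromstring(xml_text)
    return [r.findtext(f"{DAV_NS}href") for r in root.iter(f"{DAV_NS}response")]


# Hypothetical reply, shaped like a WebDAV server's answer for /myfed/.
SAMPLE = """<D:multistatus xmlns:D="DAV:">
  <D:response><D:href>/myfed/</D:href></D:response>
  <D:response><D:href>/myfed/belle/</D:href></D:response>
</D:multistatus>"""

req = propfind_request("https://dynafed01.na.infn.it/myfed/")
print(req.get_method(), hrefs_from_multistatus(SAMPLE))
```

Sending `req` (for example with `urllib.request.urlopen`) would return the real Multi-Status body, which the same parser can process.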

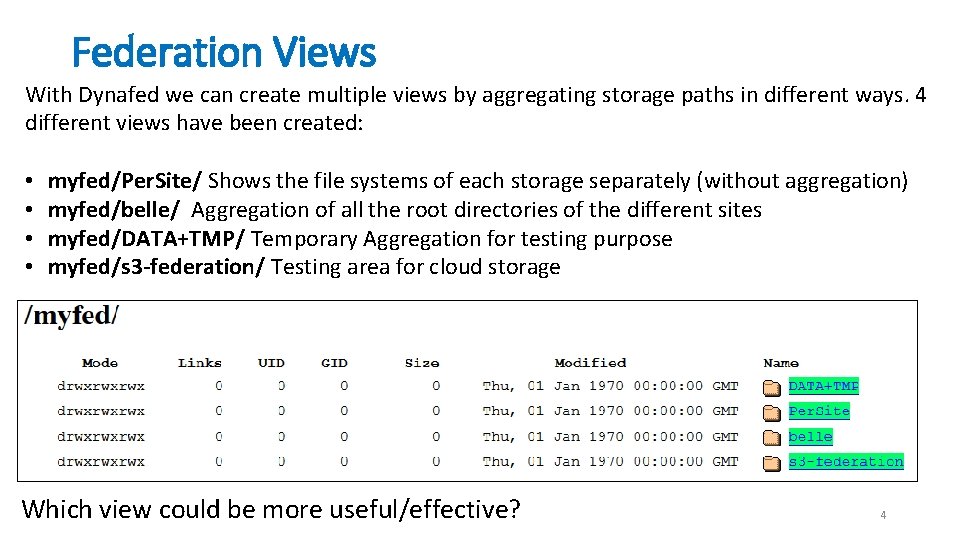

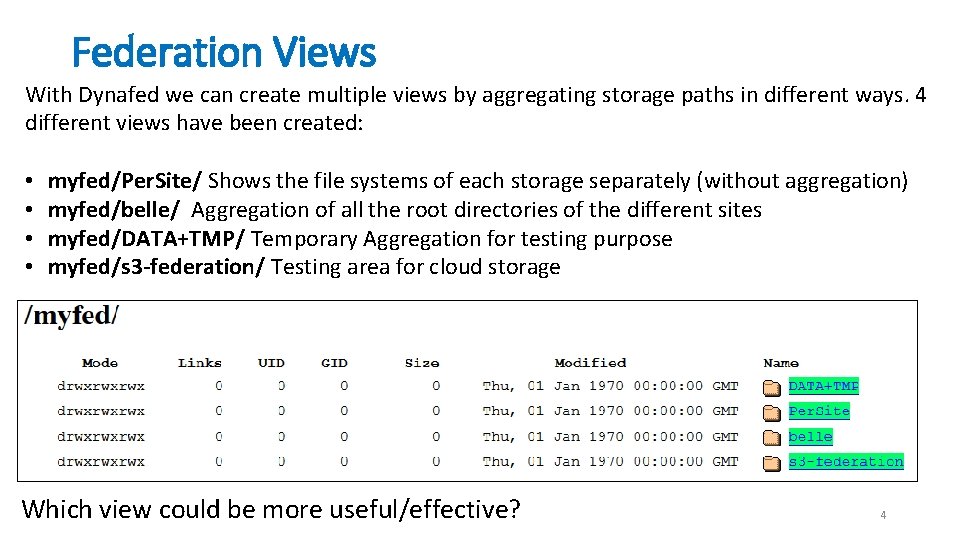

Federation Views

With Dynafed we can create multiple views by aggregating storage paths in different ways. Four different views have been created:

- `myfed/PerSite/`: shows the file system of each storage separately (without aggregation)
- `myfed/belle/`: aggregation of all the root directories of the different sites
- `myfed/DATA+TMP/`: temporary aggregation for testing purposes
- `myfed/s3-federation/`: testing area for cloud storage

Which view could be more useful/effective?
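To make the difference between the per-site and the aggregated views concrete, here is a simplified model of how a path in each view could map to candidate storage URLs. It is a sketch, not Dynafed's implementation: the Napoli prefix comes from the basf2 example, the DESY path is a placeholder, the `PerSite/<site>/` layout is an assumption, and a real federation would additionally check which candidates actually hold the file.

```python
# Site prefixes: Napoli is taken from the slides, DESY's path is a placeholder.
SITES = {
    "Napoli-SE": "https://belle-dpm-01.na.infn.it/dpm/na.infn.it/home/belle",
    "DESY-DE": "https://dcache-belle-webdav.desy.de/path/to/belle",  # hypothetical
}


def resolve(fed_path, sites=SITES):
    """Map a federation path to candidate storage URLs (simplified model)."""
    parts = fed_path.strip("/").split("/")
    if parts[:2] == ["myfed", "PerSite"]:
        # Per-site view: the third component selects exactly one storage.
        site, rest = parts[2], "/".join(parts[3:])
        return [f"{sites[site]}/{rest}"]
    if parts[:2] == ["myfed", "belle"]:
        # Aggregated view: the same relative path is looked up at every site.
        rest = "/".join(parts[2:])
        return [f"{prefix}/{rest}" for prefix in sites.values()]
    raise ValueError(f"unknown view: {fed_path}")


print(resolve("/myfed/belle/TMP/test.root"))
```

The aggregated view trades a single namespace for extra lookups at every site, while the per-site view keeps one lookup per request.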

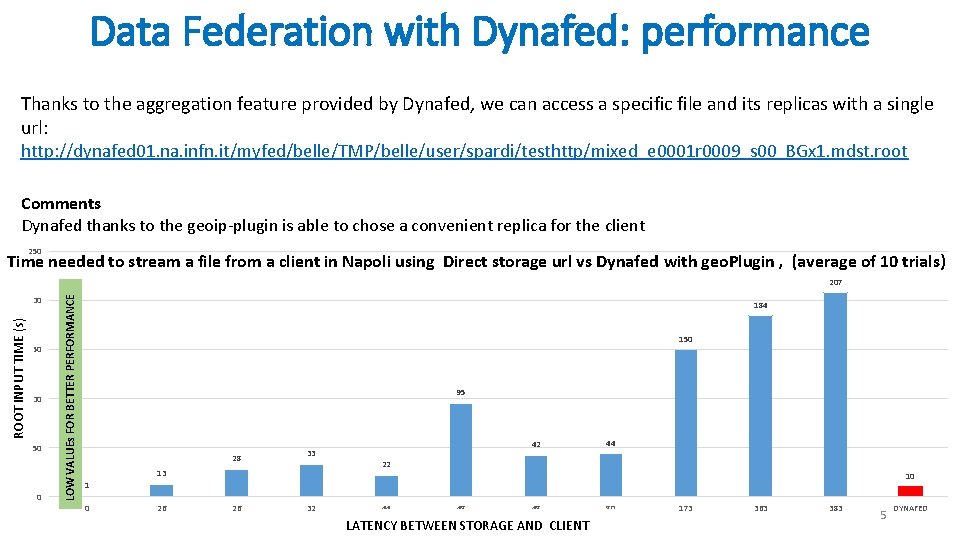

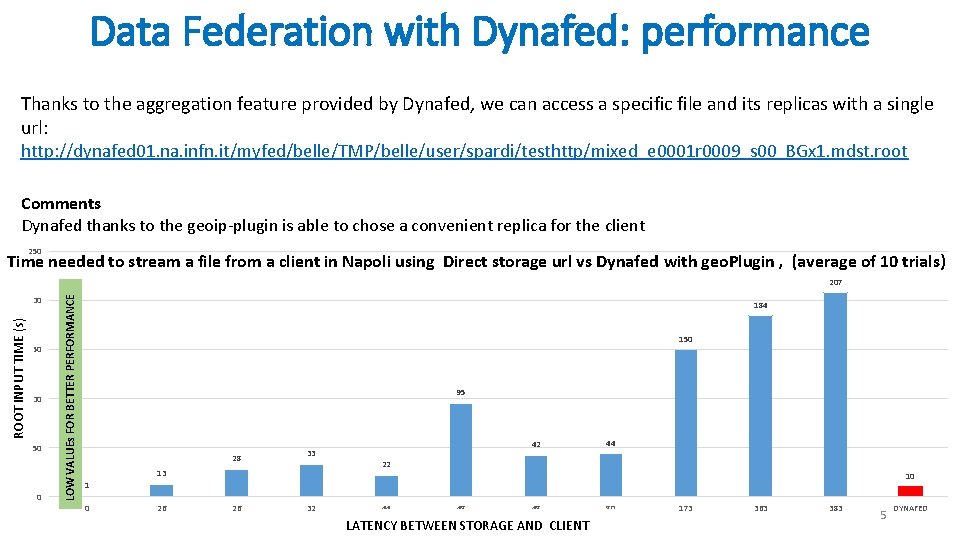

Data Federation with Dynafed: performance

Thanks to the aggregation feature provided by Dynafed, we can access a specific file and all its replicas through a single URL:

http://dynafed01.na.infn.it/myfed/belle/TMP/belle/user/spardi/testhttp/mixed_e0001r0009_s00_BGx1.mdst.root

Comments: thanks to the GeoIP plugin, Dynafed is able to choose a convenient replica for the client.

Figure: time needed to stream a file from a client in Napoli using the direct storage URL vs Dynafed with the geoPlugin (average of 10 trials). Y axis: RootInput time (s), lower values mean better performance; x axis: latency between storage and client.
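A simplified model of distance-based replica selection: assume client and storage IP addresses have already been geolocated (here replaced by hard-coded, approximate coordinates) and replicas are ordered by great-circle distance from the client. This illustrates the idea behind a GeoIP-based choice; it is not the plugin's code, and geographic distance is only a proxy for the network latency plotted above.

```python
import math

# Approximate (lat, lon) pairs: hypothetical stand-ins for a GeoIP lookup.
CLIENT_NAPOLI = (40.85, 14.27)
REPLICAS = {
    "belle-dpm-01.na.infn.it": (40.85, 14.27),
    "dcache-belle-webdav.desy.de": (53.55, 9.99),
    "b2se.mel.coepp.org.au": (-37.81, 144.96),
}


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points."""
    r = 6371.0  # mean Earth radius, km
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def sort_replicas(client, replicas):
    """Return replica hosts ordered from nearest to farthest from the client."""
    return sorted(replicas, key=lambda host: haversine_km(*client, *replicas[host]))


print(sort_replicas(CLIENT_NAPOLI, REPLICAS))
```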

Further Investigations

- Cloud and heterogeneous resources
  - Aggregate cloud storage areas provided via a Swift interface by sites running OpenStack or similar
  - Investigate performance with cloud resources
- Other interesting topics
  - HTTP interface monitoring using the WLCG Nagios probe
  - Implementation and integration of cache systems

Backup: Tests with HTTP

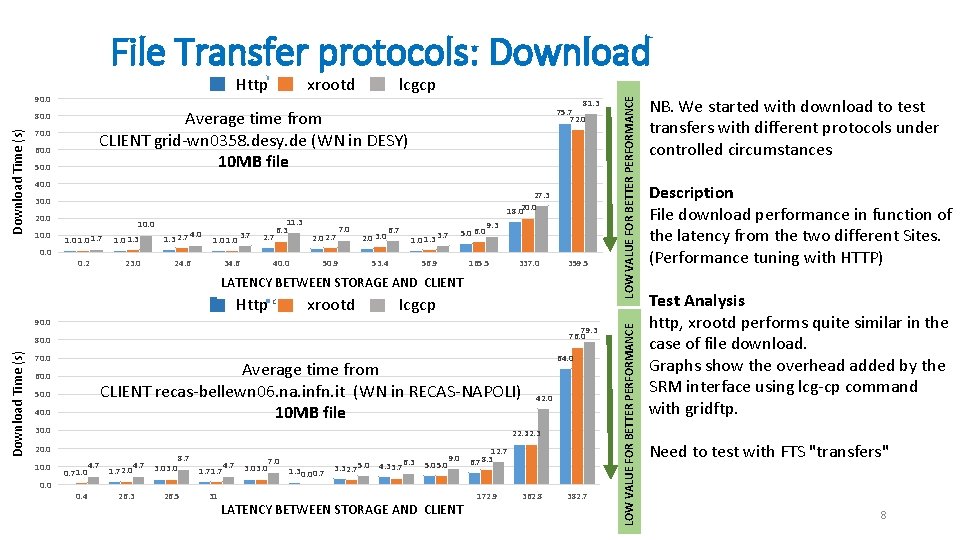

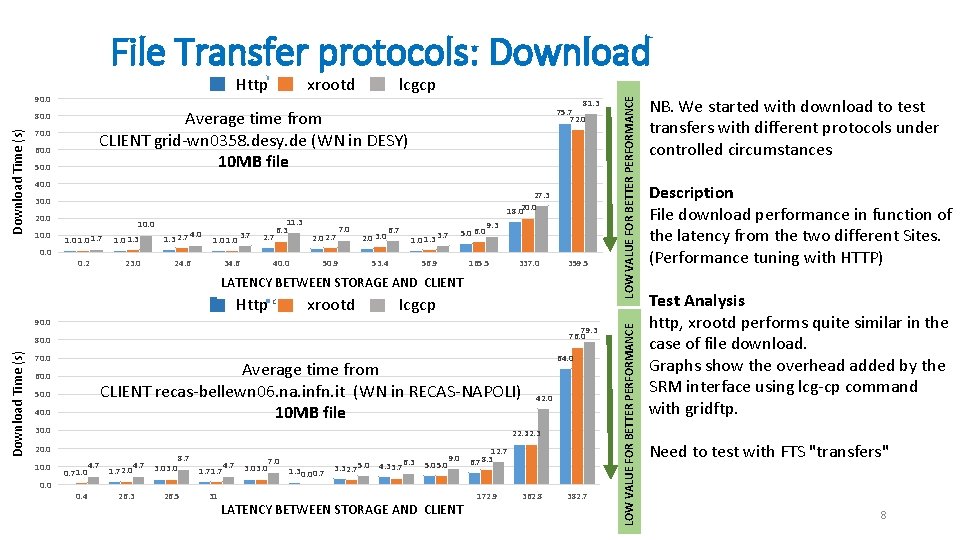

File Transfer protocols: Download

Figure: average time (s) to download a 10 MB file as a function of the latency between storage and client, using HTTP (curl), xrootd (xrdcp) and lcg-cp; lower values mean better performance. Two panels: client recas-bellewn06.na.infn.it (WN in RECAS-NAPOLI) and client grid-wn0358.desy.de (WN in DESY).

NB: we started with downloads to test transfers with different protocols under controlled circumstances.

Description: file download performance as a function of the latency, measured from two different sites (performance tuning with HTTP).

Test analysis: HTTP and xrootd perform quite similarly for file download. The graphs show the overhead added by the SRM interface when using the lcg-cp command with GridFTP. Tests with FTS transfers are still needed.
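The download comparison amounts to timing the same transfer repeatedly with each client tool and averaging. A minimal harness, assuming each transfer is wrapped in a Python callable; `average_time` is a hypothetical helper, not the script used to produce the plots.

```python
import statistics
import time


def average_time(action, trials=10):
    """Run `action` `trials` times; return (mean, stdev) of wall-clock seconds."""
    durations = []
    for _ in range(trials):
        start = time.perf_counter()
        action()
        durations.append(time.perf_counter() - start)
    # stdev needs at least two samples; report zero spread for a single run.
    spread = statistics.stdev(durations) if len(durations) > 1 else 0.0
    return statistics.mean(durations), spread


# Example with a trivial action; a real test would wrap a transfer, e.g.
# lambda: subprocess.run(["curl", "-s", "-o", "/dev/null", url], check=True)
mean_s, stdev_s = average_time(lambda: sum(range(1000)), trials=5)
print(f"{mean_s:.6f} s +/- {stdev_s:.6f} s")
```

Keeping the transfer command outside the harness lets curl, xrdcp and lcg-cp be timed with identical bookkeeping.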

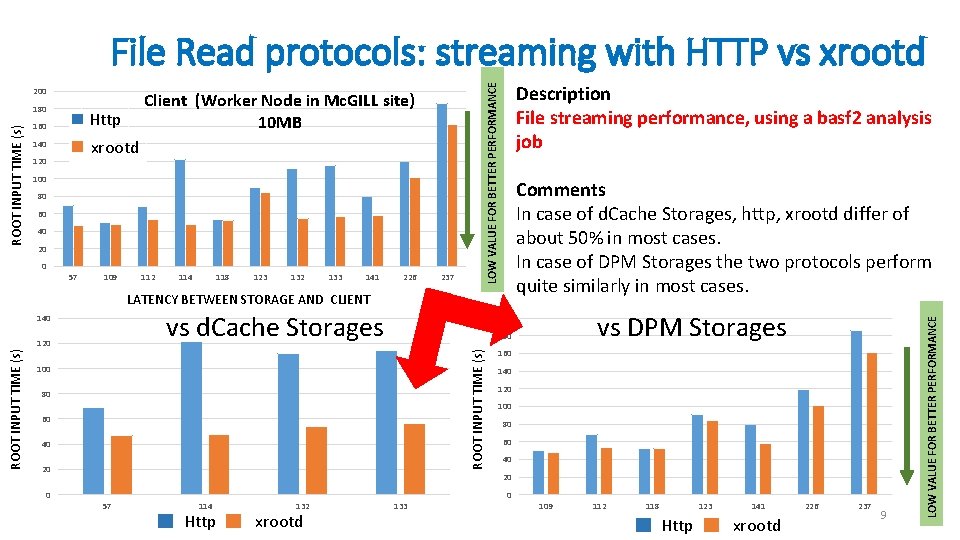

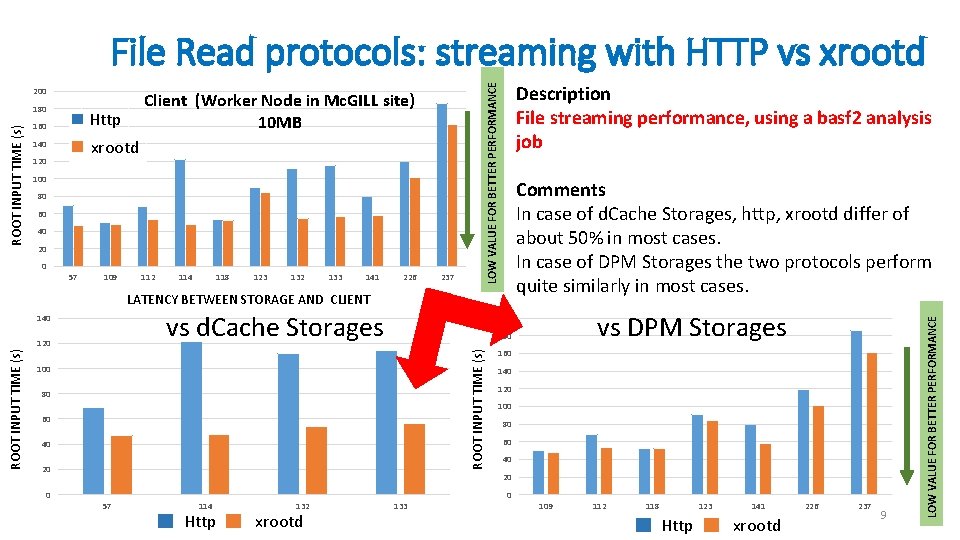

File Read protocols: streaming with HTTP vs xrootd

Figure: RootInput time (s) for streaming a 10 MB file with HTTP and xrootd from a client on a worker node at the McGill site, as a function of the latency between storage and client; lower values mean better performance. Two panels: vs dCache storages and vs DPM storages.

Description: file streaming performance, using a basf2 analysis job.

Comments: for dCache storages, HTTP and xrootd differ by about 50% in most cases. For DPM storages, the two protocols perform quite similarly in most cases.
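The slides do not state how the "about 50%" difference is computed. One plausible reading, assumed here, is the excess of the slower protocol's time over the faster one, relative to the faster. With made-up times of 150 s and 100 s this gives 50%.

```python
def relative_difference(t_http, t_xrootd):
    """Percentage by which the slower protocol's time exceeds the faster one's."""
    fast, slow = sorted((t_http, t_xrootd))
    return 100.0 * (slow - fast) / fast


print(relative_difference(150.0, 100.0))
```

Using the faster time as the reference keeps the result symmetric in the two arguments, so the sign does not depend on which protocol wins.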