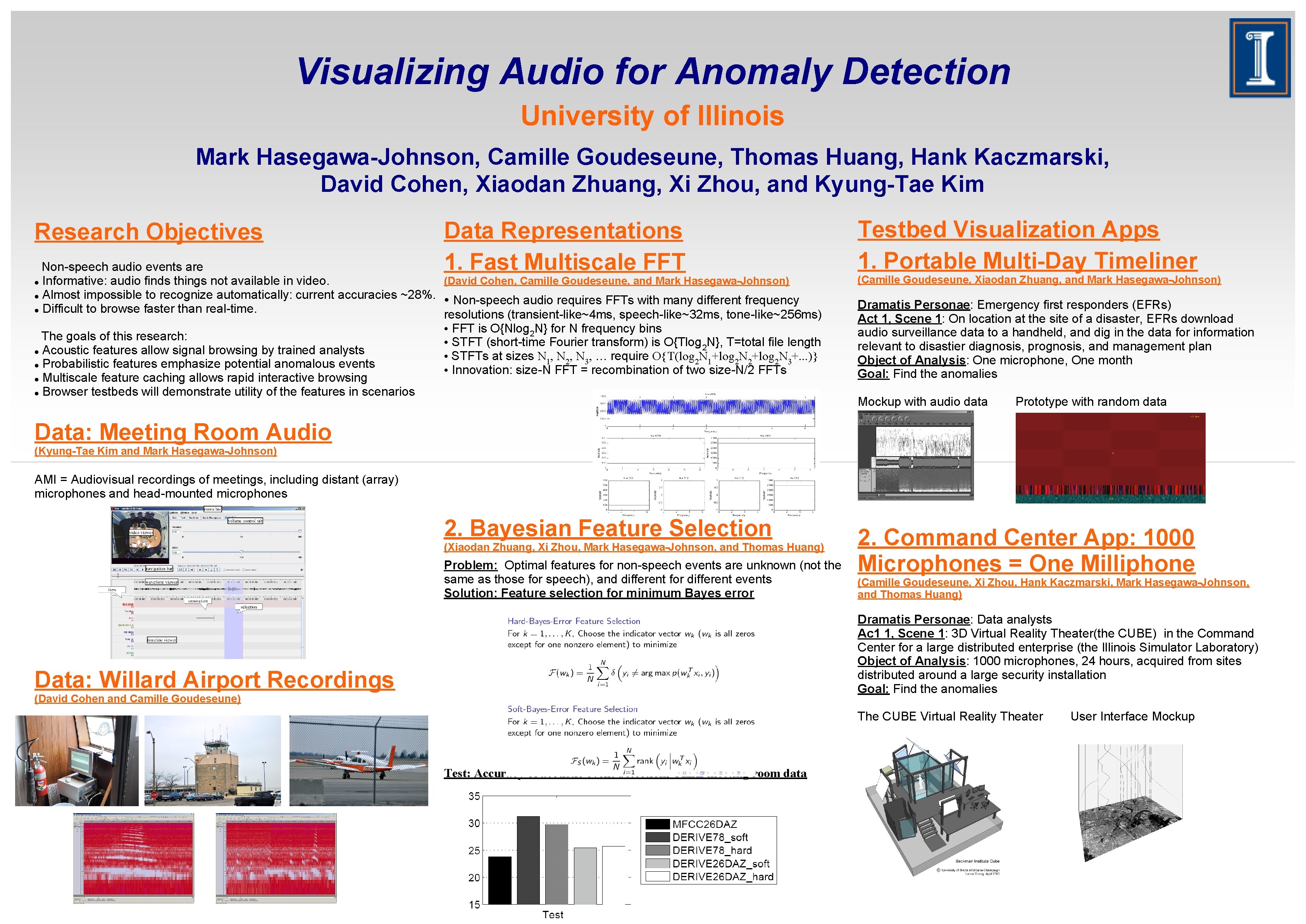

Visualizing Audio for Anomaly Detection

University of Illinois


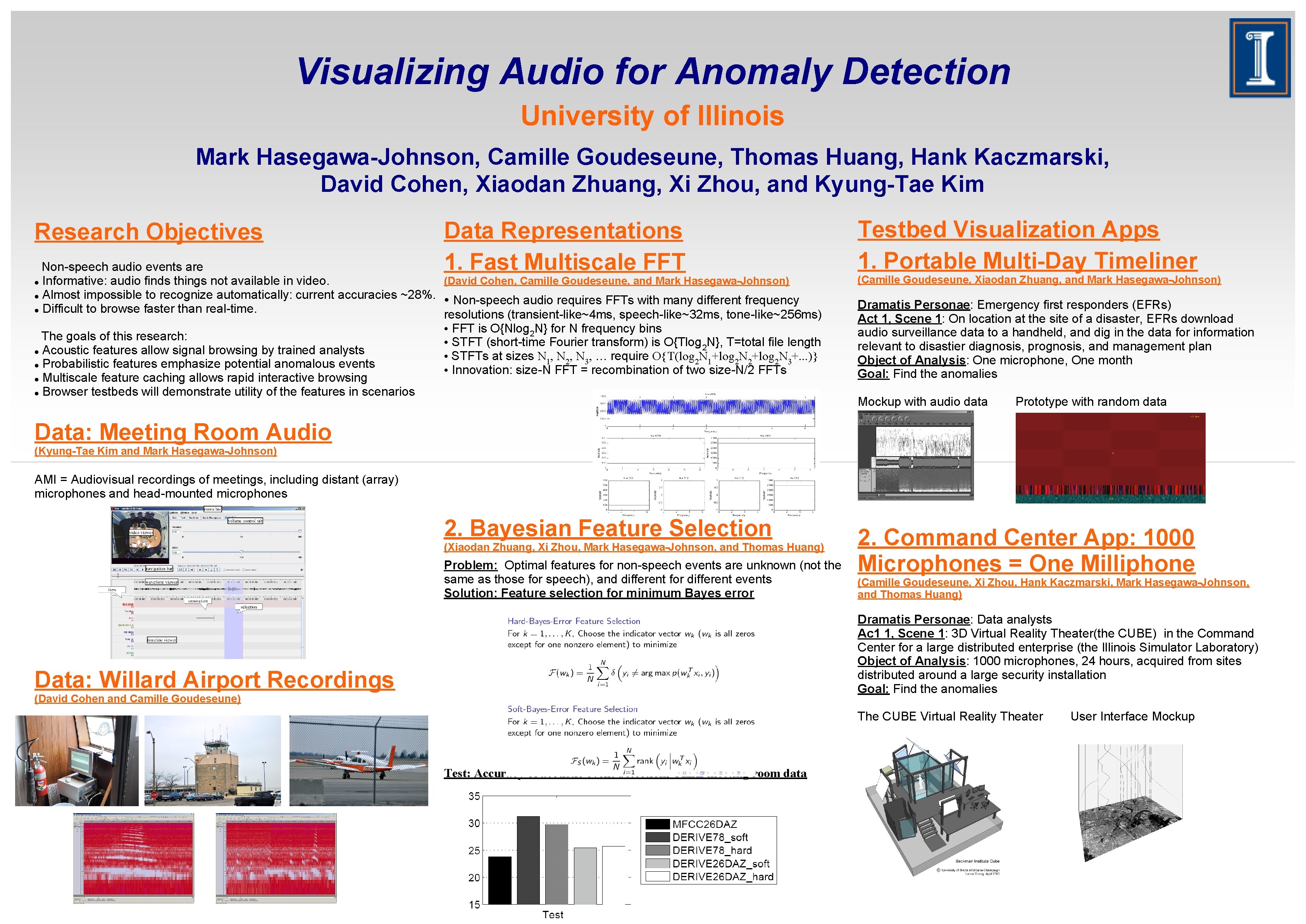

Mark Hasegawa-Johnson, Camille Goudeseune, Thomas Huang, Hank Kaczmarski, David Cohen, Xiaodan Zhuang, Xi Zhou, and Kyung-Tae Kim

Research Objectives

Non-speech audio events are:

- Informative: audio finds things that are not available in video.
- Almost impossible to recognize automatically: current accuracies are about 28%.
- Difficult to browse faster than real time.

The goals of this research:

- Acoustic features allow trained analysts to browse the signal.
- Probabilistic features emphasize potentially anomalous events.
- Multiscale feature caching allows rapid interactive browsing.
- Browser testbeds will demonstrate the utility of the features in the scenarios below.

Data Representations

1. Fast Multiscale FFT (David Cohen, Camille Goudeseune, and Mark Hasegawa-Johnson)
   - Non-speech audio requires FFTs at many different frequency resolutions (transient-like ~4 ms, speech-like ~32 ms, tone-like ~256 ms).
   - An FFT costs $O(N \log_2 N)$ for $N$ frequency bins.
   - An STFT (short-time Fourier transform) costs $O(T \log_2 N)$, where $T$ is the total file length: assuming non-overlapping frames, there are $T/N$ frames, each costing $O(N \log_2 N)$.
   - STFTs at sizes $N_1, N_2, N_3, \ldots$ together cost $O\big(T(\log_2 N_1 + \log_2 N_2 + \log_2 N_3 + \cdots)\big)$.
   - Innovation: a size-$N$ FFT is computed as a recombination of two size-$N/2$ FFTs.
2. Bayesian Feature Selection (Xiaodan Zhuang, Xi Zhou, Mark Hasegawa-Johnson, and Thomas Huang)
   - Problem: the optimal features for non-speech events are unknown; they are not the same as those for speech, and they differ from one event type to another.
   - Solution: select the features that minimize Bayes error.
   - Test: accuracy of acoustic event detection on CHIL meeting-room data.

Testbed Visualization Apps

1. Portable Multi-Day Timeliner (Camille Goudeseune, Xiaodan Zhuang, and Mark Hasegawa-Johnson)
   - Dramatis Personae: emergency first responders (EFRs).
   - Act 1, Scene 1: On location at the site of a disaster, EFRs download audio surveillance data to a handheld device and dig into the data for information relevant to disaster diagnosis, prognosis, and the management plan.
   - Object of Analysis: one microphone, one month.
   - Goal: find the anomalies.
   - Figures: mockup with audio data; prototype with random data.

Data: Meeting Room Audio (Kyung-Tae Kim and Mark Hasegawa-Johnson)

- AMI: audiovisual recordings of meetings, including distant (array) microphones and head-mounted microphones.

2. Command Center App: 1000 Microphones = One Milliphone (Camille Goudeseune, Xi Zhou, Hank Kaczmarski, Mark Hasegawa-Johnson, and Thomas Huang)
   - Dramatis Personae: data analysts.
   - Act 1, Scene 1: the 3D virtual reality theater (the CUBE) in the command center for a large distributed enterprise (the Illinois Simulator Laboratory).
   - Object of Analysis: 1000 microphones, 24 hours, acquired from sites distributed around a large security installation.
   - Goal: find the anomalies.
   - Figures: the CUBE virtual reality theater; user interface mockup.

Data: Willard Airport Recordings (David Cohen and Camille Goudeseune)
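The Fast Multiscale FFT item states that a size-N FFT is a recombination of two size-N/2 FFTs, but it does not say how the two halves are formed. As an illustration only, the sketch below assumes the textbook radix-2 decimation-in-time split, in which the two half-size FFTs are taken over the even- and odd-indexed samples and merged with one butterfly per pair of output bins. The function names `dft`, `combine_halves`, and `fft` are hypothetical and do not come from the authors' code; this is not a reproduction of their multiscale caching scheme.

```python
import cmath


def dft(x):
    """Direct O(N^2) DFT, used only as a reference to check the fast version."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def combine_halves(even_fft, odd_fft):
    """Merge two size-N/2 spectra into one size-N spectrum (radix-2 DIT butterfly).

    Assumption (not stated in the source): even_fft is the FFT of
    x[0], x[2], ... and odd_fft is the FFT of x[1], x[3], ...
    """
    half = len(even_fft)
    if len(odd_fft) != half:
        raise ValueError("half-size spectra must have equal length")
    n = 2 * half
    out = [0j] * n
    for k in range(half):
        # Twiddle factor W_N^k = exp(-2*pi*i*k/N) applied to the odd half.
        t = cmath.exp(-2j * cmath.pi * k / n) * odd_fft[k]
        out[k] = even_fft[k] + t
        out[k + half] = even_fft[k] - t
    return out


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    if n == 1:
        return [complex(x[0])]
    # Each size-N transform is built from two size-N/2 transforms.
    return combine_halves(fft(x[0::2]), fft(x[1::2]))


if __name__ == "__main__":
    signal = [0.0, 1.0, 0.0, -1.0, 0.5, 0.25, -0.5, 0.0]
    error = max(abs(a - b) for a, b in zip(fft(signal), dft(signal)))
    print(error < 1e-9)  # True
```

Each recursion level performs $N/2$ butterflies and there are $\log_2 N$ levels, which is where the $O(N \log_2 N)$ cost of a single FFT comes from.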