Rhythmic Transcription of MIDI Signals Carmine Casciato MUMT

- Slides: 16

Rhythmic Transcription of MIDI Signals Carmine Casciato MUMT 611 Thursday, February 10, 2005

Uses of Rhythmic Transcription

- Automatic scoring
  - Improvisation
- Score following
  - Triggering of audio/visual components
- Performance
- Audio classification and retrieval
  - Genre classification
  - Ethnomusicology considerations
  - Sample database management

MIDI Signals

- Unidirectional message stream at 31.25 kbaud (31,250 bits per second)
- System Real Time messages provide the Timing Tick message
- A simplification of acoustic signals
  - No noise, no masking effects
- Note onsets, offsets, velocities and pitches are easily retrieved
- However, no knowledge of the acoustic properties of the sound
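As an illustration of how directly onsets, offsets, velocities and pitches can be read from MIDI, here is a minimal Python sketch. It assumes the events have already been received and timestamped as `(time_s, status, data1, data2)` tuples; that tuple layout and the name `extract_notes` are hypothetical, since the wire stream itself carries no timestamps. It relies on two facts of the MIDI protocol: the high nibble of the status byte is `0x9` for Note On and `0x8` for Note Off, and a Note On with velocity 0 is treated as a Note Off.

```python
NOTE_ON = 0x90   # high nibble of a Note On status byte
NOTE_OFF = 0x80  # high nibble of a Note Off status byte


def extract_notes(events):
    """Pair Note On / Note Off events into notes.

    events: time-ordered iterable of (time_s, status, data1, data2),
            a hypothetical layout chosen for this sketch.
    Returns a list of dicts with pitch, velocity, onset and offset.
    """
    active = {}  # (channel, pitch) -> (onset time, velocity)
    notes = []
    for time_s, status, pitch, velocity in events:
        kind = status & 0xF0      # message type
        channel = status & 0x0F   # MIDI channel 0-15
        key = (channel, pitch)
        if kind == NOTE_ON and velocity > 0:
            active[key] = (time_s, velocity)
        elif kind == NOTE_OFF or (kind == NOTE_ON and velocity == 0):
            # A Note On with velocity 0 counts as a Note Off.
            if key in active:
                onset, onset_velocity = active.pop(key)
                notes.append({
                    "pitch": pitch,
                    "velocity": onset_velocity,
                    "onset": onset,
                    "offset": time_s,
                })
    return notes
```

The onset times of the returned notes are the raw material for everything that follows: inter-onset intervals, beat induction and tempo tracking.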

Difficulties in Rhythmic Transcription

- Expressive performance vs mechanical performance
- Inexact performance of notes
  - Syncopations
  - Silences
  - Grace notes
- Robustness of beat tracker
  - Can the tracker recover from incorrect beat induction?
- Real time implementation

Human Limits of Rhythmic Perception

- Two note onsets are deemed synchronous when played within 40 ms of each other, 70 ms for more than two notes
- Piano and orchestral performances exhibit note onset asynchronicity of 30-50 ms
- Note onset differences of 50 ms to 2 s give rhythmic information
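One way these thresholds might be used in preprocessing is to merge near-simultaneous onsets into a single rhythmic event before any beat tracking. The Python sketch below is an assumption about how to apply the 40 ms and 70 ms figures, not a method taken from the cited papers: a second onset joins a group if it falls within 40 ms of the group's first onset, and further onsets join if they fall within 70 ms of it. The name `group_onsets` and its parameters are hypothetical.

```python
def group_onsets(onsets, pair_window=0.040, chord_window=0.070):
    """Group onset times (seconds) that would be heard as simultaneous.

    pair_window:  limit for a second note joining a single note (40 ms).
    chord_window: limit once the group already holds two or more notes (70 ms).
    Both are measured from the first onset of the group.
    """
    groups = []
    for t in sorted(onsets):
        if groups:
            current = groups[-1]
            window = pair_window if len(current) == 1 else chord_window
            if t - current[0] <= window:
                current.append(t)
                continue
        groups.append([t])
    return groups


chords = group_onsets([0.0, 0.03, 0.06, 0.5, 0.55])
print(chords)  # [[0.0, 0.03, 0.06], [0.5], [0.55]]
```

Each group can then be reduced to one time, for example its first onset, so that a slightly spread chord contributes a single event to the rhythm.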

Evaluation Criteria for Beat Trackers

- Informally: a click track of the reported beats is added to the signal
- Visually marking the reported beats
- Comparing reported beats against known, correct beats
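The third criterion, comparing reported beats against known correct beats, can be sketched as a tolerance-window match. This is a generic illustration in Python, not the error metric of any paper cited here; the function name `match_beats` and the 70 ms default tolerance are assumptions made for the example.

```python
def match_beats(reported, known, tolerance=0.070):
    """Greedily match reported beat times to known beat times (seconds).

    A known beat counts as found if an unused reported beat lies within
    `tolerance` of it. Returns (hits, false_positives, misses).
    """
    reported = sorted(reported)
    known = sorted(known)
    hits = 0
    i = 0  # index of the next unused reported beat
    for k in known:
        # Skip reported beats that are too early to match this known beat.
        while i < len(reported) and reported[i] < k - tolerance:
            i += 1
        if i < len(reported) and abs(reported[i] - k) <= tolerance:
            hits += 1
            i += 1
    return hits, len(reported) - hits, len(known) - hits


print(match_beats([0.52, 1.0, 1.49, 2.3], [0.5, 1.0, 1.5, 2.0]))  # (3, 1, 1)
```

The three counts can be turned into precision and recall if a single figure of merit is needed.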

Definitions (Dixon 2001)

- Beat - “perceived pulses which are approximately equally spaced and define the rate at which notes in a piece are played”
- Metrical, score and performance levels
- Tempo - beats per minute
- Inter-onset intervals (IOI) - time intervals between note onsets
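The last two definitions translate directly into arithmetic. As a small Python sketch (the function names are chosen for this example), IOIs are the differences between successive onset times, and a beat period in seconds converts to tempo as 60 divided by the period.

```python
def inter_onset_intervals(onsets):
    """Return the time intervals between successive note onsets (seconds)."""
    onsets = sorted(onsets)
    return [later - earlier for earlier, later in zip(onsets, onsets[1:])]


def tempo_bpm(beat_period_s):
    """Convert a beat period in seconds to tempo in beats per minute."""
    return 60.0 / beat_period_s


print(inter_onset_intervals([0.0, 0.5, 1.0, 2.0]))  # [0.5, 0.5, 1.0]
print(tempo_bpm(0.5))                               # 120.0
```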

Approaches - Probabilistic Frameworks

- Cemgil et al. (2000) - Bayesian framework, using a tempogram (wavelet) and a 10th-order Kalman filter to estimate tempo, which is a hidden state variable
- Takeda et al. (2002) - Hidden Markov models for fluctuating note lengths and note sequences, estimating both rhythms and tempo
- Raphael (2002) - tempo and rhythm

Approaches - Oscillators

- Period and phase that adjust themselves to synchronize to IOI input
- Dannenberg and Allen (1990) - weighted IOIs and credibility evaluation based on past input
- Meudic (2002) - real time implementation of Dixon
  - Induce several beats and attempt to propagate them through the signal (agents), then choose the best
- Pardo (2004) - oscillator, compared to Cemgil using the same corpus

Pardo

- Is a Kalman filter (Cemgil) or an oscillator better for online tempo tracking?
- Performance as a time series of weights, W, over T time steps
- Weight of a time step with no note onsets = 0, increased in proportion to the number of note onsets
- 100 ms is the minimum IOI allowed and the minimum beat period
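The weight series W might be built as in the following Python sketch. The step size, the function name `weight_series`, and the choice of a proportionality constant of 1 (so each weight is simply the onset count) are assumptions for illustration; the slides do not give these details.

```python
def weight_series(onsets, step=0.010, total_steps=None):
    """Build a weight per time step from onset times (seconds).

    A step with no onsets has weight 0; otherwise the weight equals the
    number of onsets that fall on that step (constant of proportionality 1).
    """
    indices = [int(round(t / step)) for t in onsets]
    if total_steps is None:
        total_steps = max(indices) + 1 if indices else 0
    weights = [0] * total_steps
    for i in indices:
        if i < total_steps:
            weights[i] += 1
    return weights


# Two simultaneous onsets at 0.0 s, then single onsets at 0.3 s and 0.5 s.
print(weight_series([0.0, 0.0, 0.3, 0.5], step=0.1))  # [2, 0, 0, 1, 0, 1]
```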

Pardo

- Uses a weighted average of the last 20 beat periods, with one parameter varying the degree of smoothing
- A correction parameter varies how far the period and phase of the next predicted beat are changed according to known information
- A window size parameter affects how many periods may affect the current prediction
- Chose 5000 random values of these three parameters, ran each triplet on 99 performances of the Cemgil corpora
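To make the roles of the three parameters concrete, here is a simplified single-oscillator tracker in Python. It is a sketch under stated assumptions, not Pardo's published algorithm: the exponential weighting controlled by `smoothing`, the fixed phase `tolerance`, and all function and parameter names are hypothetical, and the whole onset list is passed in at once rather than processed online.

```python
def smoothed_period(periods, smoothing):
    """Weighted average of periods; the most recent has weight 1,
    the one before it `smoothing`, then smoothing**2, and so on."""
    weights = [smoothing ** k for k in range(len(periods))]
    total = sum(w * p for w, p in zip(weights, reversed(periods)))
    return total / sum(weights)


def track_beats(onsets, initial_period, smoothing=0.8, correction=0.5,
                window_size=20, tolerance=0.25, min_period=0.100):
    """Predict beat times (seconds) from onset times with one oscillator.

    smoothing:   how strongly older periods count in the average.
    correction:  fraction of the prediction error applied to the next beat.
    window_size: number of past periods that may affect the prediction.
    tolerance:   fraction of a period within which an onset may pull the
                 predicted beat (an assumption of this sketch).
    min_period:  minimum beat period, 100 ms as stated on the slide.
    """
    onsets = sorted(onsets)
    if not onsets:
        return []
    period = max(initial_period, min_period)
    periods = [period]
    beats = [onsets[0]]  # assume the first onset falls on a beat
    while beats[-1] + period <= onsets[-1] + tolerance * period:
        predicted = beats[-1] + period
        nearest = min(onsets, key=lambda t: abs(t - predicted))
        error = nearest - predicted
        if abs(error) <= tolerance * period:
            beat = predicted + correction * error  # partial phase correction
        else:
            beat = predicted  # no onset nearby: keep the prediction
        periods.append(max(beat - beats[-1], min_period))
        periods = periods[-window_size:]
        period = smoothed_period(periods, smoothing)
        beats.append(beat)
    return beats
```

With `correction=0` the oscillator ignores the performance entirely, and with `correction=1` it jumps to every nearby onset; values in between trade stability against responsiveness, which is the kind of trade-off a random parameter search would explore.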

Cemgil MIDI/Piano Corpora

- Four professional jazz, four professional classical and three amateur piano players
- Yesterday and Michelle, at fast, slow and normal tempi, on a Yamaha Disklavier
- Available at www.nici.kun.nl/mmm/

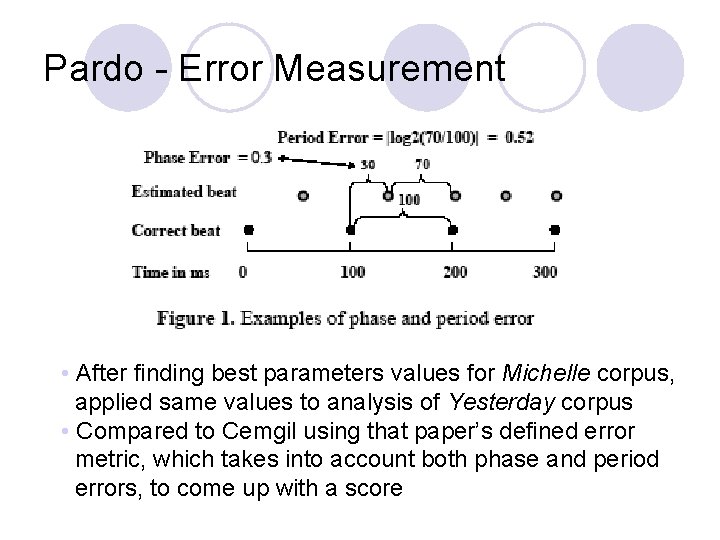

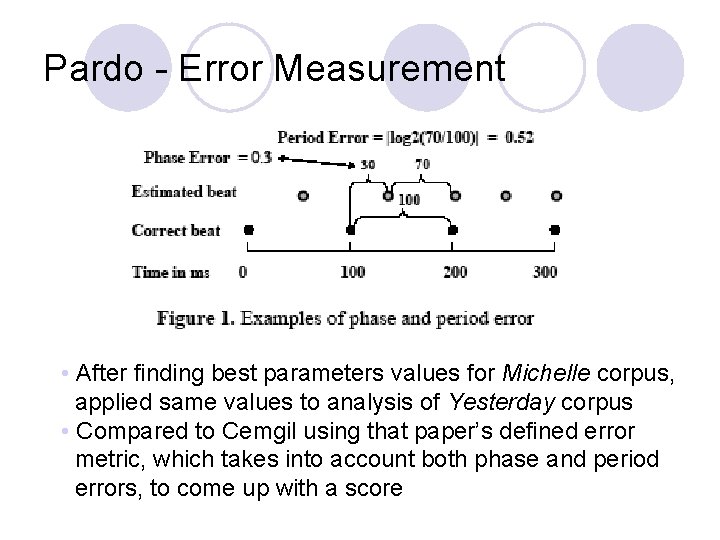

Pardo - Error Measurement

- After finding the best parameter values for the Michelle corpus, applied the same values to the analysis of the Yesterday corpus
- Compared to Cemgil using that paper’s defined error metric, which takes into account both phase and period errors, to come up with a score

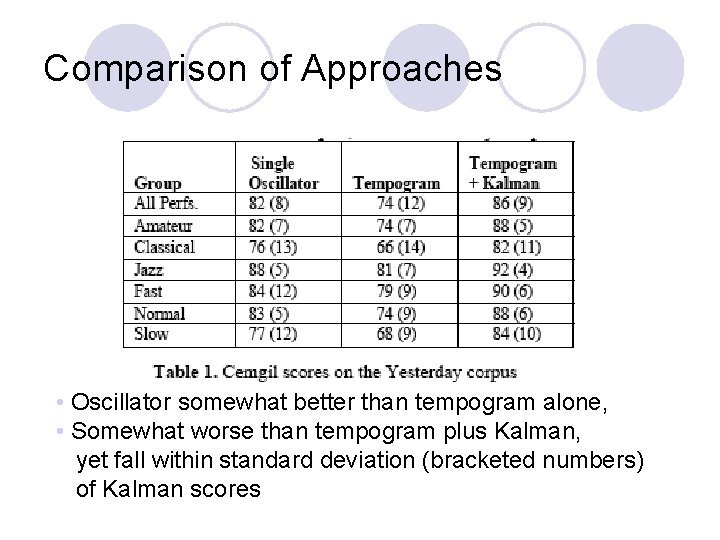

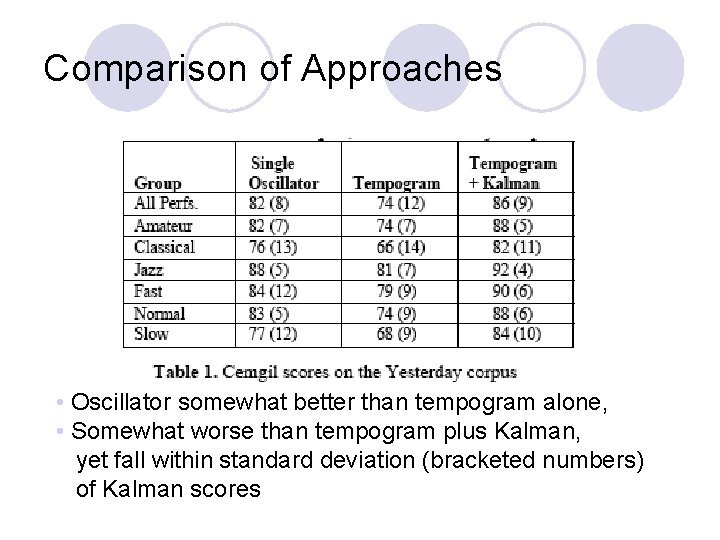

Comparison of Approaches

- Oscillator somewhat better than tempogram alone
- Somewhat worse than tempogram plus Kalman, yet its scores fall within the standard deviation (bracketed numbers) of the Kalman scores

Other Considerations

- Stylistic information
  - Training of tracker
- Musical importance of note
  - Duration
  - Pitch
  - Velocity

Bibliography

- Allen, Paul and Roger Dannenberg. 1990. Tracking Musical Beats in Real Time. In Proc. of the ICMC 1990, 140-143.
- Dixon, Simon. 2001. Automatic extraction of tempo and beat from expressive performances. In Journal of New Music Research, 30, 1, 39-58.
- Meudic, Benoit. 2002. A causal algorithm for beat-tracking. 2nd Conference Understanding and Creating Music.
- Pardo, Bryan. 2004. Tempo tracking with a single oscillator. ISMIR 2004.
- Raphael, Christopher. 2002. A hybrid graphical model for rhythmic parsing. In Artificial Intelligence, 137, 217-238.
- Takeda, Haruto, et al. 2002. Hidden Markov model for automatic transcription of MIDI signals. In Proc. of Multimedia Signal Processing Workshop 2002.
