Planning Evaluations for Discovery Research K-12 (DR K-12)

- Slides: 16

Planning Evaluations for Discovery Research K-12 (DR K-12) Design Projects

Dr. Kathleen Haynie, Haynie Research and Evaluation

November 12, 2010

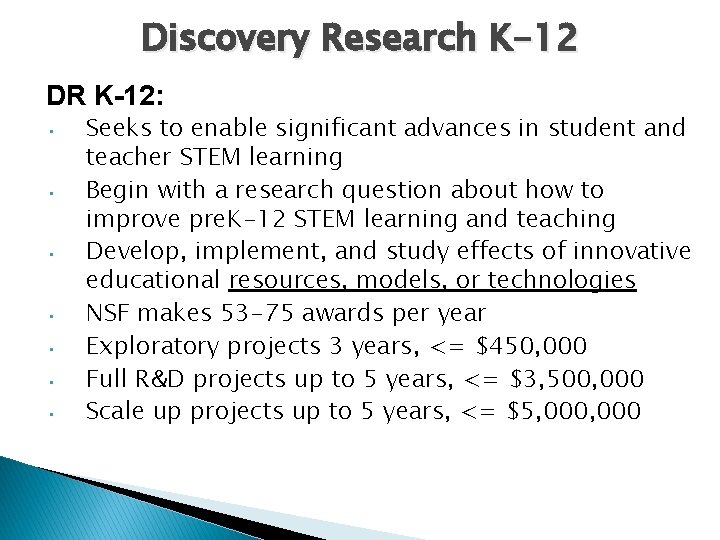

Discovery Research K-12 (DR K-12):

- Seeks to enable significant advances in student and teacher STEM learning
- Begin with a research question about how to improve pre-K-12 STEM learning and teaching
- Develop, implement, and study effects of innovative educational resources, models, or technologies
- NSF makes 53-75 awards per year
  - Exploratory projects: 3 years, <= $450,000
  - Full R&D projects: up to 5 years, <= $3,500,000
  - Scale-up projects: up to 5 years, <= $5,000

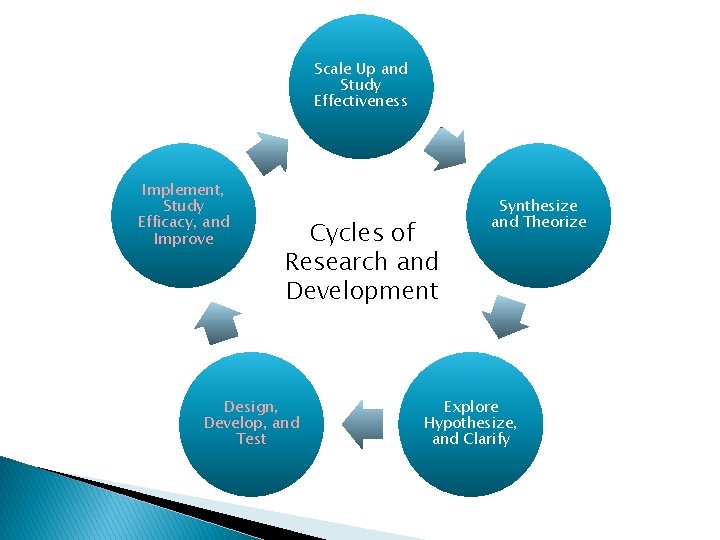

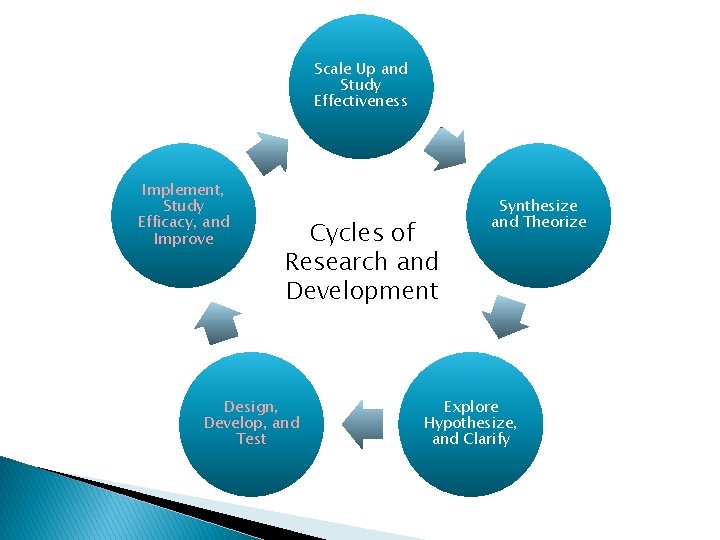

Cycles of Research and Development

- Synthesize and Theorize
- Explore, Hypothesize, and Clarify
- Design, Develop, and Test
- Implement, Study Efficacy, and Improve
- Scale Up and Study Effectiveness

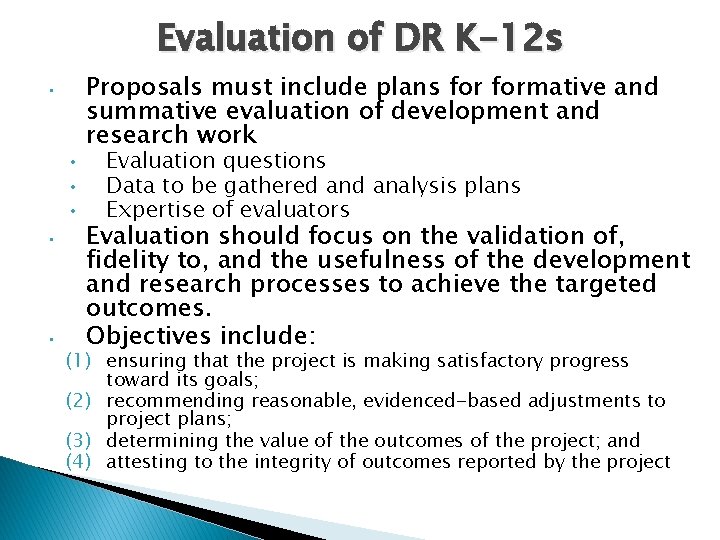

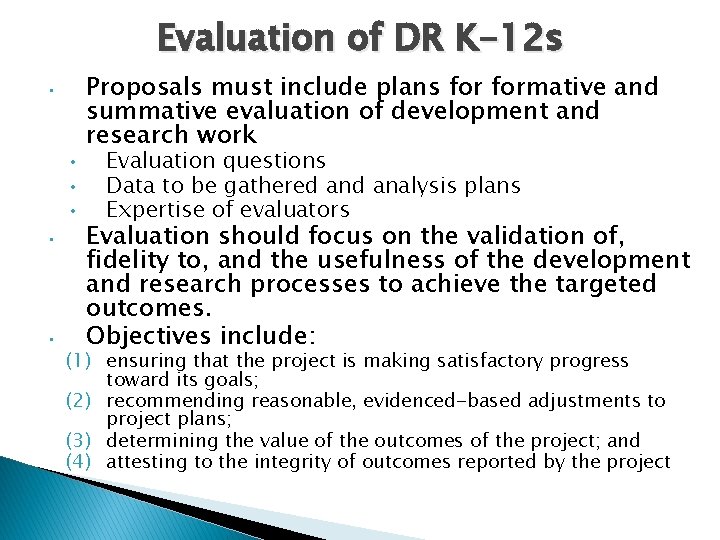

Evaluation of DR K-12s

- Proposals must include plans for formative and summative evaluation of development and research work
  - Evaluation questions
  - Data to be gathered
  - Analysis plans
  - Expertise of evaluators
- Evaluation should focus on the validation of, fidelity to, and the usefulness of the development and research processes to achieve the targeted outcomes.
- Objectives include:
  1. ensuring that the project is making satisfactory progress toward its goals;
  2. recommending reasonable, evidence-based adjustments to project plans;
  3. determining the value of the outcomes of the project; and
  4. attesting to the integrity of outcomes reported by the project

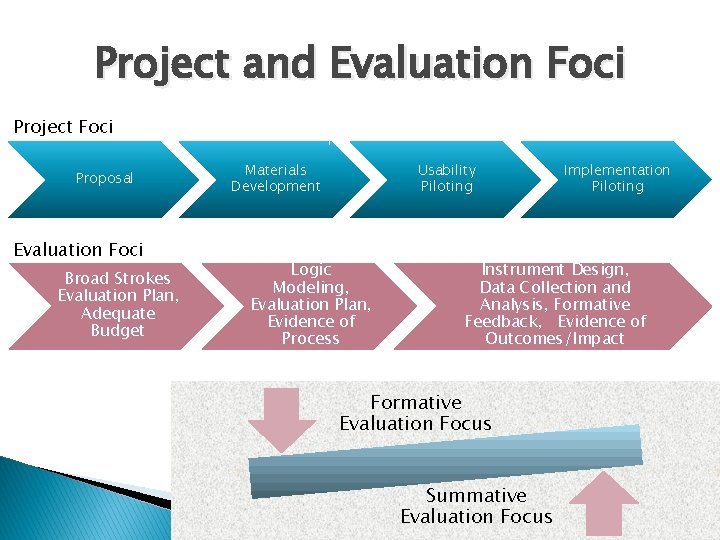

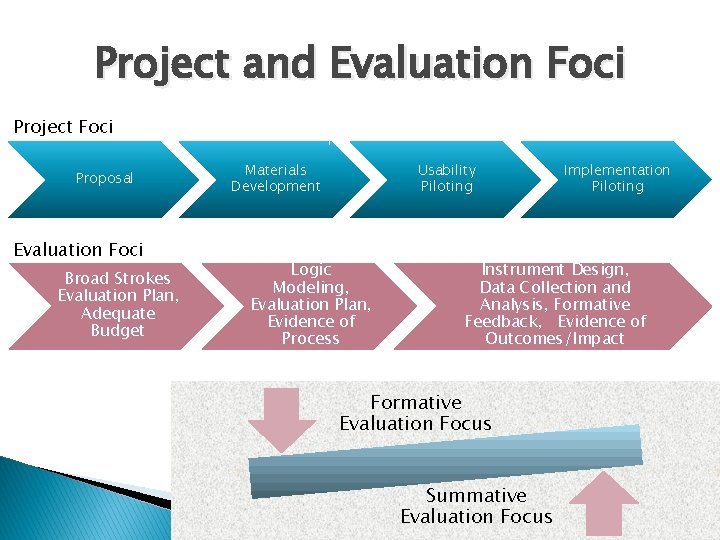

Project and Evaluation Foci

| Project Foci | Evaluation Foci |
| --- | --- |
| Proposal | Broad Strokes Evaluation Plan, Adequate Budget |
| Materials Development, Usability Piloting | Logic Modeling, Evaluation Plan, Evidence of Process |
| Implementation Piloting | Instrument Design, Data Collection and Analysis, Formative Feedback, Evidence of Outcomes/Impact |

Slide labels: Formative Evaluation Focus, Summative Evaluation Focus

Example of DR K-12 Evaluation Timeline

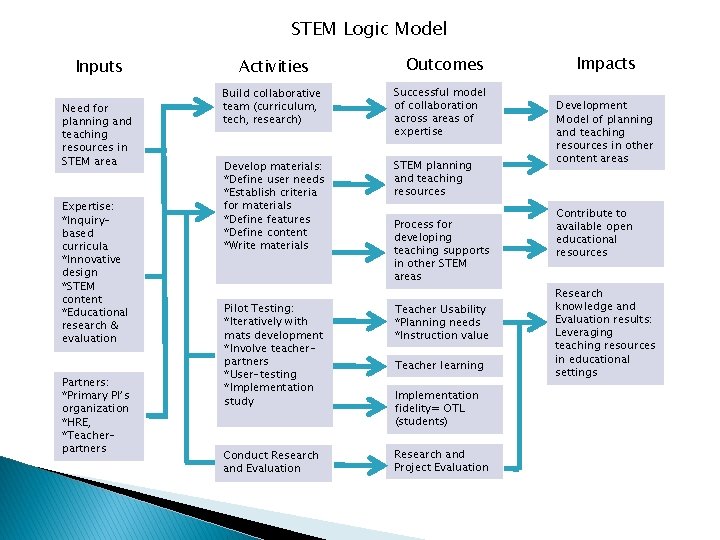

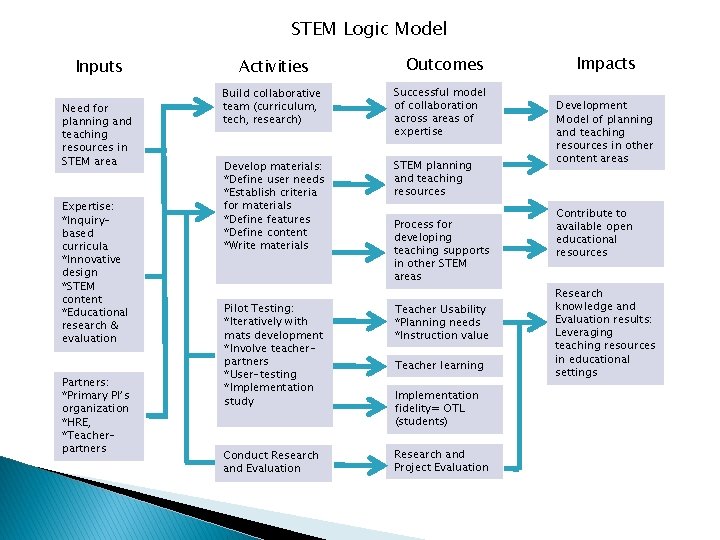

STEM Logic Model

Inputs

- Need for planning and teaching resources in STEM area
- Expertise:
  - Inquiry-based curricula
  - Innovative design
  - STEM content
  - Educational research & evaluation
- Partners:
  - Primary PI’s organization
  - HRE
  - Teacher-partners

Activities

- Build collaborative team (curriculum, tech, research)
- Develop materials:
  - Define user needs
  - Establish criteria for materials
  - Define features
  - Define content
  - Write materials
- Pilot Testing:
  - Iteratively with materials development
  - Involve teacher-partners
  - User-testing
  - Implementation study
- Conduct Research and Evaluation

Outcomes

- Successful model of collaboration across areas of expertise
- STEM planning and teaching resources
- Process for developing teaching supports in other STEM areas
- Teacher Usability:
  - Planning needs
  - Instruction value
- Research and Project Evaluation:
  - Teacher learning
  - Implementation fidelity = OTL (students)

Impacts

- Development Model of planning and teaching resources in other content areas
- Contribute to available open educational resources
- Research knowledge and Evaluation results: Leveraging teaching resources in educational settings

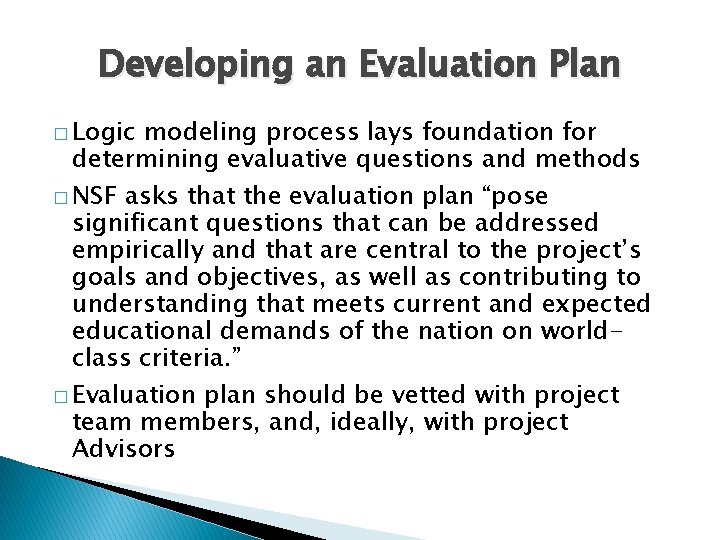

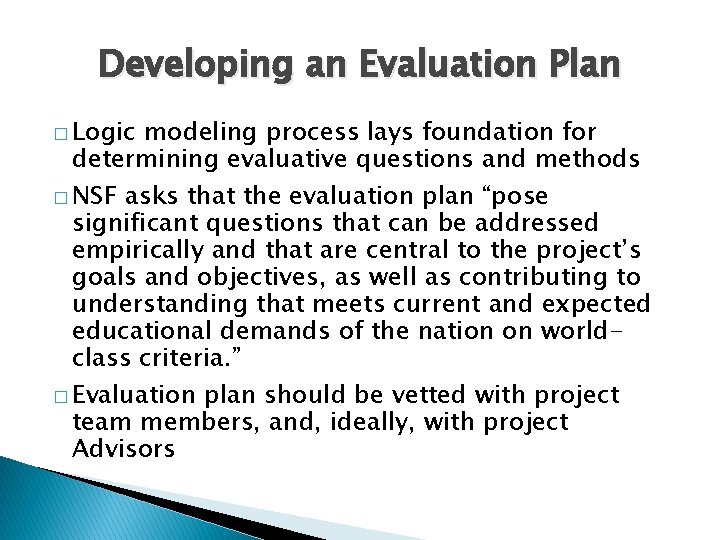

Developing an Evaluation Plan

- Logic modeling process lays foundation for determining evaluative questions and methods
- NSF asks that the evaluation plan “pose significant questions that can be addressed empirically and that are central to the project’s goals and objectives, as well as contributing to understanding that meets current and expected educational demands of the nation on world-class criteria.”
- Evaluation plan should be vetted with project team members, and, ideally, with project Advisors

General Evaluative Approach Matrix for NSF Projects

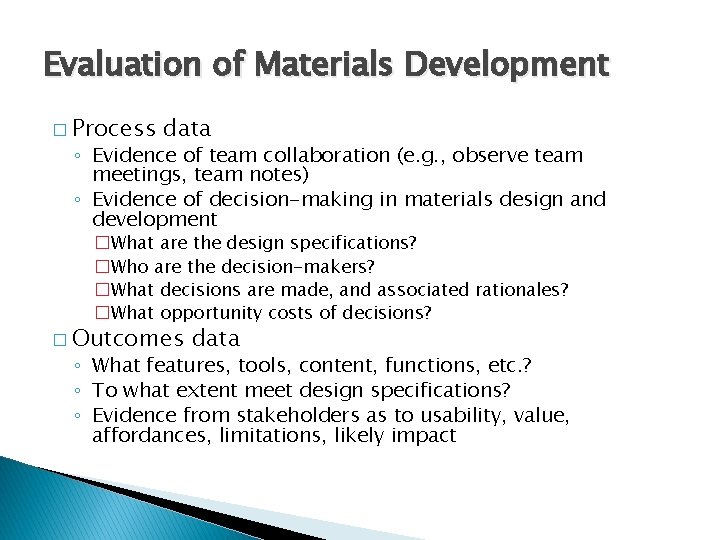

Evaluation of Materials Development

- Process data
  - Evidence of team collaboration (e.g., observe team meetings, team notes)
  - Evidence of decision-making in materials design and development
    - What are the design specifications?
    - Who are the decision-makers?
    - What decisions are made, and what are the associated rationales?
    - What are the opportunity costs of decisions?
- Outcomes data
  - What features, tools, content, functions, etc. do the materials include?
  - To what extent do they meet design specifications?
  - Evidence from stakeholders as to usability, value, affordances, limitations, likely impact

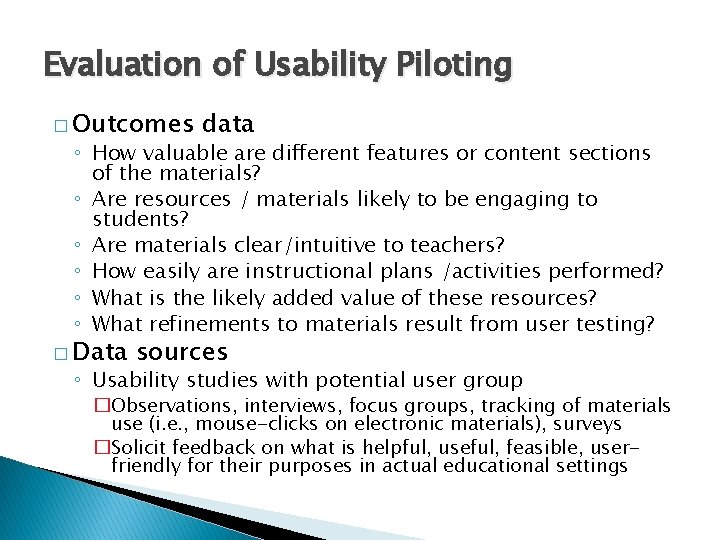

Evaluation of Usability Piloting

- Outcomes data
  - How valuable are different features or content sections of the materials?
  - Are resources/materials likely to be engaging to students?
  - Are materials clear/intuitive to teachers?
  - How easily are instructional plans/activities performed?
  - What is the likely added value of these resources?
  - What refinements to materials result from user testing?
- Data sources
  - Usability studies with potential user group
    - Observations, interviews, focus groups, tracking of materials use (i.e., mouse-clicks on electronic materials), surveys
    - Solicit feedback on what is helpful, useful, feasible, user-friendly for their purposes in actual educational settings
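One way the "tracking of materials use" data source might be summarized is sketched below. This is only an illustration under stated assumptions: the click log, the teacher IDs, the feature names, and the helper functions `clicks_per_feature` and `users_per_feature` are all hypothetical and do not come from any DR K-12 project.

```python
from collections import Counter, defaultdict

# Hypothetical click log: (teacher_id, feature_clicked) pairs.
# All names and values are invented for illustration.
click_log = [
    ("teacher_a", "lesson_planner"),
    ("teacher_a", "lesson_planner"),
    ("teacher_a", "video_library"),
    ("teacher_b", "lesson_planner"),
    ("teacher_b", "assessment_bank"),
]

def clicks_per_feature(log):
    """Count total clicks on each feature across all users."""
    return Counter(feature for _, feature in log)

def users_per_feature(log):
    """Count how many distinct users touched each feature."""
    users = defaultdict(set)
    for user, feature in log:
        users[feature].add(user)
    return {feature: len(ids) for feature, ids in users.items()}

clicks = clicks_per_feature(click_log)
reach = users_per_feature(click_log)
```

Counting distinct users alongside raw clicks helps separate a feature that many teachers try from one that a single teacher uses heavily; either count would normally be read together with the interview and observation data.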

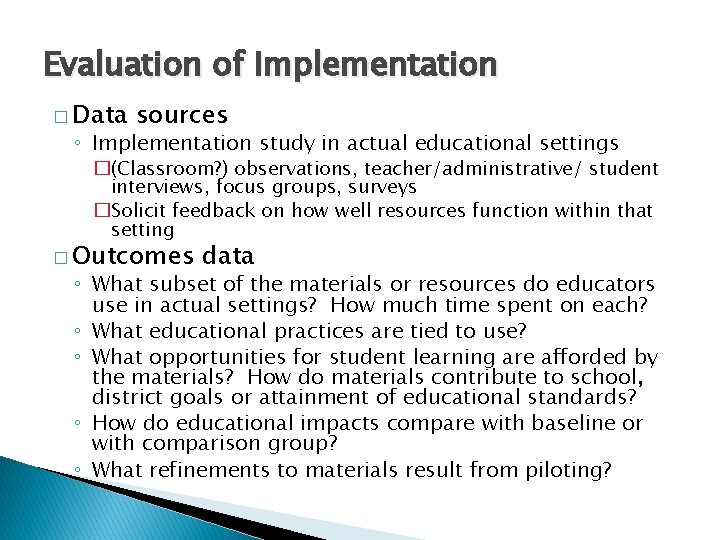

Evaluation of Implementation

- Data sources
  - Implementation study in actual educational settings
    - (Classroom?) observations, teacher/administrative/student interviews, focus groups, surveys
    - Solicit feedback on how well resources function within that setting
- Outcomes data
  - What subset of the materials or resources do educators use in actual settings? How much time is spent on each?
  - What educational practices are tied to use?
  - What opportunities for student learning are afforded by the materials? How do materials contribute to school, district goals or attainment of educational standards?
  - How do educational impacts compare with baseline or with comparison group?
  - What refinements to materials result from piloting?
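For the question of how impacts compare with a comparison group, one common summary is a standardized mean difference (Cohen's d with a pooled standard deviation). The sketch below is an assumption about how such a comparison could be computed, not a method prescribed by the slides; the scores and the function name `cohens_d` are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def cohens_d(treatment, comparison):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(comparison)
    s1, s2 = stdev(treatment), stdev(comparison)
    pooled_sd = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(comparison)) / pooled_sd

# Hypothetical post-test scores, invented for illustration only.
pilot_scores = [72, 80, 68, 75, 85]
comparison_scores = [65, 70, 62, 74, 69]

effect = cohens_d(pilot_scores, comparison_scores)
```

With samples this small the estimate is very imprecise, so an effect size like this would usually be reported with its sample sizes and alongside the qualitative implementation evidence.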

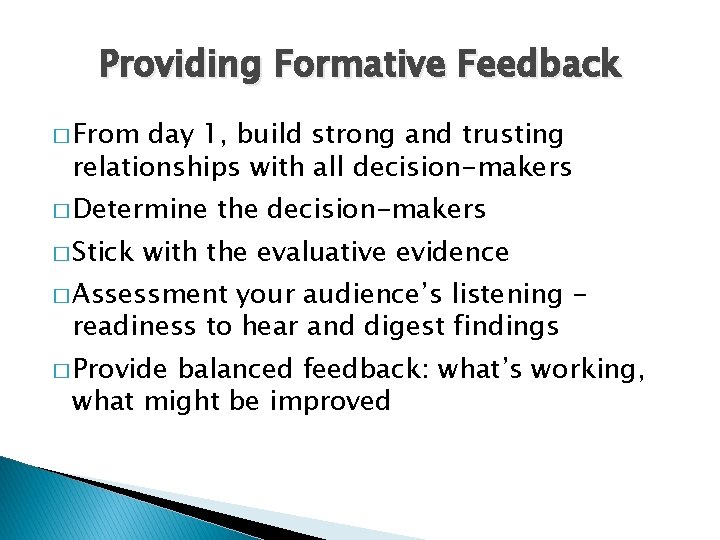

Providing Formative Feedback

- From day 1, build strong and trusting relationships with all decision-makers
- Determine the decision-makers
- Stick with the evaluative evidence
- Assess your audience’s listening readiness to hear and digest findings
- Provide balanced feedback: what’s working, what might be improved

Reporting Summative Results

- Start well in advance of May, when most Annual reports are due
- Use the evaluation plan as a guide
  - Be sure to focus on evaluation questions and methods
  - Can include emergent findings, but try to connect with original project goals
- Make sure evaluation write-up is well-vetted with project team
- Unless final evaluation report, be sure to include formative recommendations that are realistic

Questions?

- Thank you, and go to it!!!
- Dr. Kathleen Haynie, Director of Haynie Research and Evaluation
  - kchaynie@stanfordalumni.org
  - 609-466-2990
  - haynieresearch.com