DIRECT HASHING AND PRUNING DHP ALGORITHM Pouya khani

- Slides: 10

DIRECT HASHING AND PRUNING (DHP) ALGORITHM Pouya khani Mostafa heshmat

review Basics : • Itemsets: Set of items that occur together like {milk, bread, beer} • K-itemsets: Subsets of an itemsets with number K like: 2 -itemsets of {milk, bread, beer} is {milk, bread) {milk, beer} {bread, beer} • Absolute support: the absolute support of A, i. e. the absolute number of transactions which contains A, is 2. • Relative support: the relative support of A, i. e. the relative number of transactions which contains A, is 2/2=1 • Frequent itemsets: A frequent itemset is an itemset whose support is greater than some user-specified minimum support

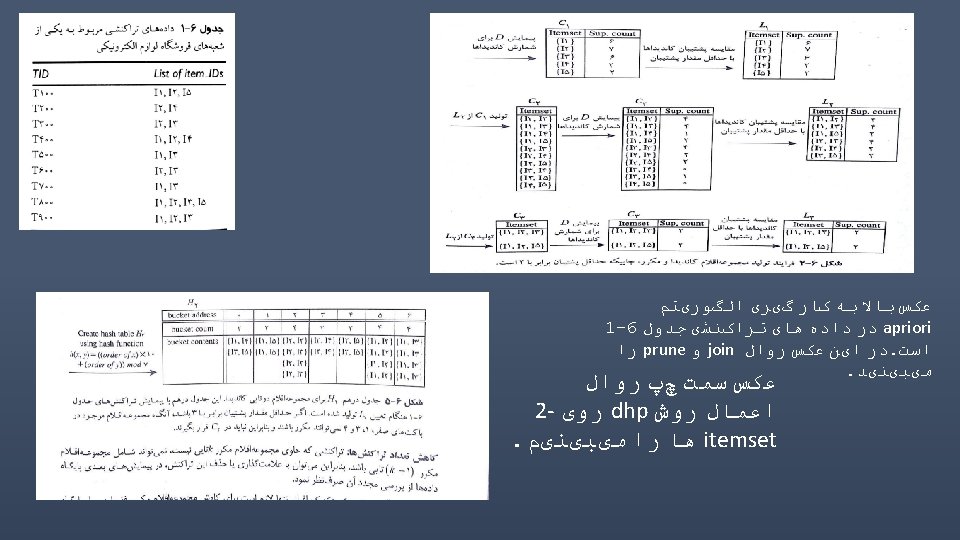

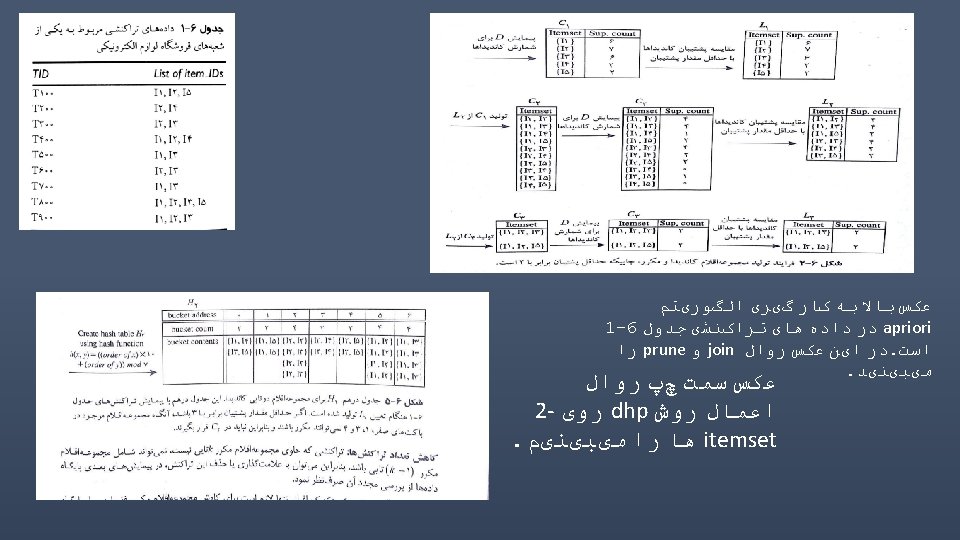

Association Rules : A B Support: Support is an indication of how frequently the itemset appears in the dataset. The support of X with respect to T is defined as the proportion of transactions t in the dataset which contains the itemset X. Confidence: Confidence is an indication of how often the rule has been found to be true. The confidence value of a rule, X=>Y, with respect to a set of transactions T, is the proportion of the transactions that contains X which also contains Y. • Strong association Rules: A association rule that have min-sup and min-conf value. Apriori algorithm : • Apriori property: any subset of frequent itemset must be frequent. Join: meaning k-itemset is made to self join with itself to generate (k+1)-itemsets for example join(CD → AB, BD → AC) would produce the candidate rule D → ABC. Prune: here resulting set from join is filtered with minimum support threshold. (base on apriori property) for example Prune rule D → ABC if its subset AD → BC does not have high confidence.

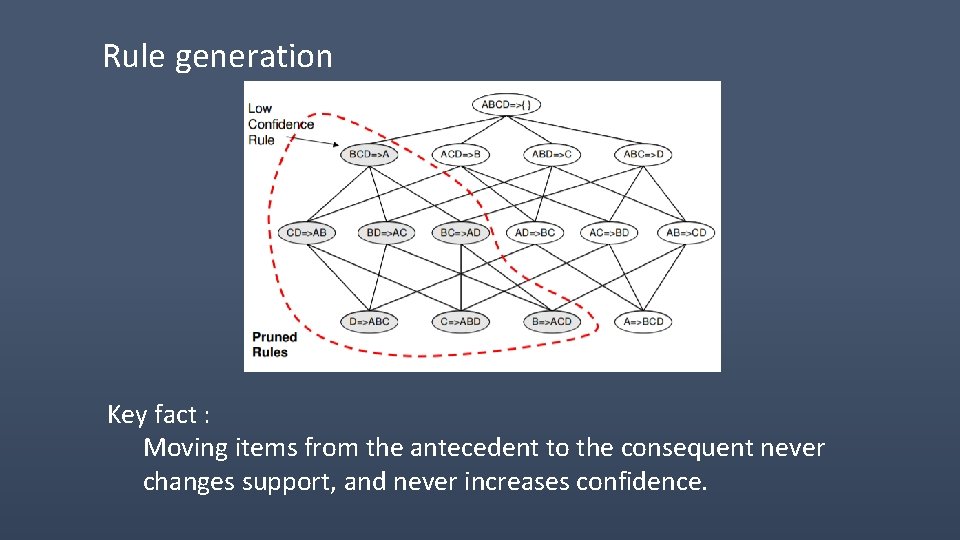

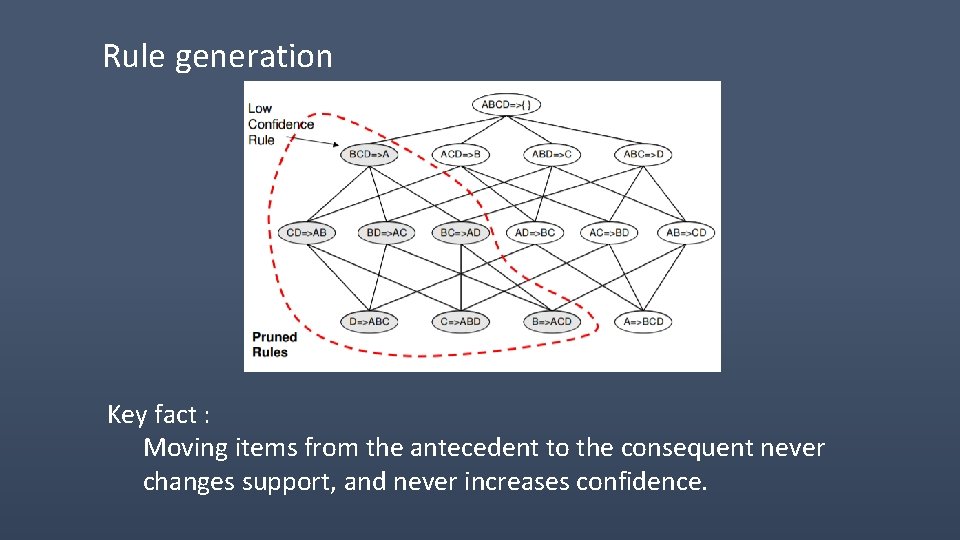

Rule generation Key fact : Moving items from the antecedent to the consequent never changes support, and never increases confidence.

DIRECT HASHING AND PRUNING (DHP) ALGORITHM • Is based on apriori algorithm • Use hashing technique to filter unnecessary C(k + 1) • Also the DHP algorithm proposed in prunes the transactions

Conclusion : • The drawback of the DHP algorithm is that, the hash table is in competition for memory space with the hash tree used to hold the counts for the itemsets. • Experiments performed have showed that as the size of the database grows, the DHP algorithm significantly outperforms the Apriori algorithm. However, the performance of the DHP algorithm highly depends on the hash table size.