Data Visualization and Annotation for SAN Research

- Slides: 1

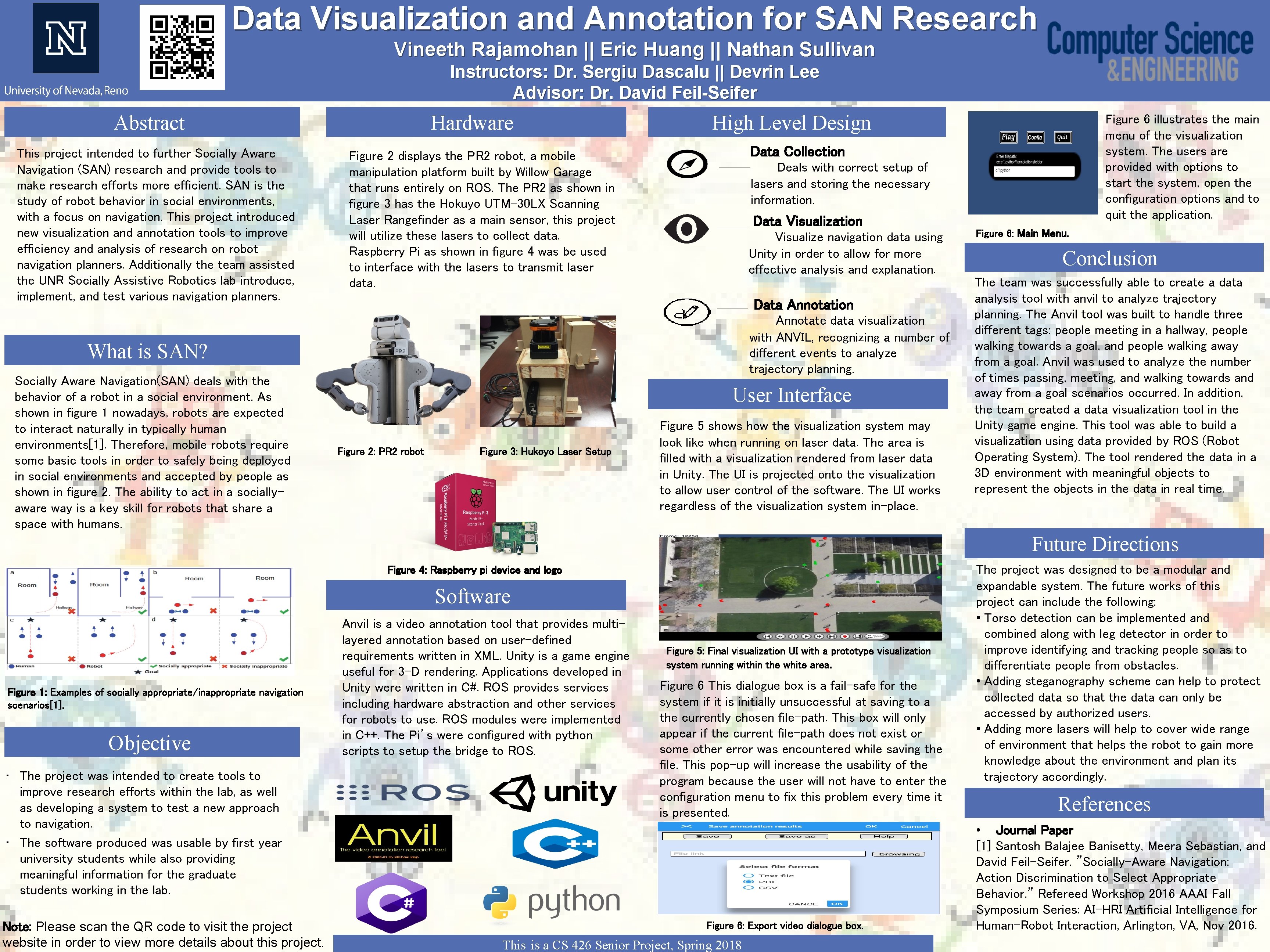

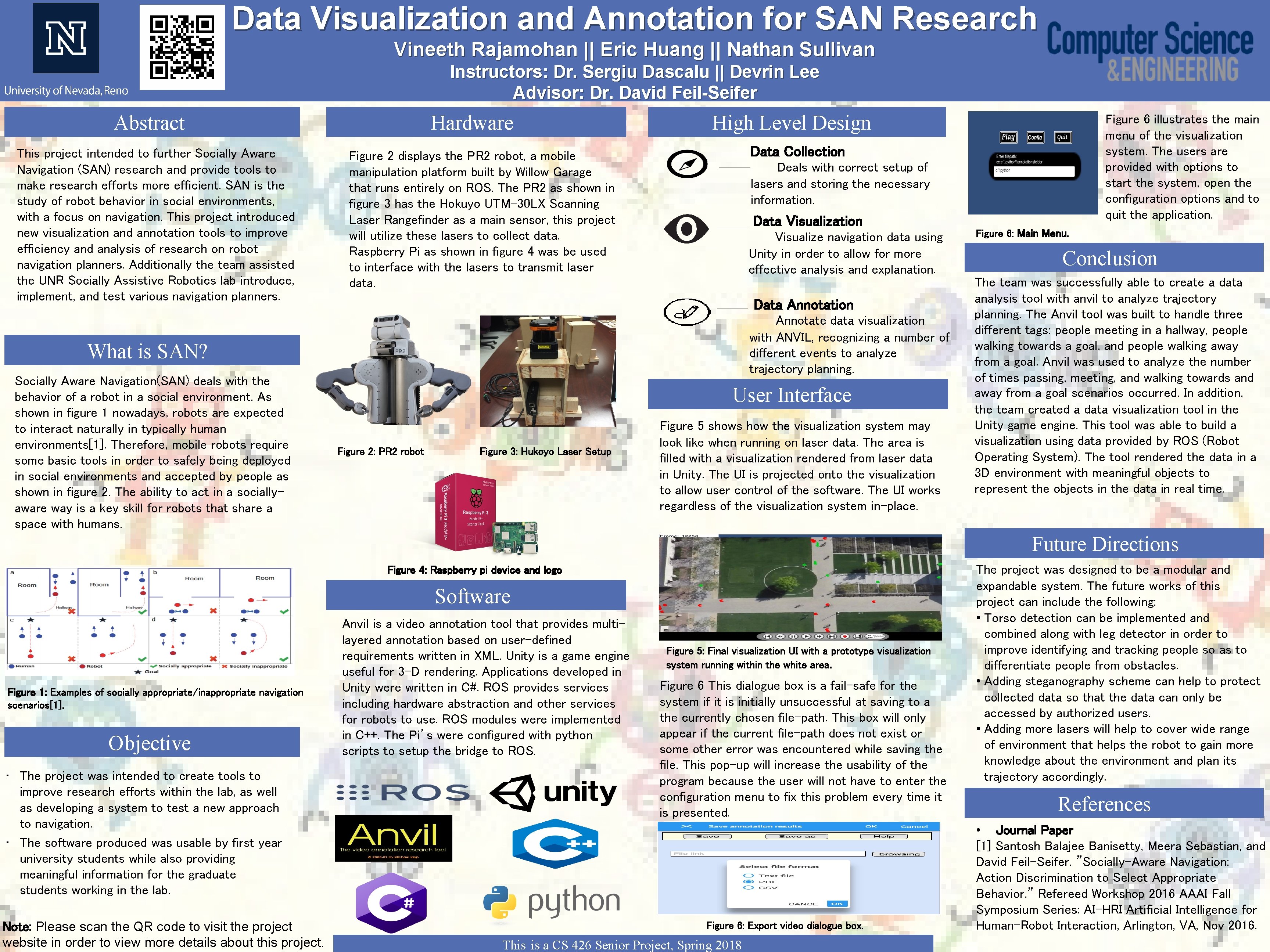

Vineeth Rajamohan || Eric Huang || Nathan Sullivan

Instructors: Dr. Sergiu Dascalu || Devrin Lee

Advisor: Dr. David Feil-Seifer

Abstract

This project was intended to further Socially Aware Navigation (SAN) research and to provide tools that make research efforts more efficient. SAN is the study of robot behavior in social environments, with a focus on navigation. The project introduced new visualization and annotation tools to make research on robot navigation planners more efficient and to improve its analysis. Additionally, the team assisted the UNR Socially Assistive Robotics lab in introducing, implementing, and testing various navigation planners.

What is SAN?

Socially Aware Navigation (SAN) deals with the behavior of a robot in a social environment. As shown in Figure 1, robots are now expected to interact naturally in typically human environments [1]. Mobile robots therefore require some basic capabilities in order to be deployed safely in social environments and accepted by people, as shown in Figure 2. The ability to act in a socially aware way is a key skill for robots that share a space with humans.

Hardware

Figure 2 displays the PR2 robot, a mobile manipulation platform built by Willow Garage that runs entirely on ROS. As shown in Figure 3, the PR2 uses the Hokuyo UTM-30LX Scanning Laser Rangefinder as a main sensor; this project used these lasers to collect data. A Raspberry Pi, shown in Figure 4, was used to interface with the lasers and transmit the laser data.

High Level Design

- Data Collection: Handles the correct setup of the lasers and stores the necessary information.
- Data Visualization: Visualizes navigation data using Unity to allow for more effective analysis and explanation.
- Data Annotation: Annotates the data visualization with ANVIL, recognizing a number of different events to analyze trajectory planning.
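Both the Data Collection and Data Visualization modules start from raw rangefinder readings. A scanning laser such as the Hokuyo UTM-30LX reports one distance per beam, so rendering a scan means converting each reading r at beam index i into a point (r cos θ, r sin θ), with θ = angle_min + i · angle_increment. The Python sketch below is a minimal illustration under stated assumptions: the parameter names mirror the fields of a ROS `sensor_msgs/LaserScan` message, the helper `scan_to_points` is hypothetical rather than the project's code, and the UTM-30LX-like numbers (270° field of view, 0.25° steps, 0.1 to 30 m range) serve only as example values.

```python
import math
from typing import List, Sequence, Tuple


def scan_to_points(
    ranges: Sequence[float],
    angle_min: float,
    angle_increment: float,
    range_min: float,
    range_max: float,
) -> List[Tuple[float, float]]:
    """Convert polar laser readings to (x, y) points in the sensor frame.

    Hypothetical helper: the argument names mirror ROS sensor_msgs/LaserScan
    fields, but this is an illustrative sketch, not the project's implementation.
    """
    points = []
    for i, r in enumerate(ranges):
        # Skip invalid returns: NaN/inf, or outside the sensor's rated range.
        if not math.isfinite(r) or r < range_min or r > range_max:
            continue
        theta = angle_min + i * angle_increment  # beam angle in radians
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    # Assumed UTM-30LX-like parameters: 270 deg field of view at 0.25 deg
    # resolution gives 1081 beams; valid range 0.1 m to 30 m.
    angle_min = math.radians(-135.0)
    angle_increment = math.radians(0.25)
    ranges = [2.0] * 1081          # fake scan: a wall 2 m away in every direction
    ranges[540] = float("inf")     # the straight-ahead beam returned nothing
    pts = scan_to_points(ranges, angle_min, angle_increment, 0.1, 30.0)
    print(len(pts))
```

Dropping non-finite and out-of-range readings before conversion keeps spurious points (for example, beams that returned nothing) out of the rendered scene; a full pipeline would also transform the points from the sensor frame into a common map frame before visualizing them.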
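The ANVIL tool handles three tags: people meeting in a hallway, people walking towards a goal, and people walking away from a goal. In the project these events were annotated in ANVIL; the Python sketch below is only a hypothetical illustration of how the same categories could be proposed or sanity-checked automatically from tracked 2D positions. The function names, the distance thresholds (`min_change`, `radius`), and the assumption that tracks are time-aligned lists of (x, y) points in metres are all illustrative assumptions, not part of ANVIL or of the project.

```python
import math
from collections import Counter
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def goal_direction(track: Sequence[Point], goal: Point,
                   min_change: float = 0.5) -> Optional[str]:
    """Label a track as moving towards or away from a goal (hypothetical heuristic).

    Compares the distance to the goal at the first and last samples; changes
    smaller than min_change metres are left unlabeled (None).
    """
    if len(track) < 2:
        return None
    start = math.dist(track[0], goal)
    end = math.dist(track[-1], goal)
    if start - end >= min_change:
        return "walking_towards_goal"
    if end - start >= min_change:
        return "walking_away_from_goal"
    return None


def people_meet(a: Sequence[Point], b: Sequence[Point], radius: float = 1.0) -> bool:
    """True if two time-aligned tracks come within `radius` metres at the same sample."""
    return any(math.dist(p, q) <= radius for p, q in zip(a, b))


def tally(tracks: Dict[str, List[Point]], goal: Point) -> Counter:
    """Count goal-direction labels per track and meetings per pair of tracks."""
    counts: Counter = Counter()
    for track in tracks.values():
        label = goal_direction(track, goal)
        if label:
            counts[label] += 1
    ids = sorted(tracks)
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if people_meet(tracks[a], tracks[b]):
                counts["meeting_in_hallway"] += 1
    return counts


if __name__ == "__main__":
    goal = (10.0, 0.0)
    tracks = {
        "p1": [(0.0, 0.0), (2.0, 0.0), (4.0, 0.0)],  # approaches the goal
        "p2": [(8.0, 0.5), (6.0, 0.5), (4.5, 0.5)],  # leaves the goal, passes p1
        "p3": [(5.0, 3.0), (5.0, 3.0), (5.0, 3.0)],  # stands still
    }
    print(tally(tracks, goal))
```

Comparing only the first and last distances keeps the heuristic simple but misses paths that approach and then leave the goal; a per-segment comparison would be needed to split such tracks into separate events.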
Figure 1: Examples of socially appropriate/inappropriate navigation scenarios [1].

Figure 2: PR2 robot.

Figure 3: Hokuyo laser setup.

Figure 4: Raspberry Pi device and logo.

Objective

- The project was intended to create tools to improve research efforts within the lab, as well as to develop a system to test a new approach to navigation.
- The software produced was usable by first-year university students while also providing meaningful information for the graduate students working in the lab.

Software

ANVIL is a video annotation tool that provides multilayered annotation based on user-defined specifications written in XML. Unity is a game engine suited to 3D rendering; the applications developed in Unity were written in C#. ROS (Robot Operating System) provides hardware abstraction and other services for robots to use; the ROS modules were implemented in C++. The Raspberry Pis were configured with Python scripts to set up the bridge to ROS.

User Interface

Figure 6 illustrates the main menu of the visualization system, which gives users options to start the system, open the configuration options, and quit the application. Figure 5 shows what the visualization system may look like when running on laser data: the area is filled with a visualization rendered in Unity from the laser data, and the UI is projected onto the visualization to give the user control of the software. The UI works regardless of which visualization system is in place.

Figure 5: Final visualization UI with a prototype visualization system running within the white area.

Figure 6: Main Menu.

The export video dialogue box (Figure 6) is a fail-safe for when the system initially fails to save to the currently chosen file path. It appears only if the current file path does not exist or some other error is encountered while saving the file. This pop-up improves the usability of the program because the user does not have to enter the configuration menu to fix the problem every time it occurs.

Figure 6: Export video dialogue box.

Conclusion

The team successfully created a data analysis tool with ANVIL to analyze trajectory planning. The ANVIL tool was built to handle three different tags: people meeting in a hallway, people walking towards a goal, and people walking away from a goal. ANVIL was used to count the number of times the passing/meeting, walking-towards-a-goal, and walking-away-from-a-goal scenarios occurred. In addition, the team created a data visualization tool in the Unity game engine. This tool builds a visualization from data provided by ROS and renders it in a 3D environment in real time, using meaningful objects to represent the objects in the data.

Future Directions

The project was designed to be a modular and expandable system. Future work on this project could include the following:

- Torso detection could be implemented and combined with the leg detector to improve the identification and tracking of people, so as to distinguish people from obstacles.
- A steganography scheme could be added to protect the collected data so that it can be accessed only by authorized users.
- Adding more lasers would cover a wider range of the environment, helping the robot gain more knowledge about its surroundings and plan its trajectory accordingly.

Note: Please scan the QR code to visit the project website for more details about this project.

This is a CS 426 Senior Project, Spring 2018.

References

[1] Santosh Balajee Banisetty, Meera Sebastian, and David Feil-Seifer. "Socially-Aware Navigation: Action Discrimination to Select Appropriate Behavior." Refereed Workshop, 2016 AAAI Fall Symposium Series: AI-HRI Artificial Intelligence for Human-Robot Interaction, Arlington, VA, Nov. 2016.