CMS data access, Artem Trunov

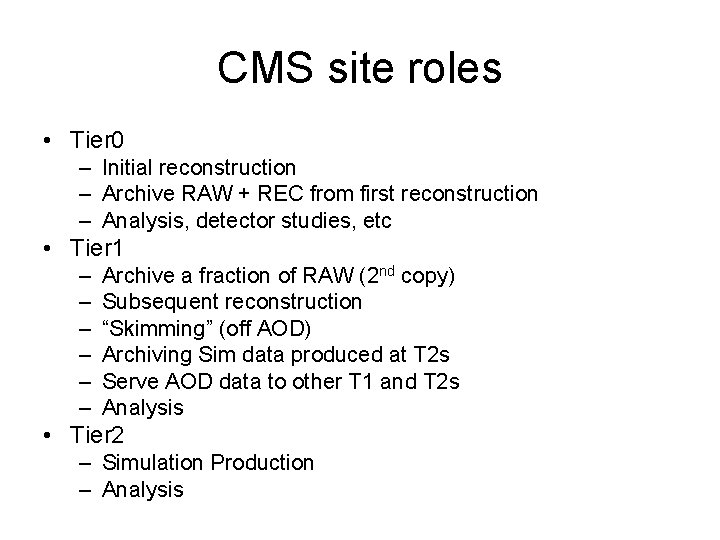

CMS site roles

- Tier 0
  - Initial reconstruction
  - Archive RAW + REC from first reconstruction
  - Analysis, detector studies, etc.
- Tier 1
  - Archive a fraction of RAW (2nd copy)
  - Subsequent reconstruction
  - "Skimming" (off AOD)
  - Archiving Sim data produced at T2s
  - Serve AOD data to other T1 and T2s
  - Analysis
- Tier 2
  - Simulation Production
  - Analysis

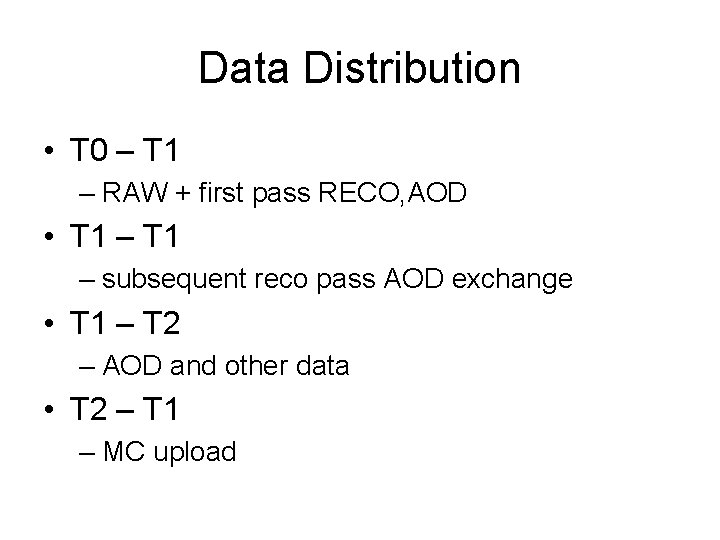

Data Distribution

- T0 to T1: RAW + first-pass RECO, AOD
- T1: subsequent reco pass, AOD exchange
- T1 to T2: AOD and other data
- T2 to T1: MC upload

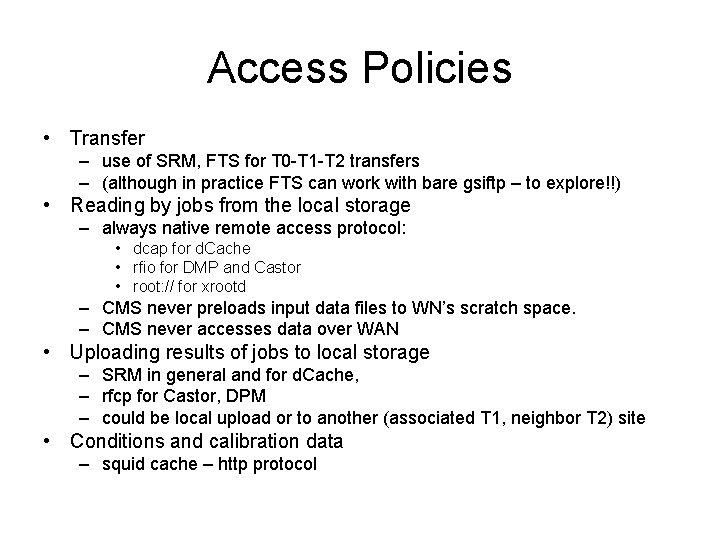

Access Policies

- Transfer
  - use of SRM, FTS for T0-T1-T2 transfers
  - (although in practice FTS can work with bare gsiftp; to explore!)
- Reading by jobs from the local storage
  - always the native remote access protocol:
    - dcap for dCache
    - rfio for DPM and Castor
    - root:// for xrootd
  - CMS never preloads input data files to the WN's scratch space.
  - CMS never accesses data over WAN.
- Uploading results of jobs to local storage
  - SRM in general and for dCache
  - rfcp for Castor, DPM
  - could be a local upload or an upload to another (associated T1, neighbor T2) site
- Conditions and calibration data
  - squid cache
  - http protocol
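The read and upload rules above amount to a lookup keyed on the site's storage technology. A minimal sketch of that lookup follows; the table and function names are hypothetical and exist only for illustration, they are not part of any CMS tool.

```python
# Hypothetical lookup tables that restate the access policy list.
# Keys are storage technologies; values are the protocol or tool named on the slide.
READ_PROTOCOL = {
    "dcache": "dcap",
    "dpm": "rfio",
    "castor": "rfio",
    "xrootd": "root",
}

UPLOAD_TOOL = {
    "dcache": "srm",
    "castor": "rfcp",
    "dpm": "rfcp",
}


def read_protocol(storage):
    """Return the native remote access protocol jobs use to read local storage."""
    return READ_PROTOCOL[storage.lower()]


def upload_tool(storage):
    """Return the upload tool for job output; SRM is the general default."""
    return UPLOAD_TOOL.get(storage.lower(), "srm")


if __name__ == "__main__":
    for storage in ("dCache", "Castor", "DPM", "xrootd"):
        print(storage, read_protocol(storage), upload_tool(storage))
```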

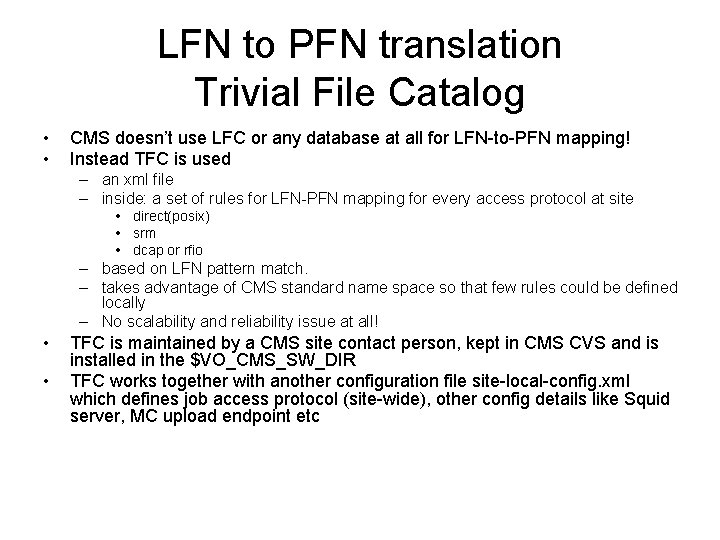

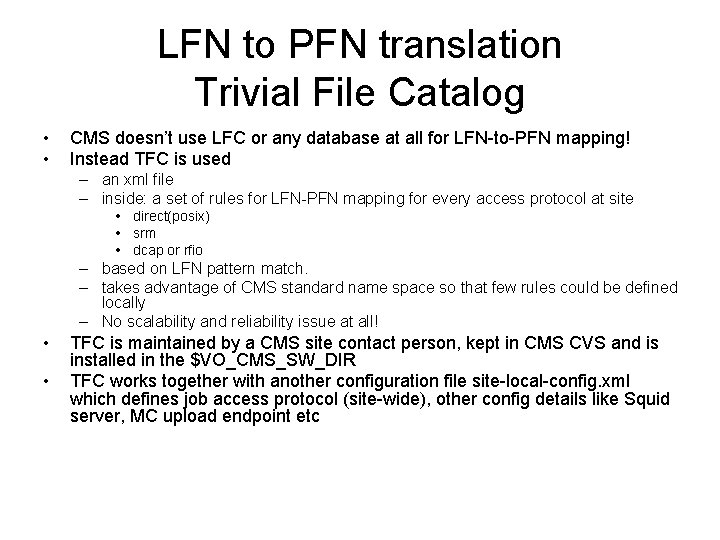

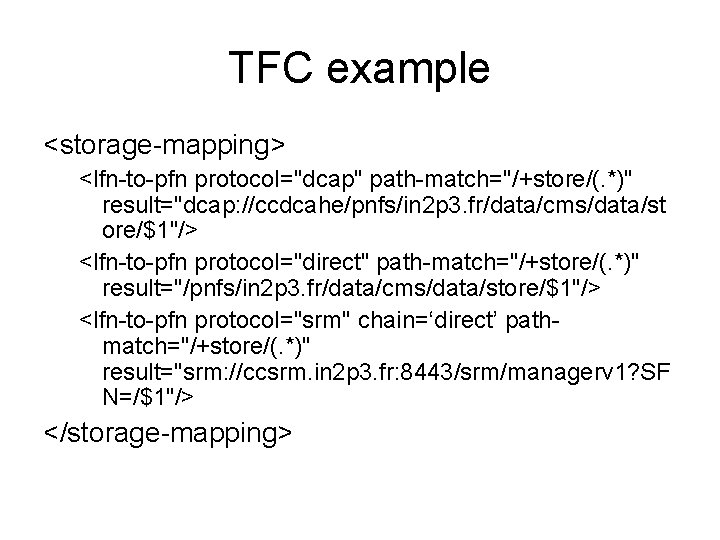

LFN to PFN translation: Trivial File Catalog

- CMS doesn't use LFC or any database at all for LFN-to-PFN mapping!
- Instead TFC is used
  - an xml file
  - inside: a set of rules for LFN-PFN mapping for every access protocol at the site
    - direct (posix)
    - srm
    - dcap or rfio
  - based on LFN pattern match
  - takes advantage of the CMS standard name space, so that few rules need to be defined locally
  - No scalability and reliability issue at all!
- TFC is maintained by a CMS site contact person, kept in CMS CVS, and installed in $VO_CMS_SW_DIR
- TFC works together with another configuration file, site-local-config.xml, which defines the job access protocol (site-wide) and other config details like the Squid server, MC upload endpoint, etc.

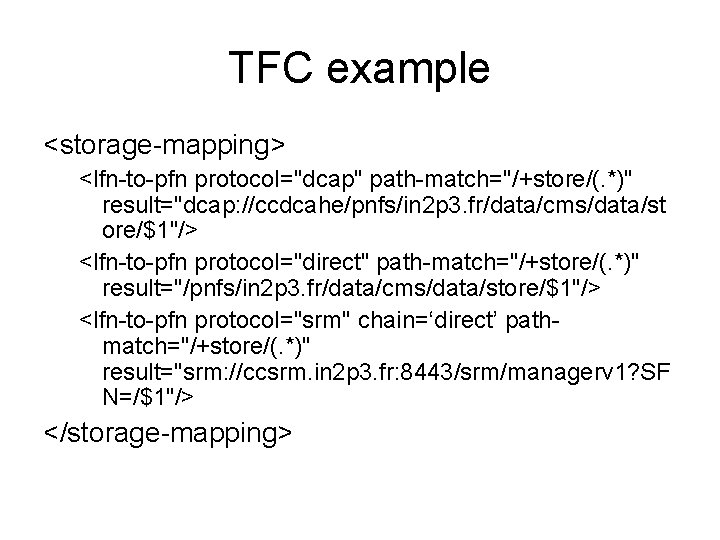

TFC example

    <storage-mapping>
      <lfn-to-pfn protocol="dcap" path-match="/+store/(.*)"
                  result="dcap://ccdcahe/pnfs/in2p3.fr/data/cms/data/store/$1"/>
      <lfn-to-pfn protocol="direct" path-match="/+store/(.*)"
                  result="/pnfs/in2p3.fr/data/cms/data/store/$1"/>
      <lfn-to-pfn protocol="srm" chain="direct" path-match="/+store/(.*)"
                  result="srm://ccsrm.in2p3.fr:8443/srm/managerv1?SFN=/$1"/>
    </storage-mapping>
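To make the rule matching concrete, here is a minimal Python sketch of how such rules could be applied to an LFN. It is an illustration, not the CMS implementation: the function names are hypothetical, the pattern is assumed to be anchored at the start of the LFN, and the `chain` attribute is deliberately left out, so only the `dcap` and `direct` rules from the example are included.

```python
import re
import xml.etree.ElementTree as ET

# Two rules copied from the example; the chained srm rule is omitted
# because chaining is not handled in this sketch.
TFC_XML = """<storage-mapping>
  <lfn-to-pfn protocol="dcap" path-match="/+store/(.*)"
              result="dcap://ccdcahe/pnfs/in2p3.fr/data/cms/data/store/$1"/>
  <lfn-to-pfn protocol="direct" path-match="/+store/(.*)"
              result="/pnfs/in2p3.fr/data/cms/data/store/$1"/>
</storage-mapping>"""


def load_rules(xml_text):
    """Parse lfn-to-pfn elements into (protocol, compiled pattern, result) tuples."""
    root = ET.fromstring(xml_text)
    return [
        (rule.get("protocol"), re.compile(rule.get("path-match")), rule.get("result"))
        for rule in root.findall("lfn-to-pfn")
    ]


def lfn_to_pfn(rules, protocol, lfn):
    """Return the PFN from the first matching rule for the protocol, else None."""
    for rule_protocol, pattern, result in rules:
        if rule_protocol != protocol:
            continue
        match = pattern.match(lfn)  # assumption: match is anchored at the start
        if match:
            # Replace $1, $2, ... in the result template with the captured groups.
            return re.sub(r"\$(\d+)", lambda ref: match.group(int(ref.group(1))), result)
    return None


RULES = load_rules(TFC_XML)

if __name__ == "__main__":
    # The LFN below is a made-up example path under /store.
    print(lfn_to_pfn(RULES, "dcap", "/store/mc/file.root"))
    print(lfn_to_pfn(RULES, "direct", "/store/mc/file.root"))
```

Because the lookup is a pure function of a local file, a job needs no catalog service or database connection to resolve its input files.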

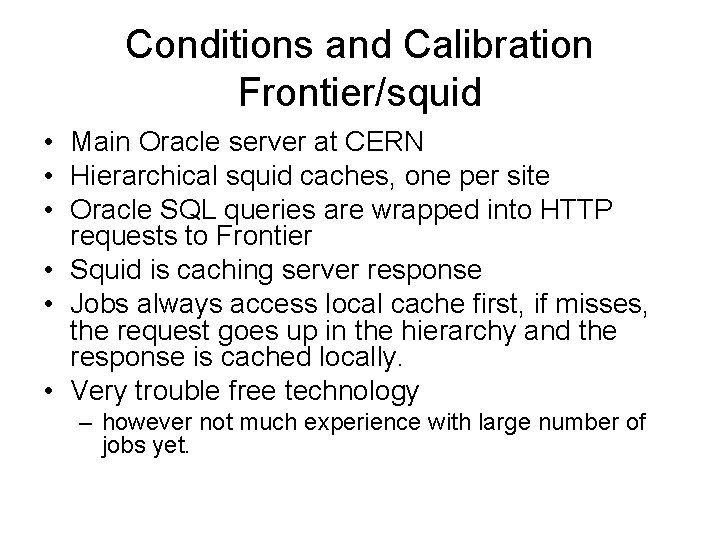

Conditions and Calibration: Frontier/squid

- Main Oracle server at CERN
- Hierarchical squid caches, one per site
- Oracle SQL queries are wrapped into HTTP requests to Frontier
- Squid caches the server response
- Jobs always access the local cache first; on a miss, the request goes up the hierarchy and the response is cached locally.
- Very trouble-free technology, though there is not much experience with large numbers of jobs yet.
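The lookup path described above (local cache first, then up the hierarchy, caching the response on the way back) can be sketched as a toy in-memory model. This is not Squid or Frontier code; the `CacheNode` class, the `origin` callable, and the query string are all hypothetical stand-ins.

```python
class CacheNode:
    """Toy cache level: answers from its own store, else asks its parent or the origin."""

    def __init__(self, name, parent=None, origin=None):
        self.name = name
        self.parent = parent    # next cache up the hierarchy, if any
        self.origin = origin    # callable standing in for the central server
        self.store = {}
        self.hits = 0

    def get(self, query):
        if query in self.store:
            self.hits += 1
            return self.store[query]
        # Miss: forward the request one level up, or to the origin at the top.
        if self.parent is not None:
            response = self.parent.get(query)
        else:
            response = self.origin(query)
        self.store[query] = response  # cache the response locally
        return response


origin_calls = []


def origin(query):
    """Stand-in for the central server; records every query that reaches it."""
    origin_calls.append(query)
    return "payload:" + query


top_cache = CacheNode("upper-level", origin=origin)
site_cache = CacheNode("site", parent=top_cache)

if __name__ == "__main__":
    site_cache.get("calibration-query")
    site_cache.get("calibration-query")  # served from the site cache
    print(len(origin_calls))
```

In this model a repeated query from the same site never leaves the site cache, and a first query from a second site is answered by the upper-level cache without reaching the origin.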