CLS 2015, https://162.105.160.15:6443

- Slides: 22

CLS Cluster, 2015

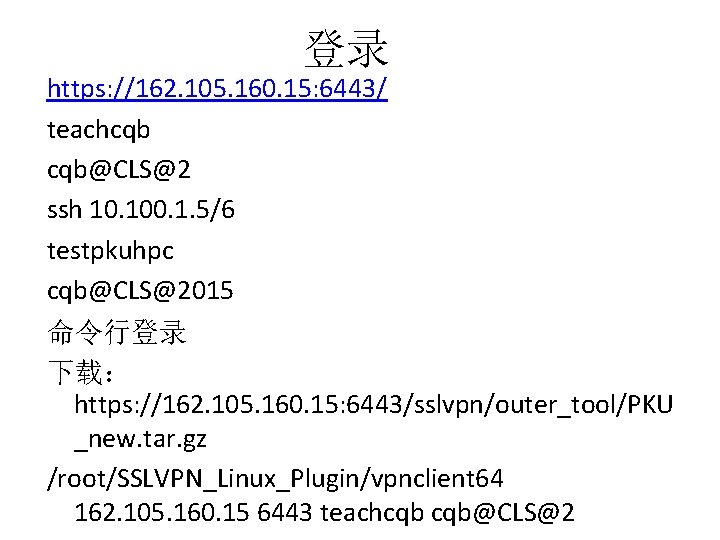

Login

• https://162.105.160.15:6443/ (teachcqb / cqb@CLS@2)
• ssh 10.100.1.5/6 (testpkuhpc / cqb@CLS@2015)
• Command-line login. Download: https://162.105.160.15:6443/sslvpn/outer_tool/PKU_new.tar.gz

    /root/SSLVPN_Linux_Plugin/vpnclient64 162.105.160.15 6443 teachcqb cqb@CLS@2

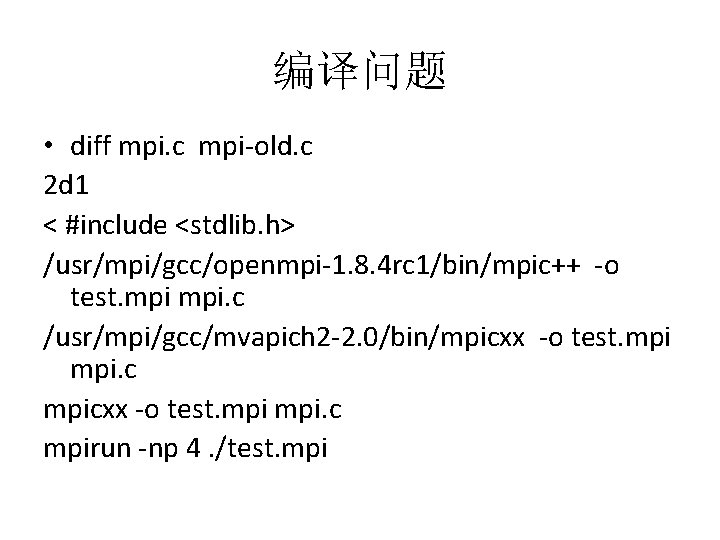

Compilation issues

• diff mpi.c mpi-old.c

    2d1
    < #include <stdlib.h>

    /usr/mpi/gcc/openmpi-1.8.4rc1/bin/mpic++ -o test.mpi mpi.c
    /usr/mpi/gcc/mvapich2-2.0/bin/mpicxx -o test.mpi mpi.c
    mpirun -np 4 ./test.mpi

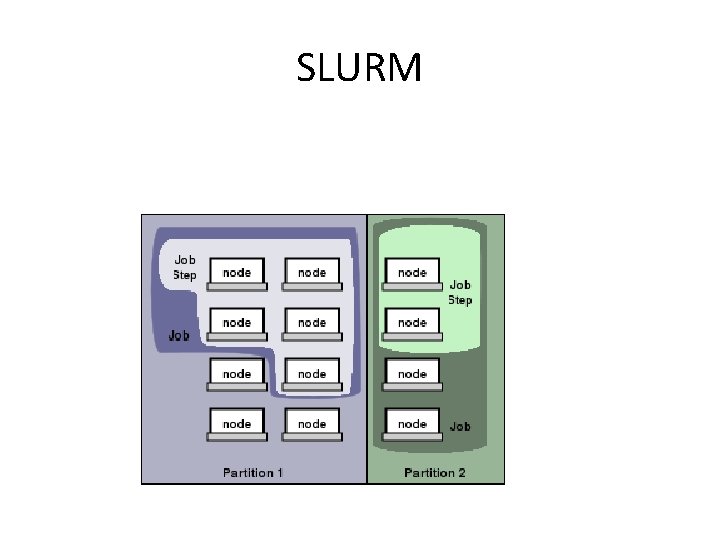

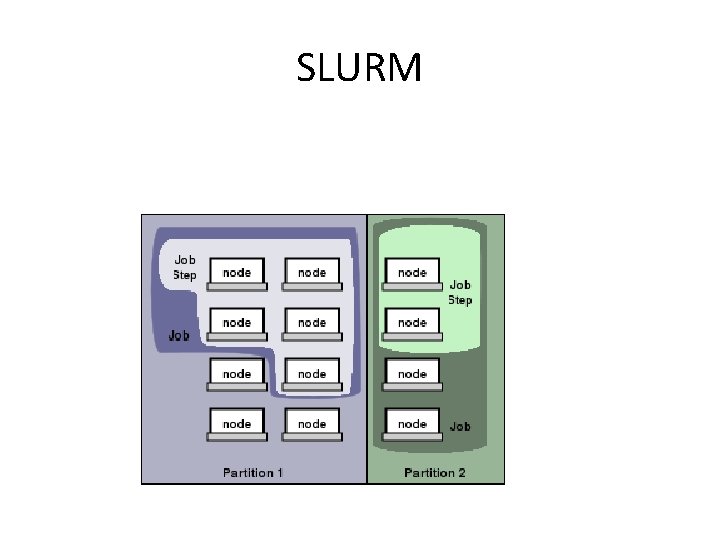

SLURM

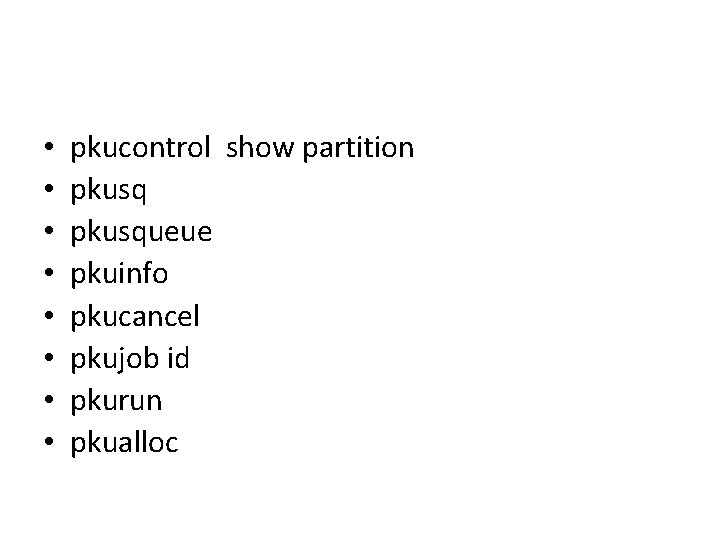

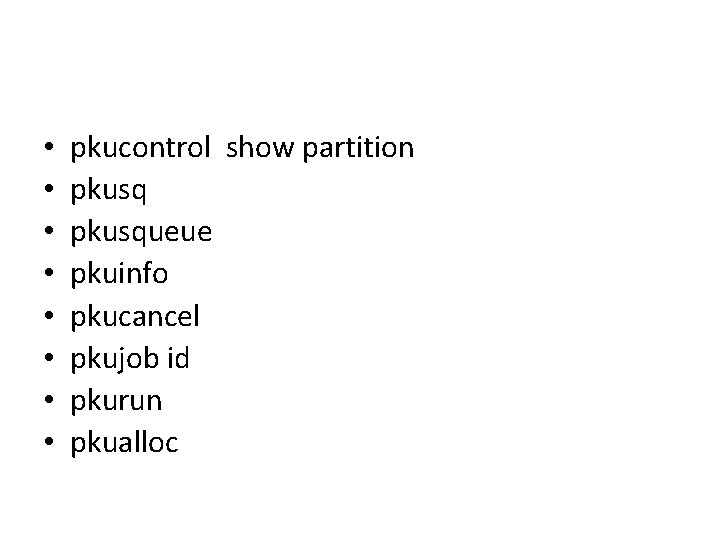

• pkucontrol show partition
• pkusqueue
• pkuinfo
• pkucancel
• pkujob id
• pkurun
• pkualloc

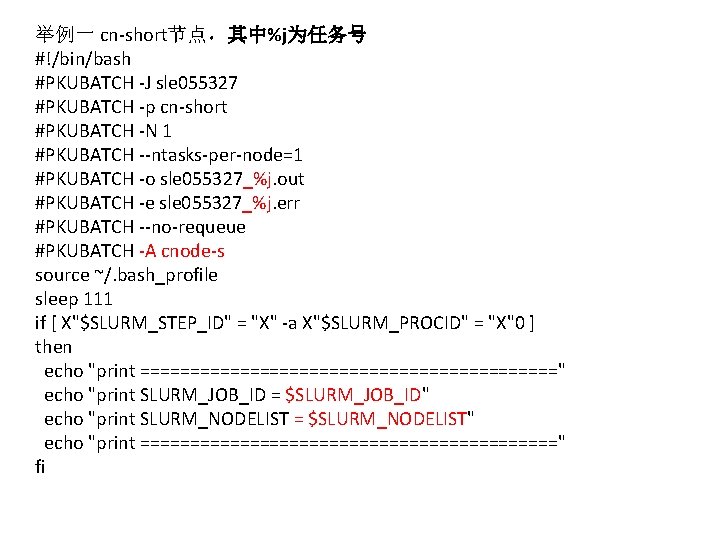

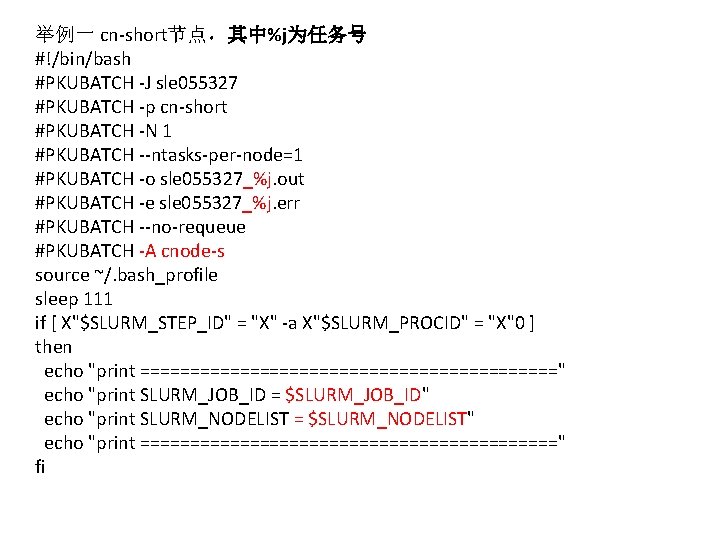

Example 1: a cn-short node; %j stands for the job ID

    #!/bin/bash
    #PKUBATCH -J sle055327
    #PKUBATCH -p cn-short
    #PKUBATCH -N 1
    #PKUBATCH --ntasks-per-node=1
    #PKUBATCH -o sle055327_%j.out
    #PKUBATCH -e sle055327_%j.err
    #PKUBATCH --no-requeue
    #PKUBATCH -A cnode-s
    source ~/.bash_profile
    sleep 111
    if [ X"$SLURM_STEP_ID" = "X" -a X"$SLURM_PROCID" = "X"0 ]
    then
        echo "print ====================="
        echo "print SLURM_JOB_ID = $SLURM_JOB_ID"
        echo "print SLURM_NODELIST = $SLURM_NODELIST"
        echo "print ====================="
    fi
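The `if` test in Example 1 prefixes each operand with `X`, so an empty or unset variable still gives `[` a non-empty word to compare. The banner is printed only when `SLURM_STEP_ID` is empty and `SLURM_PROCID` is 0. A minimal sketch of the same test, with the two values passed as arguments so it can be tried outside a job; the function name `should_print_banner` is made up for illustration.

```bash
#!/bin/bash
# Sketch: the same test as in the job script, with the values passed as
# arguments instead of read from the environment.
# should_print_banner is a hypothetical name, not part of SLURM.
should_print_banner() {
    local step_id="$1" procid="$2"
    # X"..." keeps each operand non-empty even when the value is empty.
    if [ X"$step_id" = "X" -a X"$procid" = "X"0 ]; then
        echo yes
    else
        echo no
    fi
}

should_print_banner "" 0    # empty step ID, rank 0
should_print_banner 3 0     # non-empty step ID
should_print_banner "" 1    # rank other than 0
```

Only the first call prints `yes`; the other two print `no`.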

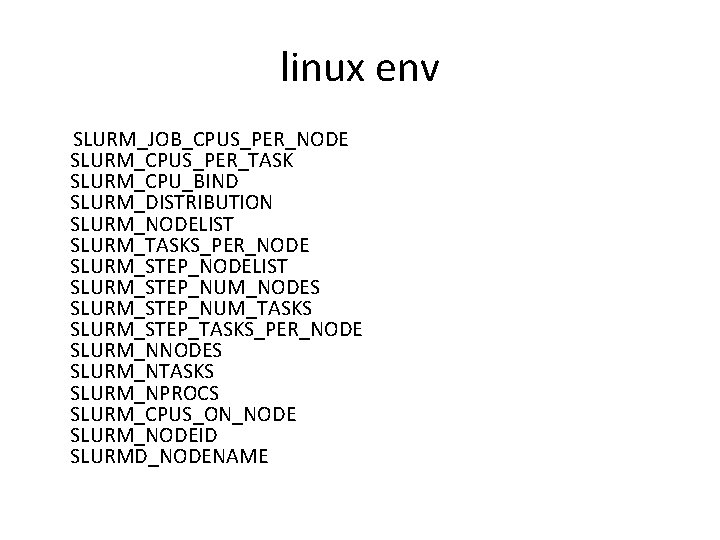

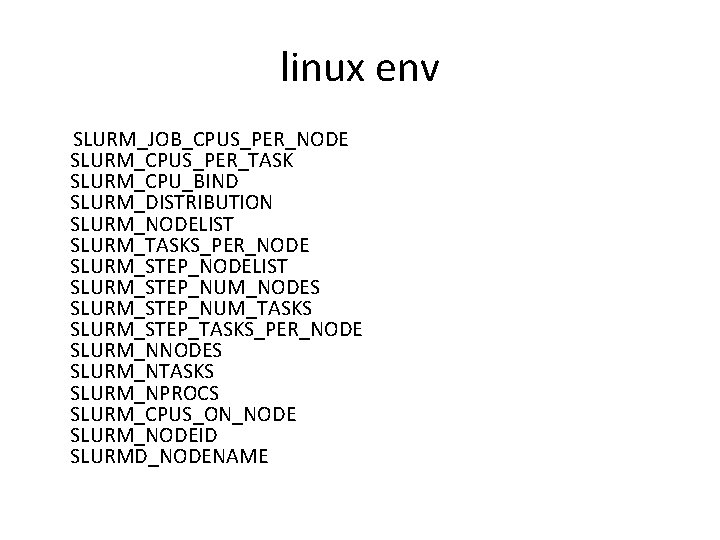

Linux env

    SLURM_JOB_CPUS_PER_NODE
    SLURM_CPUS_PER_TASK
    SLURM_CPU_BIND
    SLURM_DISTRIBUTION
    SLURM_NODELIST
    SLURM_TASKS_PER_NODE
    SLURM_STEP_NODELIST
    SLURM_STEP_NUM_NODES
    SLURM_STEP_NUM_TASKS
    SLURM_STEP_TASKS_PER_NODE
    SLURM_NNODES
    SLURM_NTASKS
    SLURM_NPROCS
    SLURM_CPUS_ON_NODE
    SLURM_NODEID
    SLURMD_NODENAME
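A quick way to see which of these variables are set in a given shell or job script is to print them by name. The sketch below is one possible helper, not a cluster-provided tool; the name `show_slurm_env` and the `<unset>` marker are assumptions made for illustration.

```bash
#!/bin/bash
# Sketch: print the named variables, marking unset or empty ones.
# show_slurm_env is a hypothetical helper name.
show_slurm_env() {
    local name
    for name in "$@"; do
        # ${!name:-...} is bash indirect expansion with a default value.
        printf '%s=%s\n' "$name" "${!name:-<unset>}"
    done
}

show_slurm_env SLURM_NODELIST SLURM_NNODES SLURM_NTASKS SLURM_CPUS_ON_NODE
```

Outside a job every line shows `<unset>`; inside a job script the same call shows whatever values SLURM exported for that job.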

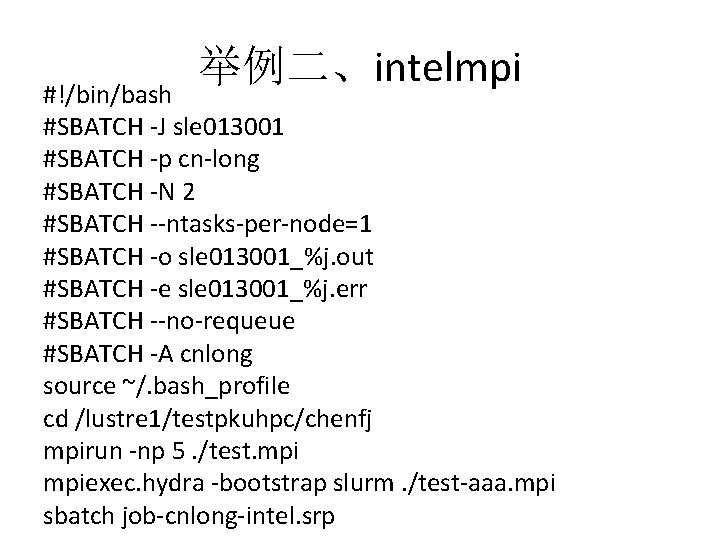

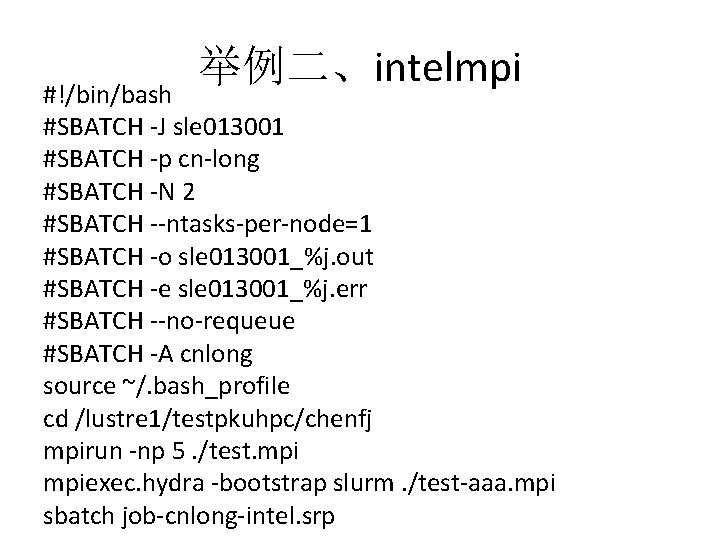

Example 2: intelmpi

    #!/bin/bash
    #SBATCH -J sle013001
    #SBATCH -p cn-long
    #SBATCH -N 2
    #SBATCH --ntasks-per-node=1
    #SBATCH -o sle013001_%j.out
    #SBATCH -e sle013001_%j.err
    #SBATCH --no-requeue
    #SBATCH -A cnlong
    source ~/.bash_profile
    cd /lustre1/testpkuhpc/chenfj
    mpirun -np 5 ./test.
    mpiexec.hydra -bootstrap slurm ./test-aaa.mpi

Submit the script:

    sbatch job-cnlong-intel.srp
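Example 2 passes a fixed `-np 5` to `mpirun`. If the process count should instead follow the allocation, one option is to read it from `SLURM_NTASKS`. The sketch below only prints the command it would run; the helper name `mpirun_cmd` is hypothetical, and it assumes `SLURM_NTASKS` is set inside the job (it falls back to 1 otherwise).

```bash
#!/bin/bash
# Sketch: build the mpirun command line from the allocation size.
# mpirun_cmd is a hypothetical helper; it prints the command, it does not run it.
mpirun_cmd() {
    local program="$1"
    # Assumption: SLURM_NTASKS is set inside the job; default to 1 elsewhere.
    local np="${SLURM_NTASKS:-1}"
    echo "mpirun -np $np $program"
}

mpirun_cmd ./test.mpi
```

In a job script the last line could be replaced by `$(mpirun_cmd ./test.mpi)` or, more simply, by `mpirun -np "${SLURM_NTASKS:-1}" ./test.mpi`.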

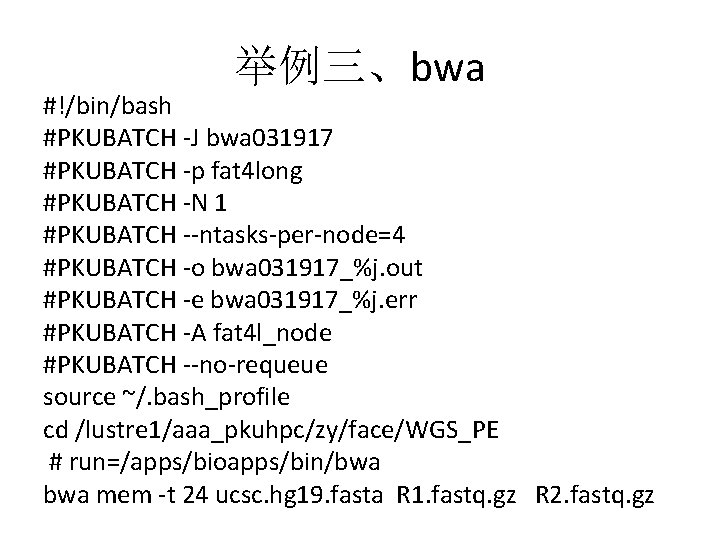

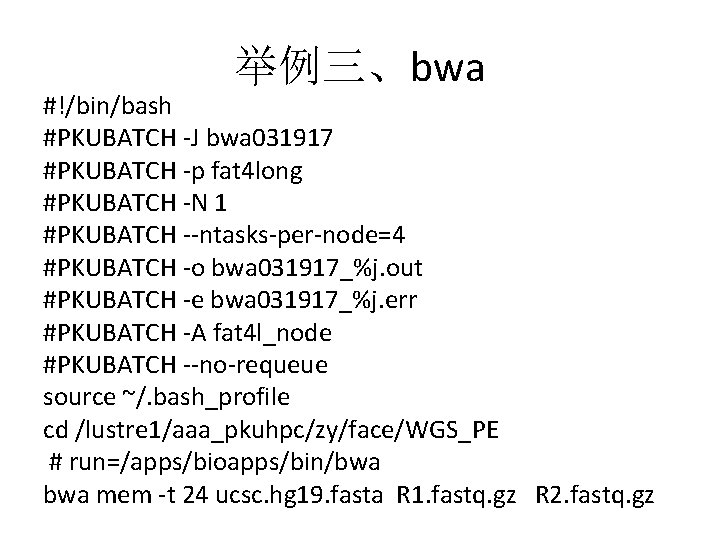

Example 3: bwa

    #!/bin/bash
    #PKUBATCH -J bwa031917
    #PKUBATCH -p fat4long
    #PKUBATCH -N 1
    #PKUBATCH --ntasks-per-node=4
    #PKUBATCH -o bwa031917_%j.out
    #PKUBATCH -e bwa031917_%j.err
    #PKUBATCH -A fat4l_node
    #PKUBATCH --no-requeue
    source ~/.bash_profile
    cd /lustre1/aaa_pkuhpc/zy/face/WGS_PE
    # run=/apps/bioapps/bin/bwa mem -t 24 ucsc.hg19.fasta R1.fastq.gz R2.fastq.gz
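The `-o` and `-e` patterns in the examples contain `%j`, which the scheduler replaces with the job ID when it creates the output files. The sketch below mimics that substitution with `sed` so the resulting file names can be previewed; `expand_pattern` is a hypothetical helper, not a SLURM command, and the job ID 132155 is only an illustrative value.

```bash
#!/bin/bash
# Sketch: mimic how %j in an -o/-e pattern becomes the job ID.
# expand_pattern is a hypothetical helper, not a SLURM command.
expand_pattern() {
    local pattern="$1" jobid="$2"
    # Replace every literal %j with the (numeric) job ID.
    printf '%s\n' "$pattern" | sed "s/%j/$jobid/g"
}

expand_pattern 'bwa031917_%j.out' 132155
expand_pattern 'bwa031917_%j.err' 132155
```

Because the job ID is part of the name, repeated submissions of the same script do not overwrite each other's output files.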

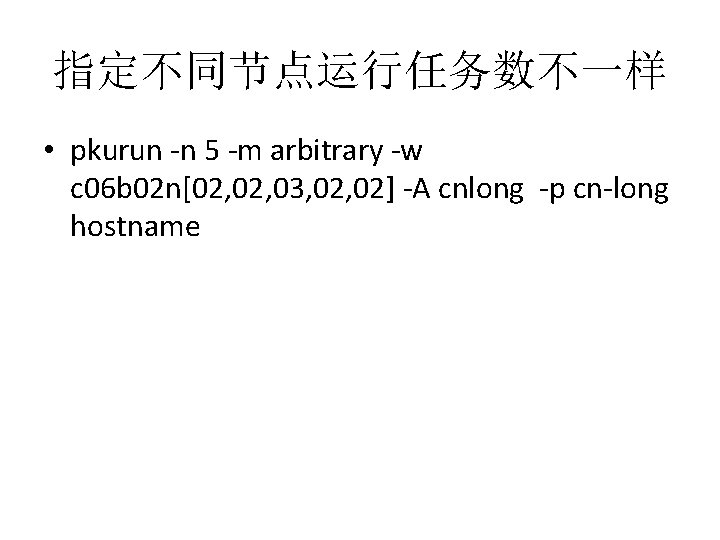

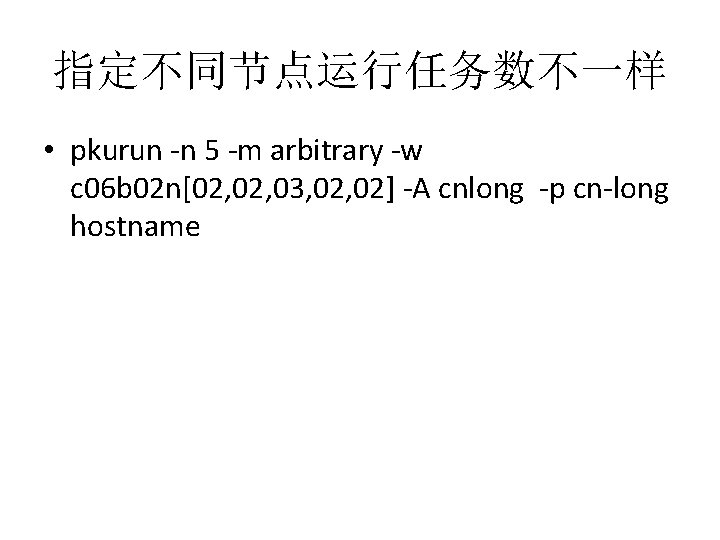

Running a different number of tasks on different nodes

• pkurun -n 5 -m arbitrary -w c06b02n[02,03,02] -A cnlong -p cn-long hostname
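In stock SLURM, the arbitrary distribution places tasks in the order given by the file named in `SLURM_HOSTFILE`, one hostname per task. The sketch below generates such a list from `host:count` pairs. It assumes `pkurun` behaves like `srun` in this respect, which the slides do not confirm; `make_hostfile` is a hypothetical helper, and the 2/3 split across the two nodes is only an illustration.

```bash
#!/bin/bash
# Sketch: emit one hostname per task from "host:count" pairs.
# make_hostfile is a hypothetical helper; the 2/3 split is illustrative.
make_hostfile() {
    local spec host count i
    for spec in "$@"; do
        host="${spec%%:*}"     # text before the first colon
        count="${spec##*:}"    # text after the last colon
        for ((i = 0; i < count; i++)); do
            echo "$host"
        done
    done
}

make_hostfile c06b02n02:2 c06b02n03:3
```

Under the same assumption, the output would be redirected to a file and that file's path exported as `SLURM_HOSTFILE` before launching with `-m arbitrary`.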

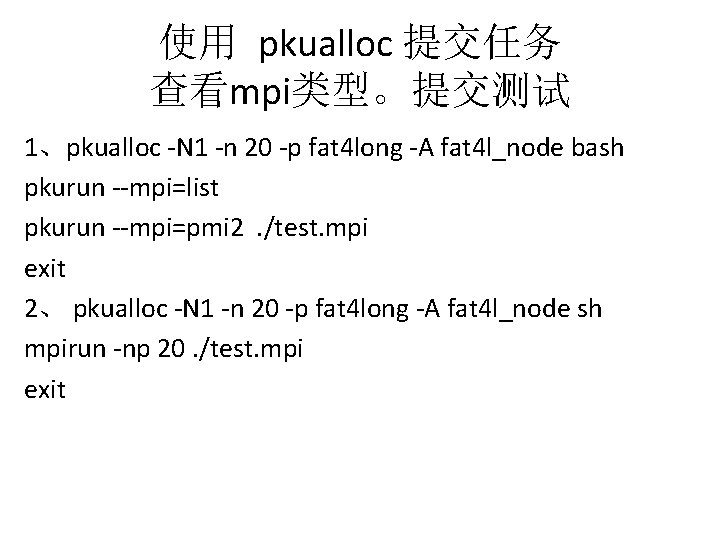

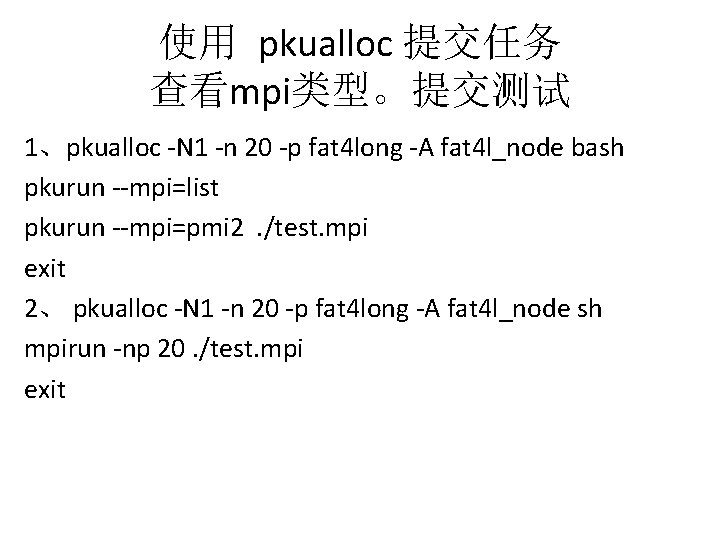

Submitting jobs with pkualloc

Check the available MPI types, then run a test.

1. Launch with pkurun:

    pkualloc -N 1 -n 20 -p fat4long -A fat4l_node bash
    pkurun --mpi=list
    pkurun --mpi=pmi2 ./test.mpi
    exit

2. Launch with mpirun:

    pkualloc -N 1 -n 20 -p fat4long -A fat4l_node sh
    mpirun -np 20 ./test.mpi
    exit

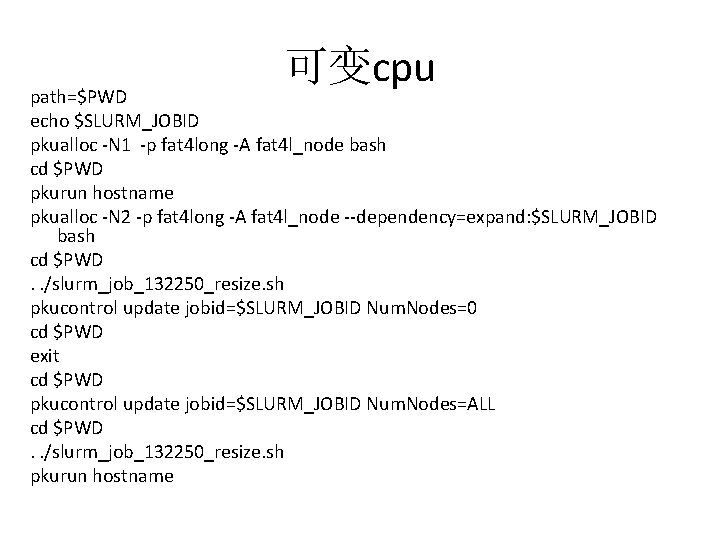

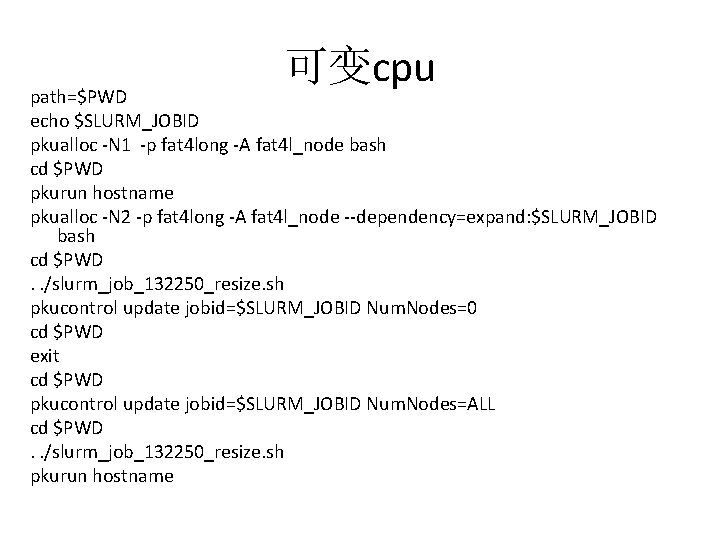

Variable CPU count (resizing a job)

    path=$PWD
    echo $SLURM_JOBID
    pkualloc -N 1 -p fat4long -A fat4l_node bash
    cd $PWD
    pkurun hostname
    pkualloc -N 2 -p fat4long -A fat4l_node --dependency=expand:$SLURM_JOBID bash
    cd $PWD
    . ./slurm_job_132250_resize.sh
    pkucontrol update jobid=$SLURM_JOBID NumNodes=0
    cd $PWD
    exit
    cd $PWD
    pkucontrol update jobid=$SLURM_JOBID NumNodes=ALL
    cd $PWD
    . ./slurm_job_132250_resize.sh
    pkurun hostname

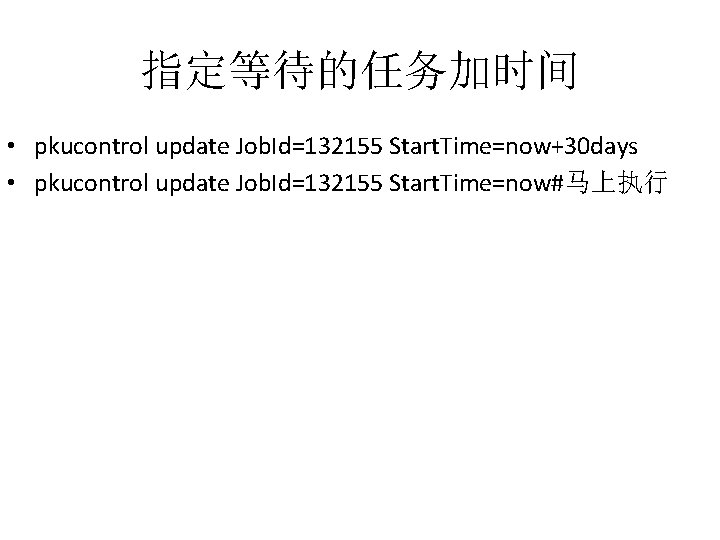

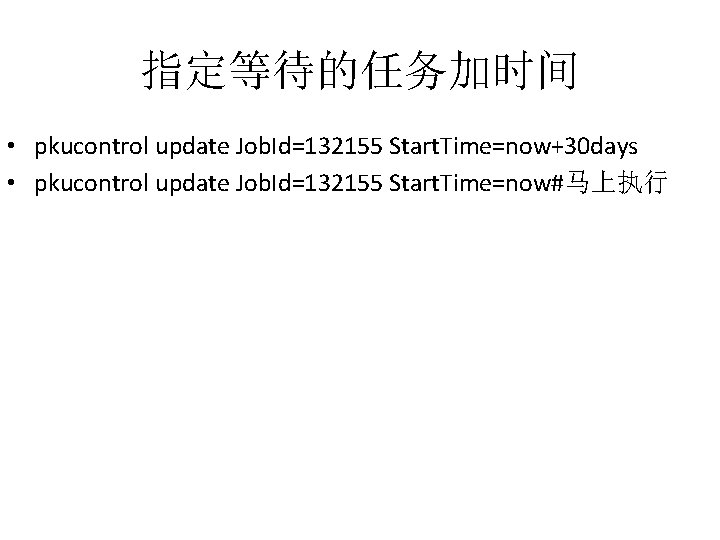

Postponing the start time of a pending job

• pkucontrol update JobId=132155 StartTime=now+30days
• pkucontrol update JobId=132155 StartTime=now  # run immediately

• SLURM has a job purging mechanism to remove inactive jobs:

    pkucontrol show config | grep InactiveLimit

• Why is my job not running?

    pkucontrol show config | grep SchedulerType

vncserver

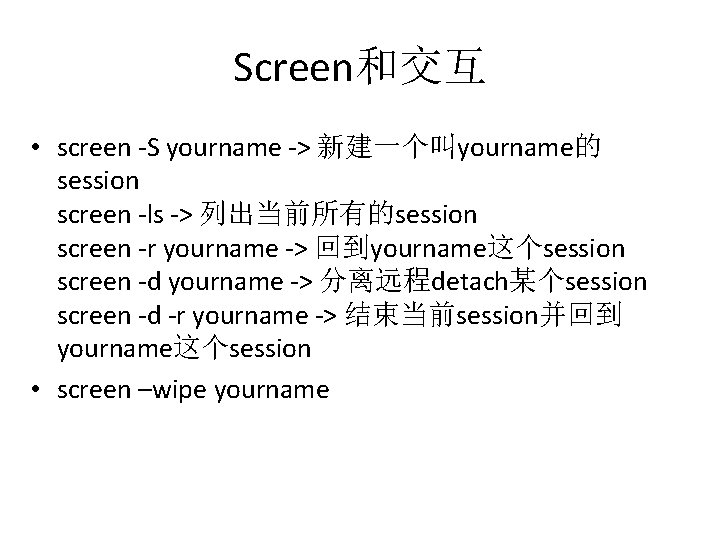

Screen and interactive sessions

• screen -S yourname -> create a new session named yourname
• screen -ls -> list all current sessions
• screen -r yourname -> return to the session yourname
• screen -d yourname -> remotely detach a session
• screen -d -r yourname -> detach yourname from wherever it is attached, then reattach to it here
• screen -wipe yourname

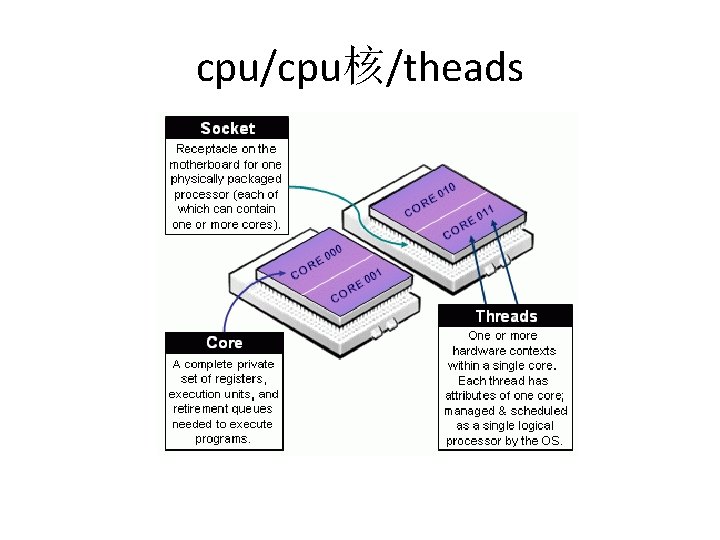

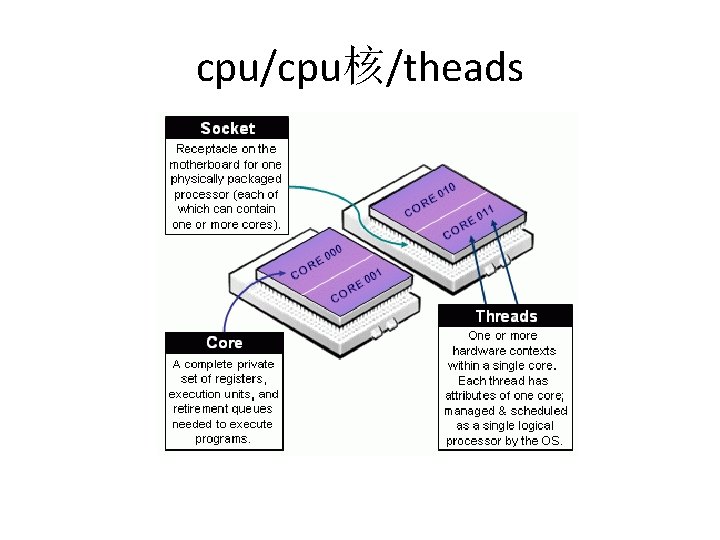

CPUs / CPU cores / threads

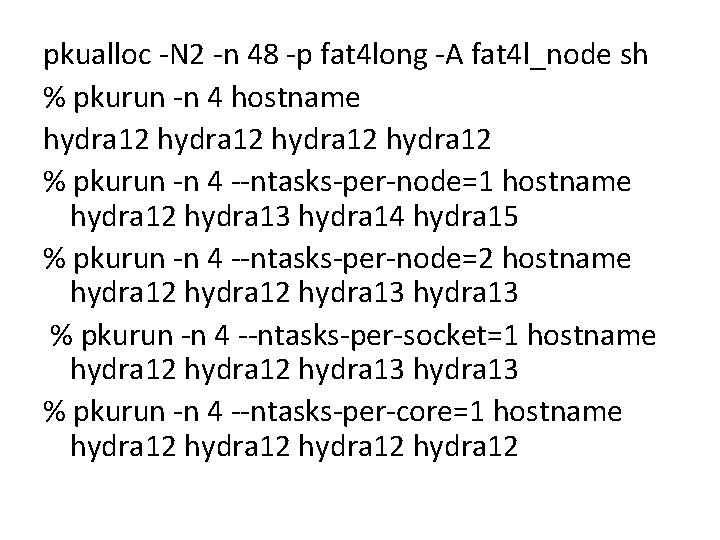

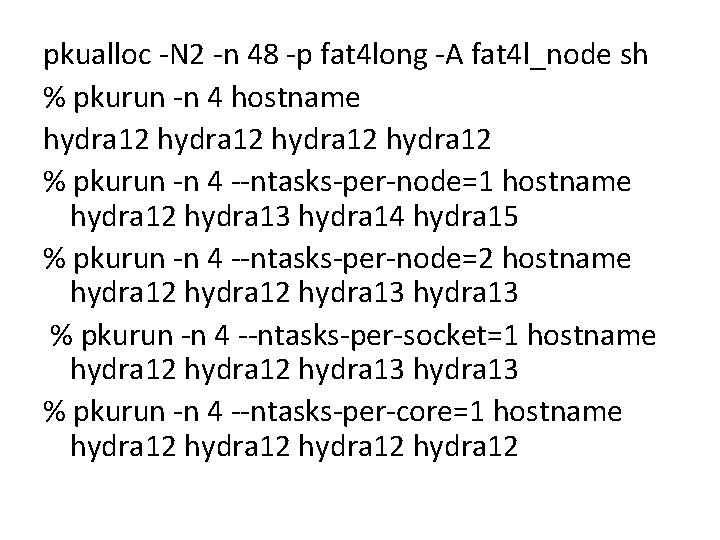

    pkualloc -N 2 -n 48 -p fat4long -A fat4l_node sh
    % pkurun -n 4 hostname
    hydra12
    % pkurun -n 4 --ntasks-per-node=1 hostname
    hydra12
    hydra13
    hydra14
    hydra15
    % pkurun -n 4 --ntasks-per-node=2 hostname
    hydra12
    hydra13
    % pkurun -n 4 --ntasks-per-socket=1 hostname
    hydra12
    hydra13
    % pkurun -n 4 --ntasks-per-core=1 hostname
    hydra12
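When comparing the placement options above, it helps to reduce the `hostname` output to a task count per node. The sketch below does that with standard tools; `tasks_per_node` is a hypothetical helper name, and the sample input is made up rather than taken from a real run.

```bash
#!/bin/bash
# Sketch: count how many tasks ran on each node, given one hostname
# per line on stdin (for example the output of "pkurun -n 4 hostname").
# tasks_per_node is a hypothetical helper name.
tasks_per_node() {
    # uniq -c prints "count host"; awk swaps that to "host count".
    sort | uniq -c | awk '{ print $2, $1 }'
}

# Sample input, made up for illustration.
printf '%s\n' hydra12 hydra12 hydra13 hydra13 | tasks_per_node
```

On the cluster the helper would be used as `pkurun -n 4 --ntasks-per-node=2 hostname | tasks_per_node`.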

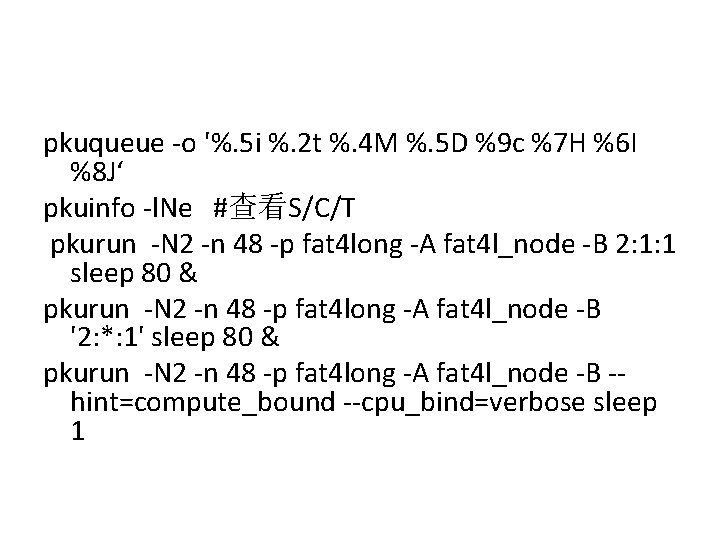

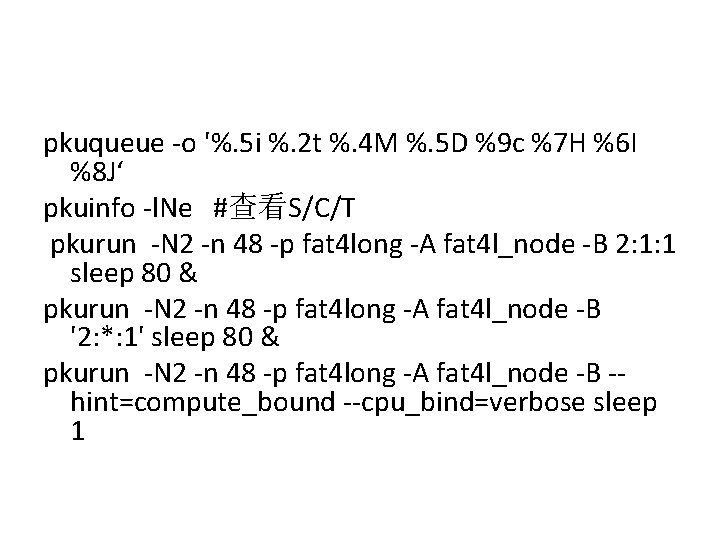

    pkuqueue -o '%.5i %.2t %.4M %.5D %9c %7H %6I %8J'
    pkuinfo -lNe  # show S/C/T (sockets/cores/threads)
    pkurun -N 2 -n 48 -p fat4long -A fat4l_node -B 2:1:1 sleep 80 &
    pkurun -N 2 -n 48 -p fat4long -A fat4l_node -B '2:*:1' sleep 80 &
    pkurun -N 2 -n 48 -p fat4long -A fat4l_node -B -hint=compute_bound --cpu_bind=verbose sleep 1
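The `-B` values above follow the `sockets[:cores[:threads]]` form used by `srun`, matching the S/C/T columns reported for each node. The sketch below splits such a value into its three fields. `parse_b_spec` is a hypothetical helper, and showing an omitted field as `*` is a choice made for this sketch, not documented scheduler behaviour.

```bash
#!/bin/bash
# Sketch: split a -B value of the form sockets[:cores[:threads]].
# parse_b_spec is a hypothetical helper; omitted fields are shown as "*".
parse_b_spec() {
    local sockets cores threads
    # Split on ":" into at most three fields.
    IFS=: read -r sockets cores threads <<< "$1"
    echo "sockets=${sockets:-*} cores=${cores:-*} threads=${threads:-*}"
}

parse_b_spec '2:1:1'
parse_b_spec '2:*:1'
```

Quoting the value, as in `-B '2:*:1'` above, keeps the shell from expanding `*` as a file name pattern before the command sees it.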