A comparison of K-fold and leave-one-out cross-validation of empirical keys

Alan D. Mead, IIT (mead@iit.edu)

What is “Keying”?

- Many selection tests do not have demonstrably correct answers
  - Biodata, SJT, some simulations, etc.
- Keying is the constructing of a valid key
  - What the “best” people answered is probably “correct”
  - Most approaches use a correlation, or something similar

Correlation approach

- Create 1-0 indicator variables for each response
- Correlate indicators with a criterion (e.g., job performance)
  - If r > .01, key = 1
  - If r < -.01, key = -1
  - Else, key = 0
- Little loss by using 1, 0, -1 key
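
The correlation approach above can be sketched in a few lines of Python. This is a minimal illustration, not the author's code: the function names (`pearson`, `build_key`, `score`), the data layout (one list of chosen option indices per person), and the toy data are all assumptions. Only the ±.01 thresholds come from the slide.

```python
import math

def pearson(x, y):
    """Pearson correlation; returns 0.0 if either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)

def build_key(responses, criterion, n_options, threshold=0.01):
    """Build a 1/0/-1 key from response-criterion correlations.

    responses[p][i] is the option index (0..n_options-1) that person p
    chose on item i (an assumed layout). Returns key[i][o].
    """
    n_items = len(responses[0])
    key = []
    for i in range(n_items):
        item_key = []
        for o in range(n_options):
            # 1-0 indicator: did this person pick option o on item i?
            indicator = [1 if person[i] == o else 0 for person in responses]
            r = pearson(indicator, criterion)
            if r > threshold:
                item_key.append(1)
            elif r < -threshold:
                item_key.append(-1)
            else:
                item_key.append(0)
        key.append(item_key)
    return key

def score(person, key):
    """Sum the keyed values of the options this person chose."""
    return sum(key[i][choice] for i, choice in enumerate(person))

# Toy data (hypothetical): 4 people, 2 items, 3 options per item.
responses = [[0, 1], [0, 2], [1, 1], [1, 0]]
criterion = [4.0, 5.0, 1.0, 2.0]

key = build_key(responses, criterion, n_options=3)
print(key)                                  # [[1, -1, 0], [-1, -1, 1]]
print([score(p, key) for p in responses])   # [0, 2, -2, -2]
```

An option nobody chose has a constant indicator, so its correlation is undefined; the sketch treats that case as r = 0 and keys it 0.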

How valid is my key?

- Now that I have a key, I want to compute a validity…
  - But I based my key on the responses of my “best” test-takers
  - Can/should I compute a validity in this sample?
  - No! Cureton (1967) showed that very high validities will result even for invalid keys
- What shall I do?
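
The capitalization-on-chance problem is easy to reproduce. The sketch below is an illustration under assumed settings (N=50, 35 items, four options, a fixed random seed), not a replication of Cureton's analysis. Responses and criterion are generated independently, so any key is invalid by construction, yet the validity computed in the keying sample comes out large.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation; returns 0.0 if either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)

def build_key(responses, criterion, n_options, threshold=0.01):
    """1/0/-1 key from the correlation of each option indicator with the criterion."""
    key = []
    for i in range(len(responses[0])):
        item_key = []
        for o in range(n_options):
            indicator = [1 if person[i] == o else 0 for person in responses]
            r = pearson(indicator, criterion)
            item_key.append(1 if r > threshold else -1 if r < -threshold else 0)
        key.append(item_key)
    return key

def score(person, key):
    """Sum the keyed values of the options this person chose."""
    return sum(key[i][choice] for i, choice in enumerate(person))

# Assumed settings for the demonstration.
rng = random.Random(0)
N, N_ITEMS, N_OPTIONS = 50, 35, 4

# Pure noise: responses carry no information about the criterion.
responses = [[rng.randrange(N_OPTIONS) for _ in range(N_ITEMS)] for _ in range(N)]
criterion = [rng.gauss(0, 1) for _ in range(N)]

# "Charge ahead": key and validity both come from the same sample.
key = build_key(responses, criterion, N_OPTIONS)
in_sample_r = pearson([score(p, key) for p in responses], criterion)
print(f"in-sample validity of a chance key: {in_sample_r:.2f}")
```

Because the true validity here is zero, whatever the script prints is entirely an artifact of scoring people with a key fitted to their own responses.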

Validation Approaches

- Charge ahead!
  - “Sure, .60 is an over-estimate; there will be shrinkage. But even half would still be substantial”
- Split my sample into “calibration” and “cross-validation” samples
  - Fine if you have a large N…
- Resample

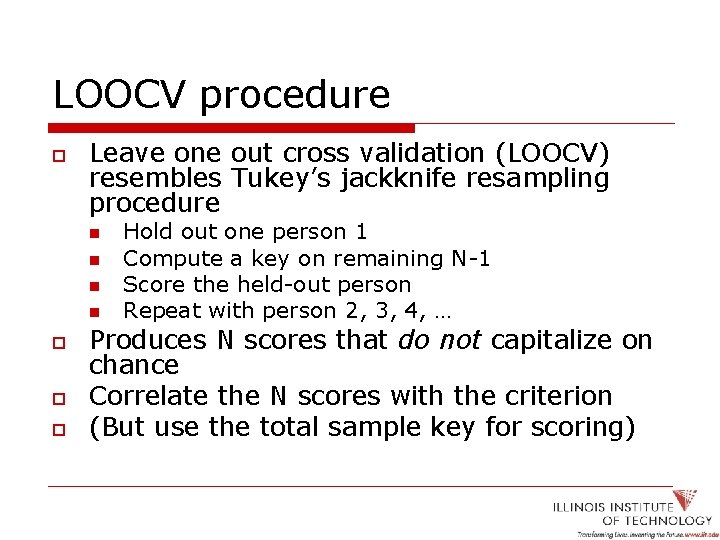

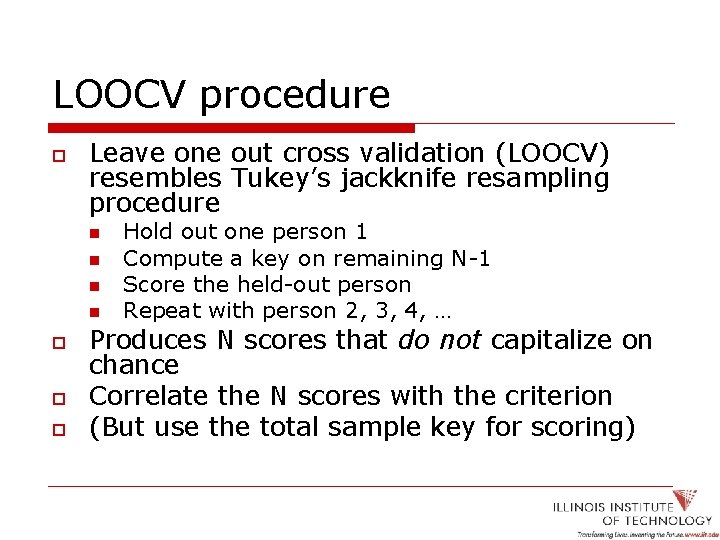

LOOCV procedure

- Leave-one-out cross-validation (LOOCV) resembles Tukey’s jackknife resampling procedure
  - Hold out person 1
  - Compute a key on the remaining N-1
  - Score the held-out person
- Repeat with person 2, 3, 4, …
- Produces N scores that do not capitalize on chance
- Correlate the N scores with the criterion
- (But use the total sample key for scoring)
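
The LOOCV steps above might look like this in Python. It is a sketch under assumptions: the helper names, data layout, and null (pure-noise) demo data are hypothetical. The final parenthetical is read here as "estimate validity with LOOCV, but score people operationally with the key built on the total sample", so the function returns both.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation; returns 0.0 if either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)

def build_key(responses, criterion, n_options, threshold=0.01):
    """1/0/-1 key from the correlation of each option indicator with the criterion."""
    key = []
    for i in range(len(responses[0])):
        item_key = []
        for o in range(n_options):
            indicator = [1 if person[i] == o else 0 for person in responses]
            r = pearson(indicator, criterion)
            item_key.append(1 if r > threshold else -1 if r < -threshold else 0)
        key.append(item_key)
    return key

def score(person, key):
    """Sum the keyed values of the options this person chose."""
    return sum(key[i][choice] for i, choice in enumerate(person))

def loocv_validity(responses, criterion, n_options):
    """Return (LOOCV validity estimate, held-out scores, total-sample key)."""
    n = len(responses)
    held_out_scores = []
    for p in range(n):
        # Key computed on the other N-1 people only.
        rest_resp = responses[:p] + responses[p + 1:]
        rest_crit = criterion[:p] + criterion[p + 1:]
        key = build_key(rest_resp, rest_crit, n_options)
        # Score the one person who had no influence on this key.
        held_out_scores.append(score(responses[p], key))
    estimate = pearson(held_out_scores, criterion)
    total_key = build_key(responses, criterion, n_options)
    return estimate, held_out_scores, total_key

# Hypothetical null data: responses are unrelated to the criterion.
rng = random.Random(0)
N, N_ITEMS, N_OPTIONS = 40, 10, 4
responses = [[rng.randrange(N_OPTIONS) for _ in range(N_ITEMS)] for _ in range(N)]
criterion = [rng.gauss(0, 1) for _ in range(N)]

loocv_r, scores, total_key = loocv_validity(responses, criterion, N_OPTIONS)
charge_ahead_r = pearson([score(p, total_key) for p in responses], criterion)
print(f"charge ahead: {charge_ahead_r:.2f}   LOOCV: {loocv_r:.2f}")
```

The cost is N key computations instead of one, which is negligible at the sample sizes discussed here.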

Mead & Drasgow, 2003

- Simulated test responses & criterion
- Three approaches
  - Charge ahead
  - LOOCV
  - True cross-validation
- Varying sample sizes:
  - N=50, 100, 200, 500, 1000

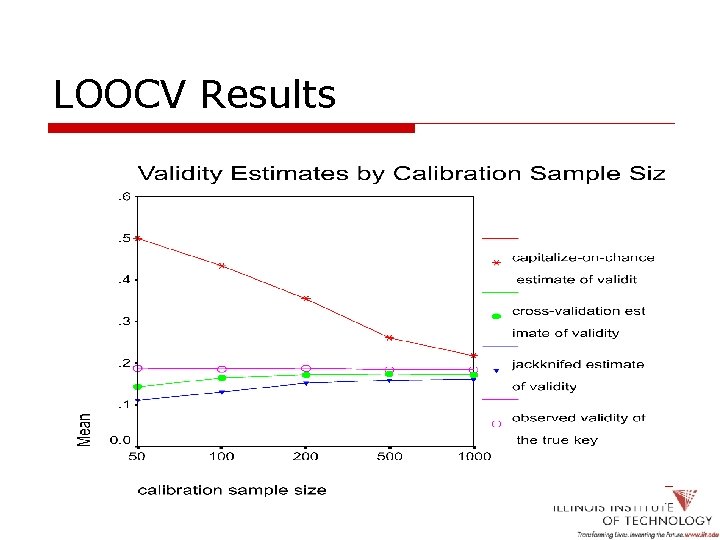

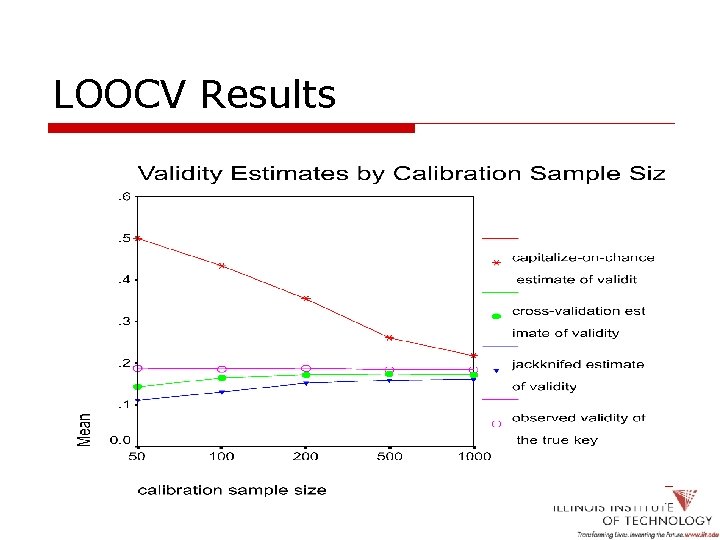

LOOCV Results

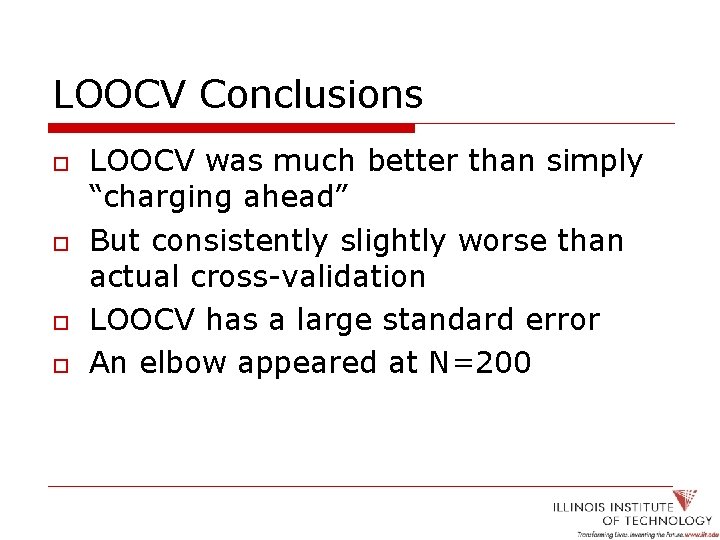

LOOCV Conclusions

- LOOCV was much better than simply “charging ahead”
- But consistently slightly worse than actual cross-validation
- LOOCV has a large standard error
- An elbow appeared at N=200

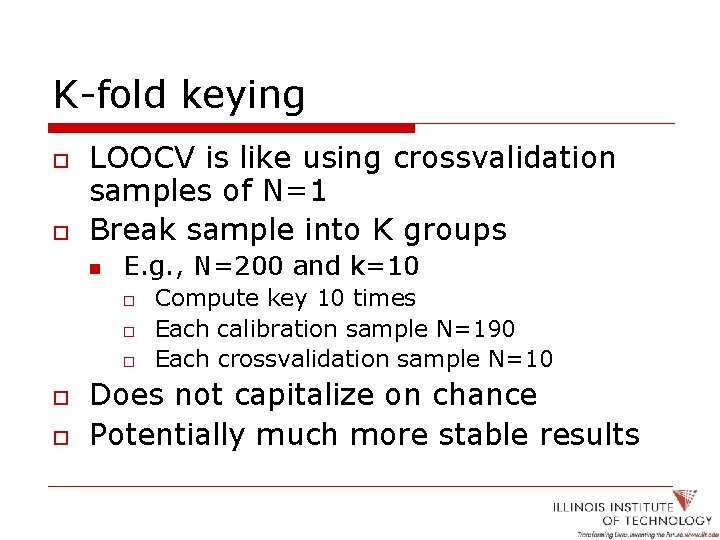

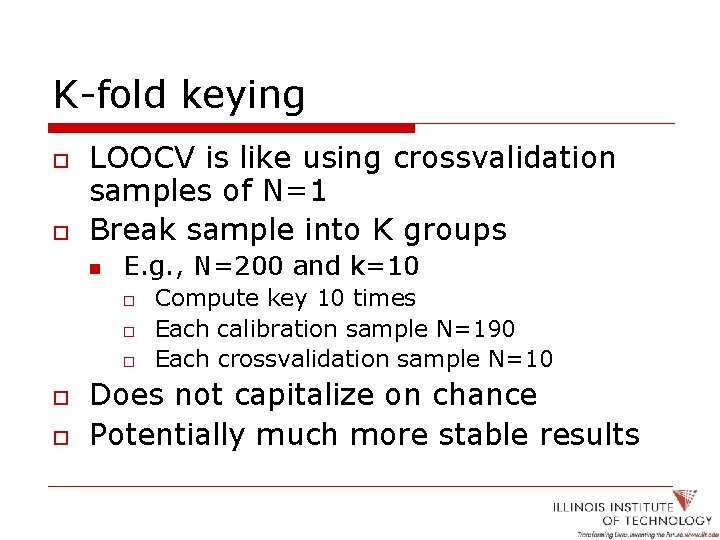

K-fold keying

- LOOCV is like using cross-validation samples of N=1
- Break sample into K groups
  - E.g., N=200 and k=10
    - Compute key 10 times
    - Each calibration sample N=180
    - Each cross-validation sample N=20
- Does not capitalize on chance
- Potentially much more stable results
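
A K-fold version of the same idea, as a hedged sketch: `make_folds`, `kfold_validity`, and the simulated data (a criterion that depends on how often option 0 is chosen, plus noise) are assumptions made for illustration and are not the simulation design used in the study. With N=200 and k=10, `make_folds` yields ten hold-out groups of 20 people; setting k=N reduces the procedure to LOOCV.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation; returns 0.0 if either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)

def build_key(responses, criterion, n_options, threshold=0.01):
    """1/0/-1 key from the correlation of each option indicator with the criterion."""
    key = []
    for i in range(len(responses[0])):
        item_key = []
        for o in range(n_options):
            indicator = [1 if person[i] == o else 0 for person in responses]
            r = pearson(indicator, criterion)
            item_key.append(1 if r > threshold else -1 if r < -threshold else 0)
        key.append(item_key)
    return key

def make_folds(n, k, rng):
    """Randomly partition person indices 0..n-1 into k hold-out groups."""
    order = list(range(n))
    rng.shuffle(order)
    return [order[f::k] for f in range(k)]

def kfold_validity(responses, criterion, n_options, k, rng):
    """Score each person with a key computed without their fold, then correlate."""
    n = len(responses)
    scores = [0] * n
    for held_out in make_folds(n, k, rng):
        held = set(held_out)
        calib = [p for p in range(n) if p not in held]
        key = build_key([responses[p] for p in calib],
                        [criterion[p] for p in calib], n_options)
        for p in held_out:
            scores[p] = sum(key[i][c] for i, c in enumerate(responses[p]))
    return pearson(scores, criterion)

# Hypothetical data with a real signal: choosing option 0 raises the criterion.
rng = random.Random(1)
N, N_ITEMS, N_OPTIONS = 200, 10, 4
responses = [[rng.randrange(N_OPTIONS) for _ in range(N_ITEMS)] for _ in range(N)]
criterion = [sum(1 for c in person if c == 0) + rng.gauss(0, 1)
             for person in responses]

estimate = kfold_validity(responses, criterion, N_OPTIONS, k=10, rng=rng)
print(f"10-fold validity estimate: {estimate:.2f}")
```

The folds are formed by shuffling and striding, so group sizes differ by at most one when k does not divide N evenly.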

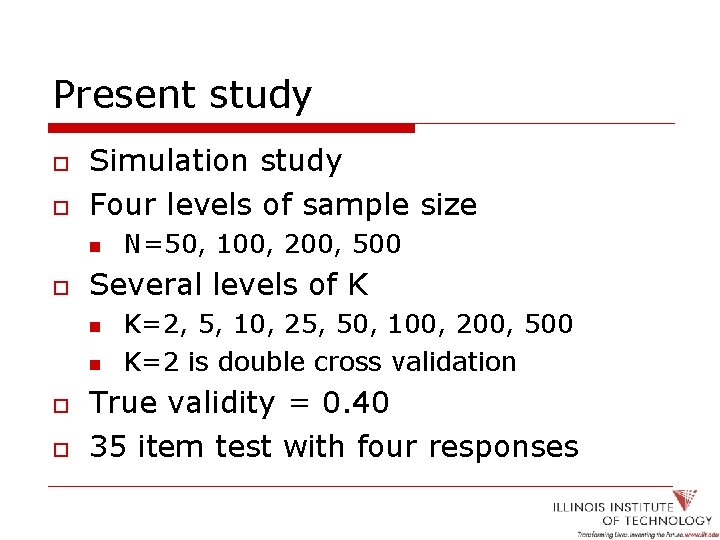

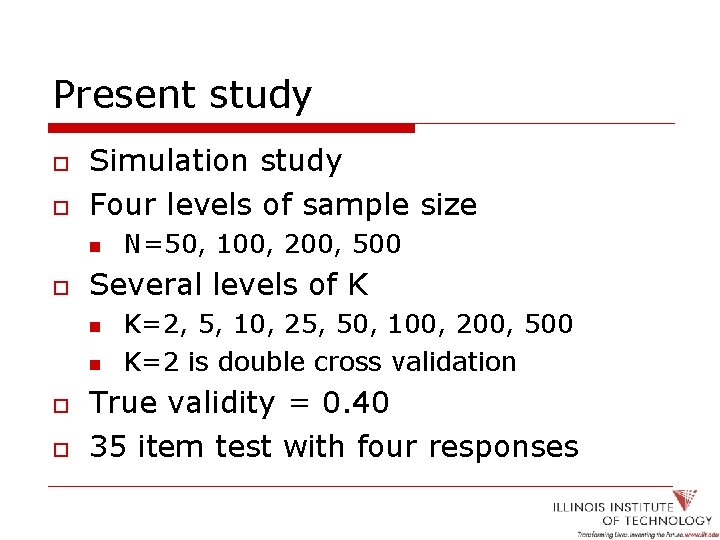

Present study

- Simulation study
- Four levels of sample size
  - N=50, 100, 200, 500
- Several levels of K
  - K=2, 5, 10, 25, 50, 100, 200, 500
  - K=2 is double cross-validation
- True validity = 0.40
- 35-item test with four responses

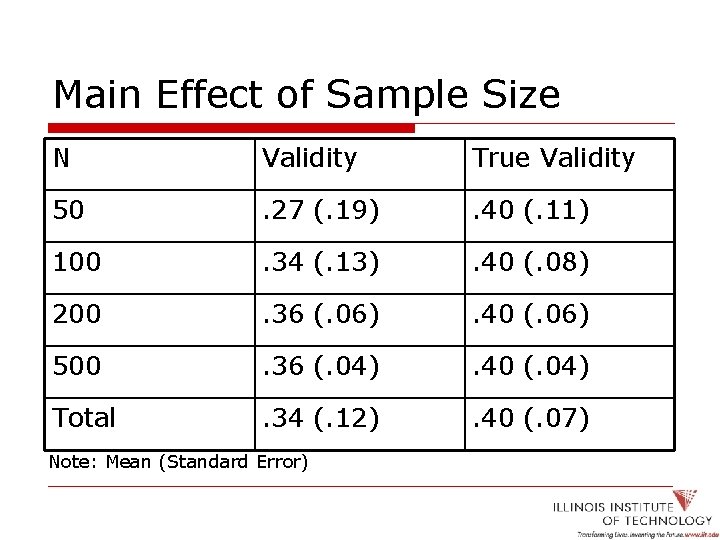

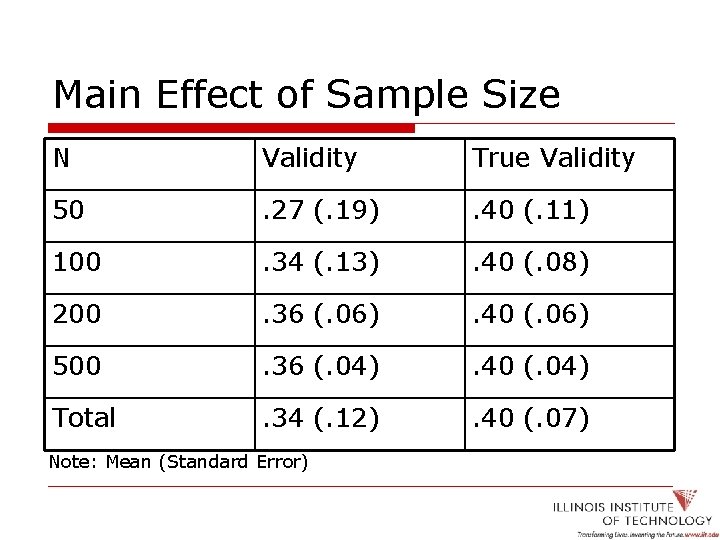

Main Effect of Sample Size

| N | Validity | True Validity |
|---|---|---|
| 50 | .27 (.19) | .40 (.11) |
| 100 | .34 (.13) | .40 (.08) |
| 200 | .36 (.06) | .40 (.06) |
| 500 | .36 (.04) | .40 (.04) |
| Total | .34 (.12) | .40 (.07) |

Note: Mean (Standard Error)

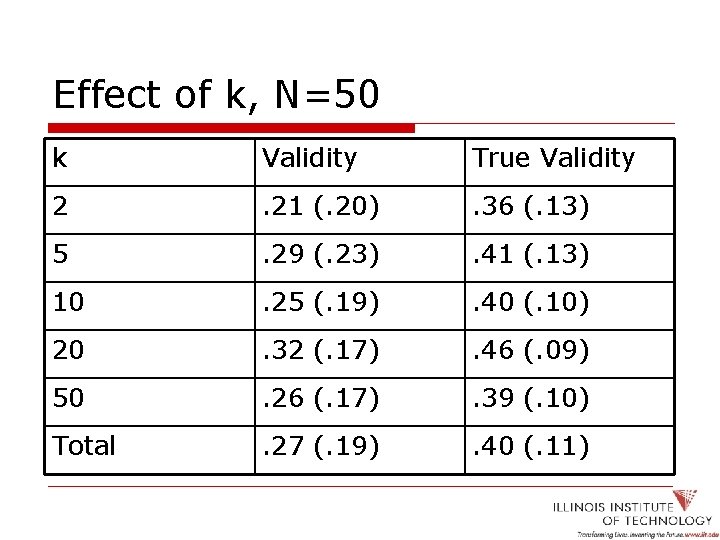

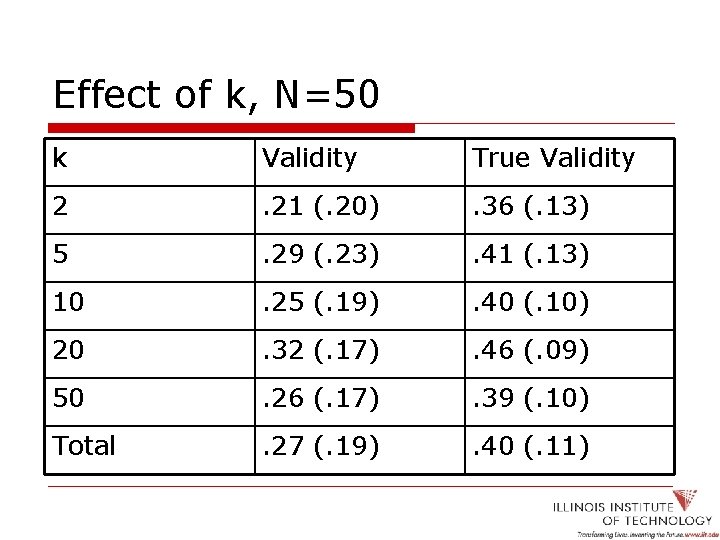

Effect of k, N=50

| k | Validity | True Validity |
|---|---|---|
| 2 | .21 (.20) | .36 (.13) |
| 5 | .29 (.23) | .41 (.13) |
| 10 | .25 (.19) | .40 (.10) |
| 20 | .32 (.17) | .46 (.09) |
| 50 | .26 (.17) | .39 (.10) |
| Total | .27 (.19) | .40 (.11) |

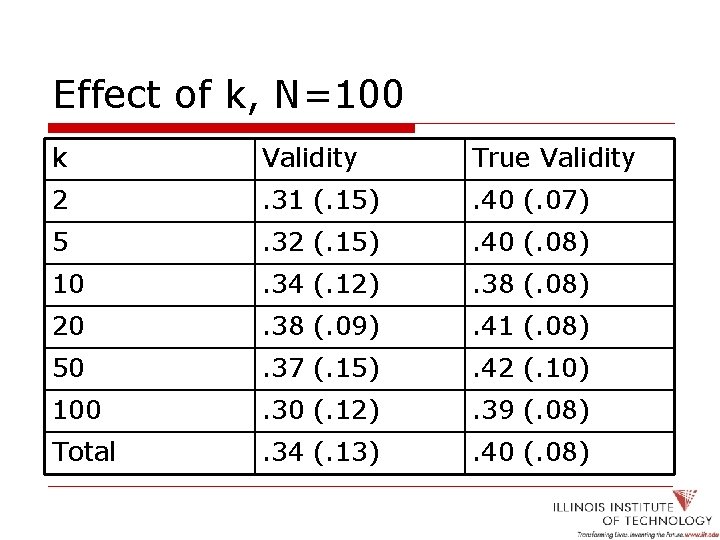

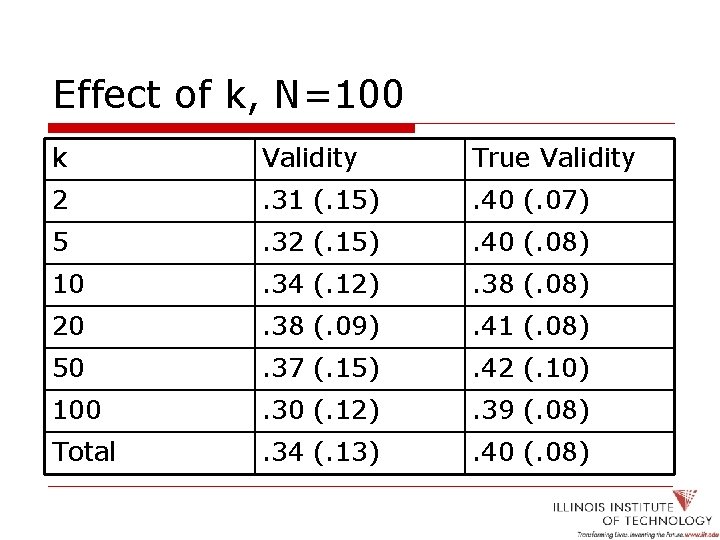

Effect of k, N=100

| k | Validity | True Validity |
|---|---|---|
| 2 | .31 (.15) | .40 (.07) |
| 5 | .32 (.15) | .40 (.08) |
| 10 | .34 (.12) | .38 (.08) |
| 20 | .38 (.09) | .41 (.08) |
| 50 | .37 (.15) | .42 (.10) |
| 100 | .30 (.12) | .39 (.08) |
| Total | .34 (.13) | .40 (.08) |

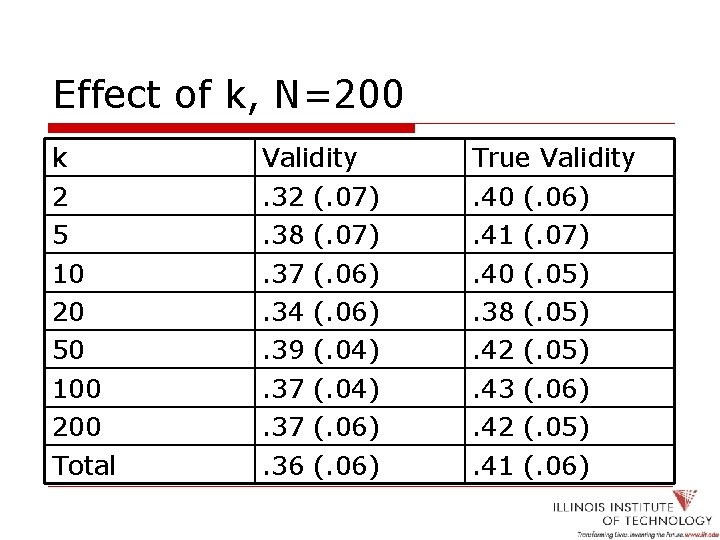

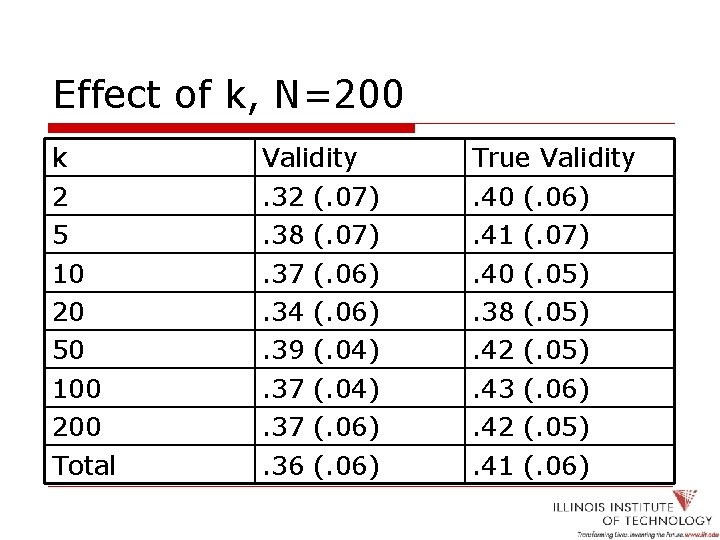

Effect of k, N=200

- k: 2, 5, 10, 20, 50, 100, 200, Total
- Validity: .32 (.07), .38 (.07), .37 (.06), .34 (.06), .39 (.04), .37 (.06), .36 (.06)
- True Validity: .40 (.06), .41 (.07), .40 (.05), .38 (.05), .42 (.05), .43 (.06), .42 (.05), .41 (.06)

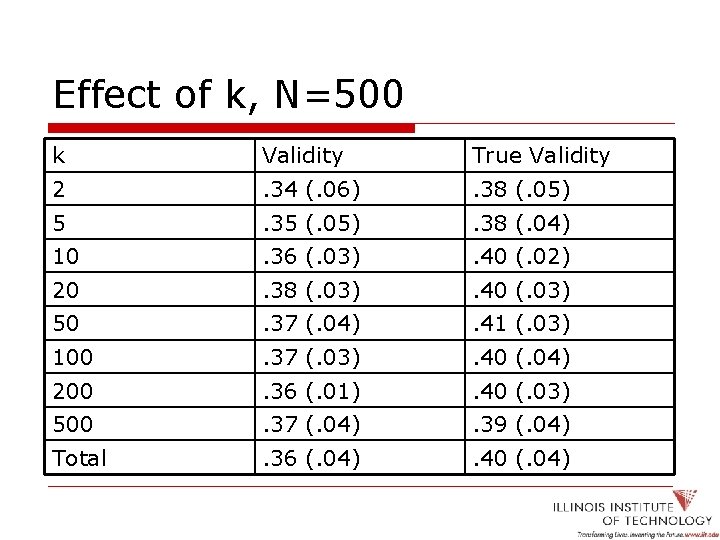

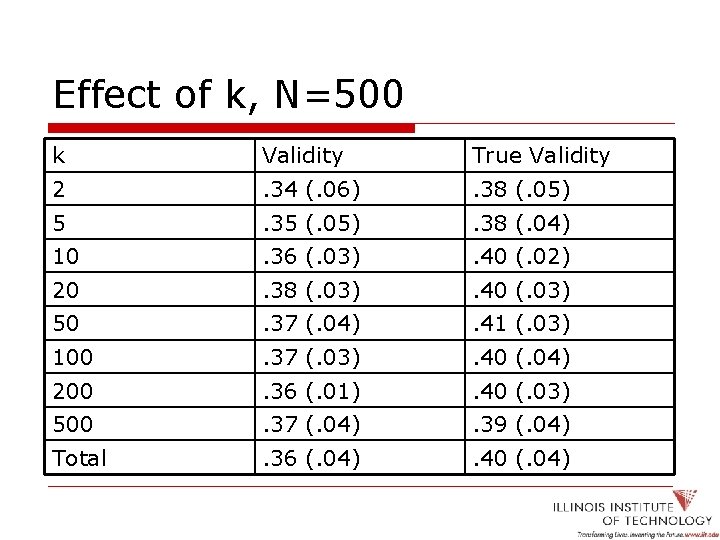

Effect of k, N=500

| k | Validity | True Validity |
|---|---|---|
| 2 | .34 (.06) | .38 (.05) |
| 5 | .35 (.05) | .38 (.04) |
| 10 | .36 (.03) | .40 (.02) |
| 20 | .38 (.03) | .40 (.03) |
| 50 | .37 (.04) | .41 (.03) |
| 100 | .37 (.03) | .40 (.04) |
| 200 | .36 (.01) | .40 (.03) |
| 500 | .37 (.04) | .39 (.04) |
| Total | .36 (.04) | .40 (.04) |

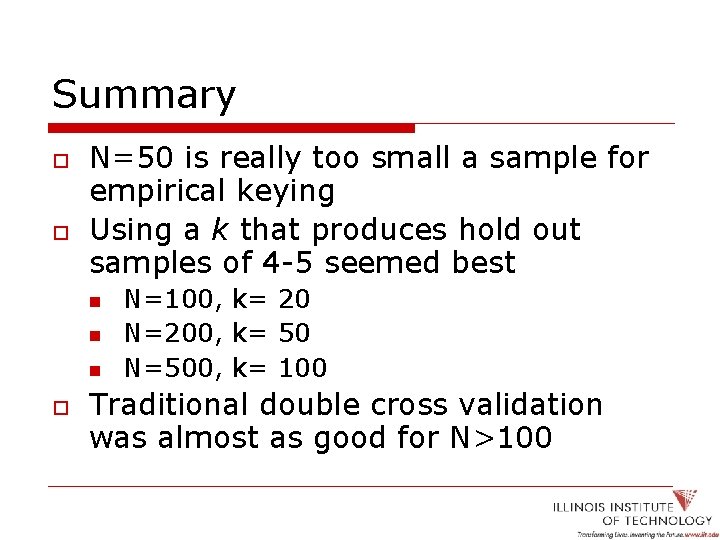

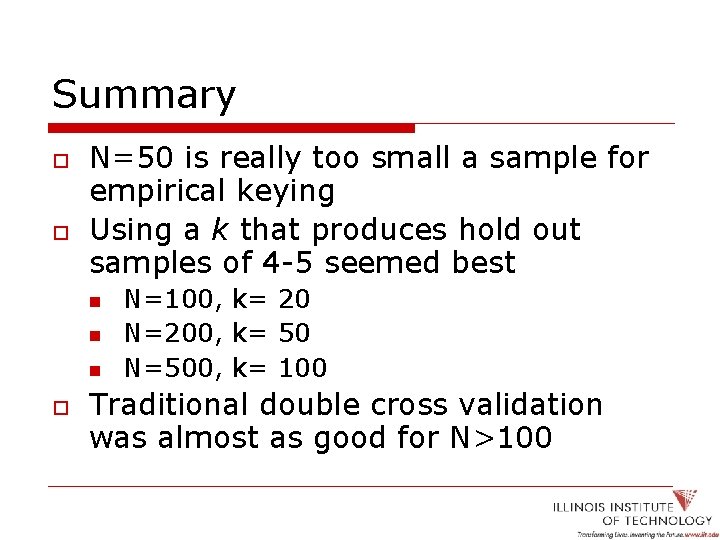

Summary

- N=50 is really too small a sample for empirical keying
- Using a k that produces hold-out samples of 4-5 seemed best
  - N=100, k=20
  - N=200, k=50
  - N=500, k=100
- Traditional double cross-validation was almost as good for N>100
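
The "hold-out samples of 4-5" rule is simple arithmetic: the hold-out size is N / k. The helper below is a hypothetical convenience, not part of the study, that turns a target hold-out size into a number of folds and checks the three recommended combinations.

```python
def k_for_holdout(n, holdout_size):
    """Number of folds giving hold-out groups of about `holdout_size` people.

    Hypothetical helper; never returns fewer than 2 folds.
    """
    return max(2, n // holdout_size)

# The three combinations recommended in the summary.
recommended = [(100, 20), (200, 50), (500, 100)]
holdout_sizes = [n // k for n, k in recommended]
print(holdout_sizes)            # [5, 4, 5]

print(k_for_holdout(100, 5))    # 20
print(k_for_holdout(200, 4))    # 50
print(k_for_holdout(500, 5))    # 100
```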

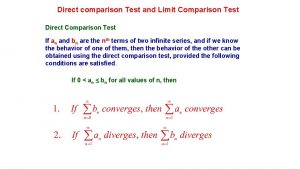
