Introduction to concepts in deep learning and cross-validation


Rick Klein, Université Grenoble Alpes


Preamble
○ We are not very efficient
○ We are remarkably inefficient
  □ Developing theory when a finding has a 50/50 chance of replicating
  □ Convinced that false positives contribute
○ And yet, despite this, we’ve learned a ton about human behavior and the brain
○ Imagine if we fixed it!


Preamble
○ 2012 -> Kate Ratliff (UF) new lab
  □ First/only PhD student
  □ Lab protocol
○ 2014 -> Many Labs 1 published
  □ Totally revise lab protocol
○ 2018 -> Hans IJzerman (UGA) new lab
  □ First/only postdoc
  □ Establish lab workflow (corelab.io)
○ "Final.docx?"

Preamble
○ Leave the workflow talk to Frederik
○ Talk about exciting possibilities
  □ At least one solid one (guilt-free p-hacking!)
○ Suspend disbelief
  □ Don’t necessarily try to integrate this with your current research paradigm
  □ Exposure + a different way of thinking
○ These tools have revolutionized other fields

Preamble
○ …Will also show immediately useful tools
  □ E.g., how to do exploratory/secondary data analysis with confidence
  □ (Half this talk is “things I accidentally learned from data scientists that are actually super helpful”)
○ Perhaps different/complementary approaches

Workshop Goals
○ Machine learning/deep learning intro
○ Lessons from computer science
  □ Cross-validation + prediction
  □ R/RStudio + reproducible code
  □ GitHub + collaboration/exposure
  (But I’m not a computer scientist!)
○ Make machine learning accessible
  □ Incentive to learn R
  □ Fun hands-on examples

Caveats
○ Don’t forget:
  □ Replication
  □ Pre-registration
  □ Increasing statistical power (+ collaborations)
  □ Open data/materials/code

What is Machine Learning?
○ Machine learning
  □ Broad field
  □ Humans define inputs/structure/outputs
  □ Computer “learns” parameters
○ Three broad categories:
  □ Supervised learning
  □ Unsupervised learning
  □ Reinforcement learning

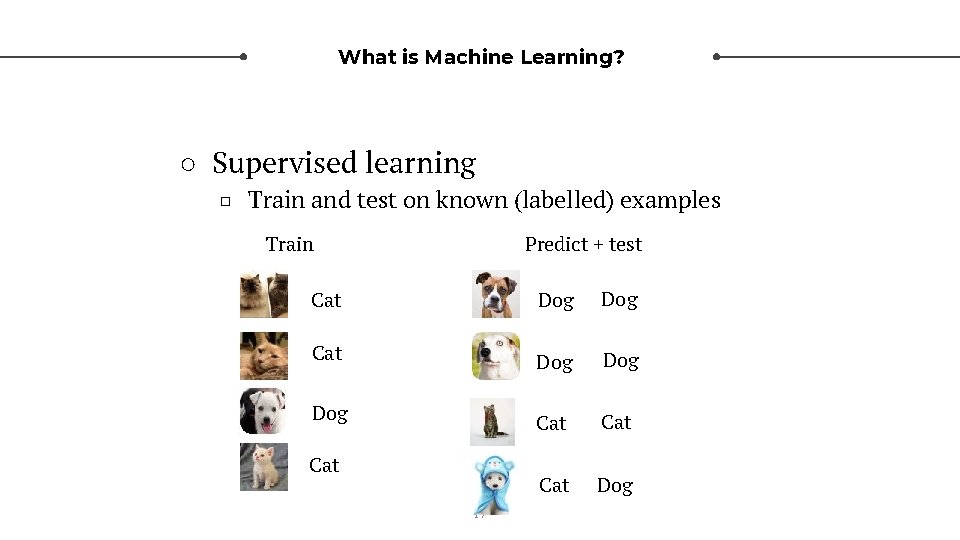

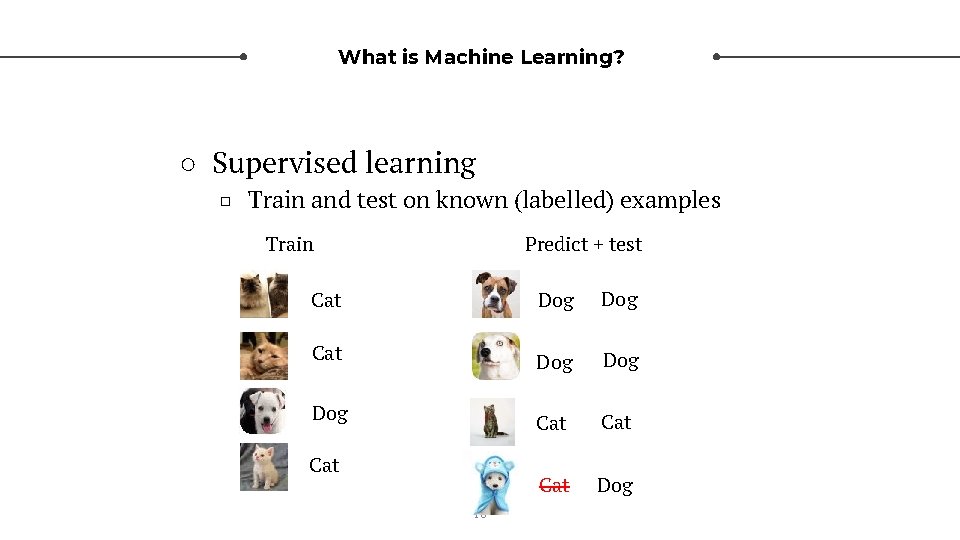

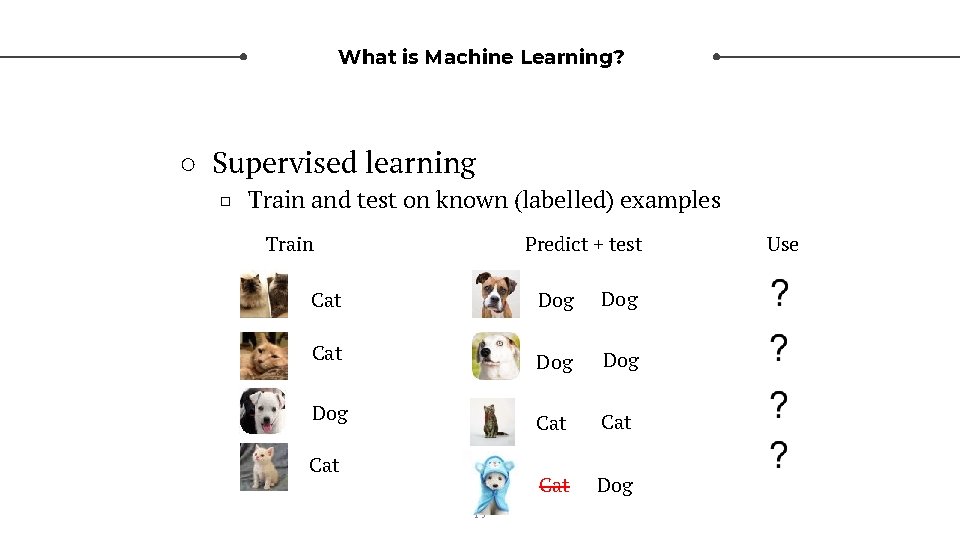

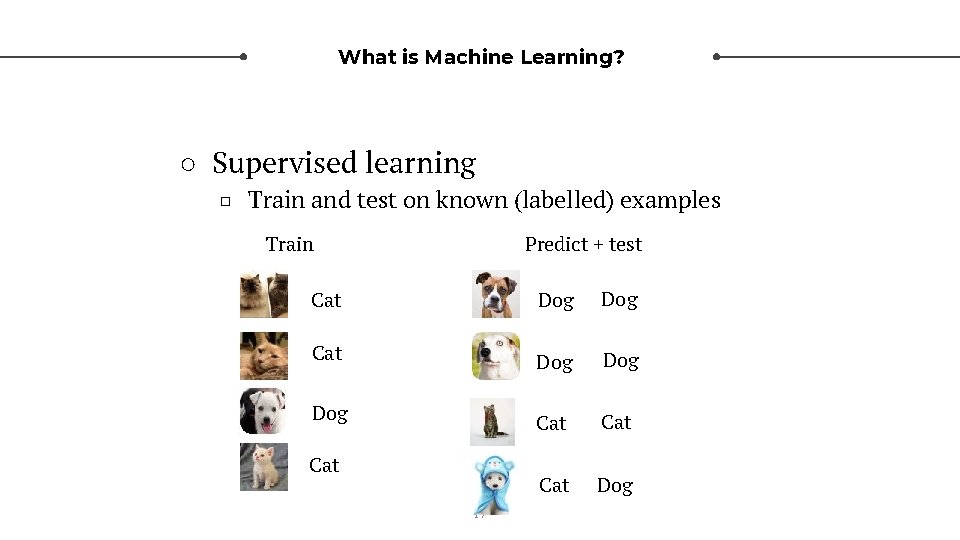

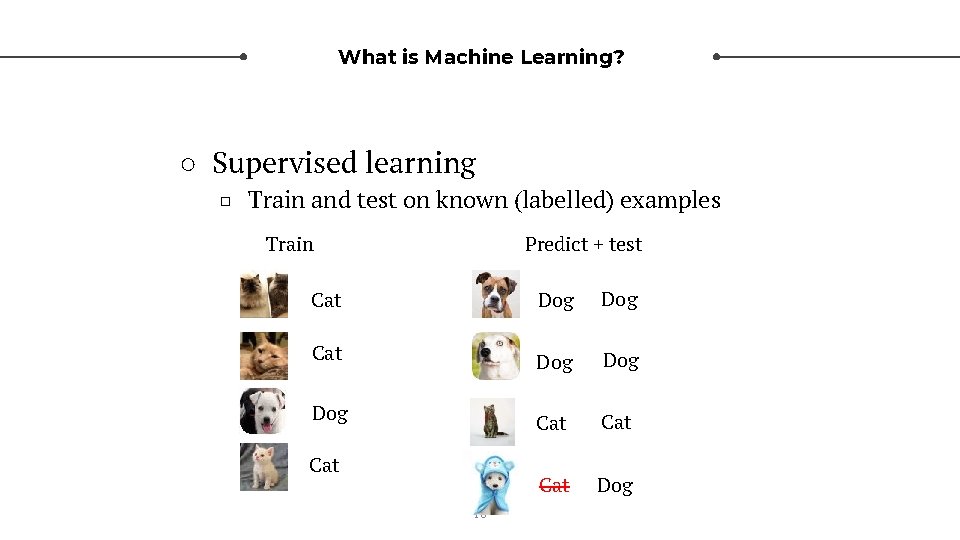

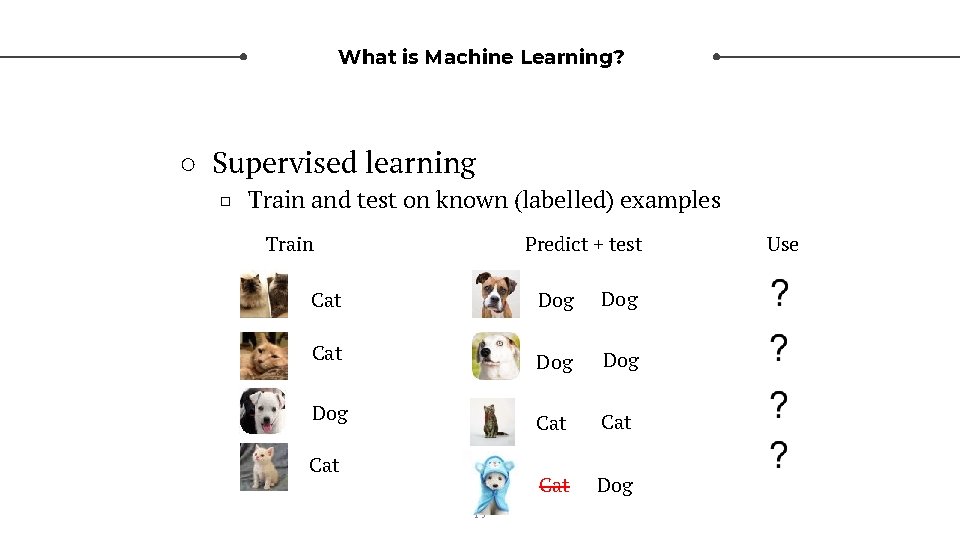


What is Machine Learning?
○ Supervised learning
  □ Train and test on known (labelled) examples
[Diagram: Train -> Predict + test -> Use, with example images labelled “Cat” and “Dog”]
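Here is a minimal Python sketch of the train -> predict + test cycle. It assumes each image has already been reduced to a numeric feature vector. The two-number “features”, the Cat/Dog toy data, and the nearest-centroid classifier are illustrative assumptions, not the method used in the workshop tutorials.

```python
# Supervised learning in miniature: fit on labelled examples ("Train"), then
# predict labels for held-out labelled examples and score them ("Predict + test").
# The 2-number feature vectors and Cat/Dog labels are hypothetical toy data.
from statistics import mean


def fit_centroids(features, labels):
    """Learn one average feature vector (centroid) per label."""
    centroids = {}
    for label in set(labels):
        rows = [f for f, y in zip(features, labels) if y == label]
        centroids[label] = [mean(col) for col in zip(*rows)]
    return centroids


def predict(centroids, x):
    """Assign x the label whose centroid is closest (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda label: sum((a - c) ** 2 for a, c in zip(x, centroids[label])),
    )


train_x = [[1.0, 1.2], [0.8, 1.0], [3.0, 3.1], [3.2, 2.9]]
train_y = ["Cat", "Cat", "Dog", "Dog"]
test_x = [[0.9, 1.1], [3.1, 3.0]]
test_y = ["Cat", "Dog"]

model = fit_centroids(train_x, train_y)                              # Train
preds = [predict(model, x) for x in test_x]                          # Predict
accuracy = sum(p == y for p, y in zip(preds, test_y)) / len(test_y)  # Test
print(preds, accuracy)
```

Only after the test score looks good would the model move on to the “Use” stage, labelling new, unlabelled images.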

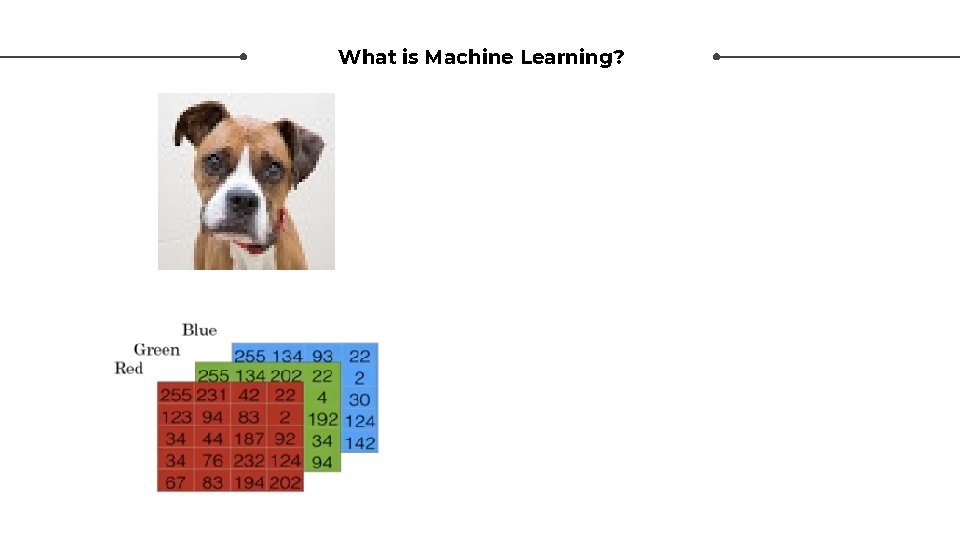

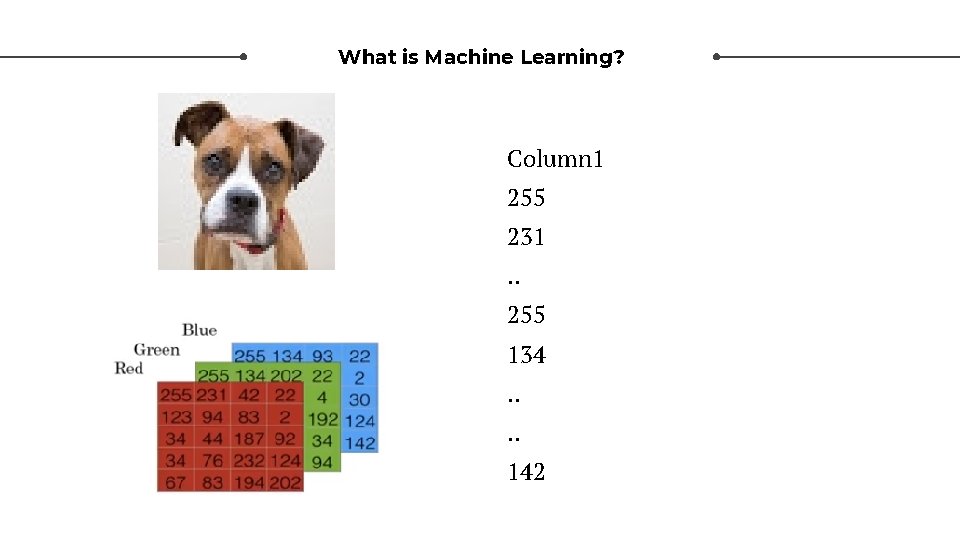

What is Machine Learning?

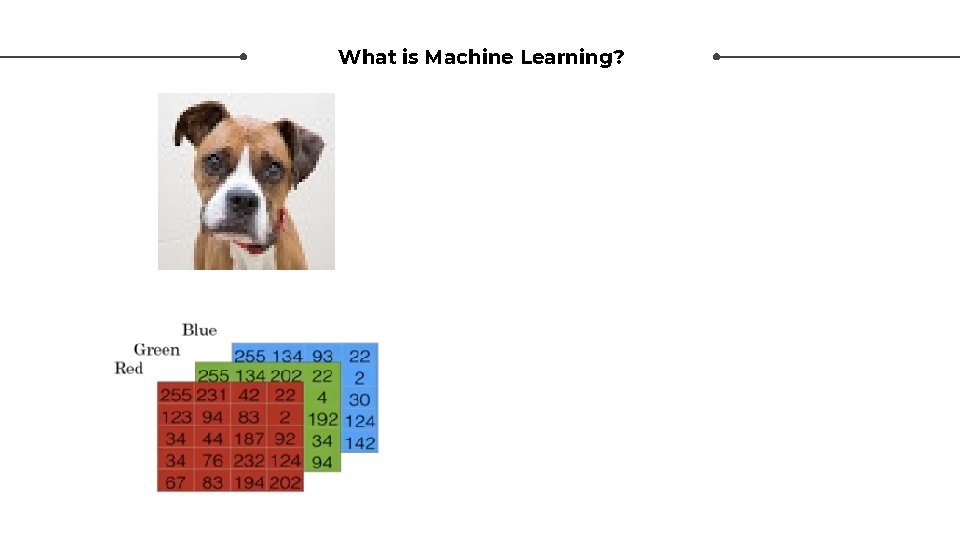

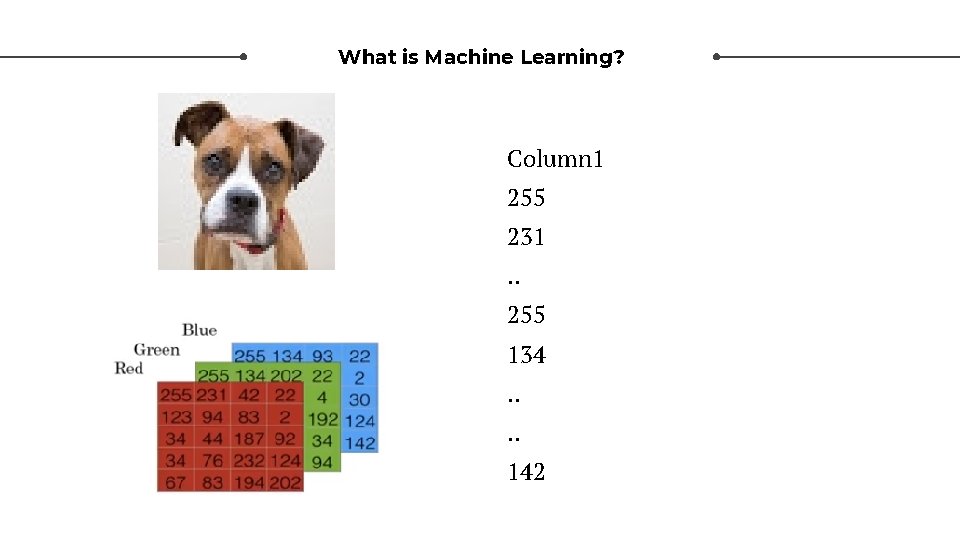


What is Machine Learning?
[Figure: an image represented as a single column of pixel values (Column 1: 255, 231, …, 255, 134, …, 142)]
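To make the “image as a column of numbers” idea concrete, here is a hedged sketch of flattening a grayscale image into one column of inputs. The 3x3 pixel values are made up, with a few echoing the numbers on the slide. Dividing by 255 is a common but optional rescaling.

```python
# A grayscale image is a grid of pixel intensities (0 = black, 255 = white).
# Flattening it row by row gives one column of numbers: one input per pixel.
# The 3x3 values below are made up for illustration.
image = [
    [255, 231, 200],
    [255, 134, 90],
    [120, 60, 142],
]

column = [pixel for row in image for pixel in row]  # row-major flatten: 9 inputs
scaled = [pixel / 255 for pixel in column]          # optional rescale to 0..1

print(len(column), column[:3], column[-1])
```

The same flattening turns a 28x28 image into 28 * 28 = 784 inputs.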

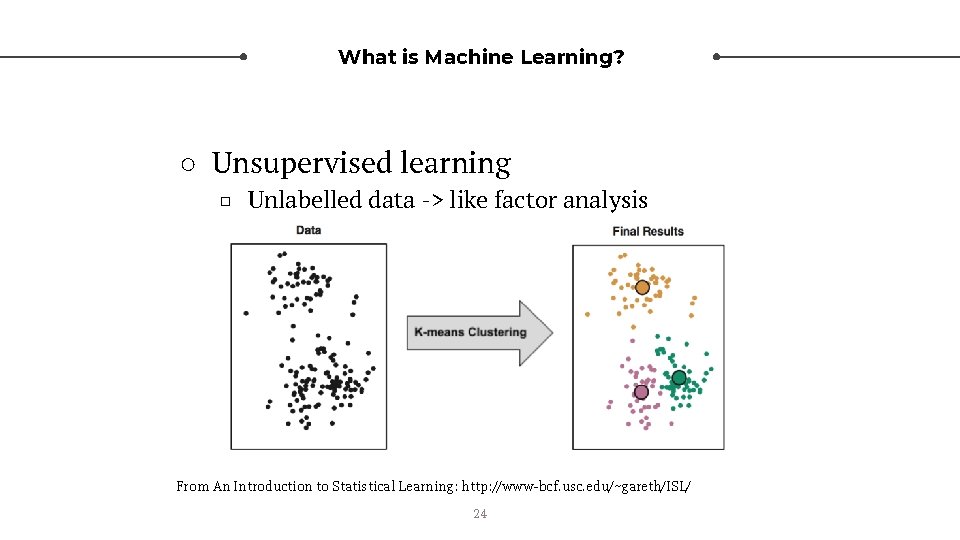

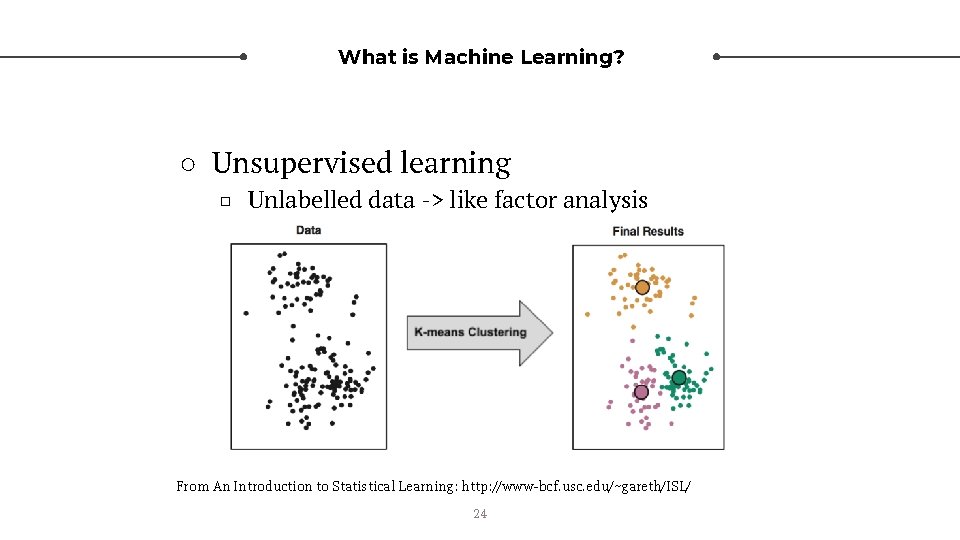


What is Machine Learning?
○ Unsupervised learning
  □ Unlabelled data -> like factor analysis
Figure from An Introduction to Statistical Learning: http://www-bcf.usc.edu/~gareth/ISL/
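Factor analysis groups variables. A common unsupervised method that instead groups observations is k-means clustering, shown here as a swapped-in illustration; the slide’s figure may show a different technique. The points and starting centers below are hypothetical.

```python
# Minimal k-means clustering: no labels are given; the algorithm discovers
# groups by alternating two steps until the centers stop moving.
import math


def kmeans(points, centers, max_iter=100):
    """Cluster 2-D points around the given starting centers."""
    clusters = []
    for _ in range(max_iter):
        # 1) Assignment step: each point joins its nearest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # 2) Update step: move each center to the mean of its points
        #    (keep the old center if a cluster is empty).
        new_centers = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centers[i]
            for i, cl in enumerate(clusters)
        ]
        if new_centers == centers:
            break
        centers = new_centers
    return centers, clusters


points = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (9, 8.5), (8.5, 9)]
centers, clusters = kmeans(points, centers=[(0, 0), (10, 10)])
print(centers)
```

Unlike in the supervised example, nothing tells the algorithm what the groups mean. Interpreting them is left to the analyst, much as with factors.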

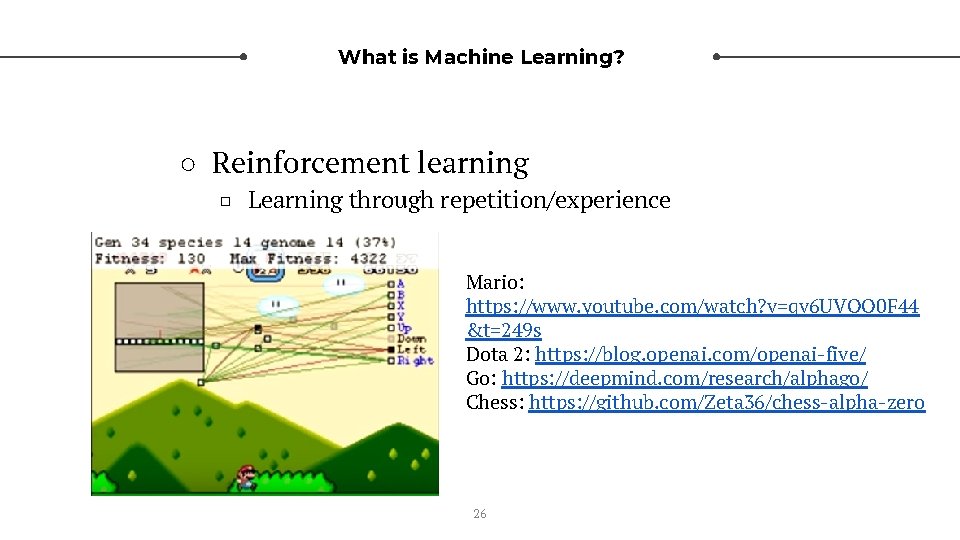


What is Machine Learning?
○ Reinforcement learning
  □ Learning through repetition/experience
○ Examples:
  □ Mario: https://www.youtube.com/watch?v=qv6UVOQ0F44&t=249s
  □ Dota 2: https://blog.openai.com/openai-five/
  □ Go: https://deepmind.com/research/alphago/
  □ Chess: https://github.com/Zeta36/chess-alpha-zero
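The simplest reinforcement-learning setting is a multi-armed bandit: there are no labels, only rewards from repeated tries. The sketch below uses epsilon-greedy action selection and is far simpler than the game-playing systems linked above. The payout probabilities, epsilon = 0.1, and step count are assumptions chosen for illustration.

```python
# Learning through repetition in its simplest form: a "multi-armed bandit".
# The agent repeatedly picks one of three arms, sees a reward (1 or 0), and
# updates its running estimate of each arm's value. Nobody labels which arm
# is best; it has to find out by trying.
# The payout probabilities, epsilon, and number of steps are hypothetical.
import random


def run_bandit(true_probs, steps=5000, epsilon=0.1, seed=1):
    rng = random.Random(seed)
    n_arms = len(true_probs)
    estimates = [0.0] * n_arms  # learned value of each arm
    counts = [0] * n_arms       # how often each arm has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                           # explore
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = 1.0 if rng.random() < true_probs[arm] else 0.0
        counts[arm] += 1
        # Running average: move the estimate toward the latest reward.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


estimates, counts = run_bandit([0.2, 0.5, 0.8])
best = max(range(3), key=lambda a: estimates[a])
print(best, [round(e, 2) for e in estimates], counts)
```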

What is Machine Learning?
○ Deep learning = artificial neural networks
  □ A specific sub-type of machine learning (kind of)
  □ Can also have a “shallow” neural network
  □ Today, focus on supervised learning
○ Is this “AI”?
  □ Not “general” intelligence

Deep Learning is Amazing
○ Self-driving cars
  □ https://selfdrivingcars.mit.edu/
○ Facial recognition
○ Siri/Cortana
○ Google Translate
  □ Uses neural networks as of Sept 2016
○ Which ads to show users

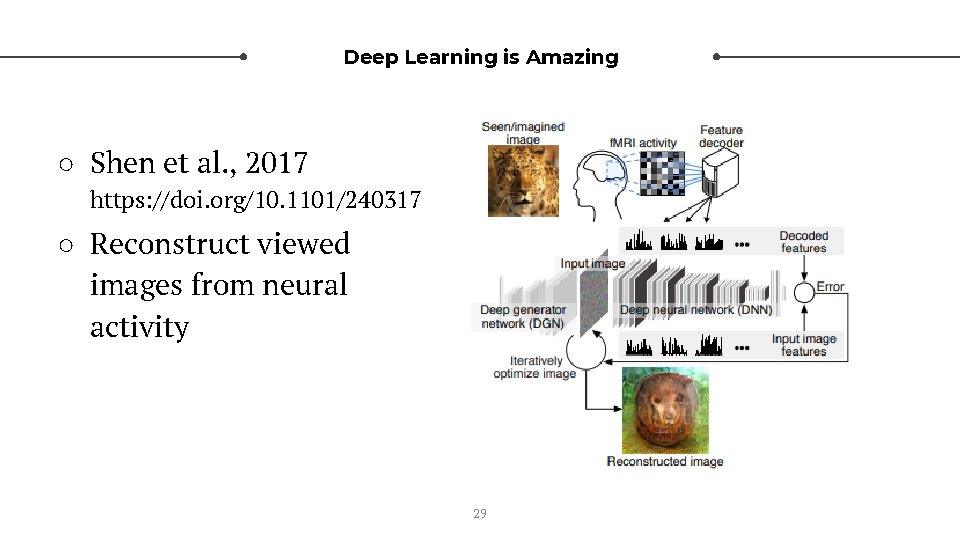

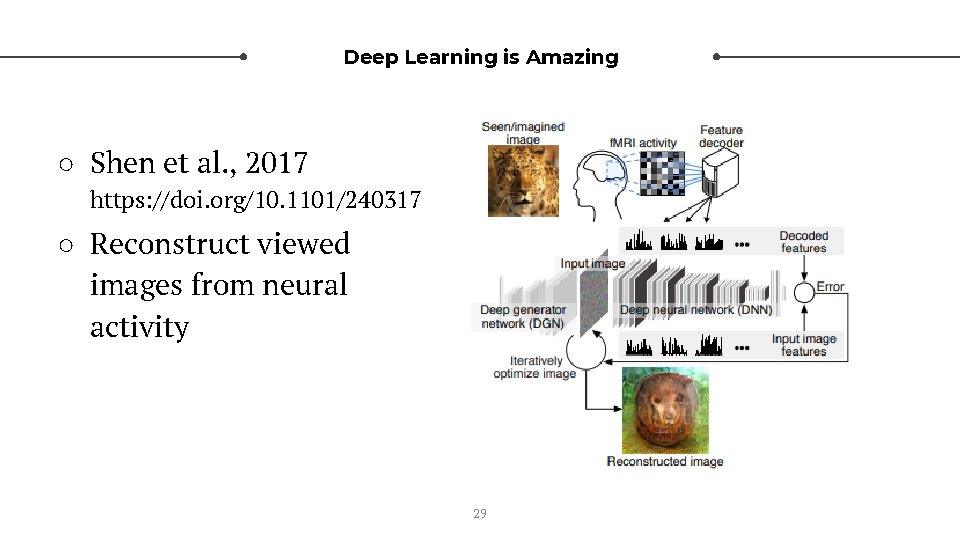

Deep Learning is Amazing
○ Shen et al., 2017 (https://doi.org/10.1101/240317)
  □ Reconstruct viewed images from neural activity

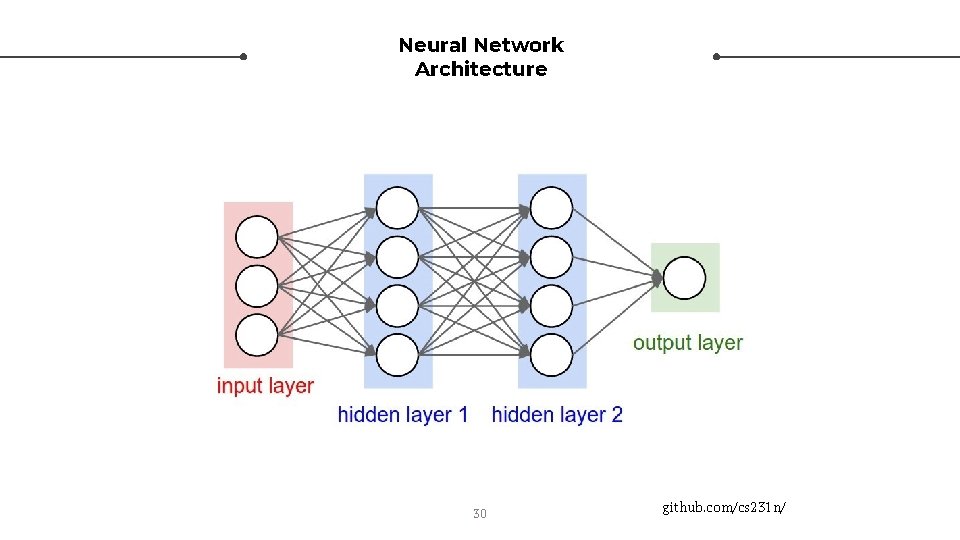

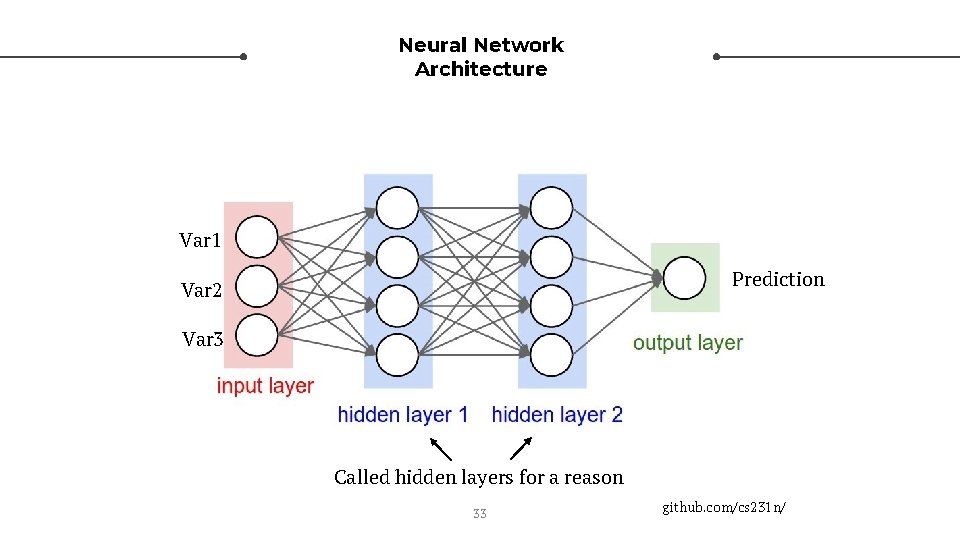

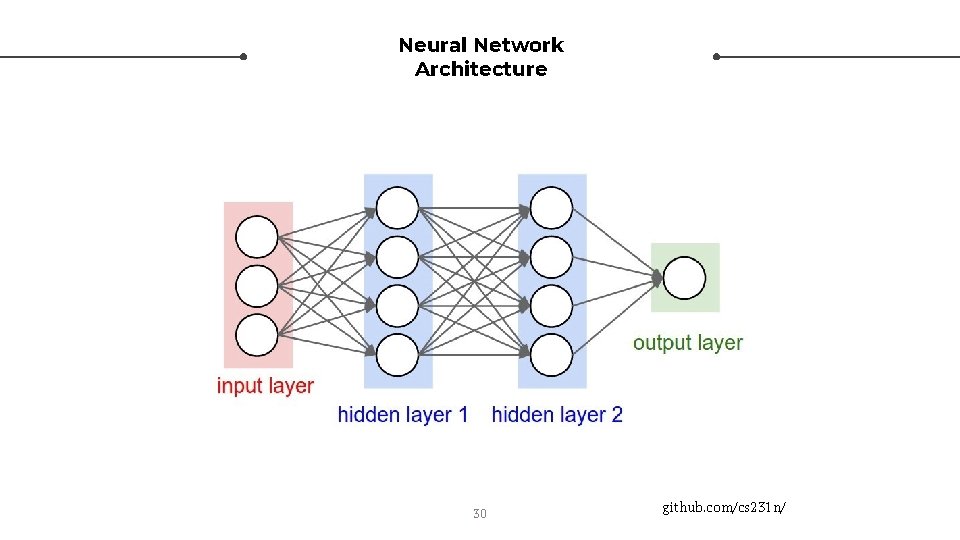

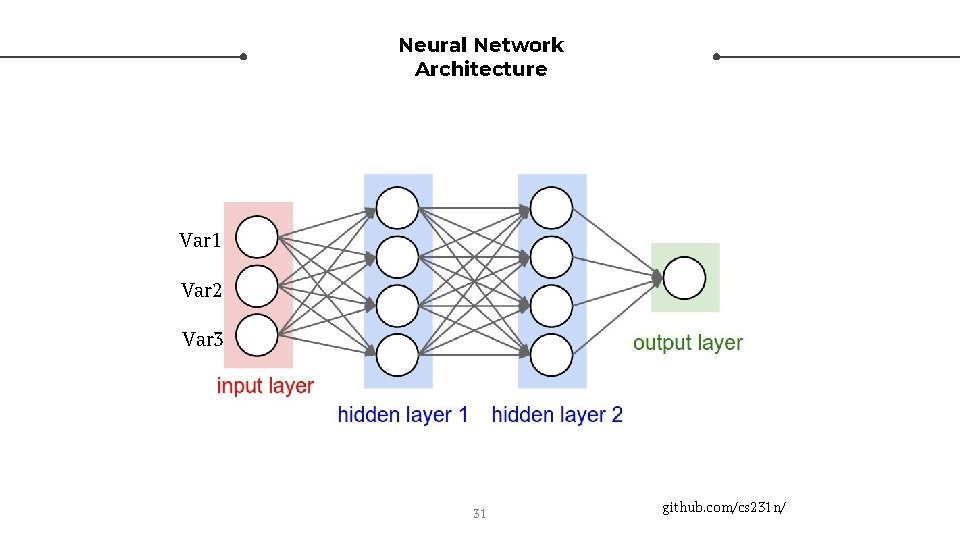


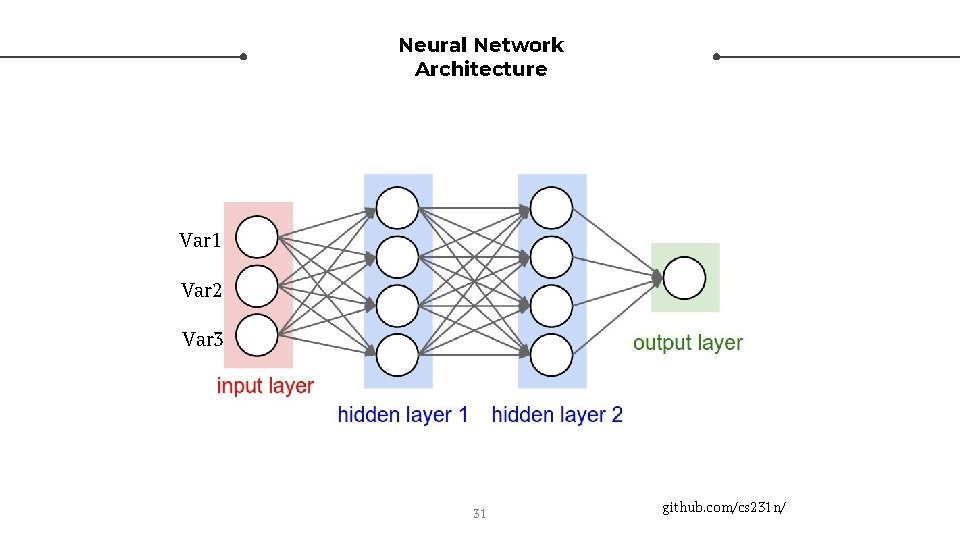


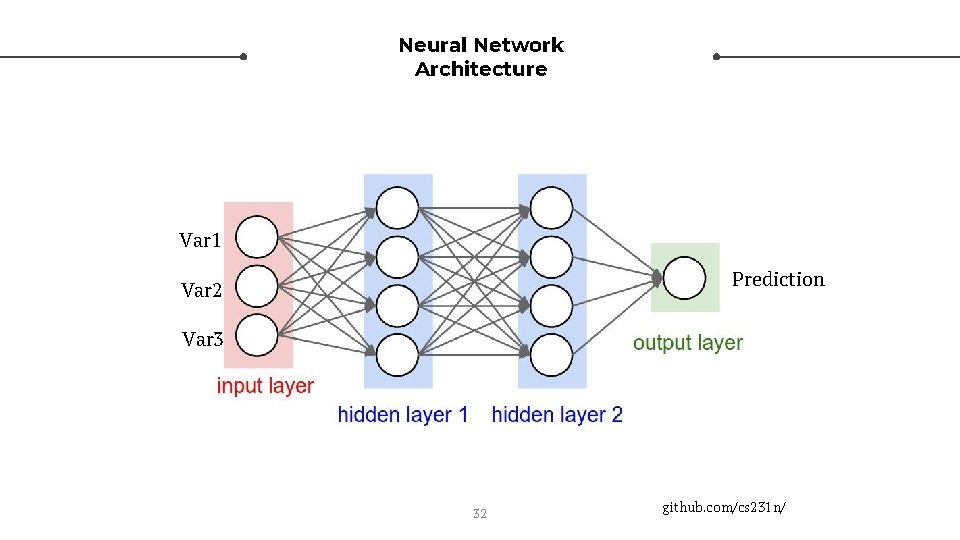

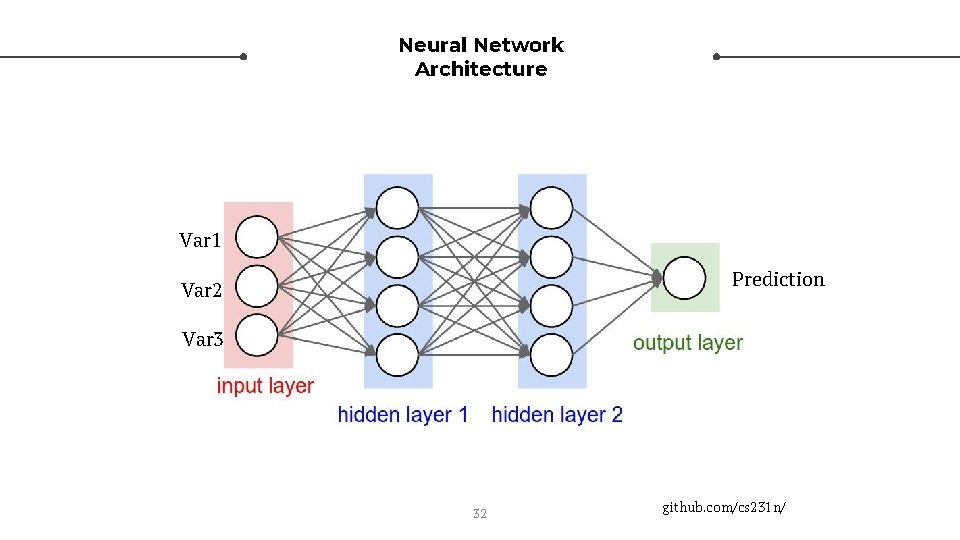

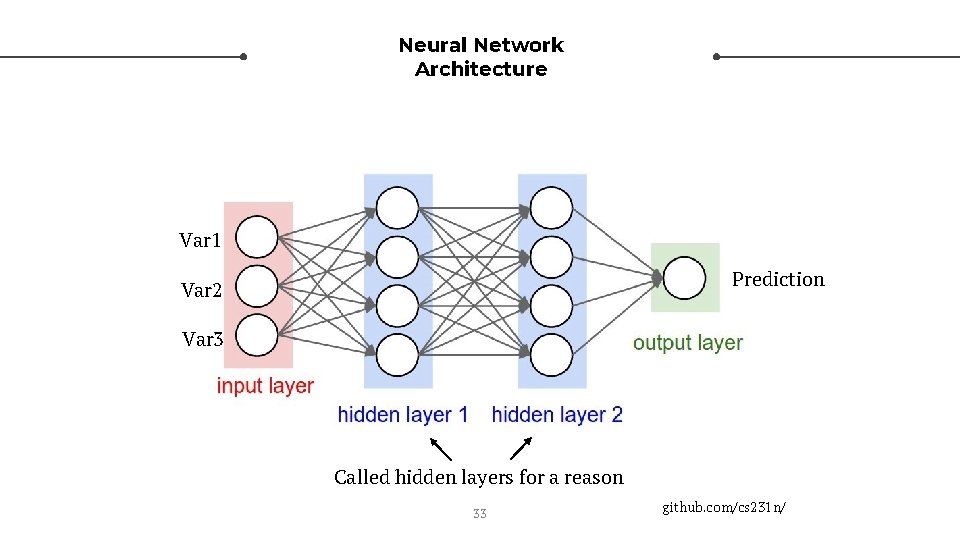

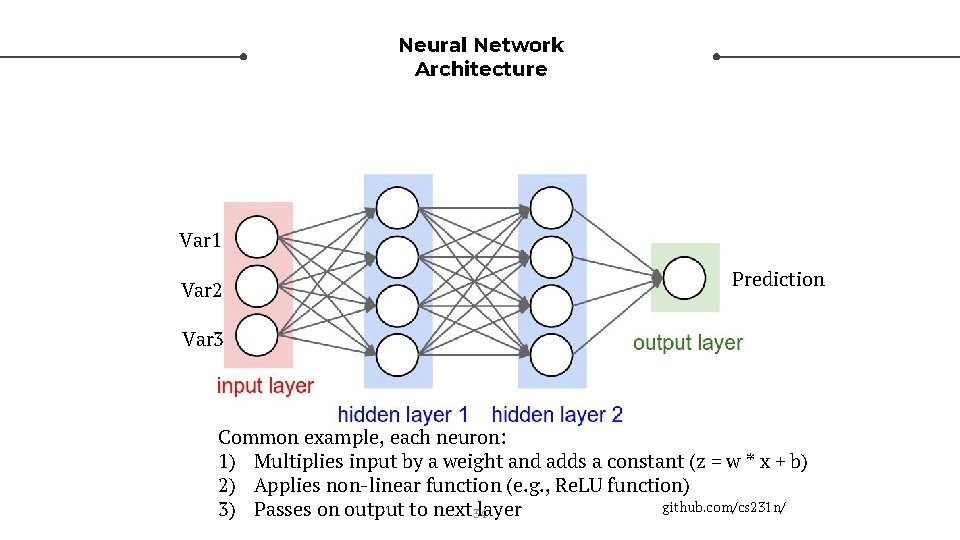


Neural Network Architecture
[Diagram: inputs Var 1, Var 2, Var 3 -> hidden layers -> Prediction]
○ They’re called “hidden” layers for a reason
Source: github.com/cs231n/

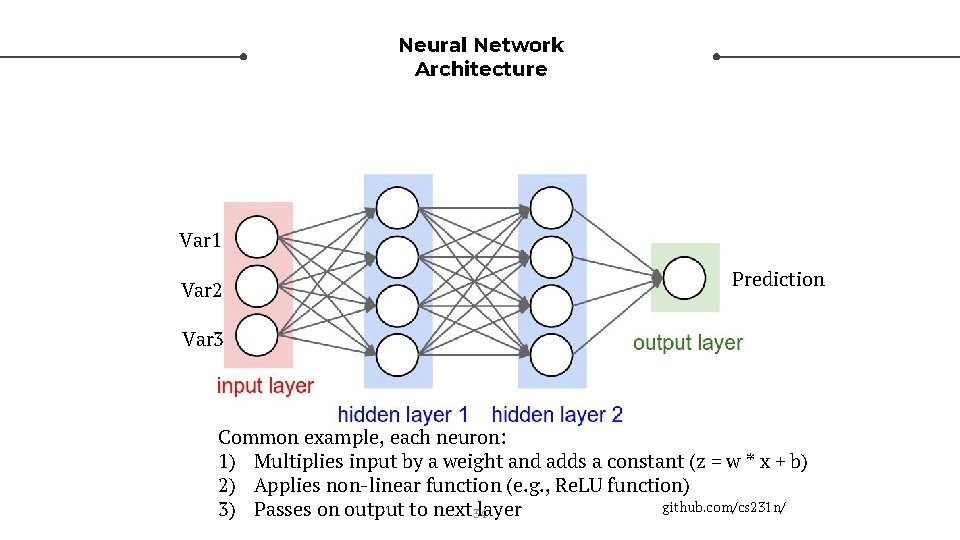

Neural Network Architecture
Common example, each neuron:
1) Multiplies each input by a weight, sums the results, and adds a constant (bias): z = w · x + b
2) Applies a non-linear function (e.g., the ReLU function)
3) Passes its output on to the next layer
Source: github.com/cs231n/
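The three steps can be sketched for a tiny network with three inputs (Var 1-3), two hidden ReLU neurons, and one output. All weights and biases are arbitrary assumed values; in a real network they are learned.

```python
# One forward pass through a tiny network:
# 3 inputs (Var 1-3) -> 2 hidden ReLU neurons -> 1 output (Prediction).
# Weights and biases are arbitrary made-up values; training would learn them.


def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def neuron(inputs, weights, bias, activation):
    # 1) Weighted sum of the inputs plus a constant: z = w . x + b
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # 2) Non-linear function
    return activation(z)


x = [1.0, 2.0, 3.0]  # Var 1, Var 2, Var 3
hidden = [
    neuron(x, [0.5, -0.2, 0.1], 0.0, relu),   # z is about 0.4, ReLU keeps it
    neuron(x, [-1.0, 0.3, -0.2], 0.1, relu),  # z is about -0.9, ReLU outputs 0
]
# 3) The hidden outputs are passed on to the next layer: here a single output
#    neuron with no non-linearity, as is common when predicting a number.
prediction = neuron(hidden, [2.0, 1.5], 0.05, identity)
print(hidden, prediction)
```

The second hidden neuron shows why the non-linearity matters. Its negative sum is cut to zero, so it contributes nothing to the prediction for this input, even though it might for others.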

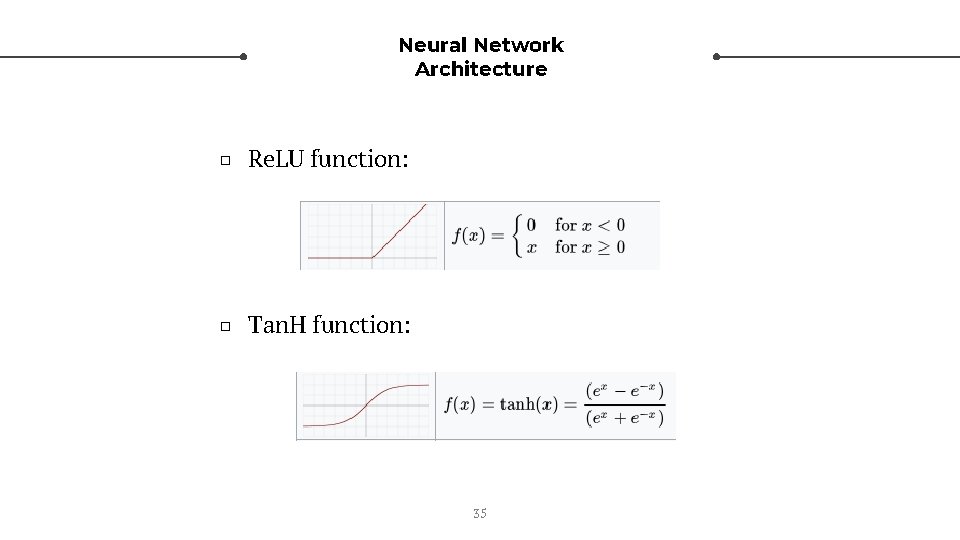

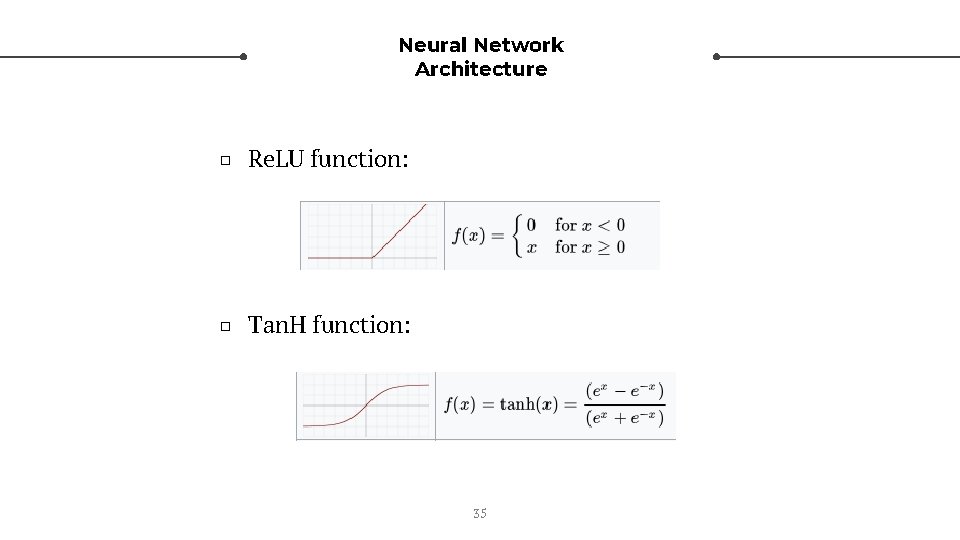

Neural Network Architecture
□ ReLU function:
□ TanH function:
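For reference, here are the standard definitions of the two activation functions named above. They are stated as general facts, not transcribed from the slide. ReLU zeroes out negative inputs and passes positive ones through unchanged. Tanh squashes any input into the interval (-1, 1).

```latex
\mathrm{ReLU}(z) = \max(0,\, z)
\qquad
\tanh(z) = \frac{e^{z} - e^{-z}}{e^{z} + e^{-z}}
```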

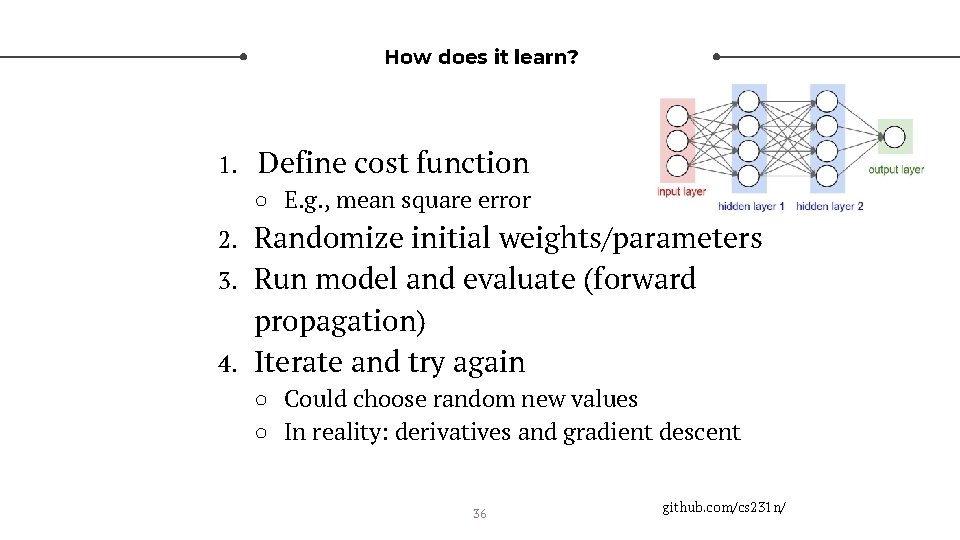

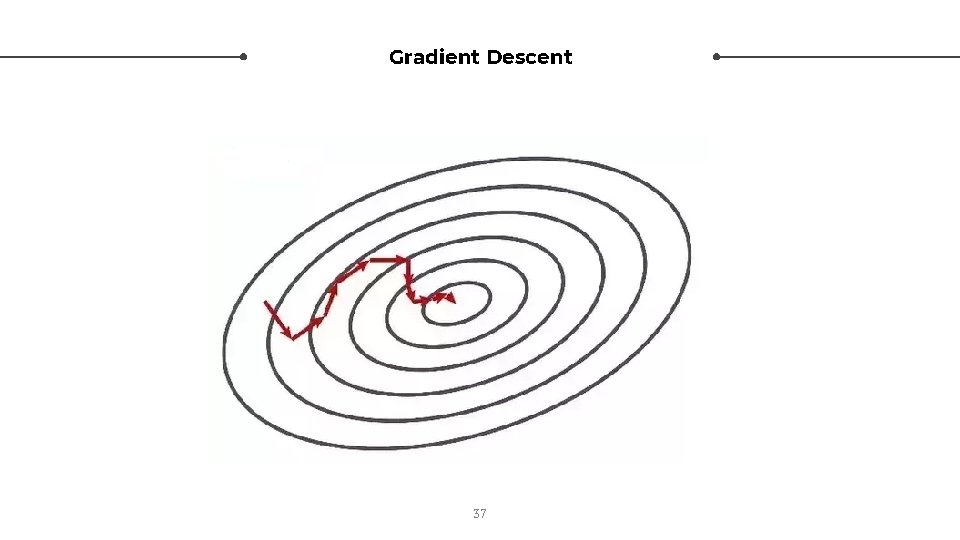

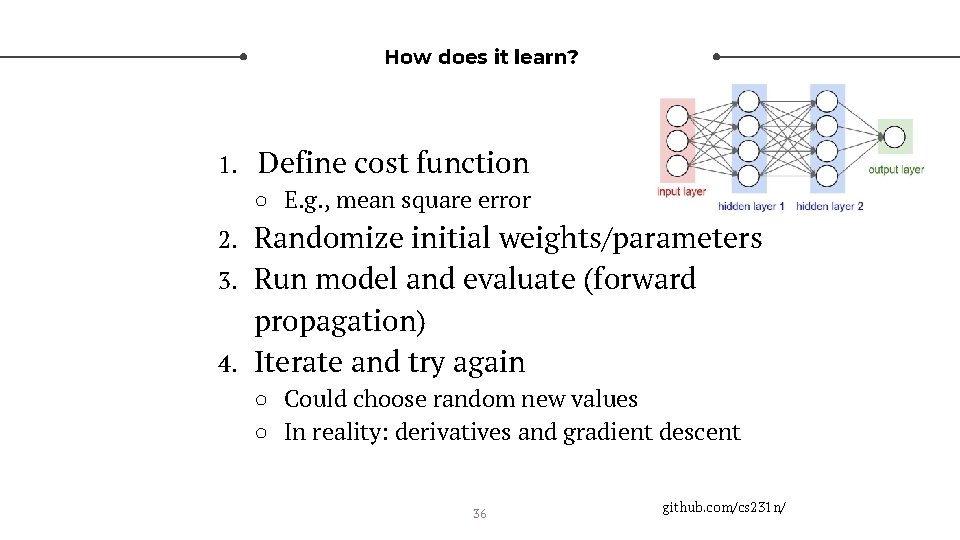

How does it learn?
1. Define a cost function
   ○ E.g., mean squared error
2. Randomize the initial weights/parameters
3. Run the model and evaluate it (forward propagation)
4. Iterate and try again
   ○ Could choose random new values
   ○ In reality: derivatives and gradient descent
Source: github.com/cs231n/
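Here is a hedged sketch of the four steps on the simplest possible model: one weight and one bias (prediction = w * x + b), trained with plain gradient descent on mean squared error. The data (generated from y = 2x + 1), the learning rate, and the iteration count are illustrative assumptions. Neural networks run the same loop over many more parameters, computing the derivatives by backpropagation.

```python
# The four steps on the simplest possible model: prediction = w * x + b.
# Assumed toy data generated from y = 2x + 1 (no noise).
import random

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
n = len(xs)


def mse(w, b):
    """Step 1: cost function (mean squared error)."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n


rng = random.Random(0)
w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # Step 2: random initial parameters
learning_rate = 0.05

for _ in range(2000):                          # Step 4: iterate
    # Step 3: forward propagation -> prediction errors
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    # Derivatives of the MSE with respect to w and b ...
    grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
    grad_b = 2 * sum(errors) / n
    # ... then a small step downhill (gradient descent)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))
```

The learning rate is a judgment call. If it is too large, the updates overshoot and diverge; if it is too small, training crawls.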

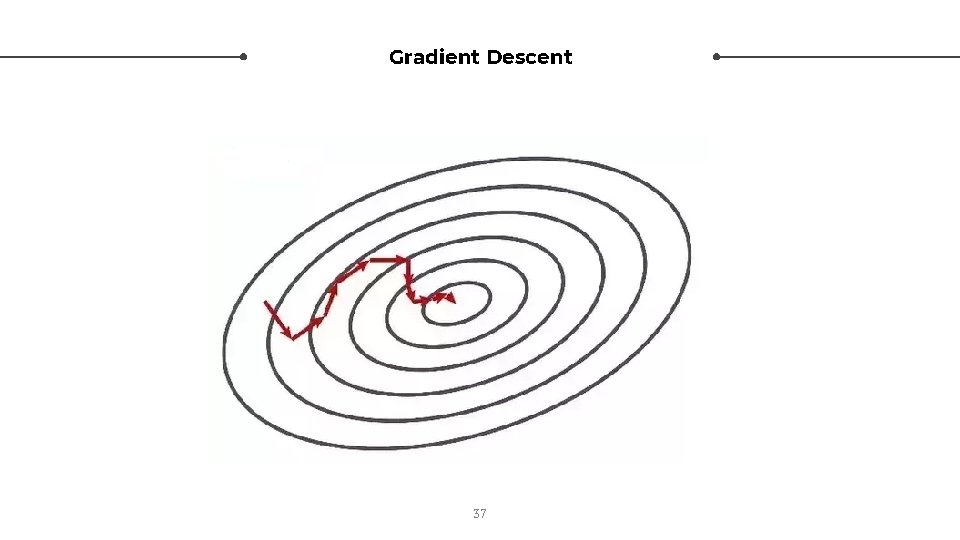

Gradient Descent
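In symbols, take a one-weight model ŷ = wx + b (a simplifying assumption), n training examples, and a learning rate η chosen by the analyst. Each gradient-descent step moves the parameters a small distance against the gradient of the mean-squared-error cost J:

```latex
\begin{aligned}
J(w, b) &= \frac{1}{n}\sum_{i=1}^{n} \left(w x_i + b - y_i\right)^2 \\
\frac{\partial J}{\partial w} &= \frac{2}{n}\sum_{i=1}^{n} \left(w x_i + b - y_i\right) x_i,
\qquad
\frac{\partial J}{\partial b} = \frac{2}{n}\sum_{i=1}^{n} \left(w x_i + b - y_i\right) \\
w &\leftarrow w - \eta \, \frac{\partial J}{\partial w},
\qquad
b \leftarrow b - \eta \, \frac{\partial J}{\partial b}
\end{aligned}
```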

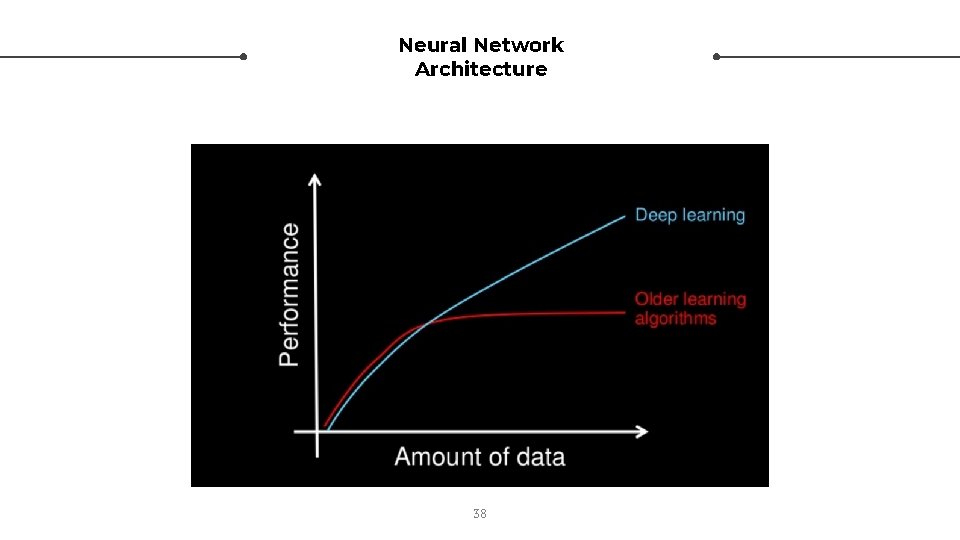

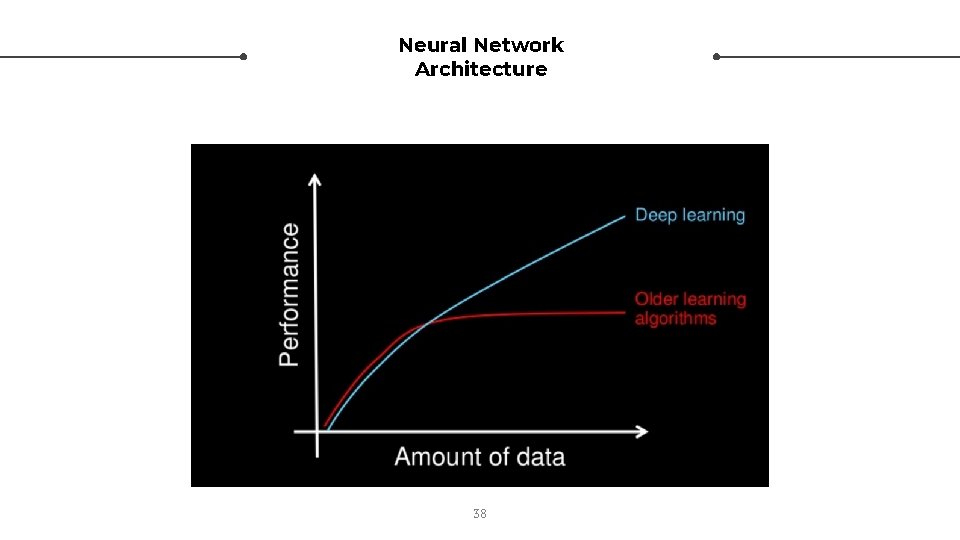

Neural Network Architecture

Neural Network Architecture
□ Many different kinds of hidden layers
  ○ Pooling layers (manage the shape/dimensions of the data)
  ○ Convolutional layers (used in computer vision, edge detection, etc.)
□ Number of nodes? Layers?
  ○ Mix and match
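Here is a rough sketch of what these two layer types compute. For brevity it uses a 1-D row of pixel intensities; image models use 2-D grids. The kernel [-1, 1] is a simple edge detector chosen for illustration; in a real convolutional network the kernel values are learned.

```python
# What a convolutional layer and a pooling layer compute, on a 1-D row of
# pixel intensities. The row and the [-1, 1] kernel are illustrative.


def convolve1d(signal, kernel):
    """Slide the kernel along the signal ("valid" positions only).

    As in most deep-learning libraries, this is technically cross-correlation
    (the kernel is not flipped)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def max_pool1d(values, size):
    """Keep the largest value in each non-overlapping window, shrinking the data."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]


row = [10, 10, 10, 200, 200, 200, 10, 10, 10]  # dark -> bright -> dark
edges = convolve1d(row, [-1, 1])               # large |value| where brightness jumps
pooled = max_pool1d(edges, 2)                  # half as many values

print(edges)
print(pooled)
```

Pooling halves the data here, and max-pooling keeps only the rising edge. A network would typically stack several kernels so that different ones can pick up different features.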

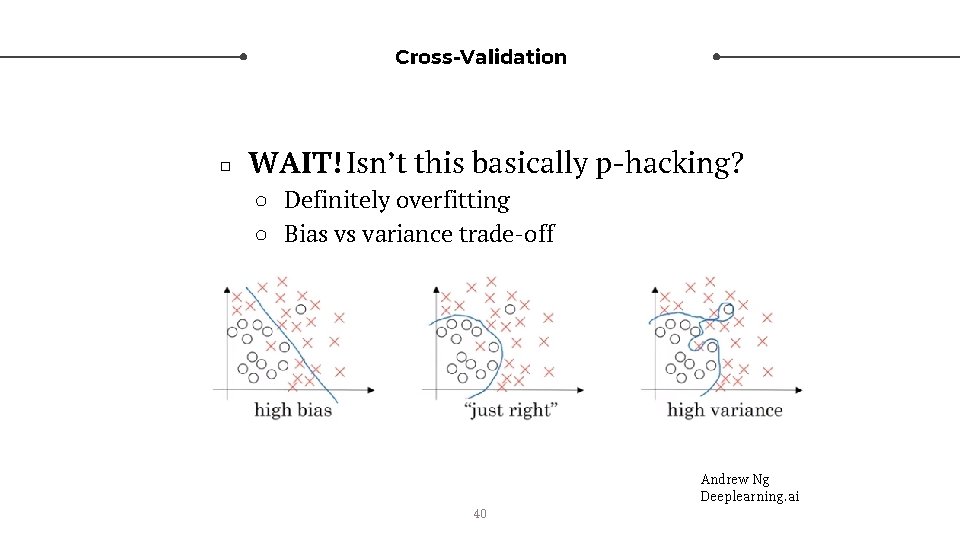

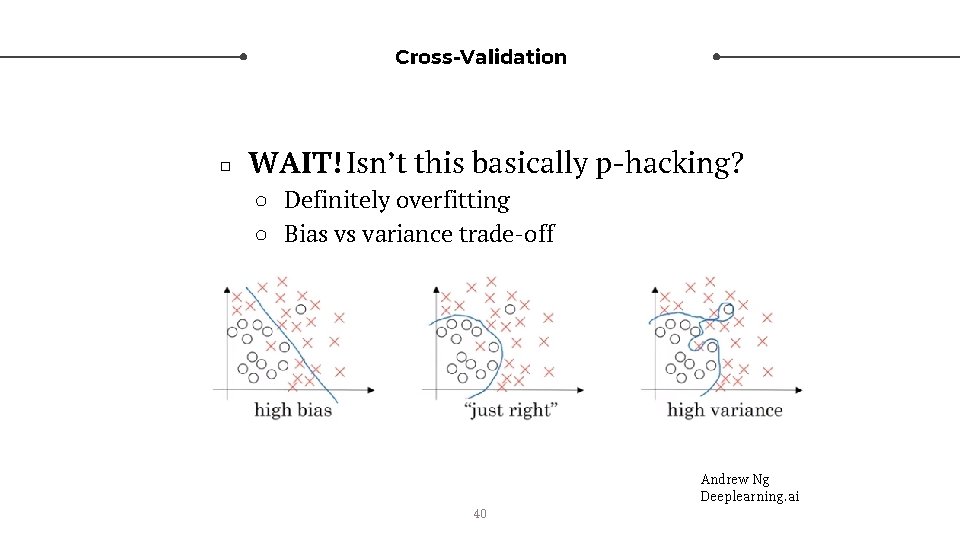

Cross-Validation
□ WAIT! Isn’t this basically p-hacking?
  ○ Definitely overfitting
  ○ Bias vs. variance trade-off
Source: Andrew Ng, deeplearning.ai

Cross-Validation
□ Solution: make predictions and test them
□ Immediately applicable to social psychology
  ○ Yarkoni and Westfall, 2017
    □ Our statistical models are really not intended for predicting future outcomes
  ○ Machine learning or not

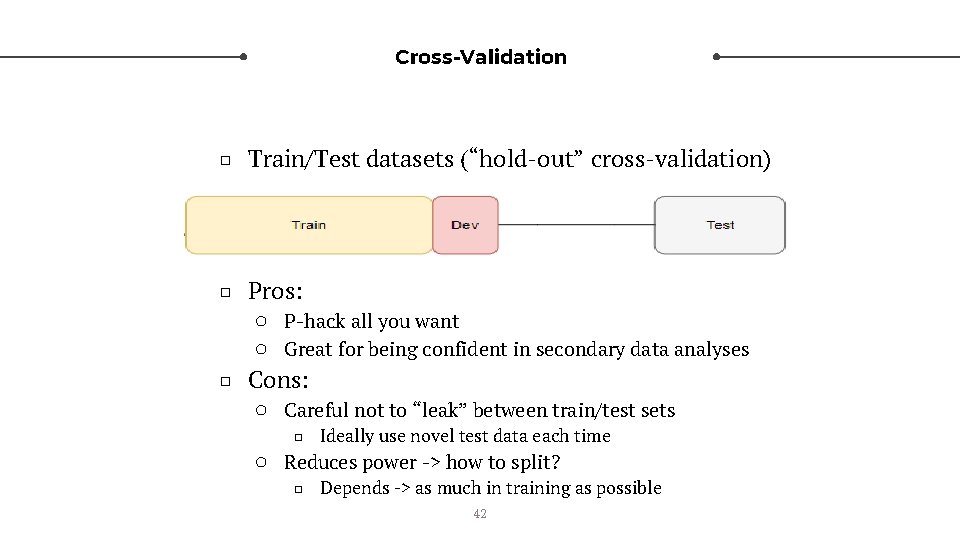

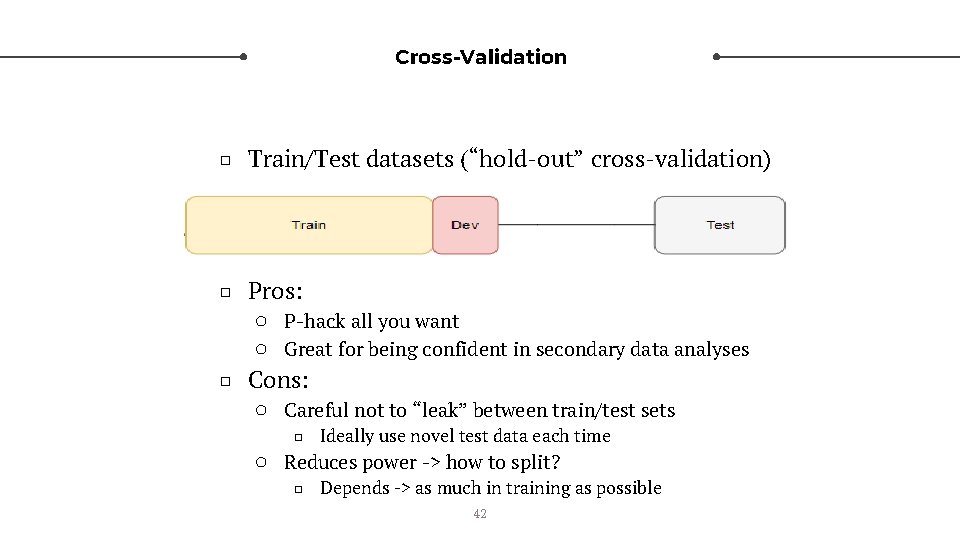

Cross-Validation
□ Train/test datasets (“hold-out” cross-validation)
□ Pros:
  ○ P-hack all you want (on the training set)
  ○ Great for being confident in secondary data analyses
□ Cons:
  ○ Careful not to “leak” information between train/test sets
    □ Ideally use novel test data each time
  ○ Reduces power -> how to split?
    □ Depends -> as much in training as possible
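Here is a sketch of hold-out cross-validation with a deliberately extreme setup, assumed for illustration. The outcome is random noise, and the “model” is a 1-nearest-neighbour rule that memorizes the training data. Training accuracy looks perfect while test accuracy stays near chance, which is exactly the gap a hold-out set exposes. The 70/30 split and the seed are arbitrary.

```python
# Hold-out cross-validation with a deliberately overfit "model".
# Assumptions for illustration: the outcome y is random noise unrelated to x,
# and the model memorizes the training data (1-nearest-neighbour).
import random

rng = random.Random(42)
data = [(rng.random(), rng.randint(0, 1)) for _ in range(200)]  # (x, y) pairs

# Split once, before any analysis: 70% train, 30% test.
rng.shuffle(data)
cut = len(data) * 7 // 10
train, test = data[:cut], data[cut:]


def predict_1nn(train_rows, x):
    """Return the label of the closest training point."""
    return min(train_rows, key=lambda row: abs(row[0] - x))[1]


def accuracy(train_rows, eval_rows):
    hits = sum(predict_1nn(train_rows, x) == y for x, y in eval_rows)
    return hits / len(eval_rows)


train_acc = accuracy(train, train)  # perfect: each point finds itself
test_acc = accuracy(train, test)    # near 0.5: nothing real was learned
print(train_acc, round(test_acc, 2))
```

Any exploration, tuning, or “p-hacking” should touch only `train`. The test rows are scored once at the end; reusing them to guide choices is the “leak” the slide warns about.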

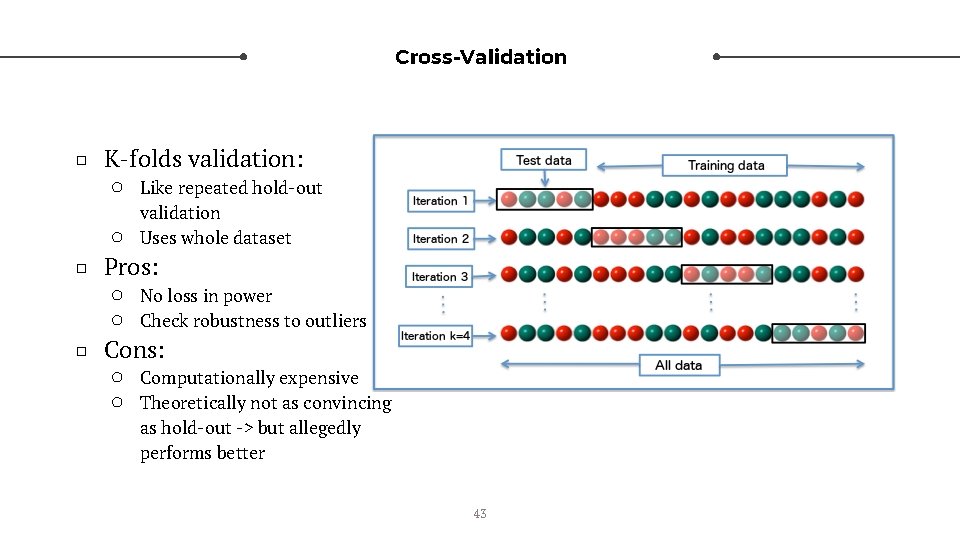

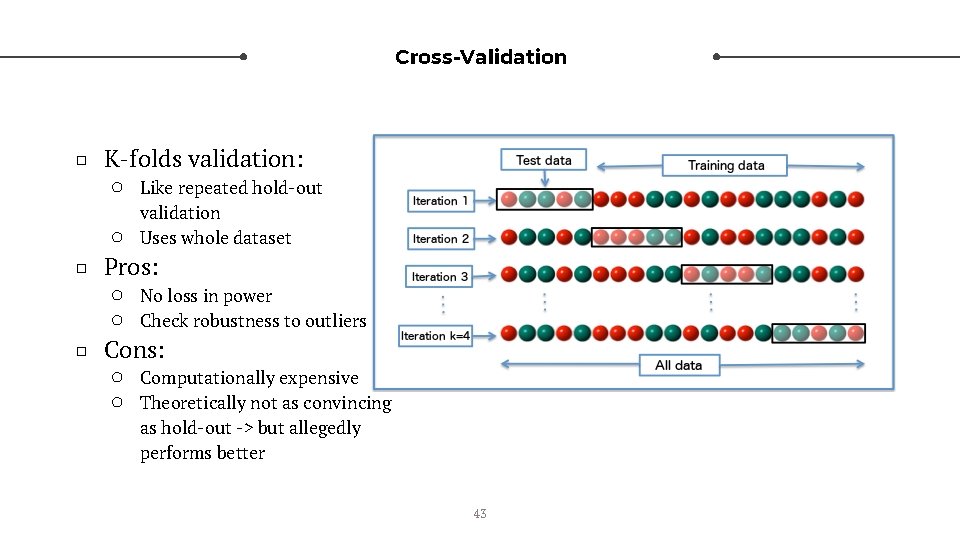

Cross-Validation
□ k-fold cross-validation:
  ○ Like repeated hold-out validation
  ○ Uses the whole dataset
□ Pros:
  ○ No loss in power
  ○ Check robustness to outliers
□ Cons:
  ○ Computationally expensive
  ○ Theoretically not as convincing as hold-out -> but allegedly performs better
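Here is a minimal k-fold sketch, with k = 5 as an arbitrary choice. The `fit_mean` and `mse_score` functions are hypothetical placeholders: the “model” just predicts the training mean and is scored by mean squared error on the held-out fold. Any fit/score pair could be passed in instead.

```python
# k-fold cross-validation: each of k folds serves once as the test set while
# the remaining k-1 folds are used for training, so every observation is
# tested exactly once.
import random
from statistics import mean


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and deal them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validate(xs, ys, fit, score, k=5):
    scores = []
    for test_idx in k_fold_indices(len(xs), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(xs)) if i not in held_out]
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in test_idx], [ys[i] for i in test_idx]))
    return scores


# Hypothetical placeholder model: predict the training mean of y,
# scored by mean squared error on the held-out fold.
def fit_mean(xs, ys):
    return mean(ys)


def mse_score(model, xs, ys):
    return mean((y - model) ** 2 for y in ys)


xs = list(range(20))
ys = [2 * x + 1 for x in xs]
scores = cross_validate(xs, ys, fit_mean, mse_score, k=5)
print([round(s, 1) for s in scores], round(mean(scores), 1))
```

Looking at the spread of the per-fold scores, not just their average, is one way to check how much a result depends on which observations happened to be held out. It also shows the cost side: the model is fit k times.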

Deep Learning/Neural Nets
○ Extremely good at prediction
  □ Complex patterns in data
  □ Unstructured or structured data
○ NOT very good at explanation
  □ Prediction or explanation?
  □ Use NNs to see if something is there, other approaches to confirm the mechanism
  □ Tools may be coming

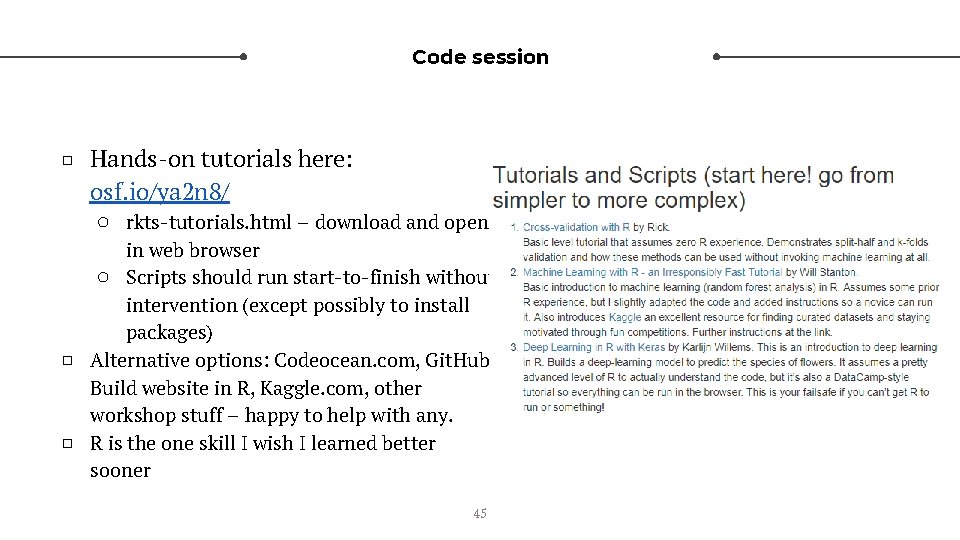

Code session
□ Hands-on tutorials here: osf.io/ya2n8/
  ○ rkts-tutorials.html: download and open in a web browser
  ○ Scripts should run start-to-finish without intervention (except possibly to install packages)
□ Alternative options: Codeocean.com, GitHub, building a website in R, Kaggle.com, other workshop material. Happy to help with any.
□ R is the one skill I wish I had learned better, sooner