ZygOS: Achieving Low Tail Latency for Microsecond-scale Networked Tasks

- Slides: 36

ZygOS: Achieving Low Tail Latency for Microsecond-scale Networked Tasks

George Prekas, Marios Kogias, Edouard Bugnion

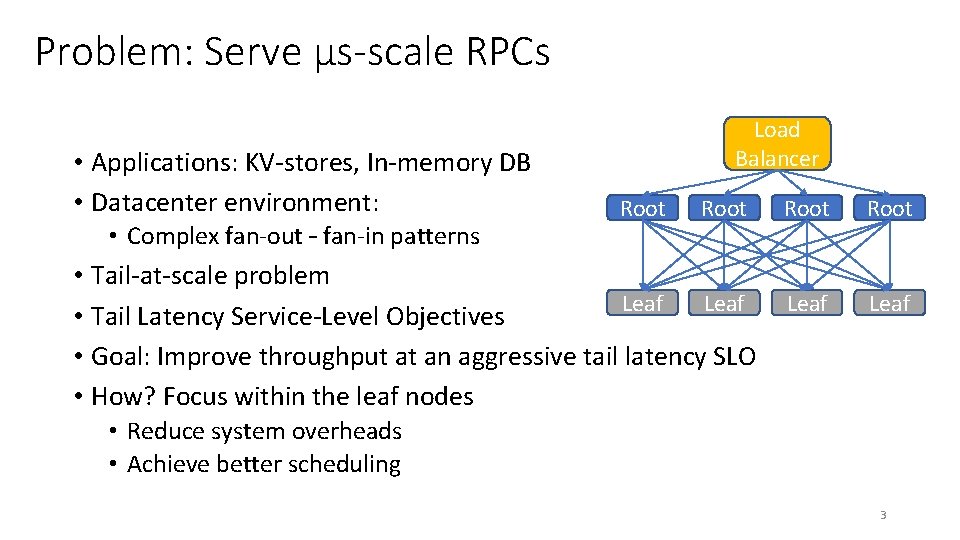

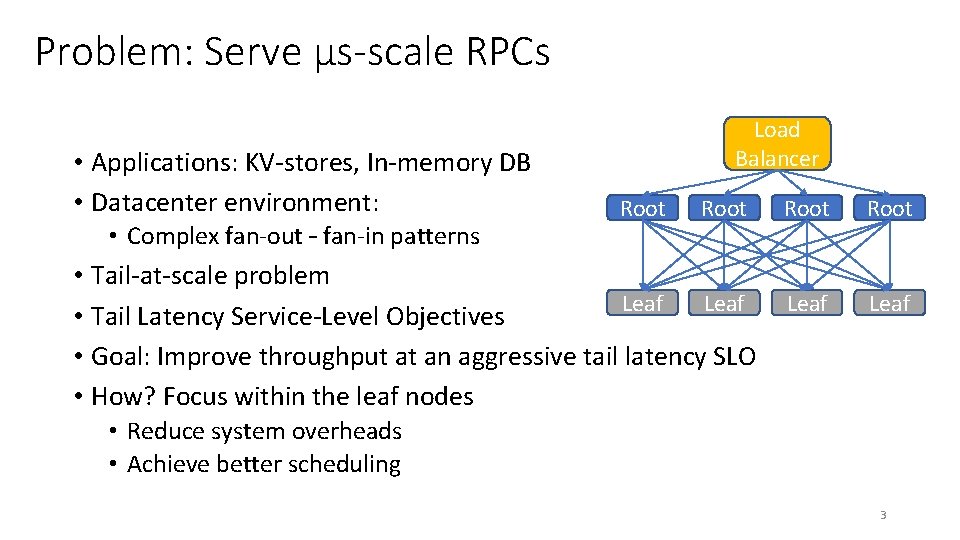

Problem: Serve μs-scale RPCs

- Applications: KV-stores, in-memory DB
- Datacenter environment:
  - Complex fan-out – fan-in patterns
  - Tail-at-scale problem
  - Tail-latency Service-Level Objectives
- Goal: Improve throughput at an aggressive tail-latency SLO
- How? Focus within the leaf nodes
  - Reduce system overheads
  - Achieve better scheduling

Diagram labels: Load Balancer, Root, Leaf

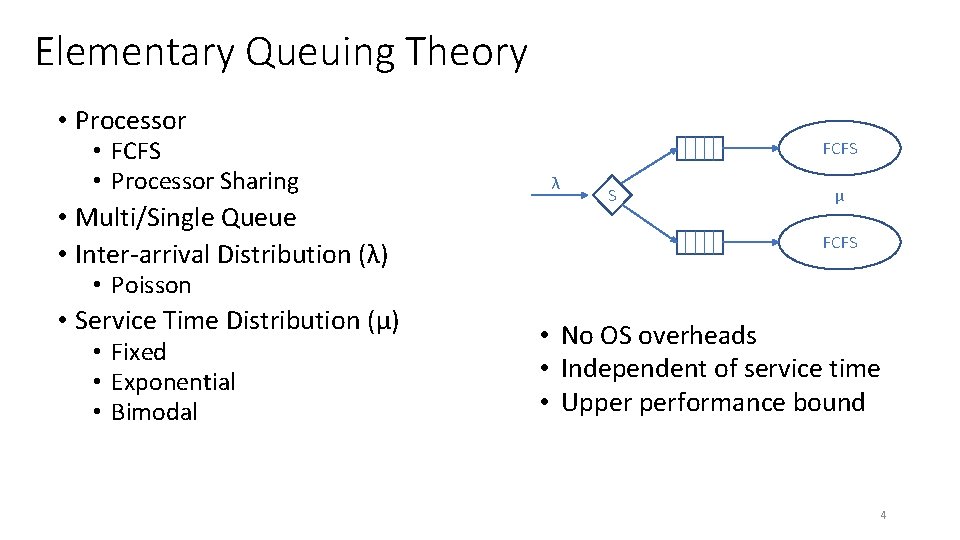

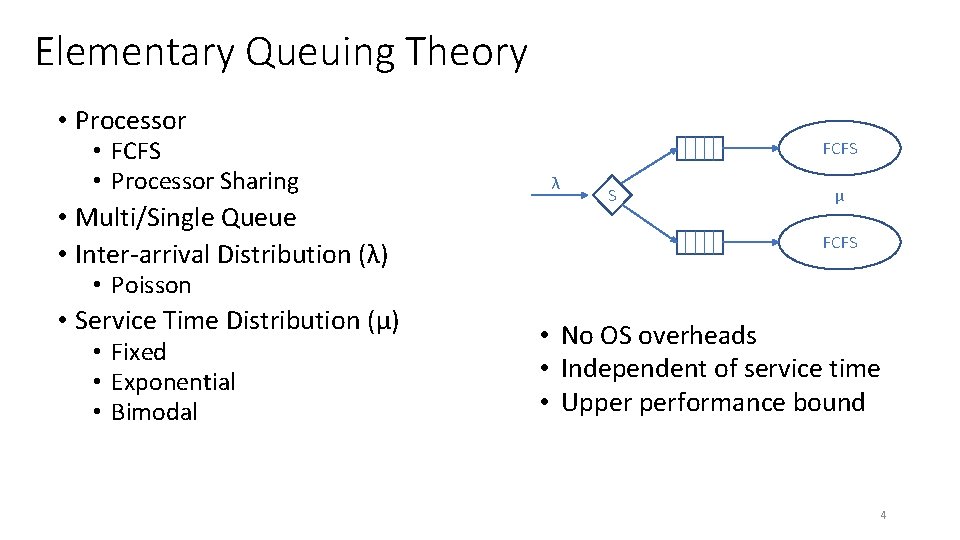

Elementary Queuing Theory

- Processor
  - FCFS
  - Processor Sharing
- Multi/Single Queue
- Inter-arrival Distribution (λ)
  - Poisson
- Service Time Distribution (μ)
  - Fixed
  - Exponential
  - Bimodal
- No OS overheads
- Independent of service time
- Upper performance bound

Diagram labels: λ (arrivals), FCFS queue, S (server), μ (service)
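The queuing models on this slide can be explored with a few lines of simulation. The following is a minimal sketch, not the authors' simulator: it assumes Poisson arrivals, exponential service times, FCFS servers, and random assignment of requests to queues in the multi-queue case. The function name `simulate_p99` and all of its parameters are hypothetical.

```python
import heapq
import random


def simulate_p99(num_servers, load, single_queue,
                 num_requests=20000, mean_service=1.0, seed=1):
    """Return the 99th percentile latency of an idealized FCFS system.

    No OS overheads are modeled, so the result is an upper bound on
    what a real system could achieve (assumption of this sketch).
    """
    rng = random.Random(seed)
    # Aggregate arrival rate so that each server sees the requested load.
    arrival_rate = load * num_servers / mean_service
    free_at = [0.0] * num_servers  # time at which each server becomes free
    now = 0.0
    latencies = []
    for _ in range(num_requests):
        now += rng.expovariate(arrival_rate)           # Poisson arrivals
        service = rng.expovariate(1.0 / mean_service)  # exponential service
        if single_queue:
            # One shared queue: the next request runs on whichever
            # server frees up first (free_at is used as a min-heap).
            earliest = heapq.heappop(free_at)
            finish = max(now, earliest) + service
            heapq.heappush(free_at, finish)
        else:
            # One queue per server: the request is bound to a randomly
            # chosen server and waits behind that server's backlog.
            core = rng.randrange(num_servers)
            finish = max(now, free_at[core]) + service
            free_at[core] = finish
        latencies.append(finish - now)
    latencies.sort()
    return latencies[int(0.99 * len(latencies))]


p99_single = simulate_p99(16, 0.7, single_queue=True)
p99_multi = simulate_p99(16, 0.7, single_queue=False)
print(f"single queue p99: {p99_single:.2f}, multi queue p99: {p99_multi:.2f}")
```

At the same load the single-queue configuration should report a noticeably lower 99th percentile, because a request never waits behind a busy server while another server sits idle.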

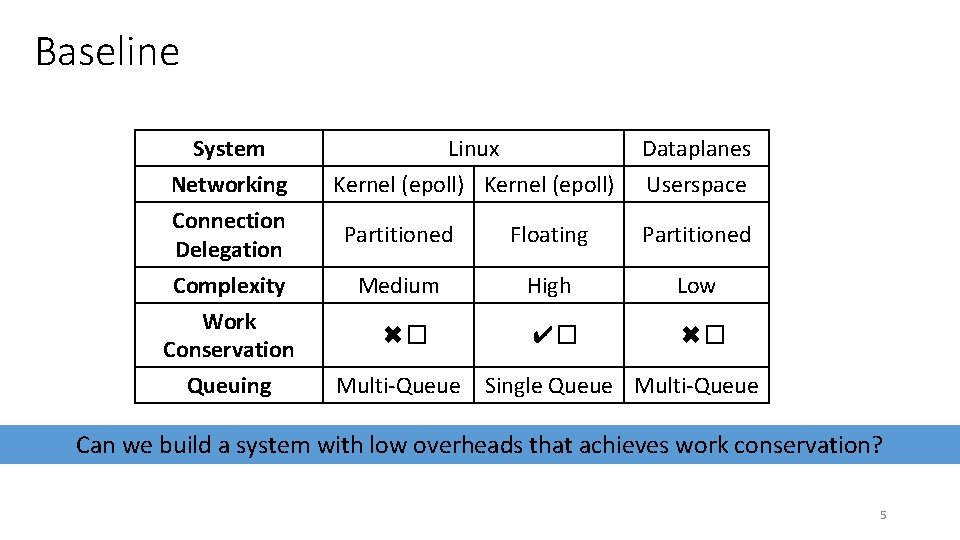

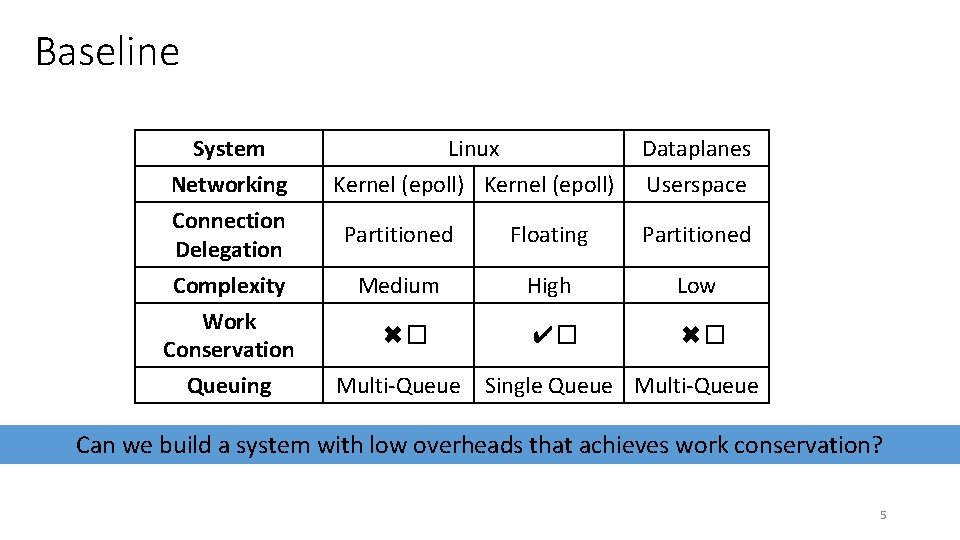

Baseline

| System | Linux | Linux | Dataplanes |
| --- | --- | --- | --- |
| Networking | Kernel (epoll) | Kernel (epoll) | Userspace |
| Connection Delegation | Partitioned | Floating | Partitioned |
| Complexity | Medium | High | Low |
| Work Conservation | ✖ | ✔ | ✖ |
| Queuing | Multi-Queue | Single Queue | Multi-Queue |

Can we build a system with low overheads that achieves work conservation?

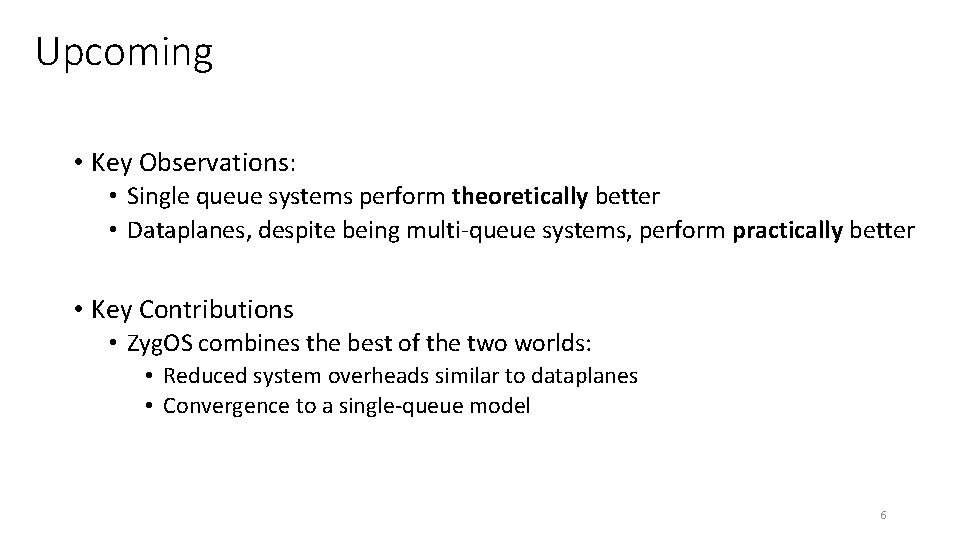

Upcoming

- Key Observations:
  - Single-queue systems perform theoretically better
  - Dataplanes, despite being multi-queue systems, perform practically better
- Key Contributions:
  - ZygOS combines the best of the two worlds:
    - Reduced system overheads similar to dataplanes
    - Convergence to a single-queue model

Analysis

- Metric to optimize: Load @ Tail-Latency SLO
- Run timescale-independent simulations
- Run synthetic benchmarks on a real system
- Questions:
  - Which model achieves better throughput?
  - Which system converges to its model at low service times?
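The metric "Load @ Tail-Latency SLO" can be illustrated with a small sweep: raise the load until the 99th percentile latency exceeds the SLO, and report the last load that still met it. The sketch below is an illustration only. It uses the closed-form M/M/1 result (a single FCFS queue with Poisson arrivals and exponential service, where the sojourn time is exponential with rate μ(1 − ρ)) as a stand-in latency model, and the SLO of 10 × the average service time that the latency plots in this deck use. The names `mm1_p99` and `max_load_at_slo` are hypothetical.

```python
import math


def mm1_p99(load, mean_service=1.0):
    """99th percentile sojourn time of an M/M/1 FCFS queue.

    The sojourn time is exponentially distributed with rate
    (1 - load) / mean_service, so p99 = ln(100) * mean_service / (1 - load).
    """
    return math.log(100) * mean_service / (1.0 - load)


def max_load_at_slo(p99_fn, slo, steps=100):
    """Return the highest swept load whose p99 latency meets the SLO."""
    best = 0.0
    for i in range(1, steps):
        load = i / steps
        if p99_fn(load) <= slo:
            best = load
    return best


mean_service = 1.0
slo = 10 * mean_service  # SLO: 10 x AVG[service_time]
print(max_load_at_slo(mm1_p99, slo))
```

Any latency model or measured latency-versus-load curve can be passed as `p99_fn`; the M/M/1 formula is used here only because it needs no simulation.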

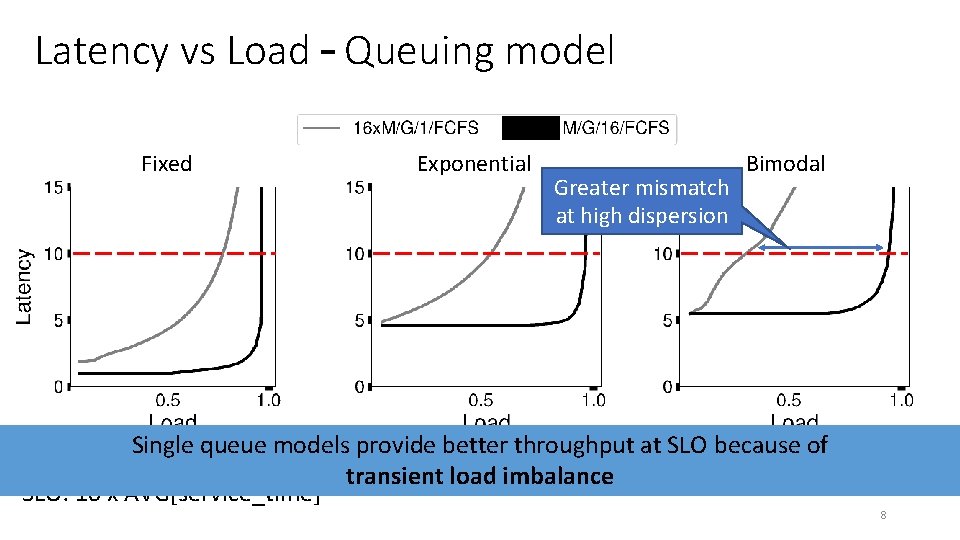

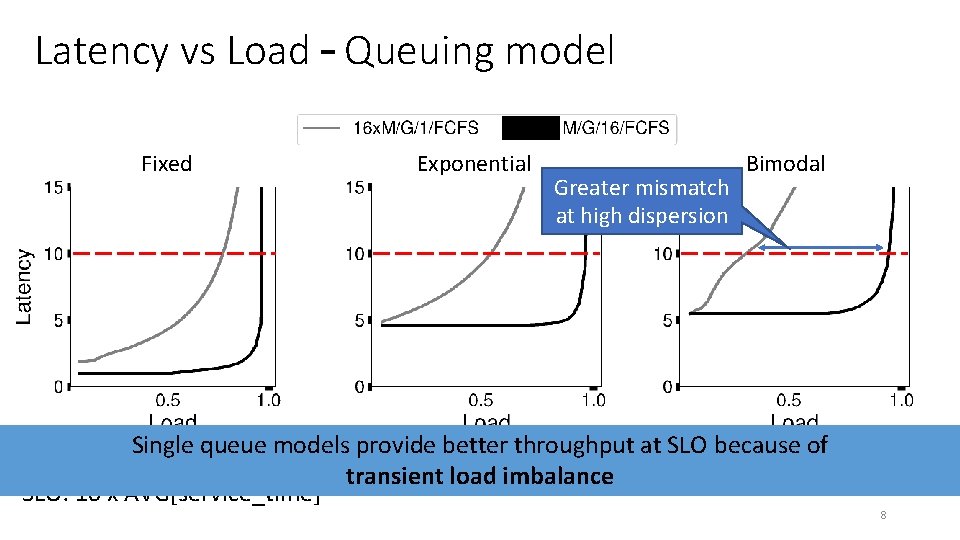

Latency vs Load – Queuing model

Figure: 99th percentile latency vs. load for three service time distributions (panels: Fixed, Exponential, Bimodal). SLO: 10 × AVG[service_time].

- Greater mismatch at high dispersion
- Single-queue models provide better throughput at SLO because of transient load imbalance

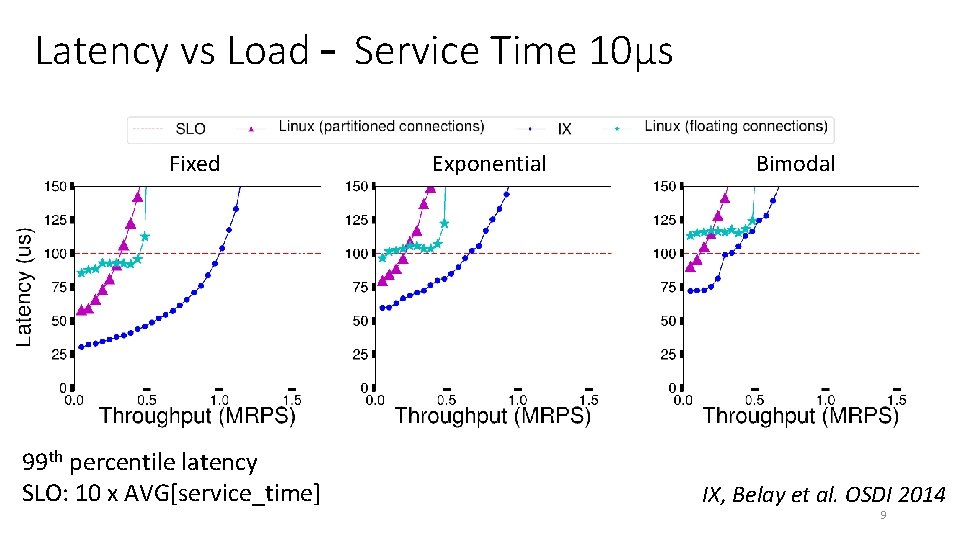

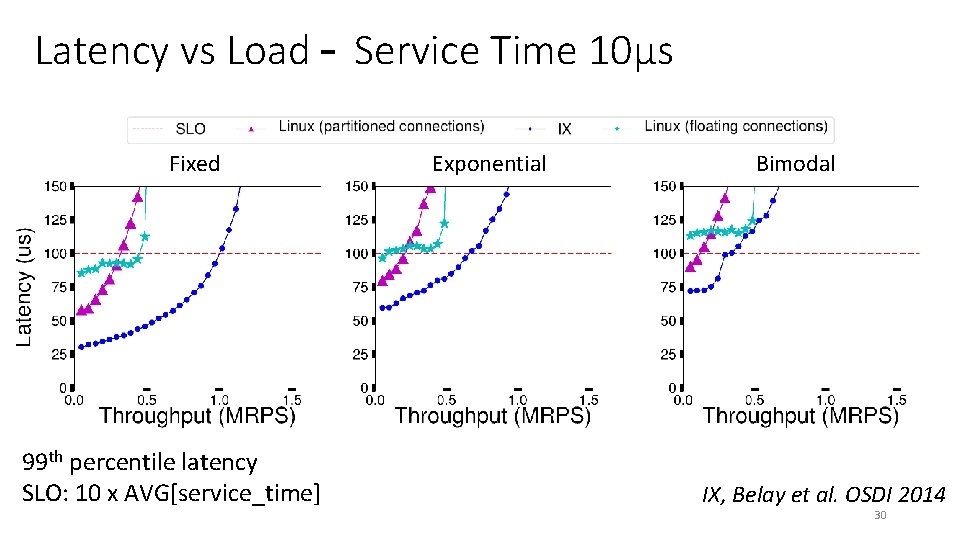

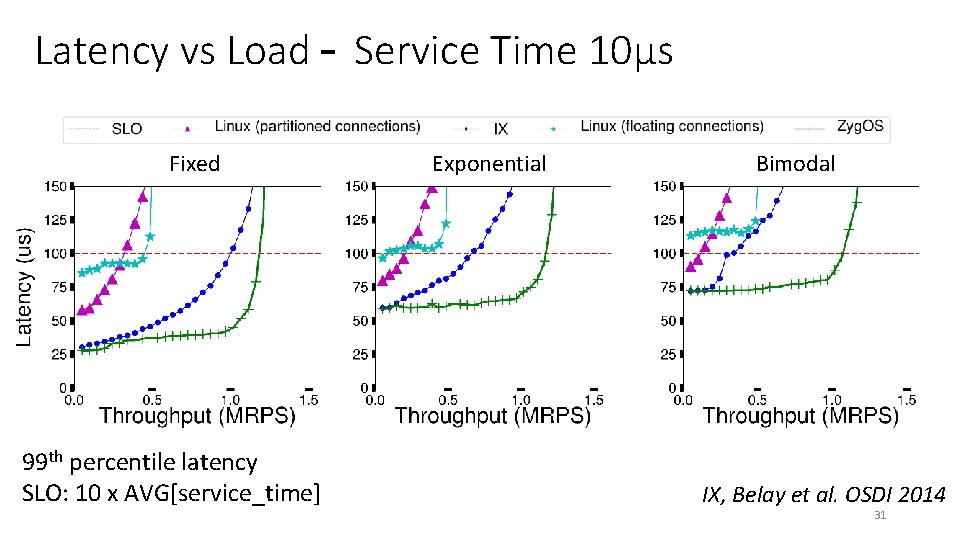

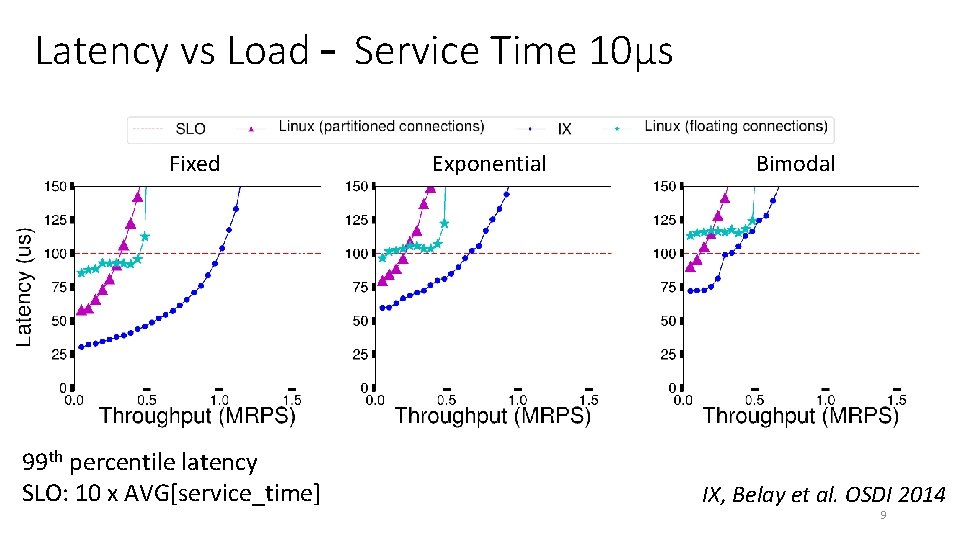

Latency vs Load – Service Time 10μs

Figure: 99th percentile latency vs. load (panels: Fixed, Exponential, Bimodal). SLO: 10 × AVG[service_time].

Reference: IX, Belay et al., OSDI 2014

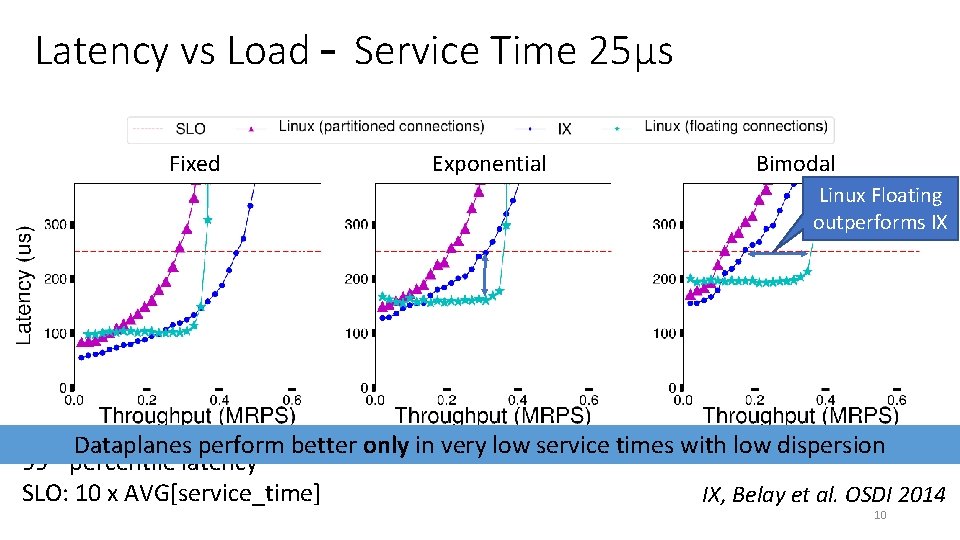

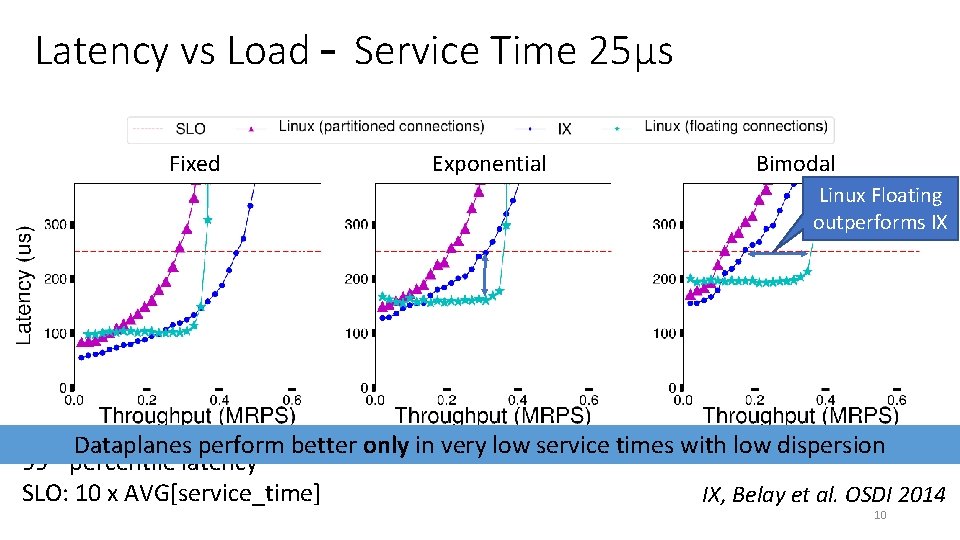

Latency vs Load – Service Time 25μs

Figure: 99th percentile latency vs. load (panels: Fixed, Exponential, Bimodal). SLO: 10 × AVG[service_time].

- Linux Floating outperforms IX
- Dataplanes perform better only in very low service times with low dispersion

Reference: IX, Belay et al., OSDI 2014

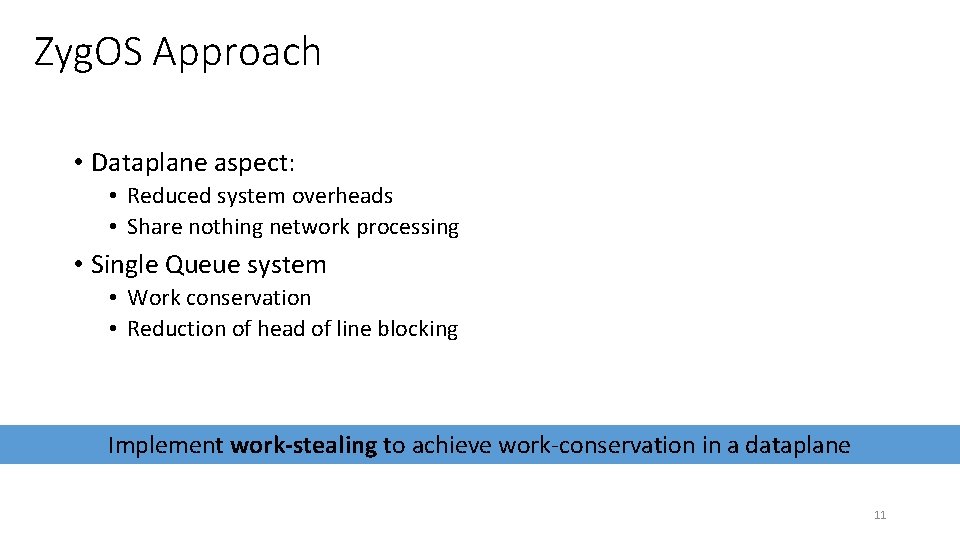

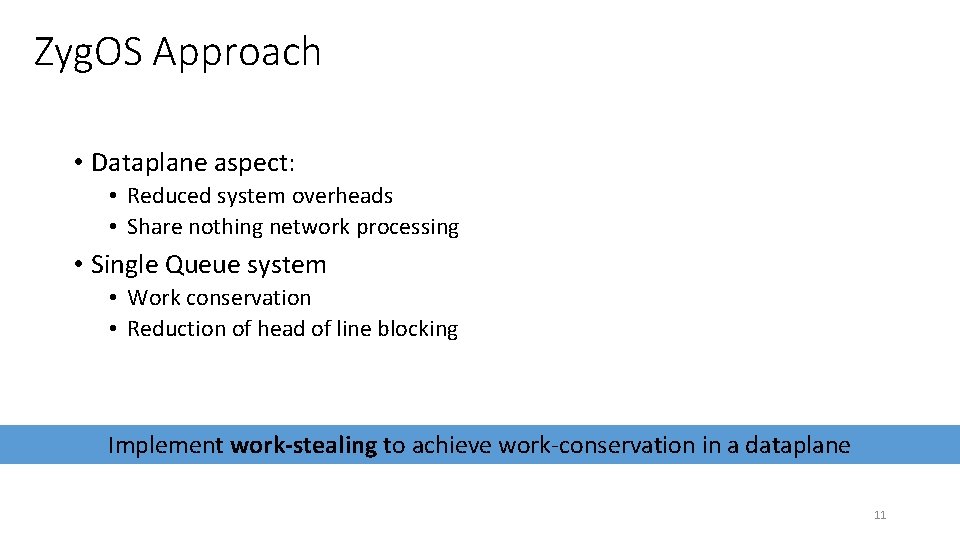

ZygOS Approach

- Dataplane aspect:
  - Reduced system overheads
  - Share-nothing network processing
- Single-queue system:
  - Work conservation
  - Reduction of head-of-line blocking

Implement work-stealing to achieve work conservation in a dataplane.

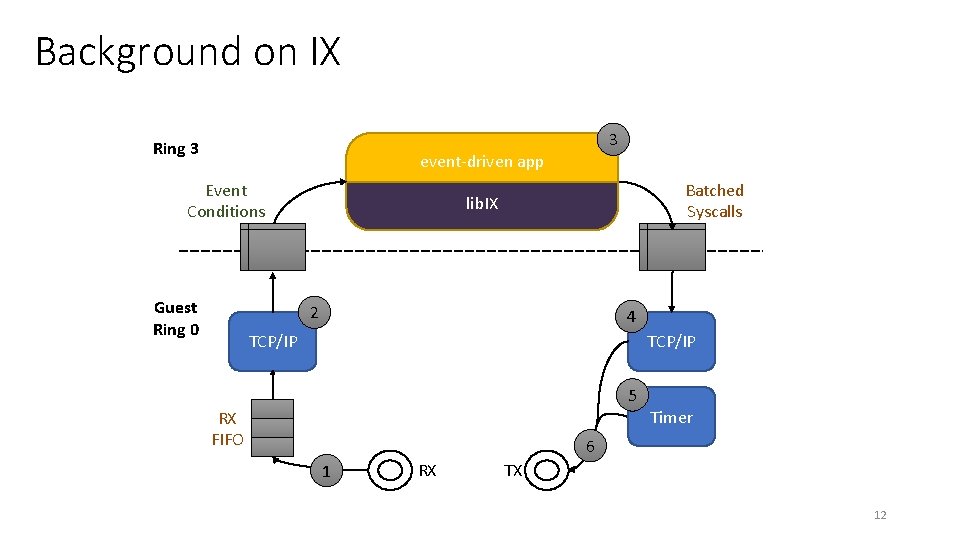

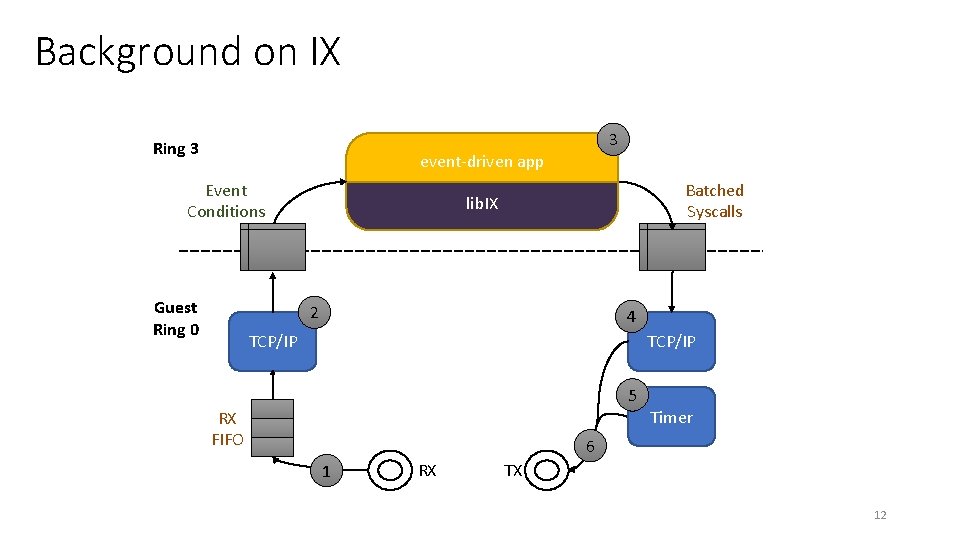

Background on IX

Diagram labels: Ring 3, event-driven app, libIX, Event Conditions, Batched Syscalls, Guest Ring 0, TCP/IP, RX FIFO, Timer, RX, TX; processing steps numbered 1 to 6.

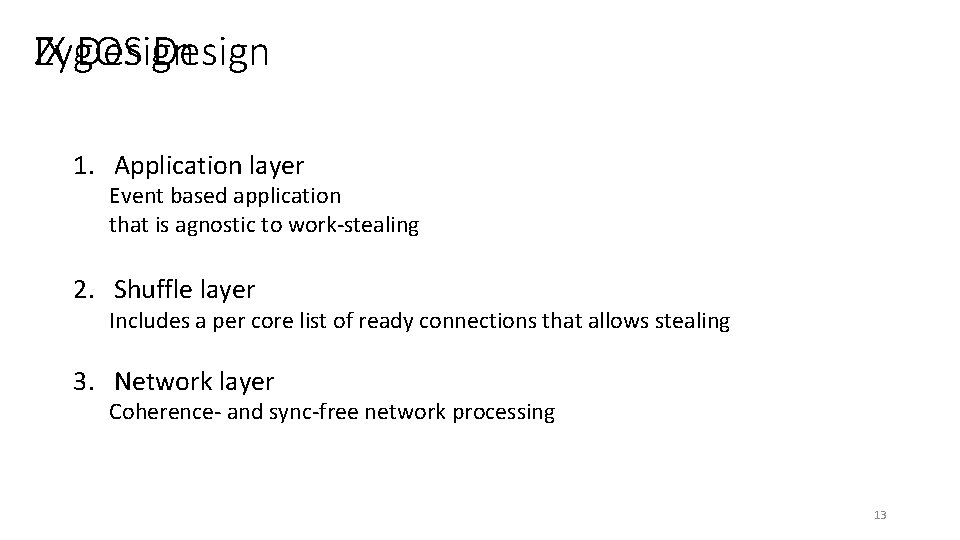

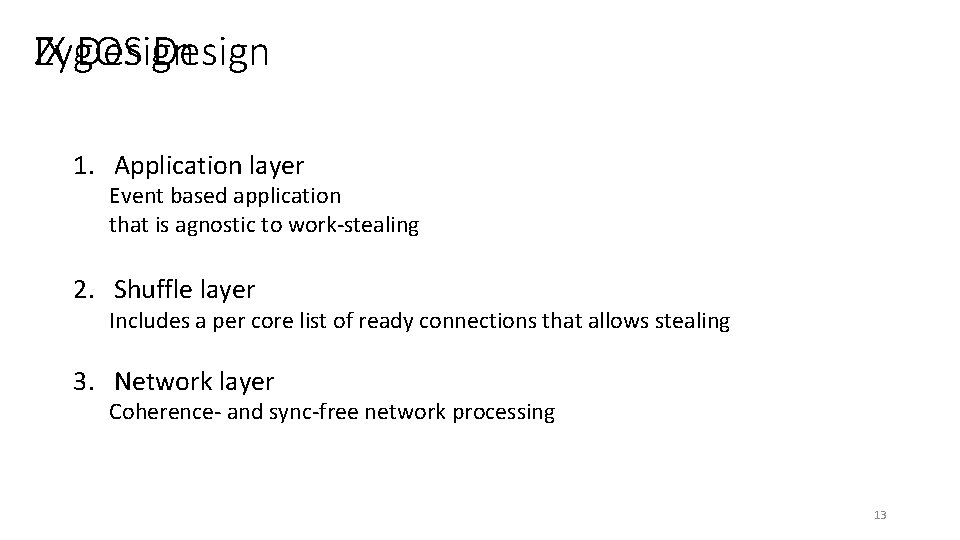

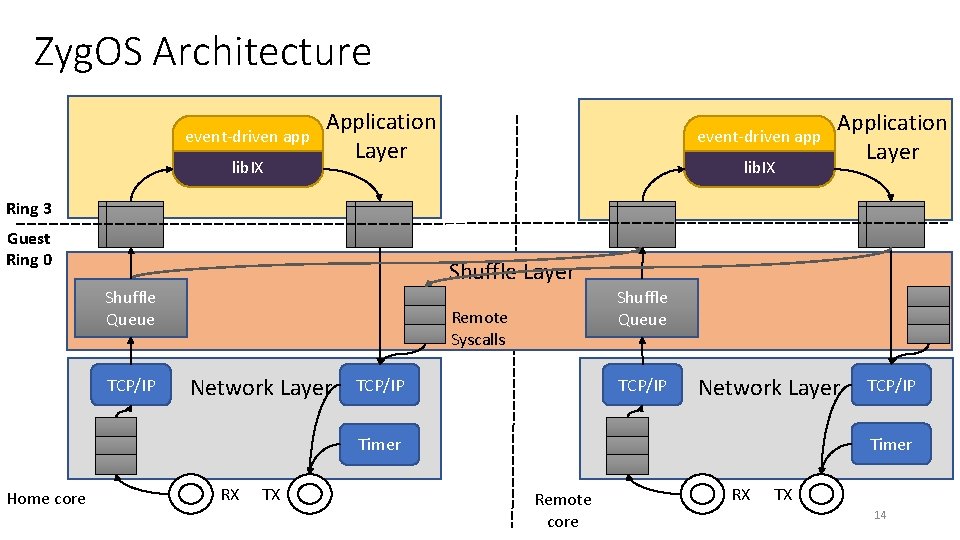

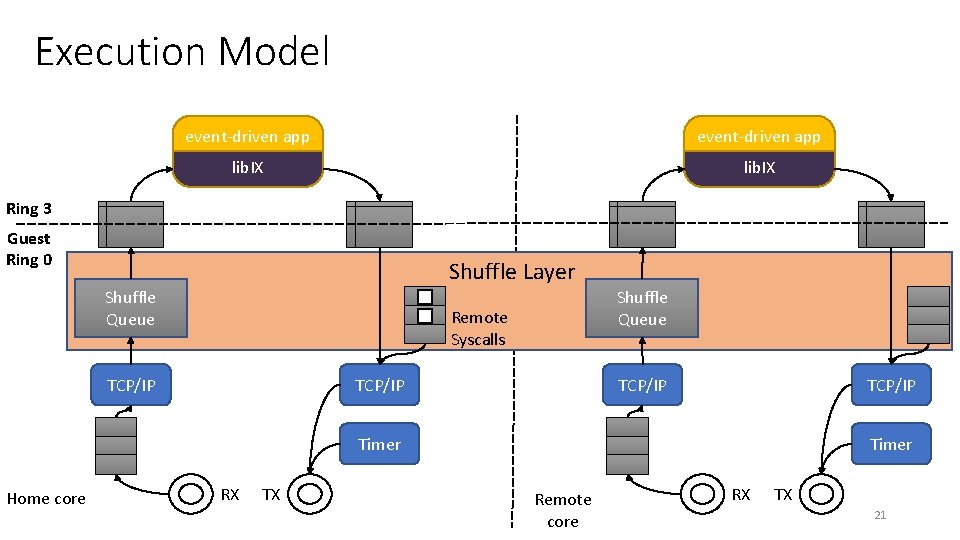

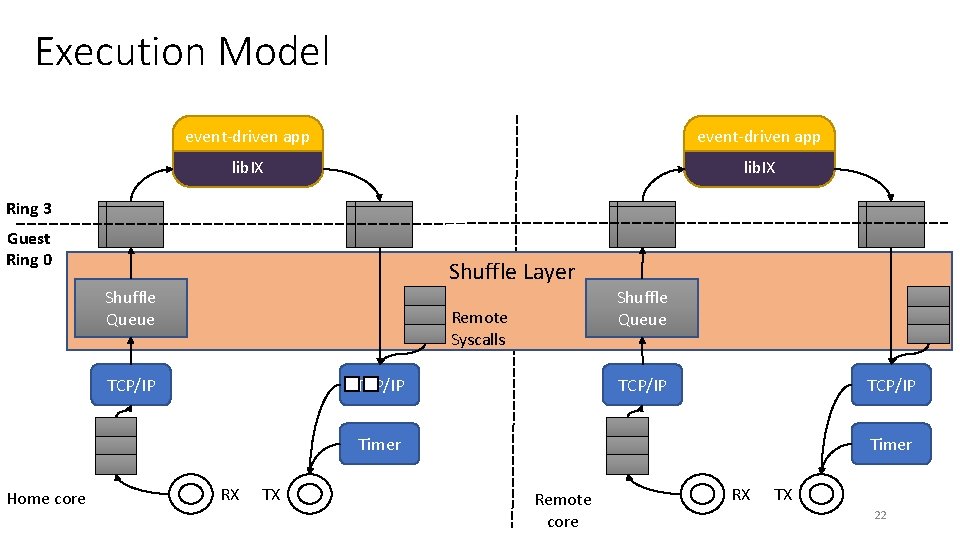

ZygOS Design

Figure panels: IX Design, ZygOS Design

1. Application layer: event-based application that is agnostic to work-stealing
2. Shuffle layer: includes a per-core list of ready connections that allows stealing
3. Network layer: coherence- and sync-free network processing
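The shuffle layer's per-core list of ready connections can be pictured with a short sketch. This is a single-threaded illustration under stated assumptions, not the ZygOS implementation: the class `ShuffleLayer`, its method names, the round-robin choice of victim, and stealing the oldest ready connection are all hypothetical, and the synchronization a real multi-core system needs is omitted.

```python
from collections import deque


class ShuffleLayer:
    """Per-core lists of ready connections that idle cores may steal from."""

    def __init__(self, num_cores):
        self.ready = [deque() for _ in range(num_cores)]

    def enqueue(self, home_core, connection):
        # The network layer of the home core marks a connection as ready.
        self.ready[home_core].append(connection)

    def next_connection(self, core):
        """Return (connection, home_core) for the given core to execute.

        A core serves its own list first; if that list is empty it
        scans the other cores and steals their oldest ready connection.
        Returns (None, None) when there is no work anywhere.
        """
        if self.ready[core]:
            return self.ready[core].popleft(), core
        for offset in range(1, len(self.ready)):
            victim = (core + offset) % len(self.ready)
            if self.ready[victim]:
                return self.ready[victim].popleft(), victim
        return None, None


layer = ShuffleLayer(num_cores=2)
layer.enqueue(0, "conn-a")
layer.enqueue(0, "conn-b")
print(layer.next_connection(1))  # core 1 is idle, so it steals from core 0
print(layer.next_connection(0))  # core 0 serves its own remaining connection
```

Because the application layer only sees connections handed to it, it stays agnostic to whether a connection was local or stolen, which matches the layering described on the slide.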

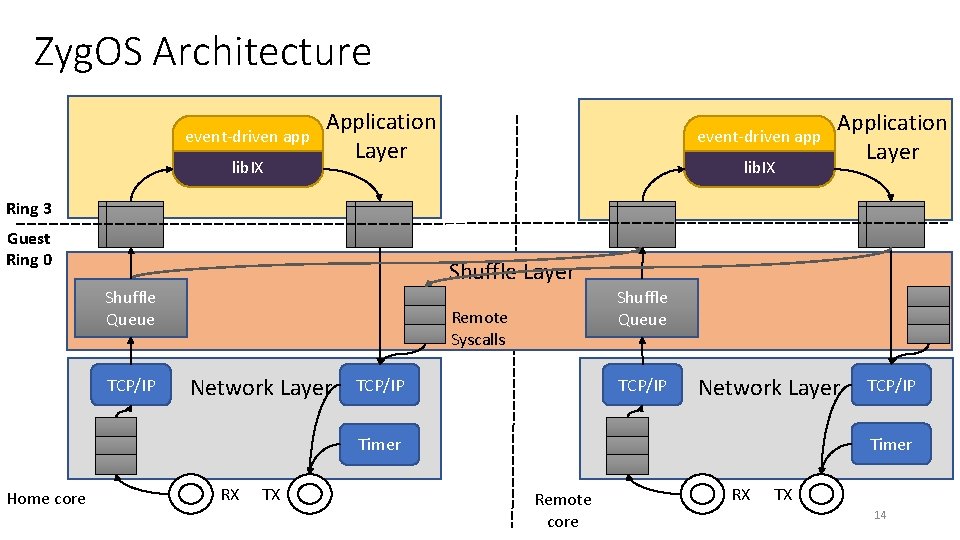

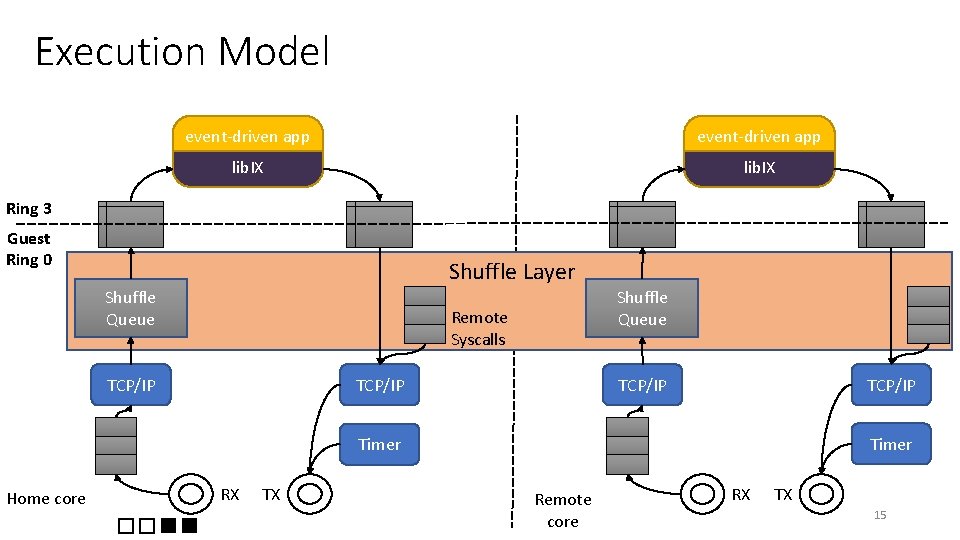

ZygOS Architecture

Diagram labels: Application Layer (event-driven app, libIX; Ring 3); Shuffle Layer (Shuffle Queue, Remote Syscalls; Guest Ring 0); Network Layer (TCP/IP, Timer, RX, TX), shown for a home core and a remote core.

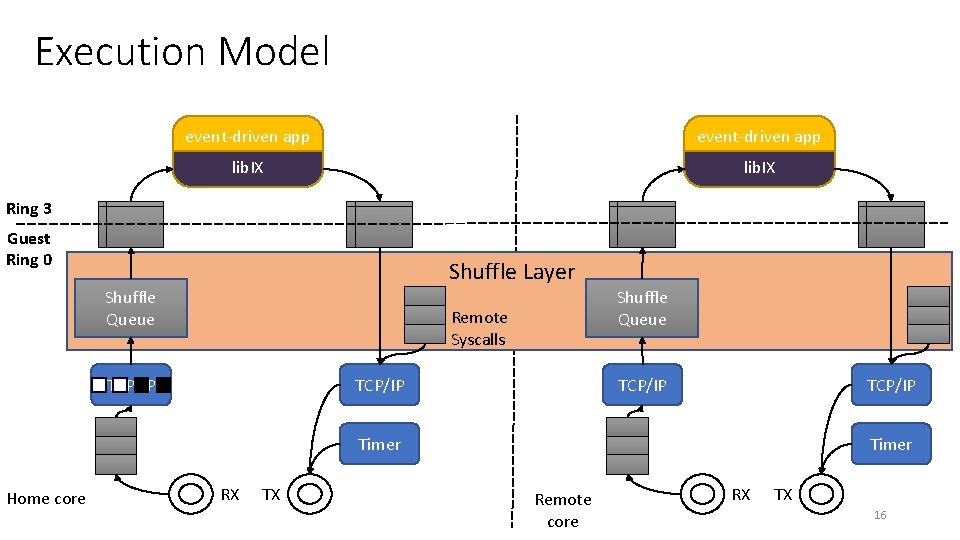

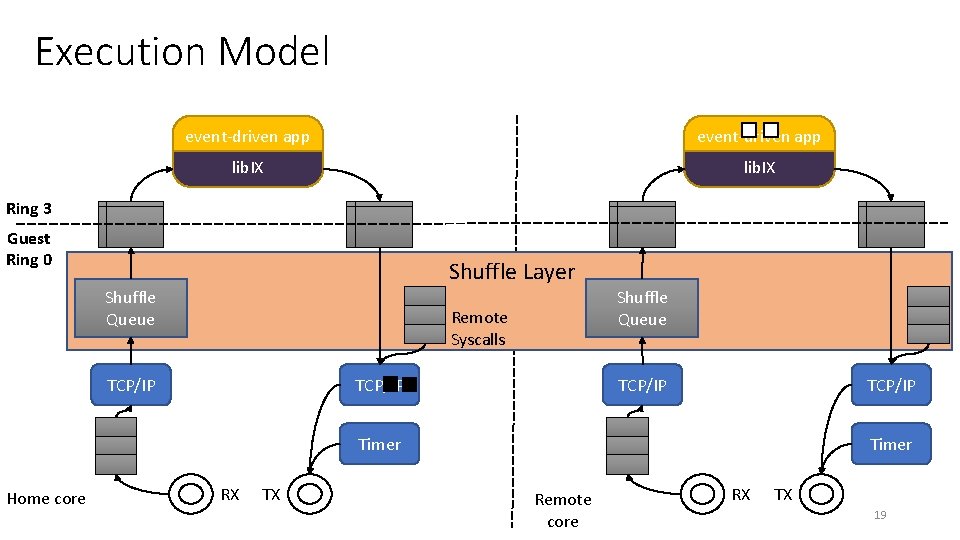

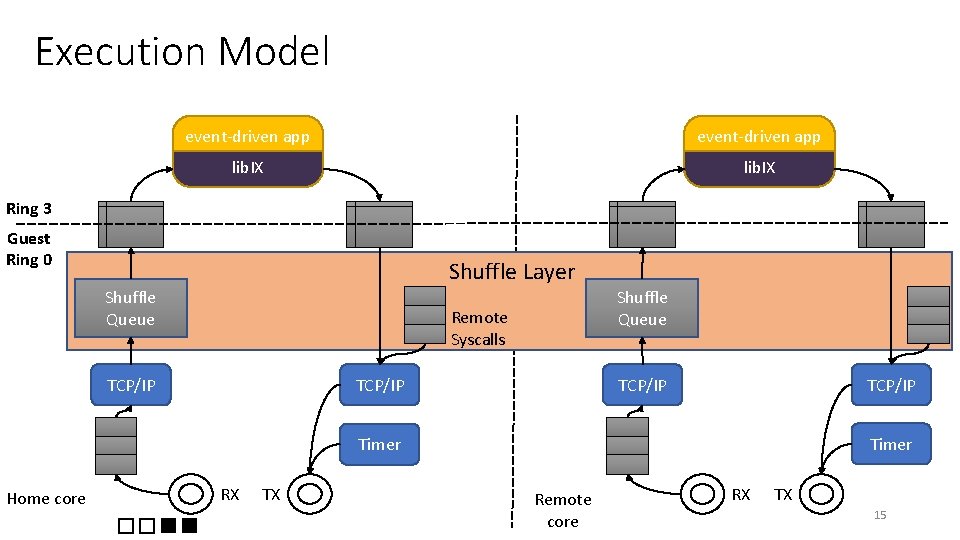

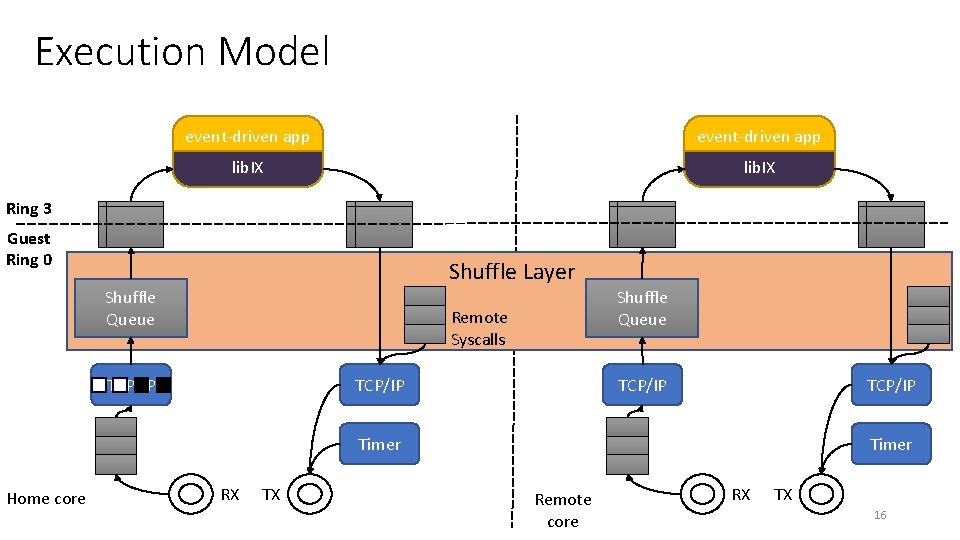

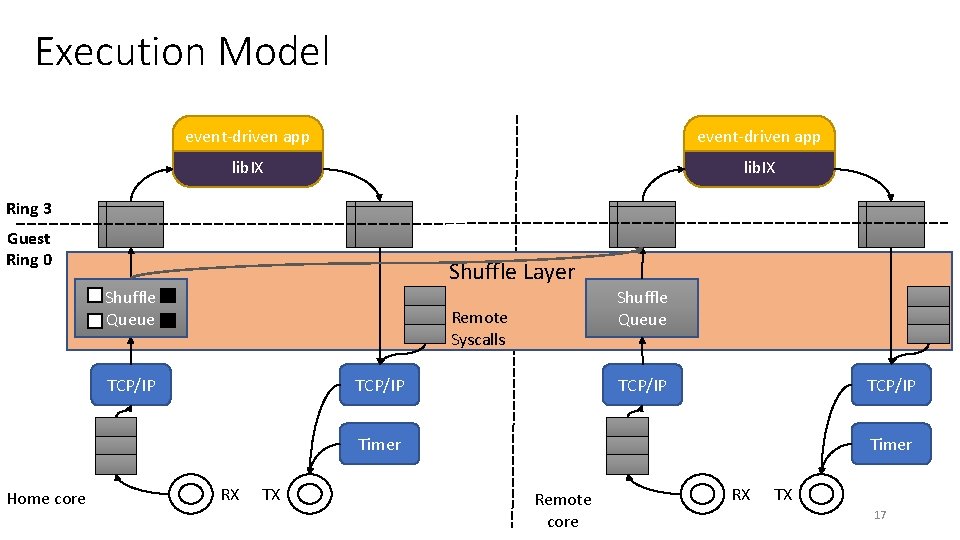

Execution Model

Diagram labels: Ring 3 (event-driven app, libIX); Guest Ring 0 (Shuffle Layer, Shuffle Queue, Remote Syscalls, TCP/IP, Timer); Home core (RX, TX); Remote core (RX, TX).

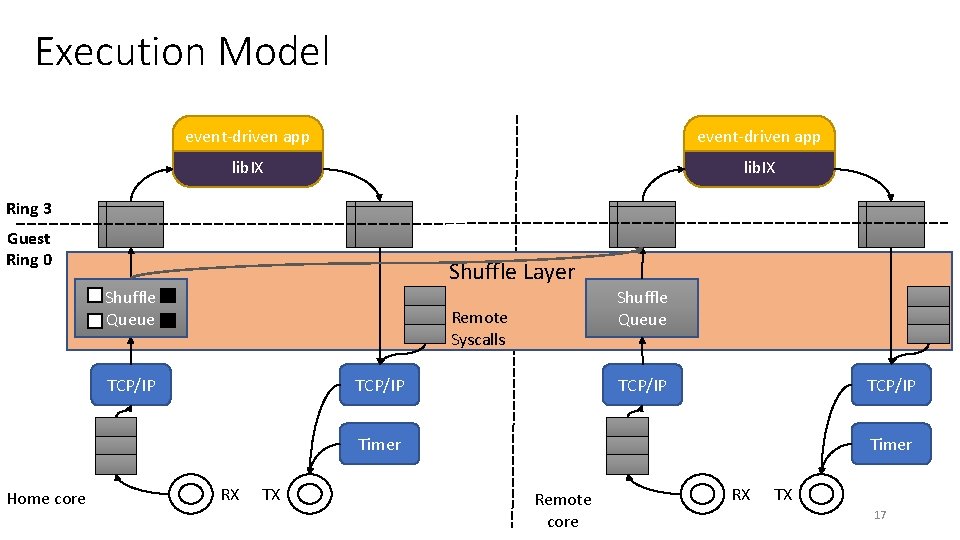

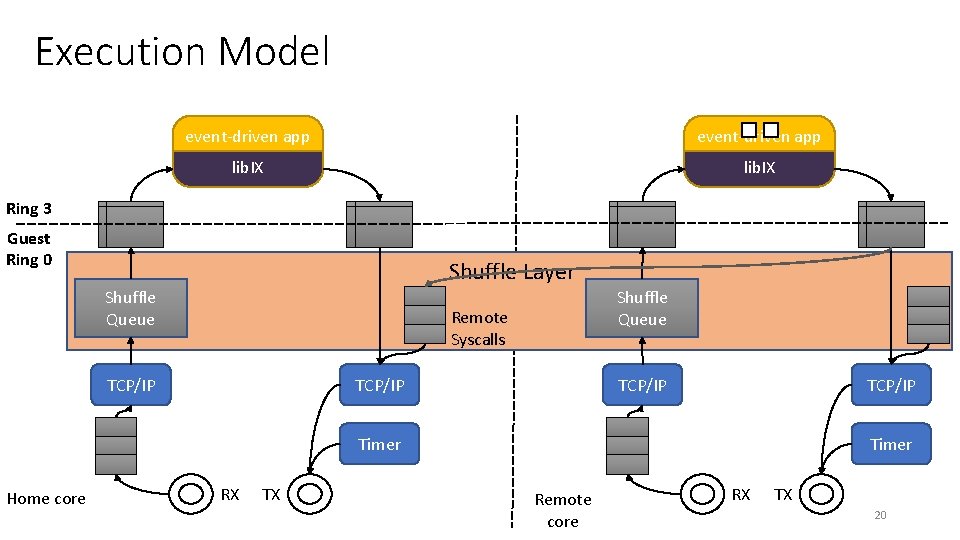

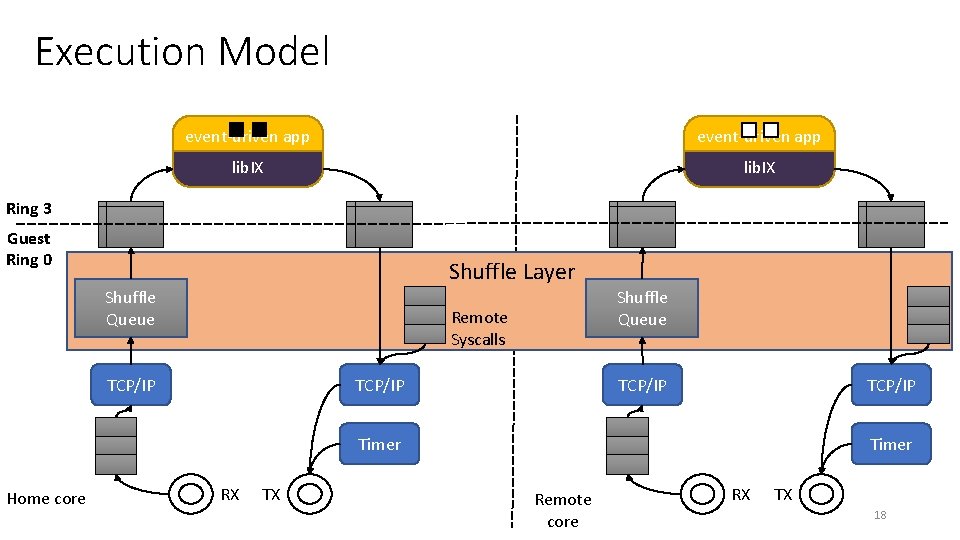

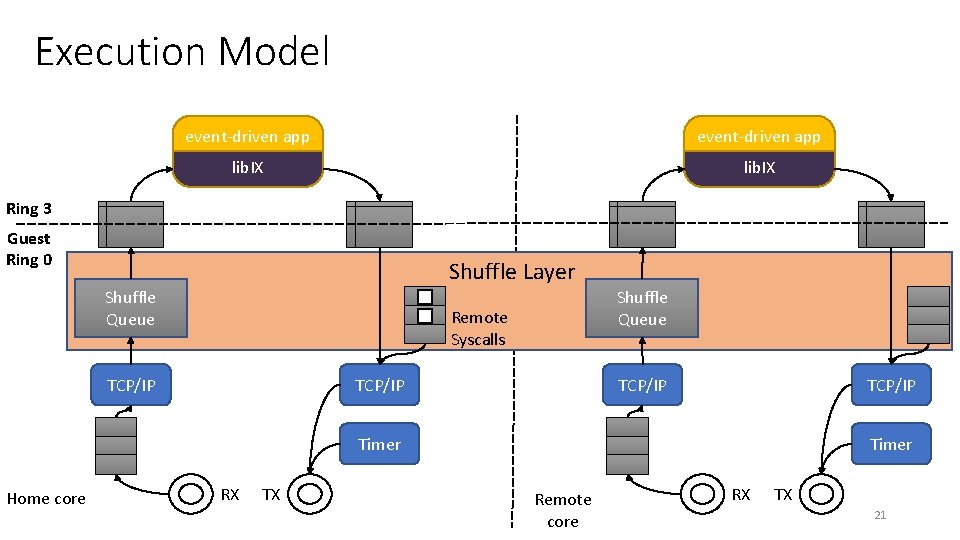

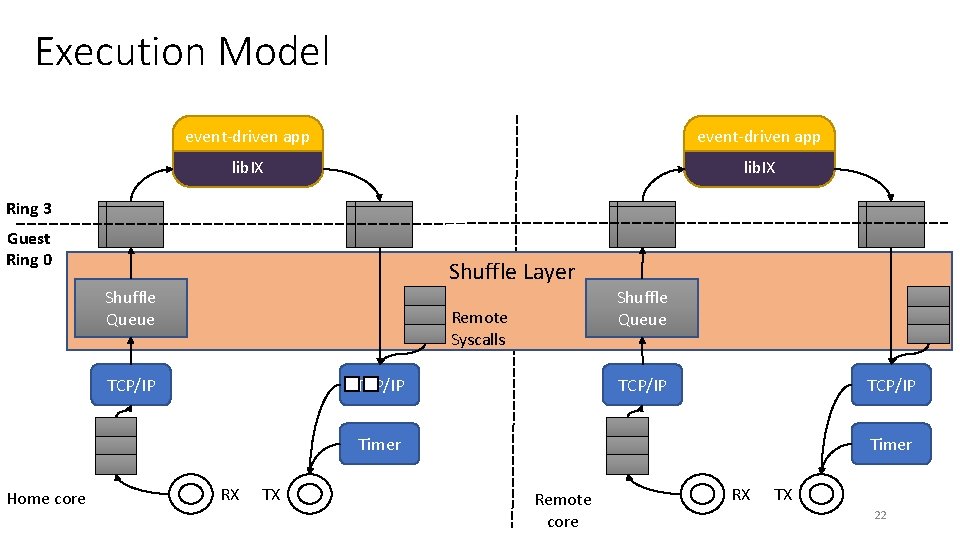

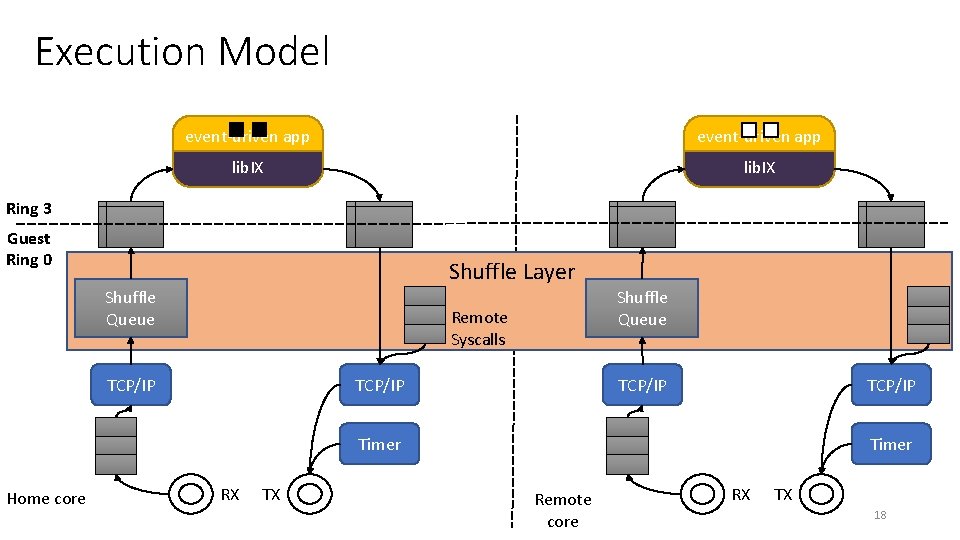

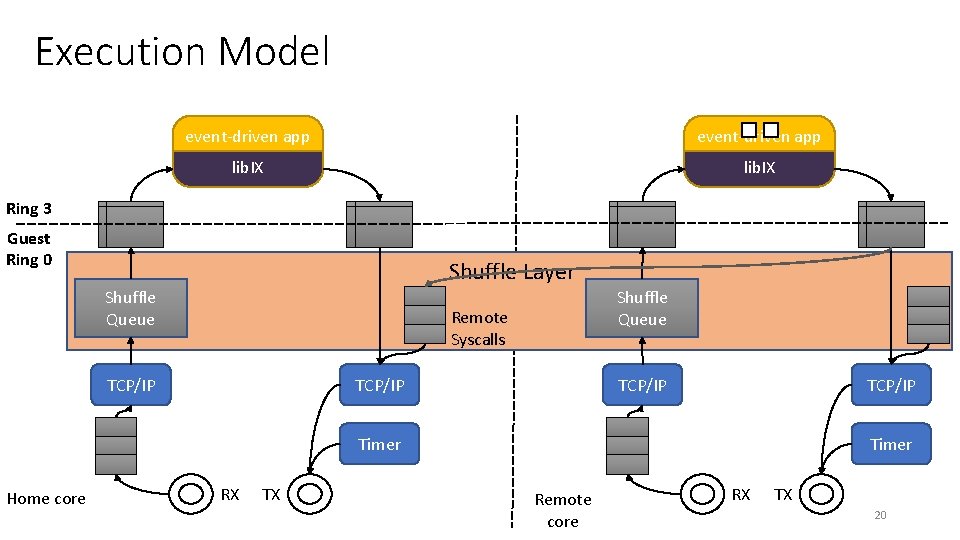

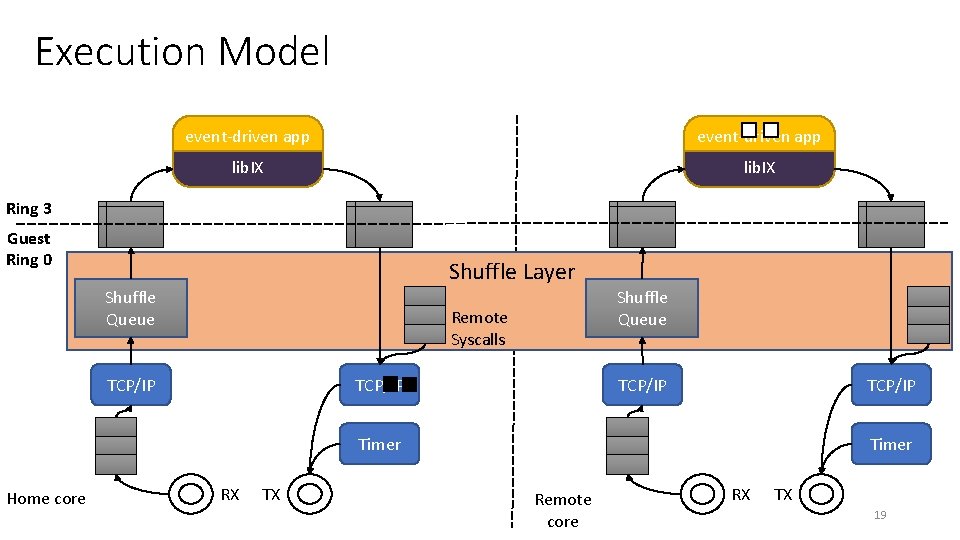

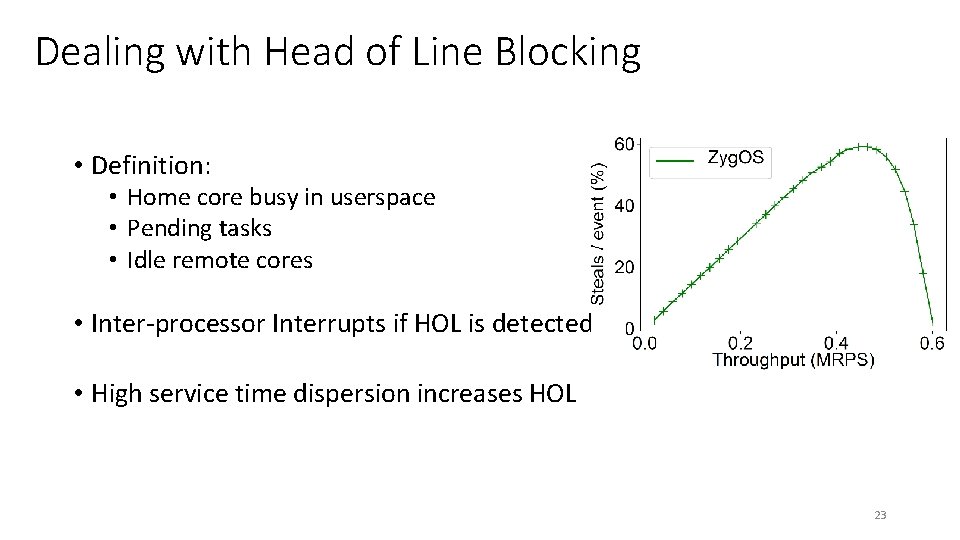

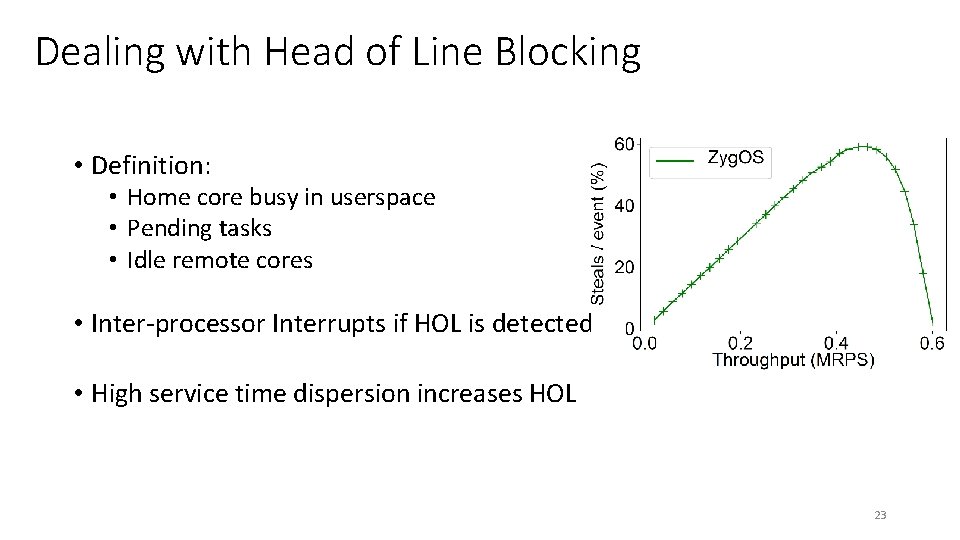

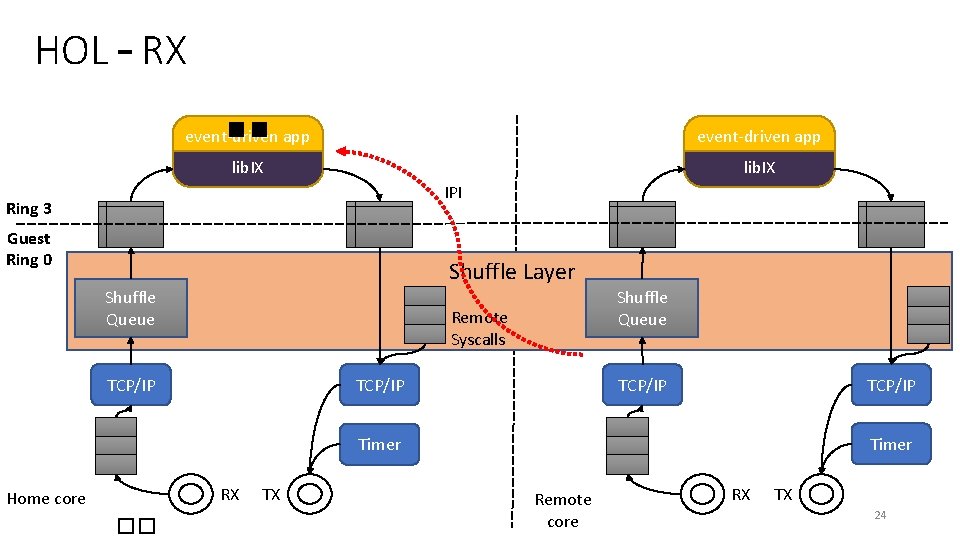

Dealing with Head-of-Line Blocking

- Definition:
  - Home core busy in userspace
  - Pending tasks
  - Idle remote cores
- Inter-processor interrupts (IPIs) if HOL is detected
- High service time dispersion increases HOL
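The three-part definition of head-of-line blocking above can be written as a simple predicate. The sketch below is an illustration only and does not reflect how ZygOS tracks core state: the `CoreState` fields and the function `cores_needing_ipi` are hypothetical, and the actual sending of an inter-processor interrupt is left out.

```python
from dataclasses import dataclass


@dataclass
class CoreState:
    in_userspace: bool    # core is busy running application code
    pending_tasks: int    # tasks waiting for this (home) core
    idle: bool            # core has nothing to run


def cores_needing_ipi(cores):
    """Return indices of home cores that exhibit head-of-line blocking.

    HOL is flagged when a home core is busy in userspace, has pending
    tasks, and at least one other core is idle and could help.
    """
    if not any(core.idle for core in cores):
        return []  # nobody is free to help, so an IPI would not pay off
    return [
        index
        for index, core in enumerate(cores)
        if core.in_userspace and core.pending_tasks > 0
    ]


cores = [
    CoreState(in_userspace=True, pending_tasks=3, idle=False),
    CoreState(in_userspace=False, pending_tasks=0, idle=True),
]
print(cores_needing_ipi(cores))
```

With a long-running request on core 0 and core 1 idle, core 0 is the one flagged, which is the situation that becomes more frequent as service time dispersion grows.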

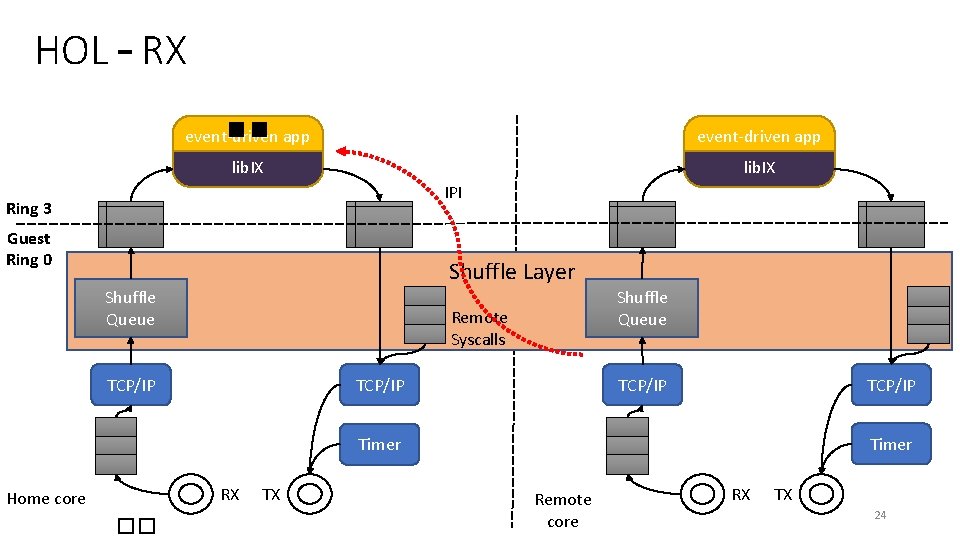

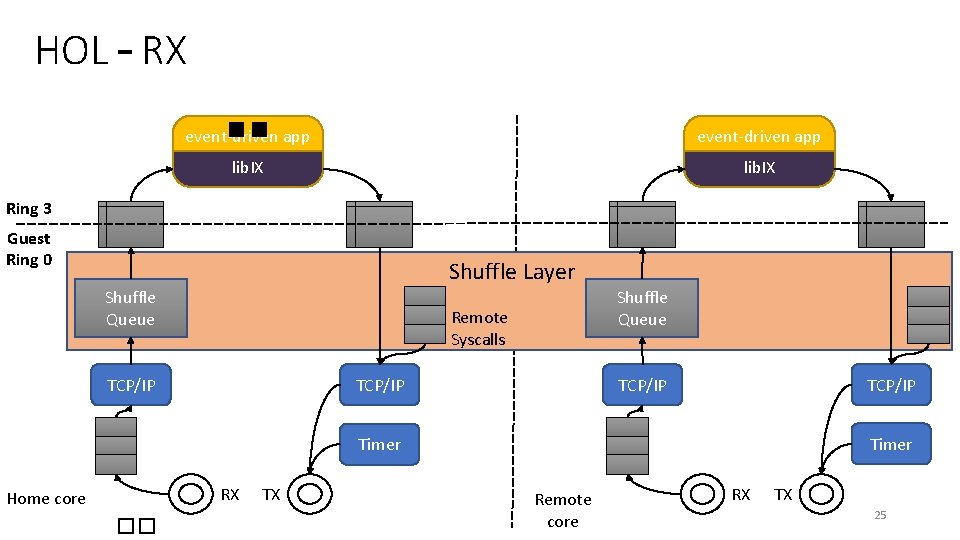

HOL – RX

Diagram labels: Ring 3 (event-driven app, libIX); IPI; Guest Ring 0 (Shuffle Layer, Shuffle Queue, Remote Syscalls, TCP/IP, Timer); Home core (RX, TX); Remote core (RX, TX).

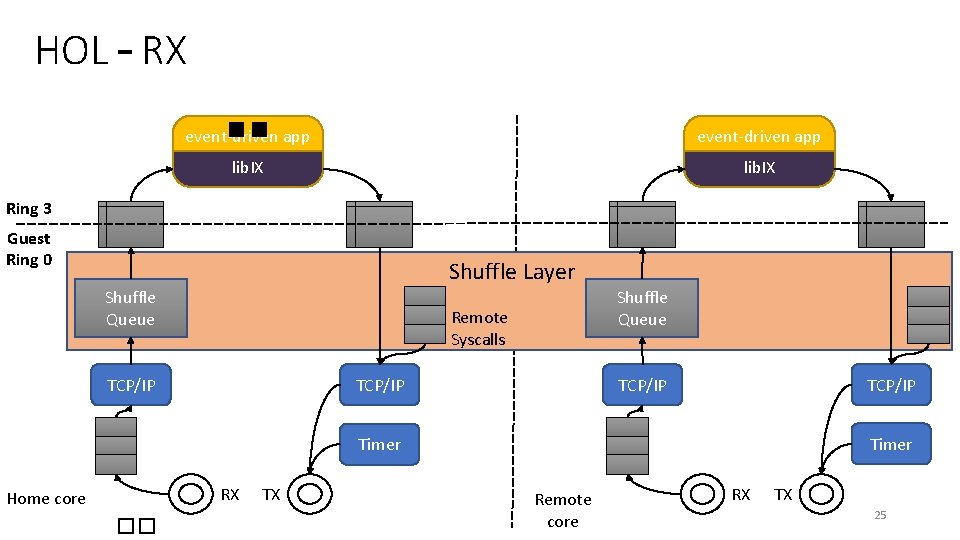

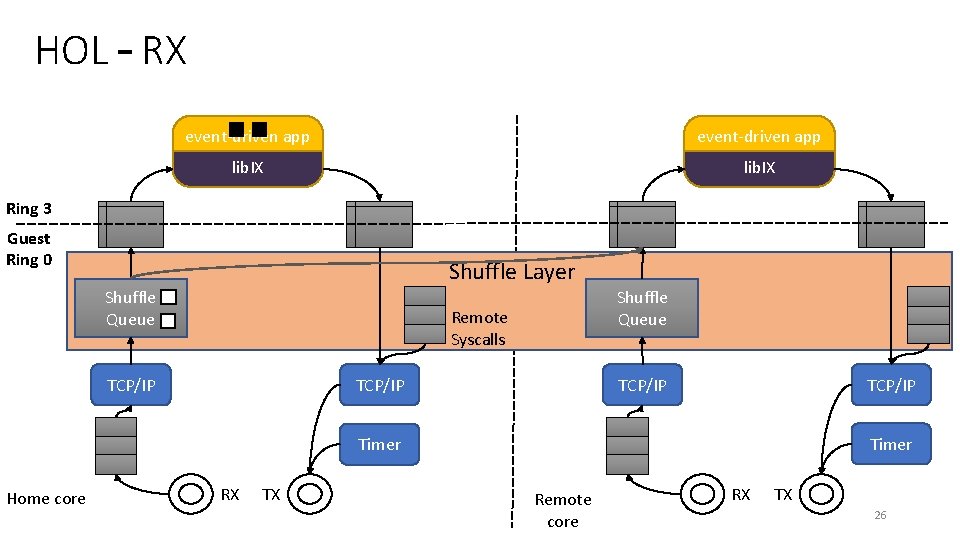

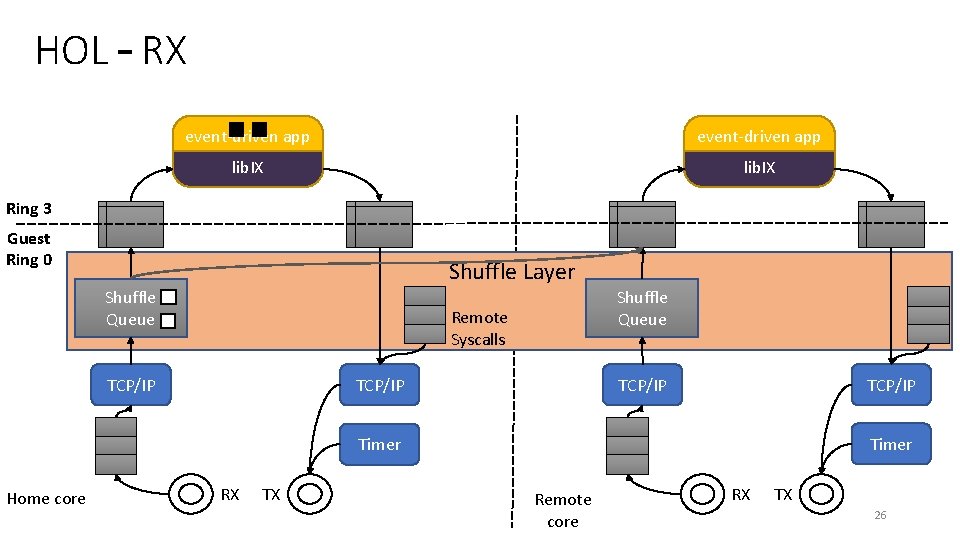

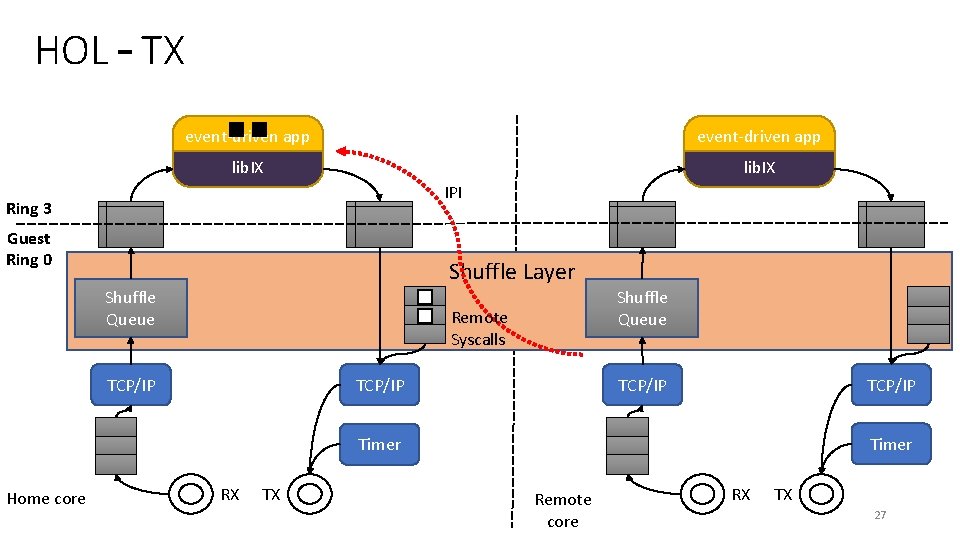

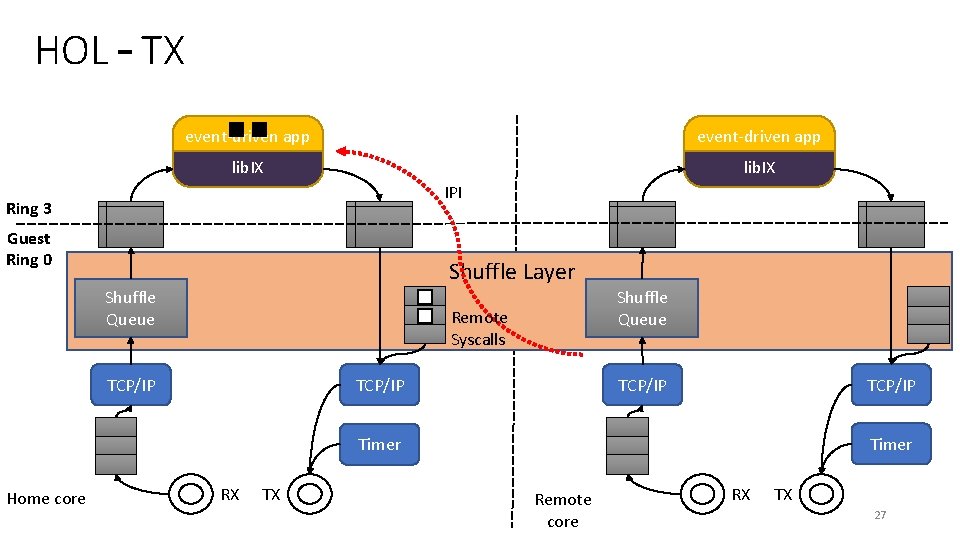

HOL – TX

Diagram labels: Ring 3 (event-driven app, libIX); IPI; Guest Ring 0 (Shuffle Layer, Shuffle Queue, Remote Syscalls, TCP/IP, Timer); Home core (RX, TX); Remote core (RX, TX).

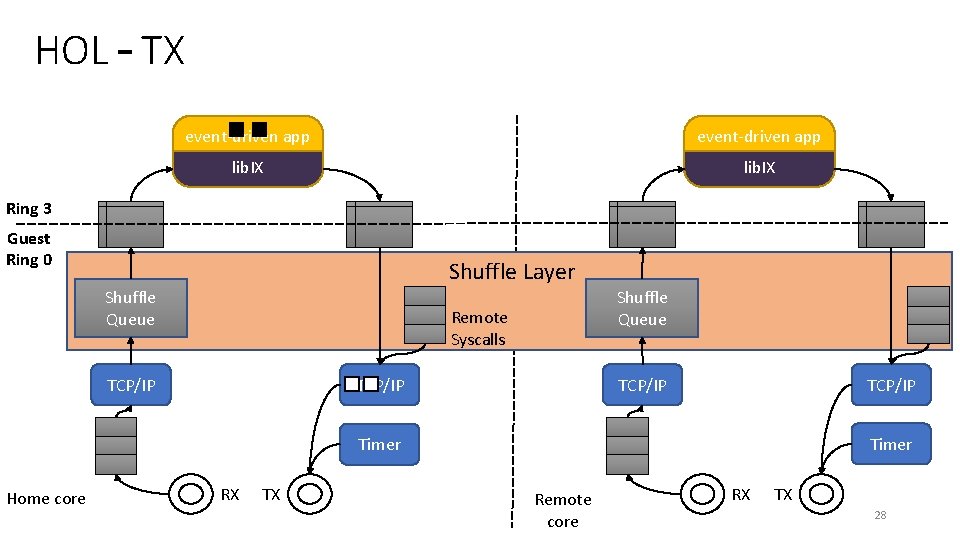

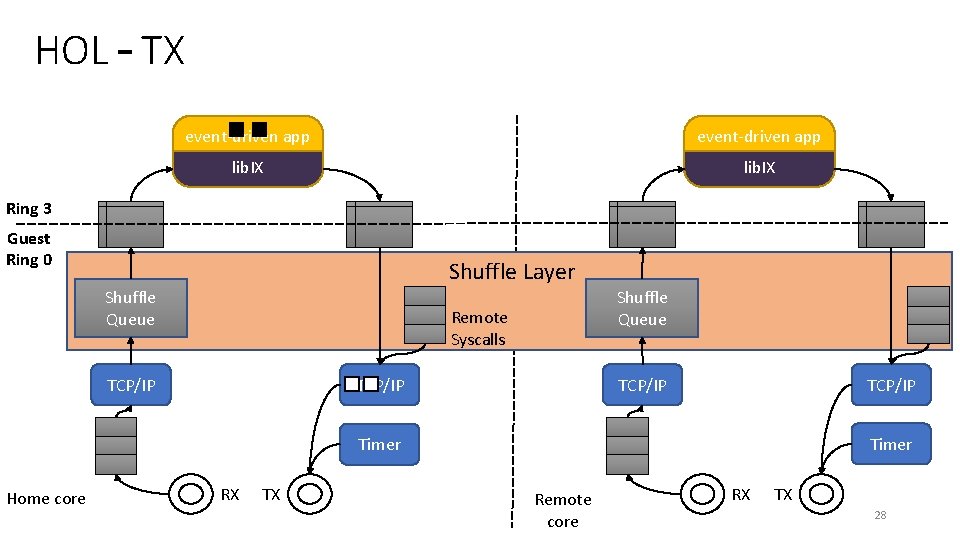

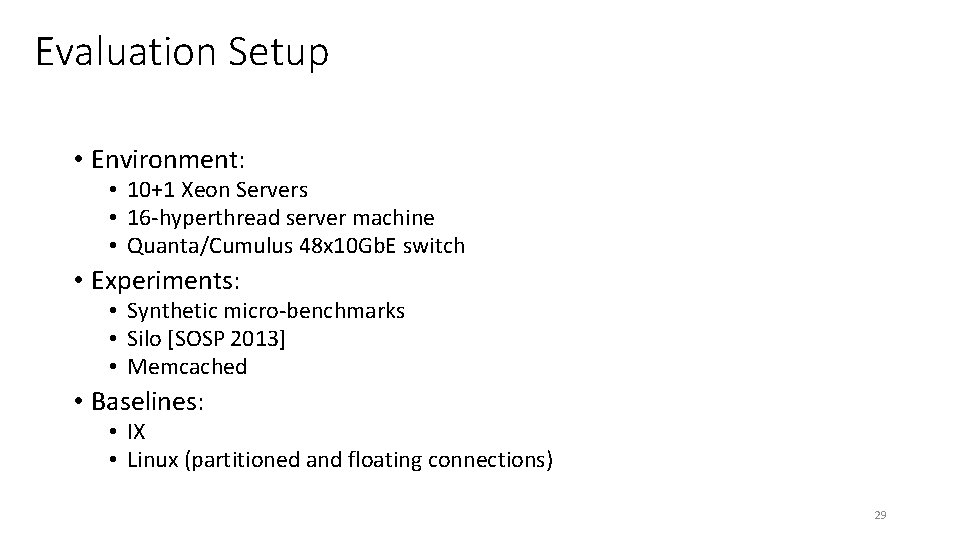

Evaluation Setup

- Environment:
  - 10+1 Xeon servers
  - 16-hyperthread server machine
  - Quanta/Cumulus 48 × 10 GbE switch
- Experiments:
  - Synthetic micro-benchmarks
  - Silo [SOSP 2013]
  - Memcached
- Baselines:
  - IX
  - Linux (partitioned and floating connections)

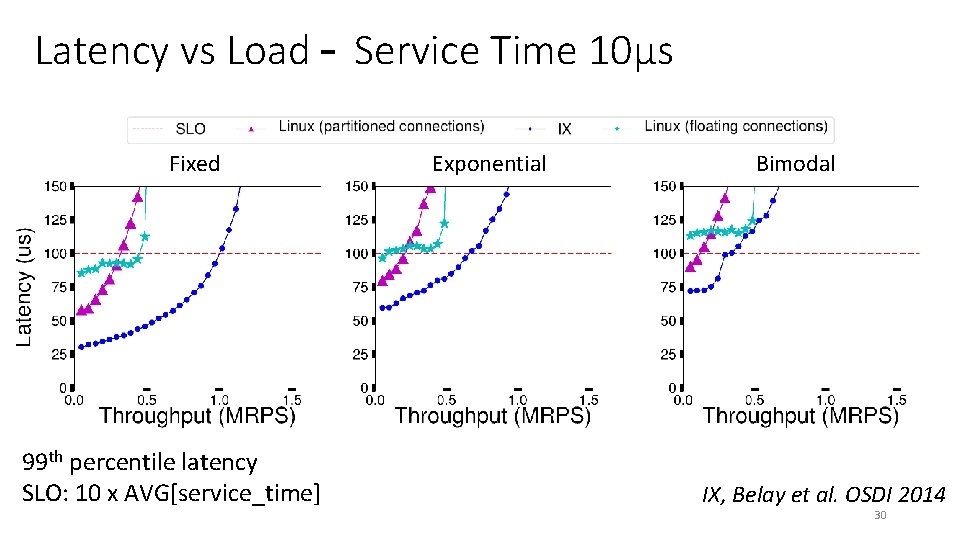

Latency vs Load – Service Time 10μs

Figure: 99th percentile latency vs. load (panels: Fixed, Exponential, Bimodal). SLO: 10 × AVG[service_time].

Reference: IX, Belay et al., OSDI 2014

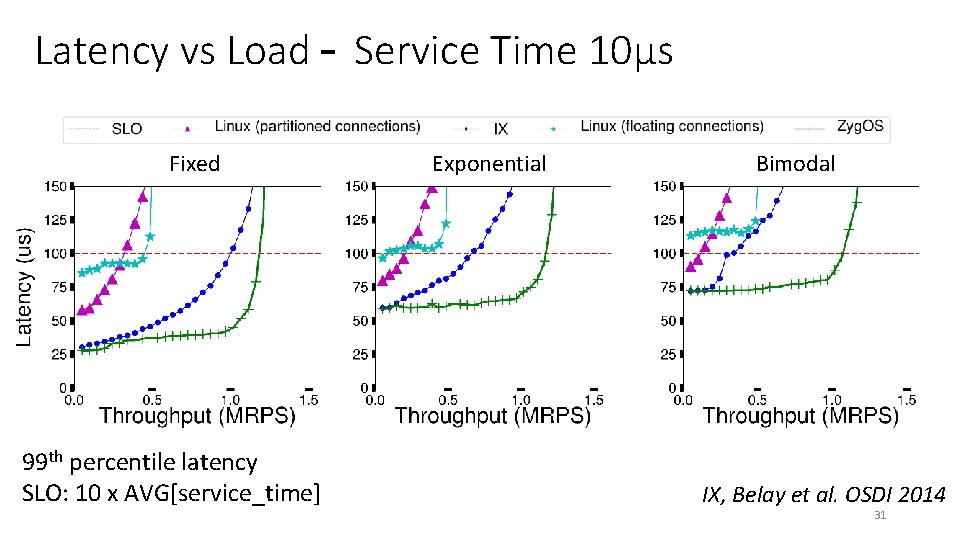

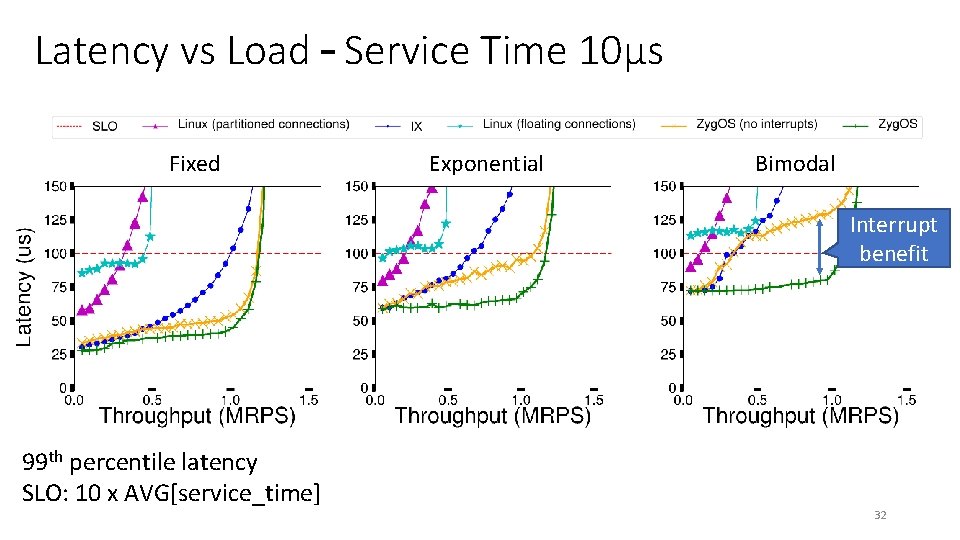

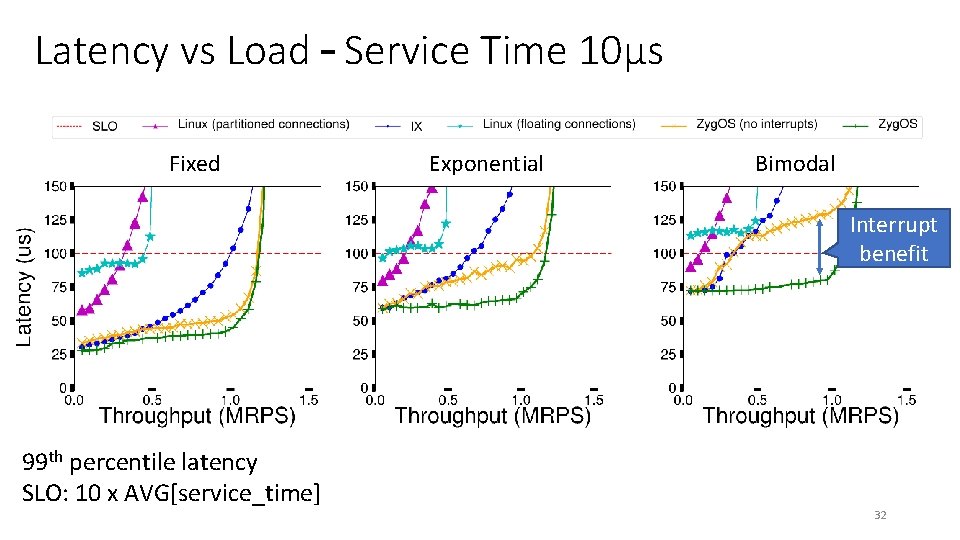

Latency vs Load – Service Time 10μs

Figure: 99th percentile latency vs. load (panels: Fixed, Exponential, Bimodal). SLO: 10 × AVG[service_time].

- Interrupt benefit

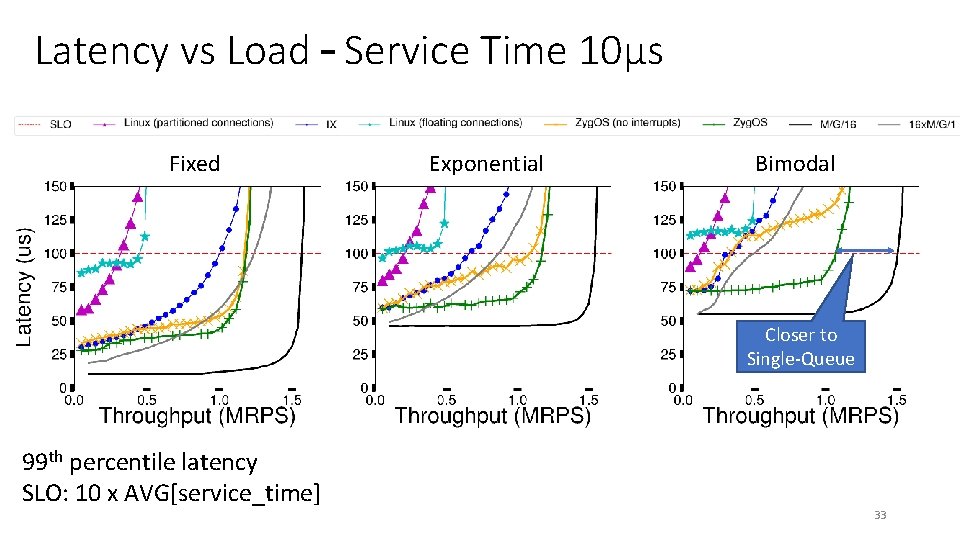

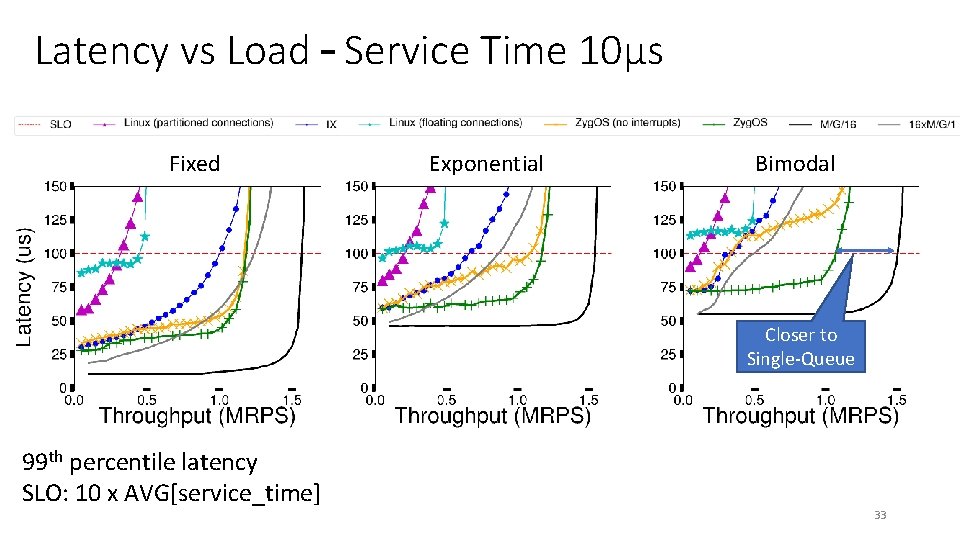

Latency vs Load – Service Time 10μs

Figure: 99th percentile latency vs. load (panels: Fixed, Exponential, Bimodal). SLO: 10 × AVG[service_time].

- Closer to Single-Queue

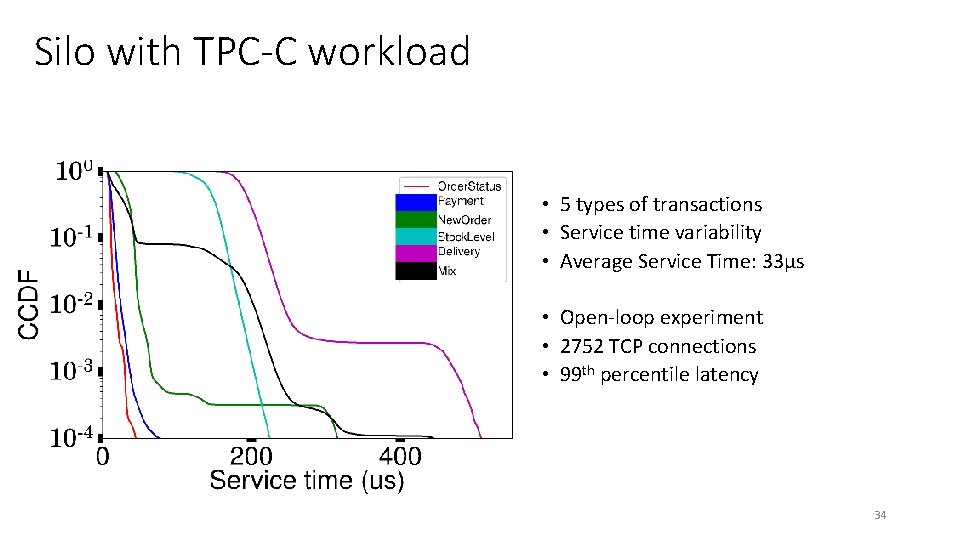

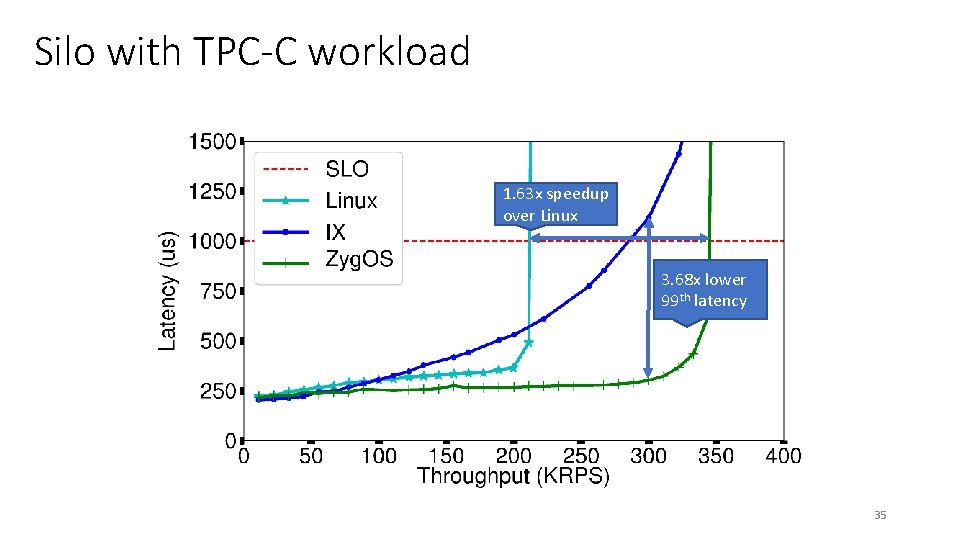

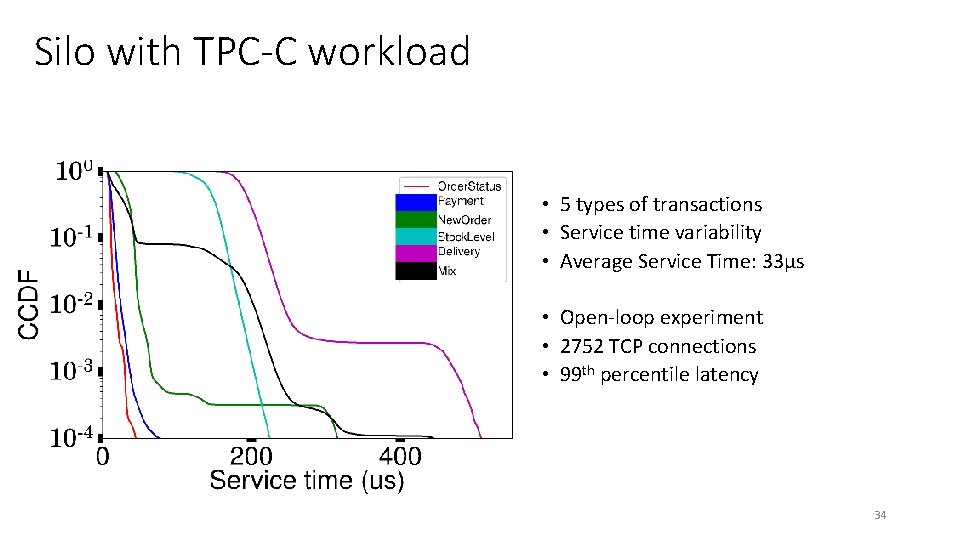

Silo with TPC-C workload

- 5 types of transactions
- Service time variability
- Average service time: 33μs
- Open-loop experiment
- 2752 TCP connections
- 99th percentile latency

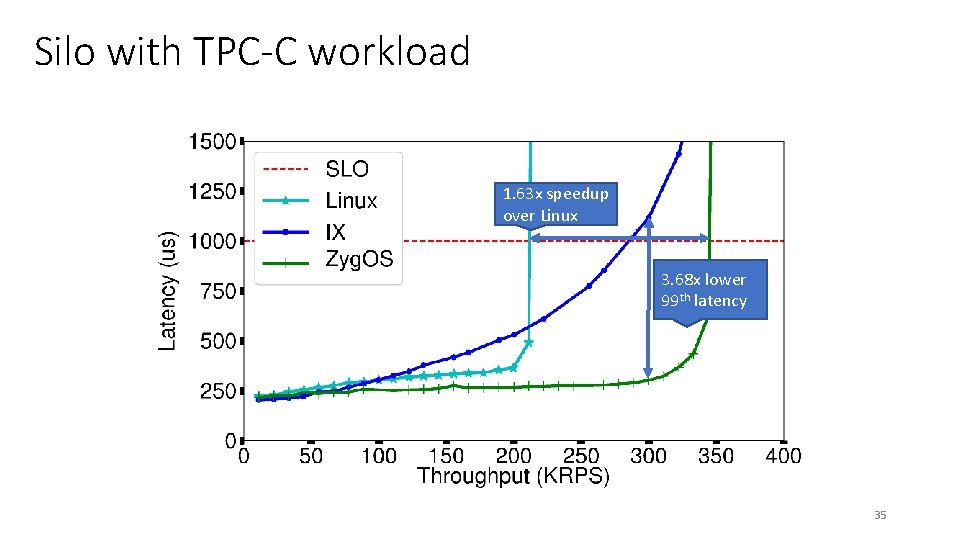

Silo with TPC-C workload

- 1.63× speedup over Linux
- 3.68× lower 99th percentile latency

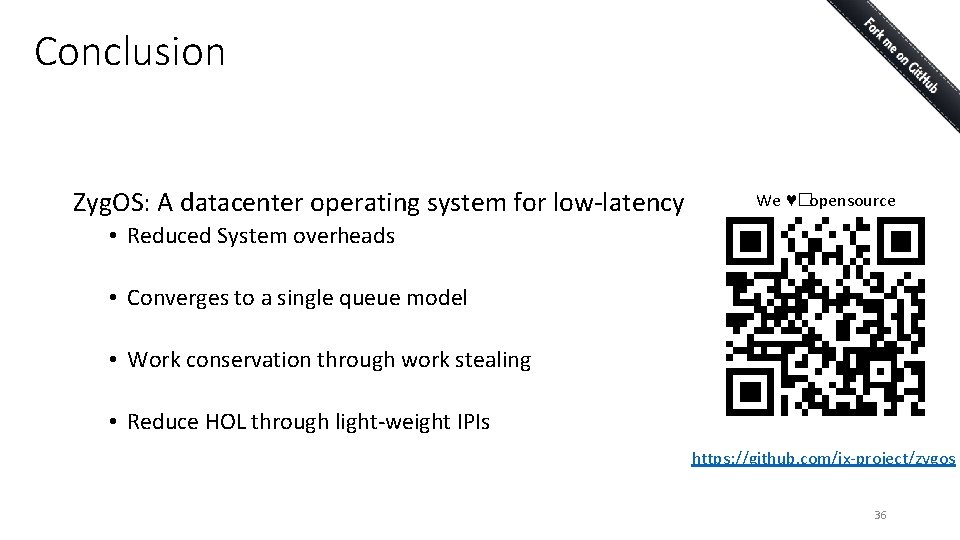

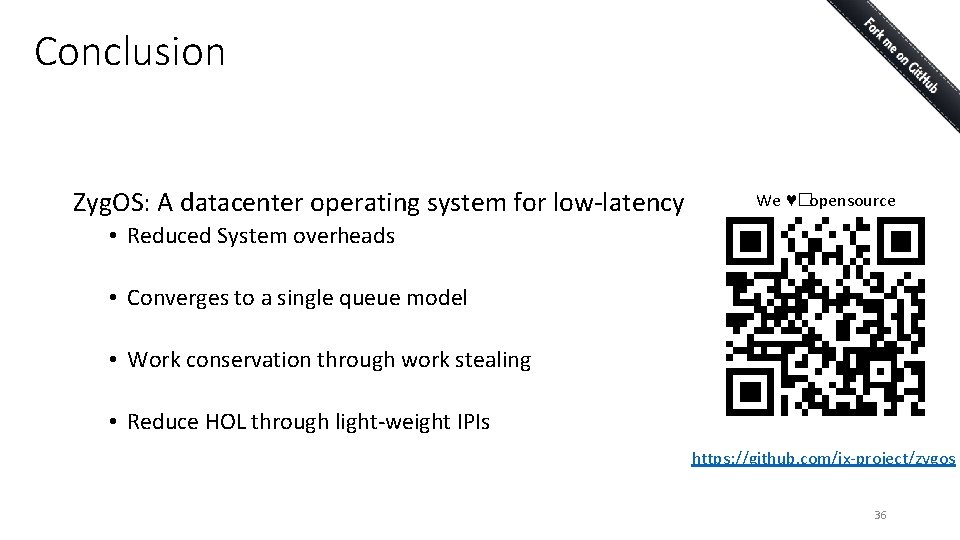

Conclusion

ZygOS: A datacenter operating system for low latency

- Reduced system overheads
- Converges to a single-queue model
- Work conservation through work stealing
- Reduce HOL through light-weight IPIs

We ♥ open source: https://github.com/ix-project/zygos