You Thought You Understood Multithreading: a deep investigation

- Slides: 51

You Thought You Understood Multithreading: a deep investigation on how .NET multithreading primitives map to hardware and Windows Kernel
Gaël Fraiteur, PostSharp Technologies, Founder & Principal Engineer (@gfraiteur)

Hello! My name is GAEL. No, I don’t think my accent is funny. My Twitter: @gfraiteur

Agenda
1. Hardware
2. Windows Kernel
3. Application

Hardware: Microarchitecture

There was no RAM in Colossus, the world’s first electronic programmable computing device (19
http://en.wikipedia.org/wiki/File:Colossus.jpg

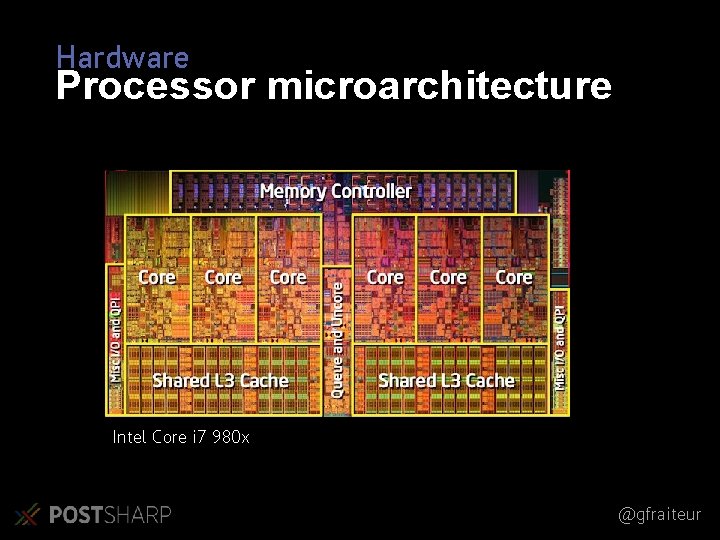

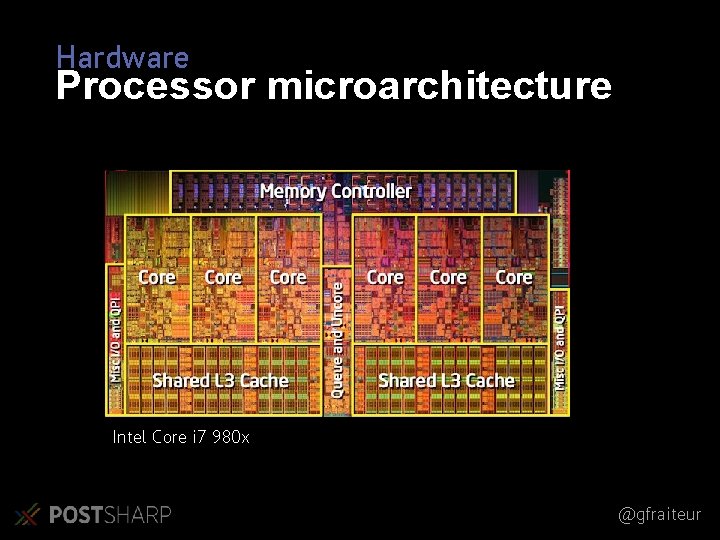

Hardware: Processor microarchitecture
Intel Core i7 980x

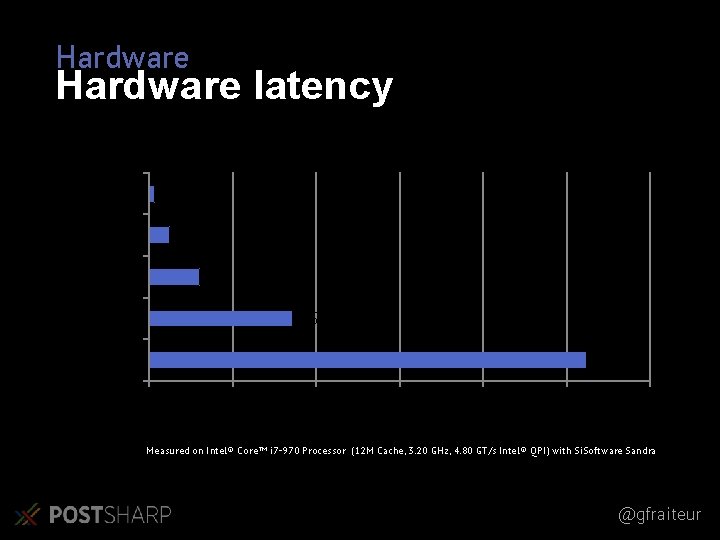

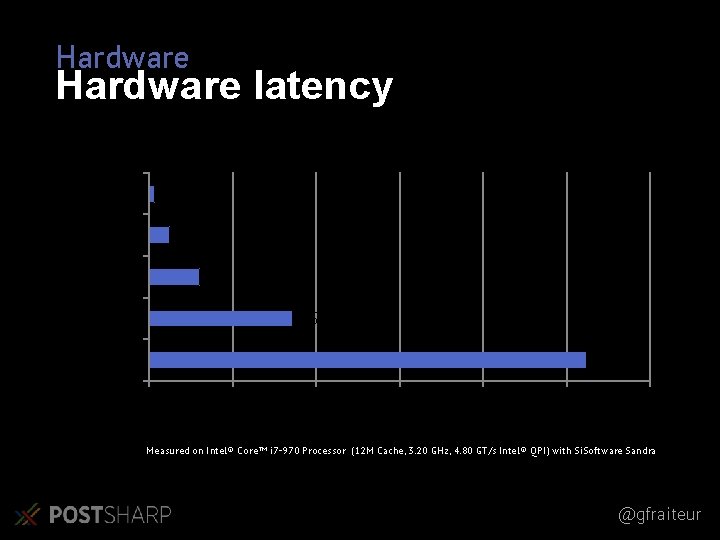

Hardware: Latency
• CPU cycle: 0.3 ns
• L1 cache: 1.2 ns
• L2 cache: 3 ns
• L3 cache: 8.58 ns
• DRAM: 26.2 ns
Measured on Intel® Core™ i7-970 Processor (12M Cache, 3.20 GHz, 4.80 GT/s Intel® QPI) with SiSoftware Sandra.

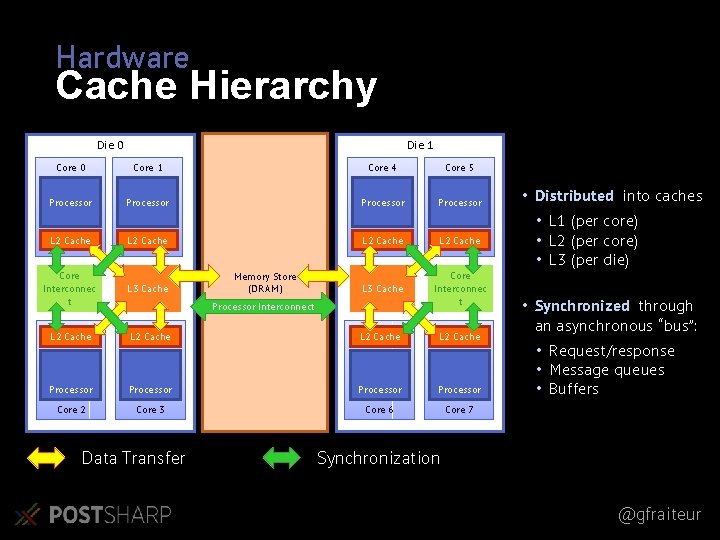

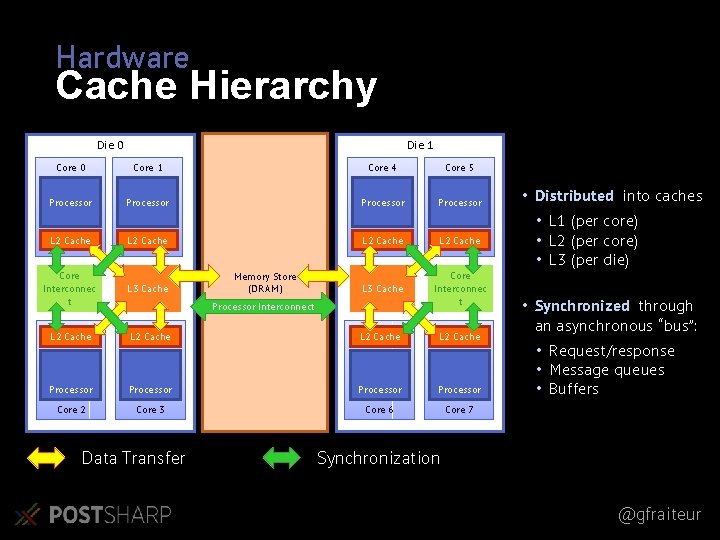

Hardware: Cache Hierarchy
[Diagram: Die 0 and Die 1; Cores 0 to 7; per-core L2 caches; per-die L3 caches; core interconnect; processor interconnect; memory store (DRAM). Labels: Data Transfer, Synchronization]
• Distributed into caches
  • L1 (per core)
  • L2 (per core)
  • L3 (per die)
• Synchronized through an asynchronous “bus”:
  • Request/response
  • Message queues
  • Buffers

Hardware: Memory Ordering
• Issues due to implementation details:
  • Asynchronous bus
  • Caching & Buffering
  • Out-of-order execution

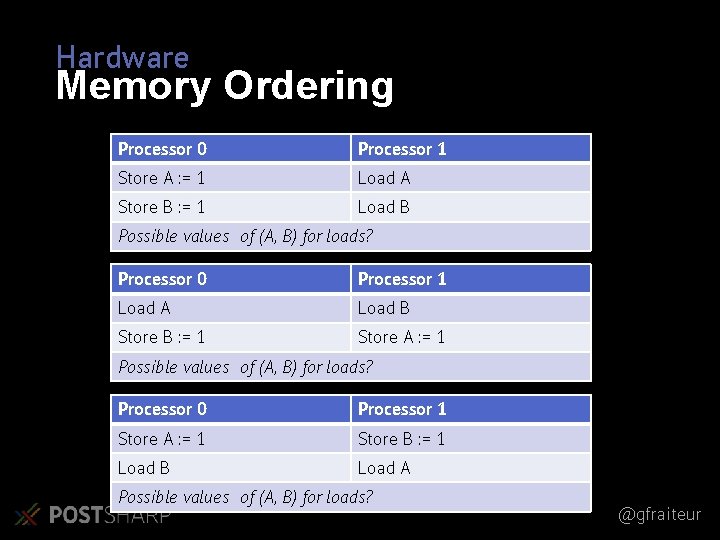

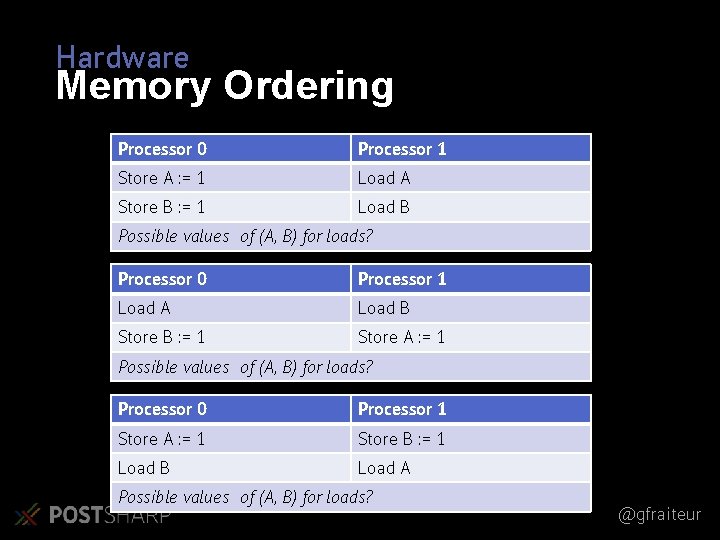

Hardware: Memory Ordering

Processor 0        Processor 1
Store A := 1       Load A
Store B := 1       Load B
Possible values of (A, B) for loads?

Processor 0        Processor 1
Load A             Load B
Store B := 1       Store A := 1
Possible values of (A, B) for loads?

Processor 0        Processor 1
Store A := 1       Store B := 1
Load B             Load A
Possible values of (A, B) for loads?

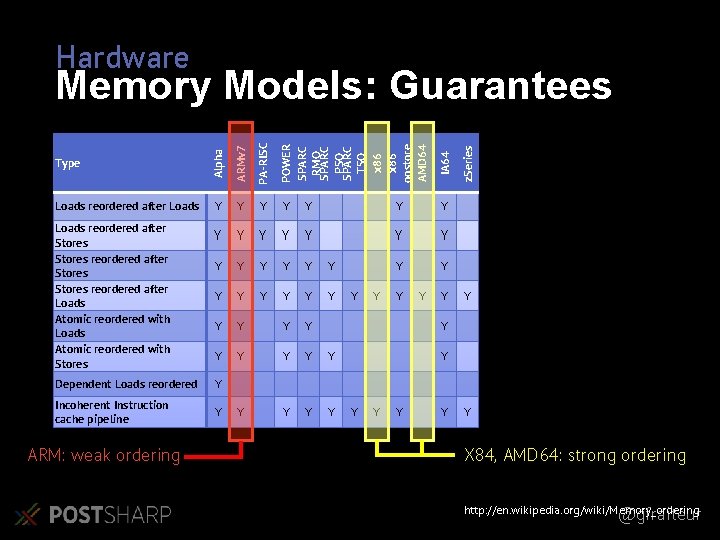

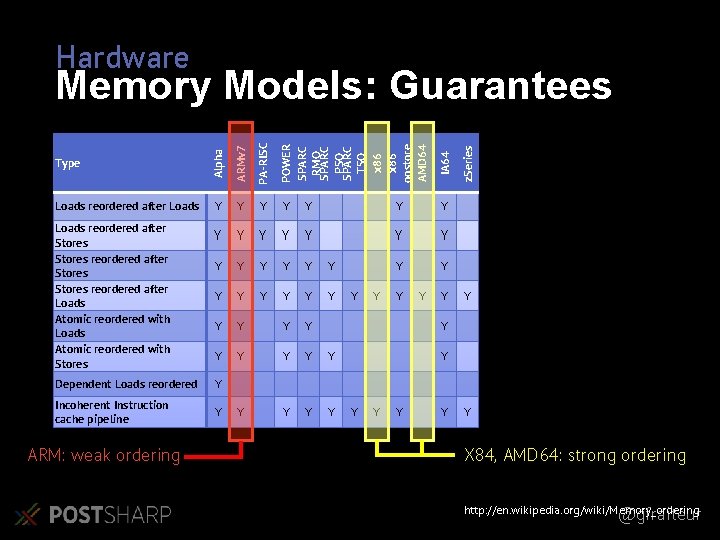

Hardware: Memory Models: Guarantees
[Table: which reorderings each architecture permits (cells marked Y); the cell values did not survive extraction. See http://en.wikipedia.org/wiki/Memory_ordering]
• Surviving row labels: Loads reordered after Loads; Loads reordered after Stores; Stores reordered after Loads; Atomic reordered with Stores; Dependent Loads reordered; Incoherent Instruction cache pipeline
• Surviving column labels: Alpha, ARMv7, PA-RISC, POWER, SPARC RMO, SPARC PSO, SPARC TSO, x86 oostore, AMD64, IA64, zSeries
• ARM: weak ordering
• x86, AMD64: strong ordering

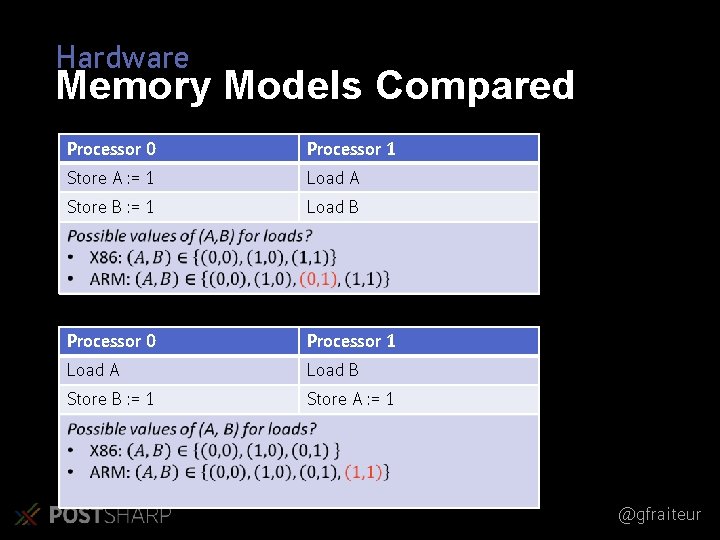

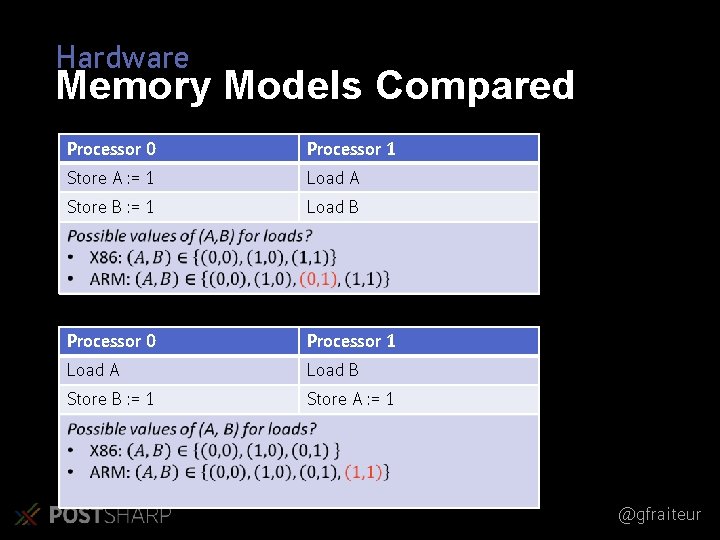

Hardware: Memory Models Compared

Processor 0        Processor 1
Store A := 1       Load A
Store B := 1       Load B

Processor 0        Processor 1
Load A             Load B
Store B := 1       Store A := 1

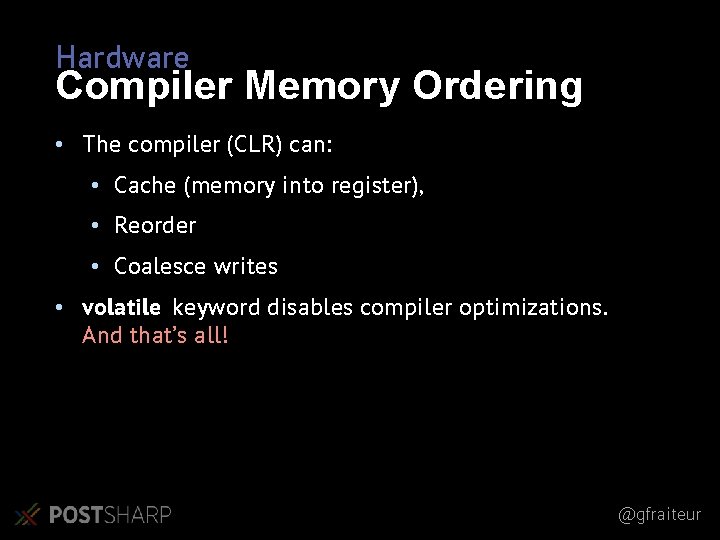

Hardware: Compiler Memory Ordering
• The compiler (CLR) can:
  • Cache (memory into register)
  • Reorder
  • Coalesce writes
• The volatile keyword disables compiler optimizations. And that’s all!
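The register-caching point above can be illustrated with a stop flag read in a tight loop. This is a minimal sketch, not taken from the talk: `VolatileFlagDemo` and `RunUntilStopped` are hypothetical names, and the delay is arbitrary.

```csharp
using System;
using System.Threading;

public static class VolatileFlagDemo
{
    // Without 'volatile', the JIT is allowed to read _stop once, keep the
    // value in a register, and never observe the write from the other thread.
    private static volatile bool _stop;

    // Starts a worker that loops until the flag is set, sets the flag after
    // delayMs milliseconds, and returns how many iterations the worker ran.
    public static long RunUntilStopped(int delayMs)
    {
        _stop = false;
        long iterations = 0;

        var worker = new Thread(() =>
        {
            while (!_stop)          // re-read from memory on every iteration
            {
                iterations++;
            }
        });

        worker.Start();
        Thread.Sleep(delayMs);
        _stop = true;               // the worker observes this and exits
        worker.Join();              // Join makes 'iterations' safe to read here
        return iterations;
    }
}
```

Calling `VolatileFlagDemo.RunUntilStopped(50)` returns once the worker has seen the flag; the iteration count depends on the machine.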

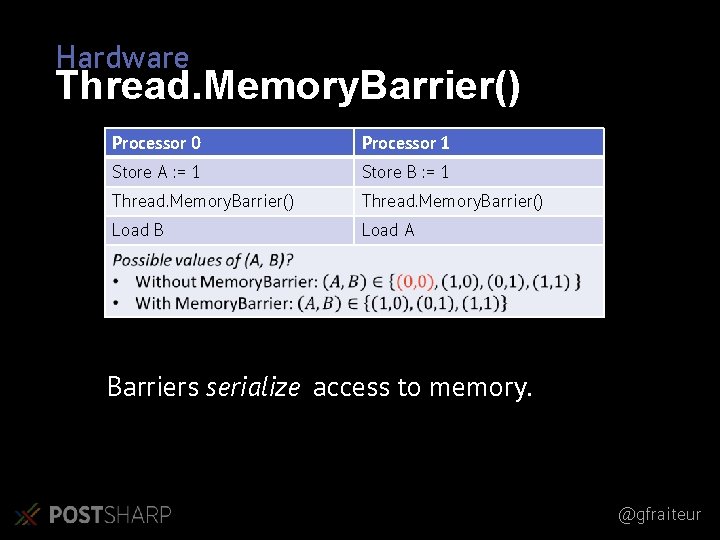

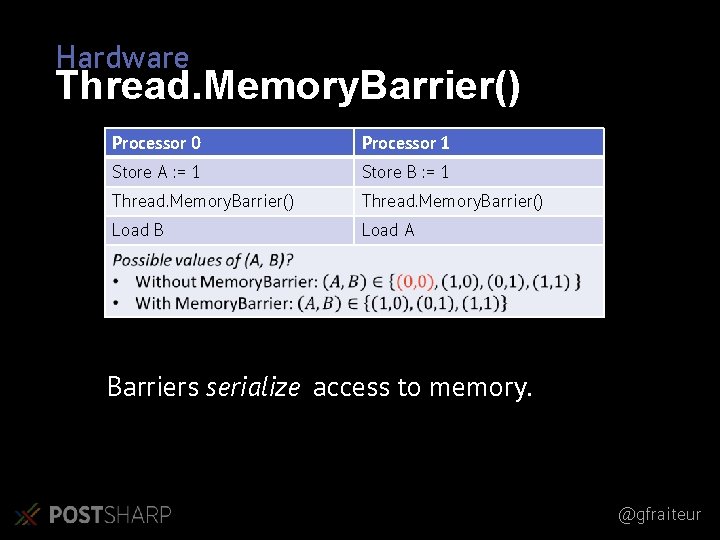

Hardware: Thread.MemoryBarrier()

Processor 0              Processor 1
Store A := 1             Store B := 1
Thread.MemoryBarrier()   Thread.MemoryBarrier()
Load B                   Load A

Barriers serialize access to memory.
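The store-then-load scenario on this slide can be written as a small experiment. The sketch below assumes each processor issues its own barrier between its store and its load; `StoreLoadBarrierDemo` and `CountBothZero` are hypothetical names. With the barriers in place, at least one side should see the other's store, so the outcome (0, 0) should never be counted.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class StoreLoadBarrierDemo
{
    private static int _a, _b;

    // Runs the experiment 'iterations' times and counts how often both
    // loads returned 0. With the full barriers the count should stay at 0.
    public static int CountBothZero(int iterations)
    {
        int bothZero = 0;
        for (int i = 0; i < iterations; i++)
        {
            _a = 0;
            _b = 0;
            int loadedA = -1, loadedB = -1;

            Parallel.Invoke(
                () =>
                {
                    _a = 1;                  // Store A := 1
                    Thread.MemoryBarrier();  // store must complete before the load
                    loadedB = _b;            // Load B
                },
                () =>
                {
                    _b = 1;                  // Store B := 1
                    Thread.MemoryBarrier();
                    loadedA = _a;            // Load A
                });

            if (loadedA == 0 && loadedB == 0)
            {
                bothZero++;
            }
        }
        return bothZero;
    }
}
```

Removing the two `Thread.MemoryBarrier()` calls is what would allow the (0, 0) outcome on hardware that reorders a load ahead of an earlier store; whether it actually shows up in a given run depends on timing.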

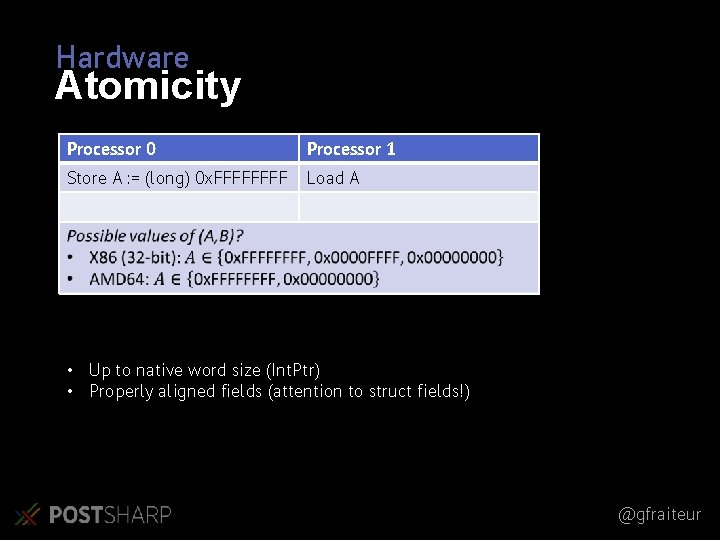

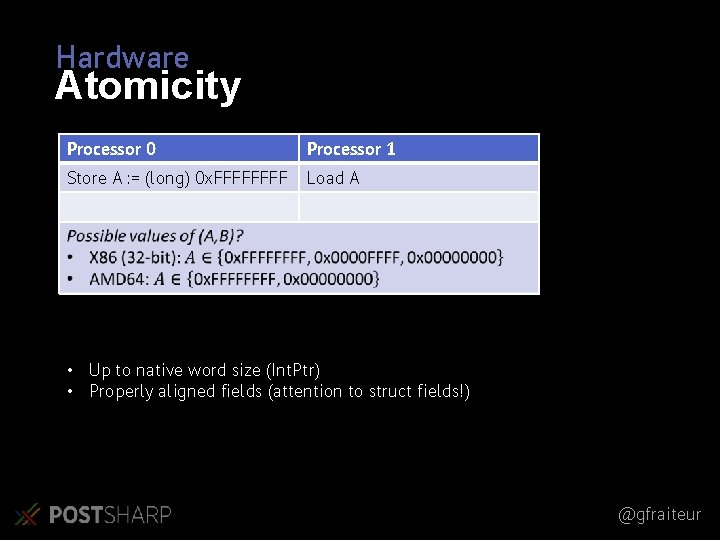

Hardware: Atomicity

Processor 0                 Processor 1
Store A := (long) 0xFFFF    Load A

• Up to native word size (IntPtr)
• Properly aligned fields (attention to struct fields!)

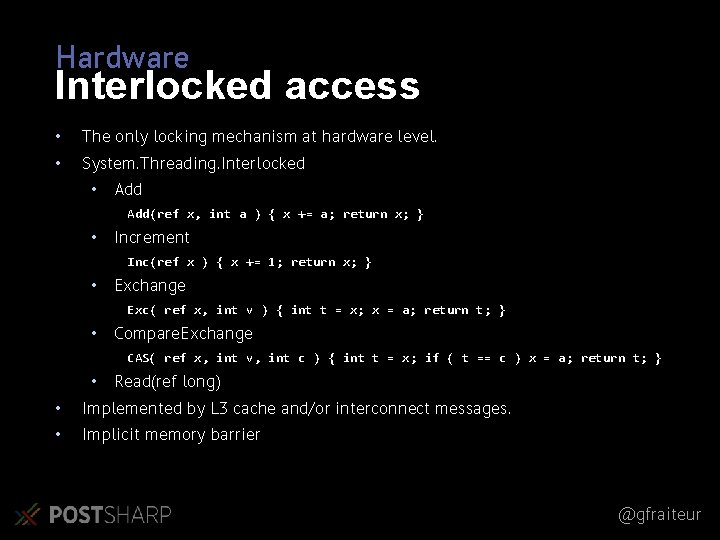

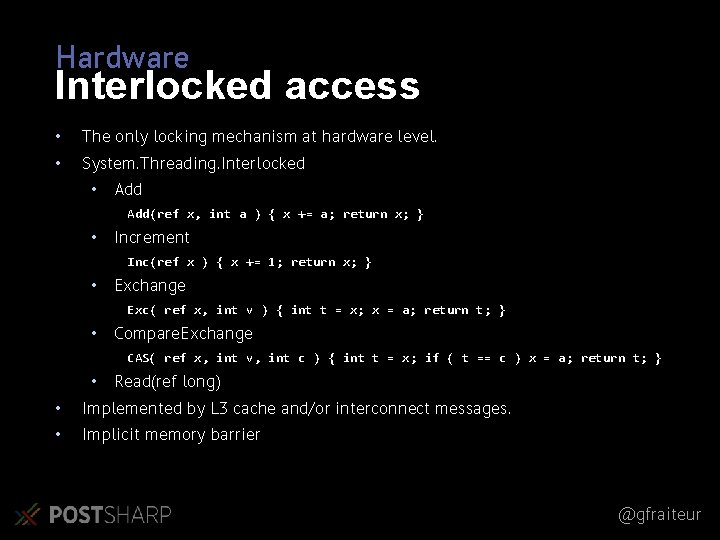

Hardware: Interlocked access
• The only locking mechanism at hardware level.
• System.Threading.Interlocked
  • Add: Add( ref x, int a ) { x += a; return x; }
  • Increment: Inc( ref x ) { x += 1; return x; }
  • Exchange: Exc( ref x, int v ) { int t = x; x = v; return t; }
  • CompareExchange: CAS( ref x, int v, int c ) { int t = x; if ( t == c ) x = v; return t; }
  • Read( ref long )
• Implemented by L3 cache and/or interconnect messages.
• Implicit memory barrier
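As a sketch of how these operations are typically used from C#, the example below increments a shared counter from several threads and builds an "atomic maximum" out of a CompareExchange retry loop. `InterlockedDemo`, `ParallelIncrement`, and `AtomicMax` are hypothetical names chosen for illustration.

```csharp
using System;
using System.Threading;

public static class InterlockedDemo
{
    // Increments one shared counter from several threads.
    // Interlocked.Increment makes each read-modify-write indivisible.
    public static int ParallelIncrement(int threadCount, int incrementsPerThread)
    {
        int counter = 0;
        var threads = new Thread[threadCount];
        for (int t = 0; t < threadCount; t++)
        {
            threads[t] = new Thread(() =>
            {
                for (int i = 0; i < incrementsPerThread; i++)
                {
                    Interlocked.Increment(ref counter);
                }
            });
            threads[t].Start();
        }
        foreach (var thread in threads)
        {
            thread.Join();
        }
        return counter;
    }

    // Raises 'target' to 'candidate' if candidate is larger, using a
    // CompareExchange retry loop. Returns the value stored in 'target'
    // as seen by this call.
    public static int AtomicMax(ref int target, int candidate)
    {
        int seen = Volatile.Read(ref target);
        while (candidate > seen)
        {
            // Writes 'candidate' only if 'target' still equals 'seen'.
            int previous = Interlocked.CompareExchange(ref target, candidate, seen);
            if (previous == seen)
            {
                return candidate;   // our write won
            }
            seen = previous;        // someone else changed it; retry
        }
        return seen;
    }
}
```

The retry loop is the usual shape for any update that has no dedicated interlocked instruction: read, compute, attempt the swap, and start over if another thread got there first.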

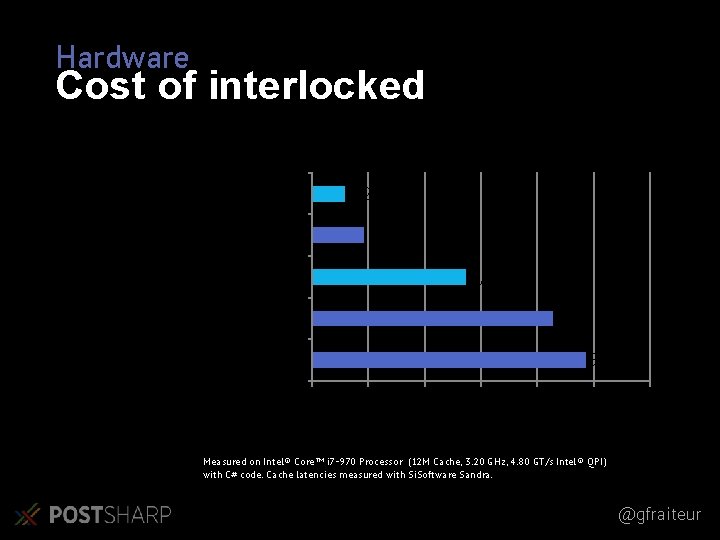

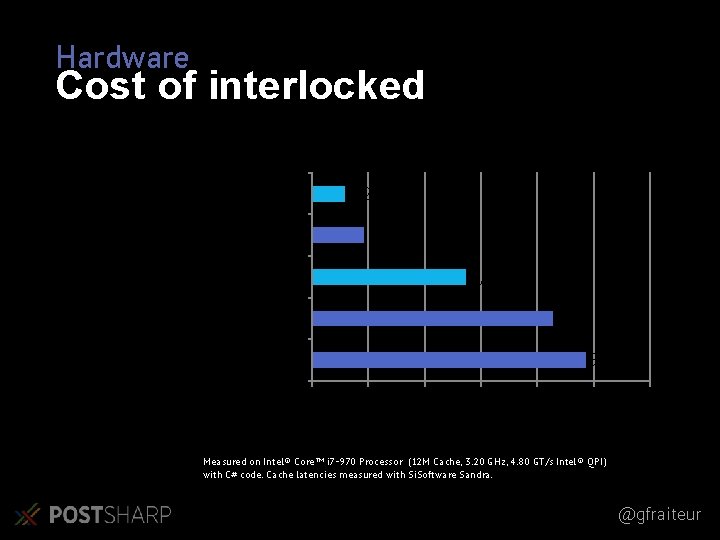

Hardware: Cost of interlocked
Latency (ns):
• L1 cache: 1.2
• Non-interlocked increment: 1.87
• Interlocked increment (non-contended): 5.5
• L3 cache: 8.58
• Interlocked increment (contended, same socket): 9.75
Measured on Intel® Core™ i7-970 Processor (12M Cache, 3.20 GHz, 4.80 GT/s Intel® QPI) with C# code. Cache latencies measured with SiSoftware Sandra.

Hardware: Cost of cache coherency

Multitasking
• No thread, no process at hardware level
• There is no such thing as “wait”
• One core never does more than 1 “thing” at the same time (except HyperThreading)
• Task-State Segment:
  • CPU State
  • Permissions (I/O, IRQ)
  • Memory Mapping
• A task runs until interrupted by hardware or OS

Lab: Non-Blocking Algorithms
• Non-blocking stack (4.5 MT/s)
• Ring buffer (13.2 MT/s)
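The lab code is not part of this transcript. As a rough idea of what a non-blocking stack can look like, here is a minimal sketch of the classic compare-and-swap stack (often called a Treiber stack); `LockFreeStack<T>` is a hypothetical name and this is not necessarily the implementation measured above.

```csharp
using System;
using System.Threading;

public sealed class LockFreeStack<T>
{
    private sealed class Node
    {
        public readonly T Value;
        public Node Next;
        public Node(T value) { Value = value; }
    }

    private Node _top;

    public void Push(T value)
    {
        var node = new Node(value);
        Node oldTop;
        do
        {
            oldTop = Volatile.Read(ref _top);
            node.Next = oldTop;
            // Publish the node only if nobody changed _top in the meantime.
        } while (Interlocked.CompareExchange(ref _top, node, oldTop) != oldTop);
    }

    public bool TryPop(out T value)
    {
        Node oldTop;
        do
        {
            oldTop = Volatile.Read(ref _top);
            if (oldTop == null)
            {
                value = default(T);
                return false;       // stack is empty
            }
            // Unlink the top node only if it is still the top.
        } while (Interlocked.CompareExchange(ref _top, oldTop.Next, oldTop) != oldTop);

        value = oldTop.Value;
        return true;
    }
}
```

No thread ever waits for another here: a failed CompareExchange simply means some other thread made progress, and the loser retries. Because nodes are garbage-collected and never reused, this sketch does not need extra protection against the ABA problem.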

Operating System: Windows Kernel

SideKick (1988). http://en.wikipedia.org/wiki/SideKi

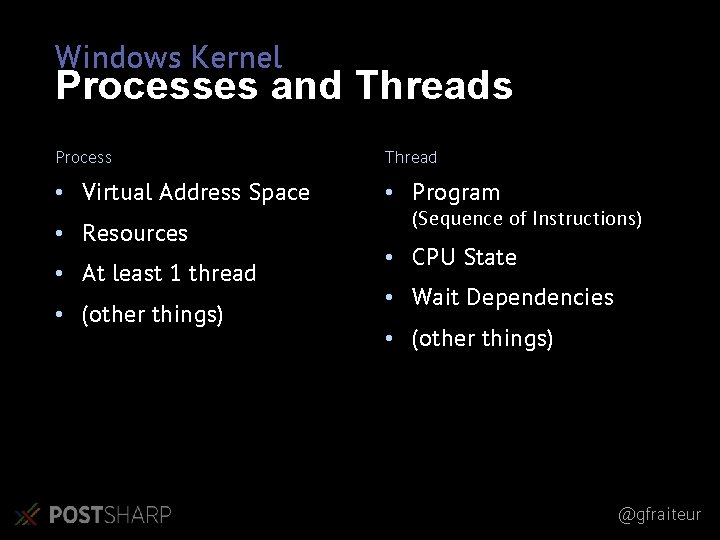

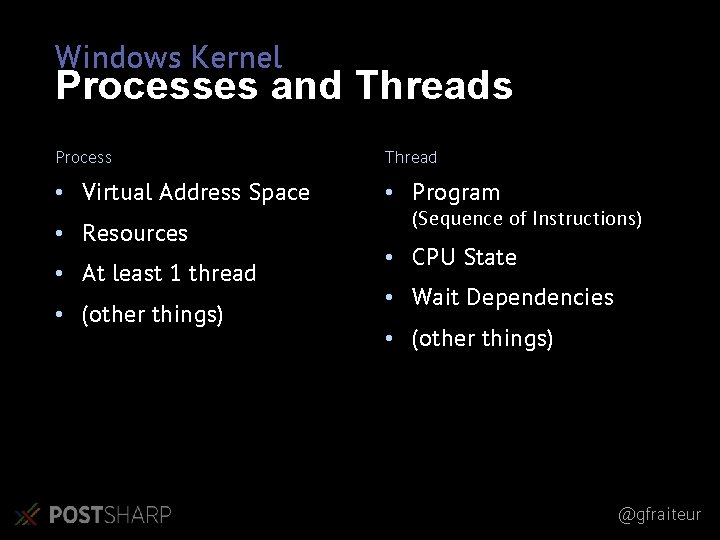

Windows Kernel: Processes and Threads
Process
• Virtual Address Space
• Resources
• At least 1 thread
• (other things)
Thread
• Program (Sequence of Instructions)
• CPU State
• Wait Dependencies
• (other things)

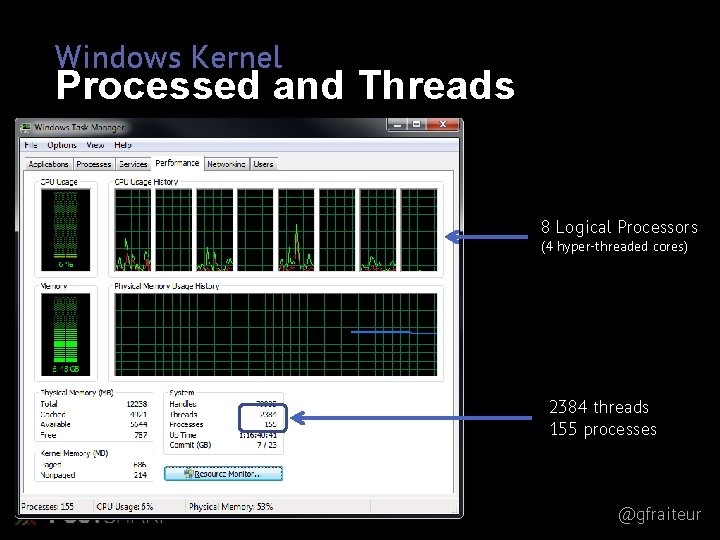

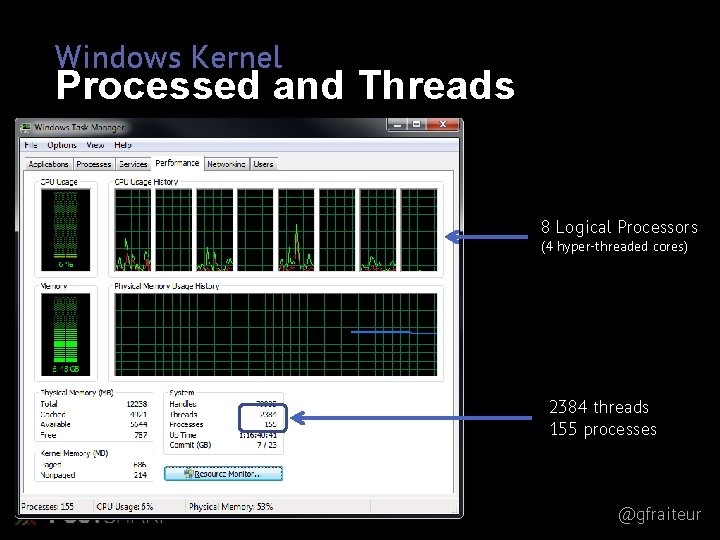

Windows Kernel: Processes and Threads
• 8 Logical Processors (4 hyper-threaded cores)
• 2384 threads
• 155 processes

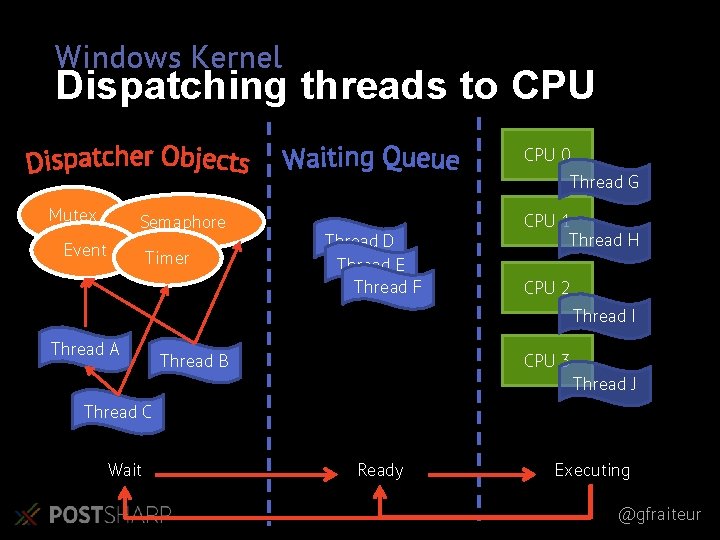

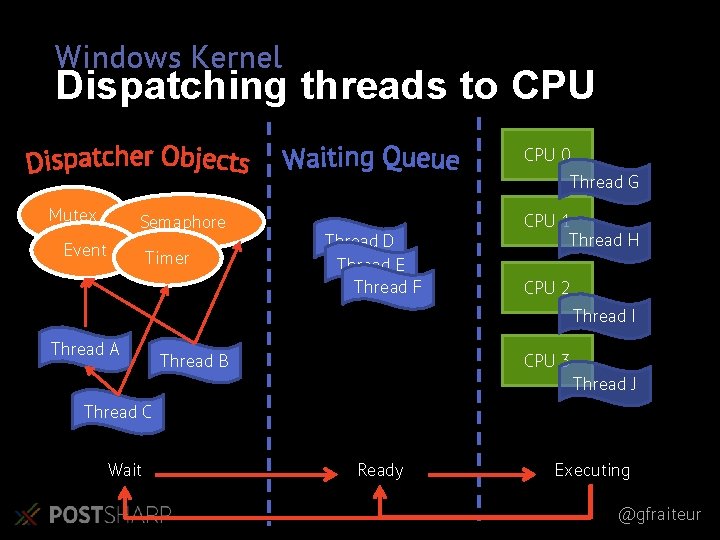

Windows Kernel: Dispatching threads to CPU
[Diagram: threads move between three states: Wait (on a Mutex, Semaphore, Event, or Timer), Ready, and Executing. CPU 0 to CPU 3 execute Threads G, H, I, and J; Threads A to F are waiting or ready.]

Windows Kernel: Dispatcher Objects (WaitHandle)
Kinds of dispatcher objects
• Event
• Mutex
• Semaphore
• Timer
• Thread
Characteristics
• Live in the kernel
• Sharable among processes
• Expensive!

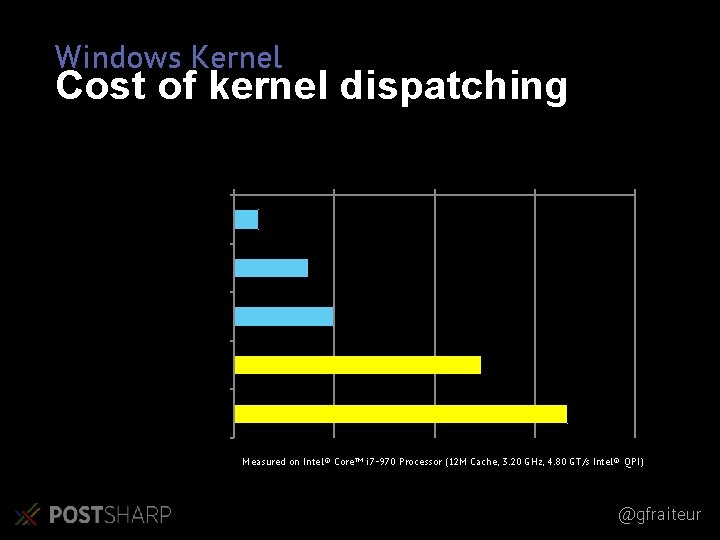

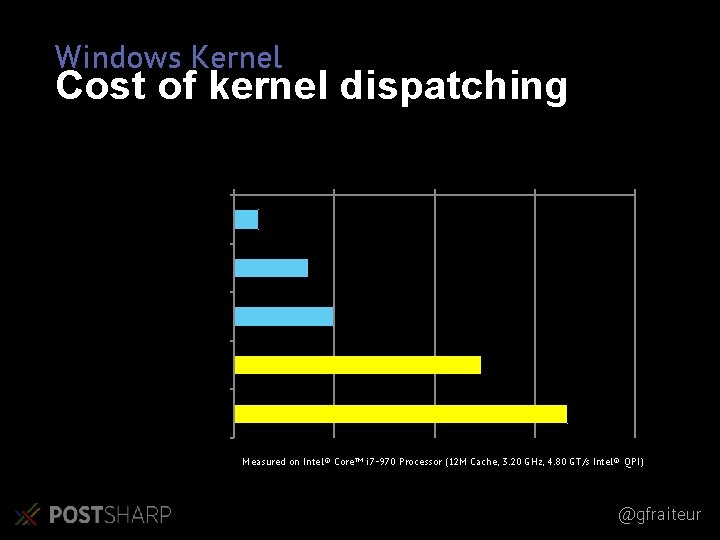

Windows Kernel: Cost of kernel dispatching
Duration (ns):
• Normal increment: 2
• Non-contended interlocked increment: 6
• Contended interlocked increment: 10
• Access kernel dispatcher object: 295
• Create+dispose kernel dispatcher object: 2,093
Measured on Intel® Core™ i7-970 Processor (12M Cache, 3.20 GHz, 4.80 GT/s Intel® QPI)

Windows Kernel: Wait Methods
• Thread.Sleep
• Thread.Yield
• Thread.Join
• WaitHandle.Wait
• (Thread.SpinWait)

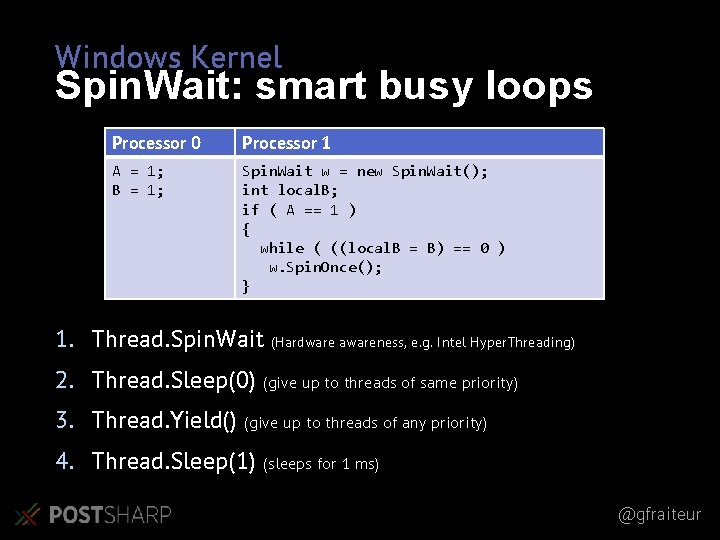

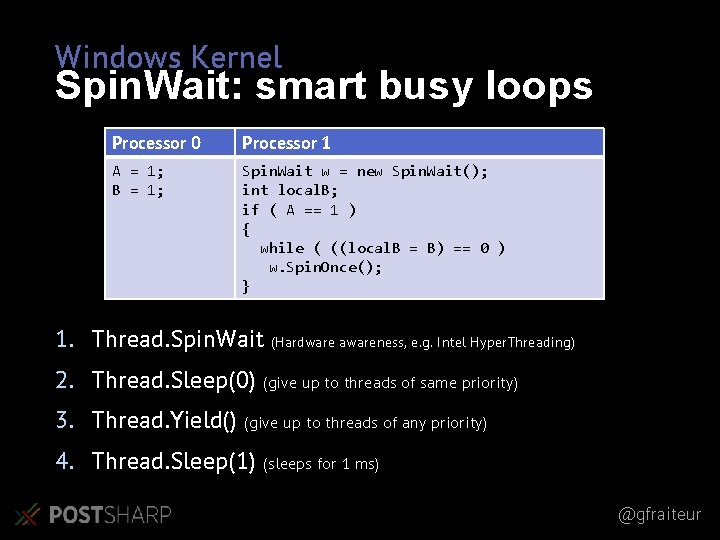

Windows Kernel: SpinWait: smart busy loops
Processor 0:
  A = 1;
  B = 1;
Processor 1:
  SpinWait w = new SpinWait();
  int localB;
  if ( A == 1 ) { while ( (localB = B) == 0 ) w.SpinOnce(); }
1. Thread.SpinWait (hardware awareness, e.g. Intel HyperThreading)
2. Thread.Sleep(0) (give up to threads of same priority)
3. Thread.Yield() (give up to threads of any priority)
4. Thread.Sleep(1) (sleeps for 1 ms)
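A compilable version of the waiting side might look like the sketch below. It is an illustration under assumptions: `SpinWaitDemo` and `WaitForFlag` are hypothetical names, the writer is delayed artificially, and `Volatile.Read`/`Volatile.Write` are added so that the loop is guaranteed to re-read the field.

```csharp
using System;
using System.Threading;

public static class SpinWaitDemo
{
    private static int _b;

    // Spins until another thread sets _b to 1, then returns the value read.
    public static int WaitForFlag(int writerDelayMs)
    {
        Volatile.Write(ref _b, 0);

        var writer = new Thread(() =>
        {
            Thread.Sleep(writerDelayMs);    // simulate work before publishing
            Volatile.Write(ref _b, 1);
        });
        writer.Start();

        var spinner = new SpinWait();
        int localB;
        while ((localB = Volatile.Read(ref _b)) == 0)
        {
            // Busy-spins at first, then starts yielding or sleeping so a
            // long wait does not monopolize the core.
            spinner.SpinOnce();
        }

        writer.Join();
        return localB;
    }
}
```

`SpinWait` is a struct, so it should be kept in a local variable as here and not copied around; a copy would restart the escalation from the beginning.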

Application Programming: .NET Framework

Application Programming: Design Objectives
• Ease of use
• High performance from applications!

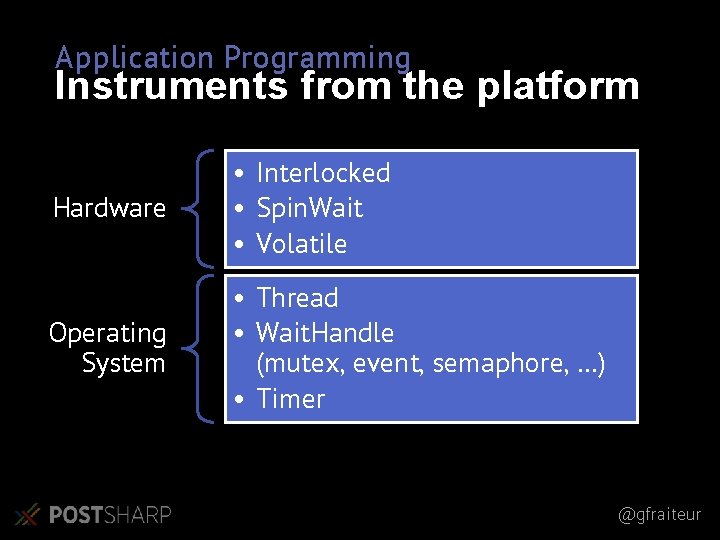

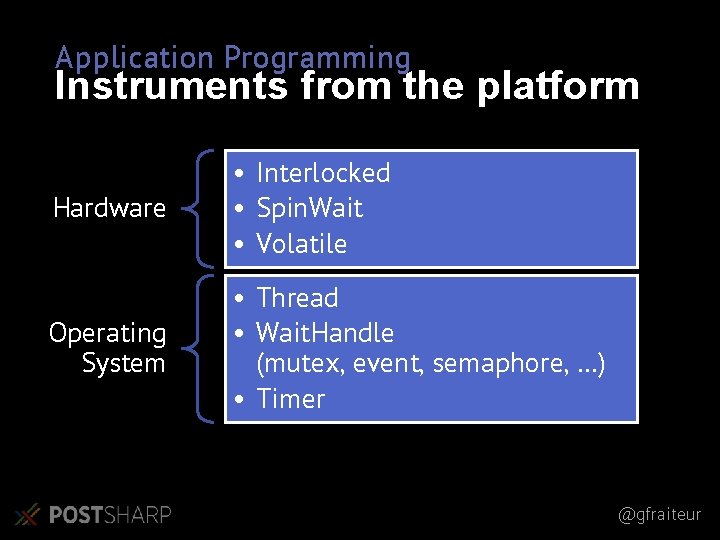

Application Programming: Instruments from the platform
Hardware
• Interlocked
• SpinWait
• Volatile
Operating System
• Thread
• WaitHandle (mutex, event, semaphore, …)
• Timer

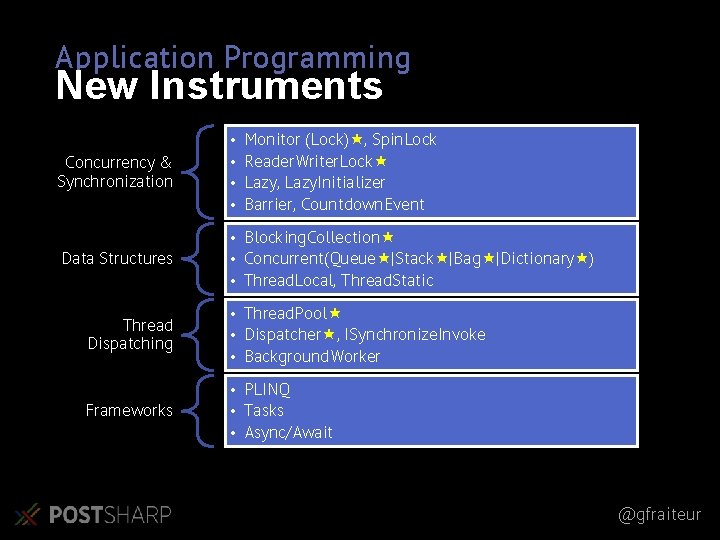

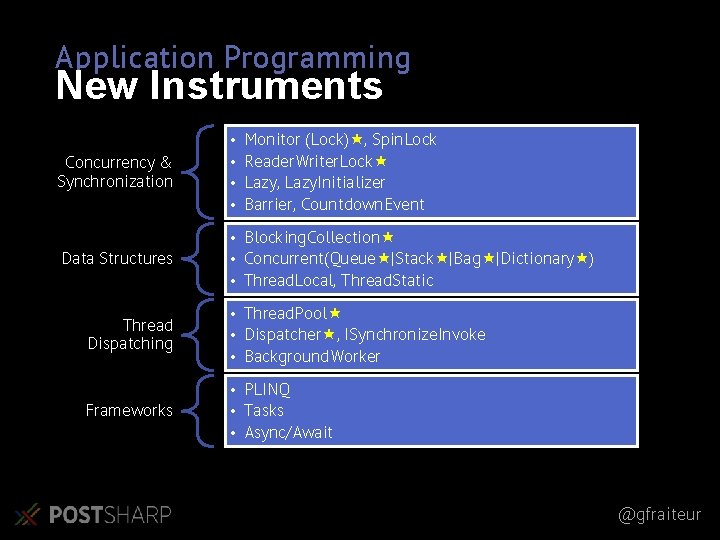

Application Programming: New Instruments
Concurrency & Synchronization
• Monitor (Lock), SpinLock
• ReaderWriterLock
• Lazy, LazyInitializer
• Barrier, CountdownEvent
Data Structures
• BlockingCollection
• Concurrent(Queue|Stack|Bag|Dictionary)
• ThreadLocal, ThreadStatic
Thread Dispatching
• ThreadPool
• Dispatcher, ISynchronizeInvoke
• BackgroundWorker
Frameworks
• PLINQ
• Tasks
• Async/Await

Application Programming: Concurrency & Synchronization

Concurrency & Synchronization: Monitor: the lock keyword
1. Start with interlocked operations (no contention)
2. Continue with “spin wait”.
3. Create kernel event and wait
Good performance if low contention
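From the application's point of view, all three stages hide behind the `lock` statement. The sketch below shows a typical use, protecting two fields that must change together; `Account`, `Deposit`, `Snapshot`, and `RunDeposits` are hypothetical names.

```csharp
using System;
using System.Threading;

public sealed class Account
{
    private readonly object _sync = new object();
    private decimal _balance;
    private int _operations;

    public void Deposit(decimal amount)
    {
        // 'lock' compiles to Monitor.Enter/Monitor.Exit in a try/finally.
        lock (_sync)
        {
            _balance += amount;     // both fields change together,
            _operations++;          // so readers never see one without the other
        }
    }

    public void Snapshot(out decimal balance, out int operations)
    {
        lock (_sync)
        {
            balance = _balance;
            operations = _operations;
        }
    }

    // Runs 'threadCount' threads that each deposit 1 'depositsPerThread' times.
    public static Account RunDeposits(int threadCount, int depositsPerThread)
    {
        var account = new Account();
        var threads = new Thread[threadCount];
        for (int t = 0; t < threadCount; t++)
        {
            threads[t] = new Thread(() =>
            {
                for (int i = 0; i < depositsPerThread; i++)
                {
                    account.Deposit(1m);
                }
            });
            threads[t].Start();
        }
        foreach (var thread in threads)
        {
            thread.Join();
        }
        return account;
    }
}
```

Keeping the critical section this short is what keeps the lock in its cheap, low-contention path most of the time.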

Concurrency & Synchronization: ReaderWriterLock
• Concurrent reads allowed
• Writes require exclusive access
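A minimal sketch of the read/write split follows. Note that it uses `ReaderWriterLockSlim`, the lighter-weight class in `System.Threading`, in place of the `ReaderWriterLock` named on the slide; `SettingsCache` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

public sealed class SettingsCache
{
    private readonly ReaderWriterLockSlim _lock = new ReaderWriterLockSlim();
    private readonly Dictionary<string, string> _values = new Dictionary<string, string>();

    // Many threads may hold the read lock at the same time.
    public bool TryGet(string key, out string value)
    {
        _lock.EnterReadLock();
        try
        {
            return _values.TryGetValue(key, out value);
        }
        finally
        {
            _lock.ExitReadLock();
        }
    }

    // The write lock is exclusive: it waits for readers to leave and
    // blocks new readers and writers until released.
    public void Set(string key, string value)
    {
        _lock.EnterWriteLock();
        try
        {
            _values[key] = value;
        }
        finally
        {
            _lock.ExitWriteLock();
        }
    }
}
```

This pattern tends to pay off only when reads clearly outnumber writes; with frequent writes a plain `lock` is often simpler and no slower.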

Concurrency & Synchronization: Lab: ReaderWriterLock

Application Programming: Data Structures

Data Structures: BlockingCollection
• Use case: pass messages between threads
• TryTake: wait for an item and take it
• Add: wait for free capacity (if limited) and add item to queue
• CompleteAdding: no more items
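The three calls listed above fit together as a producer/consumer pipeline. The following sketch is an illustration, not the lab code; `MessagePassingDemo` and `SumThroughQueue` are hypothetical names.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;

public static class MessagePassingDemo
{
    // Sends the numbers 1..itemCount through a bounded queue and
    // returns the sum computed by the consumer.
    public static long SumThroughQueue(int itemCount, int capacity)
    {
        using (var queue = new BlockingCollection<int>(capacity))
        {
            long sum = 0;

            Task consumer = Task.Run(() =>
            {
                int item;
                // Blocks until an item arrives; returns false once the
                // collection is marked complete and has been drained.
                while (queue.TryTake(out item, Timeout.Infinite))
                {
                    sum += item;
                }
            });

            for (int i = 1; i <= itemCount; i++)
            {
                queue.Add(i);           // blocks while the queue is full
            }
            queue.CompleteAdding();     // tells the consumer no more items will come

            consumer.Wait();
            return sum;
        }
    }
}
```

A small capacity applies back-pressure: a fast producer is slowed down to the pace of the consumer and memory use stays bounded.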

Data Structures: Lab: BlockingCollection

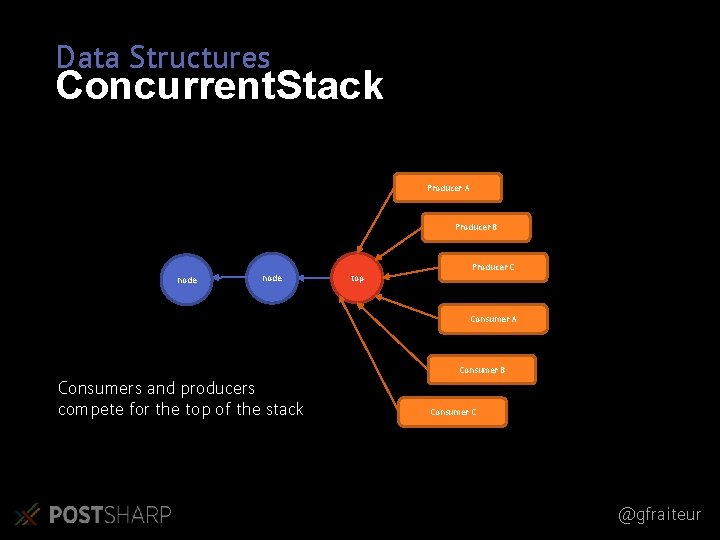

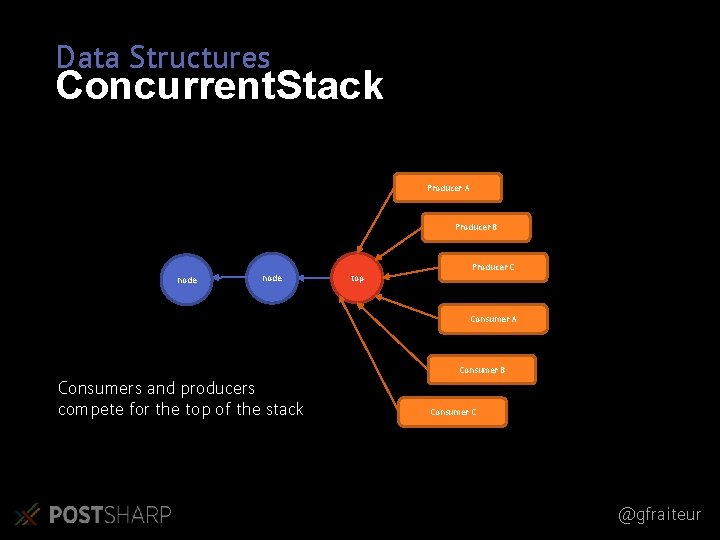

Data Structures: ConcurrentStack
[Diagram: Producers A, B, C and Consumers A, B, C all point at the top node]
Consumers and producers compete for the top of the stack.

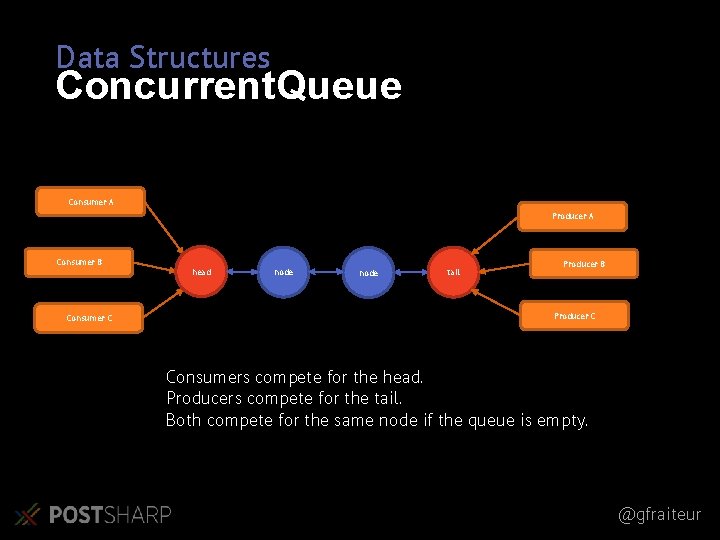

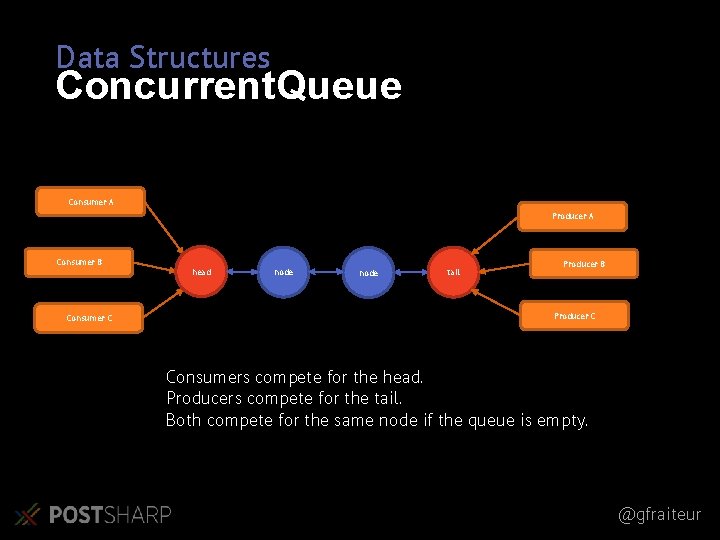

Data Structures: ConcurrentQueue
[Diagram: Consumers A, B, C point at the head node; Producers A, B, C point at the tail node]
Consumers compete for the head. Producers compete for the tail. Both compete for the same node if the queue is empty.

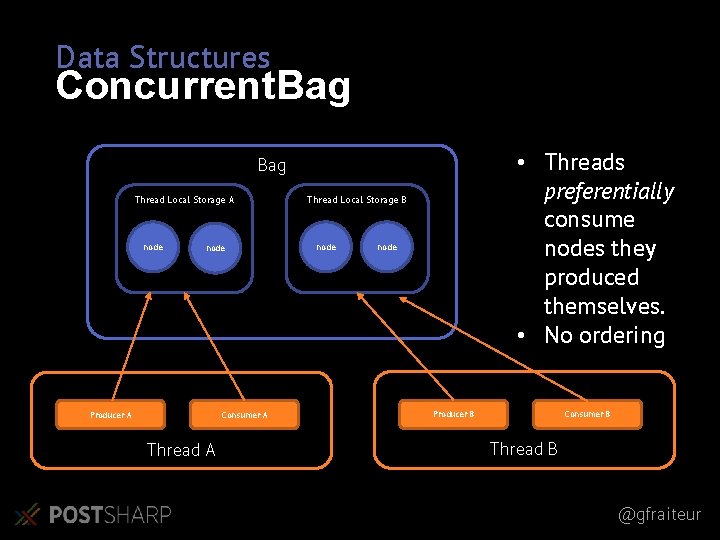

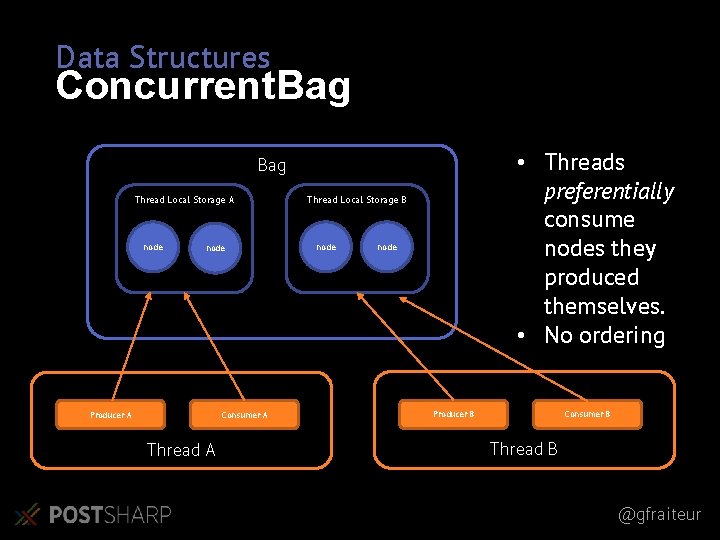

Data Structures: ConcurrentBag
• Threads preferentially consume nodes they produced themselves.
• No ordering
[Diagram: the bag holds one thread-local storage per thread; Producer A and Consumer A use Thread Local Storage A, Producer B and Consumer B use Thread Local Storage B]

Data Structures: ConcurrentDictionary
• TryAdd
• TryUpdate (CompareExchange)
• TryRemove
• AddOrUpdate
• GetOrAdd
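A short sketch of how a few of these methods behave, using a parallel word count as the example. `WordCountDemo` and `Count` are hypothetical names.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

public static class WordCountDemo
{
    // Counts occurrences of each word from several threads at once.
    public static ConcurrentDictionary<string, int> Count(string[] words)
    {
        var counts = new ConcurrentDictionary<string, int>();
        Parallel.ForEach(words, word =>
        {
            // Inserts 1 for a new key, otherwise applies the update function.
            // The function may run more than once under contention, so it
            // must be free of side effects.
            counts.AddOrUpdate(word, 1, (key, current) => current + 1);
        });
        return counts;
    }
}
```

`TryUpdate` follows the compare-exchange shape noted on the slide: `counts.TryUpdate("a", 10, 3)` replaces the value with 10 only if the current value is 3, and reports through its return value whether it did.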

Application Programming: Thread Dispatching

Thread Dispatching: ThreadPool
• Problems solved:
  • Execute a task asynchronously (QueueUserWorkItem)
  • Waiting for WaitHandle (RegisterWaitForSingleObject)
  • Creating a thread is expensive
• Good for I/O intensive operations
• Bad for CPU intensive operations
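As an illustration of `QueueUserWorkItem`, the sketch below queues one small work item per input and uses a `CountdownEvent` to wait for all of them. `ThreadPoolDemo` and `SquareAll` are hypothetical names, and the squaring stands in for whatever short task the pool would really run.

```csharp
using System;
using System.Threading;

public static class ThreadPoolDemo
{
    // Squares every input on pool threads and waits for all results.
    public static int[] SquareAll(int[] inputs)
    {
        var results = new int[inputs.Length];

        using (var done = new CountdownEvent(inputs.Length))
        {
            for (int i = 0; i < inputs.Length; i++)
            {
                int index = i;  // copy: each work item needs its own index
                ThreadPool.QueueUserWorkItem(_ =>
                {
                    results[index] = inputs[index] * inputs[index];
                    done.Signal();  // one work item finished
                });
            }

            done.Wait();            // returns once every item has signalled
        }

        return results;
    }
}
```

No thread is created per item: the pool reuses a small set of threads, which is the cost the slide's third bullet refers to.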

Thread Dispatching: Dispatcher
• Execute a task in the UI thread
  • Synchronously: Dispatcher.Invoke
  • Asynchronously: Dispatcher.BeginInvoke
• Under the hood, uses the HWND message loop

Thread Dispatching

Q&A
Gael Fraiteur, gael@postsharp.net, @gfraiteur

Summary

http://www.postsharp.net/
gael@postsharp.net