Xen and the Art of Virtualization Steve Hand

- Slides: 28

Xen and the Art of Virtualization

Steve Hand

Keir Fraser, Ian Pratt, Christian Limpach, Dan Magenheimer (HP), Mike Wray (HP), R Neugebauer (Intel), M Williamson (Intel)

GGF Brussels, 20 September 2004

Computer Laboratory

Talk Outline

- Background: XenoServers
- The Xen Virtual Machine Monitor
  - Architectural Evolution
  - System Performance
  - Subsystem Details…
- Status & Future Work

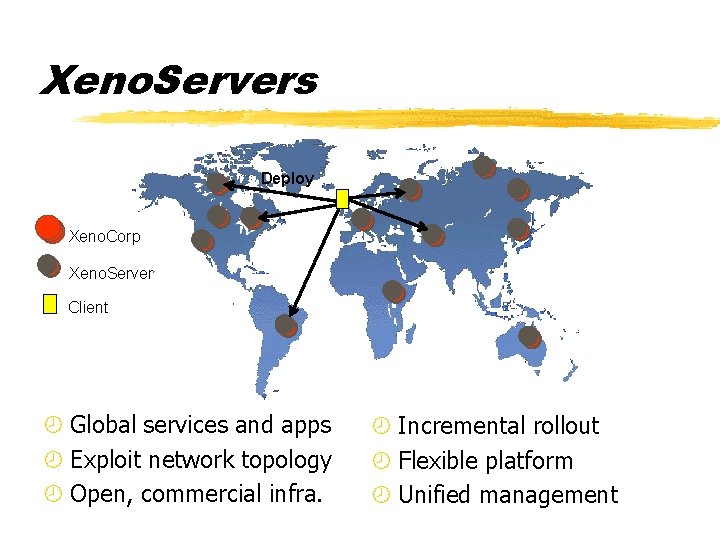

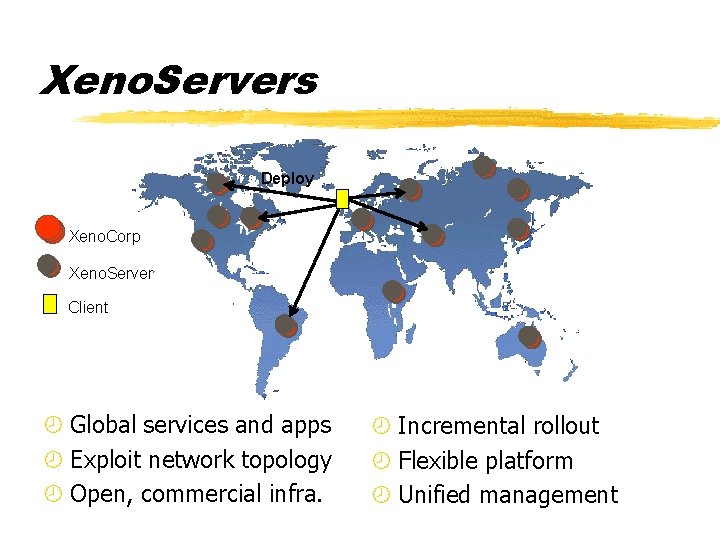

XenoServers

[Diagram labels: Deploy, XenoCorp, XenoServer, Client]

- Global services and apps
- Exploit network topology
- Open, commercial infra.
- Incremental rollout
- Flexible platform
- Unified management

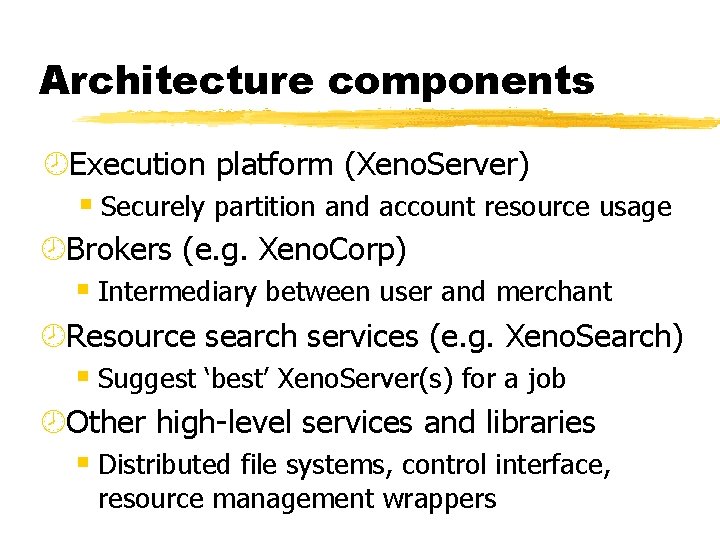

Architecture components

- Execution platform (XenoServer)
  - Securely partition and account resource usage
- Brokers (e.g. XenoCorp)
  - Intermediary between user and merchant
- Resource search services (e.g. XenoSearch)
  - Suggest ‘best’ XenoServer(s) for a job
- Other high-level services and libraries
  - Distributed file systems, control interface, resource management wrappers

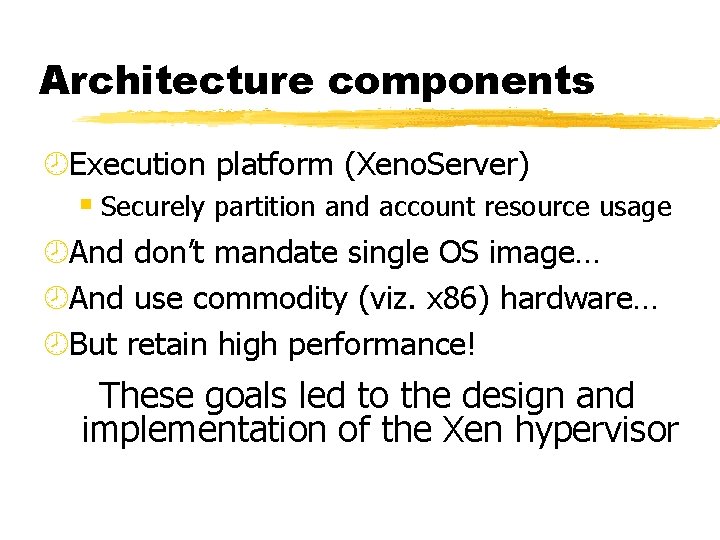

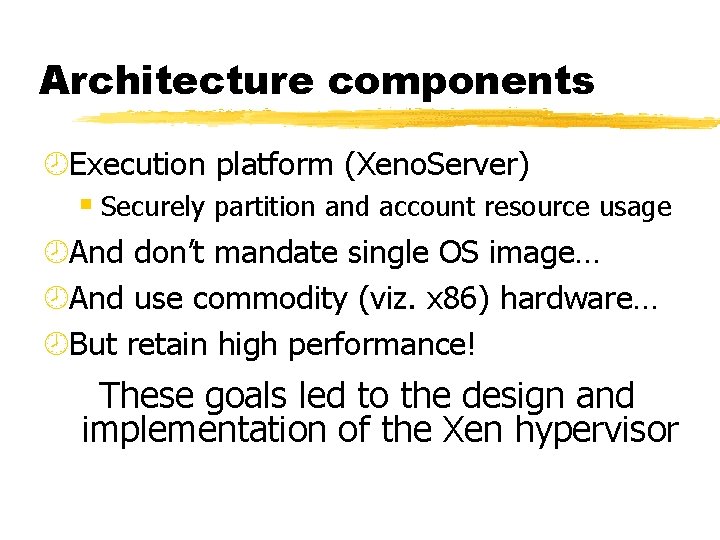

Architecture components

- Execution platform (XenoServer)
  - Securely partition and account resource usage
- And don’t mandate single OS image…
- And use commodity (viz. x86) hardware…
- But retain high performance!

These goals led to the design and implementation of the Xen hypervisor.

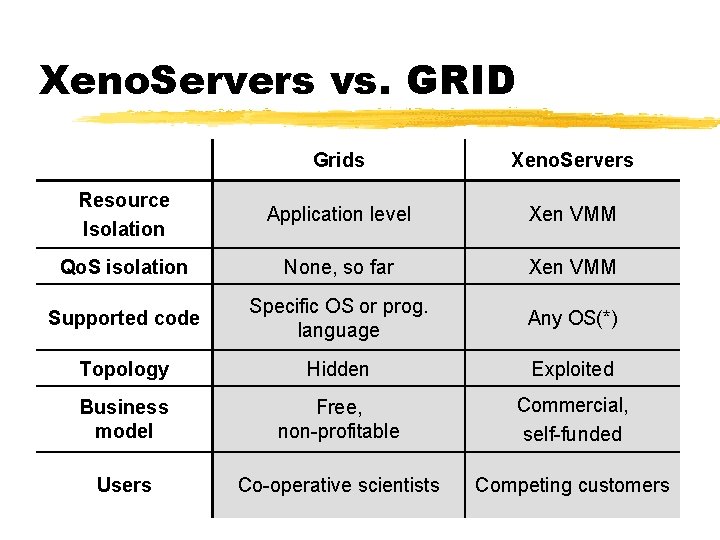

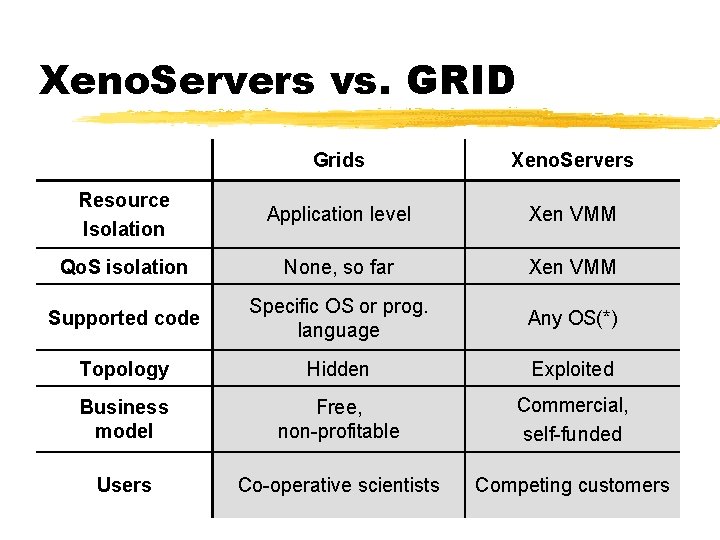

XenoServers vs. GRID

| | Grids | XenoServers |
|---|---|---|
| Resource isolation | Application level | Xen VMM |
| QoS isolation | None, so far | Xen VMM |
| Supported code | Specific OS or prog. language | Any OS(*) |
| Topology | Hidden | Exploited |
| Business model | Free, non-profitable | Commercial, self-funded |
| Users | Co-operative scientists | Competing customers |

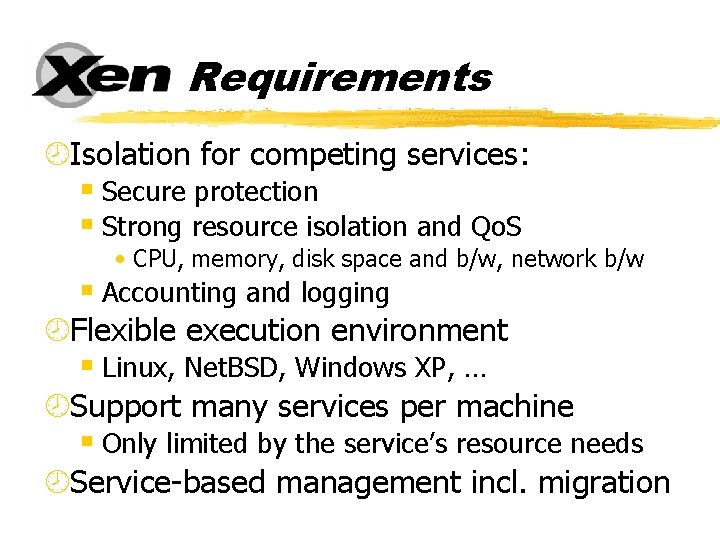

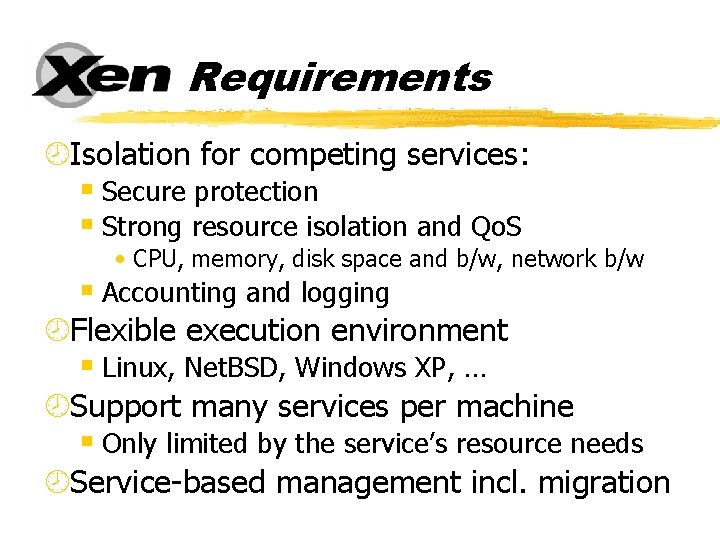

Requirements

- Isolation for competing services:
  - Secure protection
  - Strong resource isolation and QoS
    - CPU, memory, disk space and b/w, network b/w
  - Accounting and logging
- Flexible execution environment
  - Linux, NetBSD, Windows XP, …
- Support many services per machine
  - Only limited by the service’s resource needs
- Service-based management incl. migration

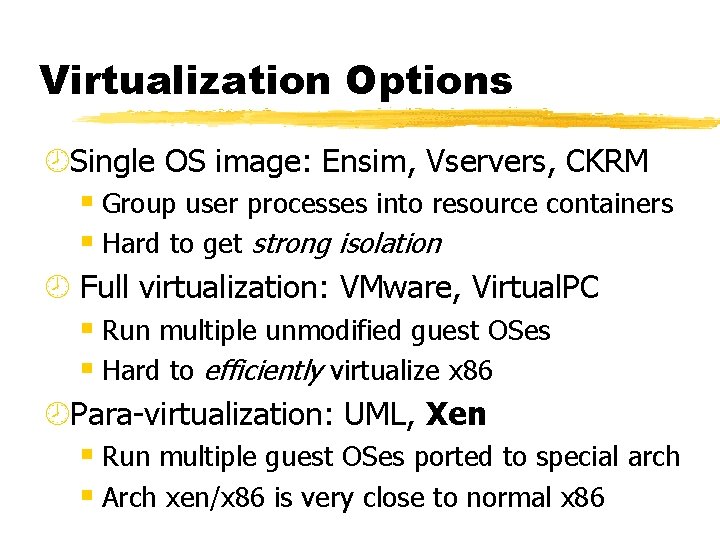

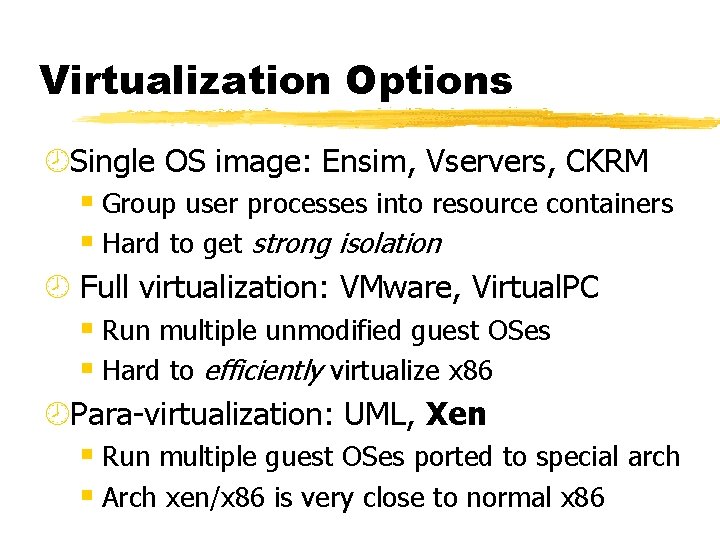

Virtualization Options

- Single OS image: Ensim, Vservers, CKRM
  - Group user processes into resource containers
  - Hard to get strong isolation
- Full virtualization: VMware, VirtualPC
  - Run multiple unmodified guest OSes
  - Hard to efficiently virtualize x86
- Para-virtualization: UML, Xen
  - Run multiple guest OSes ported to special arch
  - Arch xen/x86 is very close to normal x86

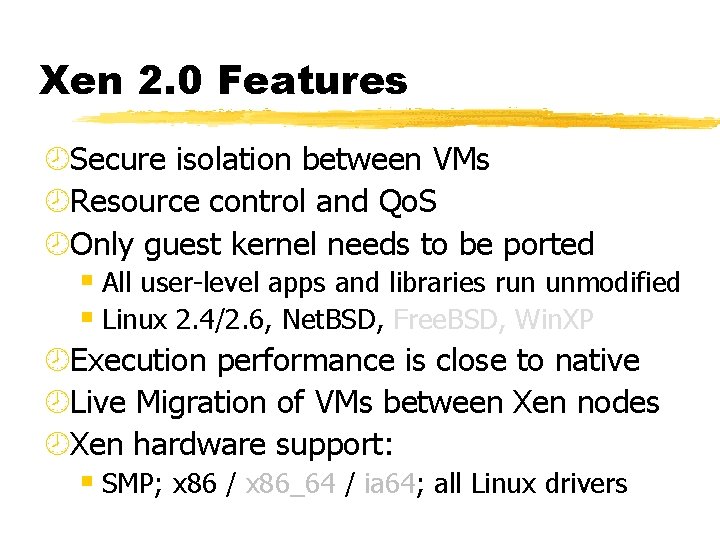

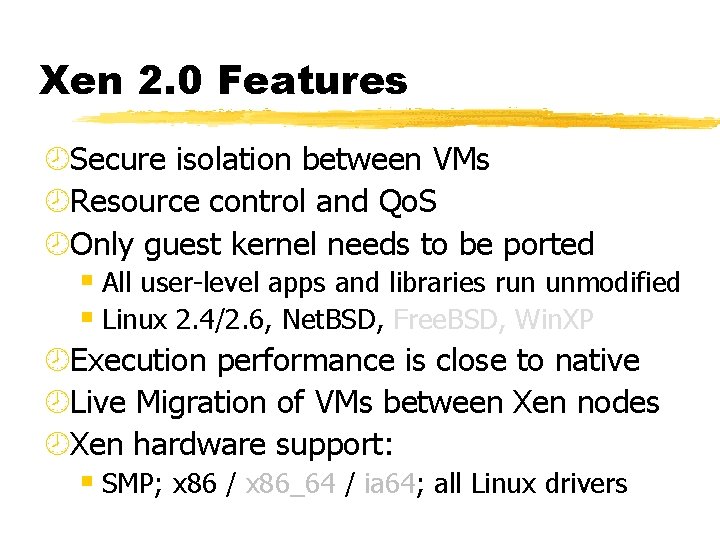

Xen 2.0 Features

- Secure isolation between VMs
- Resource control and QoS
- Only guest kernel needs to be ported
  - All user-level apps and libraries run unmodified
  - Linux 2.4/2.6, NetBSD, FreeBSD, WinXP
- Execution performance is close to native
- Live Migration of VMs between Xen nodes
- Xen hardware support:
  - SMP; x86 / x86_64 / ia64; all Linux drivers

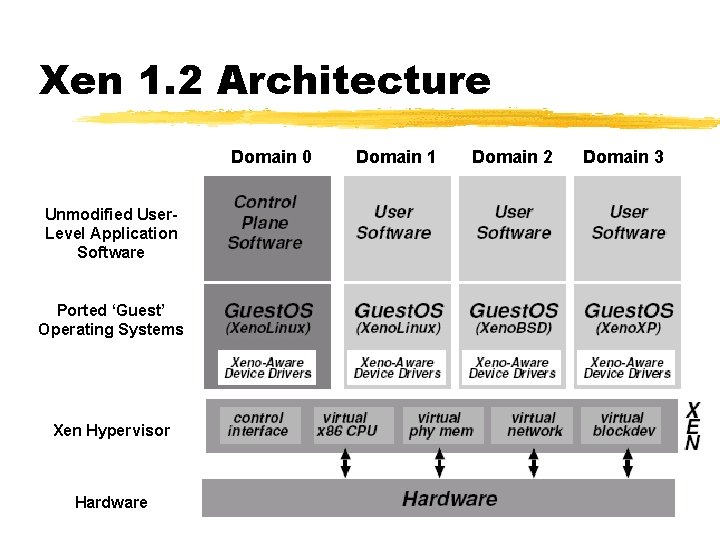

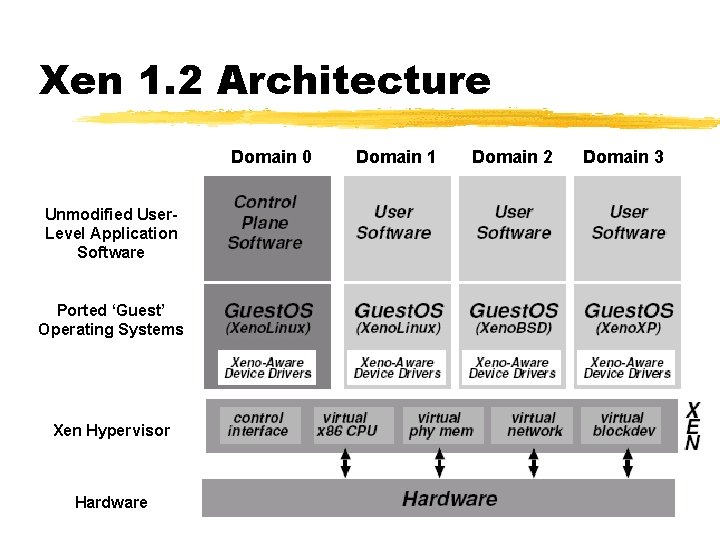

Xen 1.2 Architecture

[Diagram labels: Domain 0, Domain 1, Domain 2, Domain 3; Unmodified User-Level Application Software; Ported ‘Guest’ Operating Systems; Xen Hypervisor; Hardware]

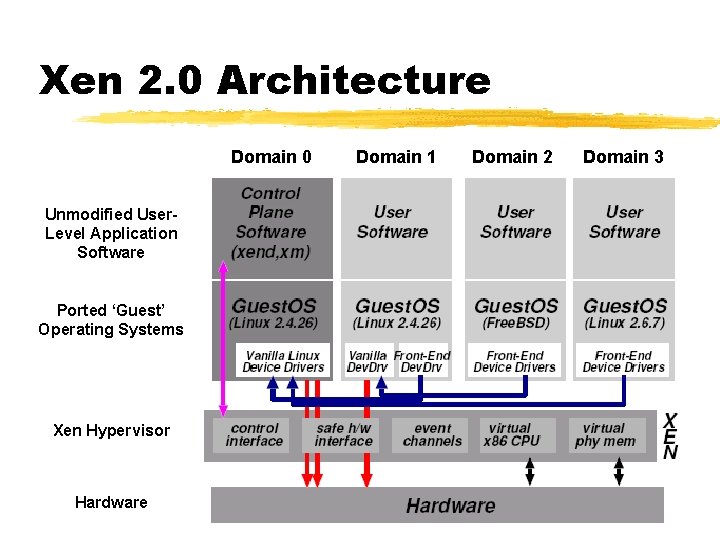

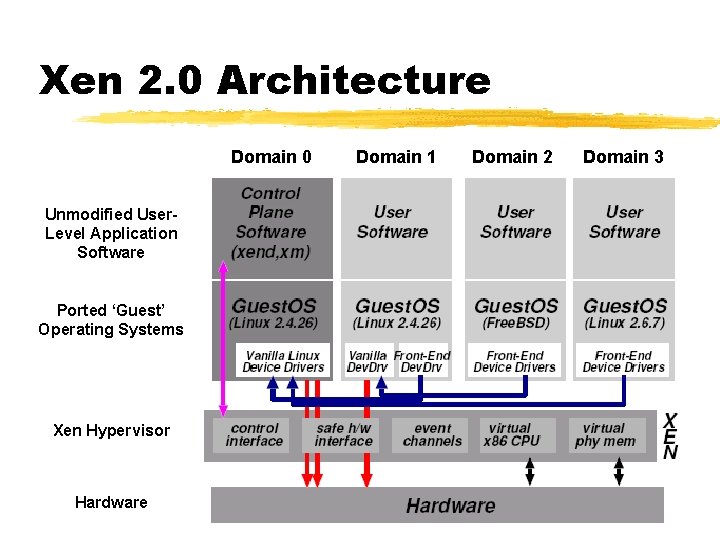

Xen 2.0 Architecture

[Diagram labels: Domain 0, Domain 1, Domain 2, Domain 3; Unmodified User-Level Application Software; Ported ‘Guest’ Operating Systems; Xen Hypervisor; Hardware]

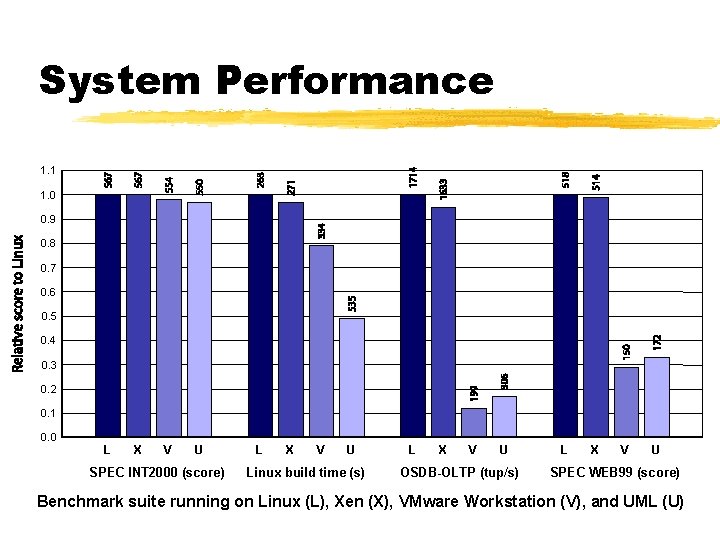

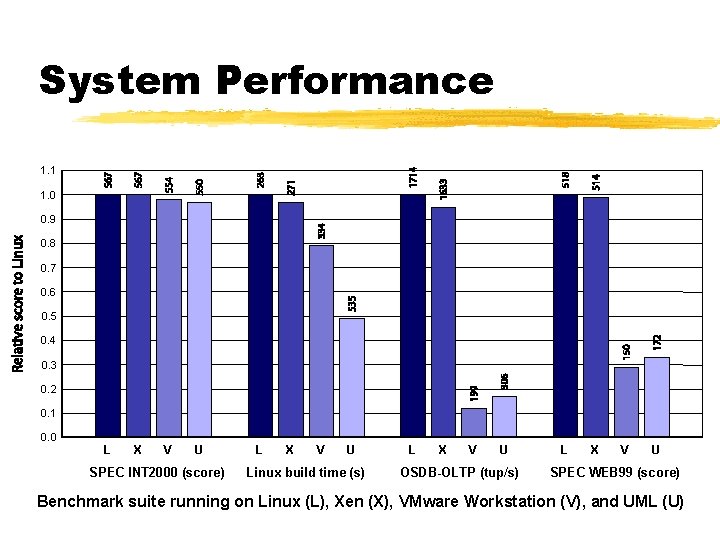

System Performance

[Bar chart, y-axis 0.0 to 1.1; panels: SPEC INT2000 (score), Linux build time (s), OSDB-OLTP (tup/s), SPEC WEB99 (score)]

Benchmark suite running on Linux (L), Xen (X), VMware Workstation (V), and UML (U)

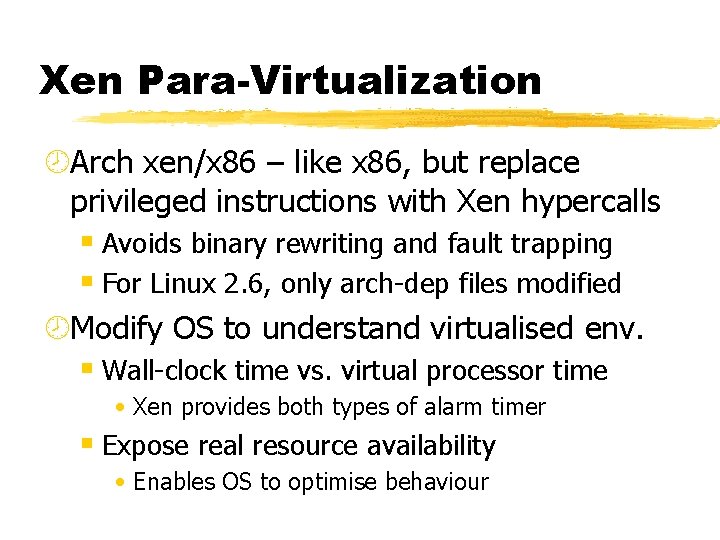

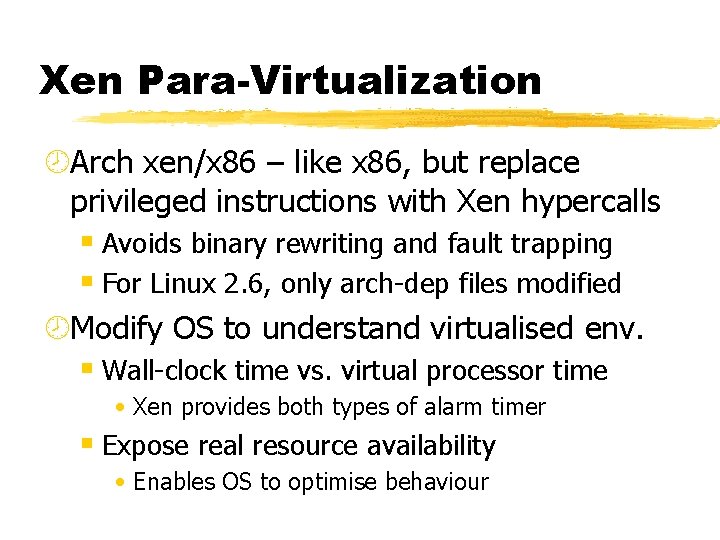

Xen Para-Virtualization

- Arch xen/x86: like x86, but replace privileged instructions with Xen hypercalls
  - Avoids binary rewriting and fault trapping
  - For Linux 2.6, only arch-dep files modified
- Modify OS to understand virtualised env.
  - Wall-clock time vs. virtual processor time
    - Xen provides both types of alarm timer
  - Expose real resource availability
    - Enables OS to optimise behaviour

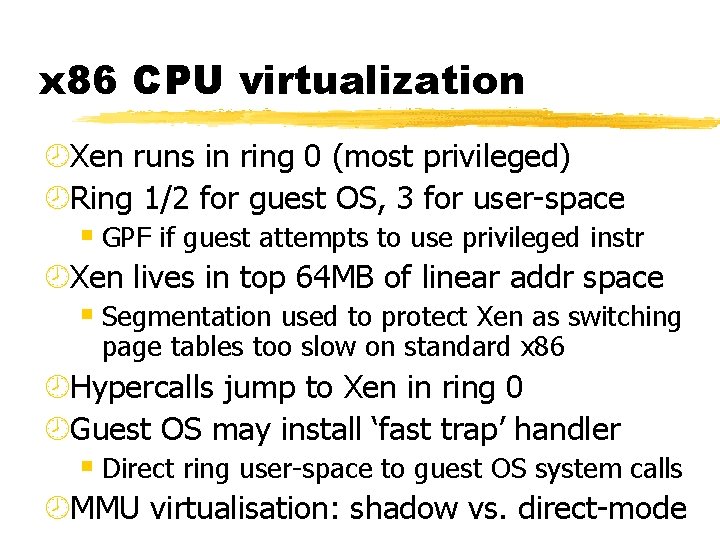

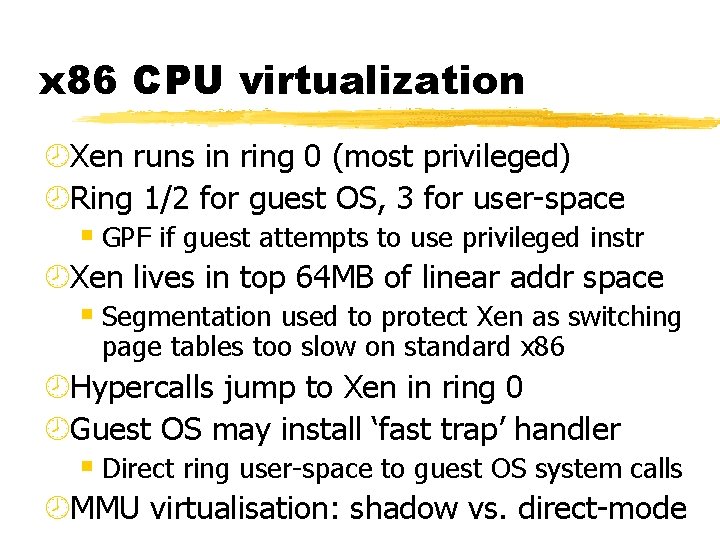

x86 CPU virtualization

- Xen runs in ring 0 (most privileged)
- Ring 1/2 for guest OS, 3 for user-space
  - GPF if guest attempts to use privileged instr
- Xen lives in top 64 MB of linear addr space
  - Segmentation used to protect Xen as switching page tables too slow on standard x86
- Hypercalls jump to Xen in ring 0
- Guest OS may install ‘fast trap’ handler
  - Direct ring user-space to guest OS system calls
- MMU virtualisation: shadow vs. direct-mode

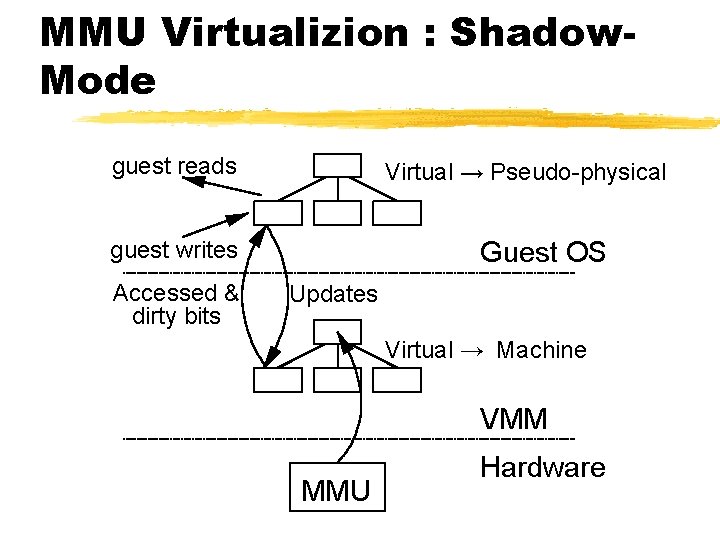

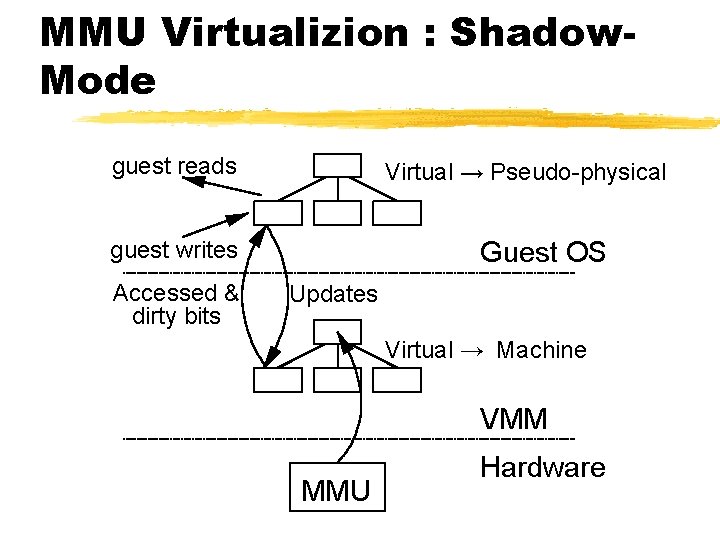

MMU Virtualization: Shadow-Mode

[Diagram labels: guest reads, guest writes, Virtual → Pseudo-physical, Guest OS, Accessed & dirty bits, Updates, Virtual → Machine, VMM, MMU, Hardware]
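As a rough illustration of the shadow-mode idea, the sketch below composes a guest's virtual → pseudo-physical table with a pseudo-physical → machine map to produce the virtual → machine table that the hardware MMU would use. The single-level dictionaries and the name `build_shadow` are hypothetical simplifications, not Xen's data structures, and permission and accessed/dirty bits are left out.

```python
def build_shadow(guest_pt, p2m):
    """Compose virtual->pseudo-physical with pseudo-physical->machine.

    guest_pt: dict, virtual page number -> pseudo-physical frame number
    p2m:      dict, pseudo-physical frame number -> machine frame number
    Both are illustrative single-level tables (an assumption of this sketch).
    """
    shadow = {}
    for vpn, pfn in guest_pt.items():
        if pfn in p2m:  # leave out entries with no backing machine frame
            shadow[vpn] = p2m[pfn]
    return shadow


guest_pt = {0x10: 0, 0x11: 1, 0x12: 7}  # the table the guest reads and writes
p2m = {0: 0x8A0, 1: 0x13F}              # machine frames backing this guest
shadow = build_shadow(guest_pt, p2m)    # the table the hardware MMU would walk
```

In this toy model the entry for virtual page `0x12` is dropped because pseudo-physical frame 7 has no machine frame behind it.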

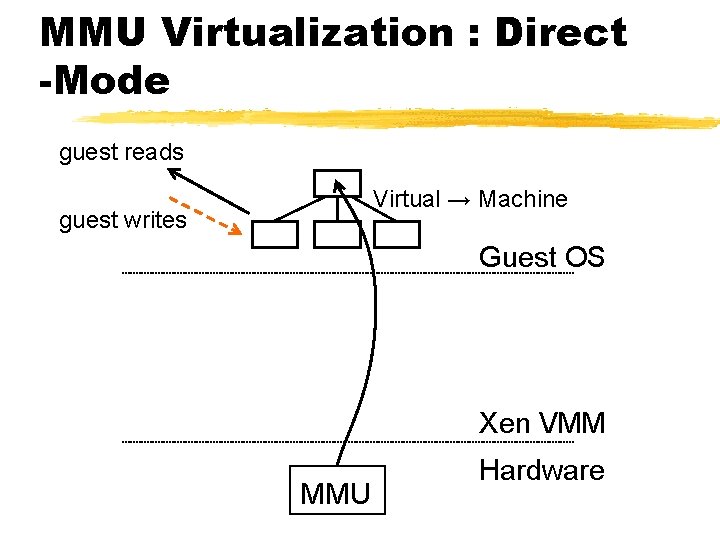

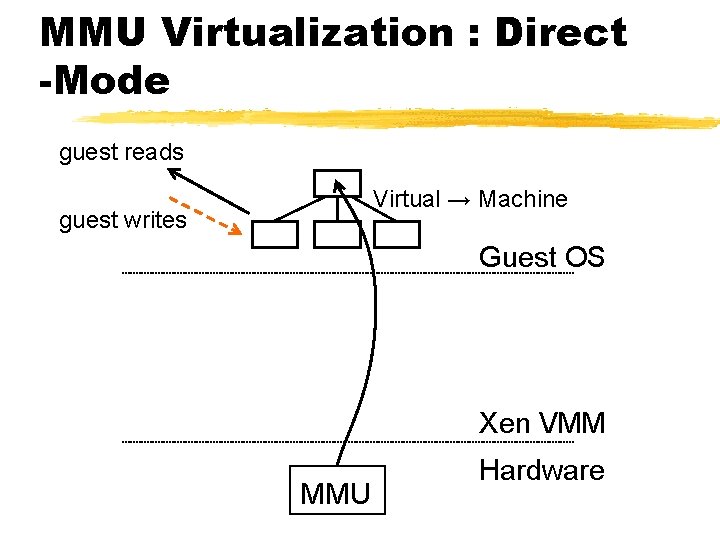

MMU Virtualization: Direct-Mode

[Diagram labels: guest reads, guest writes, Virtual → Machine, Guest OS, Xen VMM, MMU, Hardware]

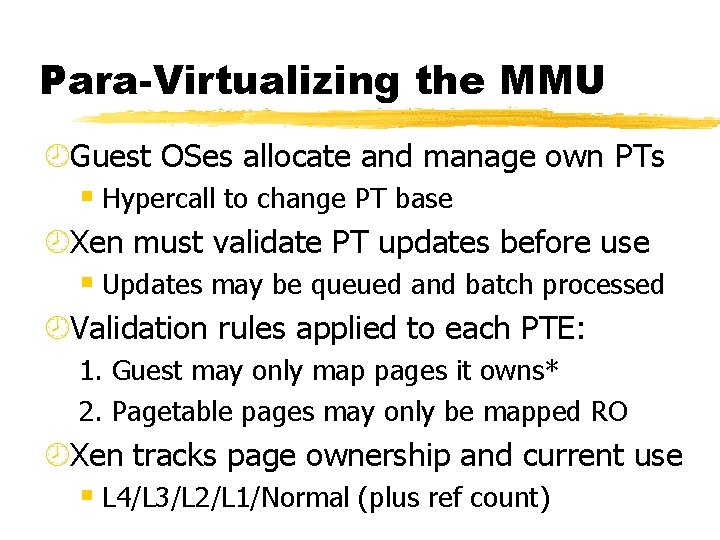

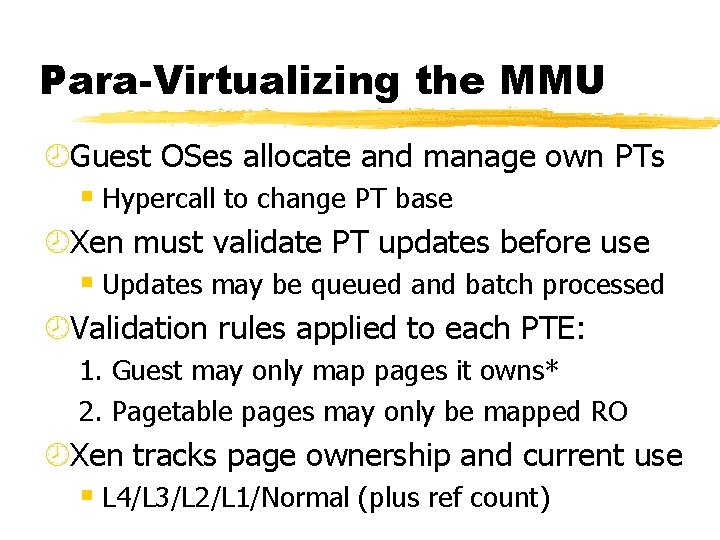

Para-Virtualizing the MMU

- Guest OSes allocate and manage own PTs
  - Hypercall to change PT base
- Xen must validate PT updates before use
  - Updates may be queued and batch processed
- Validation rules applied to each PTE:
  1. Guest may only map pages it owns*
  2. Pagetable pages may only be mapped RO
- Xen tracks page ownership and current use
  - L4/L3/L2/L1/Normal (plus ref count)
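The two validation rules and the batching of queued updates can be sketched as follows. This is a minimal model under stated assumptions: `Frame`, `PteUpdate`, `validate` and `apply_batch` are hypothetical names, the page table is a flat dictionary, the L4 to L1 levels are collapsed into one `"pagetable"` use, and the exception marked by the asterisk on rule 1 is ignored.

```python
from dataclasses import dataclass

PAGETABLE, NORMAL = "pagetable", "normal"


@dataclass
class Frame:
    owner: int          # domain that owns this machine frame
    use: str = NORMAL   # current use (page-table levels collapsed to one value)
    refcount: int = 0   # number of PTEs currently mapping the frame


@dataclass
class PteUpdate:
    slot: int           # page-table slot the guest wants to write
    mfn: int            # machine frame it wants to map there
    writable: bool


def validate(domain, frames, upd):
    frame = frames.get(upd.mfn)
    if frame is None or frame.owner != domain:
        return False    # rule 1: guest may only map pages it owns
    if frame.use == PAGETABLE and upd.writable:
        return False    # rule 2: page-table pages may only be mapped read-only
    return True


def apply_batch(domain, frames, page_table, queue):
    """Validate and apply a queue of updates; return the rejected ones."""
    rejected = []
    for upd in queue:
        if not validate(domain, frames, upd):
            rejected.append(upd)
            continue
        old = page_table.get(upd.slot)
        if old is not None:
            frames[old[0]].refcount -= 1   # slot no longer maps the old frame
        page_table[upd.slot] = (upd.mfn, upd.writable)
        frames[upd.mfn].refcount += 1
    return rejected


frames = {100: Frame(owner=1), 101: Frame(owner=1, use=PAGETABLE), 200: Frame(owner=2)}
queue = [
    PteUpdate(0, 100, True),    # own normal page, writable: allowed
    PteUpdate(1, 101, True),    # own page-table page, writable: rejected
    PteUpdate(2, 101, False),   # own page-table page, read-only: allowed
    PteUpdate(3, 200, False),   # another domain's page: rejected
]
page_table = {}
rejected = apply_batch(1, frames, page_table, queue)
```

Processing the whole queue in one call mirrors the point that updates may be queued and batch processed, so the validation cost is paid once per batch rather than once per trap.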

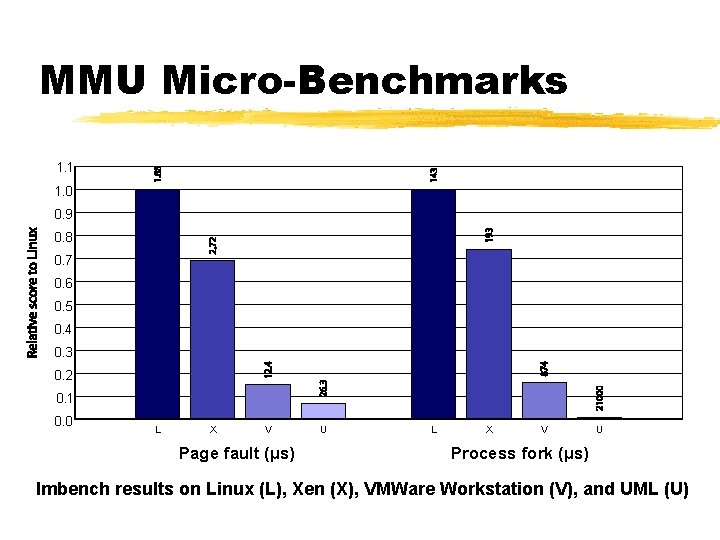

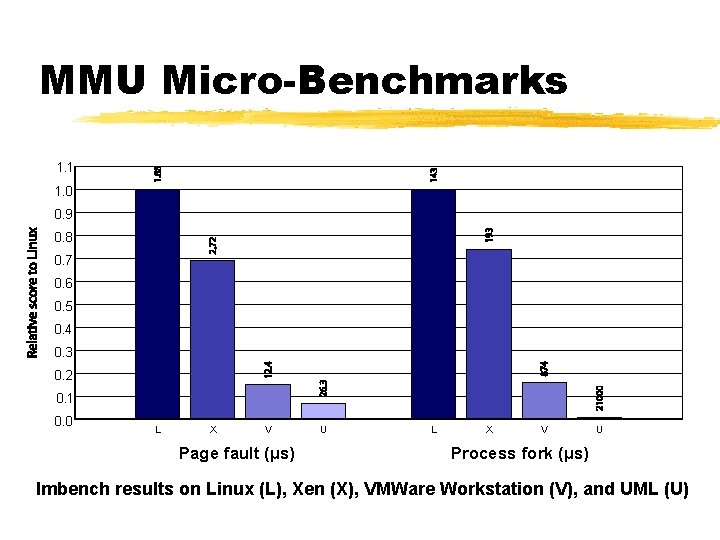

MMU Micro-Benchmarks

[Bar chart, y-axis 0.0 to 1.1; panels: Page fault (µs), Process fork (µs)]

lmbench results on Linux (L), Xen (X), VMware Workstation (V), and UML (U)

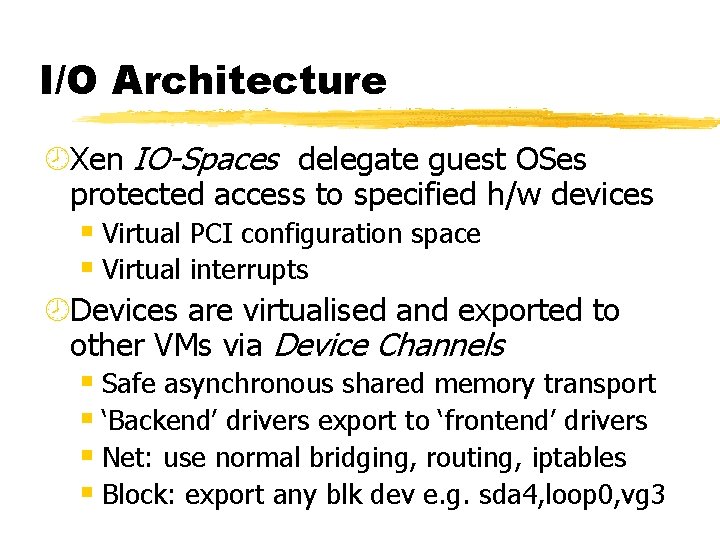

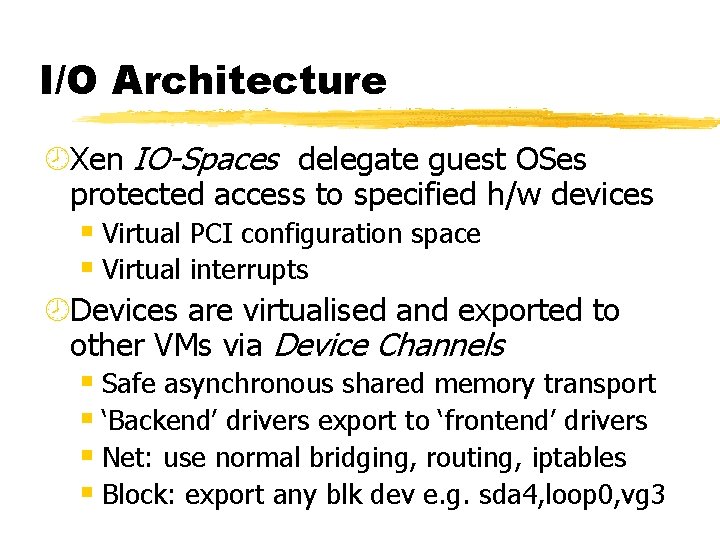

I/O Architecture

- Xen IO-Spaces delegate guest OSes protected access to specified h/w devices
  - Virtual PCI configuration space
  - Virtual interrupts
- Devices are virtualised and exported to other VMs via Device Channels
  - Safe asynchronous shared memory transport
  - ‘Backend’ drivers export to ‘frontend’ drivers
  - Net: use normal bridging, routing, iptables
  - Block: export any blk dev e.g. sda4, loop0, vg3
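One way to picture a device channel is as a pair of fixed-size rings in shared memory: the frontend posts requests, and the backend consumes them and posts responses. The sketch below assumes that layout purely for illustration; `Ring`, `DeviceChannel` and `backend_service` are hypothetical names, the "disk" is a dictionary, and event notification between the two sides is omitted.

```python
class Ring:
    """Fixed-size ring with free-running producer and consumer counters."""

    def __init__(self, size):
        self.slots = [None] * size
        self.prod = 0   # advanced only by the producer
        self.cons = 0   # advanced only by the consumer

    def has_room(self):
        return self.prod - self.cons < len(self.slots)

    def push(self, item):
        if not self.has_room():
            return False                 # ring full: caller must retry later
        self.slots[self.prod % len(self.slots)] = item
        self.prod += 1
        return True

    def pop(self):
        if self.cons == self.prod:
            return None                  # ring empty
        item = self.slots[self.cons % len(self.slots)]
        self.cons += 1
        return item


class DeviceChannel:
    """Request ring (frontend -> backend) plus response ring (backend -> frontend)."""

    def __init__(self, size=4):
        self.requests = Ring(size)
        self.responses = Ring(size)


def backend_service(channel, disk):
    """Backend side: drain pending requests while there is room to respond."""
    handled = 0
    while channel.responses.has_room():
        req = channel.requests.pop()
        if req is None:
            break
        op, block = req
        data = disk.get(block) if op == "read" else None
        channel.responses.push((block, data))
        handled += 1
    return handled


channel = DeviceChannel(size=2)
disk = {7: b"boot", 9: b"data"}
sent = [channel.requests.push(("read", b)) for b in (7, 9, 11)]  # third push finds the ring full
handled = backend_service(channel, disk)
replies = [channel.responses.pop(), channel.responses.pop()]
```

Because each counter is written by only one side, the two drivers can run asynchronously without a shared lock in this model.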

Device Channel Interface

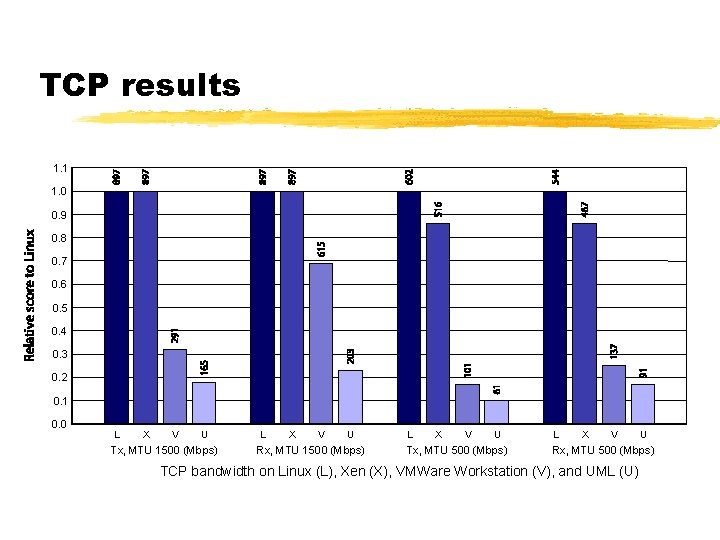

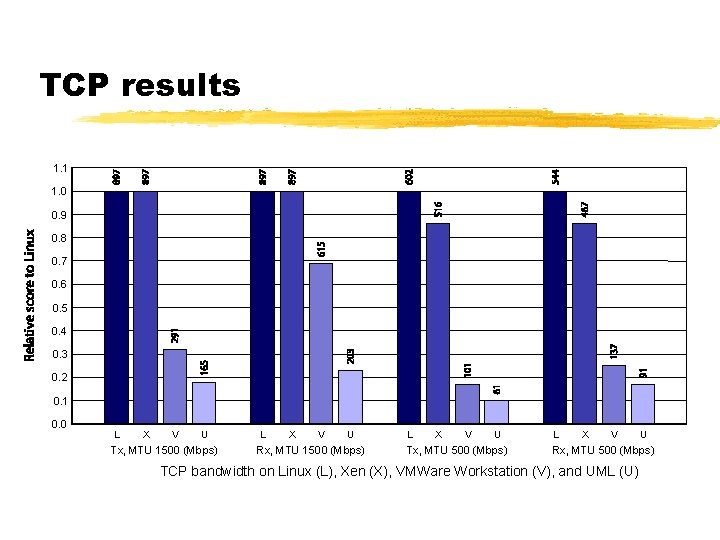

TCP results

[Bar chart, y-axis 0.0 to 1.1; panels: Tx, MTU 1500 (Mbps); Rx, MTU 1500 (Mbps); Tx, MTU 500 (Mbps); Rx, MTU 500 (Mbps)]

TCP bandwidth on Linux (L), Xen (X), VMware Workstation (V), and UML (U)

Isolated Driver VMs

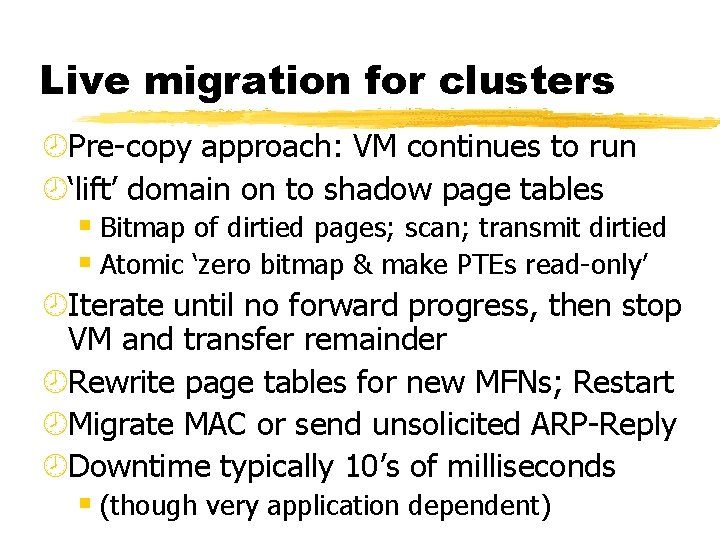

Live migration for clusters

- Pre-copy approach: VM continues to run
- ‘Lift’ domain on to shadow page tables
  - Bitmap of dirtied pages; scan; transmit dirtied
  - Atomic ‘zero bitmap & make PTEs read-only’
- Iterate until no forward progress, then stop VM and transfer remainder
- Rewrite page tables for new MFNs; restart
- Migrate MAC or send unsolicited ARP-Reply
- Downtime typically tens of milliseconds
  - (though very application dependent)
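The pre-copy loop described above can be sketched in a few lines. This is a toy model, not the Xen implementation: `precopy_migrate`, `guest_writes` and `send` are hypothetical names, the dirty bitmap is a Python set, "no forward progress" is taken to mean the dirty set did not shrink, and `max_rounds` is an assumed safety cap.

```python
def precopy_migrate(memory, guest_writes, send, max_rounds=30):
    """Iteratively copy pages while the guest runs, then stop and copy the rest.

    memory:       dict, page number -> contents (the live guest memory)
    guest_writes: callable(round_no) -> set of pages dirtied during that round
    send:         callable(page, contents) that transmits one page
    Returns (pre-copy rounds used, pages sent while the VM was stopped).
    """
    to_send = set(memory)                 # first round: every page counts as dirty
    rounds = 0
    while True:
        for page in sorted(to_send):
            send(page, memory[page])
        rounds += 1
        dirtied = guest_writes(rounds)    # guest kept running during the round
        no_progress = len(dirtied) >= len(to_send)
        if not dirtied or no_progress or rounds >= max_rounds:
            break
        to_send = dirtied
    # The VM is stopped here; whatever is still dirty is transferred as downtime.
    for page in sorted(dirtied):
        send(page, memory[page])
    return rounds, len(dirtied)


memory = {n: f"v0-{n}" for n in range(8)}
schedule = {1: {0, 1, 2}, 2: {1, 2}, 3: {1, 2}}   # pages the guest dirties per round


def guest_writes(round_no):
    pages = schedule.get(round_no, set())
    for p in pages:
        memory[p] = f"v{round_no}-{p}"
    return set(pages)


received = {}


def send(page, contents):
    received[page] = contents


rounds, stopped_pages = precopy_migrate(memory, guest_writes, send)
```

In this run the dirty set shrinks from 8 pages to 3 to 2 and then stalls, so the loop gives up after three rounds and only two pages are copied while the VM is paused, which is what keeps the downtime short.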

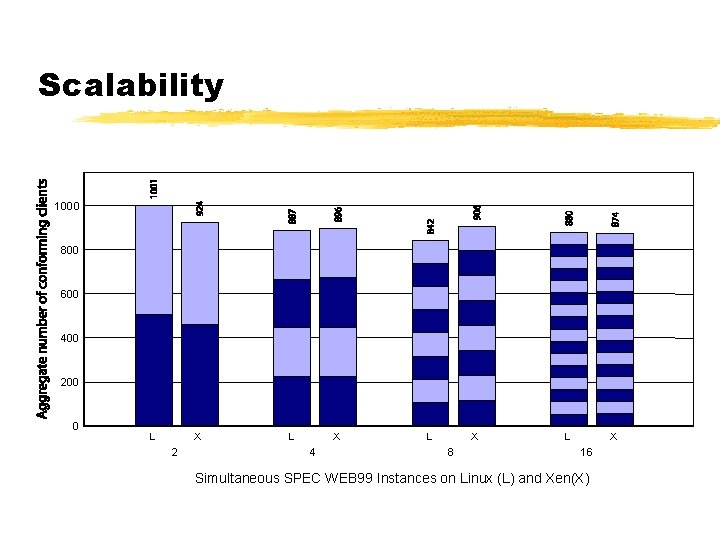

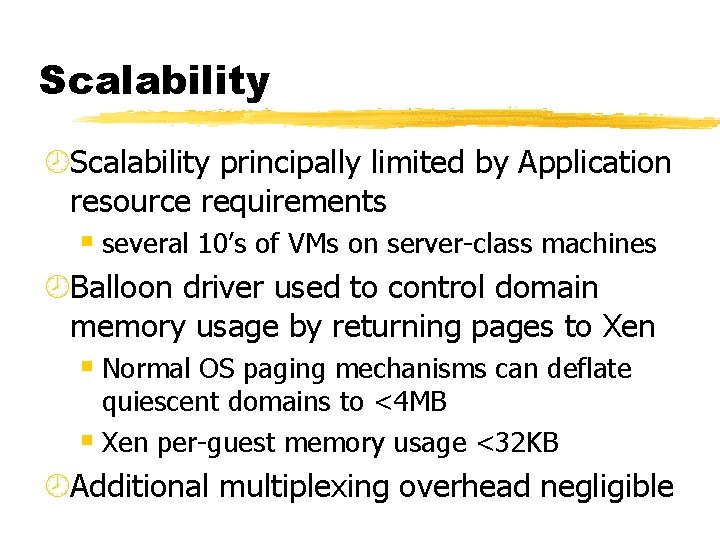

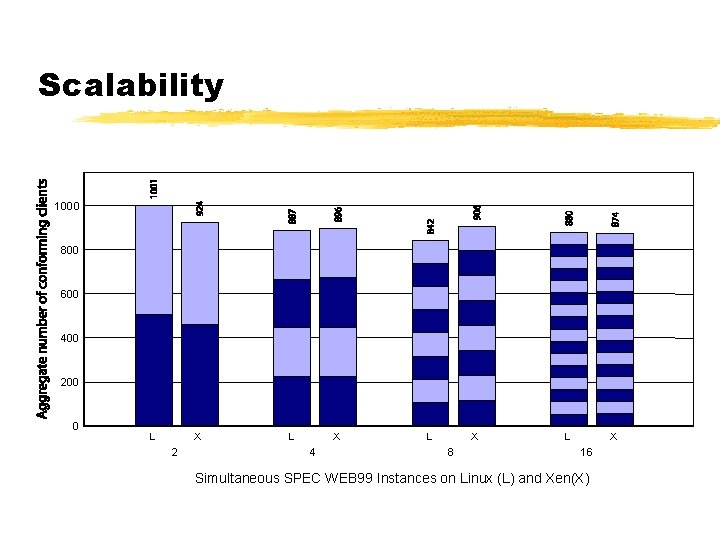

Scalability

- Scalability principally limited by application resource requirements
  - Several tens of VMs on server-class machines
- Balloon driver used to control domain memory usage by returning pages to Xen
  - Normal OS paging mechanisms can deflate quiescent domains to <4 MB
  - Xen per-guest memory usage <32 KB
- Additional multiplexing overhead negligible
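The balloon mechanism amounts to simple page accounting: inflating the balloon takes pages away from the guest and returns them to Xen, and deflating it asks for them back. The sketch below models only that bookkeeping; `Hypervisor`, `BalloonedDomain` and `set_target` are hypothetical names, and capping regrowth at the balloon's current size is an assumption of this model.

```python
class Hypervisor:
    """Tracks only the pool of free pages (illustrative)."""

    def __init__(self, free_pages):
        self.free_pages = free_pages


class BalloonedDomain:
    def __init__(self, xen, pages):
        self.xen = xen
        self.usable = pages   # pages the guest OS can currently use
        self.balloon = 0      # pages held by the balloon, i.e. returned to Xen

    def set_target(self, target):
        """Move the guest towards `target` usable pages; return the new size."""
        delta = self.usable - target
        if delta > 0:
            # Inflate: the guest gives up pages, which go back to Xen.
            self.usable -= delta
            self.balloon += delta
            self.xen.free_pages += delta
        elif delta < 0:
            # Deflate: reclaim pages, limited by the balloon size and by
            # what Xen has free (both limits are assumptions of this sketch).
            grant = min(-delta, self.balloon, self.xen.free_pages)
            self.usable += grant
            self.balloon -= grant
            self.xen.free_pages -= grant
        return self.usable


xen = Hypervisor(free_pages=100)
dom = BalloonedDomain(xen, pages=1000)
shrunk = dom.set_target(600)      # guest returns 400 pages to Xen
free_after_shrink = xen.free_pages
regrown = dom.set_target(1200)    # can only grow back to its original size
```

The guest decides which pages to give up using its normal paging mechanisms, which is why a quiescent domain can be deflated so far.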

Scalability

[Bar chart, y-axis 0 to 1000; groups of 2, 4, 8 and 16 simultaneous instances]

Simultaneous SPEC WEB99 instances on Linux (L) and Xen (X)

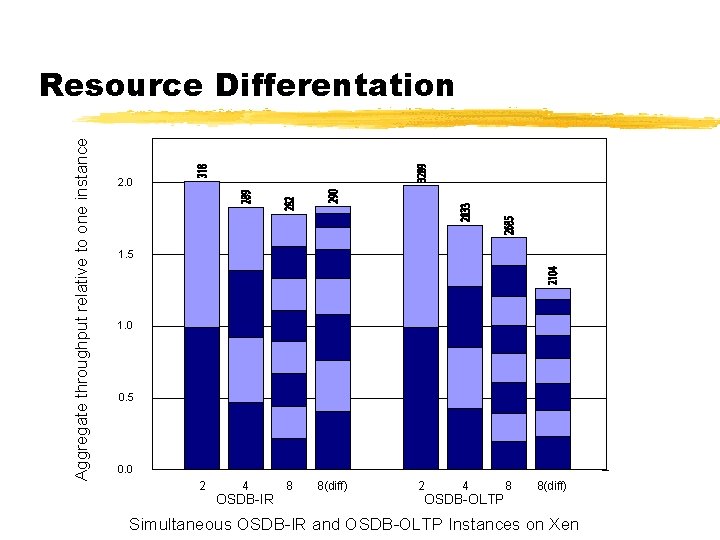

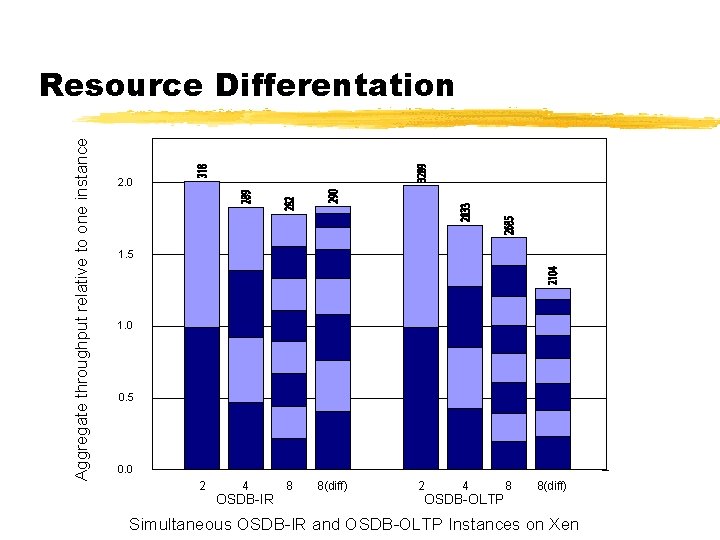

Resource Differentiation

[Bar chart, y-axis: aggregate throughput relative to one instance, 0.0 to 2.0; groups of 2, 4, 8 and 8(diff) instances for OSDB-IR and for OSDB-OLTP]

Simultaneous OSDB-IR and OSDB-OLTP instances on Xen

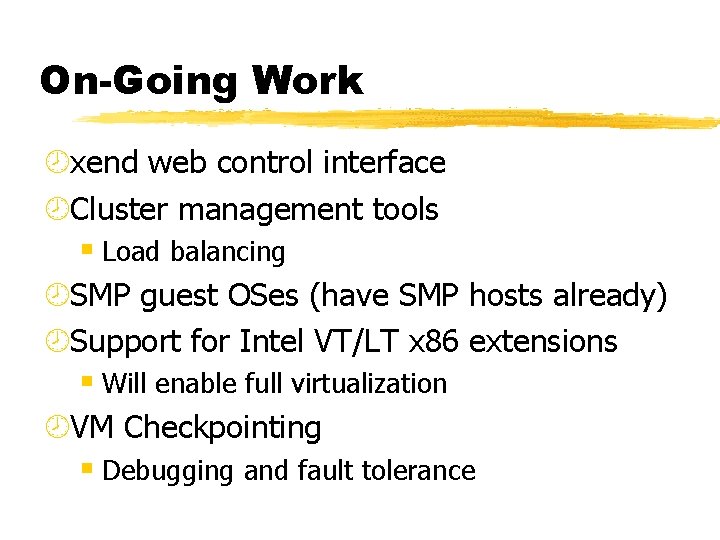

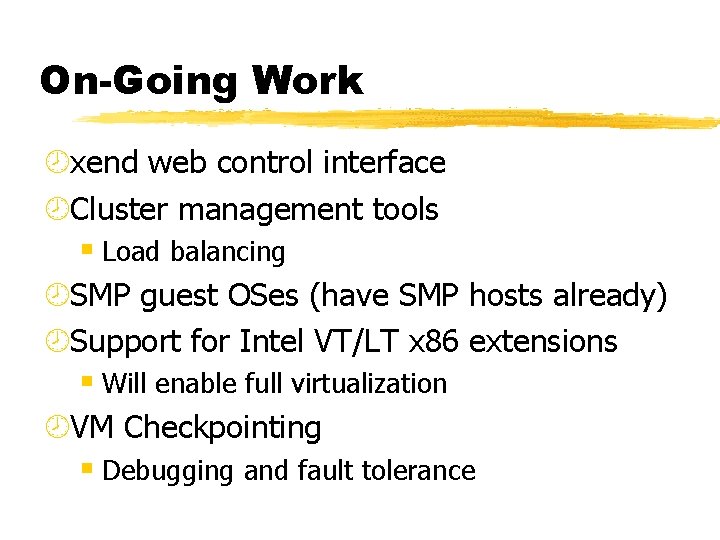

On-Going Work

- xend web control interface
- Cluster management tools
  - Load balancing
- SMP guest OSes (have SMP hosts already)
- Support for Intel VT/LT x86 extensions
  - Will enable full virtualization
- VM Checkpointing
  - Debugging and fault tolerance

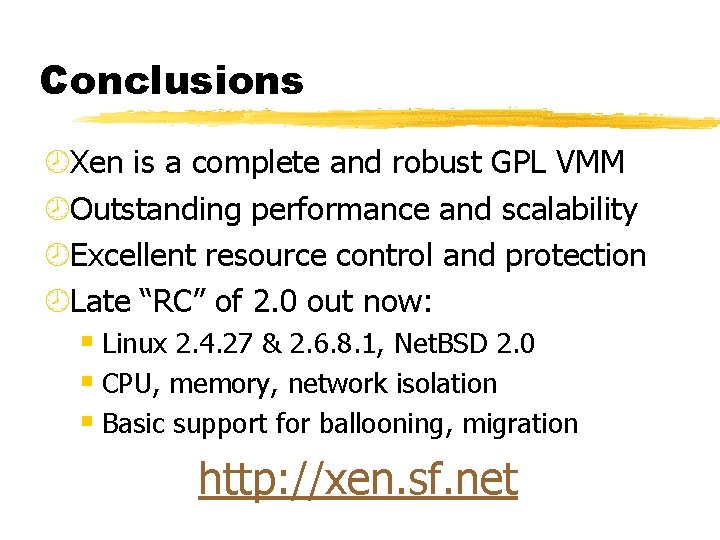

Conclusions

- Xen is a complete and robust GPL VMM
- Outstanding performance and scalability
- Excellent resource control and protection
- Late “RC” of 2.0 out now:
  - Linux 2.4.27 & 2.6.8.1, NetBSD 2.0
  - CPU, memory, network isolation
  - Basic support for ballooning, migration

http://xen.sf.net