Workshop to Support Data Science Workflows Practical steps

- Slides: 65

Workshop to Support Data Science Workflows: Practical steps for increasing the openness and reproducibility of data science
Supporting Research Workflows in Data Science, UVA, November 11, 2016
Natalie Meyers

Objectives
Session 1
• Understanding reproducible research
• Setting up a reproducible project
• Keeping track of things
Session 2
• Containing bias
• Sharing your work

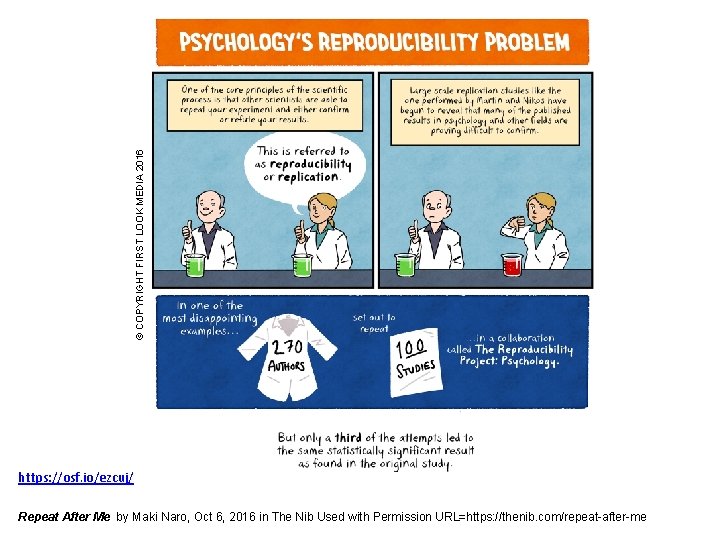

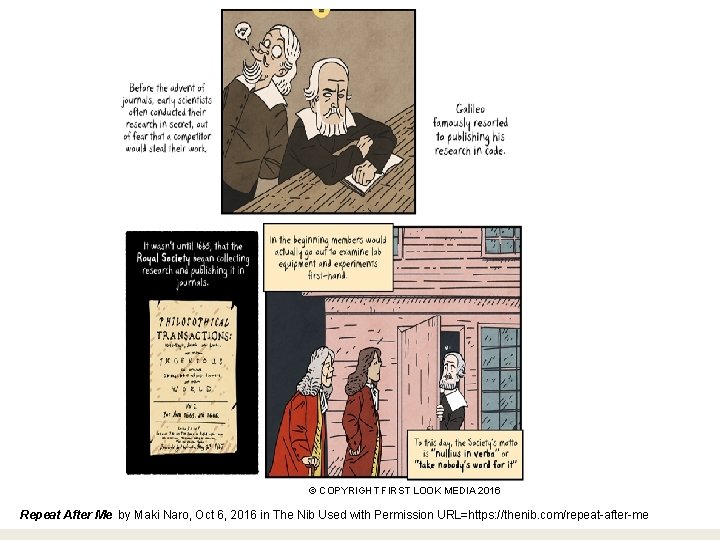

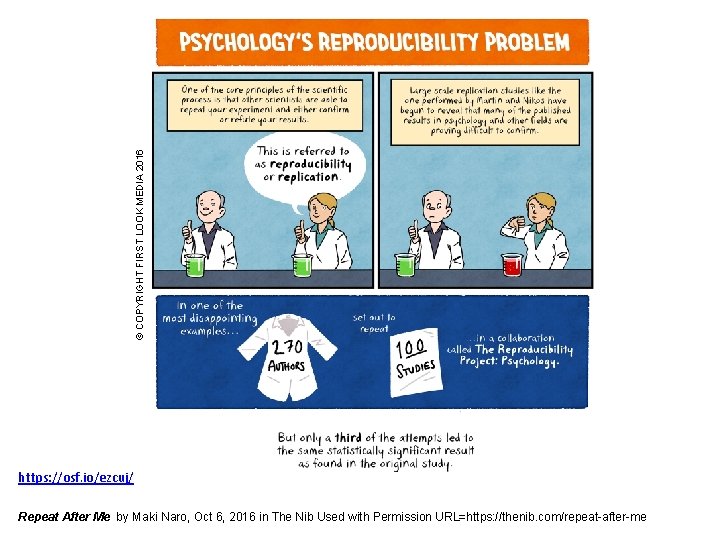

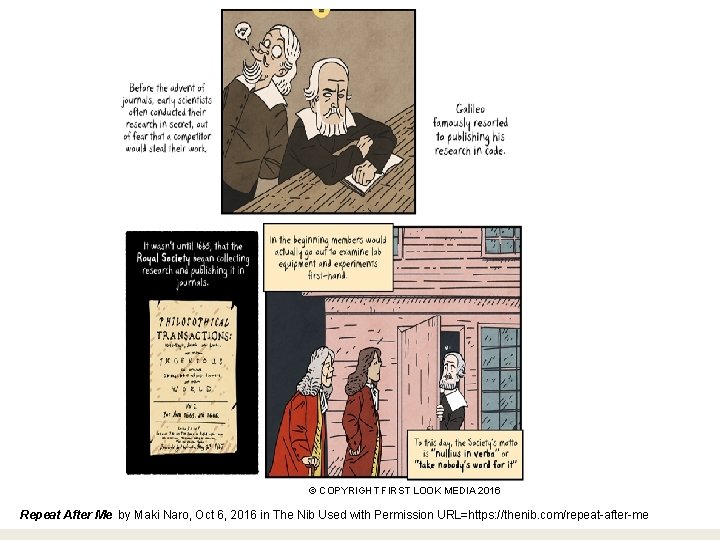

© COPYRIGHT FIRST LOOK MEDIA 2016 https://osf.io/ezcuj/ Repeat After Me by Maki Naro, Oct 6, 2016 in The Nib. Used with Permission. URL=https://thenib.com/repeat-after-me

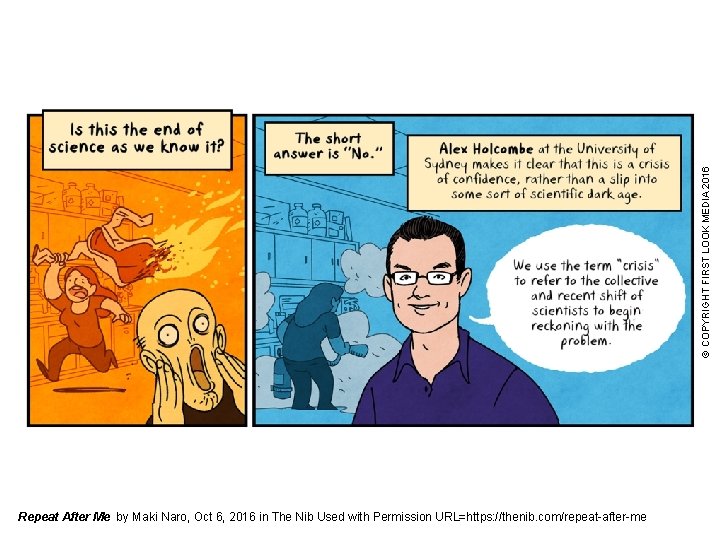

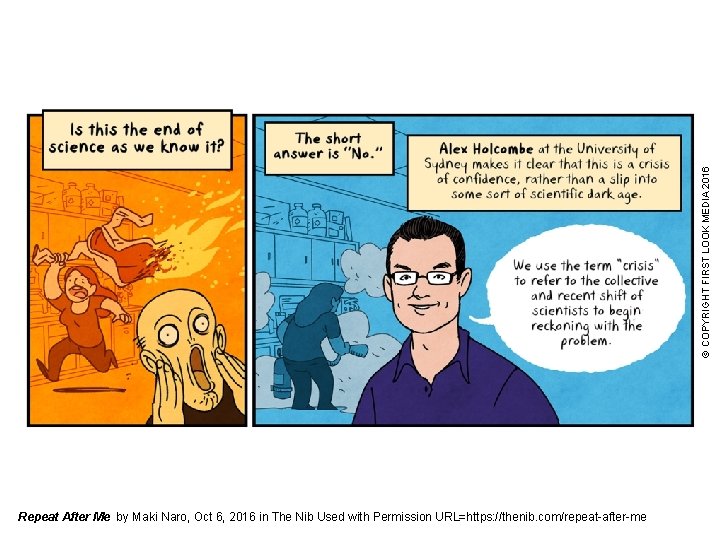


Technology to enable change
Training to enact change
Incentives to embrace change

• OSF
• TOP Guidelines
• Badges for Open Practices
• Reproducibility Projects
• PreReg & Registered Reports
• SHARE

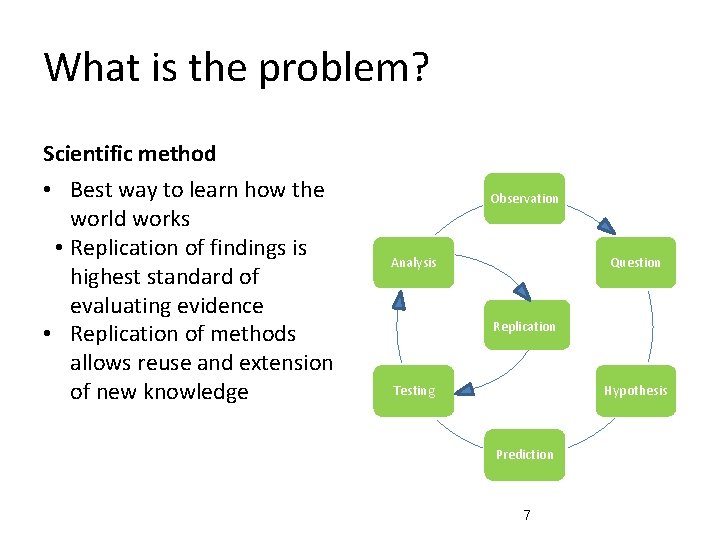

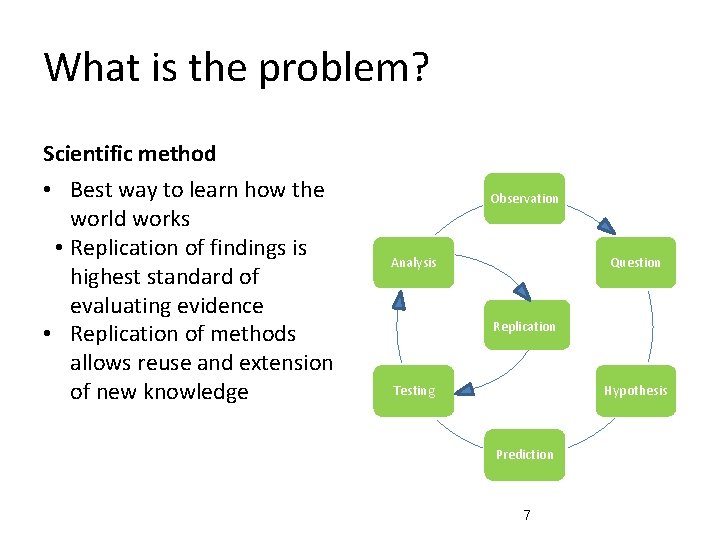

What is the problem?
Scientific method
• Best way to learn how the world works
• Replication of findings is highest standard of evaluating evidence
• Replication of methods allows reuse and extension of new knowledge
[Figure: scientific method cycle labeled Observation, Question, Hypothesis, Prediction, Testing, Analysis, Replication]

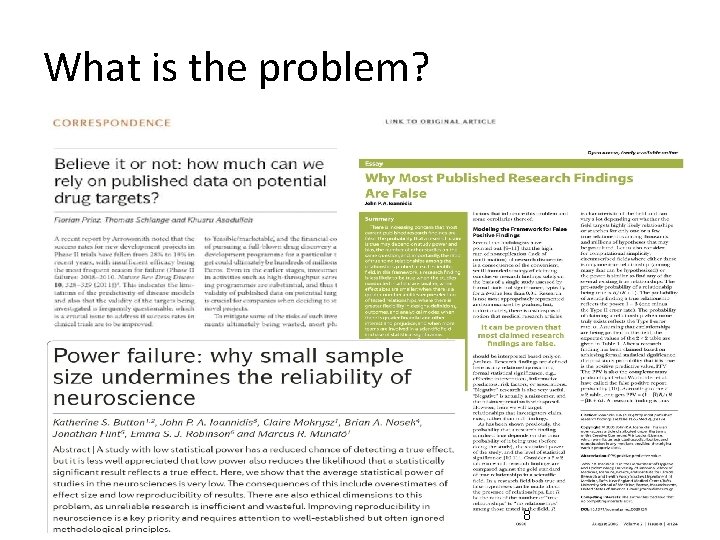

What is the problem?

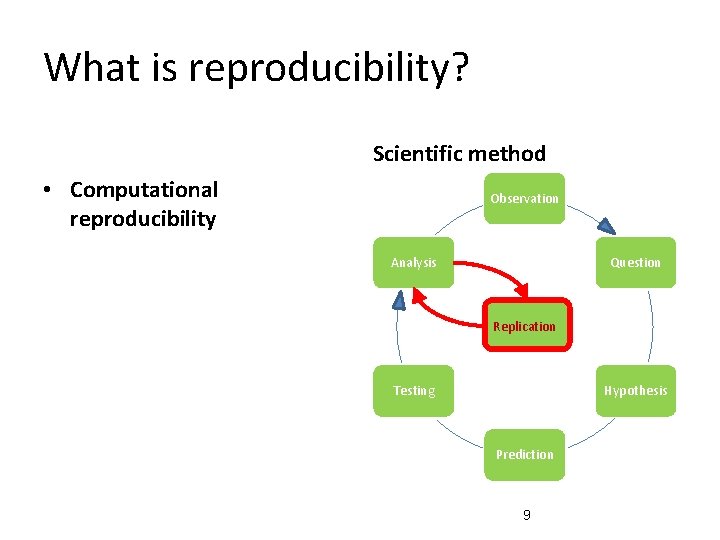

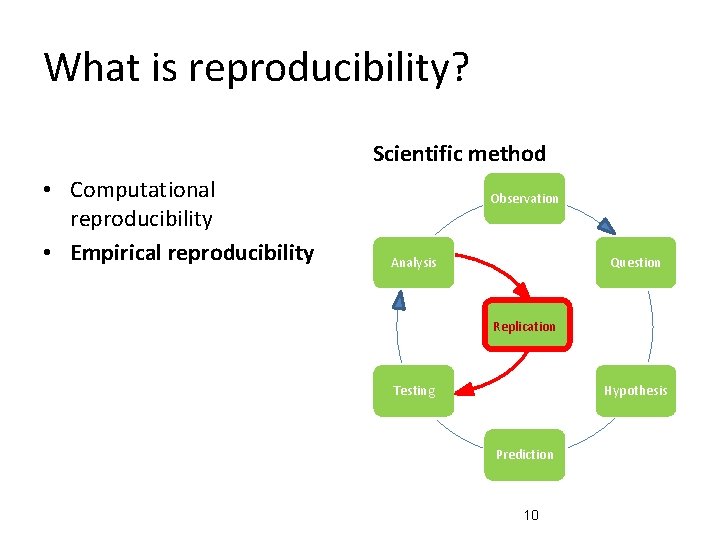

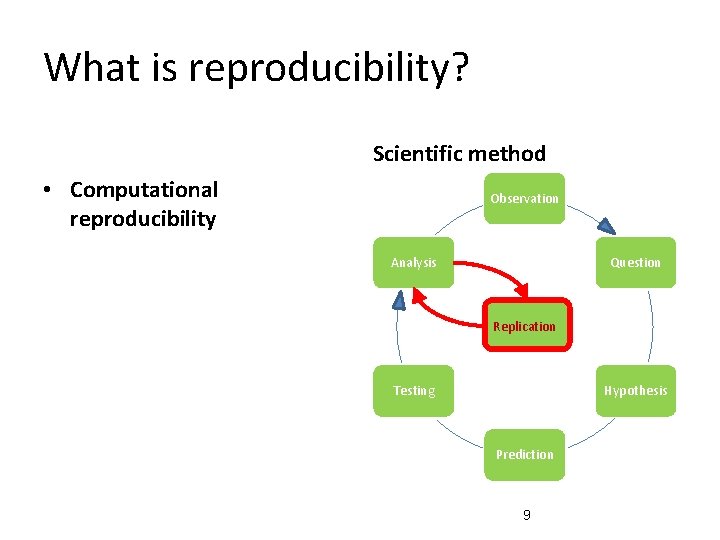

What is reproducibility?
Scientific method
• Computational reproducibility
[Figure: scientific method cycle labeled Observation, Question, Hypothesis, Prediction, Testing, Analysis, Replication]

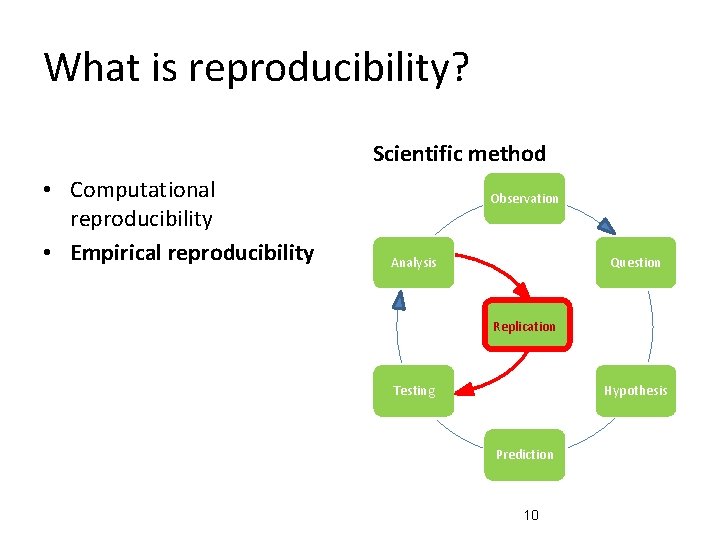

What is reproducibility?
Scientific method
• Computational reproducibility
• Empirical reproducibility
[Figure: scientific method cycle labeled Observation, Question, Hypothesis, Prediction, Testing, Analysis, Replication]

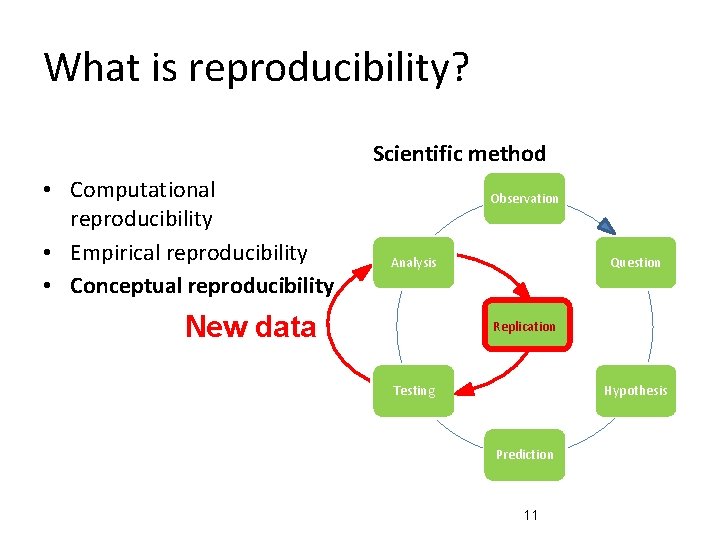

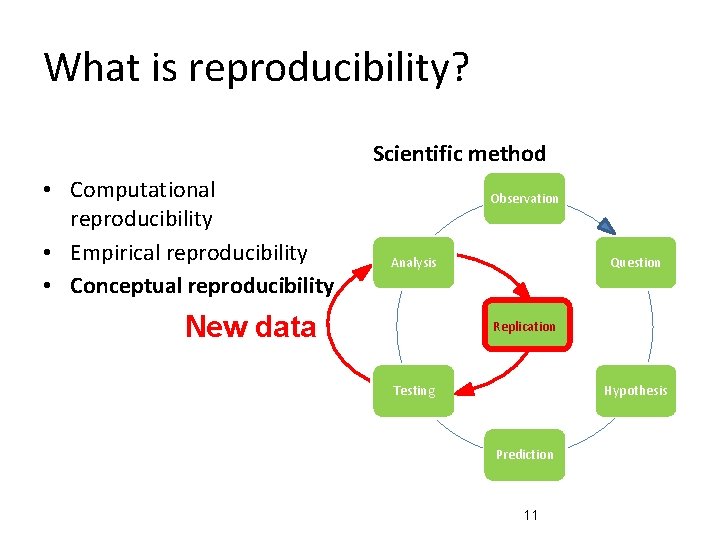

What is reproducibility?
Scientific method
• Computational reproducibility
• Empirical reproducibility
• Conceptual reproducibility
[Figure: scientific method cycle labeled Observation, Question, Hypothesis, Prediction, Testing, Analysis, New data, Replication]

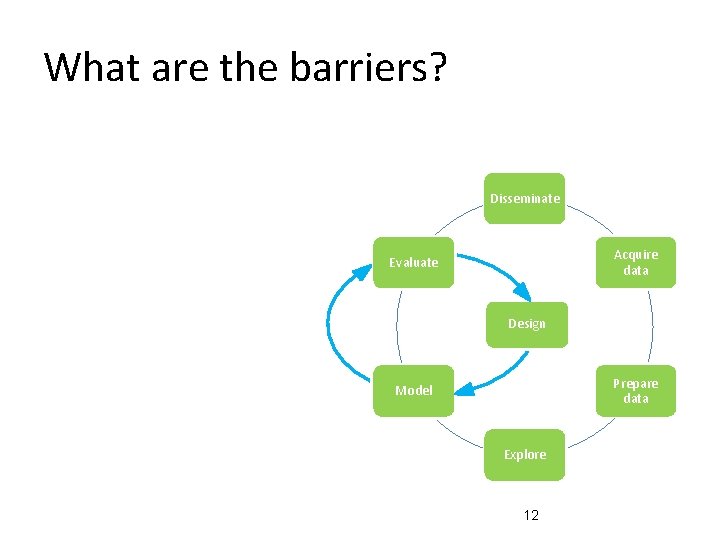

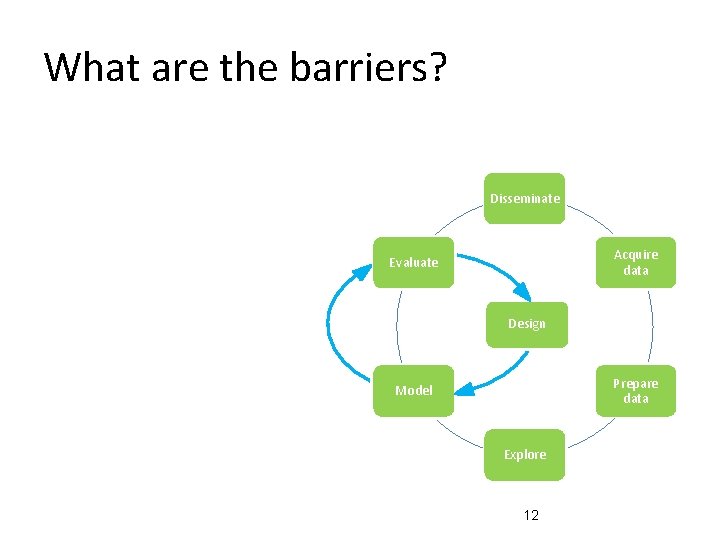

What are the barriers?
[Figure: research workflow with stages labeled Design, Acquire data, Prepare data, Explore, Model, Evaluate, Disseminate]

What are the barriers?
• Statistical
  – Low power
  – Researcher degrees of freedom
• Transparency
  – Poor documentation
  – Poor reporting
  – Lack of sharing

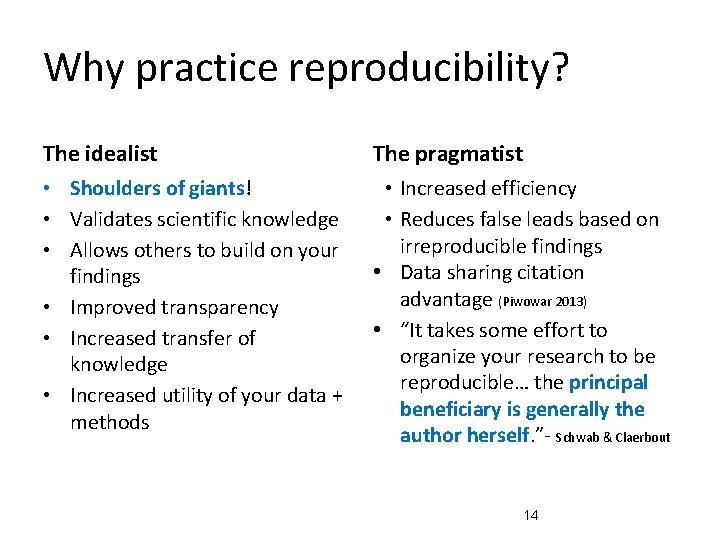

Why practice reproducibility?
The idealist / The pragmatist
• Shoulders of giants!
• Validates scientific knowledge
• Allows others to build on your findings
• Improved transparency
• Increased transfer of knowledge
• Increased utility of your data + methods
• Increased efficiency
• Reduces false leads based on irreproducible findings
• Data sharing citation advantage (Piwowar 2013)
• “It takes some effort to organize your research to be reproducible… the principal beneficiary is generally the author herself.” - Schwab & Claerbout

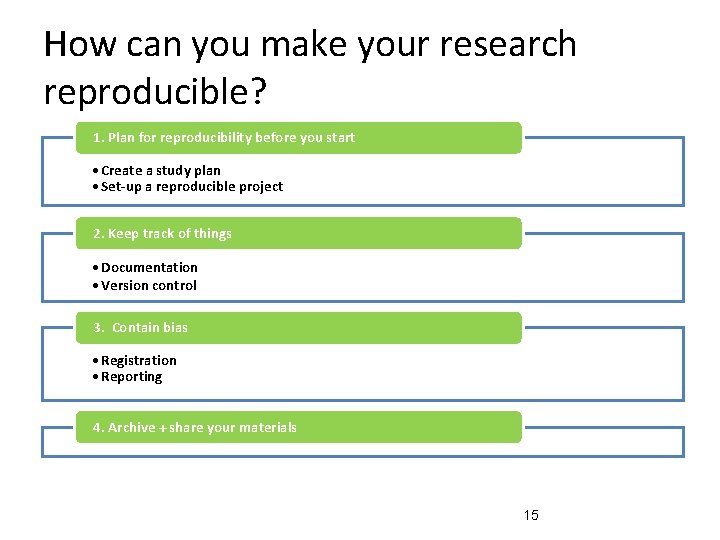

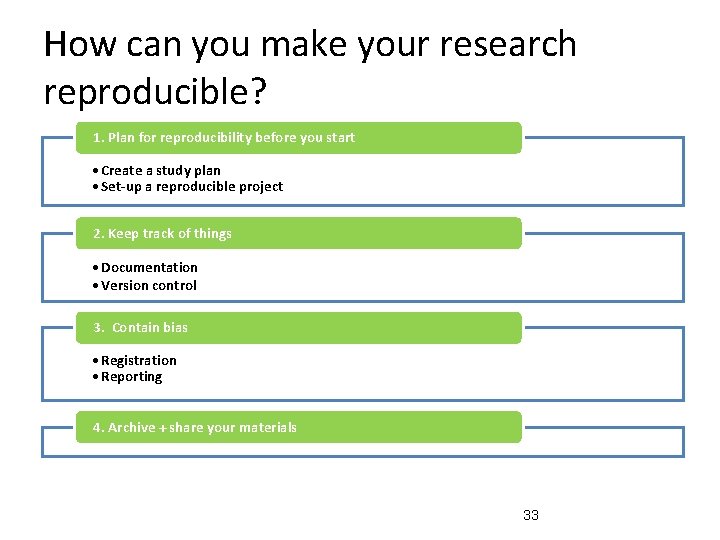

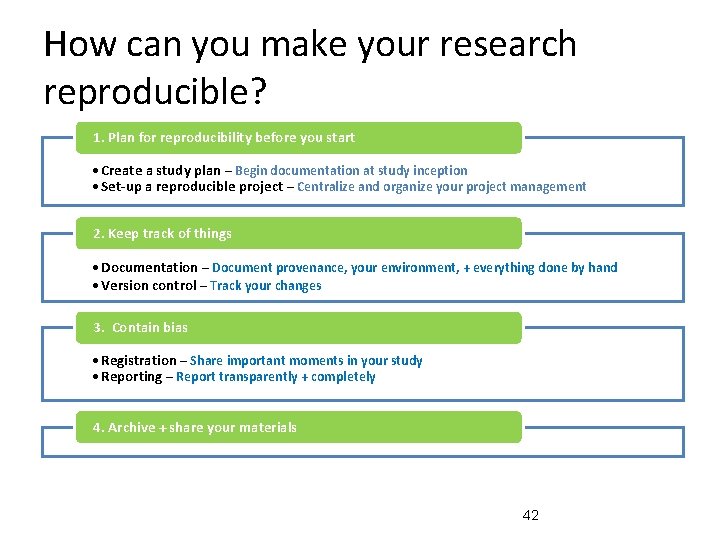

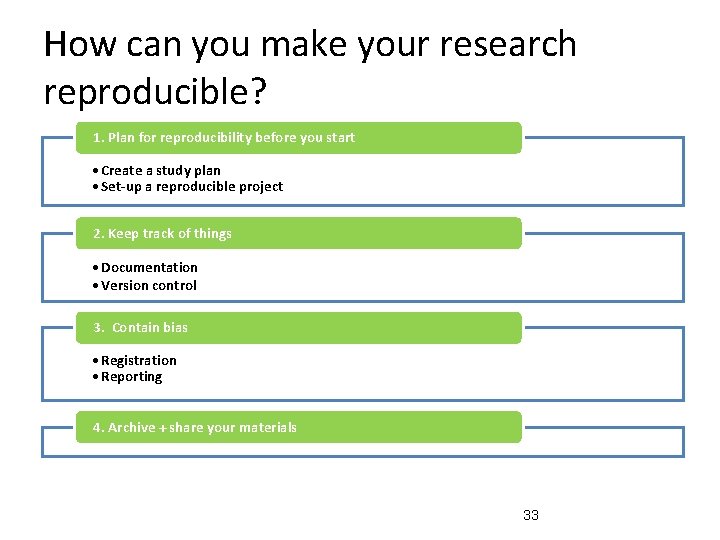

How can you make your research reproducible?
1. Plan for reproducibility before you start
   • Create a study plan
   • Set up a reproducible project
2. Keep track of things
   • Documentation
   • Version control
3. Contain bias
   • Registration
   • Reporting
4. Archive + share your materials

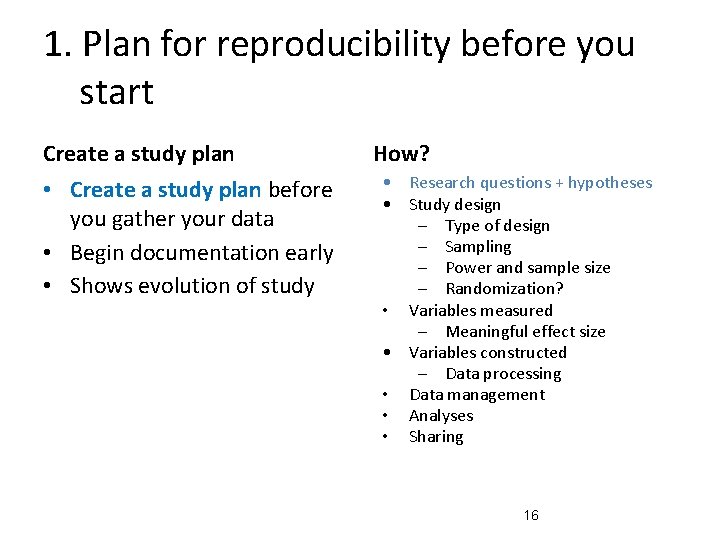

1. Plan for reproducibility before you start
Create a study plan
• Create a study plan before you gather your data
• Begin documentation early
• Shows evolution of study
How?
• Research questions + hypotheses
• Study design
  – Type of design
  – Sampling
  – Power and sample size
  – Randomization?
• Variables measured
  – Meaningful effect size
• Variables constructed
  – Data processing
• Data management
• Analyses
• Sharing
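The "Power and sample size" and "Meaningful effect size" items of a study plan can be made concrete with a short calculation. The sketch below is an illustration only, not part of the workshop materials: it assumes a two-sided comparison of two equal-sized groups, a standardized effect size (Cohen's d), and the normal approximation, which gives slightly smaller numbers than an exact t-test calculation. The function name `sample_size_per_group` is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-sample comparison.

    Uses the normal approximation n = 2 * ((z_(1-alpha/2) + z_power) / d)^2,
    where d is the standardized effect size chosen in the study plan.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    n = 2 * ((z_alpha + z_power) / effect_size) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Smaller meaningful effects require much larger samples.
    for d in (0.2, 0.5, 0.8):
        print(f"d = {d}: about {sample_size_per_group(d)} participants per group")
```

Recording the chosen effect size, alpha, and power in the study plan, together with the resulting sample size, documents the decision before any data are gathered.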

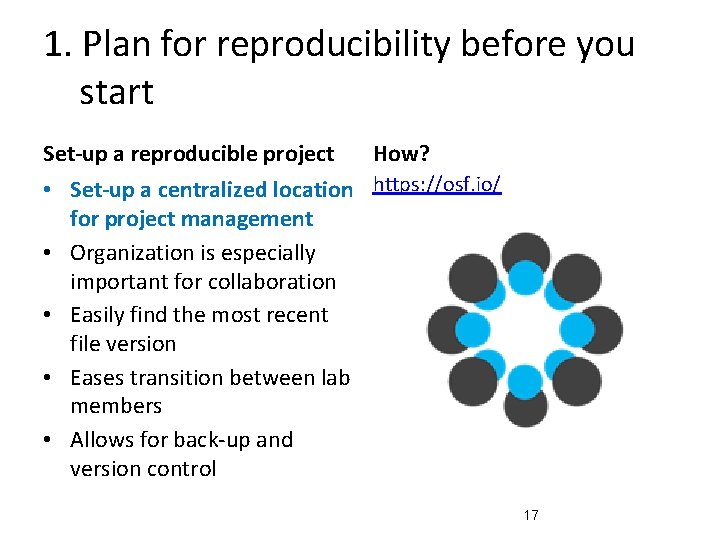

1. Plan for reproducibility before you start
Set up a reproducible project
How?
• Set up a centralized location (https://osf.io/) for project management
• Organization is especially important for collaboration
• Easily find the most recent file version
• Eases transition between lab members
• Allows for back-up and version control
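One way to keep a project organized from the start is to create the same folder skeleton for every study. The following is a minimal sketch under stated assumptions: the folder names in `SKELETON` and the function `create_project` are illustrative choices, not a layout prescribed by the workshop or by the OSF.

```python
from pathlib import Path

# Hypothetical folder layout; adapt the names to your lab's conventions.
SKELETON = ["data/raw", "data/processed", "code", "docs", "results"]


def create_project(root):
    """Create the folder skeleton and a README stub; return the folders."""
    root = Path(root)
    created = []
    for name in SKELETON:
        folder = root / name
        folder.mkdir(parents=True, exist_ok=True)  # safe to re-run
        created.append(folder)
    readme = root / "README.md"
    if not readme.exists():  # never overwrite existing documentation
        readme.write_text(
            "# Project title\n\nPurpose, contributors, and folder guide.\n",
            encoding="utf-8",
        )
    return created


if __name__ == "__main__":
    import tempfile

    # Demonstrate in a throwaway directory so nothing is left behind.
    with tempfile.TemporaryDirectory() as tmp:
        for folder in create_project(Path(tmp) / "my-study"):
            print(folder.relative_to(tmp))
```

A consistent layout makes it easier for collaborators to find the most recent files, whichever hosting service holds the project.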

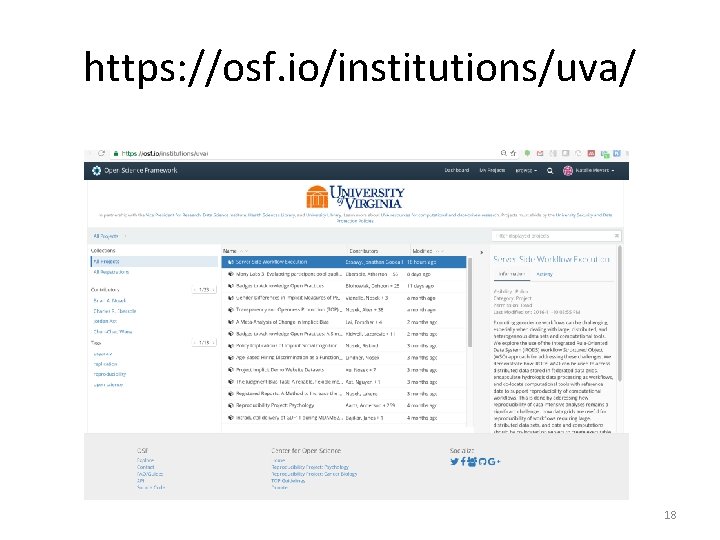

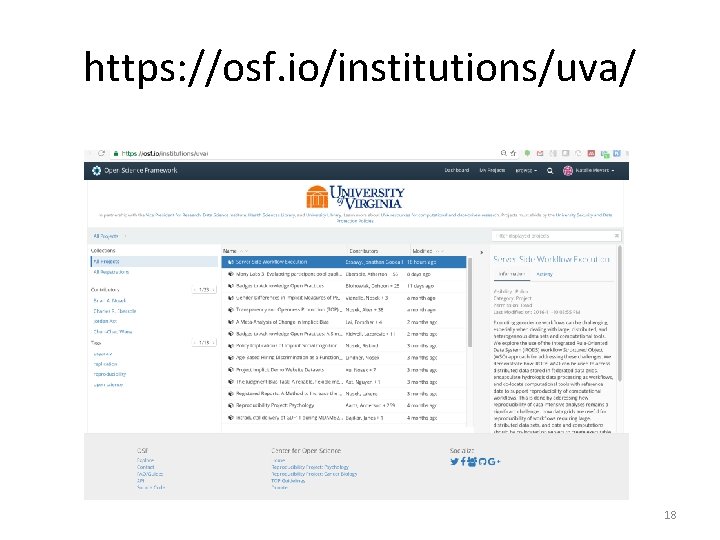

https://osf.io/institutions/uva/

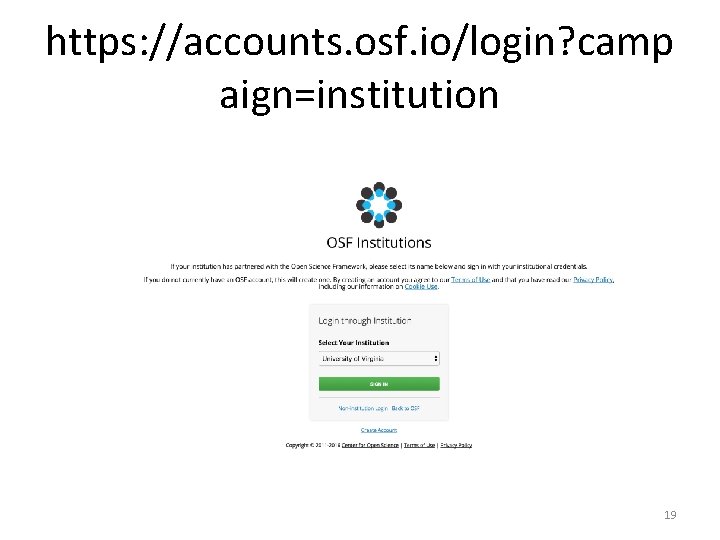

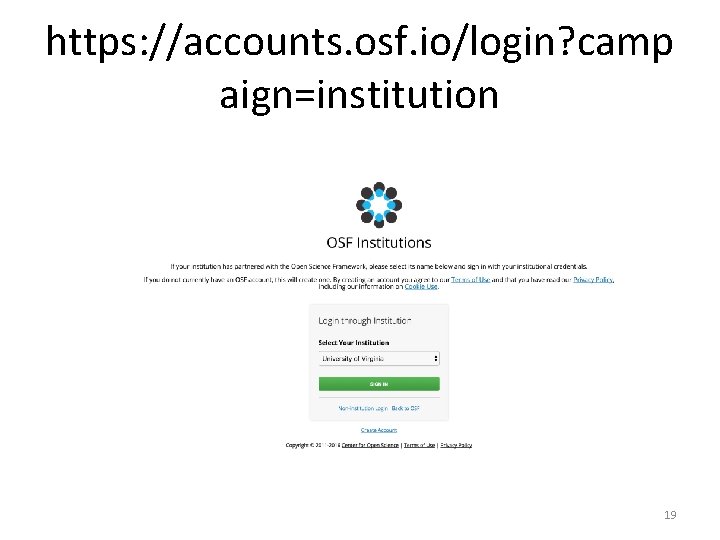

https://accounts.osf.io/login?campaign=institution

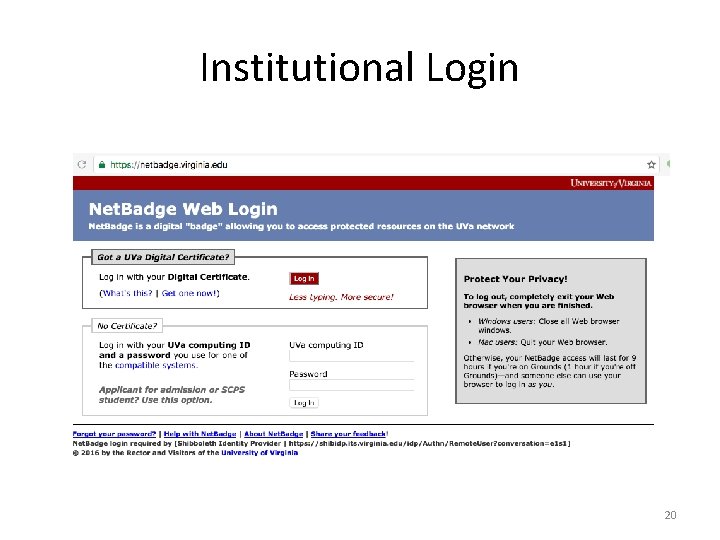

Institutional Login

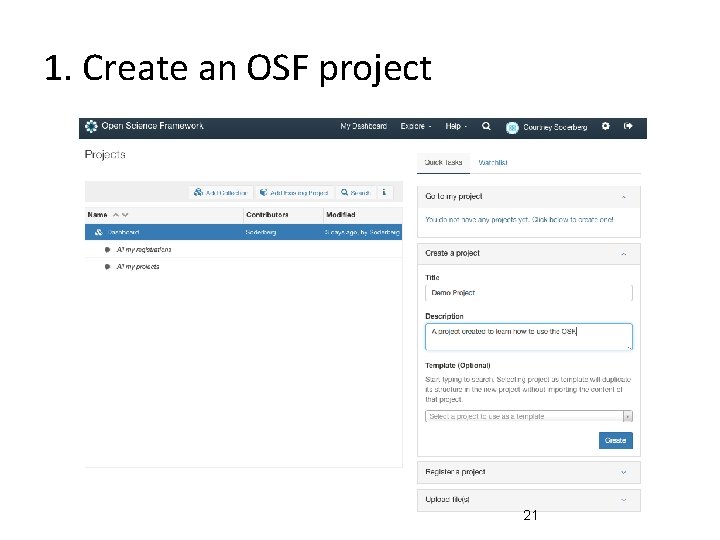

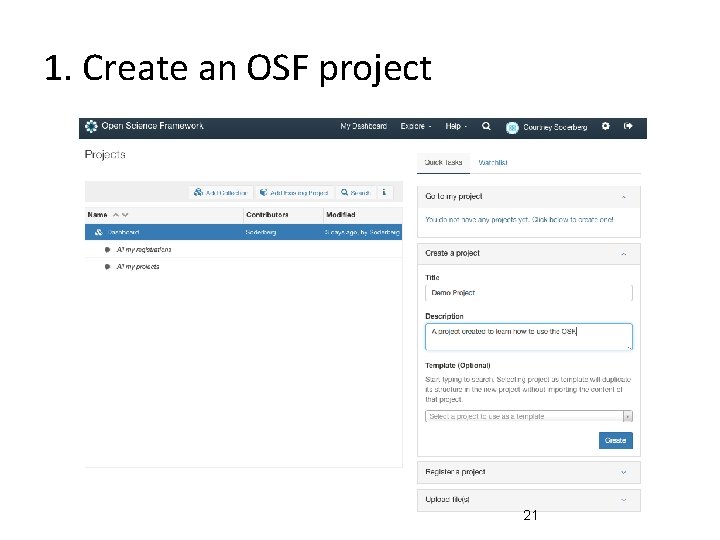

1. Create an OSF project

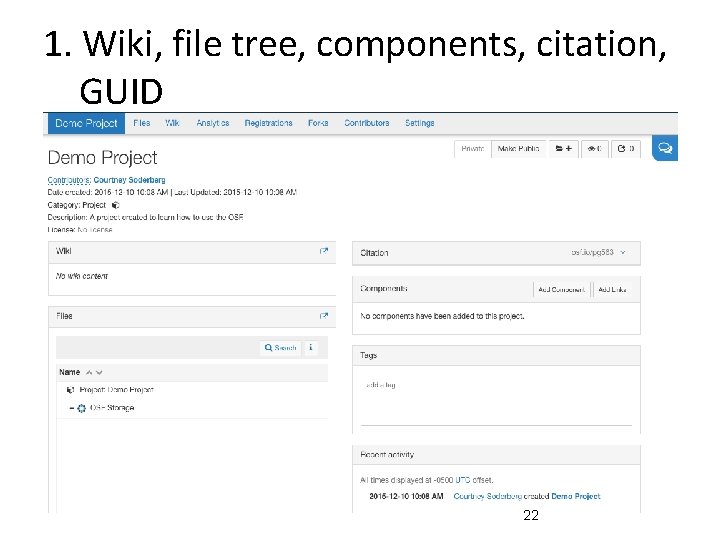

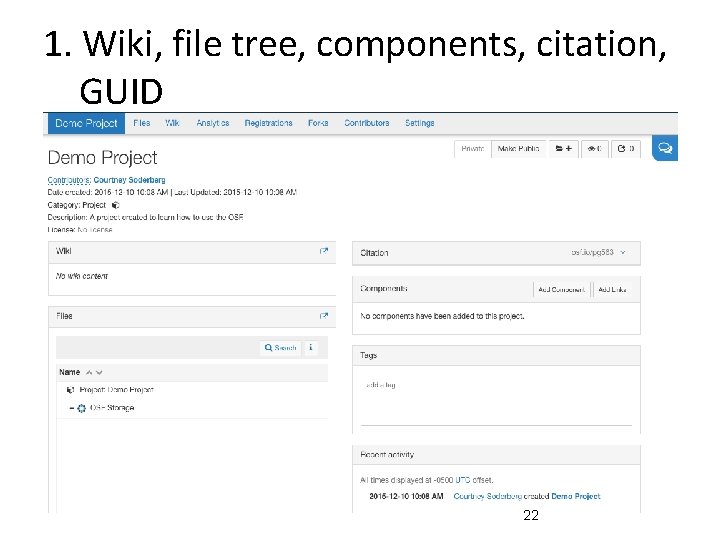

1. Wiki, file tree, components, citation, GUID

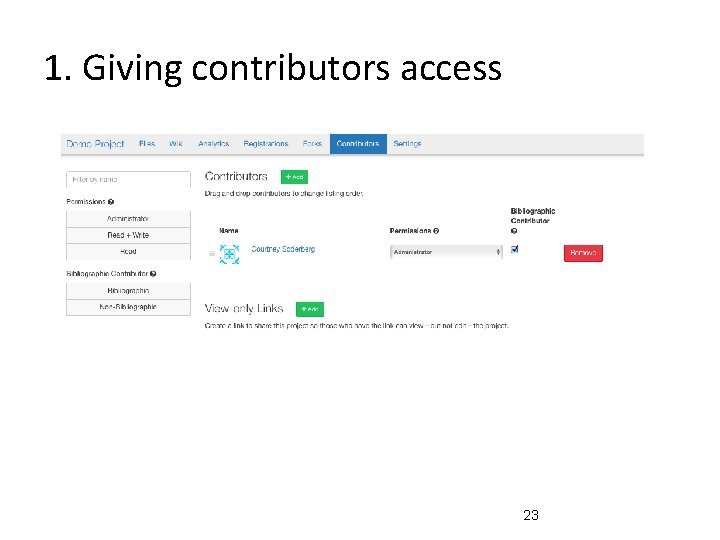

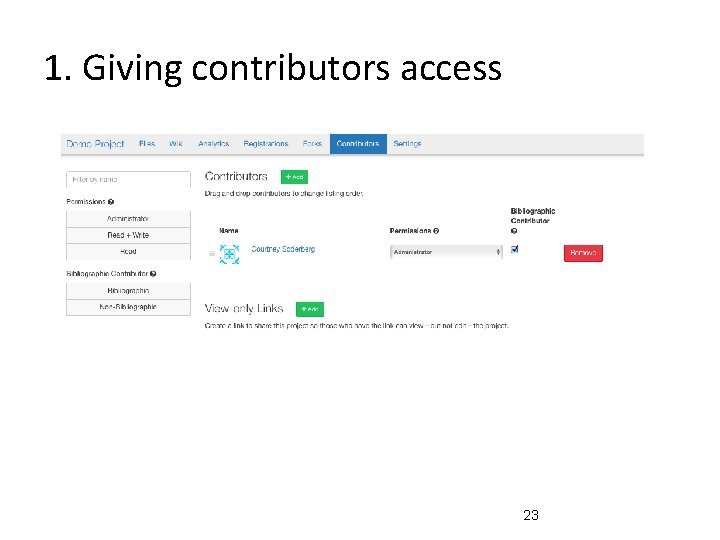

1. Giving contributors access

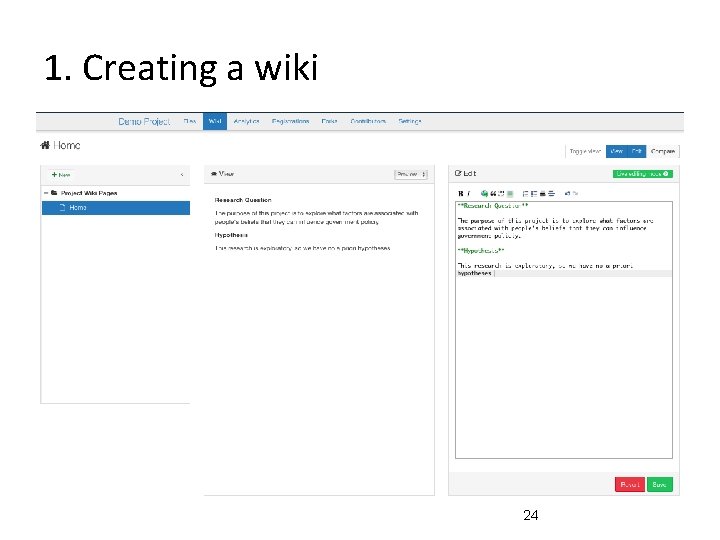

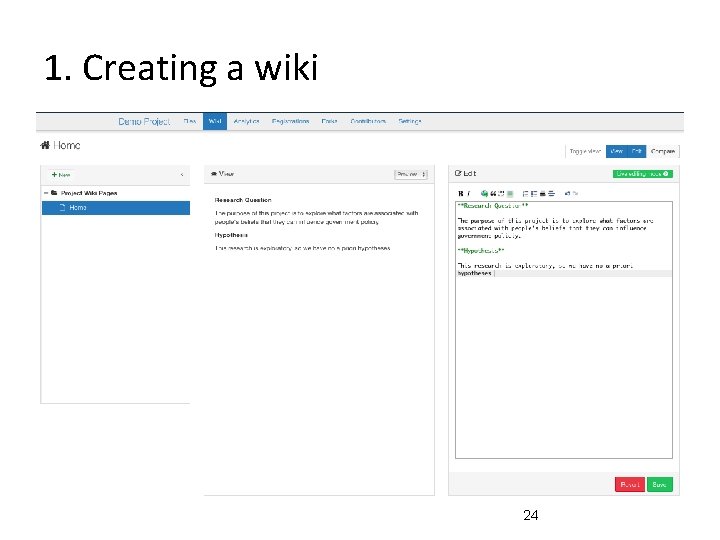

1. Creating a wiki

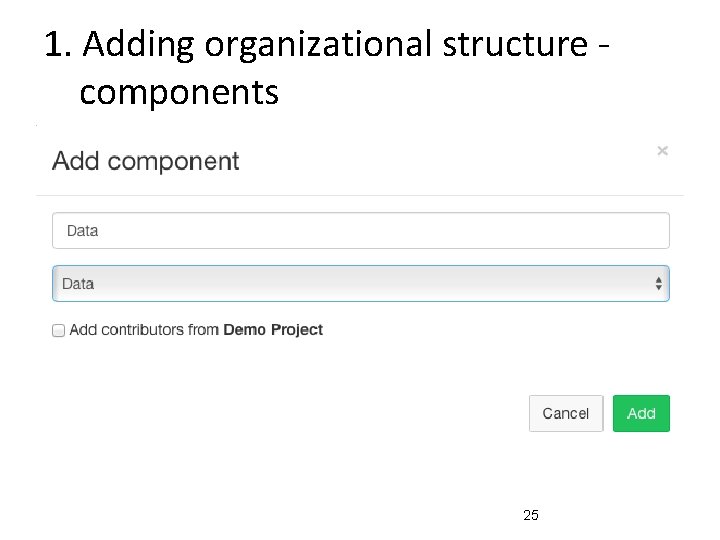

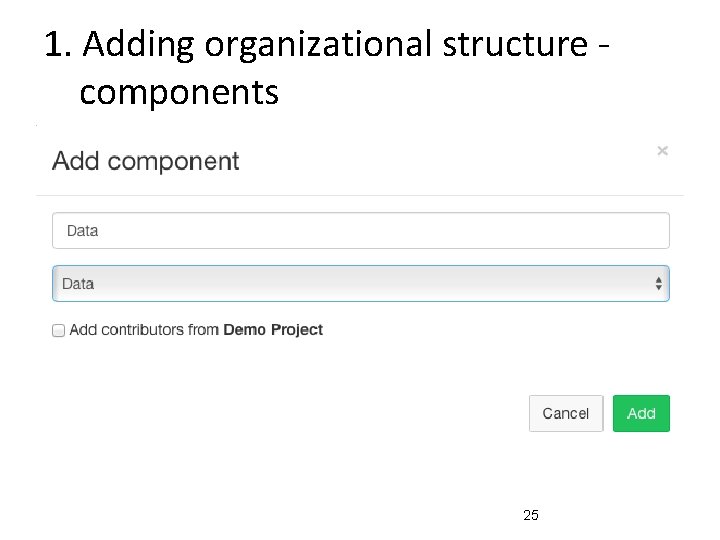

1. Adding organizational structure components


2. Keep track of things
Documentation
• Document everything done by hand
• Document your software environment (e.g., dependencies, libraries, sessionInfo() in R)
• Everything done by hand, or not automated from data and code, should be precisely documented
• Make raw data read-only
  – You won’t edit it by accident
  – Forces you to document or code data processing
• Document in code comments
  – README files
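The slide names sessionInfo() in R for recording the software environment. A rough Python counterpart, together with a helper that makes a raw data file read-only, might look like the sketch below. It is an assumption-laden illustration: the function names `describe_environment` and `make_read_only` are hypothetical, only the standard library is used, and on Windows `chmod` can only toggle the read-only flag.

```python
import json
import platform
import stat
import sys
from importlib import metadata
from pathlib import Path


def describe_environment():
    """Collect interpreter, platform, and installed package versions."""
    packages = set()
    for dist in metadata.distributions():
        # Skip distributions whose metadata is missing or has no name.
        name = dist.metadata.get("Name") if dist.metadata else None
        if name:
            packages.add(f"{name}=={dist.version}")
    return {
        "python": sys.version,
        "platform": platform.platform(),
        "packages": sorted(packages),
    }


def make_read_only(path):
    """Clear the write permission bits so raw data cannot be edited by accident."""
    path = Path(path)
    mode = path.stat().st_mode
    path.chmod(mode & ~(stat.S_IWUSR | stat.S_IWGRP | stat.S_IWOTH))


if __name__ == "__main__":
    # Save this output next to your results, e.g. as docs/environment.json.
    print(json.dumps(describe_environment(), indent=2))
```

Storing the environment description alongside each analysis run gives readers the dependency information they need to rebuild the setup.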

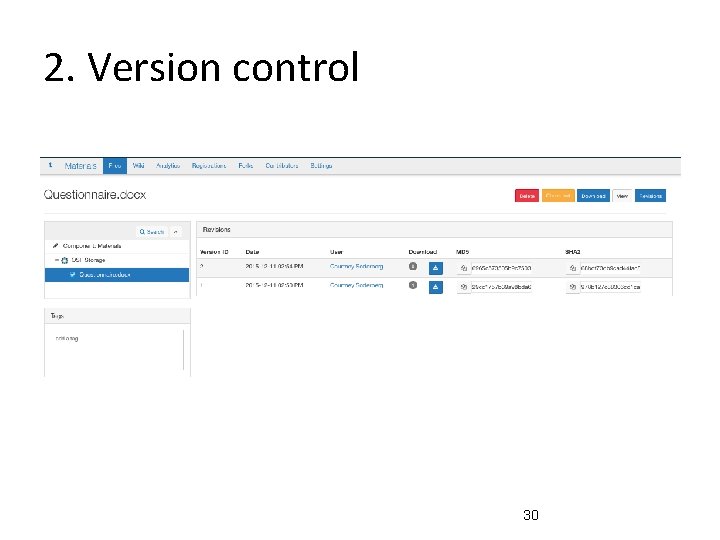

2. Keep track of things
Version control
• Track your changes
• Everything created manually should use version control
• Tracks changes to files, code, metadata
• Allows you to revert to old versions
• Make incremental changes: commit early, commit often
• Git / GitHub / Bitbucket
Version control for data
• Metadata should be version controlled
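For data files that are too large or too sensitive to commit directly, one option is to version-control a small checksum manifest instead. The sketch below illustrates that idea and is not a method described in the slides: `build_manifest` and `changed_files` are hypothetical helper names, and SHA-256 is an assumed choice of hash.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=65536):
    """Hash a file in chunks so large data files do not fill memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir):
    """Map each file's path (relative to data_dir) to its SHA-256 checksum."""
    data_dir = Path(data_dir)
    return {
        path.relative_to(data_dir).as_posix(): sha256_of(path)
        for path in sorted(data_dir.rglob("*"))
        if path.is_file()
    }


def changed_files(old, new):
    """List files that were added, removed, or modified between two manifests."""
    return sorted(name for name in set(old) | set(new) if old.get(name) != new.get(name))


if __name__ == "__main__":
    import json
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        (Path(tmp) / "survey.csv").write_text("id,score\n1,4\n", encoding="utf-8")
        print(json.dumps(build_manifest(tmp), indent=2))
```

Writing the manifest to a text file and committing it with each change records which version of the data an analysis used, even when the data live elsewhere.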

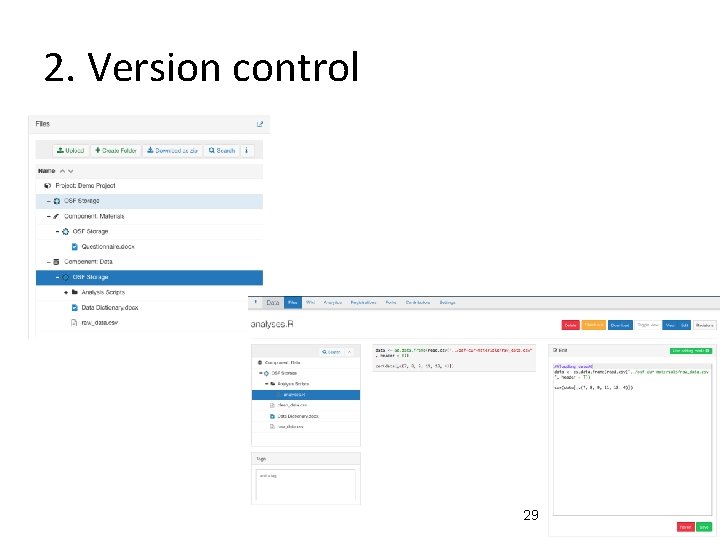

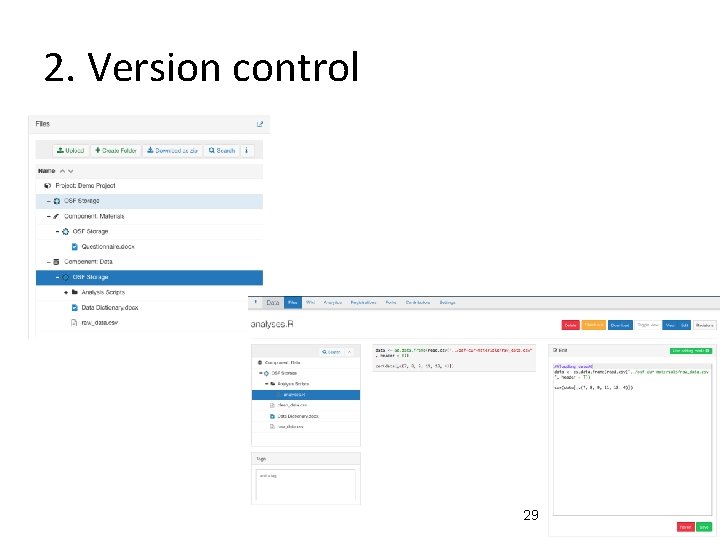

2. Version control

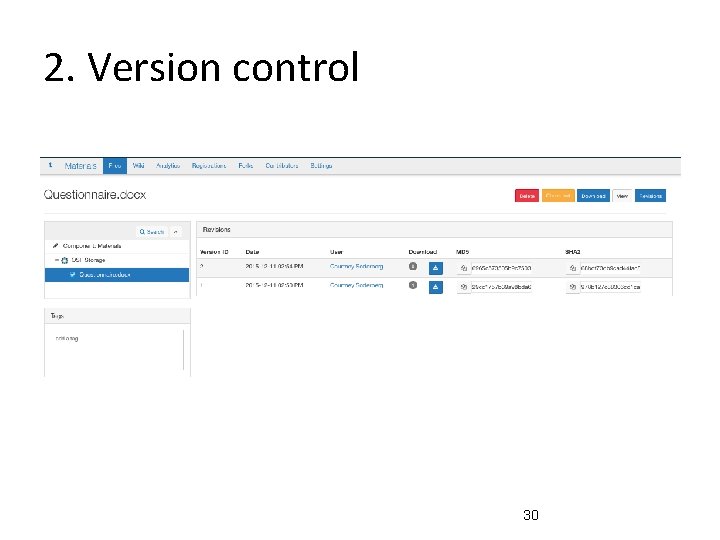

2. Version control

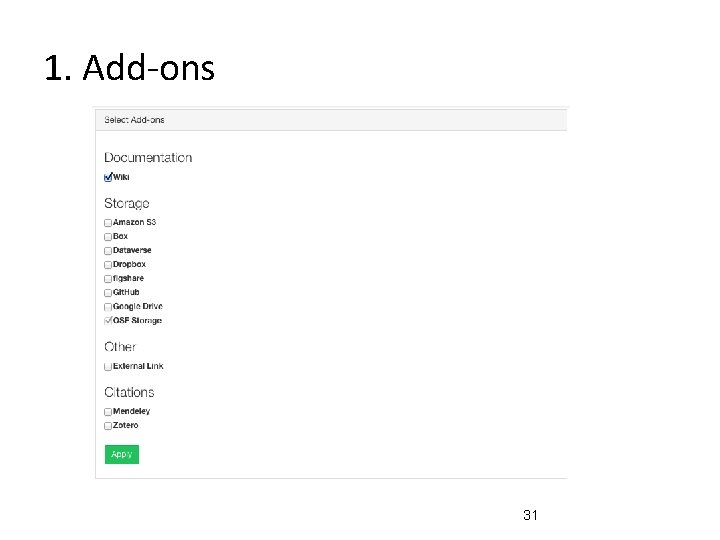

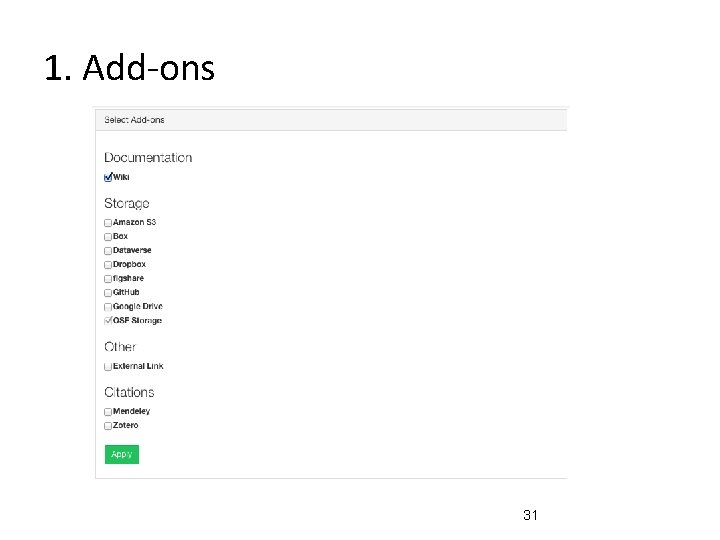

1. Add-ons


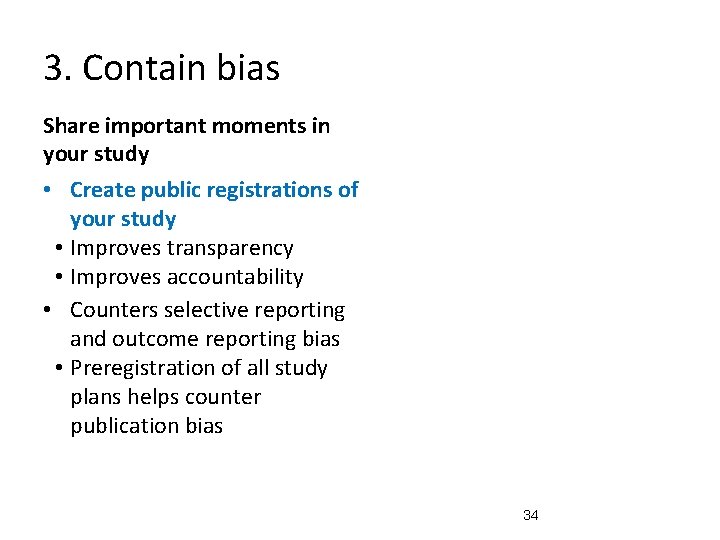

3. Contain bias
Share important moments in your study
• Create public registrations of your study
• Improves transparency
• Improves accountability
• Counters selective reporting and outcome reporting bias
• Preregistration of all study plans helps counter publication bias

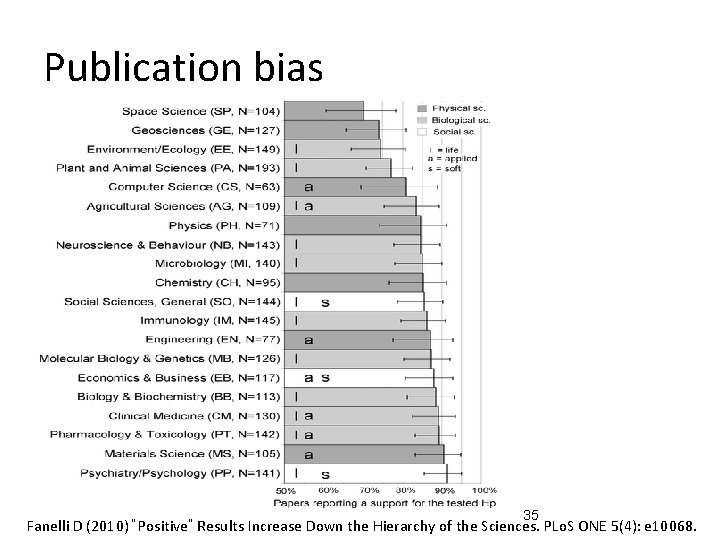

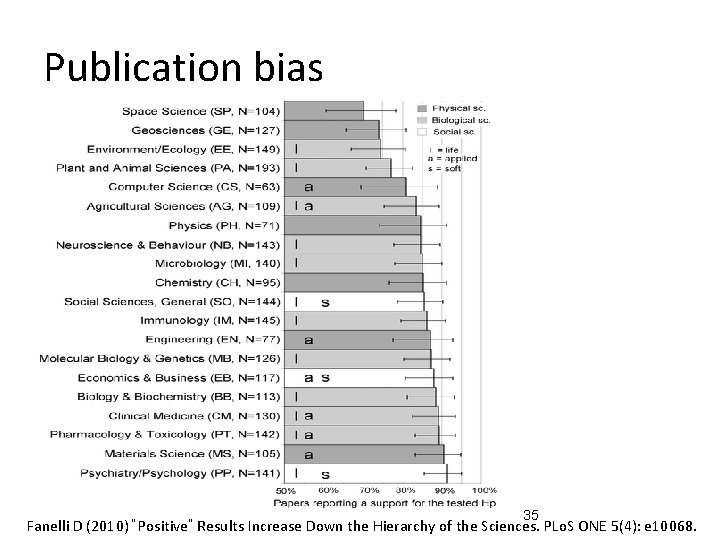

Publication bias
Fanelli D (2010) “Positive” Results Increase Down the Hierarchy of the Sciences. PLoS ONE 5(4): e10068.

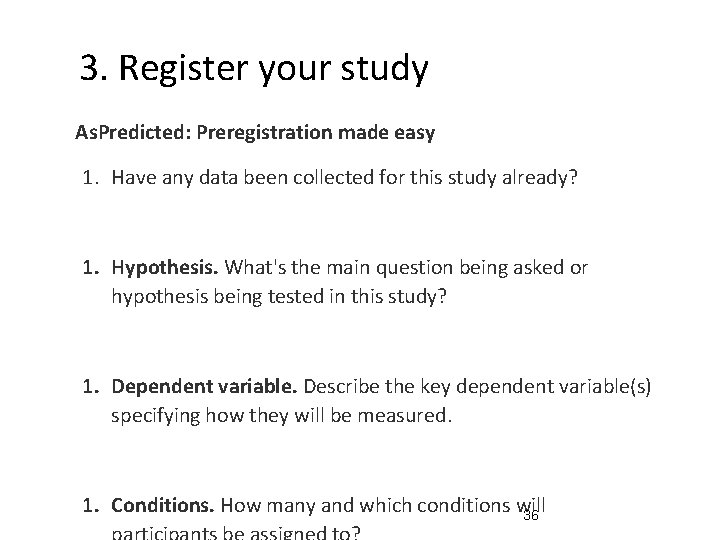

3. Register your study
AsPredicted: Preregistration made easy
1. Have any data been collected for this study already?
2. Hypothesis. What's the main question being asked or hypothesis being tested in this study?
3. Dependent variable. Describe the key dependent variable(s) specifying how they will be measured.
4. Conditions. How many and which conditions will

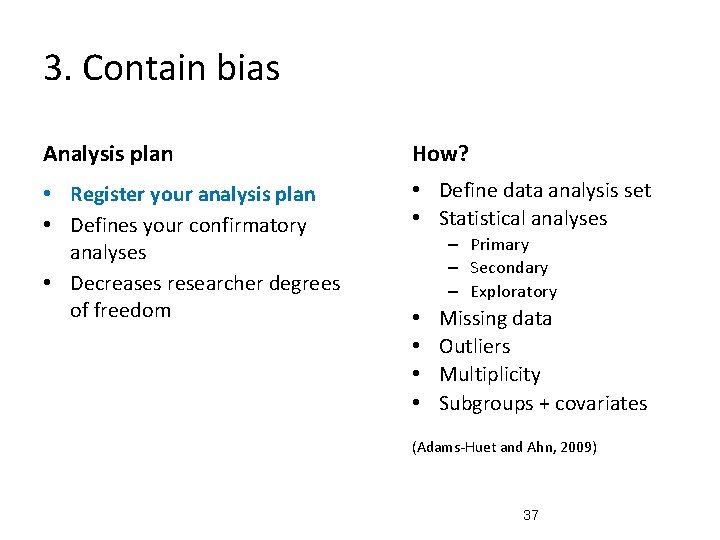

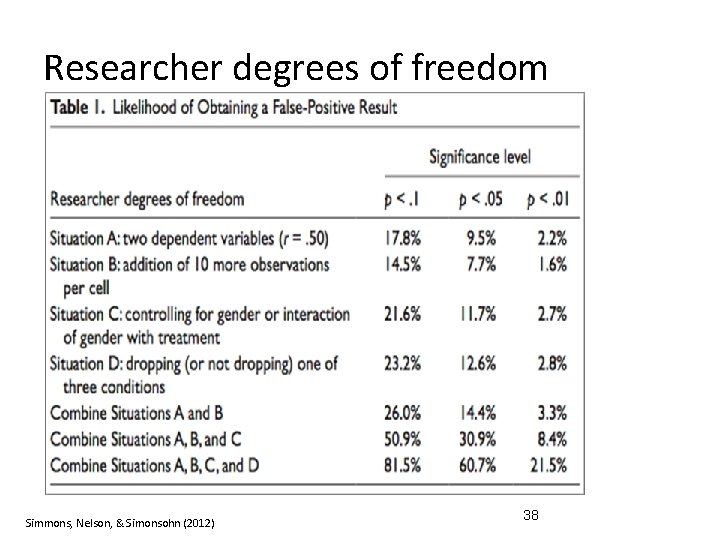

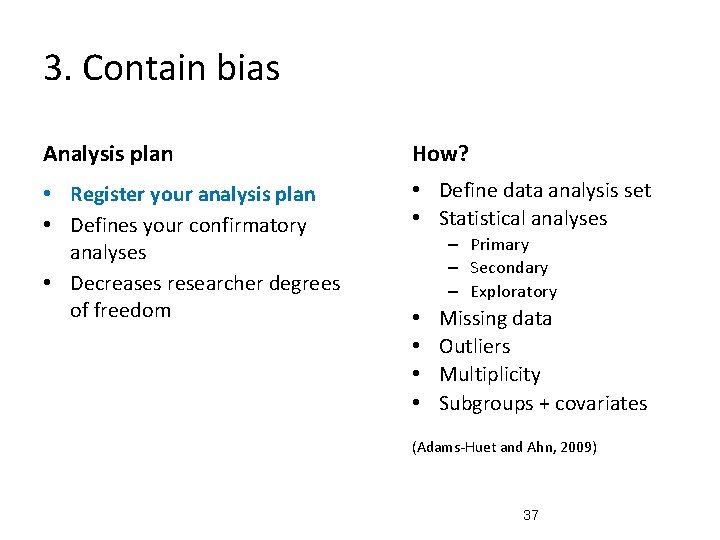

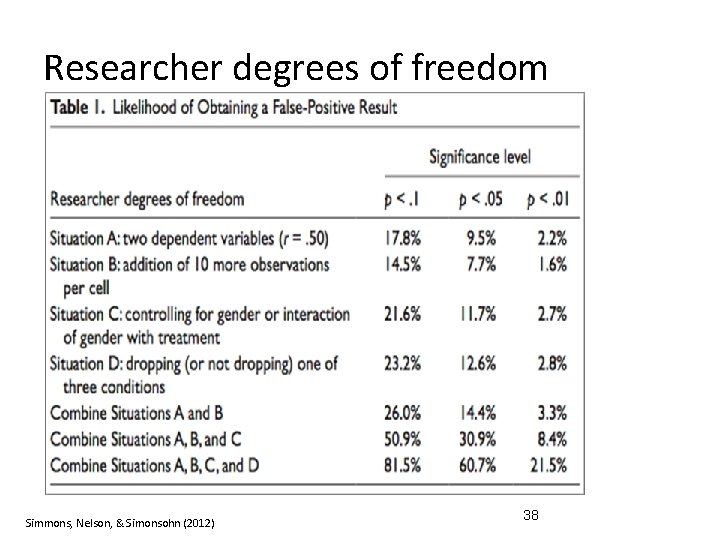

3. Contain bias
Analysis plan
• Register your analysis plan
• Defines your confirmatory analyses
• Decreases researcher degrees of freedom
How?
• Define data analysis set
• Statistical analyses
  – Primary
  – Secondary
  – Exploratory
• Missing data
• Outliers
• Multiplicity
• Subgroups + covariates
(Adams-Huet and Ahn, 2009)
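The "Multiplicity" item of an analysis plan usually means stating in advance how p-values from several confirmatory tests will be adjusted. As one illustration, the sketch below implements the Holm-Bonferroni step-down procedure; the slides do not say which correction to use, so the choice of method and the function name `holm_bonferroni` are assumptions made for the example.

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Return one boolean per p-value: True where the null is rejected.

    Holm's step-down procedure: sort the p-values, compare the k-th smallest
    (k = 0, 1, ...) with alpha / (m - k), and stop at the first failure.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # all larger p-values are also not rejected
    return reject


if __name__ == "__main__":
    # Four pre-registered confirmatory tests (made-up p-values).
    p_values = [0.01, 0.04, 0.03, 0.005]
    for p, rejected in zip(p_values, holm_bonferroni(p_values)):
        print(f"p = {p}: {'reject' if rejected else 'do not reject'}")
```

Naming the correction in the registered plan removes one researcher degree of freedom, because the adjustment can no longer be chosen after seeing the results.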

Researcher degrees of freedom
Simmons, Nelson, & Simonsohn (2012)
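A simple calculation shows why undisclosed flexibility matters. The sketch below assumes a deliberately simplified model that is not taken from the cited paper: a researcher runs several independent tests of a true null hypothesis and reports a finding if any one is significant. The function name `familywise_error_rate` is hypothetical.

```python
def familywise_error_rate(n_tests, alpha=0.05):
    """Chance of at least one false positive across independent null tests.

    Simplifying assumption: the tests are independent, so the probability
    that none is significant is (1 - alpha) ** n_tests.
    """
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return 1.0 - (1.0 - alpha) ** n_tests


if __name__ == "__main__":
    for k in (1, 2, 5, 10):
        rate = familywise_error_rate(k)
        print(f"{k} analyses tried: {rate:.1%} chance of a false positive")
```

Real analysis choices are typically correlated rather than independent, so this is only a rough guide, but it illustrates why fixing the analysis before looking at the data keeps the false-positive rate near the nominal level.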

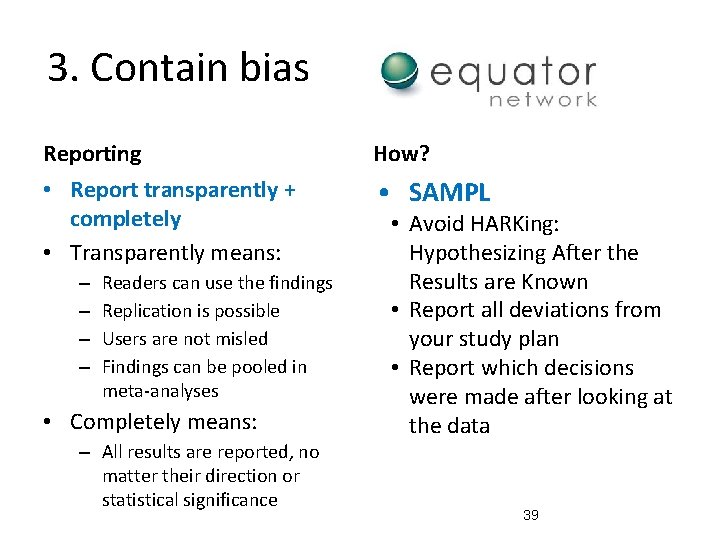

3. Contain bias
Reporting
• Report transparently + completely
• Transparently means:
  – Readers can use the findings
  – Replication is possible
  – Users are not misled
  – Findings can be pooled in meta-analyses
• Completely means:
  – All results are reported, no matter their direction or statistical significance
How?
• SAMPL
• Avoid HARKing: Hypothesizing After the Results are Known
• Report all deviations from your study plan
• Report which decisions were made after looking at the data

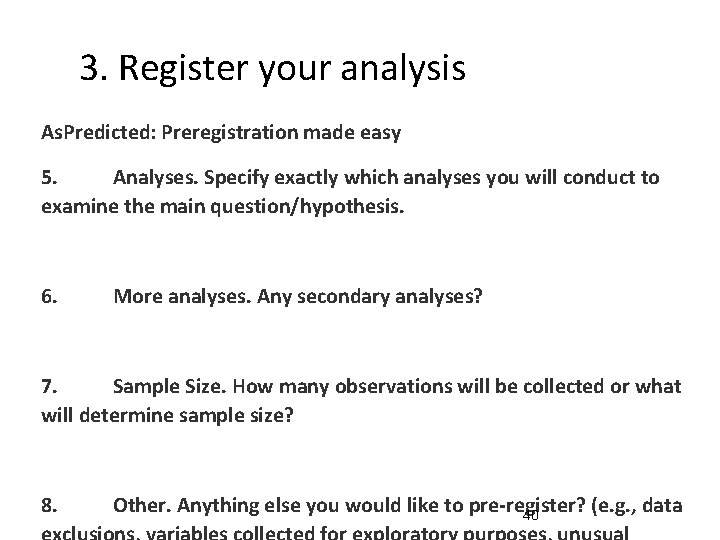

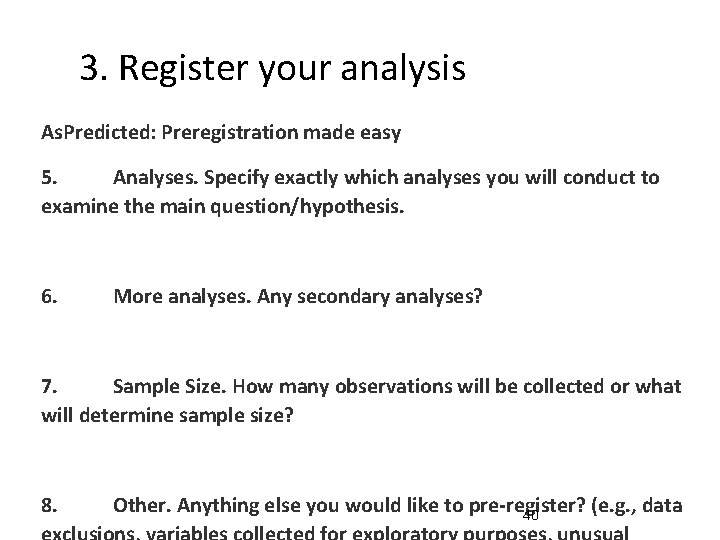

3. Register your analysis
AsPredicted: Preregistration made easy
5. Analyses. Specify exactly which analyses you will conduct to examine the main question/hypothesis.
6. More analyses. Any secondary analyses?
7. Sample Size. How many observations will be collected or what will determine sample size?
8. Other. Anything else you would like to pre-register? (e.g., data

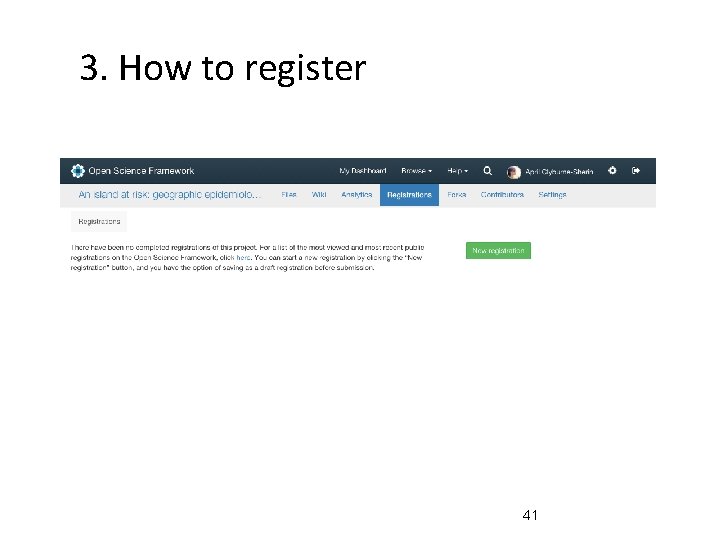

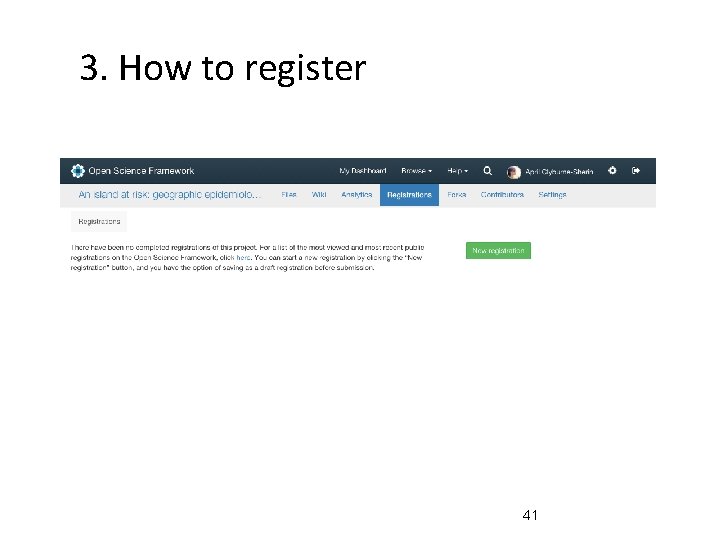

3. How to register

How can you make your research reproducible?
1. Plan for reproducibility before you start
   • Create a study plan
     – Begin documentation at study inception
   • Set up a reproducible project
     – Centralize and organize your project management
2. Keep track of things
   • Documentation
     – Document provenance, your environment, + everything done by hand
   • Version control
     – Track your changes
3. Contain bias
   • Registration
     – Share important moments in your study
   • Reporting
     – Report transparently + completely
4. Archive + share your materials


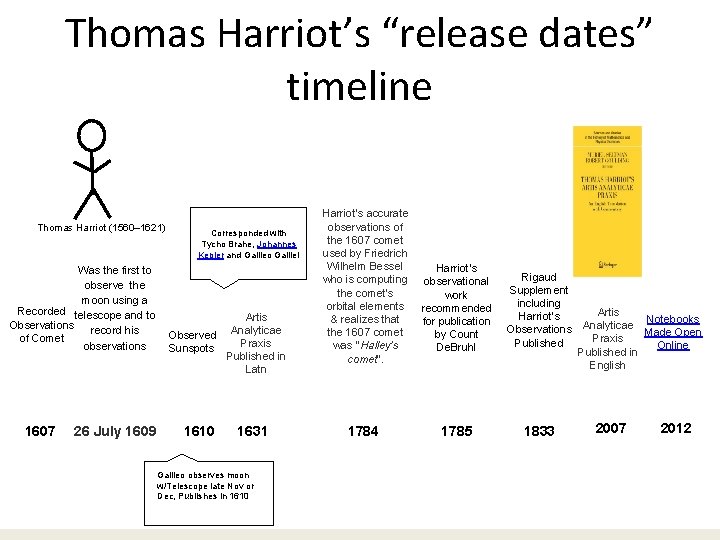

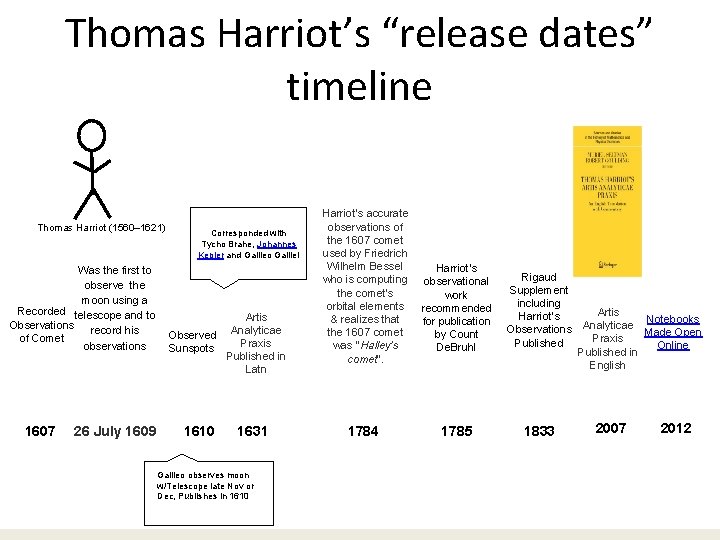

Thomas Harriot’s “release dates” timeline
Thomas Harriot (1560–1621)
• Corresponded with Tycho Brahe, Johannes Kepler and Galileo Galilei
• 1607: Recorded observations of comet
• 26 July 1609: Was the first to observe the moon using a telescope and to record his observations
• Galileo observes moon w/telescope late Nov or Dec, publishes in 1610
• 1610: Observed sunspots
• 1631: Artis Analyticae Praxis published in Latin
• Harriot’s accurate observations of the 1607 comet used by Friedrich Wilhelm Bessel, who is computing the comet's orbital elements & realizes that the 1607 comet was "Halley's comet"
• Harriot’s observational work recommended for publication by Count DeBruhl
• Rigaud Supplement including Harriot’s Observations published
• 2007: Artis Analyticae Praxis published in English
• 2012: Notebooks made open online
Years marked on the timeline: 1607, 1609, 1610, 1631, 1784, 1785, 1833, 2007, 2012

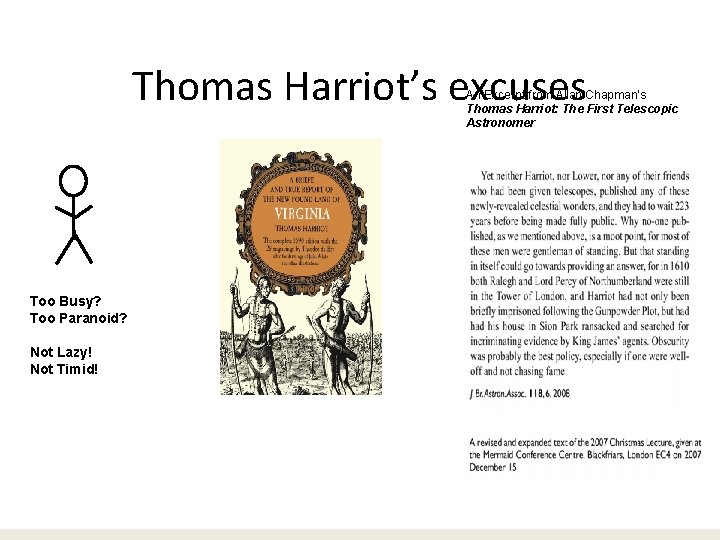

Thomas Harriot’s excuses
An excerpt from Allan Chapman’s Thomas Harriot: The First Telescopic Astronomer
Too Busy? Too Paranoid? Not Lazy! Not Timid!

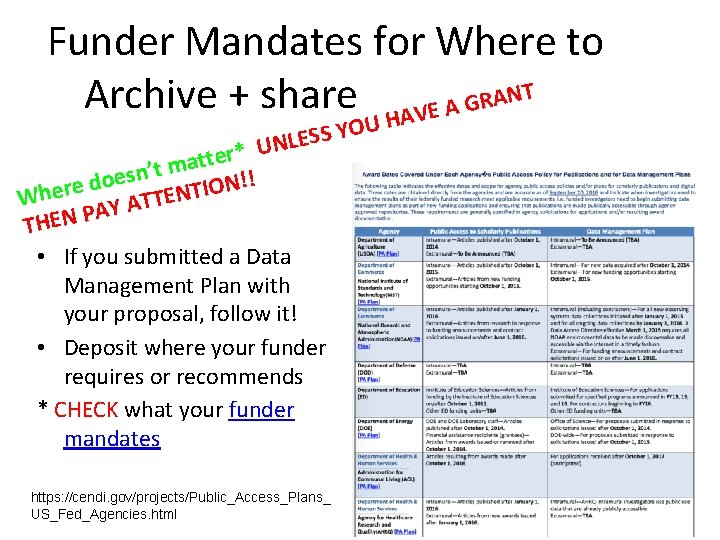

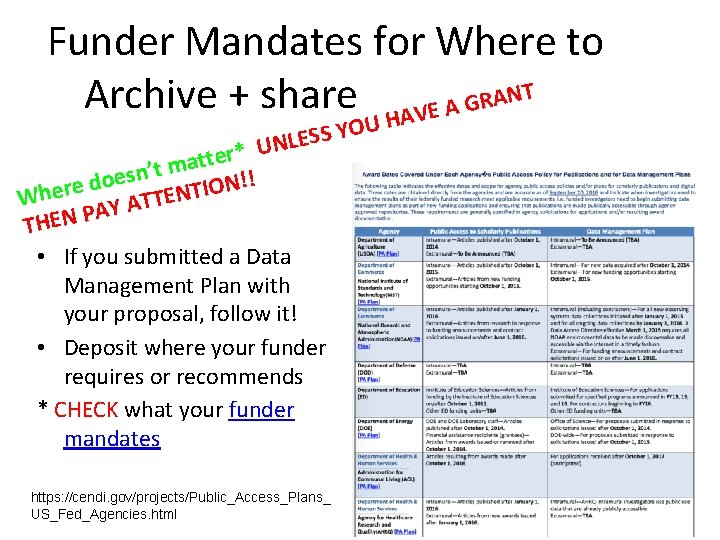

Funder Mandates for Where to Archive + share
Where doesn’t matter* UNLESS YOU HAVE A GRANT, THEN PAY ATTENTION!!
• If you submitted a Data Management Plan with your proposal, follow it!
• Deposit where your funder requires or recommends
*CHECK what your funder mandates
https://cendi.gov/projects/Public_Access_Plans_US_Fed_Agencies.html

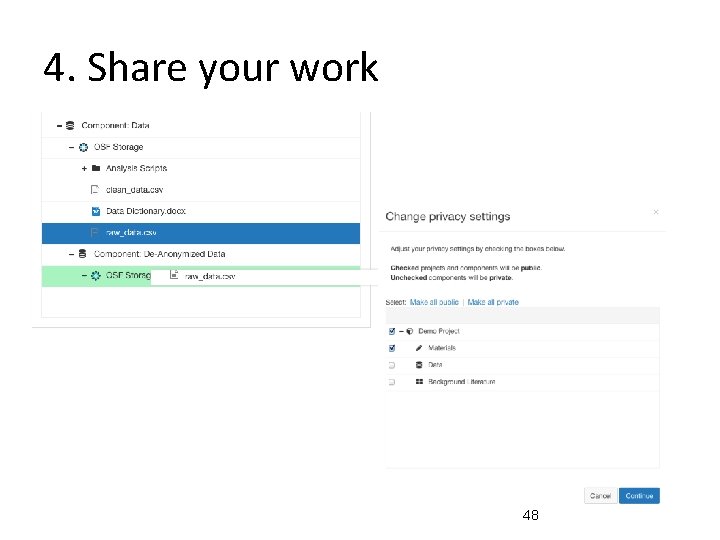

4. Archive + share your materials
Share your materials
• Where doesn’t matter*. That you share matters.
• Get credit for your code, your data, your methods
• Increase the impact of your research
Open Science Framework
*BUT CHECK what your funder mandates

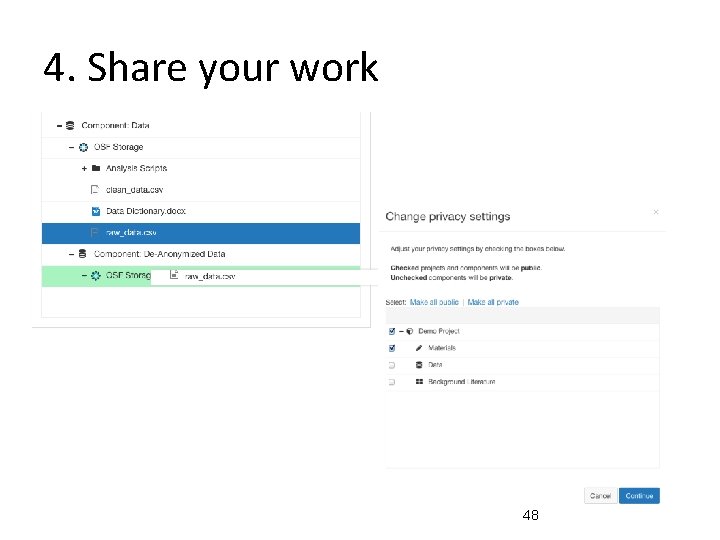

4. Share your work

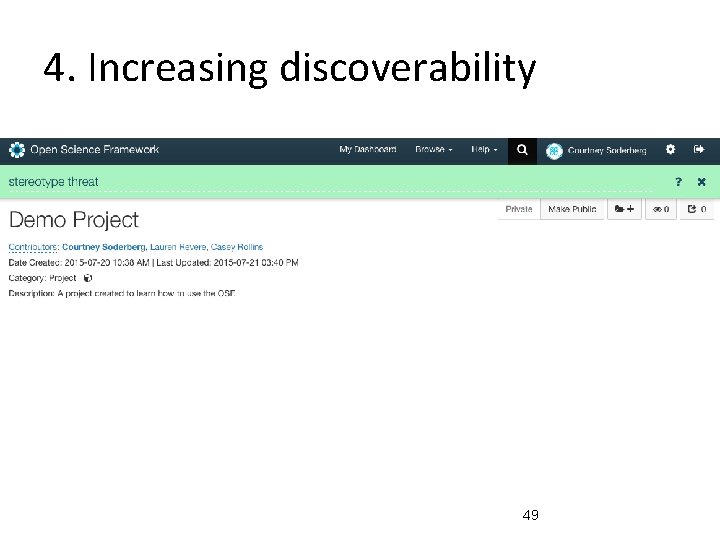

4. Increasing discoverability

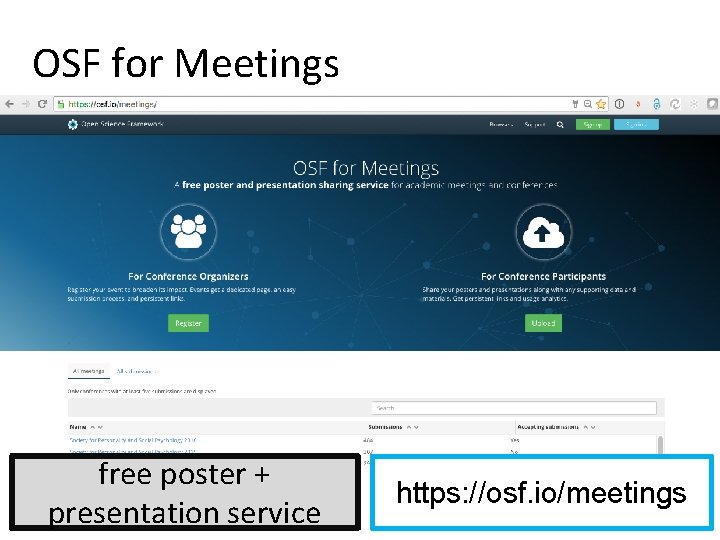

OSF for Meetings
free poster + presentation service
https://osf.io/meetings

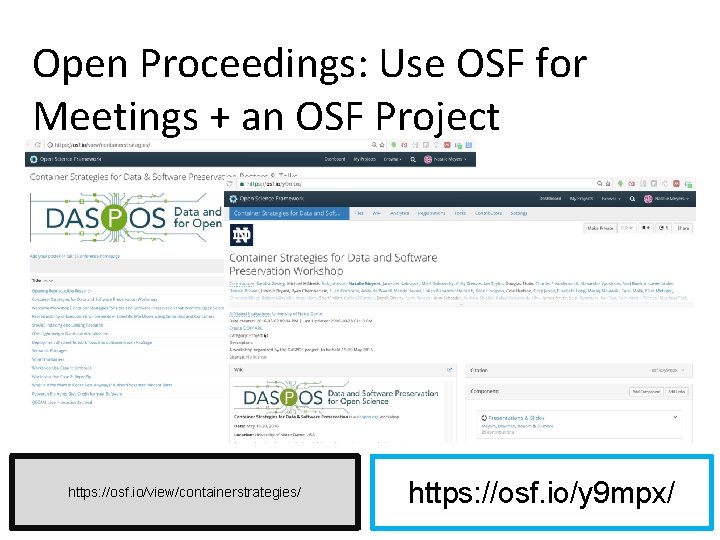

Open Proceedings: Use OSF for Meetings + an OSF Project
https://osf.io/view/containerstrategies/
https://osf.io/y9mpx/

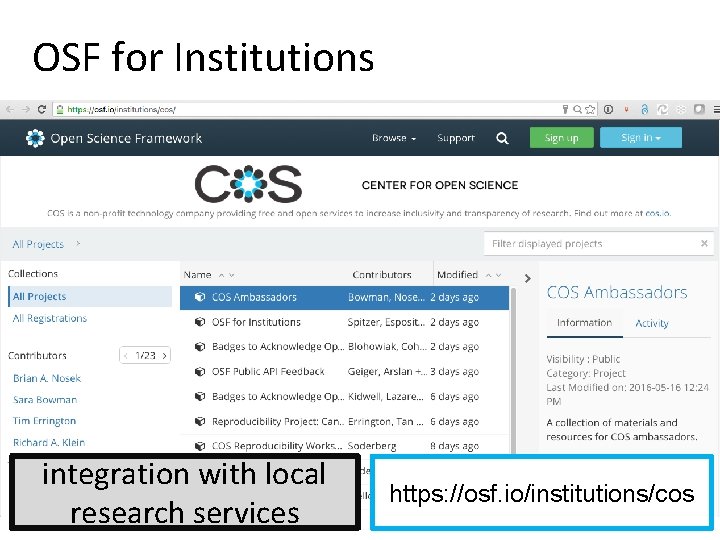

OSF for Institutions
integration with local research services
https://osf.io/institutions/cos

How to learn more
• Reproducible Science Curriculum by Jenny Bryan
  – https://github.com/reproducible-science-curriculum/
• 23 Things Libraries for Research Data
• Practical Steps for Increasing the Openness and Reproducibility of Research Data by Natalie Meyers
• Literate programming
  – Literate Statistical Programming by Roger Peng
  – https://www.youtube.com/watch?v=YcJb1HBc-1Q
• Version control
  – Version Control by Software Carpentry
  – http://softwarecarpentry.org/v4/vc/
• Data management
  – Data Management from Software Carpentry by Orion Buske
  – http://softwarecarpentry.org/v4/data/mgmt.html

Reproducibility training
free stats + methods training
http://cos.io/stats_consulting

Transparency and Openness Promotion (TOP) Guidelines
http://cos.io/top

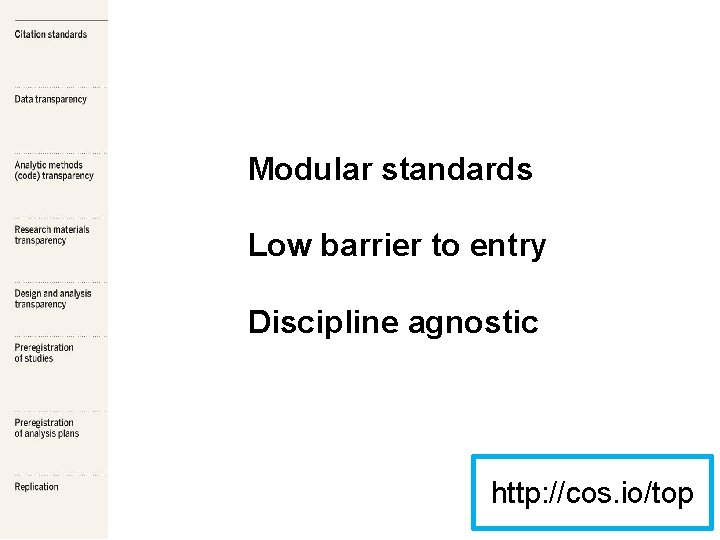

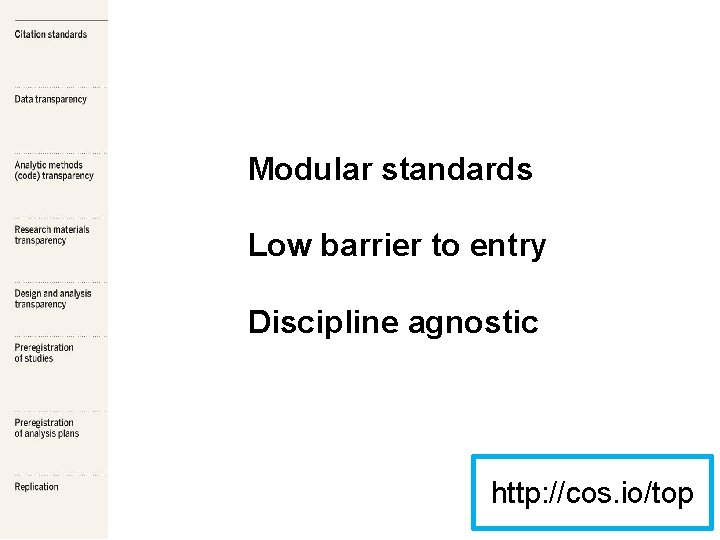

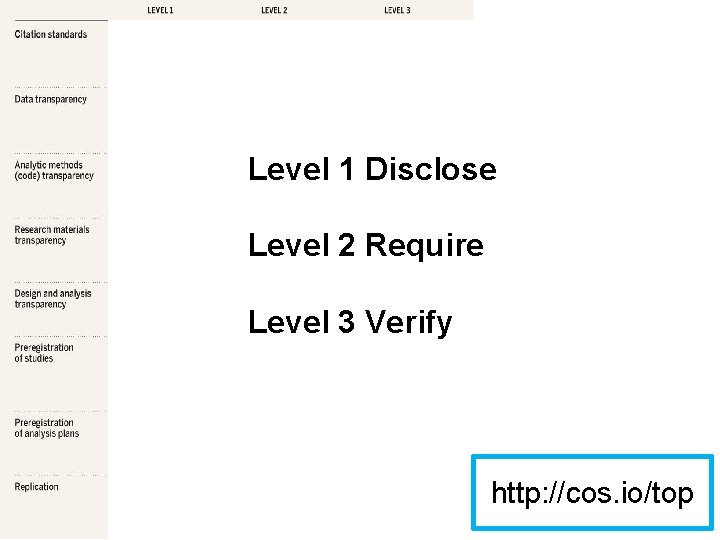

Modular standards
Low barrier to entry
Discipline agnostic
http://cos.io/top

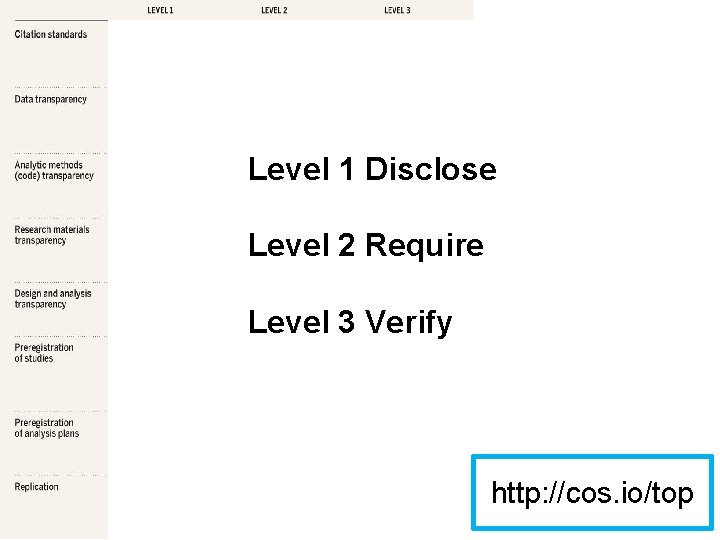

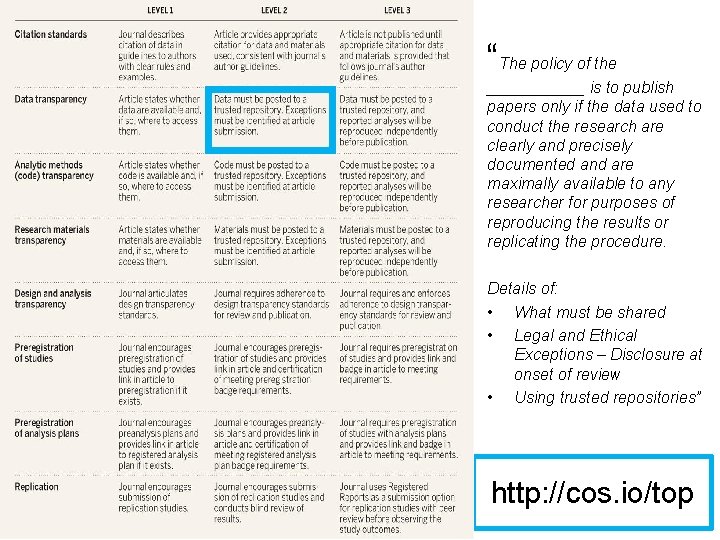

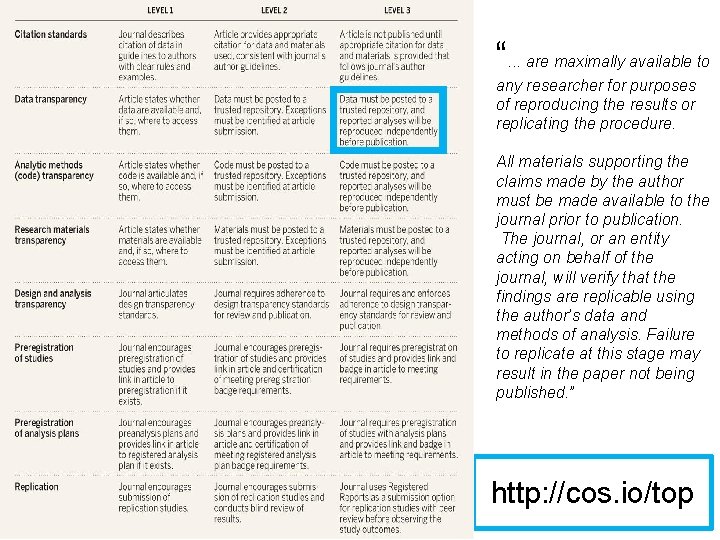

Level 1: Disclose
Level 2: Require
Level 3: Verify
http://cos.io/top

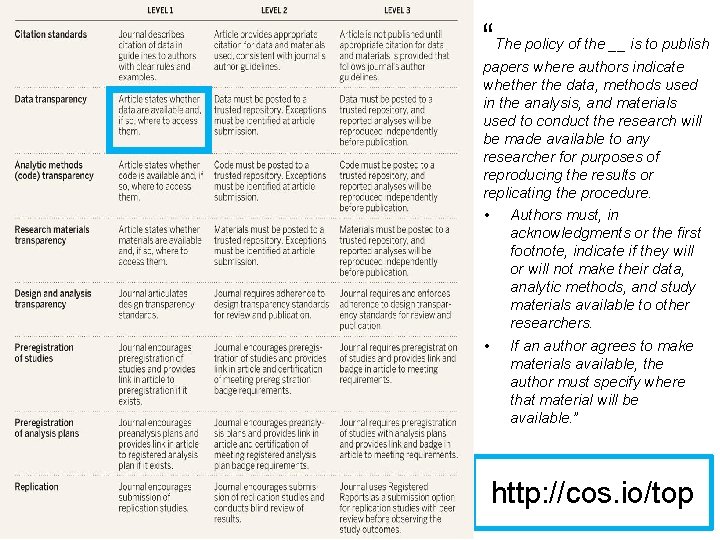

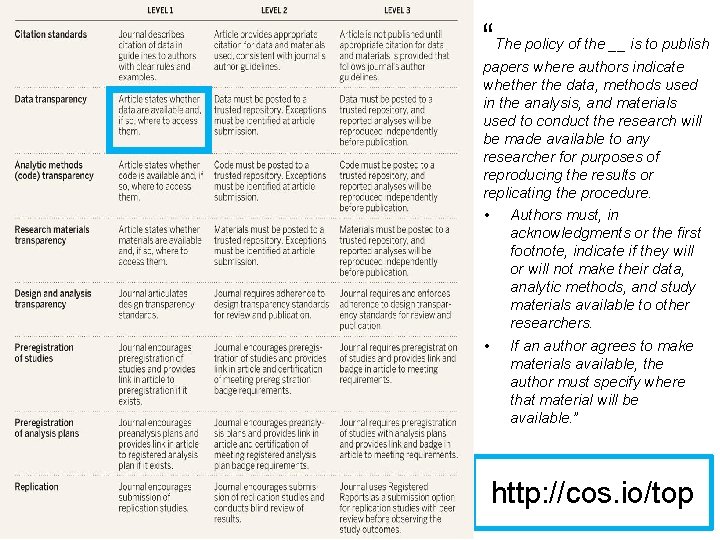

“The policy of the __ is to publish papers where authors indicate whether the data, methods used in the analysis, and materials used to conduct the research will be made available to any researcher for purposes of reproducing the results or replicating the procedure.
• Authors must, in acknowledgments or the first footnote, indicate if they will or will not make their data, analytic methods, and study materials available to other researchers.
• If an author agrees to make materials available, the author must specify where that material will be available.”
http://cos.io/top

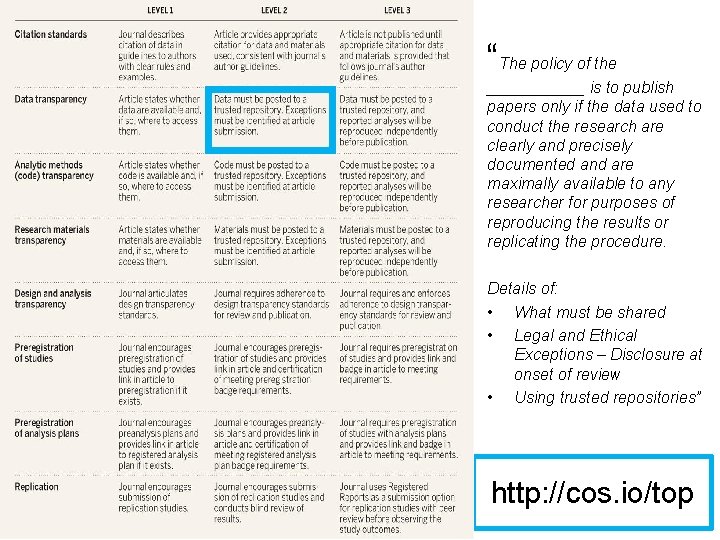

“The policy of the ______ is to publish papers only if the data used to conduct the research are clearly and precisely documented and are maximally available to any researcher for purposes of reproducing the results or replicating the procedure.
Details of:
• What must be shared
• Legal and Ethical Exceptions
  – Disclosure at onset of review
• Using trusted repositories”
http://cos.io/top

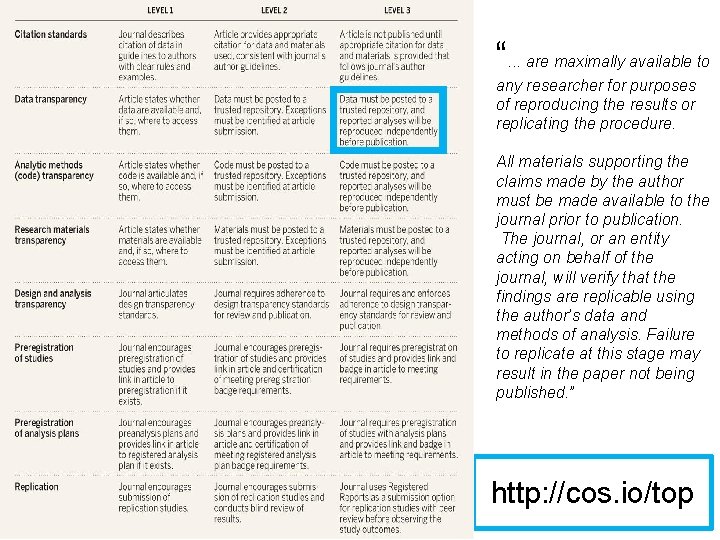

“... are maximally available to any researcher for purposes of reproducing the results or replicating the procedure. All materials supporting the claims made by the author must be made available to the journal prior to publication. The journal, or an entity acting on behalf of the journal, will verify that the findings are replicable using the author’s data and methods of analysis. Failure to replicate at this stage may result in the paper not being published.”
http://cos.io/top

752 Journals
63 Organizations
http://cos.io/top

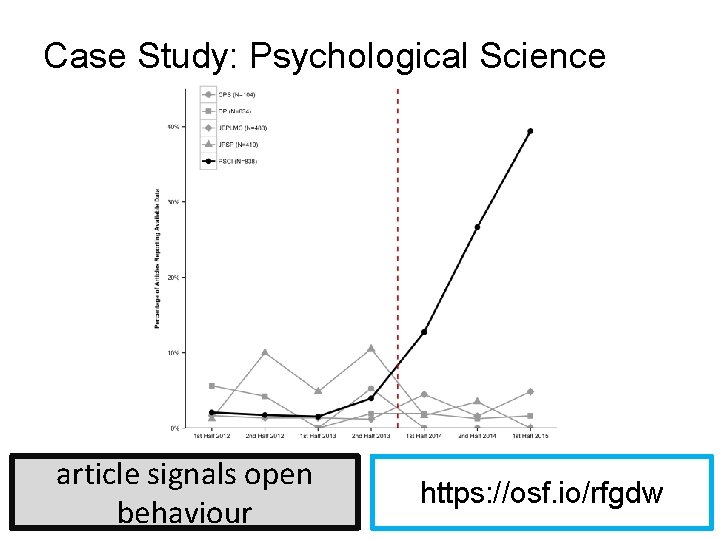

Badges for Open Practices
article signals open behaviour
https://osf.io/rfgdw

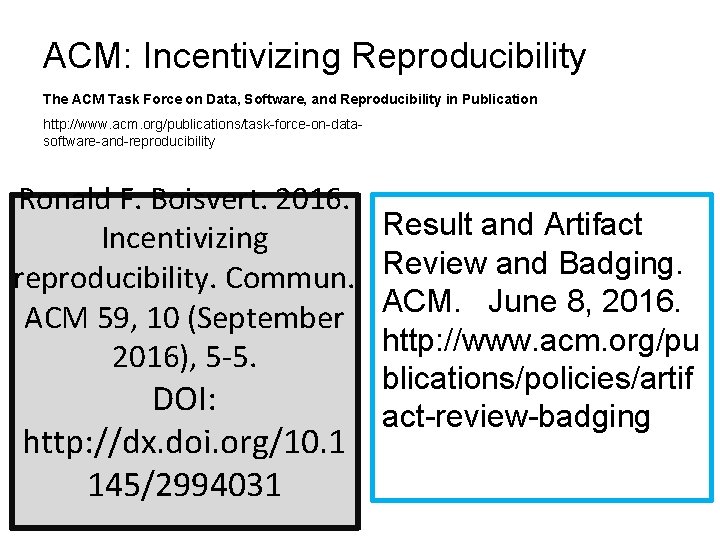

ACM: Incentivizing Reproducibility
• The ACM Task Force on Data, Software, and Reproducibility in Publication. http://www.acm.org/publications/task-force-on-datasoftware-and-reproducibility
• Ronald F. Boisvert. 2016. Incentivizing reproducibility. Commun. ACM 59, 10 (September 2016), 5-5. DOI: http://dx.doi.org/10.1145/2994031
• Result and Artifact Review and Badging. ACM. June 8, 2016. http://www.acm.org/publications/policies/artifact-review-badging

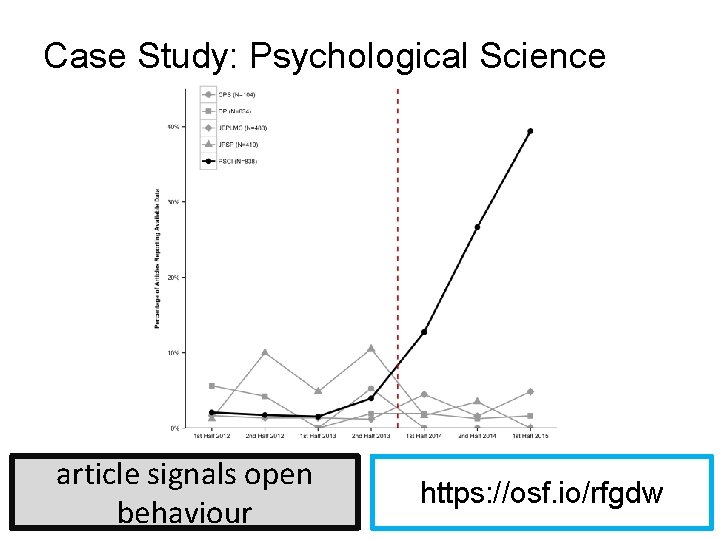

Case Study: Psychological Science
article signals open behaviour
https://osf.io/rfgdw

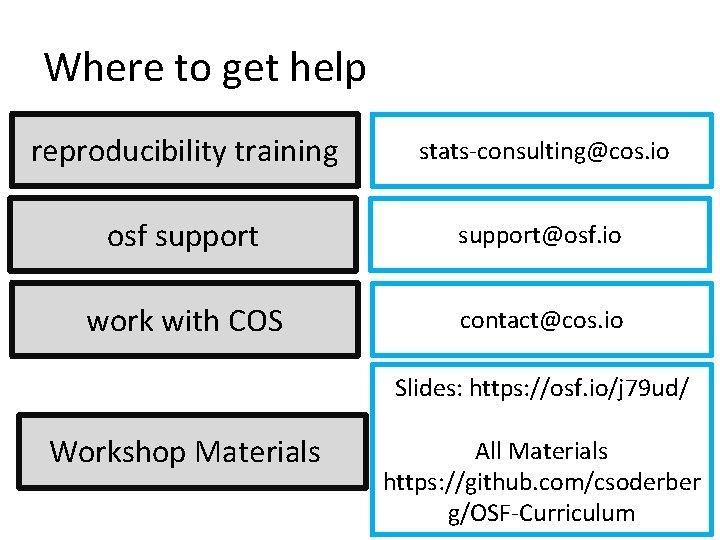

Where to get help
reproducibility training: stats-consulting@cos.io
osf: support@osf.io
work with COS: contact@cos.io
Slides: https://osf.io/j79ud/
Workshop Materials
All Materials: https://github.com/csoderberg/OSF-Curriculum