Workshop 1: PROJECT EVALUATION – Quantification during project evaluation

- Slides: 15

Workshop 1: PROJECT EVALUATION
• Quantification during project evaluation is essential for reducing bias in the decision-making process
  – Cost-benefit analysis (CBA) is a key ingredient when judging projects and deciding on co-financing rates
  – Cost-effectiveness analysis cannot substitute for CBA in all cases, but it can be useful for environment-related projects
• CBA, like any other mechanism, is only one of the criteria on which project selection must be based
• Make sure that projects are sustainable

Workshop 1: PROJECT EVALUATION (cont.)
• Grants never transform a white elephant project into a viable project
• Co-financing rates should be determined according to performance indicators, such as a project's contribution to the real economy and its financial performance
• A "back of the envelope" analysis based on rules of thumb can help identify good or bad projects early on. It is not, however, a substitute for a thorough CBA, in which important elements such as environmental impact play a role.
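To make the "back of the envelope" idea concrete, the sketch below discounts a project's yearly costs and benefits and compares them as a benefit-cost ratio. It is a minimal illustration under stated assumptions, not a method endorsed by the workshop: the function names, the constant discount rate and the project figures are all hypothetical, and it deliberately leaves out elements such as environmental impact that a thorough CBA would include.

```python
# Minimal "back of the envelope" CBA screening sketch.
# Assumptions (not from the workshop conclusions): yearly flows with year 0 first,
# a single constant discount rate, and hypothetical project figures.


def npv(flows, rate):
    """Net present value of yearly flows, discounted at a constant rate."""
    return sum(flow / (1 + rate) ** year for year, flow in enumerate(flows))


def benefit_cost_ratio(benefits, costs, rate):
    """Ratio of discounted benefits to discounted costs."""
    discounted_costs = npv(costs, rate)
    if discounted_costs <= 0:
        raise ValueError("discounted costs must be positive")
    return npv(benefits, rate) / discounted_costs


def screen(benefits, costs, rate):
    """Rule-of-thumb verdict: 'promising' if discounted benefits exceed discounted costs."""
    ratio = benefit_cost_ratio(benefits, costs, rate)
    return ("promising" if ratio > 1 else "doubtful"), ratio


if __name__ == "__main__":
    # Hypothetical project: 100 invested now, 40 of benefits in each of years 1-3.
    costs = [100, 0, 0, 0]
    benefits = [0, 40, 40, 40]
    verdict, ratio = screen(benefits, costs, rate=0.05)
    print(f"{verdict}: B/C ratio = {ratio:.2f}")  # prints "promising: B/C ratio = 1.09"
```

Because the ratio is computed on the project's own costs and benefits, a grant (a transfer from one party to another) does not change it; this is one way to read the point that grants never turn a white elephant into a viable project.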

Workshop 2: Programme Level Evaluation
Achieving a Balance (1)
• Hard rationale for evaluation: efficiency, opportunity costs, market failures (Economic Analysis Skills)
• Securing “buy-in” and instilling an “evaluation mentality” across a wide stakeholder partnership (People Skills)

Workshop 2: Programme Level Evaluation (cont.)
Achieving a Balance (2)
• A context of fast change, “multiplicity and heterogeneity”, with multi-level, multi-actor and multi-object components (People & Institutional Skills)
• Demand for robust, usable common standards, frameworks and devices to control variety and produce communicable results (Technical, Analytical Skills)

Workshop 2: Programme Level Evaluation (cont.)
Achieving a Balance (3)
• Need to create and sustain open programme learning with self-imposed obligations (People and Institutional Skills)
• Space for managers to manage, and to be accountable for spending against consistent measures that are followed through (Management and Financial Control Skills)

Workshop 3: Policy Evaluation
Policies covered by papers:
• Sustainable regional development
• Gender equality
• Environment
• Information society
Is there a thematic evaluation “trap”?
• Need to include an assessment of regional development
• Use and interpretation of indicators
• Judgement
• Aggregation
“Impossible” evaluation questions?
• Need to focus on policy issues

Workshop 4: Usefulness of impact analysis
• Provide an analytical framework for assessing economic impacts
• Design alternative scenarios
• Identify trade-offs for policy decisions

Workshop 4: Usefulness of impact analysis (cont.)
Diversity
• Theoretical framework
• Level of aggregation
• Geographical / sectoral focus
• Top-down vs. bottom-up
• Methods of estimation
• Data requirements

Workshop 4: Usefulness of impact analysis (cont.)
User perspective
• Appropriateness
• Realism
• Accuracy
• Judgement

Workshop 4: Usefulness of impact analysis (cont.)
Challenges
• More interaction between experts and users
• Exploit complementarities between distinct approaches (macro aggregate vs. regional)
• Develop micro-studies as inputs for macro-models
• Promote new approaches in line with the EU policy agenda, e.g. sustainable development

Workshop 5: Capacity building
• Institutional and intellectual dimension
• Involves a wide range of factors
• Issue at the national level
• Needs guidance, support and training
• Needs to be inclusive
• Hindered by gaps
• Benefits from sharing experience
• Needs clarity of purpose?

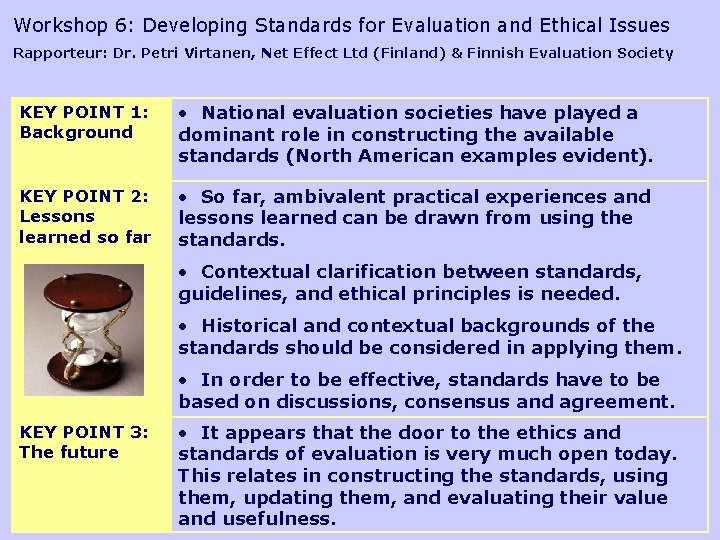

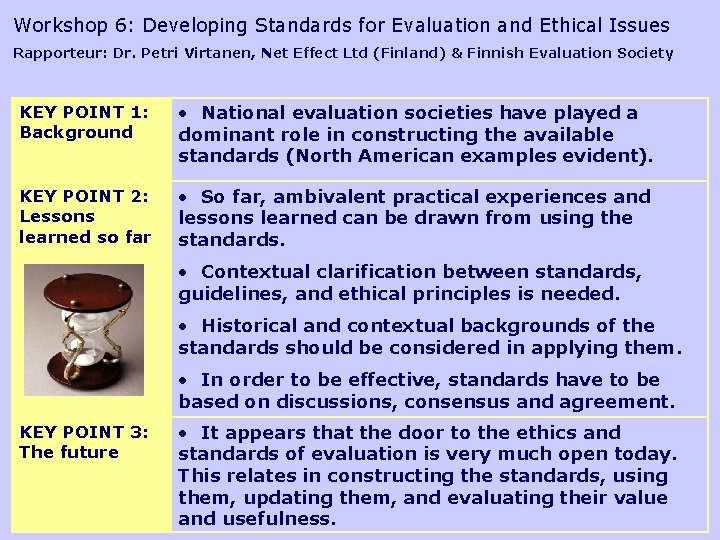

Workshop 6: Developing Standards for Evaluation and Ethical Issues
Rapporteur: Dr. Petri Virtanen, Net Effect Ltd (Finland) & Finnish Evaluation Society
KEY POINT 1: Background
• National evaluation societies have played a dominant role in constructing the available standards (North American examples are prominent).
KEY POINT 2: Lessons learned so far
• Practical experience of using the standards, and the lessons drawn from it, have so far been ambivalent.
• The distinctions between standards, guidelines and ethical principles need to be clarified in context.
• The historical and contextual background of the standards should be considered when applying them.
• To be effective, standards have to be based on discussion, consensus and agreement.
KEY POINT 3: The future
• The door to the ethics and standards of evaluation appears to be very much open today. This applies to constructing the standards, using them, updating them, and evaluating their value and usefulness.

Workshop 7: Learning from and using evaluations
• Evaluation without LEARNING is pointless
• Learning should occur between ALL STAKEHOLDERS
• Learning is MUTUAL
• Learning makes use of SUCCESSES and FAILURES
• Learning includes TACIT knowledge
• Learning should include the MISSING LINKS
• Learning should include the PAST, PRESENT and FUTURE

Workshop 8: Evaluation, Accountability and Performance
• Accountability and learning at all stages of the life cycle and in all types of evaluation
• Ownership of evaluation is based on partnership: Commission, Member State, Region, Local level
• Quantified and qualitative information: need for a better balance
• Democratic accountability and learning: politicians, civil society

Workshop 8: Evaluation, Accountability and Performance (cont.)
• Transparency from the start: dissemination and communication
• Incentives: link to processes
• More focused ex post evaluations: themes, quantitative / qualitative balance