Workload Selection and Characterization Andy Wang CIS 5930

- Slides: 72

Workload Selection and Characterization Andy Wang CIS 5930 Computer Systems Performance Analysis

Workloads • Types of workloads • Workload selection 2

Types of Workloads • • What is a Workload? Instruction Workloads Synthetic Workloads Real-World Benchmarks Application Benchmarks “Standard” Benchmarks Exercisers and Drivers 3

What is a Workload? • Workload: anything a computer is asked to do • Test workload: any workload used to analyze performance • Real workload: any observed during normal operations • Synthetic workload: created for controlled testing 4

Real Workloads • Advantage: represent reality • Disadvantage: uncontrolled – Can’t be repeated – Can’t be described simply – Difficult to analyze • Nevertheless, often useful for “final analysis” papers – E. g. , “We ran system foo and it works well” 5

Synthetic Workloads • Advantages: – Controllable – Repeatable – Portable to other systems – Easily modified • Disadvantage: can never be sure real world will be the same 6

Instruction Workloads • Useful only for CPU performance – But teach useful lessons for other situations • Development over decades – “Typical” instruction (ADD) – Instruction mix (by frequency of use) • Sensitive to compiler, application, architecture • Still used today (GFLOPS) – Processor clock rate • Only valid within processor family 7

Instruction Workloads (cont’d) • Modern complexity makes mixes invalid – Pipelining – Data/instruction caching – Prefetching • Kernel is inner loop that does useful work: – Sieve, matrix inversion, sort, etc. – Ignores setup, I/O, so can be timed by analysis if desired (at least in theory) 8

Synthetic Workloads • Complete programs – Designed specifically for measurement – May do real or “fake” work – May be adjustable (parameterized) • Two major classes: – Benchmarks – Exercisers 9

Real-World Benchmarks • • Pick a representative application Pick sample data Run it on system to be tested Modified Andrew Benchmark, MAB, is a real-world benchmark • Easy to do, accurate for that sample data • Fails to consider other applications, data 10

Application Benchmarks • Variation on real-world benchmarks • Choose most important subset of functions • Write benchmark to test those functions • Tests what computer will be used for • Need to be sure important characteristics aren’t missed • Mix of functions must reflect reality 11

“Standard” Benchmarks • Often need to compare general-purpose computer systems for general-purpose use – E. g. , should I buy a Sony or a Dell PC? – Tougher: Mac or PC? • Desire for an easy, comprehensive answer • People writing articles often need to compare tens of machines 12

“Standard” Benchmarks (cont’d) • Often need to make comparisons over time – Is this year’s CPU faster than last year’s • Probably yes, but by how much? • Don’t want to spend time writing own code – Could be buggy or not representative – Need to compare against other people’s results • “Standard” benchmarks offer solution 13

• • • Popular “Standard” Benchmarks Sieve, 8 queens, etc. Whetstone Linpack Dhrystone Debit/credit TPC SPEC MAB Winstone, webstone, etc. . 14

Sieve, etc. • Prime number sieve (Erastothenes) – Nested for loops – Often such small array that it’s silly • 8 queens – Recursive • Many others • Generally not representative of real problems 15

Whetstone • Dates way back (can compare against 70’s) • Based on real observed frequencies • Entirely synthetic (no useful result) – Modern optimizers may delete code • Mixed data types, but best for floating • Be careful of incomparable variants! 16

LINPACK • Based on real programs and data • Developed by supercomputer users • Great if you’re doing serious numerical computation 17

Dhrystone • Bad pun on “Whetstone” • Motivated by Whetstone’s perceived excessive emphasis on floating point • Very popular in PC world • Again, watch out for version mismatches 18

Debit/Credit Benchmark • Developed for transaction processing environments – CPU processing is usually trivial – Remarkably demanding I/O, scheduling requirements • Models real TPS workloads synthetically • Modern version is TPC benchmark 19

SPEC Suite • Result of multi-manufacturer consortium • Addresses flaws in existing benchmarks • Uses 10 real applications, trying to characterize specific real environments • Considers multiple CPUs • Geometric mean gives SPECmark for system • Becoming standard comparison method 20

Modified Andrew Benchmark • Used in research to compare file system, operating system designs • Based on software engineering workload • Exercises copying, compiling, linking • Probably ill-designed, but common use makes it important • Needs scaling up for modern systems 21

Winstone, Webstone, etc. • “Stone” has become suffix meaning “benchmark” • Many specialized suites to test specialized applications – Too many to review here • Important to understand strengths & drawbacks – Bias toward certain workloads – Assumptions about system under test 22

File. Bench • File system and storage benchmark – Can emulate different workload patterns • Mail, web server, file server, and database serevers – Multi-threaded 23

YCSB Benchmarks • Yahoo Cloud Serving Benchmarks • Released in 2010 • Compare key-value and cloud data serving systems 24

Exercisers and Drivers • For I/O, network, non-CPU measurements • Generate a workload, feed to internal or external measured system – I/O on local OS – Network • Sometimes uses dedicated system, interface hardware 25

Advantages of Exercisers • Easy to develop, port • Can incorporate measurement • Easy to parameterize, adjust 26

Disadvantages of Exercisers • High cost if external • Often too small compared to real workloads – Thus not representative – E. g. , may use caches “incorrectly” • Internal exercisers often don’t have real CPU activity – Affects overlap of CPU and I/O • Synchronization effects caused by loops 27

Workload Selection • Services exercised • Completeness – Sample service characterization • • Level of detail Representativeness Timeliness Other considerations 28

Services Exercised • What services does system actually use? – Network performance useless for matrix work • What metrics measure these services? – MIPS/GIPS for CPU speed – Bandwidth/latency for network, I/O – TPS for transaction processing 29

Completeness • Computer systems are complex – Effect of interactions hard to predict • Dynamic voltage scaling can speed up heavy loads (e. g. , accessing encrypted files) – So must be sure to test entire system • Important to understand balance between components – I. e. , don’t use 90% CPU mix to evaluate I/O -bound application 30

Component Testing • Sometimes only individual components are compared – Would a new CPU speed up our system? – How does IPV 6 affect Web server performance? • But component may not be directly related to performance – So be careful, do ANOVA (analysis of variance), don’t extrapolate too much 31

Service Testing • May be possible to isolate interfaces to just one component – E. g. , instruction mix for CPU • Consider services provided and used by that component • System often has layers of services – Can cut at any point and insert workload 32

Characterizing a Service • Identify service provided by major subsystem • List factors affecting performance • List metrics that quantify demands and performance • Identify workload provided to that service 33

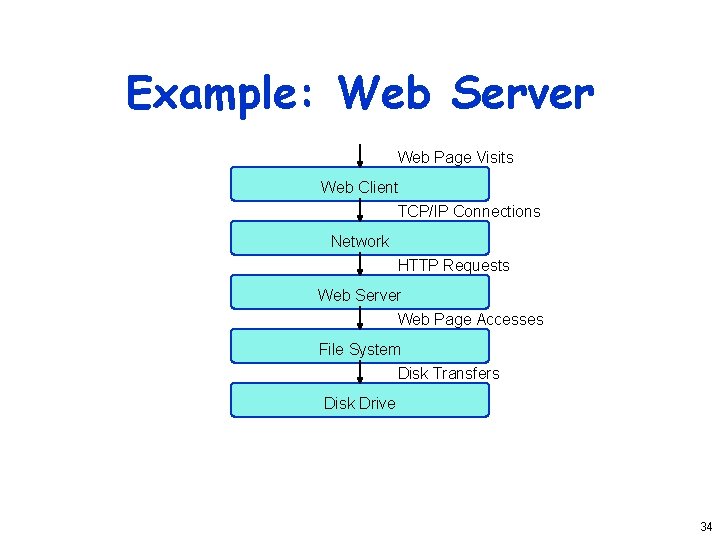

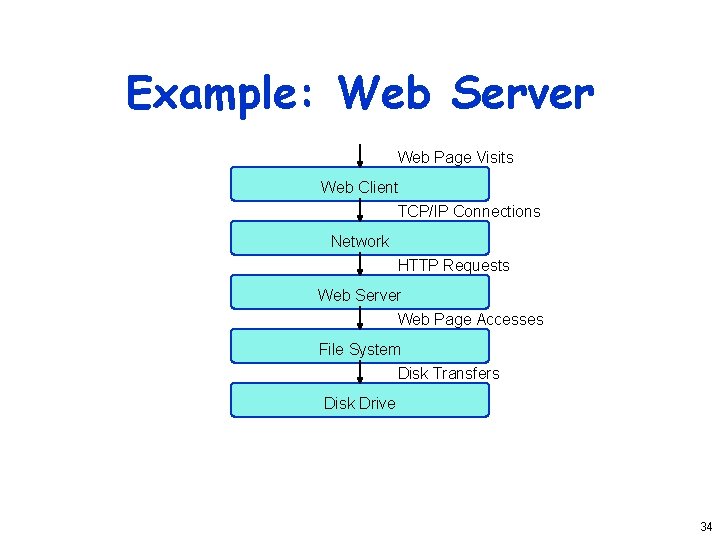

Example: Web Server Web Page Visits Web Client TCP/IP Connections Network HTTP Requests Web Server Web Page Accesses File System Disk Transfers Disk Drive 34

Web Client Analysis • Services: visit page, follow hyperlink, display page information • Factors: page size, number of links, fonts required, embedded graphics, sound • Metrics: reaction time, turnaround time • Workload: a list of pages to be visited and links to be followed 35

Network Analysis • Services: connect to server, transmit request, transfer data • Factors: packet size, protocol used • Metrics: connection setup time, response latency, achieved bandwidth • Workload: a series of connections to one or more servers, with data transfer 36

Web Server Analysis • Services: accept and validate connection, fetch & send HTTP data • Factors: Network performance, CPU speed, system load, disk subsystem performance • Metrics: response time, connections served • Workload: a stream of incoming HTTP connections and requests 37

File System Analysis • Services: open file, read file (writing often doesn’t matter for Web server) • Factors: disk drive characteristics, file system software, cache size, partition size • Metrics: response time, transfer rate • Workload: a series of file-transfer requests 38

Disk Drive Analysis • • Services: read sector, write sector Factors: seek time, transfer rate Metrics: response time Workload: a statistically-generated stream of read/write requests 39

Level of Detail • Detail trades off accuracy vs. cost • Highest detail is complete trace • Lowest is one request, usually most common • Intermediate approach: weight by frequency • We will return to this when we discuss workload characterization 40

Representativeness • Obviously, workload should represent desired application – Arrival rate of requests – Resource demands of each request – Resource usage profile of workload over time • Again, accuracy and cost trade off • Need to understand whether detail matters 41

Timeliness • Usage patterns change over time – File size grows to match disk size – Web pages grow to match network bandwidth • If using “old” workloads, must be sure user behavior hasn’t changed • Even worse, behavior may change after test, as result of installing new system – “Latent demand” phenomenon 42

Other Considerations • Loading levels – Full capacity – Beyond capacity – Actual usage • External components not considered as parameters • Repeatability of workload 43

Workload Characterization • • Terminology Averaging Specifying dispersion Single-parameter histograms Multi-parameter histograms Principal-component analysis Markov models Clustering 44

Workload Characterization Terminology • User (maybe nonhuman) requests service – Also called workload component or workload unit • Workload parameters or workload features model or characterize the workload 45

Selecting Workload Components • Most important: components should be external: at interface of SUT (system under test) • Components should be homogeneous • Should characterize activities of interest to the study 46

Choosing Workload Parameters • Select parameters that depend only on workload (not on SUT) • Prefer controllable parameters • Omit parameters that have no effect on system, even if important in real world 47

Averaging • Basic character of a parameter is its average value • Not just arithmetic mean • Good for uniform distributions or gross studies 48

Specifying Dispersion • Most parameters are non-uniform • Specifying variance or standard deviation brings major improvement over average • Average and s. d. (or C. O. V. ) together allow workloads to be grouped into classes – Still ignores exact distribution 49

Single-Parameter Histograms • Make histogram or kernel density estimate • Fit probability distribution to shape of histogram • Chapter 27 (not covered in course) lists many useful shapes • Ignores multiple-parameter correlations 50

Multi-Parameter Histograms • Use 3 -D plotting package to show 2 parameters – Or plot each datum as 2 -D point and look for “black spots” • Shows correlations – Allows identification of important parameters • Not practical for 3 or more parameters 51

Principal-Component Analysis (PCA) • How to analyze more than 2 parameters? • Could plot endless pairs – Still might not show complex relationships • Principal-component analysis solves problem mathematically – Rotates parameter set to align with axes – Sorts axes by importance 52

Advantages of PCA • • Handles more than two parameters Insensitive to scale of original data Detects dispersion Combines correlated parameters into single variable • Identifies variables by importance 53

Disadvantages of PCA • Tedious computation (if no software) • Still requires hand analysis of final plotted results • Often difficult to relate results back to original parameters 54

Markov Models • • Sometimes, distribution isn’t enough Requests come in sequences Sequencing affects performance Example: disk bottleneck – Suppose jobs need 1 disk access per CPU slice – CPU slice is much faster than disk – Strict alternation uses CPU better – Long disk access strings slow system 55

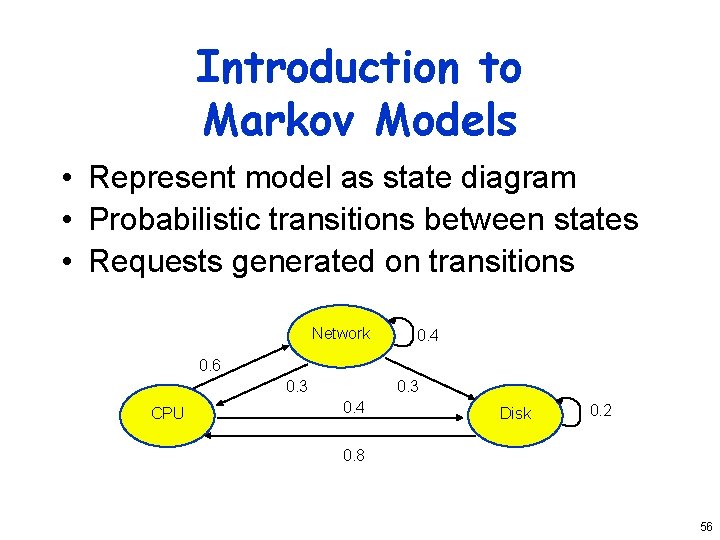

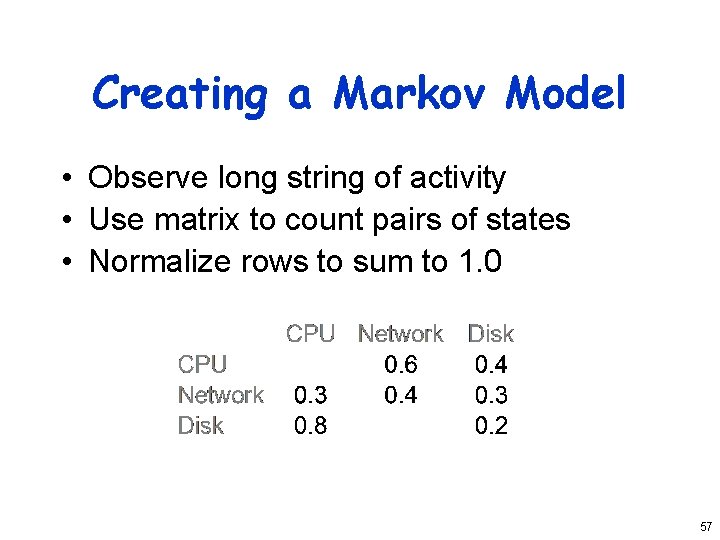

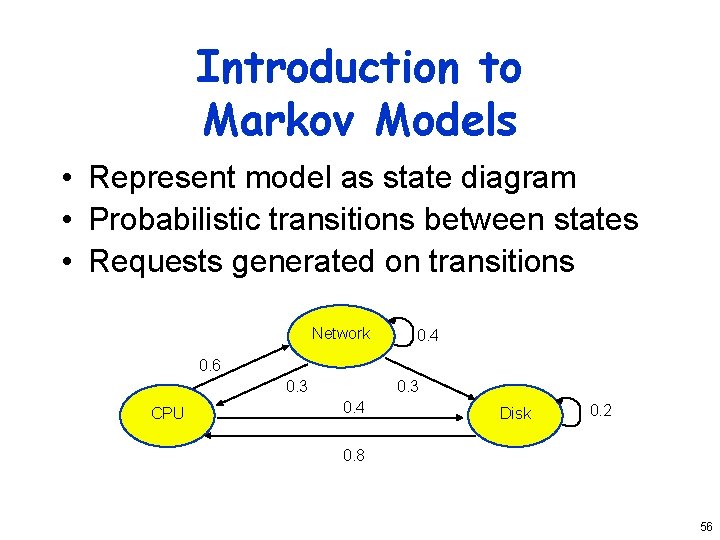

Introduction to Markov Models • Represent model as state diagram • Probabilistic transitions between states • Requests generated on transitions Network 0. 4 0. 6 0. 3 CPU 0. 3 0. 4 Disk 0. 2 0. 8 56

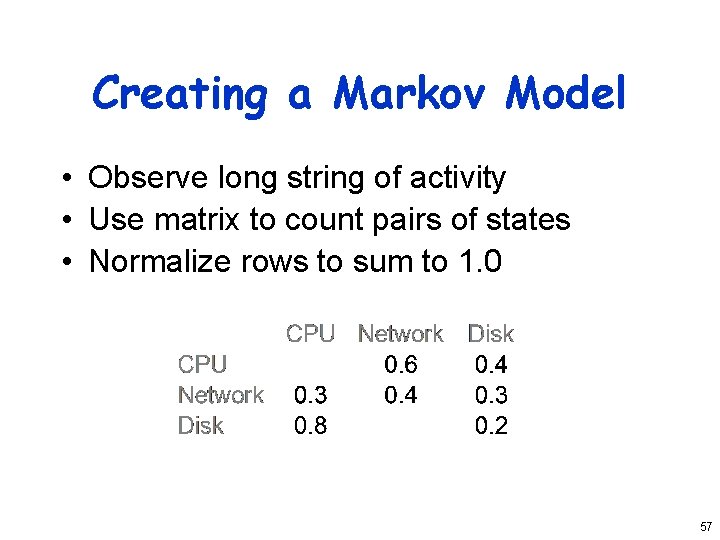

Creating a Markov Model • Observe long string of activity • Use matrix to count pairs of states • Normalize rows to sum to 1. 0 57

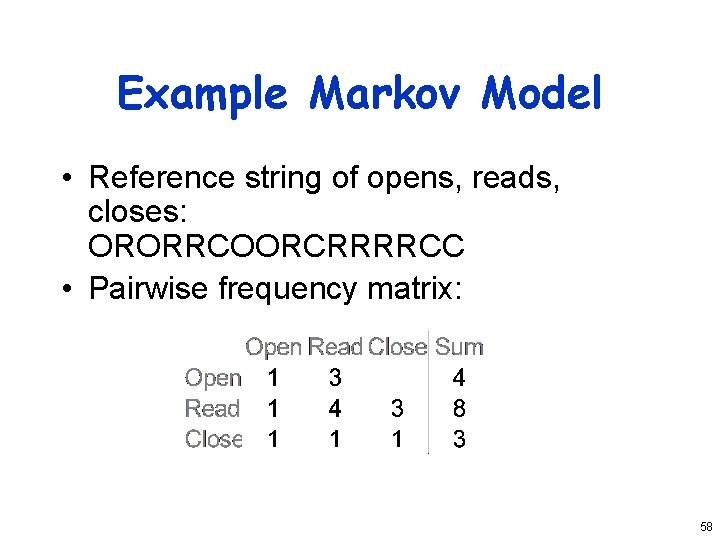

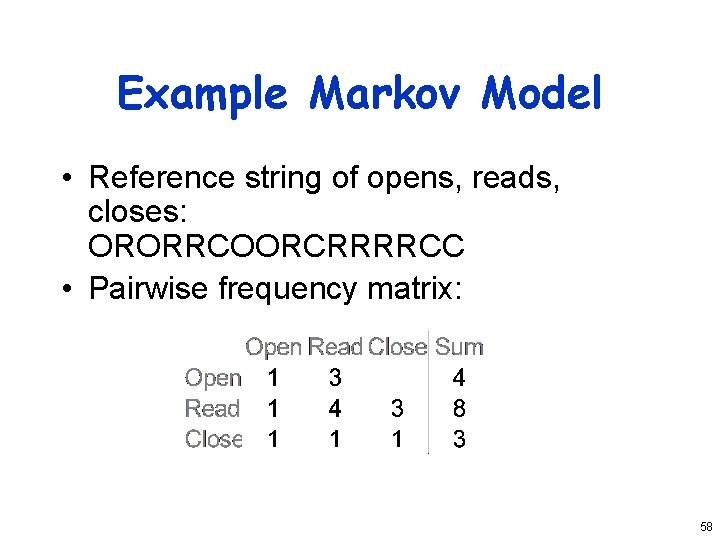

Example Markov Model • Reference string of opens, reads, closes: ORORRCOORCRRRRCC • Pairwise frequency matrix: 58

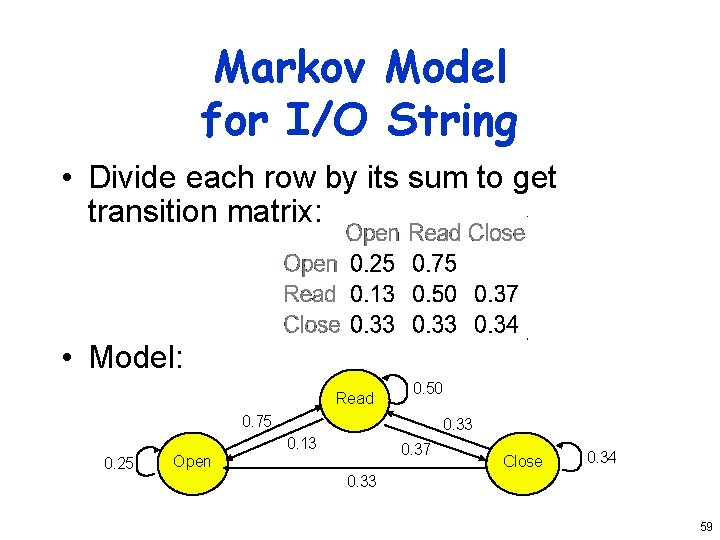

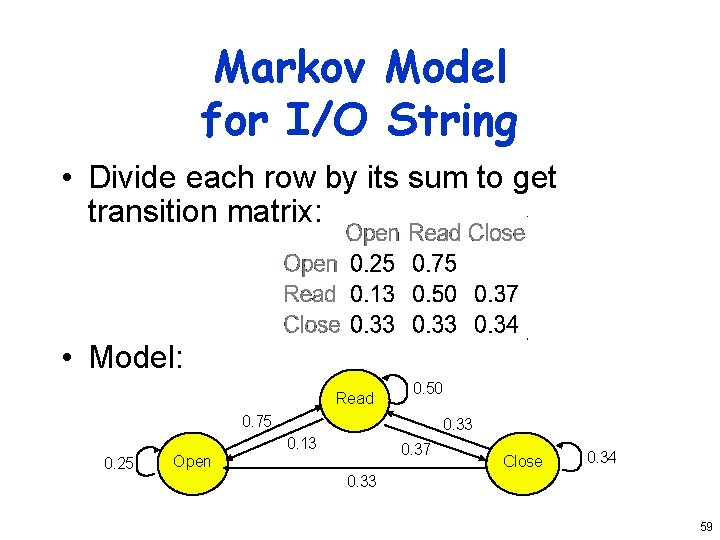

Markov Model for I/O String • Divide each row by its sum to get transition matrix: • Model: Read 0. 50 0. 75 0. 25 Open 0. 33 0. 13 0. 37 Close 0. 34 0. 33 59

Clustering • Often useful to break workload into categories • “Canonical example” of each category can be used to represent all samples • If many samples, generating categories is difficult • Solution: clustering algorithms 60

Steps in Clustering • • Select sample Choose and transform parameters Drop outliers Scale observations Choose distance measure Do clustering Use results to adjust parameters, repeat Choose representative components 61

Selecting A Sample • Clustering algorithms are often slow – Must use subset of all observations • Can test sample after clustering: does every observation fit into some cluster? • Sampling options – Random – Heaviest users of component under study 62

Choosing and Transforming Parameters • Goal is to limit complexity of problem • Concentrate on parameters with high impact, high variance – Use principal-component analysis – Drop a parameter, re-cluster, see if different • Consider transformations such as Sec. 15. 4 (logarithms, etc. ) 63

Dropping Outliers • Must get rid of observations that would skew results – Need great judgment here – No firm guidelines • Drop things that you know are “unusual” • Keep things that consume major resources – E. g. , daily backups 64

Scale Observations • Cluster analysis is often sensitive to parameter ranges, so scaling affects results • Options: – Scale to zero mean and unit variance – Weight based on importance or variance – Normalize range to [0, 1] – Normalize 95% of data to [0, 1] 65

Choosing a Distance Measure • Endless possibilities available • Represent observations as vectors in kspace • Popular measures include: – Euclidean distance, weighted or unweighted – Chi-squared distance – Rectangular distance 66

Clustering Methods • • Many algorithms available Computationally expensive (NP hard) Can be simple or hierarchical Many require you to specify number of desired clusters • Minimum Spanning Tree is not only option! 67

Minimum Spanning Tree Clustering • Start with each point in a cluster • Repeat until single cluster: – Compute centroid of each cluster – Compute intercluster distances – Find smallest distance – Merge clusters with smallest distance • Method produces stable results – But not necessarily optimum 68

K-Means Clustering • • One of most popular methods Number of clusters is input parameter First randomly assign points to clusters Repeat until no change: – Calculate center of each cluster: – Assign each point to cluster with nearest center 69

Interpreting Clusters • Art, not science • Drop small clusters (if little impact on performance) • Try to find meaningful characterizations • Choose representative components – Number proportional to cluster size or to total resource demands 70

Drawbacks of Clustering • Clustering is basically AI problem • Humans will often see patterns where computer sees none • Result is extremely sensitive to: – Choice of algorithm – Parameters of algorithm – Minor variations in points clustered • Results may not have functional meaning 71

White Slide