Workload Design: Selecting Representative Program-Input Pairs

Lieven Eeckhout, Hans Vandierendonck, Koen De Bosschere

Ghent University, Belgium

PACT 2002, September 23, 2002

Introduction

- Microprocessor design: simulation of a workload = set of programs + inputs
  - constrained in size due to time limitation
  - taken from suites, e.g., SPEC, TPC, MediaBench
- Workload design:
  - which programs?
  - which inputs?
  - representative: large variation in behavior
  - benchmark-input pairs should be “different”

Main idea

- Workload design space is a p-D space
  - with p = # relevant program characteristics
  - p is too large for understandable visualization
  - correlation between the p characteristics
- Idea: reduce the p-D space to a q-D space
  - with q small (typically 2 to 4)
  - without losing important information
  - no correlation
- Achieved by multivariate data analysis techniques: PCA and cluster analysis

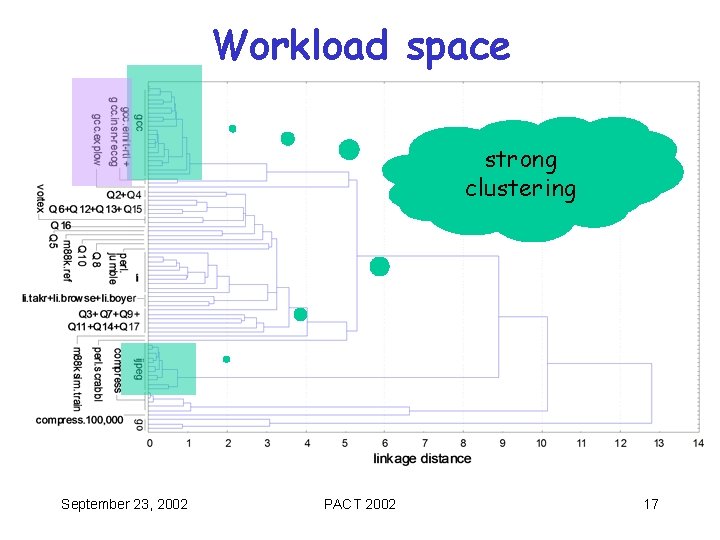

Goal

- Measuring the impact of input data sets on program behavior
  - “far away” or weak clustering: different behavior
  - “close” or strong clustering: similar behavior
- Applications:
  - selecting representative program-input pairs
    - e.g., one program-input pair per cluster
    - e.g., take the program-input pair with the smallest dynamic instruction count
  - getting insight into the influence of input data sets
  - profile-guided optimization
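
The selection rule in the Applications bullet (one pair per cluster, preferring the smallest dynamic instruction count) can be sketched as follows. This is a minimal illustration that assumes cluster labels are already available; the function name, pair names, cluster ids, and instruction counts are hypothetical placeholders, not data from the study.

```python
def pick_representatives(pairs):
    """pairs: iterable of (name, cluster_id, dynamic_instruction_count).

    Returns {cluster_id: name}, keeping the shortest-running pair per cluster.
    """
    best = {}
    for name, cluster, insn_count in pairs:
        # Keep the pair with the smallest dynamic instruction count so far.
        if cluster not in best or insn_count < best[cluster][1]:
            best[cluster] = (name, insn_count)
    return {cluster: name for cluster, (name, _) in best.items()}


# Hypothetical example data (illustrative only, not measured values).
example = [
    ("progA-train", 0, 3_000_000_000),
    ("progA-ref", 0, 90_000_000_000),
    ("progB-ref", 1, 50_000_000_000),
]
representatives = pick_representatives(example)
```

With this input, cluster 0 is represented by the shorter `progA-train` run and cluster 1 by its only member.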

Overview

- Introduction
- Workload characterization
- Data analysis
  - Principal components analysis (PCA)
  - Cluster analysis
- Evaluation
- Discussion
- Conclusion

Workload characterization (1)

- Instruction mix
  - int, logic, shift & byte, load/store, control
- Branch prediction accuracy
  - bimodal (8K*2 bits), gshare (8K*2 bits) and hybrid (meta: 8K*2 bits) branch predictor
- Data and instruction cache miss rates
  - five caches with varying size and associativity
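
As an illustration of the branch prediction characteristic, a bimodal predictor with 8K two-bit saturating counters might be modeled as below. This is a sketch under stated assumptions, not the instrumentation used in the study: the indexing by `(pc >> 2) % entries`, the initial counter state, and the `(pc, taken)` trace format are all assumptions, and the gshare and hybrid predictors are not covered.

```python
class BimodalPredictor:
    """Table of 2-bit saturating counters indexed by the branch address."""

    def __init__(self, entries=8 * 1024):
        self.entries = entries
        # Assumed initial state: 1 = weakly not-taken.
        self.counters = [1] * entries

    def _index(self, pc):
        # Assumed indexing: drop the 2 low bits (4-byte instructions), then wrap.
        return (pc >> 2) % self.entries

    def predict(self, pc):
        # Counter values 2 and 3 predict taken; 0 and 1 predict not-taken.
        return self.counters[self._index(pc)] >= 2

    def update(self, pc, taken):
        i = self._index(pc)
        if taken:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def prediction_accuracy(trace, predictor):
    """trace: iterable of (pc, taken) pairs for conditional branches."""
    correct = total = 0
    for pc, taken in trace:
        if predictor.predict(pc) == taken:
            correct += 1
        predictor.update(pc, taken)
        total += 1
    return correct / total if total else 0.0


# Hypothetical trace: one branch that is always taken, executed 10 times.
trace = [(0x400, True)] * 10
accuracy = prediction_accuracy(trace, BimodalPredictor())
```

In this toy trace only the first prediction is wrong, because the counter starts in the weakly not-taken state.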

Workload characterization (2)

- Number of instructions between two taken branches
- Instruction-level parallelism
  - IPC of an infinite-resource machine with only read-after-write dependencies
- In total: p = 20 variables
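
The first characteristic on this slide can be computed from a dynamic instruction trace roughly as follows. The trace format (one boolean per dynamic instruction, `True` for a taken branch) and the choice to exclude the branches themselves from the count are assumptions made for this sketch.

```python
def mean_insns_between_taken_branches(trace):
    """trace: iterable of booleans, one per dynamic instruction.

    True marks a taken branch. Returns the mean number of instructions
    strictly between consecutive taken branches (0.0 if fewer than two).
    """
    gaps = []
    count = 0
    seen_taken = False
    for is_taken_branch in trace:
        if is_taken_branch:
            if seen_taken:
                gaps.append(count)  # close the gap opened by the previous taken branch
            seen_taken = True
            count = 0
        else:
            count += 1
    return sum(gaps) / len(gaps) if gaps else 0.0


# Hypothetical trace: gaps of 2 and 4 instructions between taken branches.
example_trace = [True, False, False, True, False, False, False, False, True]
mean_gap = mean_insns_between_taken_branches(example_trace)
```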

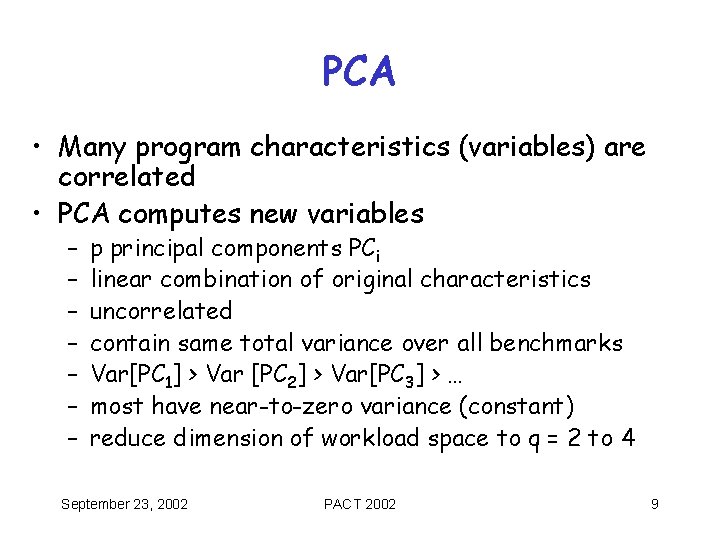

PCA

- Many program characteristics (variables) are correlated
- PCA computes new variables: p principal components PCi
  - linear combinations of the original characteristics
  - uncorrelated
  - contain the same total variance over all benchmarks
  - Var[PC1] > Var[PC2] > Var[PC3] > …
  - most have near-to-zero variance (constant)
- Reduce the dimension of the workload space to q = 2 to 4
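
The properties listed above (linear combinations, uncorrelated, same total variance, decreasing variance) can be checked on a small example. The sketch below is not the tool chain used in the study: it standardizes each characteristic first (an assumption) and uses power iteration with deflation as a simple stand-in for a library eigensolver. The data matrix is hypothetical.

```python
import math


def standardize(rows):
    """Scale each column to zero mean and unit variance (assumes no constant column)."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    stds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / (n - 1))
            for j in range(p)]
    return [[(r[j] - means[j]) / stds[j] for j in range(p)] for r in rows]


def covariance(centered_rows):
    """Sample covariance matrix of already-centered rows."""
    n, p = len(centered_rows), len(centered_rows[0])
    return [[sum(r[i] * r[j] for r in centered_rows) / (n - 1) for j in range(p)]
            for i in range(p)]


def top_eigenpairs(matrix, q, iterations=500):
    """Largest q eigenpairs of a symmetric matrix via power iteration + deflation."""
    p = len(matrix)
    m = [row[:] for row in matrix]
    pairs = []
    for _ in range(q):
        v = [1.0 / (j + 1) for j in range(p)]  # arbitrary non-symmetric start vector
        for _ in range(iterations):
            w = [sum(m[i][j] * v[j] for j in range(p)) for i in range(p)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        # Rayleigh quotient gives the eigenvalue, i.e. the variance of this PC.
        eigenvalue = sum(v[i] * sum(m[i][j] * v[j] for j in range(p)) for i in range(p))
        pairs.append((eigenvalue, v))
        # Deflate: remove the component just found.
        for i in range(p):
            for j in range(p):
                m[i][j] -= eigenvalue * v[i] * v[j]
    return pairs


def pca(rows, q):
    """Returns (variances of the first q PCs, scores of each row on those PCs)."""
    z = standardize(rows)
    pairs = top_eigenpairs(covariance(z), q)
    scores = [[sum(zr[j] * vec[j] for j in range(len(zr))) for _, vec in pairs]
              for zr in z]
    return [val for val, _ in pairs], scores


# Hypothetical data: 5 program-input pairs x 3 characteristics;
# the first two columns are strongly correlated.
rows = [
    [2.0, 4.1, 1.0],
    [3.0, 6.2, 0.5],
    [4.0, 7.9, 1.5],
    [5.0, 10.1, 0.7],
    [6.0, 12.0, 1.2],
]
variances, scores = pca(rows, 3)
```

Because the characteristics are standardized, the variances of all three PCs sum to p = 3, and the third PC (the direction separating the two correlated columns) has near-zero variance.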

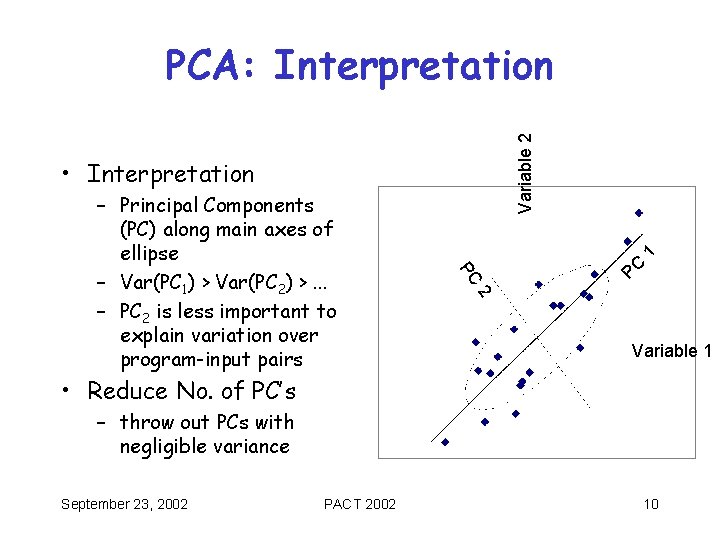

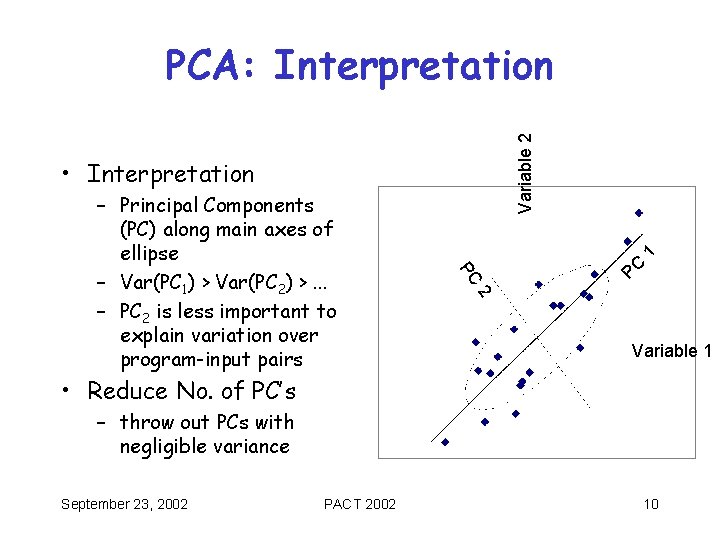

PCA: Interpretation

[Figure: ellipse of data points plotted over the axes Variable 1 and Variable 2, with the directions PC1 and PC2 marked]

- Interpretation
  - Principal components (PCs) lie along the main axes of the ellipse
  - Var(PC1) > Var(PC2) > ...
  - PC2 is less important for explaining the variation over program-input pairs
- Reduce the number of PCs
  - throw out PCs with negligible variance
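
One common way to decide how many PCs to keep is to retain the smallest q whose cumulative share of the total variance reaches a chosen threshold. The sketch below illustrates that rule; the function name, the threshold parameter, and the variance values are hypothetical, since the slides state only that q is typically 2 to 4.

```python
def choose_q(variances, min_fraction=0.9):
    """Smallest number of leading PCs whose cumulative variance share
    reaches min_fraction. Expects variances sorted in decreasing order."""
    total = sum(variances)
    cumulative = 0.0
    for q, var in enumerate(variances, start=1):
        cumulative += var
        if cumulative / total >= min_fraction:
            return q
    return len(variances)


# Hypothetical PC variances (illustrative only).
hypothetical_variances = [6.0, 3.0, 0.5, 0.25, 0.25]
q = choose_q(hypothetical_variances, min_fraction=0.85)
```

Here the first two PCs carry 90% of the total variance, so the remaining three would be discarded.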

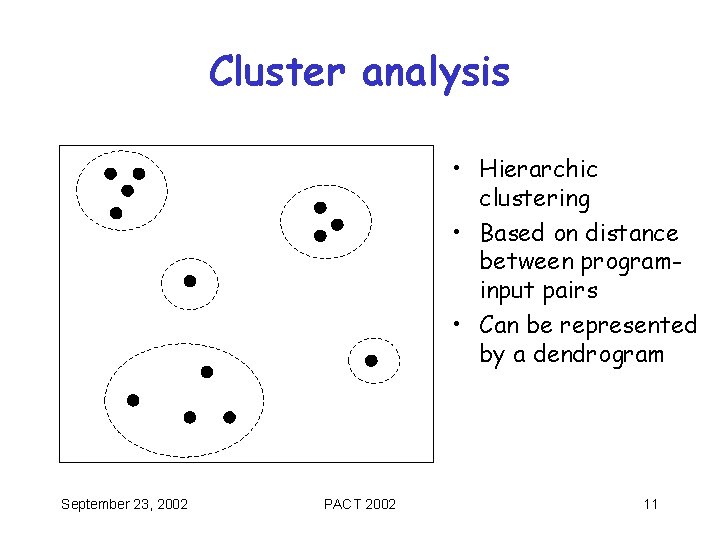

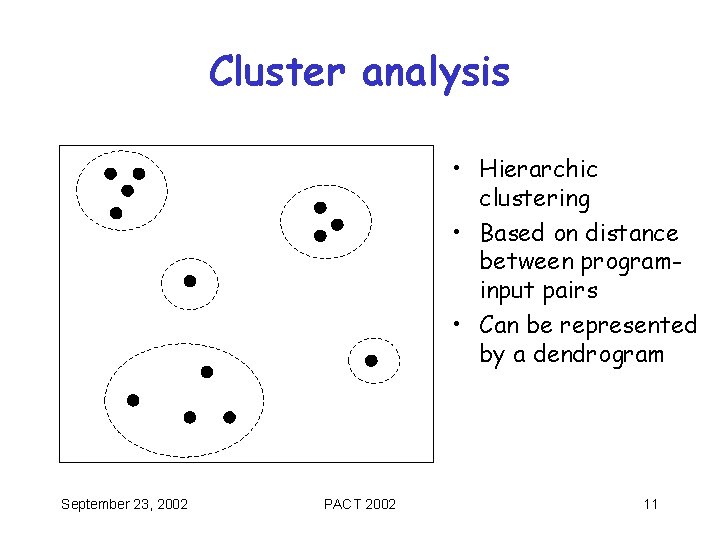

Cluster analysis

- Hierarchical clustering
- Based on the distance between program-input pairs
- Can be represented by a dendrogram
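
A minimal agglomerative clustering sketch is shown below to make the idea concrete. It assumes Euclidean distance in the reduced PC space and complete linkage (furthest neighbor); the slides do not state which linkage rule was used, so both are assumptions, and the point names and coordinates are hypothetical. The returned merge list holds the information a dendrogram would draw.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hierarchical_clustering(points):
    """points: {name: coordinates in the reduced PC space}.

    Returns the merges in order as (cluster_a, cluster_b, linkage_distance),
    where each cluster is a tuple of names.
    """
    clusters = [(name,) for name in points]
    merges = []
    while len(clusters) > 1:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # Complete linkage (assumed): largest distance between members.
                d = max(euclidean(points[a], points[b])
                        for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        merges.append((clusters[i], clusters[j], d))
        merged = clusters[i] + clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return merges


# Hypothetical coordinates in a 2-D PC space (illustrative only).
points = {
    "A-train": (0.0, 0.0),
    "A-ref": (0.0, 1.0),
    "B-ref": (4.0, 0.0),
}
merges = hierarchical_clustering(points)
```

The two inputs of program A merge first at a small linkage distance, and program B joins only at a much larger distance, which is the pattern a dendrogram would show for inputs with similar behavior.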

Methodology

- Benchmarks
  - SPECint95
    - Inputs from SPEC: train and ref
    - Inputs from the web (ijpeg)
    - Reduced inputs (compress)
  - TPC-D on postgres v6.3
  - Compiled with -O4 on Alpha
  - 79 program-input pairs
- ATOM
  - Instrumentation
  - Measuring characteristics
- STATISTICA
  - Statistical analysis

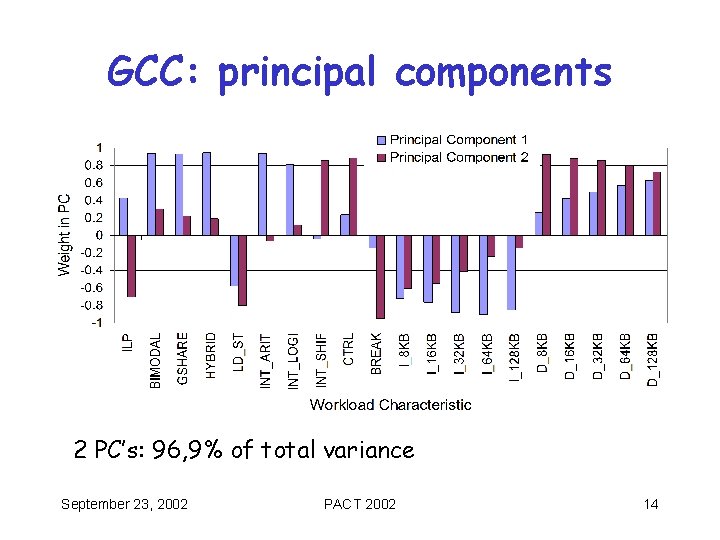

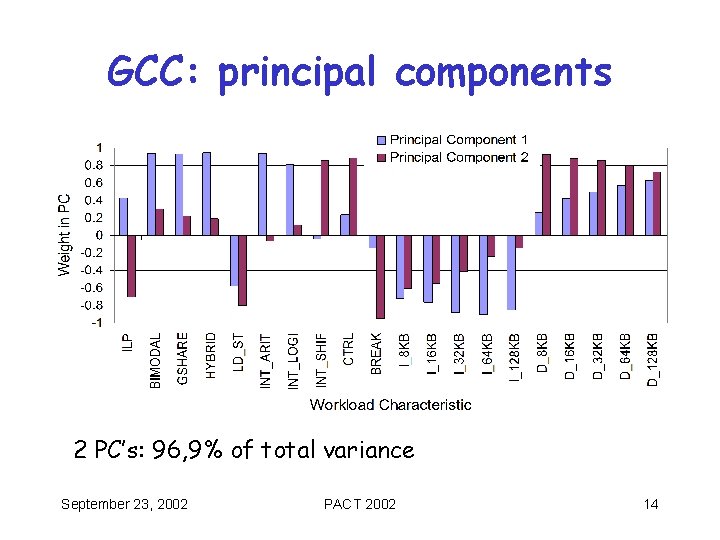

GCC: principal components

2 PCs: 96.9% of total variance

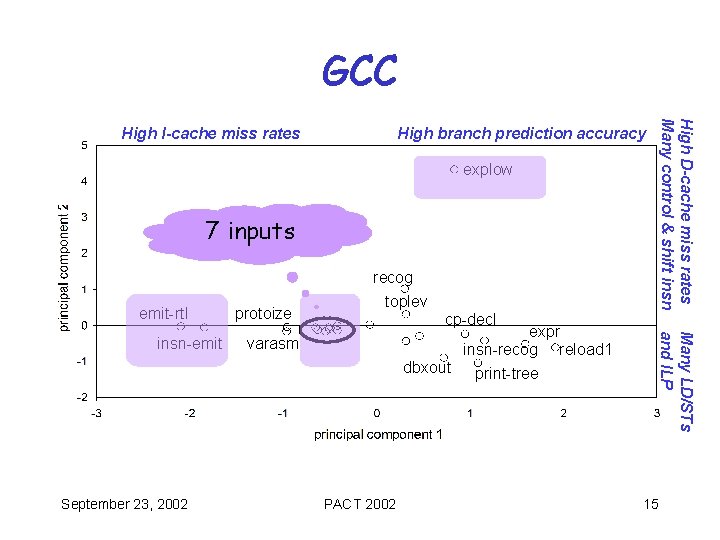

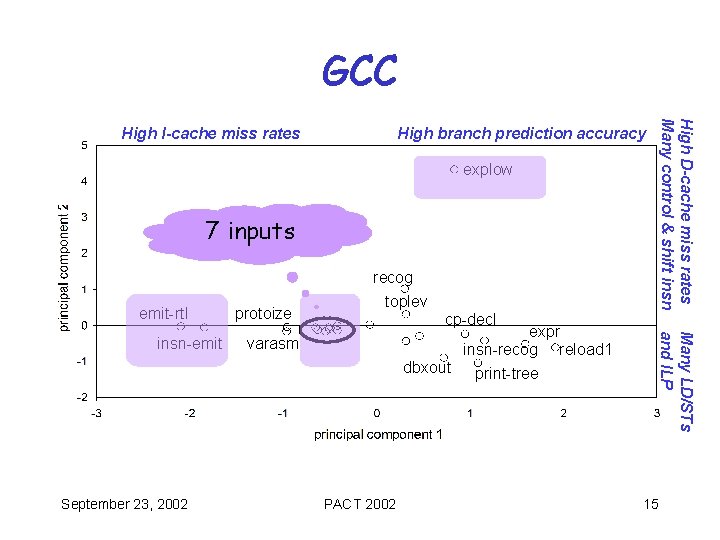

GCC

[Figure: gcc inputs plotted in the reduced workload space. Region annotations: "High D-cache miss rates", "Many control & shift insn", "High I-cache miss rates", "High branch prediction accuracy", "Many LD/STs and ILP". Labeled inputs: explow, emit-rtl, cp-decl, expr, insn-recog, reload1, dbxout, print-tree, varasm, insn-emit, protoize, recog, toplev; one group is labeled "7 inputs".]

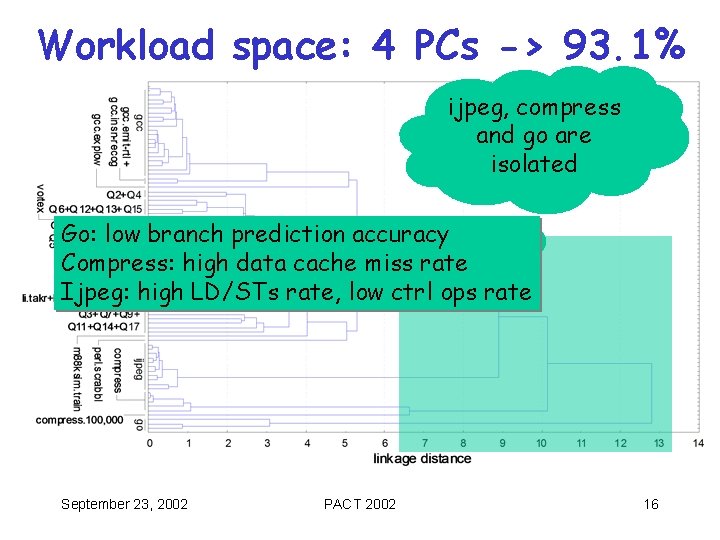

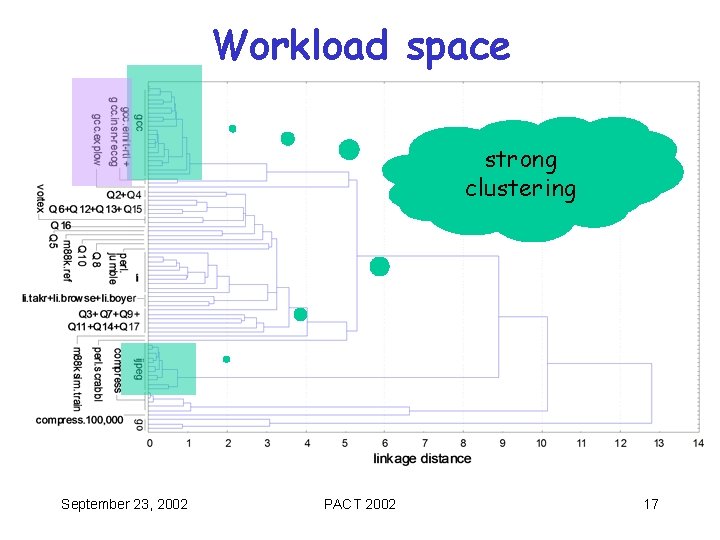

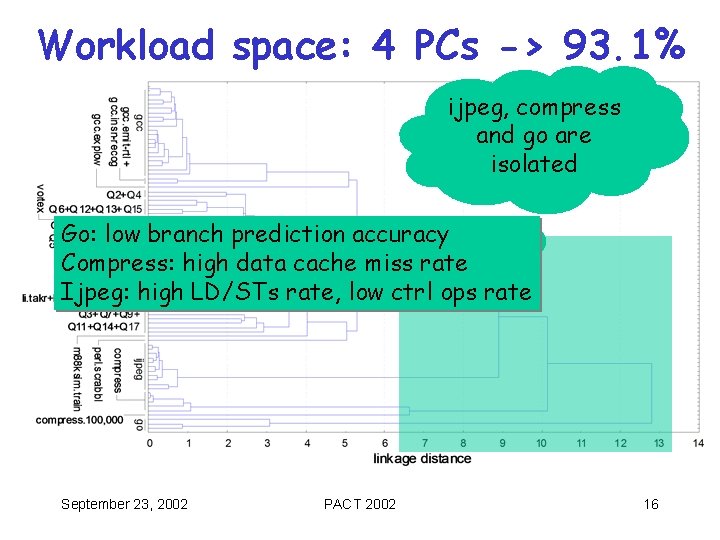

Workload space: 4 PCs explain 93.1% of the total variance

- ijpeg, compress and go are isolated
  - go: low branch prediction accuracy
  - compress: high data cache miss rate
  - ijpeg: high LD/ST rate, low control-op rate

Workload space: strong clustering

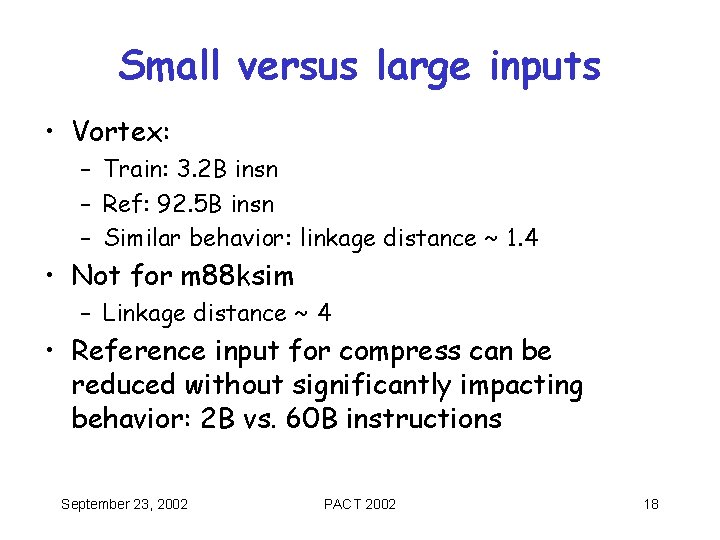

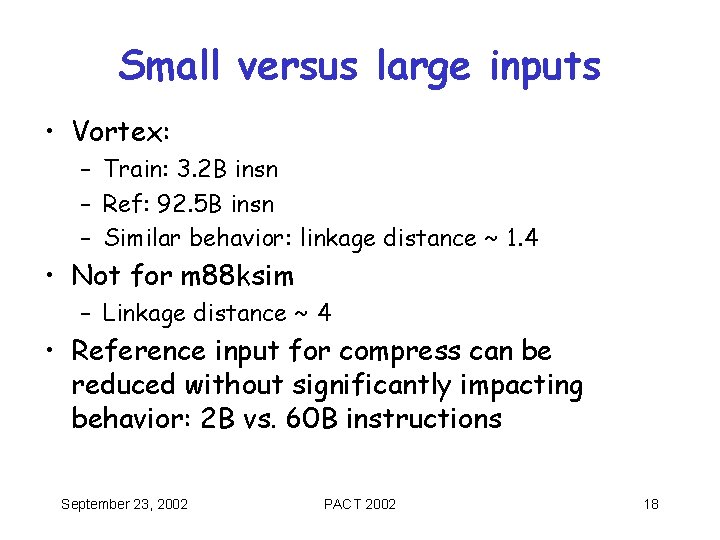

Small versus large inputs

- Vortex:
  - Train: 3.2B insn
  - Ref: 92.5B insn
  - Similar behavior: linkage distance ~ 1.4
- Not for m88ksim
  - Linkage distance ~ 4
- Reference input for compress can be reduced without significantly impacting behavior: 2B vs. 60B instructions

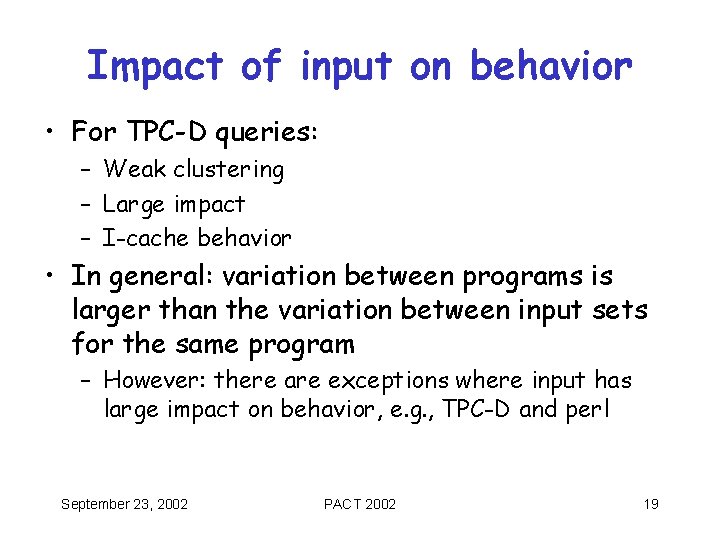

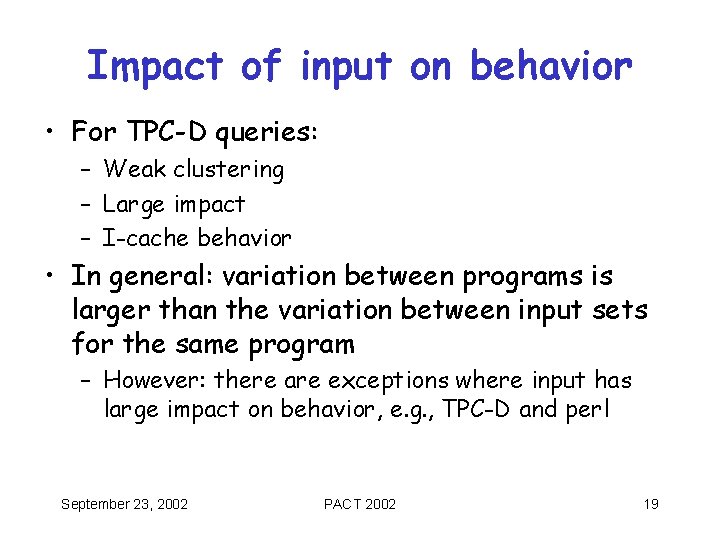

Impact of input on behavior

- For TPC-D queries:
  - Weak clustering
  - Large impact
  - I-cache behavior
- In general: variation between programs is larger than the variation between input sets for the same program
  - However: there are exceptions where the input has a large impact on behavior, e.g., TPC-D and perl

Conclusion

- Workload design
  - representative
  - not long running
- Principal components analysis (PCA) and cluster analysis help in detecting input data sets that result in similar or different behavior of a program
- Applications:
  - workload design: representativeness while taking into account simulation time
  - impact of input data sets on program behavior
  - profile-guided optimizations