Word Error Rate Minimization Using an Integrated Confidence Measure


- Slides: 14

Word Error Rate Minimization Using an Integrated Confidence Measure

Authors: Akio Kobayashi, Kazuo Onoe and Toru Imai

Reporter: CHEN TZAN HWEI

NTNU SPEECH LAB

![Reference](https://slidetodoc.com/presentation_image_h2/765d9c29b5b18c2518f24786ea463e4f/image-2.jpg)

Reference

- [1] Akio Kobayashi, Kazuo Onoe, Shoei Sato and Toru Imai, “Word Error Rate Minimization Using an Integrated Confidence Measure”, Eurospeech 2005

6/16/2021, NTNU SPEECH LAB

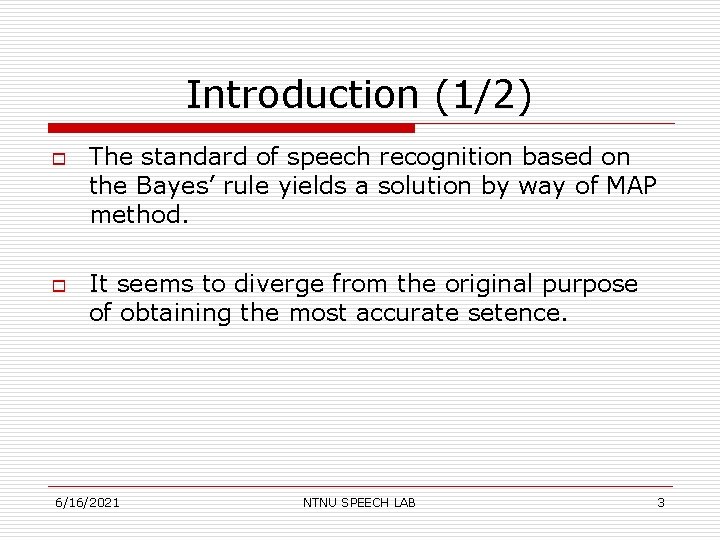

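Introduction (1/2)

- The standard approach to speech recognition, based on Bayes’ rule, yields a solution by way of the MAP (maximum a posteriori) method.
- This seems to diverge from the original purpose of obtaining the most accurate sentence: MAP maximizes the posterior probability of the whole sentence rather than minimizing the number of word errors.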

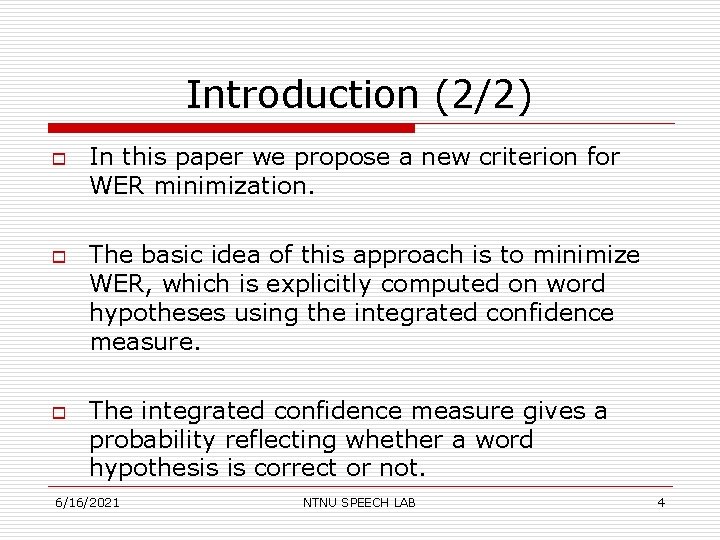

Introduction (2/2)

- In this paper we propose a new criterion for WER minimization.
- The basic idea of this approach is to minimize WER, which is explicitly computed on word hypotheses using the integrated confidence measure.
- The integrated confidence measure gives a probability reflecting whether a word hypothesis is correct or not.
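A minimal sketch of the basic idea, not the paper's exact formulation: if each word hypothesis carries a confidence (its probability of being correct), the expected word error rate of a sentence hypothesis can be approximated from those confidences, and the hypothesis with the lowest value is chosen. The function name `expected_wer`, the toy N-best list and the per-word averaging are all illustrative assumptions.

```python
def expected_wer(confidences):
    """Approximate expected word error rate of one sentence hypothesis.

    confidences: list of P(word is correct), one value per word.
    Assumption: word errors are treated independently and deletions are
    ignored, so this is only a toy approximation.
    """
    if not confidences:
        return 0.0
    return sum(1.0 - c for c in confidences) / len(confidences)


# Hypothetical N-best list: (word sequence, per-word confidences).
nbest = [
    (["the", "cat", "sat"], [0.9, 0.4, 0.8]),
    (["the", "cap", "sat"], [0.9, 0.7, 0.8]),
]

# Pick the hypothesis whose expected WER is lowest.
best_words, best_conf = min(nbest, key=lambda h: expected_wer(h[1]))
```

Because deletions are not modeled, a real criterion would need some way to account for missing words; the sketch only shows how word-level confidences can drive the choice of sentence.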

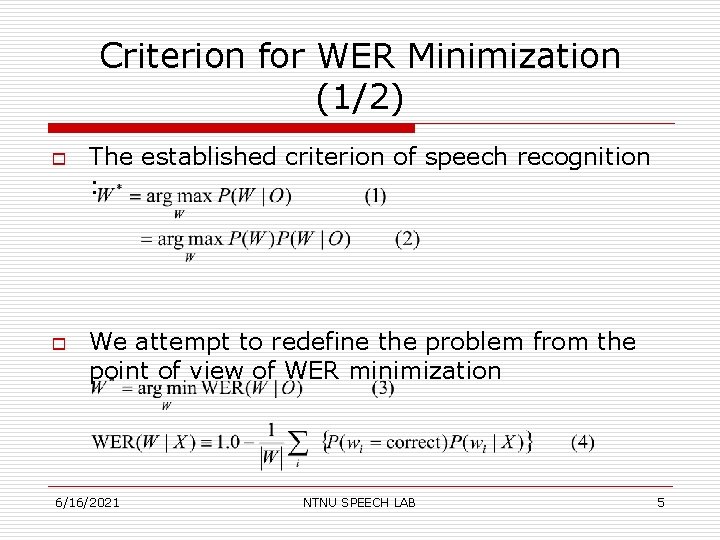

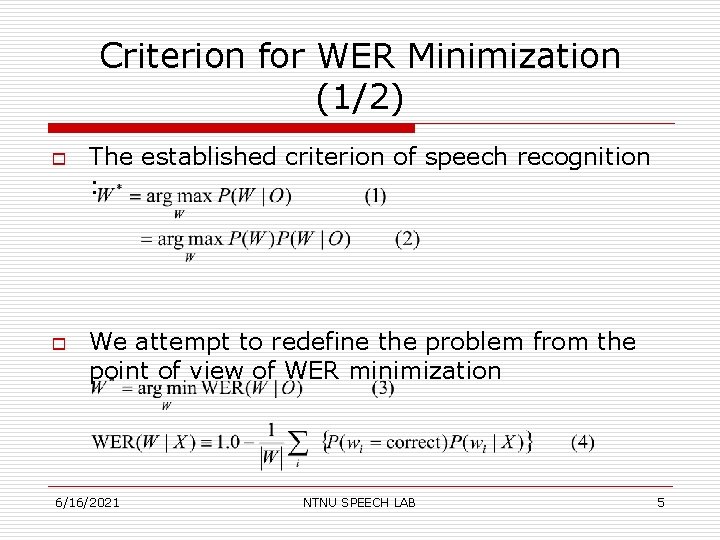

Criterion for WER Minimization (1/2)

- The established criterion of speech recognition:
- We attempt to redefine the problem from the point of view of WER minimization.
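For reference, the established criterion is conventionally written as the MAP decision rule. The notation here is an assumption (W for a word sequence, X for the acoustic observations) and may differ from the symbols used on the original slide:

```latex
\hat{W} = \operatorname*{arg\,max}_{W} P(W \mid X)
        = \operatorname*{arg\,max}_{W} P(X \mid W)\, P(W)
```

This rule minimizes the probability of a sentence-level error, which is not the same as minimizing the expected number of word errors.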

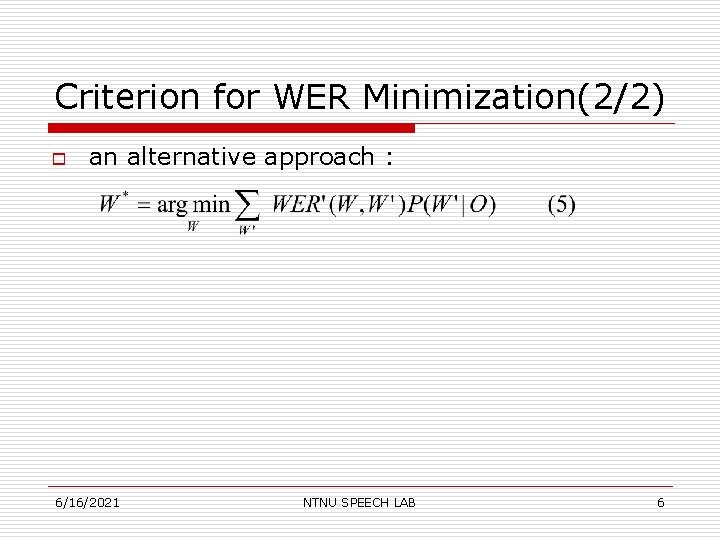

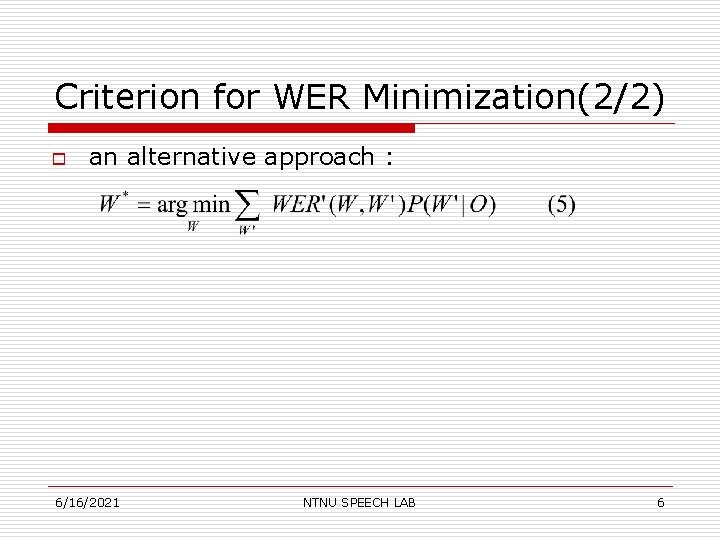

Criterion for WER Minimization (2/2)

- An alternative approach:

Integrated Confidence Measure (1/2)

- Maximum entropy modeling
- Feature definition:
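As a rough illustration of maximum entropy modeling for a correct/incorrect decision, the sketch below computes P(correct | features) for a word hypothesis. With feature functions that are active only for the "correct" class, a two-class maximum entropy model reduces to a logistic function of the weighted feature sum. The feature names and weights are hypothetical; the feature definition on the original slide is not reproduced here.

```python
import math


def maxent_confidence(features, weights):
    """P(correct | features) under a two-class maximum entropy model.

    features: dict mapping feature name -> value (active for 'correct').
    weights:  dict mapping feature name -> lambda (model parameter).
    The 'incorrect' class has score 0, so the normalizer is exp(score) + 1.
    """
    score = sum(weights.get(name, 0.0) * value
                for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-score))


# Hypothetical weights and features for two word hypotheses.
weights = {"word_posterior": 2.0, "bias": -1.0}
p_mid = maxent_confidence({"word_posterior": 0.5, "bias": 1.0}, weights)
p_high = maxent_confidence({"word_posterior": 0.9, "bias": 1.0}, weights)
```

In practice the weights would be estimated from labeled recognition output; here they are fixed by hand only to show the shape of the computation.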

Integrated Confidence Measure (2/2)

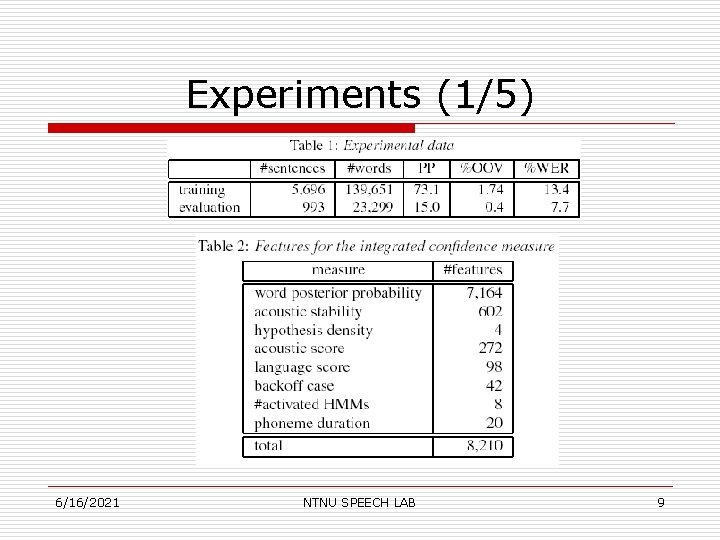

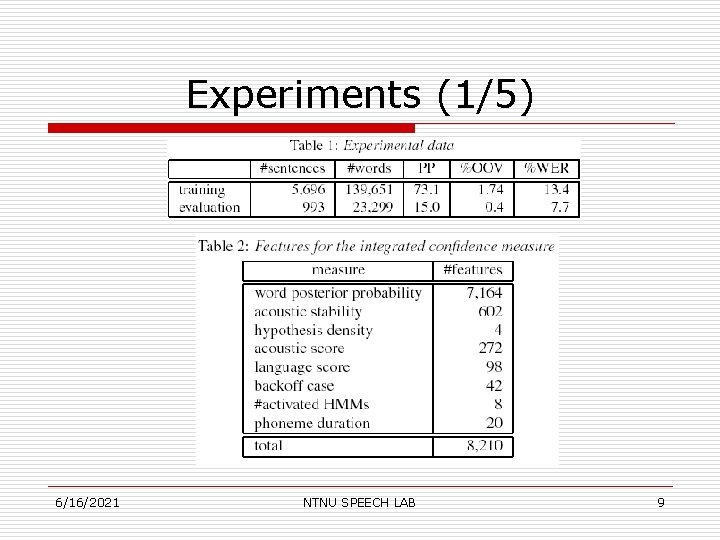

Experiments (1/5)

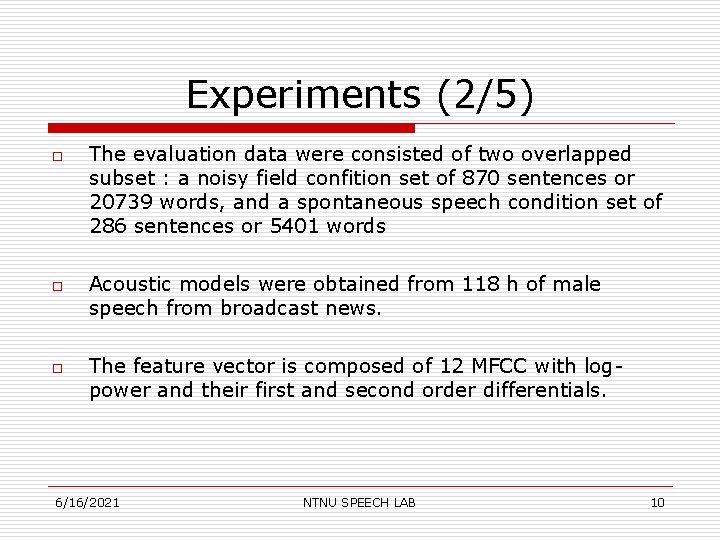

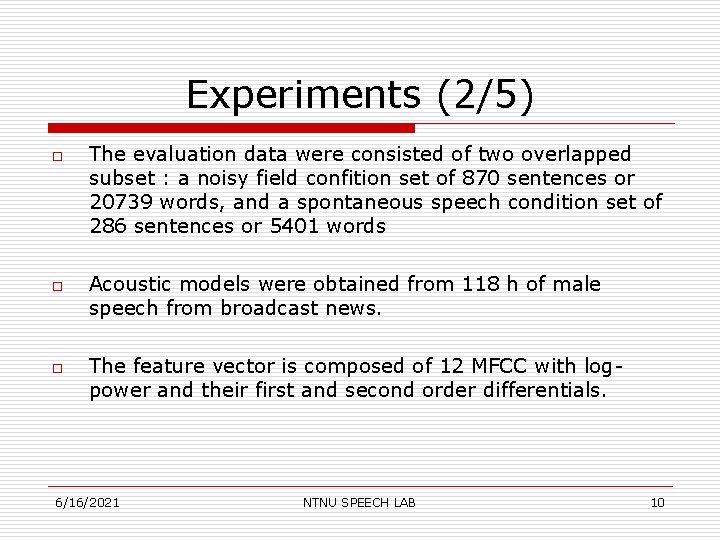

Experiments (2/5)

- The evaluation data consisted of two overlapping subsets: a noisy field condition set of 870 sentences (20,739 words) and a spontaneous speech condition set of 286 sentences (5,401 words).
- Acoustic models were obtained from 118 h of male speech from broadcast news.
- The feature vector is composed of 12 MFCCs with log power and their first- and second-order differentials.
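The feature layout described above gives 13 static coefficients (12 MFCCs plus log power) and, with first- and second-order differentials, 39 dimensions per frame. The sketch below shows one common way to compute such differentials, using the standard regression formula. The window size of 2 and the edge handling (repeating the first and last frame) are assumptions, not details taken from the slides.

```python
def delta(frames, window=2):
    """Regression-based delta of a list of feature vectors (lists of floats)."""
    n = len(frames)
    denom = 2.0 * sum(k * k for k in range(1, window + 1))
    out = []
    for t in range(n):
        row = []
        for d in range(len(frames[t])):
            acc = 0.0
            for k in range(1, window + 1):
                nxt = frames[min(t + k, n - 1)][d]  # repeat last frame at the edge
                prv = frames[max(t - k, 0)][d]      # repeat first frame at the edge
                acc += k * (nxt - prv)
            row.append(acc / denom)
        out.append(row)
    return out


def add_deltas(static, window=2):
    """Concatenate static features with first- and second-order deltas."""
    d1 = delta(static, window)
    d2 = delta(d1, window)
    return [s + a + b for s, a, b in zip(static, d1, d2)]


# Toy input: 10 frames of 13 static values (12 MFCC + log power),
# each frame filled with its own index so the signal is a linear ramp.
static = [[float(t)] * 13 for t in range(10)]
full = add_deltas(static)
```

For this ramp input, the first-order delta in the middle of the sequence is 1 and the second-order delta is 0, which is a quick sanity check on the formula.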

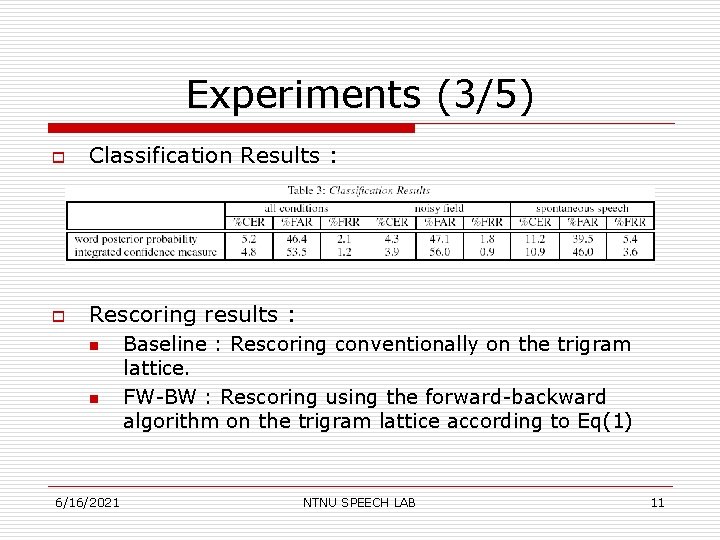

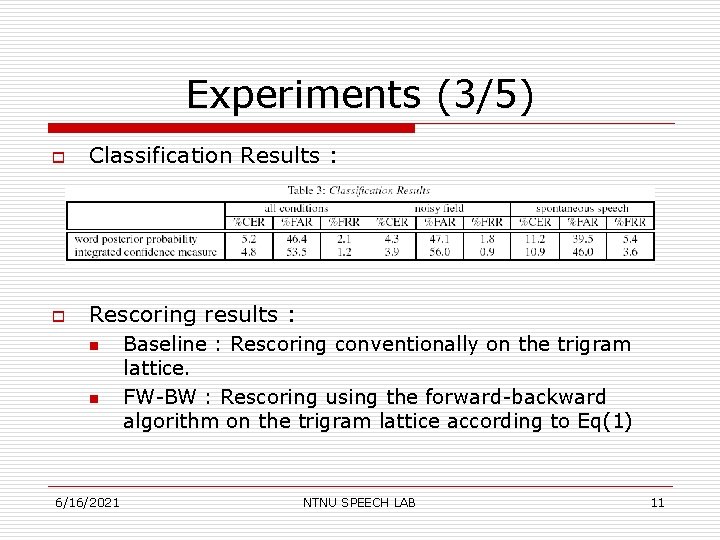

Experiments (3/5)

- Classification results:
- Rescoring results:
  - Baseline: rescoring conventionally on the trigram lattice.
  - FW-BW: rescoring using the forward-backward algorithm on the trigram lattice according to Eq. (1).
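To make the FW-BW condition more concrete, here is a minimal sketch of how the forward-backward algorithm yields word posterior probabilities on a lattice. It is a generic illustration, not a reproduction of the paper's Eq. (1): the lattice format, the function name `edge_posteriors` and the toy probabilities are assumptions, and a real system would work in the log domain.

```python
from collections import defaultdict


def edge_posteriors(edges, start, final):
    """Posterior probability of each lattice edge via forward-backward.

    edges: list of (from_node, to_node, word, prob), with nodes numbered
           in topological order (from_node < to_node).
    Returns a list of (word, posterior) in the same order as `edges`.
    """
    alpha = defaultdict(float)  # forward probability of reaching a node
    beta = defaultdict(float)   # backward probability of reaching `final`
    alpha[start] = 1.0
    beta[final] = 1.0

    # Forward pass: edges leaving earlier nodes are processed first.
    for u, v, _, p in sorted(edges, key=lambda e: e[0]):
        alpha[v] += alpha[u] * p

    # Backward pass: edges entering later nodes are processed first.
    for u, v, _, p in sorted(edges, key=lambda e: e[1], reverse=True):
        beta[u] += p * beta[v]

    total = alpha[final]  # sum over all complete paths
    return [(w, alpha[u] * p * beta[v] / total) for u, v, w, p in edges]


# Toy lattice with two competing words between nodes 1 and 2.
lattice = [
    (0, 1, "the", 1.0),
    (1, 2, "cat", 0.3),
    (1, 2, "cap", 0.1),
    (2, 3, "sat", 1.0),
]
posteriors = dict(edge_posteriors(lattice, start=0, final=3))
```

In this toy lattice every path passes through "the" and "sat", so their posteriors are 1, while "cat" and "cap" split the probability mass in proportion to their edge scores.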

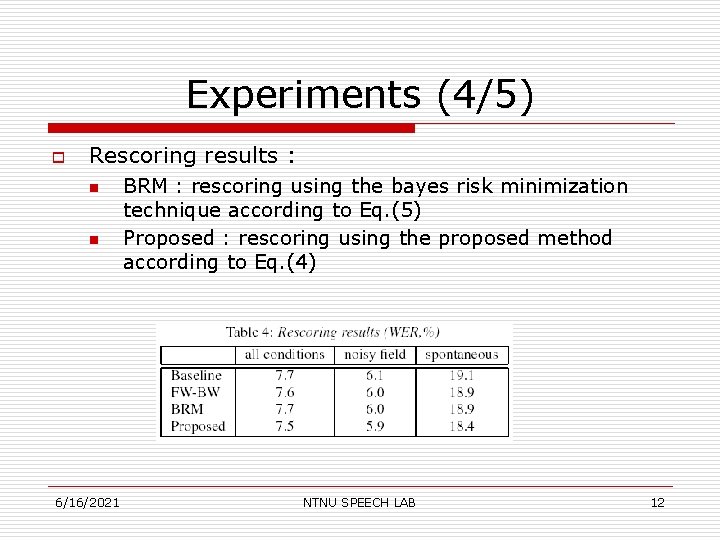

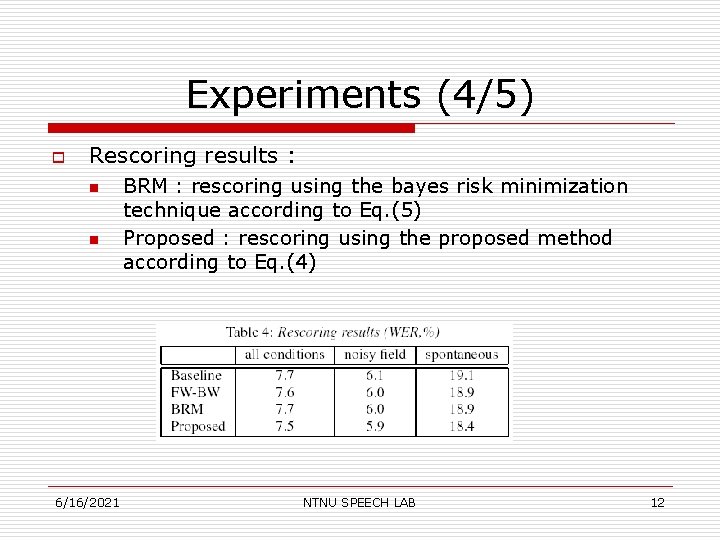

Experiments (4/5)

- Rescoring results:
  - BRM: rescoring using the Bayes risk minimization technique according to Eq. (5).
  - Proposed: rescoring using the proposed method according to Eq. (4).

Experiments (5/5)

- BRM just estimates the confidence of word hypotheses using a loss function based on the Levenshtein algorithm.
- The score differential between sentence hypotheses is too large to shuffle their ranks.
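The Levenshtein-based loss mentioned above can be sketched as follows. This is a generic N-best Bayes risk minimization example, not the paper's Eq. (5): the function names, the toy hypotheses and their posteriors are assumptions chosen for illustration.

```python
def levenshtein(ref, hyp):
    """Word-level edit distance between two token lists."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (r != h)))  # substitution or match
        prev = cur
    return prev[-1]


def min_bayes_risk(nbest):
    """Return the hypothesis with the lowest expected edit distance.

    nbest: list of (words, posterior) pairs; posteriors should sum to 1.
    """
    def risk(candidate):
        return sum(p * levenshtein(other, candidate) for other, p in nbest)
    return min((words for words, _ in nbest), key=risk)


# Hypothetical N-best list where the MAP choice and the MBR choice differ.
nbest = [
    ("a b c".split(), 0.4),  # MAP hypothesis (highest posterior)
    ("a x c".split(), 0.3),
    ("a x d".split(), 0.3),
]
map_choice = max(nbest, key=lambda h: h[1])[0]
mbr_choice = min_bayes_risk(nbest)
```

Here the MAP choice is "a b c", but "a x c" has the lowest expected edit distance because it is close to both of the other hypotheses. When one hypothesis dominates the posterior mass, the two choices coincide, which is consistent with the observation that large score differentials leave the ranking unchanged.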

Conclusions

- We proposed a new criterion of speech recognition based on WER minimization.
- For further improvement, we will use not only observations such as word posterior probabilities but also other knowledge sources.