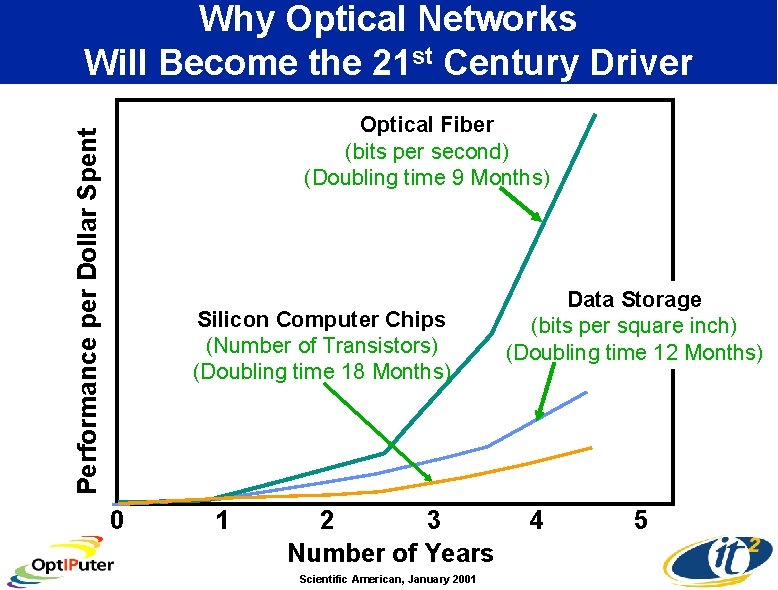

Why Optical Networks Will Become the 21st Century Driver

Chart: Performance per Dollar Spent vs. Number of Years (0 to 5)

- Optical Fiber (bits per second): Doubling time 9 Months
- Data Storage (bits per square inch): Doubling time 12 Months
- Silicon Computer Chips (Number of Transistors): Doubling time 18 Months

Source: Scientific American, January 2001
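To make the three doubling times concrete, here is a minimal sketch that compounds each one over the chart's five-year span. It assumes clean exponential growth at the quoted rates; the function name `growth_factor` and the dictionary are illustrative, not part of the original slide.

```python
# Sketch: compound the doubling times quoted on the slide over a span of years.
# Assumption: growth is a pure exponential at the stated doubling time.

DOUBLING_MONTHS = {
    "Optical fiber (bits per second)": 9,
    "Data storage (bits per square inch)": 12,
    "Silicon chips (number of transistors)": 18,
}


def growth_factor(years: float, doubling_months: float) -> float:
    """Return the performance-per-dollar multiple after `years`."""
    return 2.0 ** (years * 12.0 / doubling_months)


if __name__ == "__main__":
    for name, months in DOUBLING_MONTHS.items():
        print(f"{name}: about {growth_factor(5, months):.0f}x after 5 years")
```

Under these assumptions, fiber improves roughly 100-fold in five years, storage 32-fold, and chips about 10-fold, which is the gap the slide title points at.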

Imagining a Fiber Optic Infrastructure Supporting Interactive Visualization: SIGGRAPH 1989

“What we really have to do is eliminate distance between individuals who want to interact with other people and with other computers.” (Larry Smarr, Director, National Center for Supercomputing Applications, UIUC)

“Using satellite technology…demo of what it might be like to have high-speed fiber-optic links between advanced computers in two different geographic locations.” (Al Gore, Senator; Chair, US Senate Subcommittee on Science, Technology and Space)

ATT & Sun

http://sunsite.lanet.lv/ftp/sun-info/sunflash/1989/Aug/08.21.89.tele.video

Source: Maxine Brown

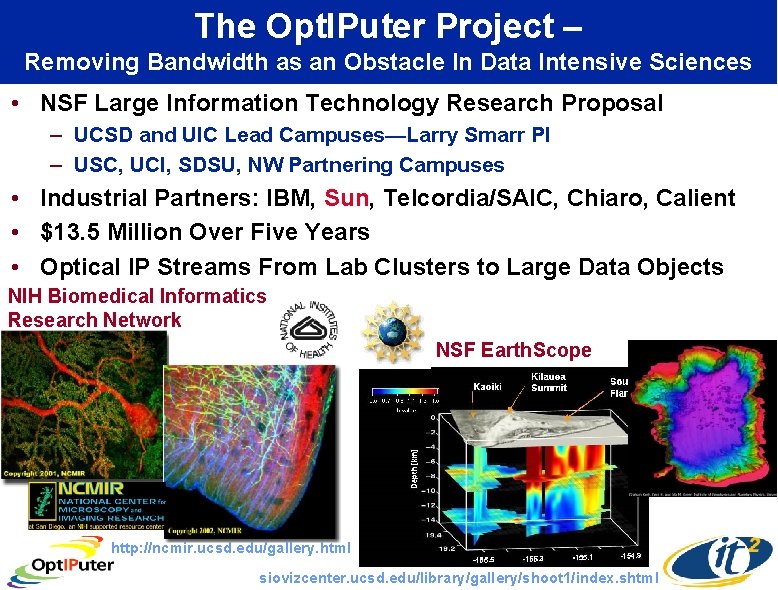

The OptIPuter Project – Removing Bandwidth as an Obstacle in Data Intensive Sciences

- NSF Large Information Technology Research Proposal
  - UCSD and UIC Lead Campuses; Larry Smarr PI
  - USC, UCI, SDSU, NW Partnering Campuses
- Industrial Partners: IBM, Sun, Telcordia/SAIC, Chiaro, Calient
- $13.5 Million Over Five Years
- Optical IP Streams From Lab Clusters to Large Data Objects

NIH Biomedical Informatics Research Network

NSF EarthScope

http://ncmir.ucsd.edu/gallery.html

siovizcenter.ucsd.edu/library/gallery/shoot1/index.shtml

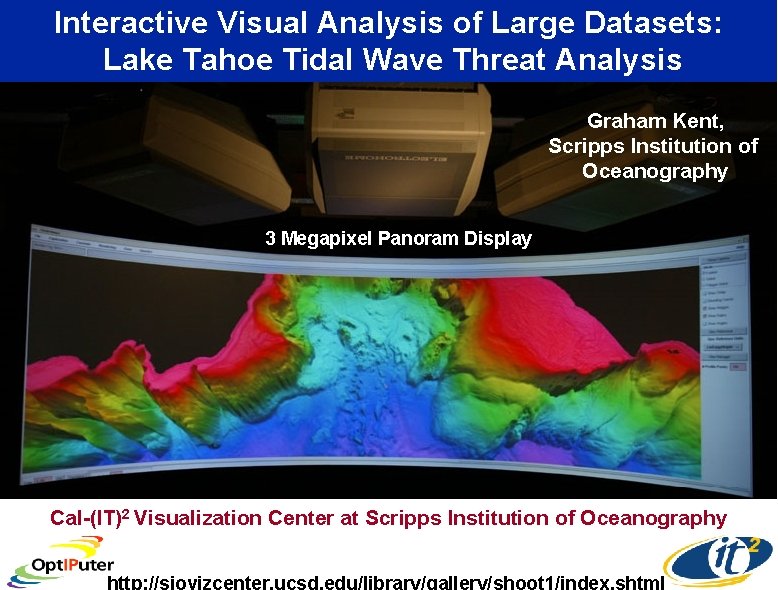

Interactive Visual Analysis of Large Datasets: Lake Tahoe Tidal Wave Threat Analysis

Graham Kent, Scripps Institution of Oceanography

3 Megapixel Panoram Display, Cal-(IT)2 Visualization Center at Scripps Institution of Oceanography

http://siovizcenter.ucsd.edu/library/gallery/shoot1/index.shtml

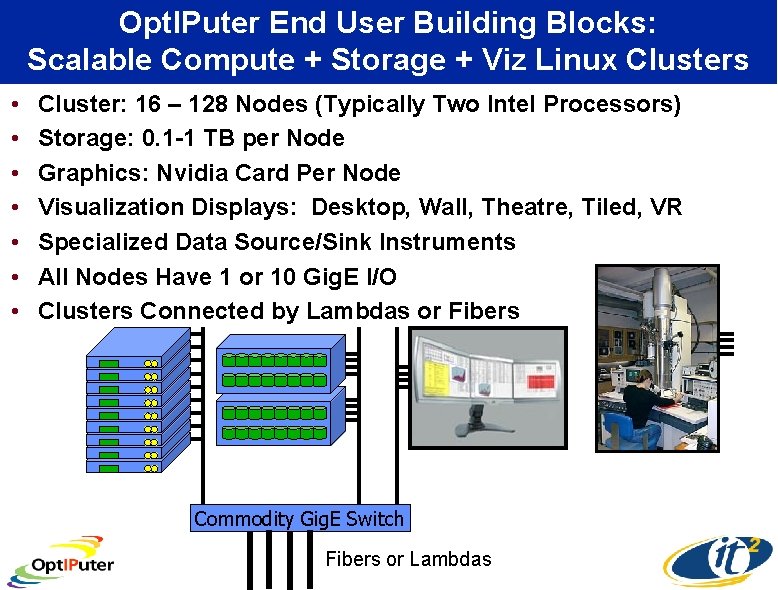

OptIPuter End User Building Blocks: Scalable Compute + Storage + Viz Linux Clusters

- Cluster: 16 to 128 Nodes (Typically Two Intel Processors)
- Storage: 0.1 to 1 TB per Node
- Graphics: Nvidia Card Per Node
- Visualization Displays: Desktop, Wall, Theatre, Tiled, VR
- Specialized Data Source/Sink Instruments
- All Nodes Have 1 or 10 GigE I/O
- Clusters Connected by Lambdas or Fibers

Diagram labels: Commodity GigE Switch; Fibers or Lambdas

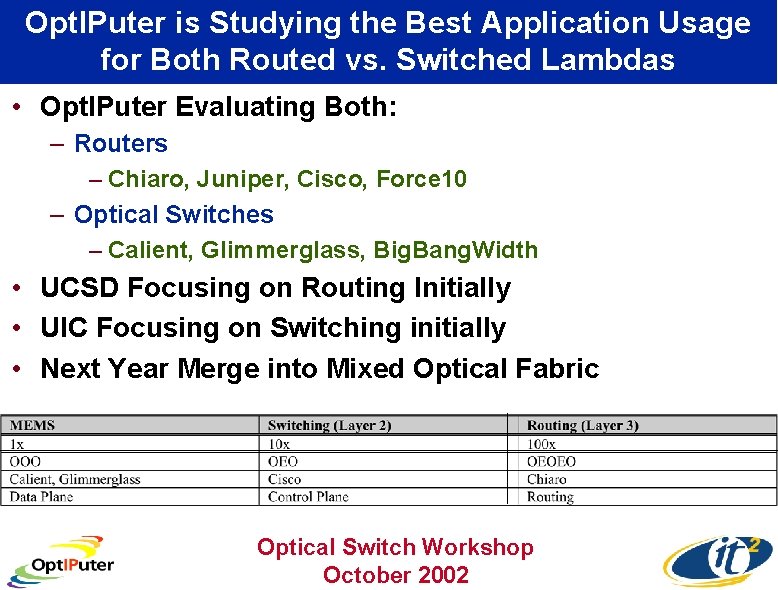

OptIPuter is Studying the Best Application Usage for Both Routed vs. Switched Lambdas

- OptIPuter Evaluating Both:
  - Routers: Chiaro, Juniper, Cisco, Force10
  - Optical Switches: Calient, Glimmerglass, BigBangWidth
- UCSD Focusing on Routing Initially
- UIC Focusing on Switching Initially
- Next Year Merge into Mixed Optical Fabric

Optical Switch Workshop, October 2002

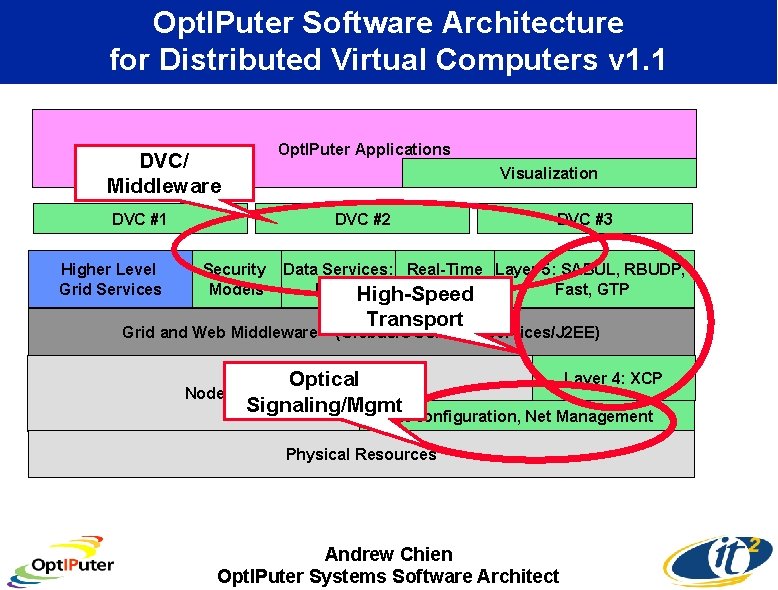

OptIPuter Software Architecture for Distributed Virtual Computers v1.1

Diagram labels (layered software stack):

- OptIPuter Applications
- DVC/Middleware: Visualization; DVC #1, DVC #2, DVC #3
- Higher Level Grid Services; Security Models; Data Services: DWTP; Real-Time Objects
- High-Speed Transport: Layer 5: SABUL, RBUDP, Fast, GTP; Layer 4: XCP
- Grid and Web Middleware (Globus/OGSA/WebServices/J2EE)
- Optical Signaling/Mgmt: λ-configuration, Net Management
- Node Operating Systems
- Physical Resources

Andrew Chien, OptIPuter Systems Software Architect

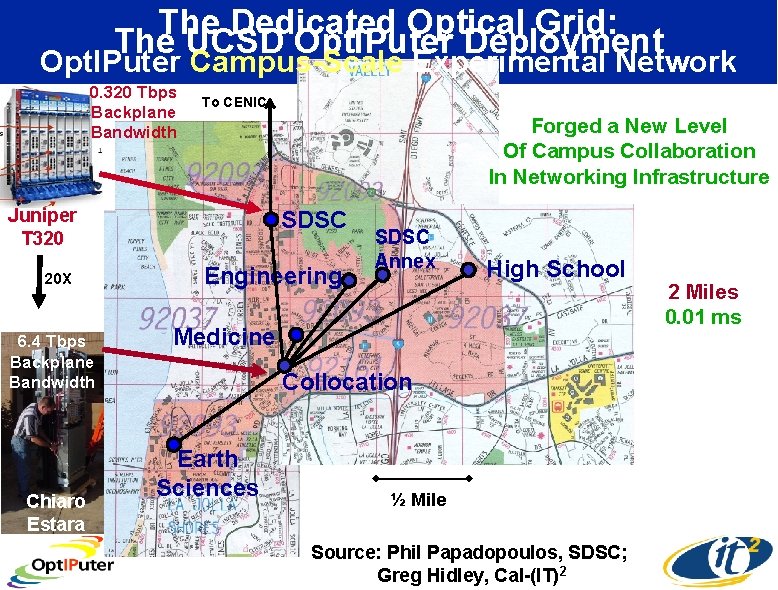

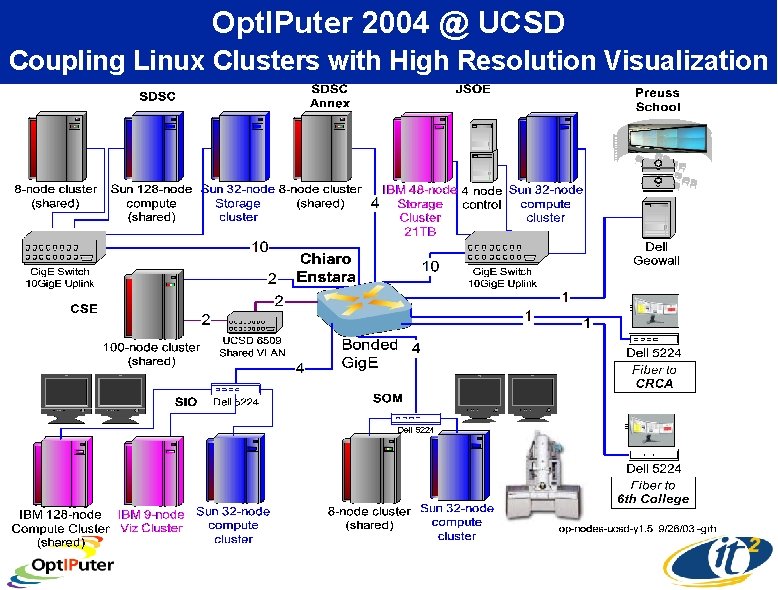

The Dedicated Optical Grid: The UCSD OptIPuter Deployment

OptIPuter Campus-Scale Experimental Network: Forged a New Level of Campus Collaboration in Networking Infrastructure

Campus map labels:

- Juniper T320: 0.320 Tbps Backplane Bandwidth; To CENIC
- Chiaro Estara: 6.4 Tbps Backplane Bandwidth (20X)
- Sites: SDSC, SDSC Annex, JSOE, Engineering, Medicine, Phys. Sci - SOM, Keck, Preuss High School, CRCA, 6th College, Collocation, Node M, Earth Sciences, SIO
- Scale: ½ Mile; 2 Miles, 0.01 ms

Source: Phil Papadopoulos, SDSC; Greg Hidley, Cal-(IT)2

OptIPuter 2004 @ UCSD: Coupling Linux Clusters with High Resolution Visualization

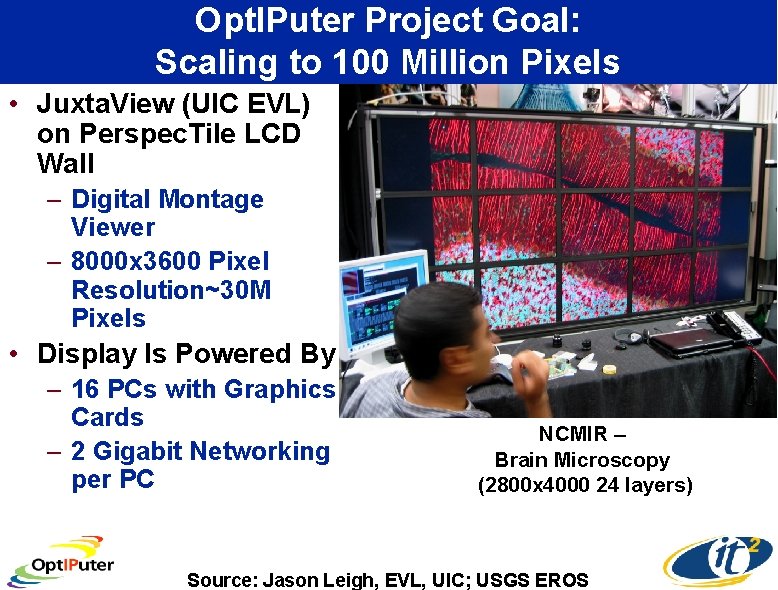

OptIPuter Project Goal: Scaling to 100 Million Pixels

- JuxtaView (UIC EVL) on PerspecTile LCD Wall
  - Digital Montage Viewer
  - 8000 x 3600 Pixel Resolution, ~30M Pixels
- Display Is Powered By
  - 16 PCs with Graphics Cards
  - 2 Gigabit Networking per PC

NCMIR – Brain Microscopy (2800 x 4000, 24 layers)

Source: Jason Leigh, EVL, UIC; USGS EROS
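The display figures above can be checked with a little arithmetic. The sketch below is a rough back-of-the-envelope calculation, not a description of how JuxtaView works: it assumes pixels are split evenly across the 16 PCs and that a frame is uncompressed 24-bit RGB (`BYTES_PER_PIXEL` is an assumption, as are all function names).

```python
# Sketch: tiled-wall arithmetic from the slide's figures.
# Assumptions (not from the slide): even pixel split across PCs,
# uncompressed 24-bit RGB frames, full use of each PC's 2 Gb/s link.

WALL_WIDTH_PX = 8000
WALL_HEIGHT_PX = 3600
NUM_PCS = 16
GBPS_PER_PC = 2
BYTES_PER_PIXEL = 3  # assumption: 24-bit RGB
GOAL_PIXELS = 100_000_000


def wall_pixels() -> int:
    """Total pixels on the wall."""
    return WALL_WIDTH_PX * WALL_HEIGHT_PX


def pixels_per_pc() -> int:
    """Pixels each PC drives under an even split."""
    return wall_pixels() // NUM_PCS


def aggregate_gbps() -> int:
    """Combined network capacity into the wall."""
    return NUM_PCS * GBPS_PER_PC


def seconds_per_full_frame() -> float:
    """Time to deliver one uncompressed full-wall frame over all links."""
    frame_bits = wall_pixels() * BYTES_PER_PIXEL * 8
    return frame_bits / (aggregate_gbps() * 1e9)


if __name__ == "__main__":
    print(f"Wall pixels: {wall_pixels():,}")
    print(f"Pixels per PC: {pixels_per_pc():,}")
    print(f"Aggregate network: {aggregate_gbps()} Gb/s")
    print(f"Full-frame transfer: {seconds_per_full_frame() * 1000:.1f} ms")
    print(f"Scale-up needed for goal: {GOAL_PIXELS / wall_pixels():.2f}x")
```

The product 8000 x 3600 is 28.8 million pixels, which the slide rounds to about 30M; reaching the 100-million-pixel goal would take roughly 3.5 times as many pixels.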

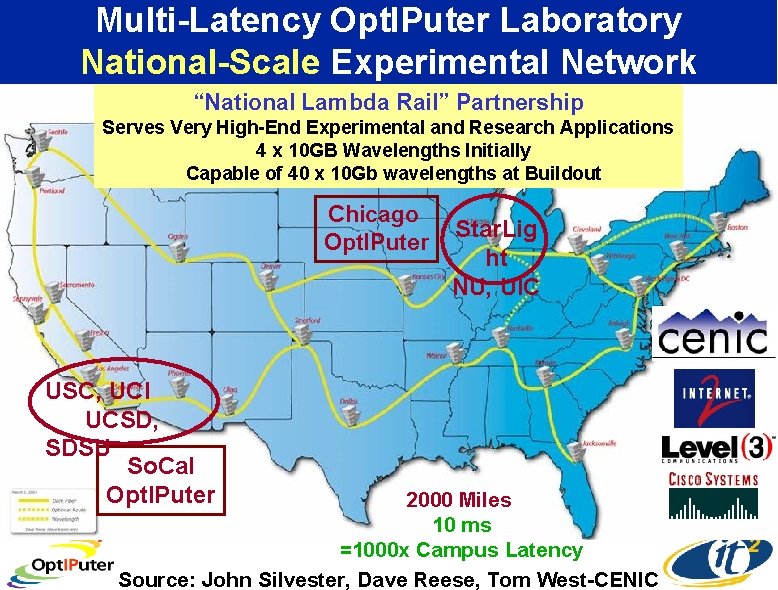

Multi-Latency OptIPuter Laboratory: National-Scale Experimental Network

“National Lambda Rail” Partnership Serves Very High-End Experimental and Research Applications

- 4 x 10 Gb Wavelengths Initially
- Capable of 40 x 10 Gb Wavelengths at Buildout

Map labels:

- Chicago OptIPuter: StarLight, NU, UIC
- SoCal OptIPuter: USC, UCI, UCSD, SDSU
- 2000 Miles, 10 ms = 1000x Campus Latency

Source: John Silvester, Dave Reese, Tom West, CENIC
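The latency figures can be sanity-checked from distance alone. The sketch below computes one-way propagation delay for the 2-mile campus span and the 2000-mile national span. The quoted 0.01 ms and 10 ms are close to what the vacuum speed of light gives; light in fiber is slower, and the refractive index of 1.47 used here is an assumed typical value, not a figure from the slides.

```python
# Sketch: one-way propagation delay over a given distance.
# Assumption: FIBER_REFRACTIVE_INDEX is a typical value for silica fiber,
# not a number taken from the slides.

METERS_PER_MILE = 1609.344
C_VACUUM_M_PER_S = 299_792_458.0
FIBER_REFRACTIVE_INDEX = 1.47


def one_way_delay_ms(miles: float, refractive_index: float = 1.0) -> float:
    """Propagation delay in milliseconds; index 1.0 means vacuum."""
    speed = C_VACUUM_M_PER_S / refractive_index
    return miles * METERS_PER_MILE / speed * 1000.0


if __name__ == "__main__":
    for label, miles in (("Campus", 2), ("National", 2000)):
        vacuum = one_way_delay_ms(miles)
        fiber = one_way_delay_ms(miles, FIBER_REFRACTIVE_INDEX)
        print(f"{label} ({miles} miles): {vacuum:.4f} ms vacuum, {fiber:.4f} ms fiber")
```

Whichever speed is assumed, the ratio between the two spans is exactly the ratio of distances, 1000x, which is the point of the "multi-latency" label.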

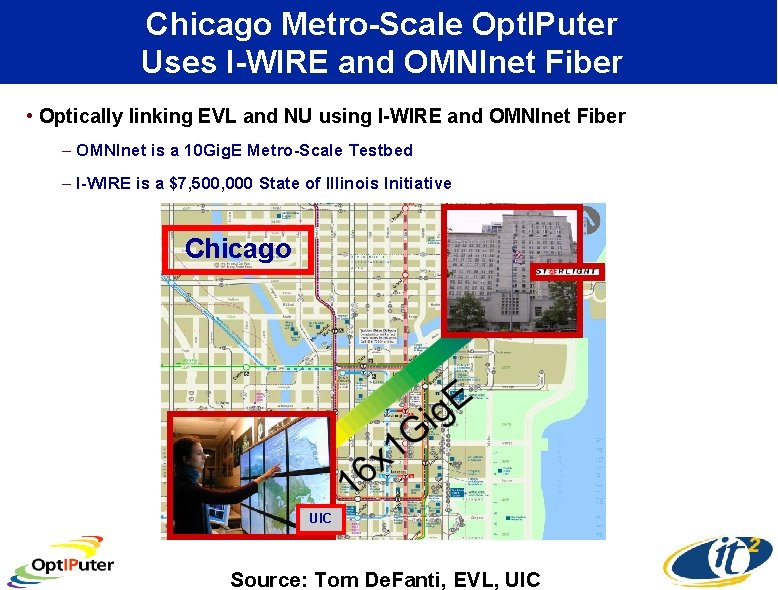

Chicago Metro-Scale OptIPuter Uses I-WIRE and OMNInet Fiber

- Optically linking EVL and NU using I-WIRE and OMNInet Fiber
  - OMNInet is a 10 GigE Metro-Scale Testbed
  - I-WIRE is a $7,500,000 State of Illinois Initiative

Map labels: Chicago, UIC

Source: Tom DeFanti, EVL, UIC

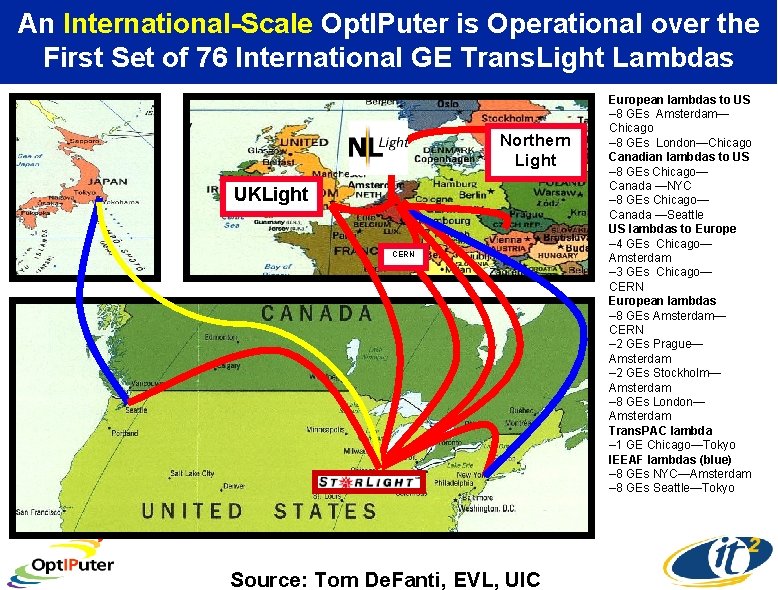

An International-Scale OptIPuter is Operational over the First Set of 76 International GE TransLight Lambdas

- European lambdas to US
  - 8 GEs Amsterdam-Chicago
  - 8 GEs London-Chicago
- Canadian lambdas to US
  - 8 GEs Chicago-Canada-NYC
  - 8 GEs Chicago-Canada-Seattle
- US lambdas to Europe
  - 4 GEs Chicago-Amsterdam
  - 3 GEs Chicago-CERN
- European lambdas
  - 8 GEs Amsterdam-CERN
  - 2 GEs Prague-Amsterdam
  - 2 GEs Stockholm-Amsterdam
  - 8 GEs London-Amsterdam
- TransPAC lambda
  - 1 GE Chicago-Tokyo
- IEEAF lambdas (blue)
  - 8 GEs NYC-Amsterdam
  - 8 GEs Seattle-Tokyo

Map labels: Northern Light, UKLight, CERN

Source: Tom DeFanti, EVL, UIC
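As a quick consistency check, the per-route GE counts listed on this slide can be tallied against the headline figure of 76. The dictionary below simply transcribes the slide; the variable and function names are illustrative.

```python
# Sketch: tally the GE lambdas listed on the slide, grouped as on the slide.

TRANSLIGHT_LAMBDAS = {
    "European lambdas to US": {"Amsterdam-Chicago": 8, "London-Chicago": 8},
    "Canadian lambdas to US": {
        "Chicago-Canada-NYC": 8,
        "Chicago-Canada-Seattle": 8,
    },
    "US lambdas to Europe": {"Chicago-Amsterdam": 4, "Chicago-CERN": 3},
    "European lambdas": {
        "Amsterdam-CERN": 8,
        "Prague-Amsterdam": 2,
        "Stockholm-Amsterdam": 2,
        "London-Amsterdam": 8,
    },
    "TransPAC lambda": {"Chicago-Tokyo": 1},
    "IEEAF lambdas": {"NYC-Amsterdam": 8, "Seattle-Tokyo": 8},
}


def group_totals(groups: dict) -> dict:
    """Sum the GE count within each group."""
    return {name: sum(routes.values()) for name, routes in groups.items()}


def total_ge(groups: dict) -> int:
    """Sum the GE count across all groups."""
    return sum(group_totals(groups).values())


if __name__ == "__main__":
    for name, count in group_totals(TRANSLIGHT_LAMBDAS).items():
        print(f"{name}: {count} GEs")
    print(f"Total: {total_ge(TRANSLIGHT_LAMBDAS)} GEs")
```

The groups come to 16, 16, 7, 20, 1, and 16 GEs respectively, which sums to the 76 quoted in the slide title.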

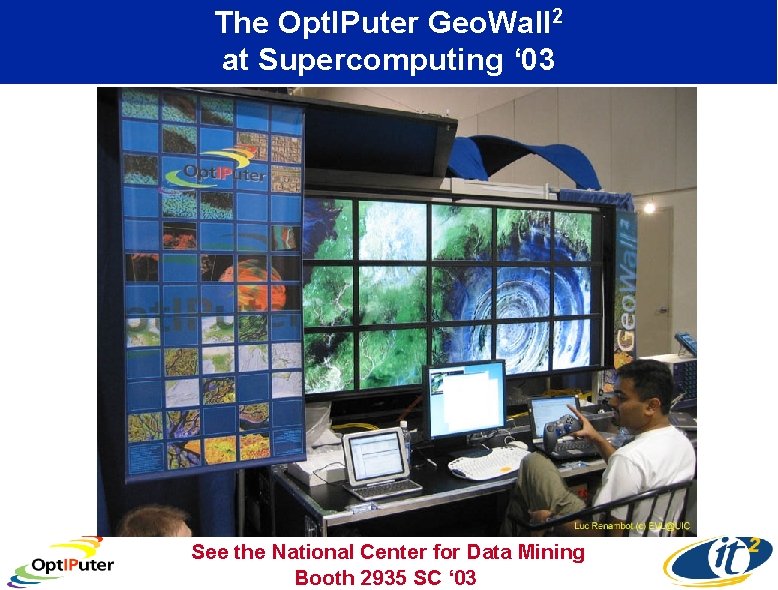

The OptIPuter GeoWall2 at Supercomputing ’03

See the National Center for Data Mining, Booth 2935, SC ’03
