WholeBook Recognition using MutualEntropyDriven Model Adaptation Pingping Xiu

Whole-Book Recognition using Mutual-Entropy-Driven Model Adaptation Pingping Xiu* DR&R XV January 29, 2008 Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab Henry S. Baird

Whole-Book Recognition Motivation • Millions of books are being scanned cover-to-cover with OCR results available on the web. • Many documents are strikingly isogenous, containing only a small fraction of all possible languages, words, typefaces, image qualities, layout styles, etc. • Research in the last decade or so shows that isogeny can be exploited to improve recognition fully automatically. ( Nagy & Baird (1994), Hong (1995), Sarkar (2000), Veeramachaneni (2002), Sarkar, Baird & Zhang, (2003) ) Given: a book’s images, an initial buggy OCR transcription, & a dictionary that might be incomplete … can we: improve recognition accuracy substantially & fully automatically? Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

Fully-Automatic Model Adaptation Two models: initialized from the input iconic: a character-image classifier linguistic: a dictionary (list of valid words) Look for disagreements between the two models: measure mutual entropy between two probability distributions: • a posteriori probabilities of character classes • a posteriori probabilities of word classes Automatically correct both models to reduce disagreement: • We illustrate this approach on a small test set of real bookimage data. • We show that we can drive improvements in both models, and can lower recognition error rate. Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

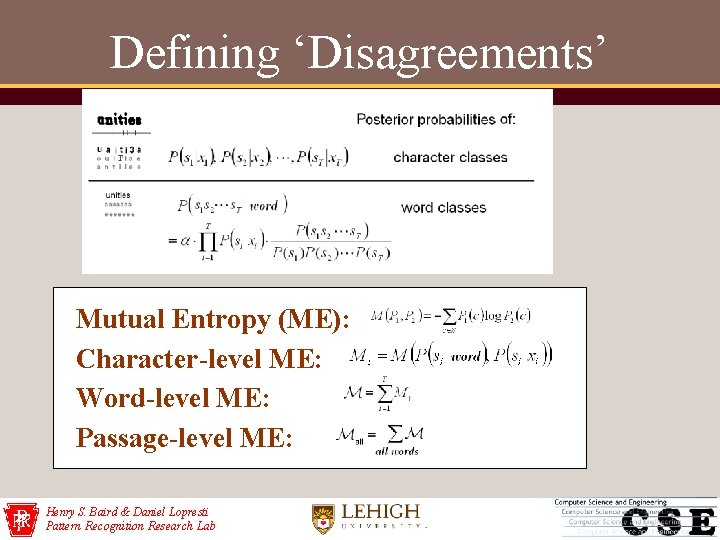

Defining ‘Disagreements’ Mutual Entropy (ME): Character-level ME: Word-level ME: Passage-level ME: Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

Model-Adaptation Policies How to correct the iconic model: characters with largest character-level disagreement first How to correct the linguistic model: words with largest word-level disagreement first Minimize this objective function: drive passage-level mutual entropy down Test experimentally: • can this policy achieve unsupervised improvement? • does it work better, the longer the passage length? Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

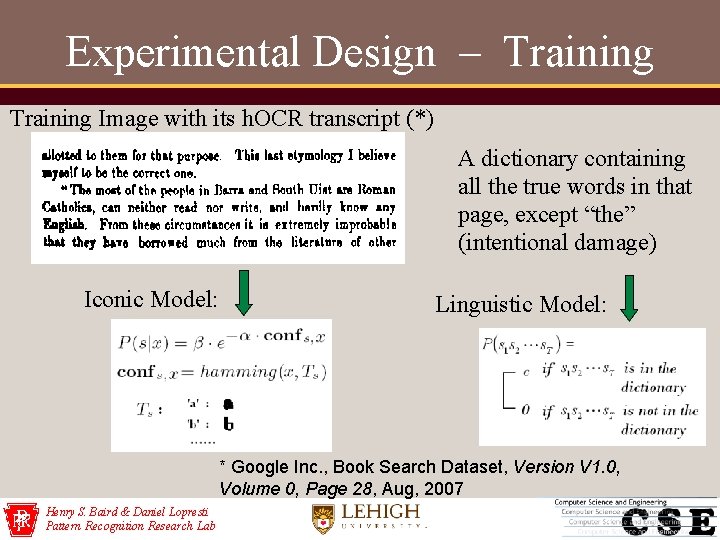

Experimental Design – Training Image with its h. OCR transcript (*) A dictionary containing all the true words in that page, except “the” (intentional damage) Iconic Model: Linguistic Model: * Google Inc. , Book Search Dataset, Version V 1. 0, Volume 0, Page 28, Aug, 2007 Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

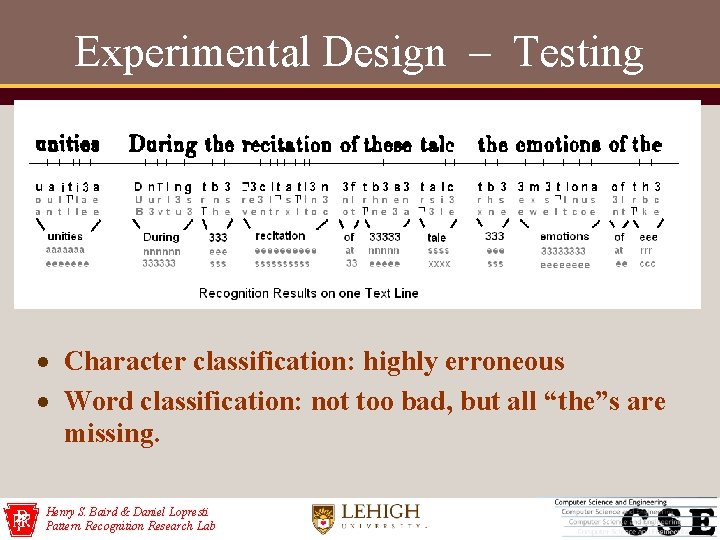

Experimental Design – Testing Character classification: highly erroneous Word classification: not too bad, but all “the”s are missing. Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

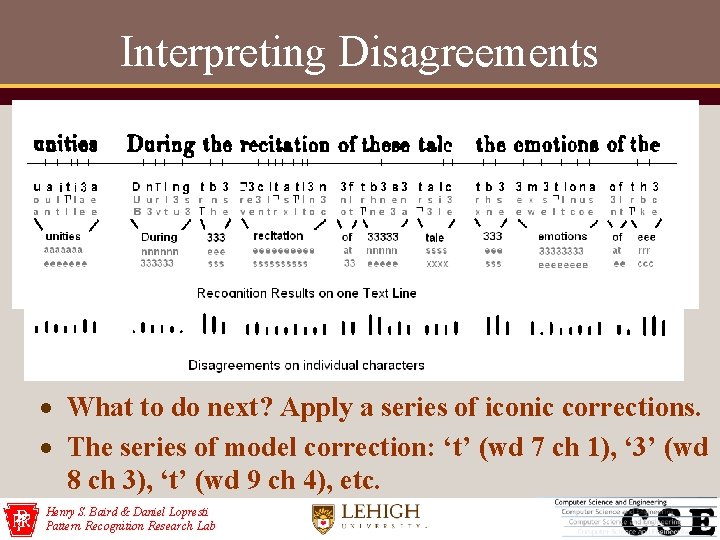

Interpreting Disagreements What to do next? Apply a series of iconic corrections. The series of model correction: ‘t’ (wd 7 ch 1), ‘ 3’ (wd 8 ch 3), ‘t’ (wd 9 ch 4), etc. Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

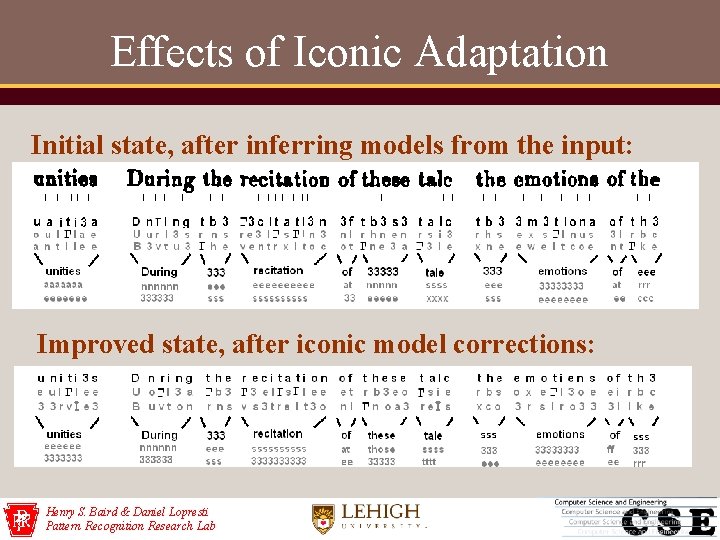

Effects of Iconic Adaptation Initial state, after inferring models from the input: Improved state, after iconic model corrections: Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

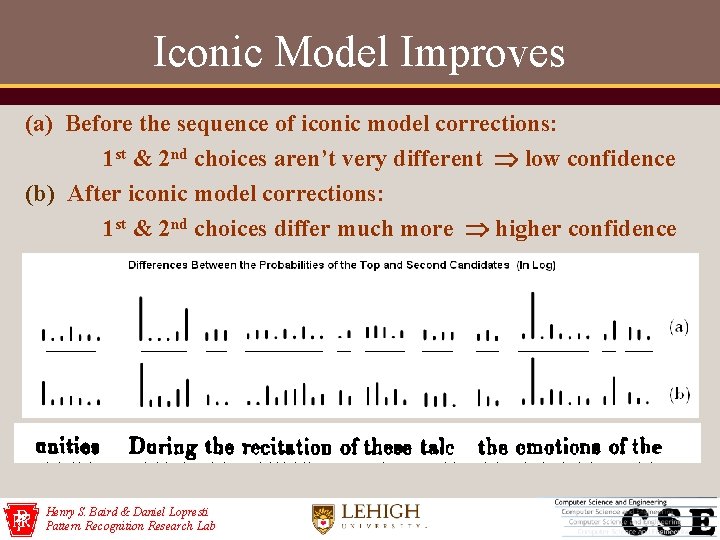

Iconic Model Improves (a) Before the sequence of iconic model corrections: 1 st & 2 nd choices aren’t very different low confidence (b) After iconic model corrections: 1 st & 2 nd choices differ much more higher confidence Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

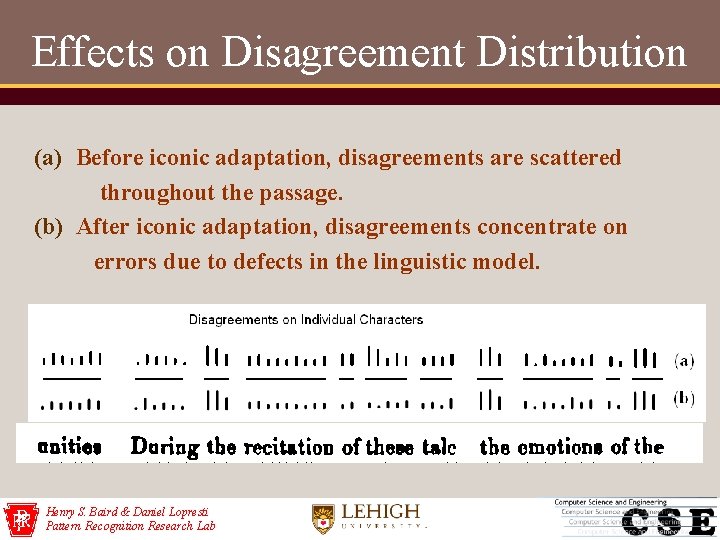

Effects on Disagreement Distribution (a) Before iconic adaptation, disagreements are scattered throughout the passage. (b) After iconic adaptation, disagreements concentrate on errors due to defects in the linguistic model. Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

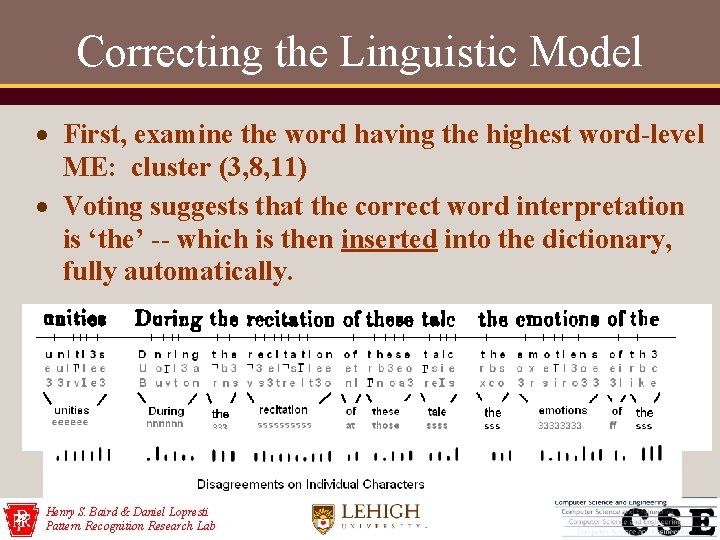

Correcting the Linguistic Model First, examine the word having the highest word-level ME: cluster (3, 8, 11) Voting suggests that the correct word interpretation is ‘the’ -- which is then inserted into the dictionary, fully automatically. Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

Algorithm & Results Summary Unsupervised (fully automatic). Greedy: multiple cycles, each cycle with two stages: iconic changes, then linguistic changes. Character-level ME suggests priorities for iconic model changes. Word-level ME suggests priorities for linguistic model changes. Every model change must reduce passage-level ME. Stopping rule: we’ve experimented with an adaptive threshold heuristic. In the real-data example in the paper, recognition error rate was driven to zero---of course, this is a very short passage. Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

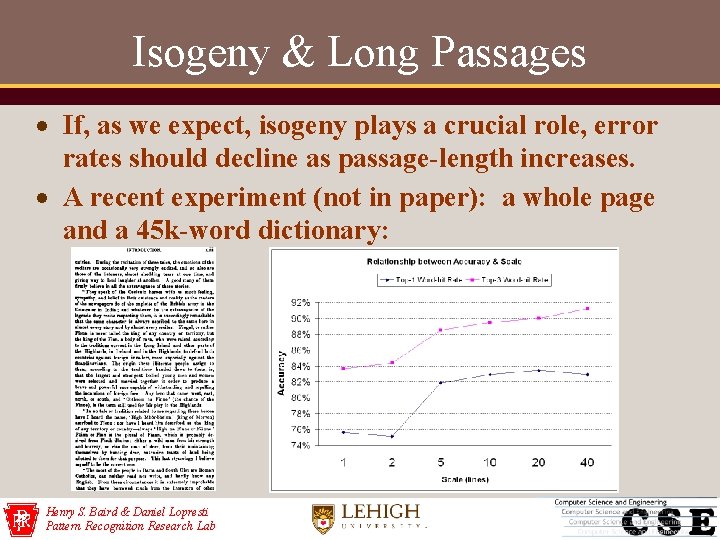

Isogeny & Long Passages If, as we expect, isogeny plays a crucial role, error rates should decline as passage-length increases. A recent experiment (not in paper): a whole page and a 45 k-word dictionary: Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

Discussion Mutual Entropy is only one information-theoretic framework for unsupervised model improvement Even using ME, other interesting greedy & branch-& -bound heuristics come to mind Minimizing passage-level ME may not necessarily minimize recognition error (experts disagree) Proofs of global optimality seem hard: but we’re investigating…. Scaling up to, say, 100 -page passages is urgently needed Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

Thanks! Pingping Xiu pix 206@lehigh. edu Henry Baird baird@cse. lehigh. edu Henry S. Baird & Daniel Lopresti Pattern Recognition Research Lab

- Slides: 16