- Slides: 35

Who is the “Father of Deep Learning”?

Dr. Charles C. Tappert, Seidenberg School of CSIS, Pace University

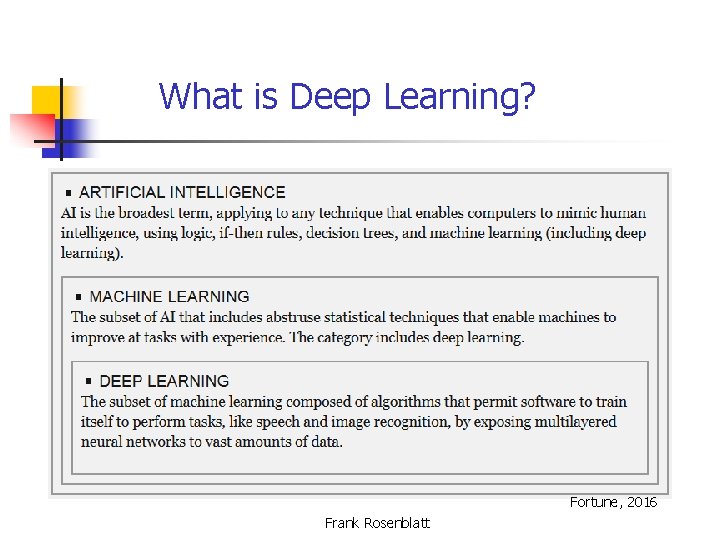

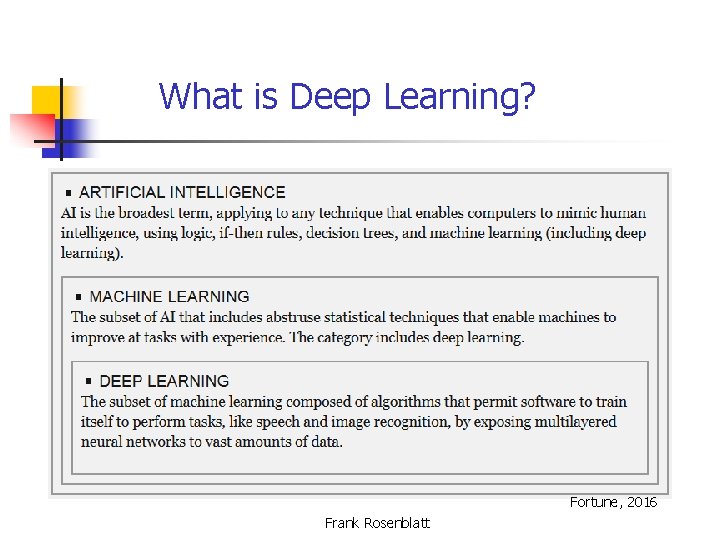

What is Deep Learning? Fortune, 2016 Frank Rosenblatt

Why is Deep Learning Important?

- Causing a revolution in Artificial Intelligence
- Electrifying the computing industry
- Transforming corporate America
- Why? Because over the last five years we have experienced quantum leaps in the quality of many everyday technologies (Fortune, 2016)

Why is Deep Learning Important?

- Major advances in image recognition
  - Search and automatically organize collections of photos: Apple, Amazon, Microsoft, Facebook
- Speech technologies work much better
  - Speech recognition: Apple’s Siri, Amazon’s Alexa, Microsoft’s Cortana, and Baidu’s speech interfaces in China
  - Translation of spoken sentences: Google Translate
- Deep learning is also improving medical applications, robotics, autonomous drones, self-driving cars, etc.

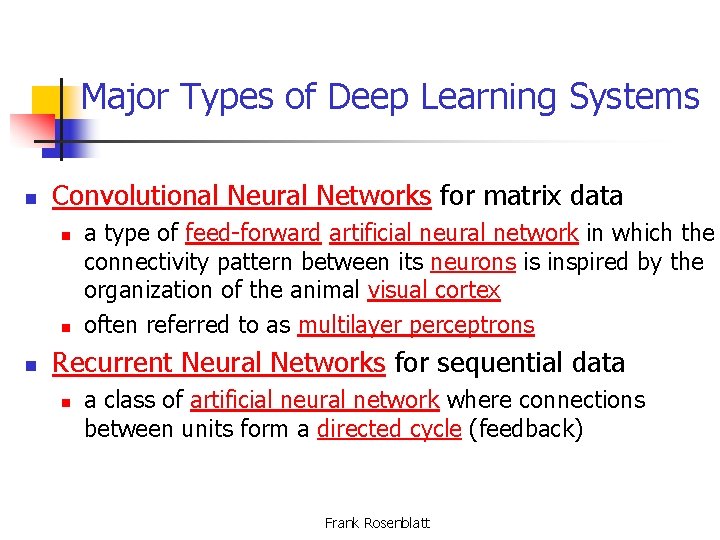

Major Types of Deep Learning Systems

- Convolutional Neural Networks (CNNs) for matrix data
  - A type of feed-forward artificial neural network in which the connectivity pattern between neurons is inspired by the organization of the animal visual cortex
  - Often described as a variation of multilayer perceptrons
- Recurrent Neural Networks (RNNs) for sequential data
  - A class of artificial neural network in which connections between units form a directed cycle (feedback)

Google Search on “Father of Deep Learning”

- Results of a Google search on “Father of Deep Learning”:
  - Geoff Hinton, for restricted Boltzmann machines stacked as deep-belief networks
  - Yann LeCun, for convolutional neural networks
  - Yoshua Bengio, whose team is behind Theano (and whose research contributions are many)
  - Andrew Ng, who helped build Google Brain
  - Jürgen Schmidhuber, who developed recurrent nets and Long Short-Term Memory (LSTM), a recurrent neural network (RNN) architecture
  - Frank Rosenblatt, for his creation of perceptrons
- This presentation builds a case for naming Frank Rosenblatt as the “Father of Deep Learning”

Dr. Frank Rosenblatt, 1928-1971: The “Father of Deep Learning”

- Ph.D. in Experimental Psychology, Cornell, 1956
- Developed neural networks called perceptrons
  - A probabilistic model for information storage and organization in the brain
  - Key properties:
    - Association or learning
    - Generalization to new patterns
    - Distributed memory
  - Biologically plausible brain model
- Cornell Aeronautical Lab (1957-1959), Cornell (1960-1971)

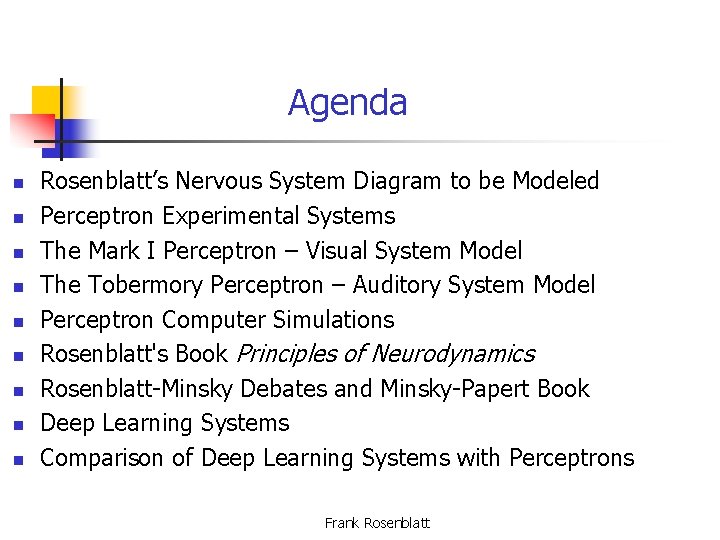

Agenda

- Rosenblatt’s Nervous System Diagram to be Modeled
- Perceptron Experimental Systems
  - The Mark I Perceptron: Visual System Model
  - The Tobermory Perceptron: Auditory System Model
  - Perceptron Computer Simulations
- Rosenblatt's Book Principles of Neurodynamics
- Rosenblatt-Minsky Debates and Minsky-Papert Book
- Deep Learning Systems
- Comparison of Deep Learning Systems with Perceptrons

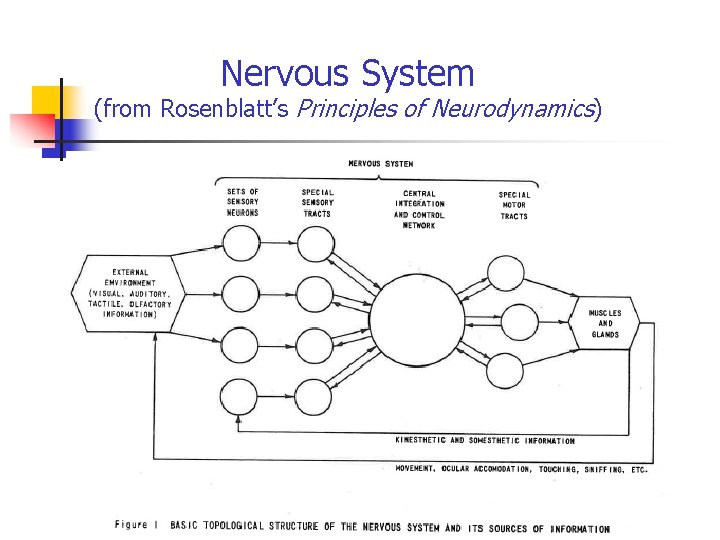

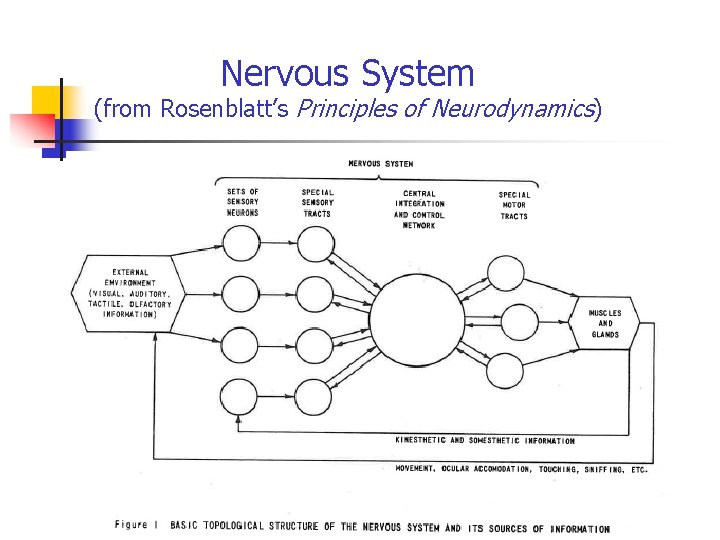

Nervous System (from Rosenblatt’s Principles of Neurodynamics)

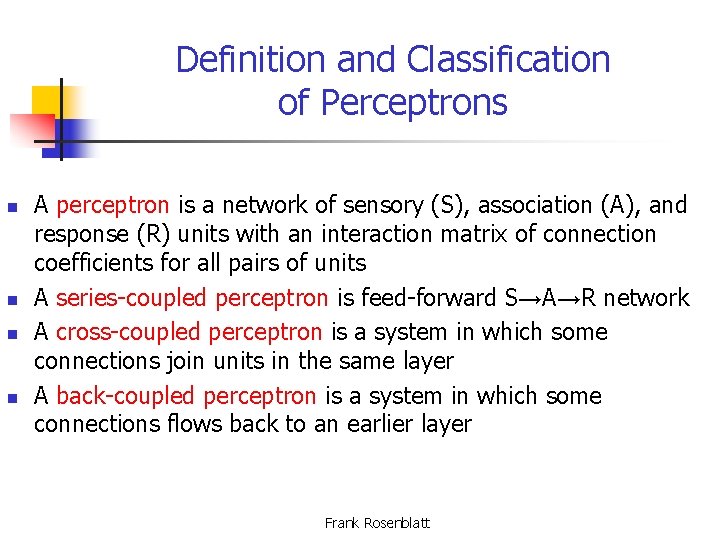

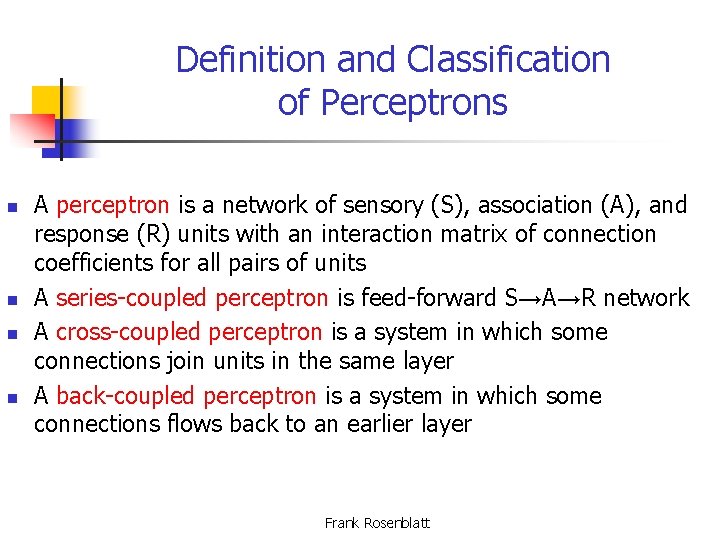

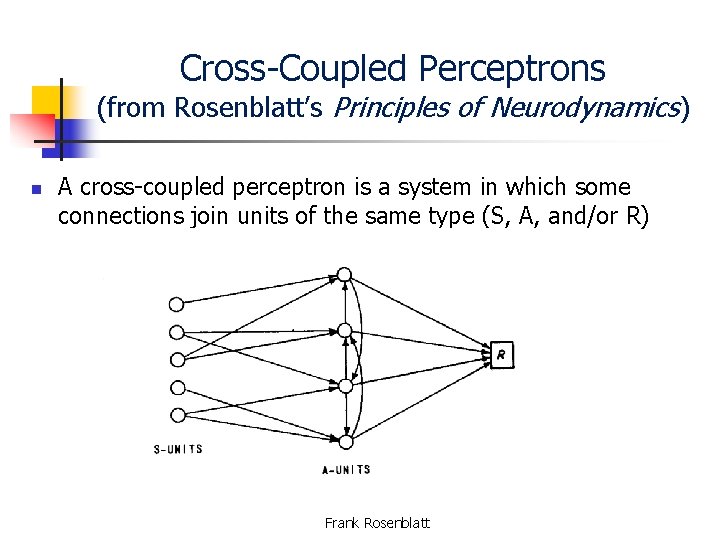

Definition and Classification of Perceptrons

- A perceptron is a network of sensory (S), association (A), and response (R) units with an interaction matrix of connection coefficients for all pairs of units
- A series-coupled perceptron is a feed-forward S→A→R network
- A cross-coupled perceptron is a system in which some connections join units in the same layer
- A back-coupled perceptron is a system in which some connections flow back to an earlier layer
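
To make the three coupling categories concrete, here is a minimal Python sketch that inspects an interaction matrix and reports which categories apply. The encoding is an assumption for illustration, not Rosenblatt's notation: each unit gets a hypothetical integer layer index (0 at the sensory end, increasing toward the response end), and `coeff[i][j]` is the connection coefficient from unit `i` to unit `j`, with 0 meaning no connection.

```python
def classify_perceptron(layers, coeff):
    """Report the coupling types present in a perceptron.

    layers[i]   -- hypothetical layer index of unit i (0 = sensory end)
    coeff[i][j] -- connection coefficient from unit i to unit j (0 = no connection)
    """
    kinds = set()
    n = len(layers)
    for i in range(n):
        for j in range(n):
            if coeff[i][j] == 0:
                continue
            if layers[j] == layers[i]:
                kinds.add("cross-coupled")   # joins units in the same layer
            elif layers[j] < layers[i]:
                kinds.add("back-coupled")    # flows back toward the sensory end
    # With neither kind present, every connection runs forward: S -> A -> R
    return kinds or {"series-coupled"}


# Units: S0, S1 (sensory), A0, A1 (association), R0 (response)
layers = [0, 0, 1, 1, 2]
series = [[0] * 5 for _ in range(5)]
for s in (0, 1):
    for a in (2, 3):
        series[s][a] = 1        # S -> A connections
for a in (2, 3):
    series[a][4] = 1            # A -> R connections

cross = [row[:] for row in series]
cross[2][3] = 1                 # A0 -> A1: same layer

back = [row[:] for row in series]
back[4][2] = 1                  # R0 -> A0: toward the sensory end

print(classify_perceptron(layers, series))  # {'series-coupled'}
print(classify_perceptron(layers, cross))   # {'cross-coupled'}
print(classify_perceptron(layers, back))    # {'back-coupled'}
```

A network can be both cross-coupled and back-coupled, which is why the function returns a set rather than a single label.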

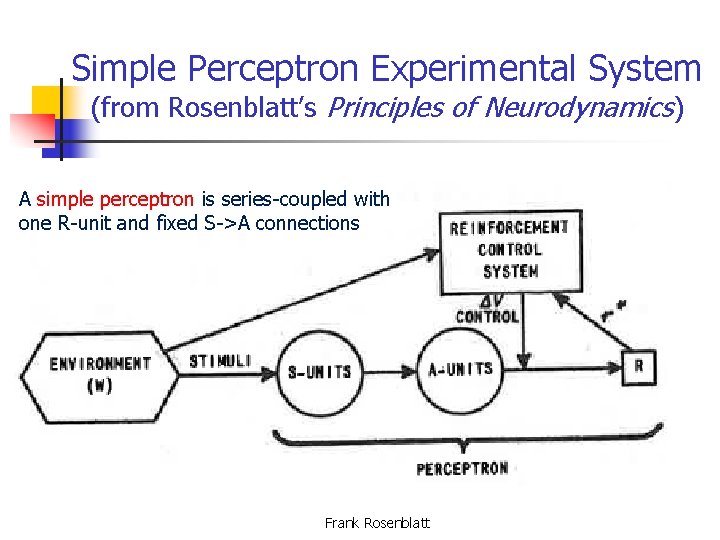

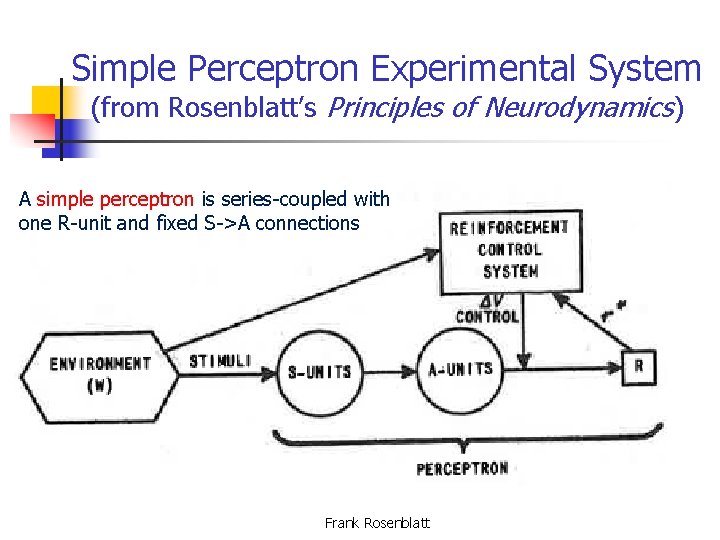

Simple Perceptron Experimental System (from Rosenblatt’s Principles of Neurodynamics)

- A simple perceptron is series-coupled, with one R-unit and fixed S→A connections

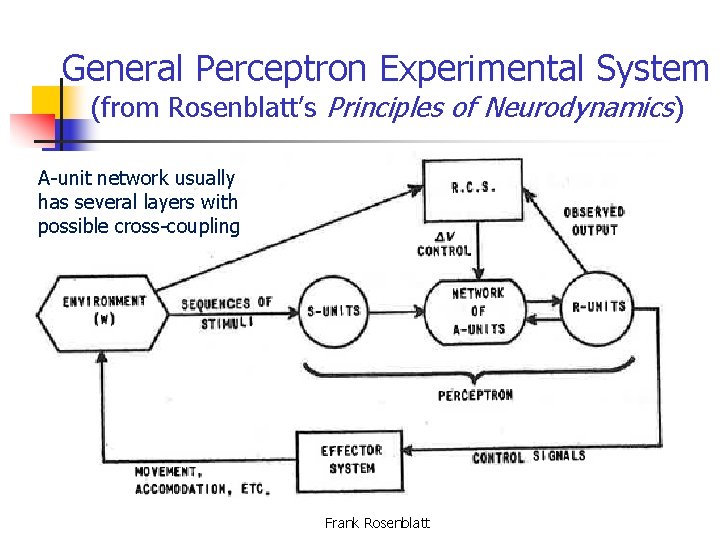

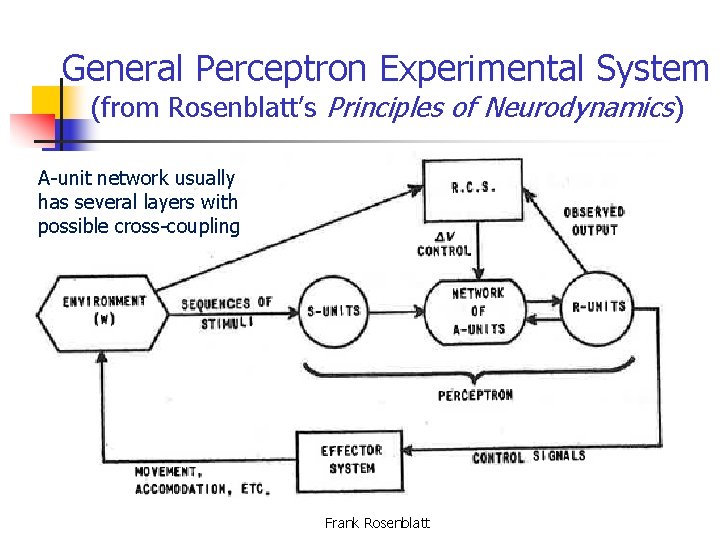

General Perceptron Experimental System (from Rosenblatt’s Principles of Neurodynamics)

- The A-unit network usually has several layers, with possible cross-coupling

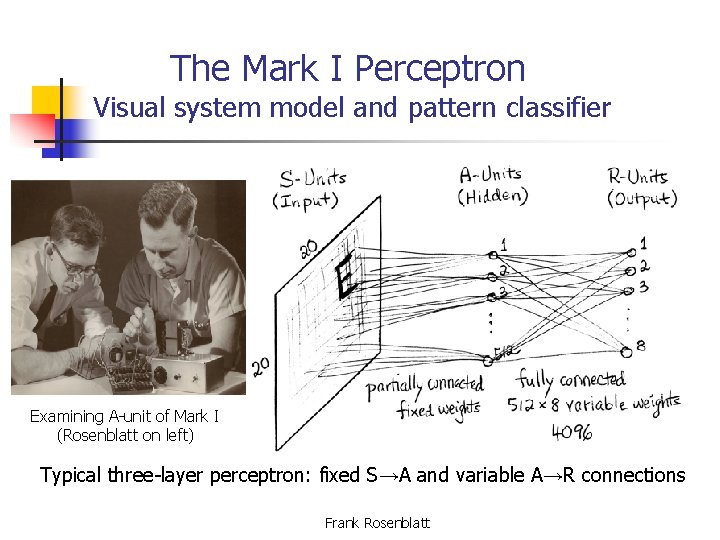

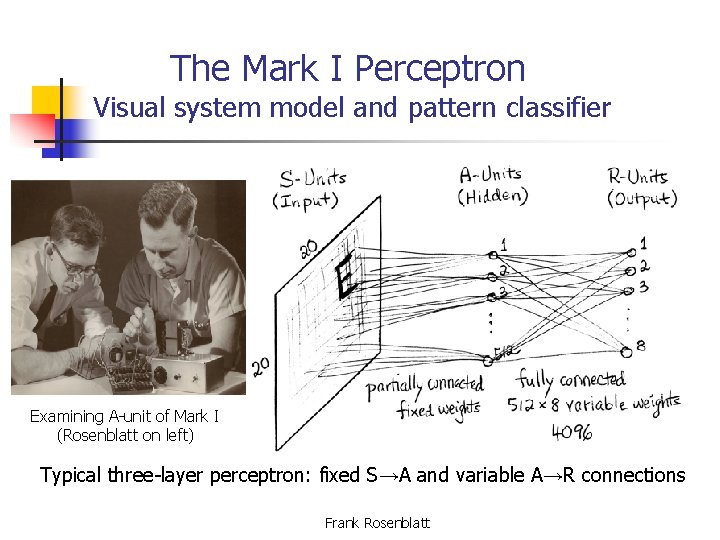

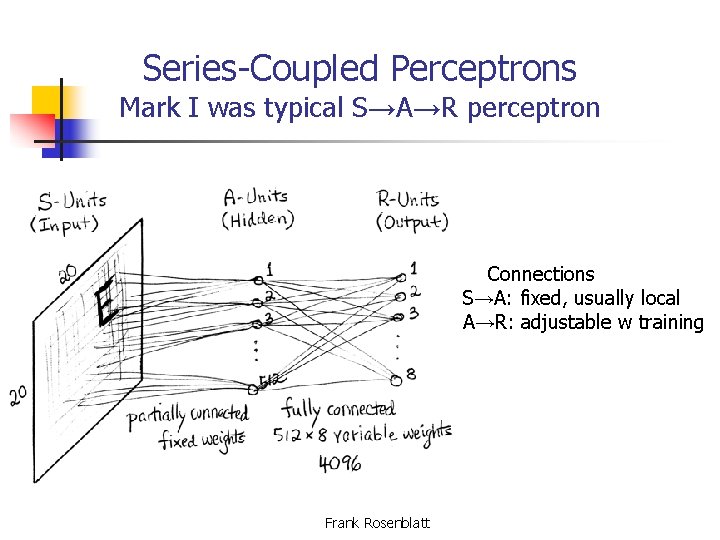

The Mark I Perceptron: Visual System Model and Pattern Classifier

- Examining an A-unit of the Mark I (Rosenblatt on left)
- Typical three-layer perceptron: fixed S→A and variable A→R connections

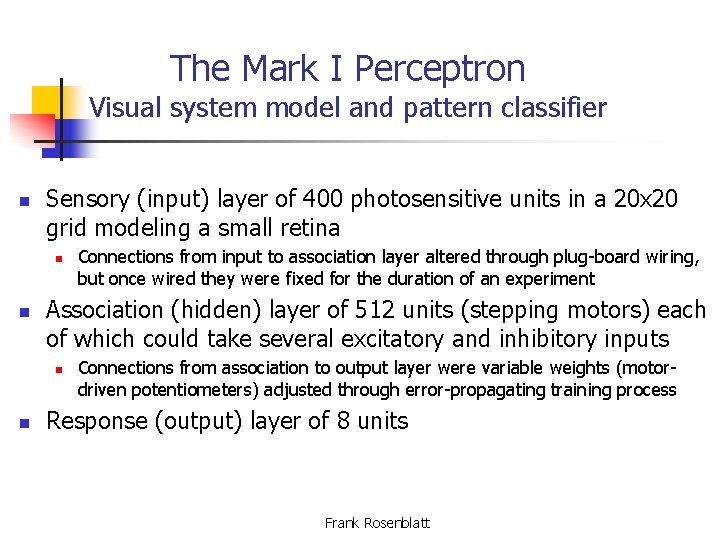

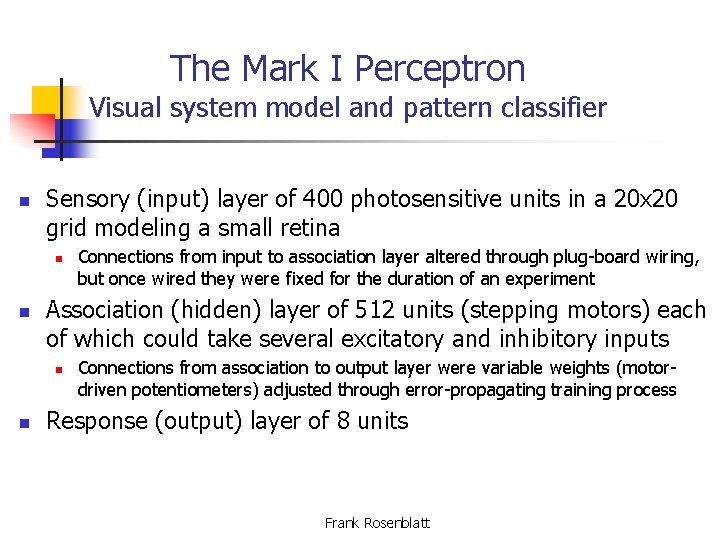

The Mark I Perceptron: Visual System Model and Pattern Classifier

- Sensory (input) layer of 400 photosensitive units in a 20 x 20 grid, modeling a small retina
- Association (hidden) layer of 512 units (stepping motors), each of which could take several excitatory and inhibitory inputs
  - Connections from the input to the association layer were altered through plugboard wiring, but once wired they were fixed for the duration of an experiment
  - Connections from the association to the output layer were variable weights (motor-driven potentiometers) adjusted through an error-propagating training process
- Response (output) layer of 8 units
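
A Python sketch of the Mark I's data flow, sized to the numbers above. Everything beyond those sizes is an assumption: random selection stands in for the plugboard wiring, each A-unit's fan-in (5 excitatory, 5 inhibitory) and threshold are hypothetical, and every A-unit is assumed to feed every R-unit, which gives 512 × 8 = 4096 adjustable weights.

```python
import random

RETINA = 20 * 20   # 400 S-units (photocells in a 20 x 20 grid)
N_A = 512          # A-units
N_R = 8            # R-units


def wire_plugboard(seed=0, n_exc=5, n_inh=5):
    """Fixed S->A wiring: each A-unit gets n_exc excitatory (+1) and n_inh
    inhibitory (-1) inputs from distinct, randomly chosen photocells.
    The fan-in numbers are hypothetical, not the Mark I's documented values."""
    rng = random.Random(seed)
    wiring = []
    for _ in range(N_A):
        cells = rng.sample(range(RETINA), n_exc + n_inh)
        wiring.append([(c, 1) for c in cells[:n_exc]] + [(c, -1) for c in cells[n_exc:]])
    return wiring


def a_layer(retina, wiring, theta=1):
    """An A-unit fires (1) when excitatory minus inhibitory input reaches theta (hypothetical)."""
    return [1 if sum(sign * retina[c] for c, sign in conns) >= theta else 0
            for conns in wiring]


def r_layer(a_out, weights):
    """Each R-unit responds (1) when its weighted sum of A-unit outputs is positive.
    `weights` plays the role of the adjustable, motor-driven potentiometers."""
    return [1 if sum(w * a for w, a in zip(row, a_out)) > 0 else 0 for row in weights]


wiring = wire_plugboard()                     # fixed for the whole "experiment"
weights = [[0.0] * N_A for _ in range(N_R)]   # adjustable A->R weights, untrained
image = [0] * RETINA
for row in range(5, 15):
    image[row * 20 + 10] = 1                  # a vertical bar on the retina
a_out = a_layer(image, wiring)
print(len(a_out), len(r_layer(a_out, weights)))  # 512 8
print(N_A * N_R)                                 # 4096
```

Only `weights` would change during training; `wiring` is built once, mirroring the fixed S→A and variable A→R split described above.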

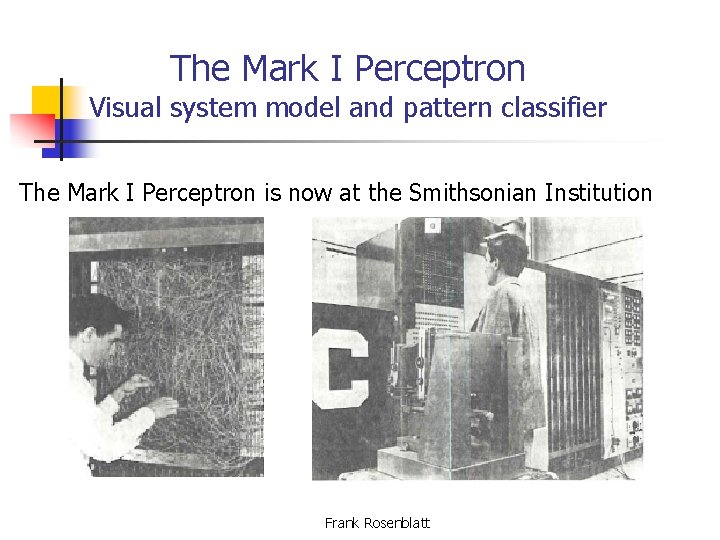

The Mark I Perceptron: Visual System Model and Pattern Classifier

- The Mark I Perceptron is now at the Smithsonian Institution

The Tobermory Perceptron: Auditory System Model and Pattern Classifier

- Named after Tobermory, the talking cat in a story by H. H. Munro (aka Saki)
- A large machine for its time
  - S-units: 45 band-pass filters and 80 difference detectors
  - A-units: 1,600 A1-units (20 time samples per detector) and 1,000 A2-units
  - R-units: 12, with 12,000 adaptive A2→R weights
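
The unit and weight counts above are consistent with a simple product structure. Assuming (the slide does not say so explicitly) one A1-unit per difference detector per time sample, and a connection from every A2-unit to every R-unit:

$$
\begin{aligned}
N_{A_1} &= 80 \text{ detectors} \times 20 \text{ time samples} = 1600\\
N_{A_2 \to R} &= 1000 \text{ A}_2\text{-units} \times 12 \text{ R-units} = 12{,}000 \text{ adaptive weights}
\end{aligned}
$$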

Perceptron Computer Simulations

- Hardware implementations made good demonstrations, but software simulations were far more flexible
- In the 1960s, these computer simulations required machine-language coding for speed and memory usage
- Simulation software package: the user could specify the number of layers, the number of units per layer, the type of connections between layers, etc.
- Computer time at Cornell and NYU

Rosenblatt's Book Principles of Neurodynamics, 1962

- Part I: historical review of brain-modeling approaches, physiological and psychological considerations, and basic definitions and concepts of the perceptron approach
- Part II: three-layer, series-coupled perceptrons: mathematical underpinnings and experimental results
- Part III: multi-layer and cross-coupled perceptrons
- Part IV: back-coupled perceptrons
- The book was used to teach an interdisciplinary course, "Theory of Brain Mechanisms", that drew students from Cornell's Engineering and Liberal Arts colleges

Series-Coupled Perceptrons

- A perceptron is a network of sensory (S), association (A), and response (R) units with an interaction matrix of connection coefficients for all pairs of units
- A series-coupled perceptron is a feed-forward S→A→R network
- A simple perceptron is series-coupled, with one R-unit connected to every A-unit and fixed S→A connections

Series-Coupled Perceptron Theorems

- Convergence Theorem: Given a simple perceptron, a stimulus world W, and any classification C(W) for which a solution exists, if all stimuli in W recur in finite time, then the error-correction procedure will always find a solution
  - Perceptrons were the first neural networks that could learn the weights!
- Solution Existence Theorem: The class of simple perceptrons for which a solution exists to every classification C(W) of possible environments W is non-empty
  - There exists an S→A→R feed-forward perceptron (given enough A-units) that can solve any classification problem!
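
The error-correction procedure behind the Convergence Theorem can be sketched in a few lines of Python. This is a minimal illustration, not Rosenblatt's exact formulation: it assumes the fixed S→A stage has already produced binary A-unit outputs, uses a single R-unit with ±1 responses and a trainable bias, and presents every stimulus once per pass so that all stimuli recur in finite time.

```python
def r_unit(weights, bias, a):
    """Single R-unit: +1 if the weighted sum of A-unit outputs exceeds 0, else -1."""
    s = bias + sum(w * x for w, x in zip(weights, a))
    return 1 if s > 0 else -1


def train_error_correction(stimuli, targets, max_passes=100):
    """Adjust A->R weights only when the R-unit responds incorrectly.

    Returns (weights, bias, passes_needed), with passes_needed = None if no
    error-free pass occurred within max_passes.
    """
    weights = [0.0] * len(stimuli[0])
    bias = 0.0
    for p in range(1, max_passes + 1):
        errors = 0
        for a, t in zip(stimuli, targets):
            if r_unit(weights, bias, a) != t:
                # Reinforce toward the correct response; only active A-units (x = 1) change
                weights = [w + t * x for w, x in zip(weights, a)]
                bias += t
                errors += 1
        if errors == 0:
            return weights, bias, p
    return weights, bias, None


# A-unit outputs for four stimuli; the R-unit should respond only to the last one
stimuli = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [-1, -1, -1, 1]
weights, bias, passes = train_error_correction(stimuli, targets)
print([r_unit(weights, bias, a) for a in stimuli])  # [-1, -1, -1, 1]
```

Because these targets are linearly separable in the A-unit outputs, the loop reaches an error-free pass; the theorem's condition that a solution exists is exactly what guarantees this.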

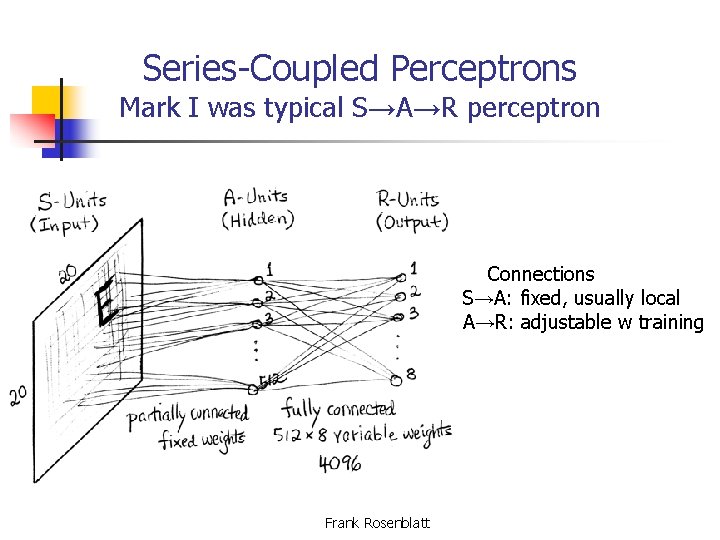

Series-Coupled Perceptrons

- The Mark I was a typical S→A→R perceptron
- Connections:
  - S→A: fixed, usually local
  - A→R: adjustable with training

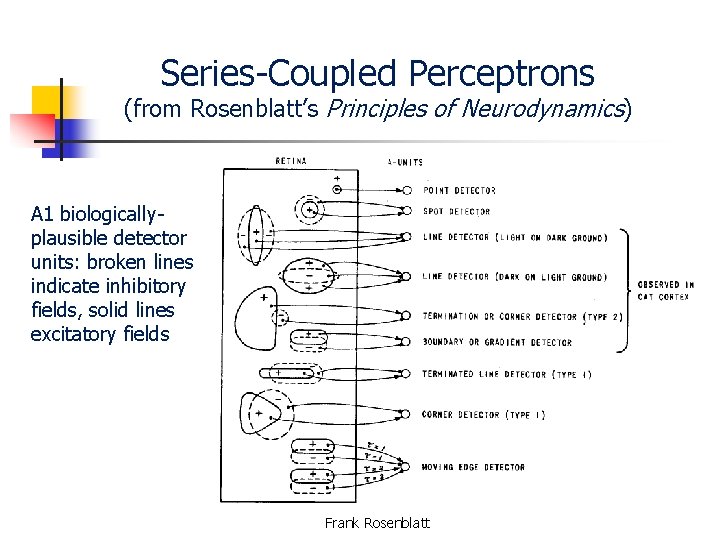

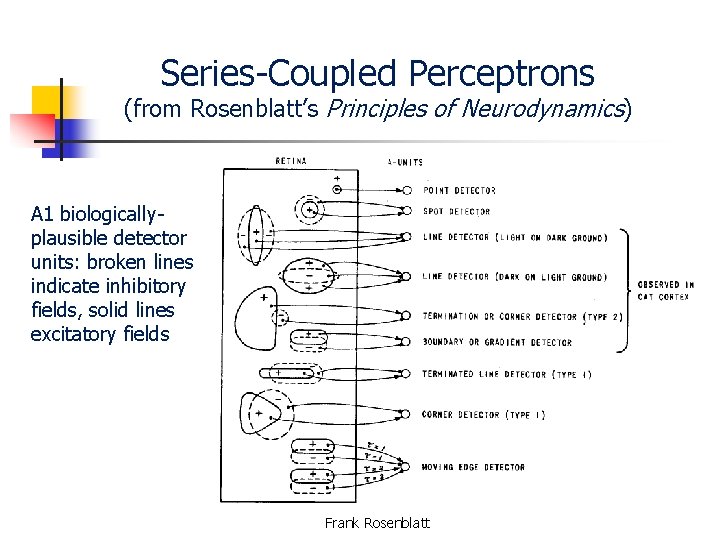

Series-Coupled Perceptrons (from Rosenblatt’s Principles of Neurodynamics)

- Biologically plausible A1 detector units: broken lines indicate inhibitory fields, solid lines excitatory fields

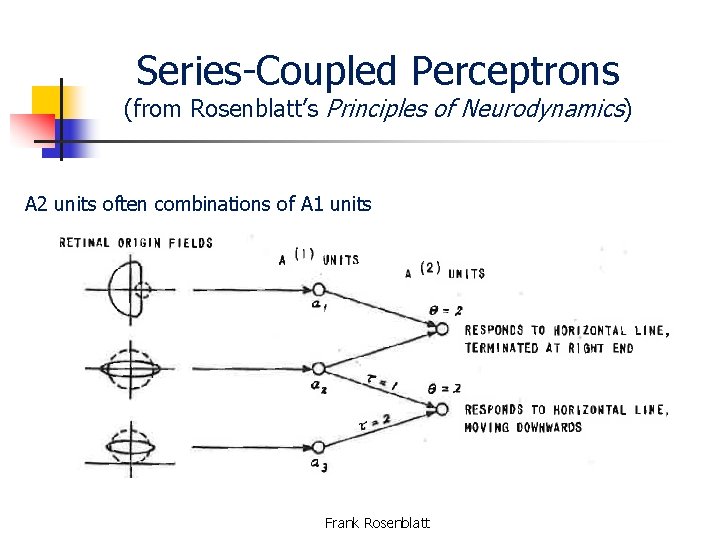

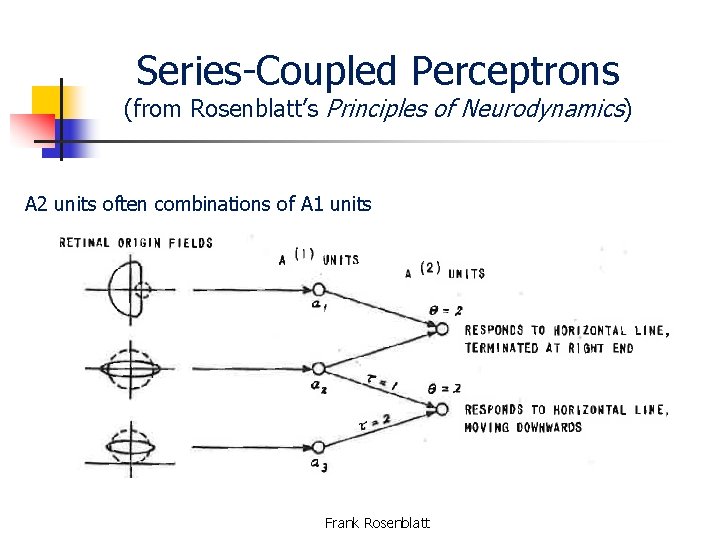

Series-Coupled Perceptrons (from Rosenblatt’s Principles of Neurodynamics)

- A2-units are often combinations of A1-units

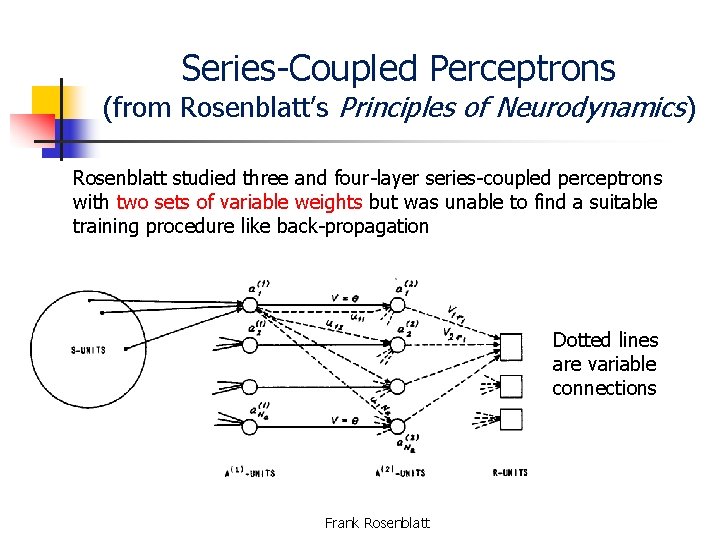

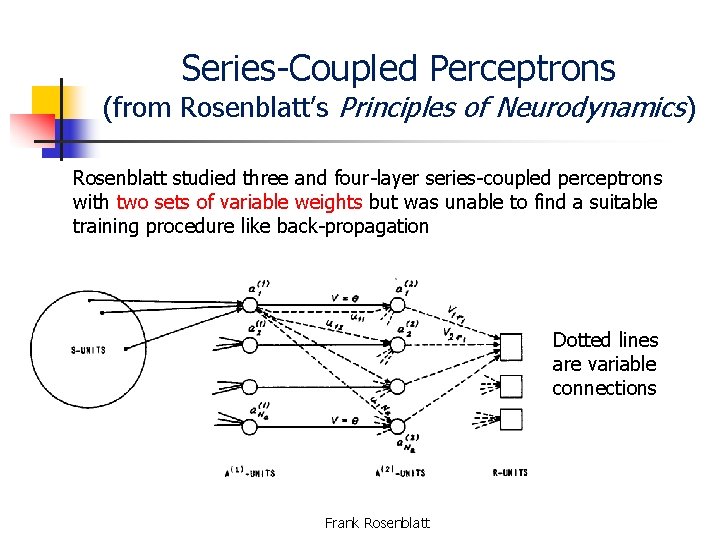

Series-Coupled Perceptrons (from Rosenblatt’s Principles of Neurodynamics)

- Rosenblatt studied three- and four-layer series-coupled perceptrons with two sets of variable weights, but was unable to find a suitable training procedure such as back-propagation
- Dotted lines are variable connections
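
For contrast, here is a minimal sketch of the back-propagation procedure Rosenblatt lacked, training both sets of variable weights (input→hidden and hidden→output) of a small three-layer network. It is an illustration under assumptions, not a reconstruction of any historical system: sigmoid units replace threshold units so the error is differentiable, the loss is squared error, and the network size (2-4-1), learning rate, seed, and XOR task are arbitrary choices. Back-propagation on XOR can occasionally stall in a poor local minimum, so the sketch reports the loss rather than promising a perfect fit.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    return W1, b1, W2, 0.0   # (input->hidden, hidden biases, hidden->output, output bias)


def forward(params, x):
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bj) for row, bj in zip(W1, b1)]
    return h, sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)


def loss(params, X, T):
    return 0.5 * sum((forward(params, x)[1] - t) ** 2 for x, t in zip(X, T))


def gradients(params, X, T):
    W1, b1, W2, b2 = params
    gW1 = [[0.0] * len(row) for row in W1]
    gb1 = [0.0] * len(b1)
    gW2 = [0.0] * len(W2)
    gb2 = 0.0
    for x, t in zip(X, T):
        h, y = forward(params, x)
        d_out = (y - t) * y * (1 - y)              # error signal at the output unit
        gb2 += d_out
        for j, hj in enumerate(h):
            gW2[j] += d_out * hj
            d_hid = d_out * W2[j] * hj * (1 - hj)  # error propagated back to hidden unit j
            gb1[j] += d_hid
            for i, xi in enumerate(x):
                gW1[j][i] += d_hid * xi
    return gW1, gb1, gW2, gb2


def train(params, X, T, lr=0.5, epochs=5000):
    W1, b1, W2, b2 = params
    for _ in range(epochs):
        gW1, gb1, gW2, gb2 = gradients((W1, b1, W2, b2), X, T)
        W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
        b1 = [bj - lr * g for bj, g in zip(b1, gb1)]
        W2 = [w - lr * g for w, g in zip(W2, gW2)]
        b2 -= lr * gb2
    return W1, b1, W2, b2


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
T = [0, 1, 1, 0]
start = init_params(2, 4, seed=0)
trained = train(start, X, T)
print(loss(start, X, T), loss(trained, X, T))
```

The key step is `d_hid`: the output error is passed backward through the hidden→output weights, which is what lets the first set of variable weights be trained at all.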

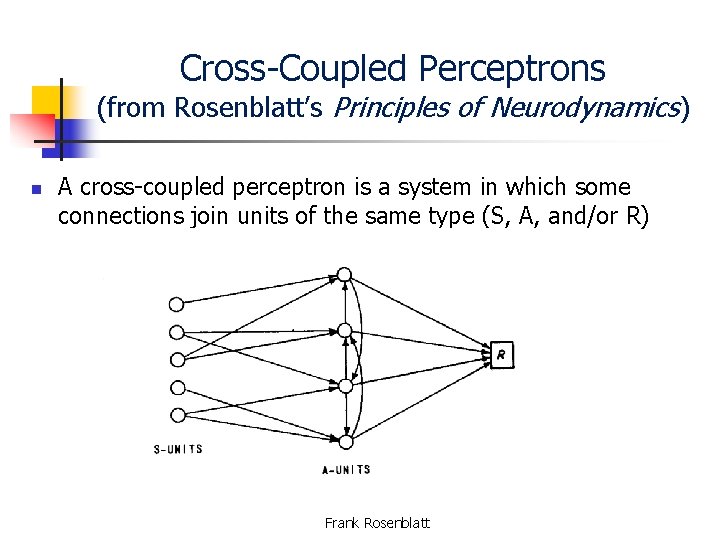

Cross-Coupled Perceptrons (from Rosenblatt’s Principles of Neurodynamics)

- A cross-coupled perceptron is a system in which some connections join units of the same type (S, A, and/or R)

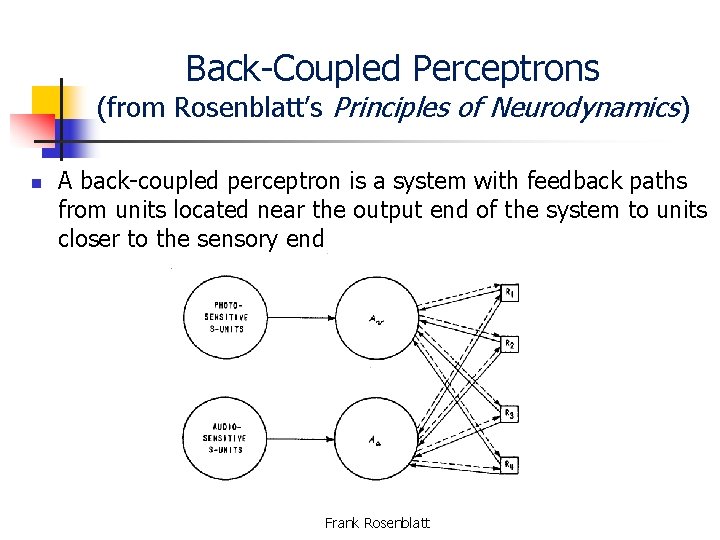

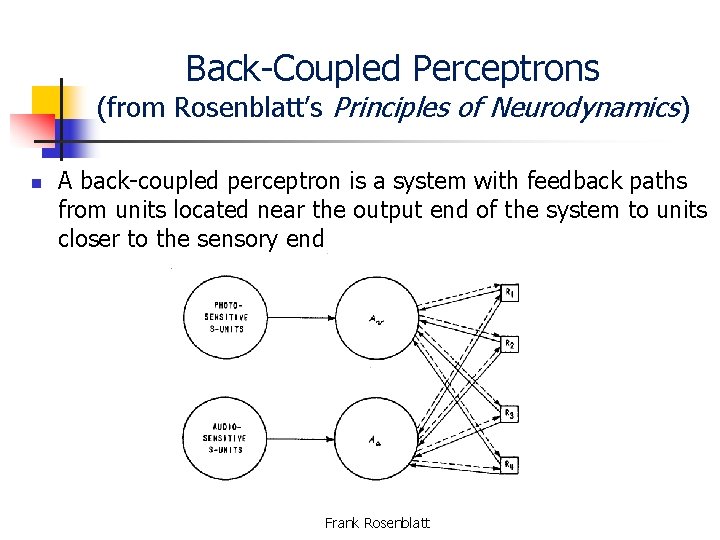

Back-Coupled Perceptrons (from Rosenblatt’s Principles of Neurodynamics)

- A back-coupled perceptron is a system with feedback paths from units located near the output end of the system to units closer to the sensory end

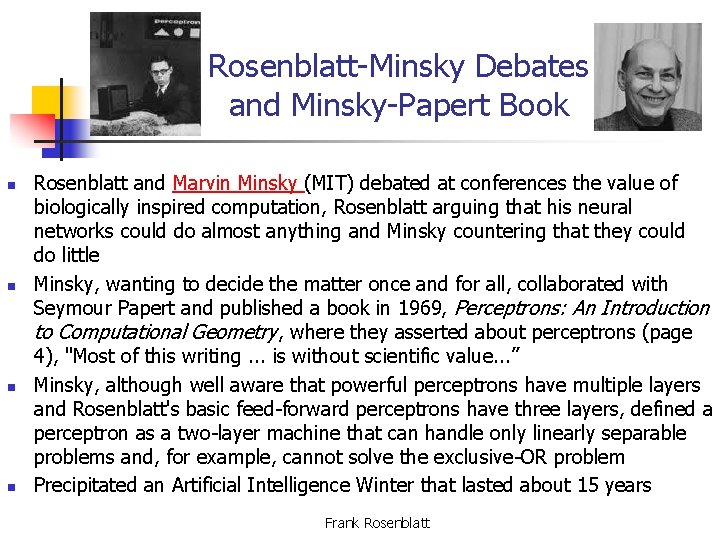

Rosenblatt-Minsky Debates and Minsky-Papert Book

- Rosenblatt and Marvin Minsky (MIT) debated the value of biologically inspired computation at conferences, Rosenblatt arguing that his neural networks could do almost anything and Minsky countering that they could do little
- Minsky, wanting to decide the matter once and for all, collaborated with Seymour Papert and published a book in 1969, Perceptrons: An Introduction to Computational Geometry, in which they asserted about perceptrons (page 4), “Most of this writing ... is without scientific value ...”
- Although well aware that powerful perceptrons have multiple layers and that Rosenblatt's basic feed-forward perceptrons have three layers, Minsky defined a perceptron as a two-layer machine that can handle only linearly separable problems and, for example, cannot solve the exclusive-OR problem
- This precipitated an Artificial Intelligence winter that lasted about 15 years
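
A toy Python illustration of the exclusive-OR point. It searches a small integer grid for a single threshold unit (the two-layer machine) that reproduces XOR, then repeats the search after adding one fixed A-unit that fires only when both inputs are on, as in an S→A→R perceptron with a fixed S→A stage. The grid search and the choice of that A-unit are assumptions for illustration; a failed grid search is not itself a proof, although XOR is in fact not linearly separable for any real weights.

```python
from itertools import product


def threshold_unit(weights, bias, x):
    """Fires (1) when bias + sum of weighted inputs is positive, else 0."""
    return 1 if bias + sum(w * xi for w, xi in zip(weights, x)) > 0 else 0


def separable_on_grid(inputs, targets, grid=range(-3, 4)):
    """Return (weights, bias) from the integer grid that reproduce targets, or None."""
    for params in product(grid, repeat=len(inputs[0]) + 1):
        *w, b = params
        if all(threshold_unit(w, b, x) == t for x, t in zip(inputs, targets)):
            return w, b
    return None


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
XOR = [0, 1, 1, 0]

# Two-layer machine: the output unit sees the raw inputs only
print(separable_on_grid(X, XOR))  # None

# Three-layer machine: add a fixed A-unit computing x1 AND x2
with_a = [(x1, x2, threshold_unit((1, 1), -1, (x1, x2))) for x1, x2 in X]
print(separable_on_grid(with_a, XOR) is not None)  # True
```

With the extra A-unit, weights (2, 2, -3) and bias -1, for example, produce exactly the XOR outputs, so a trainable A→R stage alone suffices once the fixed S→A stage supplies the right feature.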

Three-Wave Development of Deep Learning (Deep Learning by Goodfellow, Bengio, and Courville)

- 1940s-1960s: Early Neural Networks (Cybernetics)
  - Rosenblatt’s perceptron, developed from Hebb’s synaptic-strengthening ideas and the McCulloch-Pitts neuron
  - Key idea: variations of stochastic gradient descent
  - Wave killed by Minsky in 1969, leading to the “AI Winter”
- 1980s-1990s: Connectionism
  - Rumelhart, et al.
  - Key idea: backpropagation
- 2006-present: Deep Learning
  - Started with Hinton’s deep belief network
  - Key idea: hierarchy of many layers in the neural network

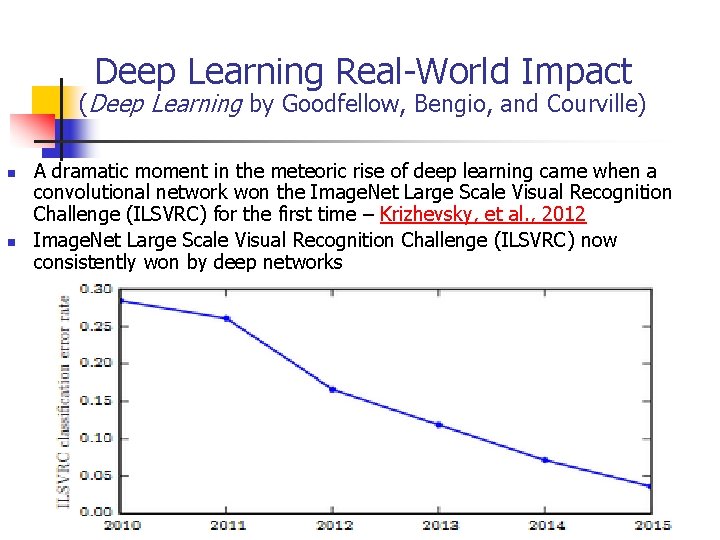

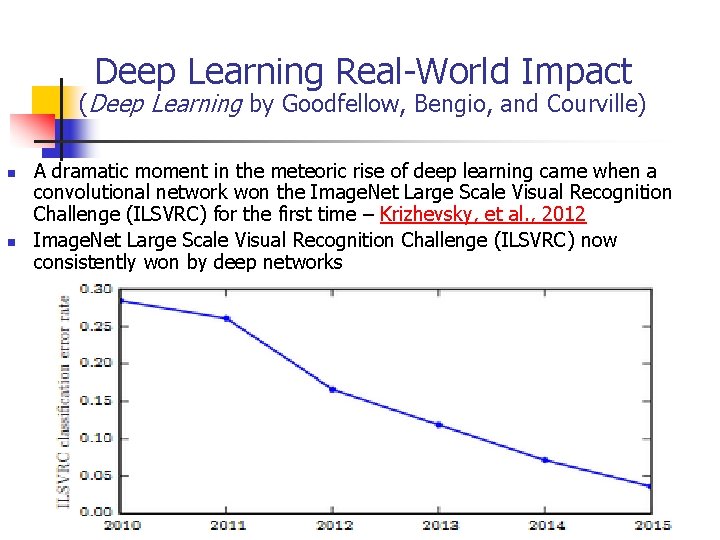

Deep Learning Real-World Impact (Deep Learning by Goodfellow, Bengio, and Courville)

- A dramatic moment in the meteoric rise of deep learning came when a convolutional network won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) for the first time (Krizhevsky et al., 2012)
- The ILSVRC is now consistently won by deep networks

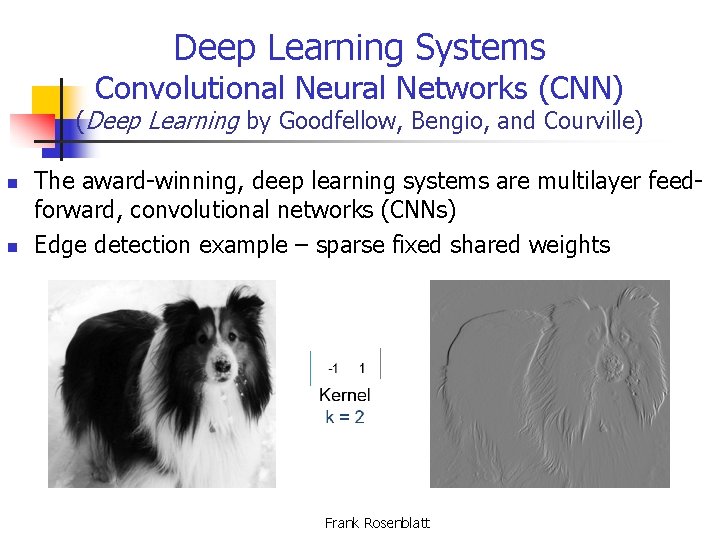

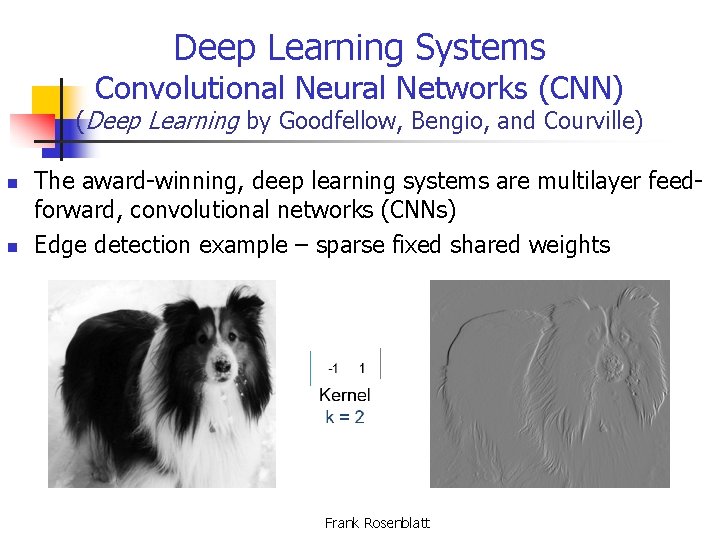

Deep Learning Systems: Convolutional Neural Networks (CNNs) (Deep Learning by Goodfellow, Bengio, and Courville)

- The award-winning deep learning systems are multilayer feed-forward convolutional networks (CNNs)
- Edge detection example: sparse, fixed, shared weights
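
The edge detection example can be sketched in pure Python. This assumes a hand-set two-element kernel `[-1, 1]` (each output is a pixel minus its left neighbor) and a toy 3 × 5 image; like most CNN libraries, the code computes cross-correlation and calls it convolution.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation, which most CNN libraries call convolution.
    The same kernel weights are reused at every position (weight sharing)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hand-set, shared kernel: output = pixel minus its left neighbor
EDGE_KERNEL = [[-1, 1]]

image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]
print(conv2d_valid(image, EDGE_KERNEL))  # [[0, 0, 9, 0], [0, 0, 9, 0], [0, 0, 9, 0]]
```

Only two shared weights are involved, and each output depends on just two neighboring pixels (sparse connectivity); a fully connected layer mapping the same 15 inputs to 12 outputs would need 15 × 12 = 180 weights.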

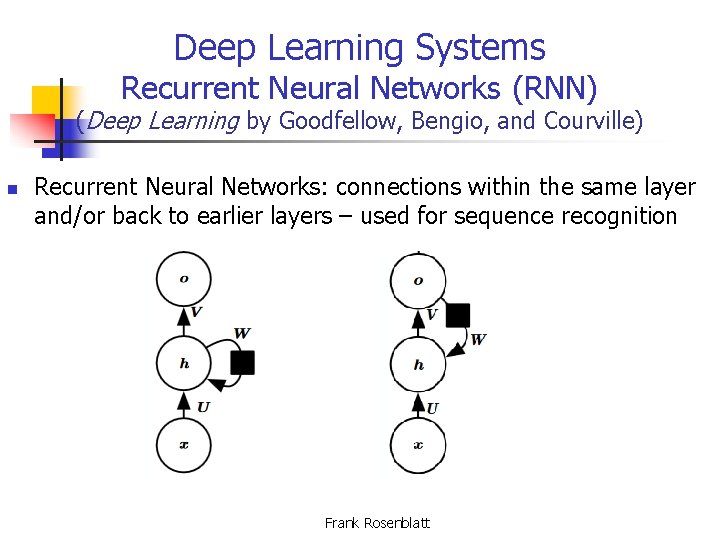

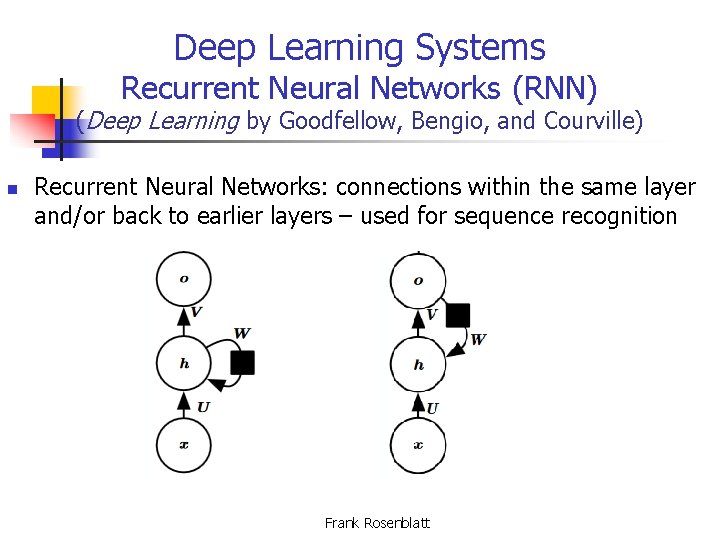

Deep Learning Systems: Recurrent Neural Networks (RNNs) (Deep Learning by Goodfellow, Bengio, and Courville)

- Recurrent neural networks have connections within the same layer and/or back to earlier layers and are used for sequence recognition
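
The recurrence that gives an RNN its feedback can be sketched as an Elman-style update, h_t = tanh(W_in x_t + W_rec h_{t-1} + b). This minimal pure-Python version is an illustration, not any particular library's RNN, and the weight values are arbitrary. `W_rec` links hidden units to each other (cross-coupling) and carries each step's state into the next (feedback).

```python
import math


def matvec(M, v):
    """Matrix-vector product for lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rnn_forward(xs, W_in, W_rec, b):
    """Run h_t = tanh(W_in x_t + W_rec h_{t-1} + b) over a sequence, starting from h_0 = 0."""
    h = [0.0] * len(b)
    states = []
    for x in xs:
        pre = [u + r + bi for u, r, bi in zip(matvec(W_in, x), matvec(W_rec, h), b)]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states


W_in = [[1.0], [0.5]]              # one input feeding two hidden units
W_rec = [[0.5, -0.3], [0.2, 0.4]]  # hidden-to-hidden feedback (arbitrary values)
b = [0.0, 0.0]
pulse = [[1.0], [0.0], [0.0]]      # a single input pulse, then silence

with_feedback = rnn_forward(pulse, W_in, W_rec, b)
no_feedback = rnn_forward(pulse, W_in, [[0.0, 0.0], [0.0, 0.0]], b)
print(no_feedback[1])    # [0.0, 0.0]: without feedback the pulse is forgotten
print(with_feedback[1])  # nonzero: the feedback path carries the pulse forward
```

Zeroing `W_rec` turns the network back into a purely feed-forward one, which is the sense in which feedback gives the network a memory of earlier inputs.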

Deep Learning CNNs vs Perceptrons

Award-winning CNNs:

- The award-winning deep learning systems are multilayer feed-forward convolutional neural networks (CNNs)
- These are just multilayer perceptrons with fixed, biologically inspired connection patterns in the early layers
  - And, interestingly, they are often described as a variation of multilayer perceptrons (MLPs)

Deep Learning RNNs vs Perceptrons

Recurrent Neural Networks:

- Recurrent neural networks have connections within the same layer and/or connections back to earlier layers
- These are just cross-coupled and back-coupled perceptrons

Deep Learning Systems vs Perceptrons: Conclusions

- Although conceptually the same as perceptrons, deep learning networks have the advantages of:
  - Increased computing power, including GPUs (50 years after the 1960s)
  - Massive training data (big data), not available in the 1960s
  - The backpropagation algorithm, not yet used to train neural networks in the 1960s
    - Allows training of the complete system, pulling everything together
- Nevertheless, we make a strong case for recognizing Frank Rosenblatt as the “Father of Deep Learning”

References

- Roger Parloff, “Why Deep Learning Is Suddenly Changing Your Life,” Fortune, 2016
- Frank Rosenblatt, Principles of Neurodynamics, Spartan Books, 1962
- Marvin Minsky and Seymour Papert, Perceptrons: An Introduction to Computational Geometry, MIT Press, 1969
- Ian Goodfellow, Yoshua Bengio, and Aaron Courville, Deep Learning, MIT Press, 2016
- Various Wikipedia articles