Where did it all start? ENIAC

![Where did it all start? ENIAC (picture from Wikipedia)](https://slidetodoc.com/presentation_image_h/0b3b97630953161e27da6a299587b80d/image-2.jpg)

- Slides: 33

Where did it all start? ENIAC [picture from Wikipedia]

- At Penn
- Lt Gillon, Eckert and Mauchly
- Cost $486,804.22 in 1946 (~$6.3 M today)
- 5000 ops/second
- 19 K vacuum tubes
- Power = 200 kW
- 67 m³, 27 tons(!)

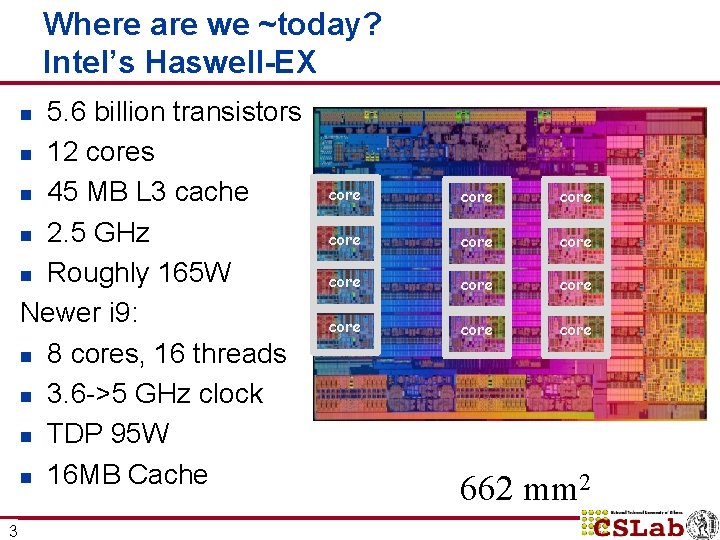

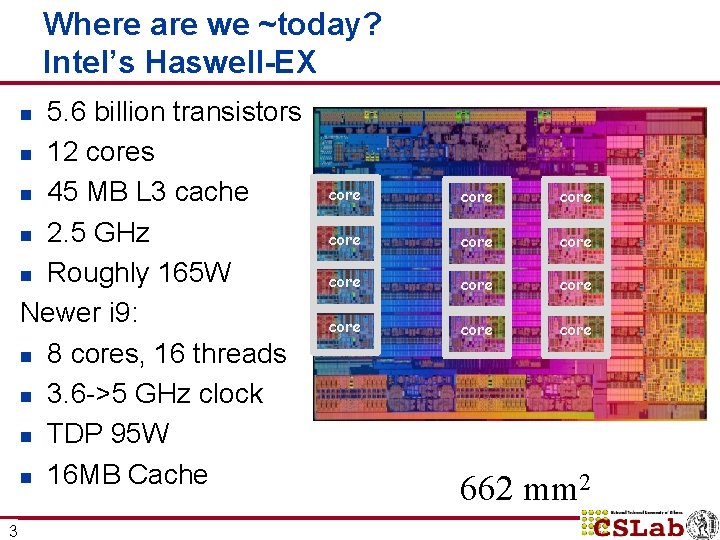

Where are we ~today?

Intel's Haswell-EX: 5.6 billion transistors, 662 mm²

- 12 cores
- 45 MB L3 cache
- 2.5 GHz
- Roughly 165 W

Newer i9:

- 8 cores, 16 threads
- 3.6 -> 5 GHz clock
- TDP 95 W
- 16 MB cache

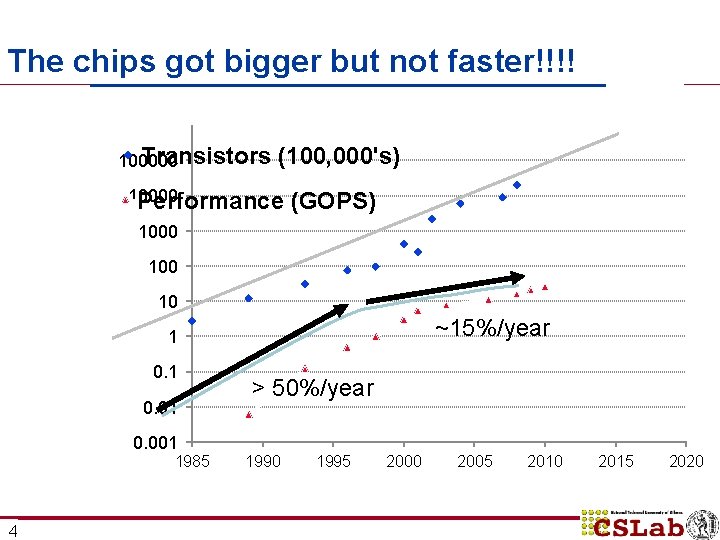

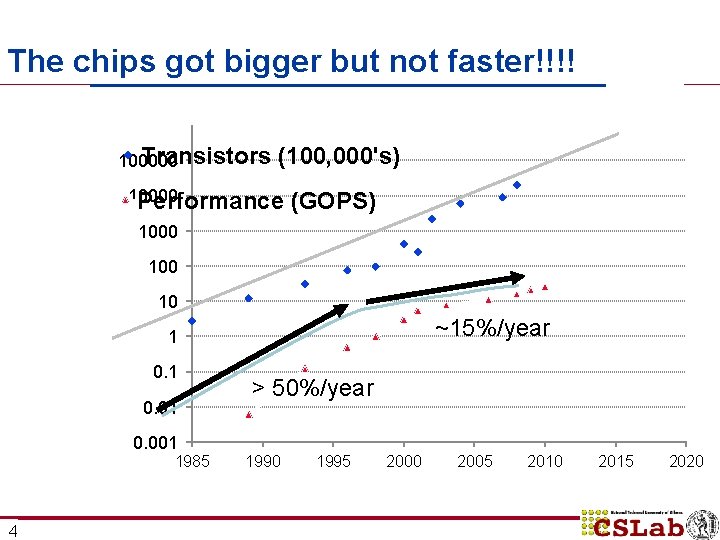

The chips got bigger but not faster!!!!

[Figure: transistors (100,000's) and performance (GOPS) vs. year, 1985 to 2020, log scale; growth rates annotated as > 50%/year and ~15%/year. Label: E2UDC ERC Vision]

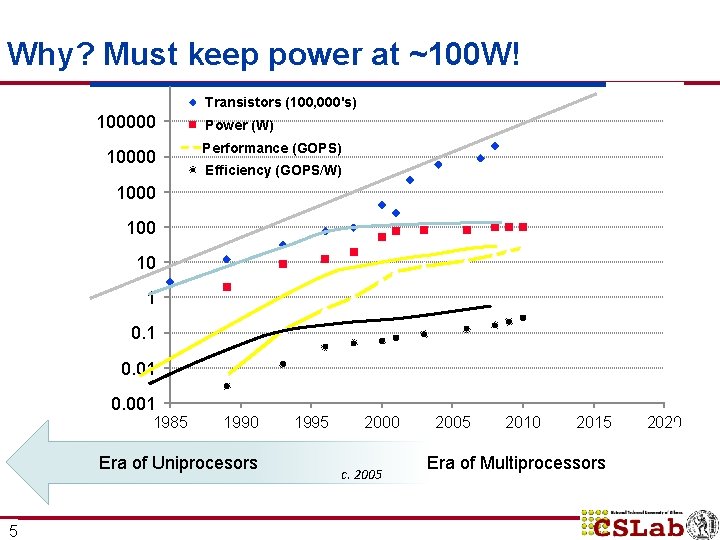

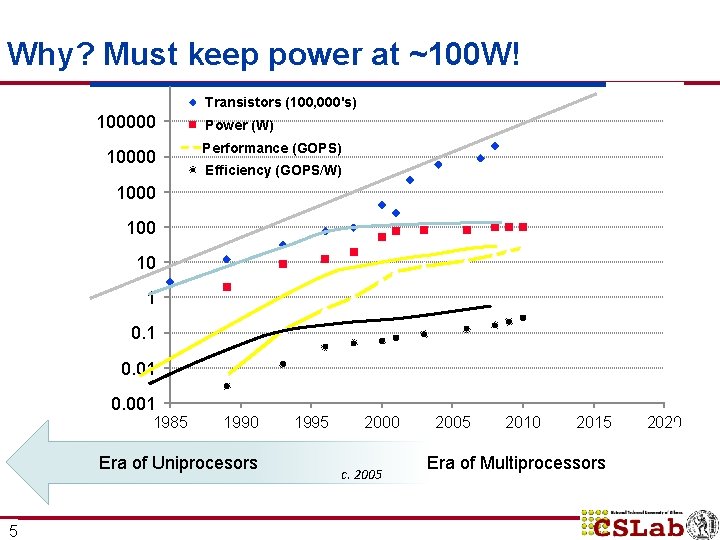

Why? Must keep power at ~100 W!

[Figure: transistors (100,000's), power (W), performance (GOPS) and efficiency (GOPS/W) vs. year, 1985 to 2020, log scale; era of uniprocessors up to c. 2005, era of multiprocessors after. Label: E2UDC ERC Vision]

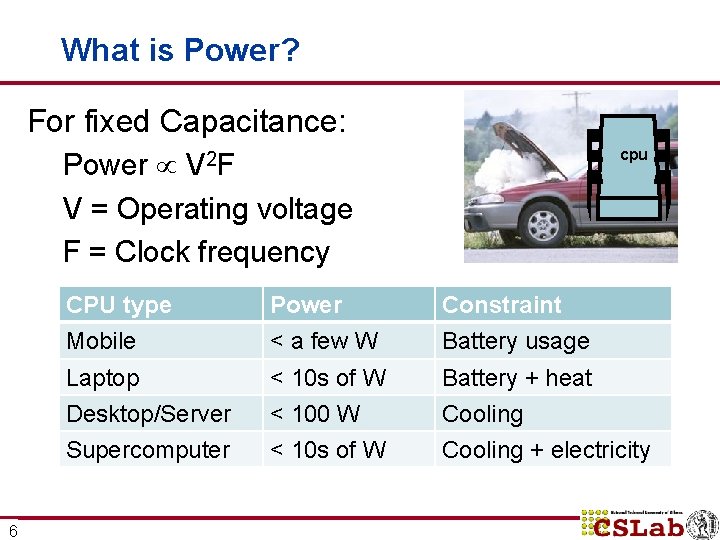

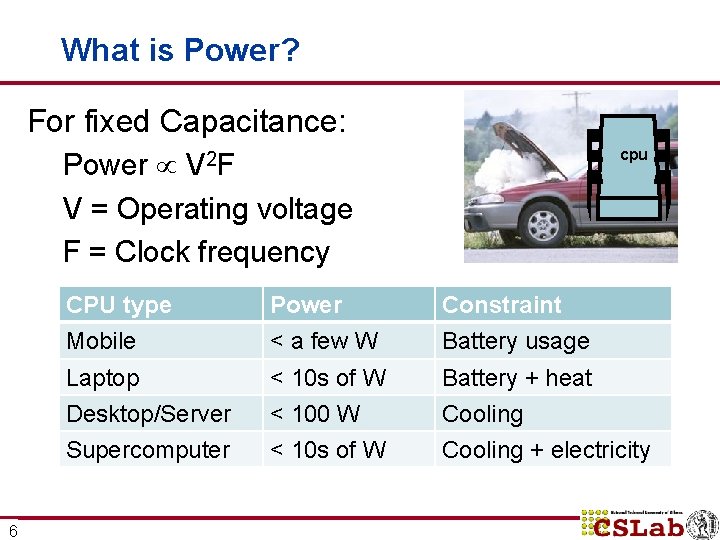

What is Power?

For fixed capacitance: $\text{Power} \propto V^2 F$

- V = operating voltage
- F = clock frequency

| CPU type | Power | Constraint |
| --- | --- | --- |
| Mobile | < a few W | Battery usage |
| Laptop | < 10s of W | Battery + heat |
| Desktop/Server | < 100 W | Cooling |
| Supercomputer | < 10s of MW | Cooling + electricity |

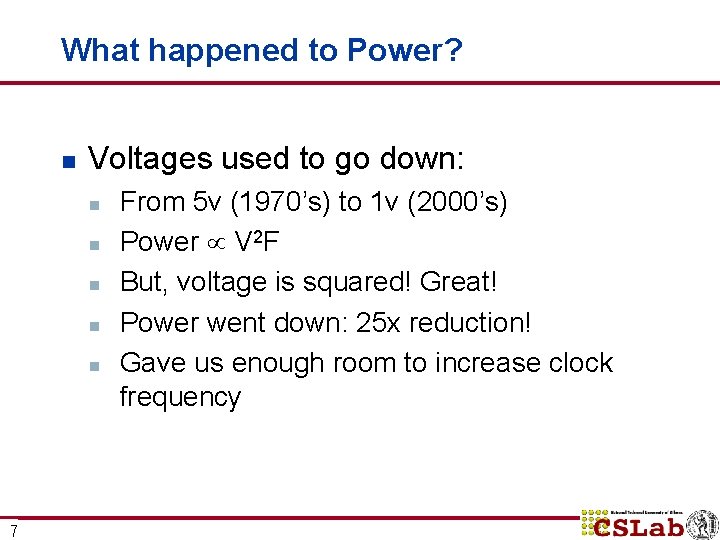

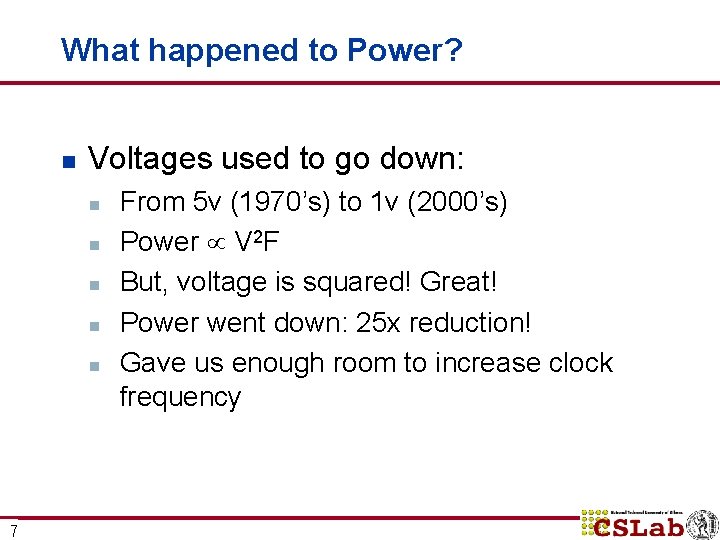

What happened to Power?

- Voltages used to go down:
  - From 5 V (1970's) to 1 V (2000's)
  - $\text{Power} \propto V^2 F$. But, voltage is squared!
  - Great! Power went down: 25x reduction!
- Gave us enough room to increase clock frequency
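The 25x figure follows directly from the V²F relation. The sketch below reproduces the arithmetic, assuming capacitance is fixed as the slides state; the function names are illustrative and not from the slides.

```python
def relative_dynamic_power(voltage, frequency, ref_voltage, ref_frequency):
    """Power relative to a reference point, assuming Power is proportional to V^2 * F."""
    return (voltage / ref_voltage) ** 2 * (frequency / ref_frequency)


def frequency_headroom(voltage, ref_voltage):
    """Factor by which F can rise at the new voltage before power is back at the reference level."""
    return (ref_voltage / voltage) ** 2


# Dropping from 5 V to 1 V at an unchanged clock frequency.
ratio = relative_dynamic_power(voltage=1.0, frequency=1.0, ref_voltage=5.0, ref_frequency=1.0)
reduction = 1.0 / ratio                      # about 25x less power
headroom = frequency_headroom(1.0, 5.0)      # clock could rise about 25x at equal power
```

The same 25x appears twice: once as a power saving at a fixed clock, and once as the room available to raise the clock at a fixed power budget.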

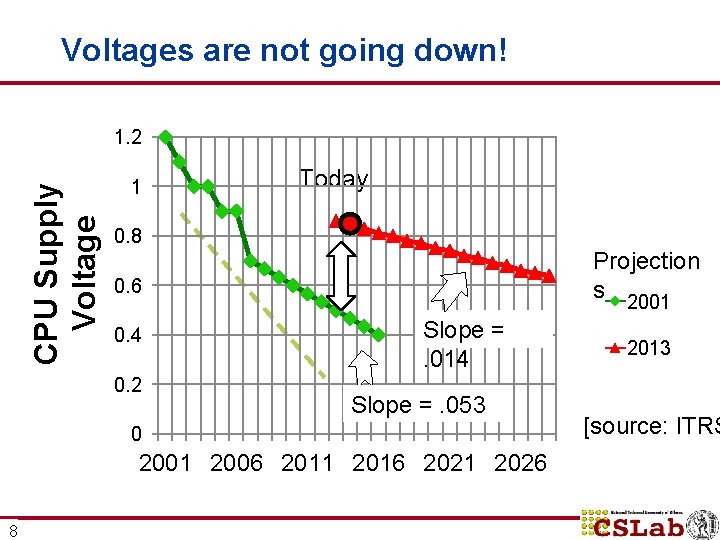

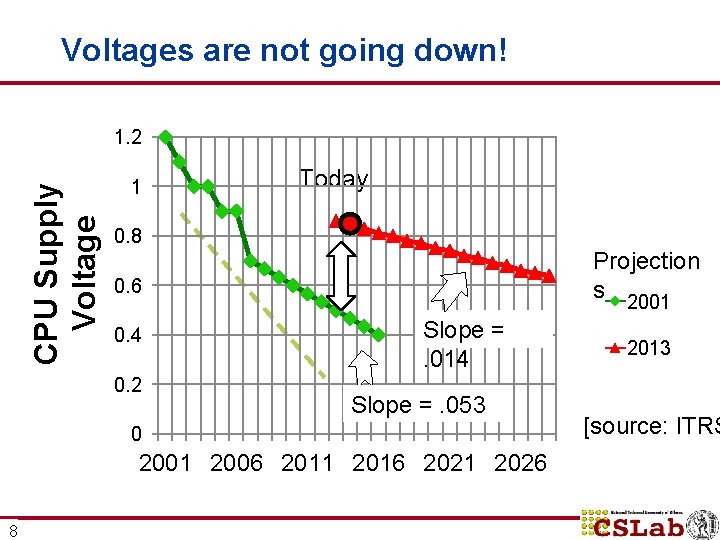

Voltages are not going down!

[Figure: CPU supply voltage (0 to 1.2 V) vs. year, 2001 to 2026, with "Today" marked at 2013 and projections annotated with slopes of .014 and .053. Source: ITRS]

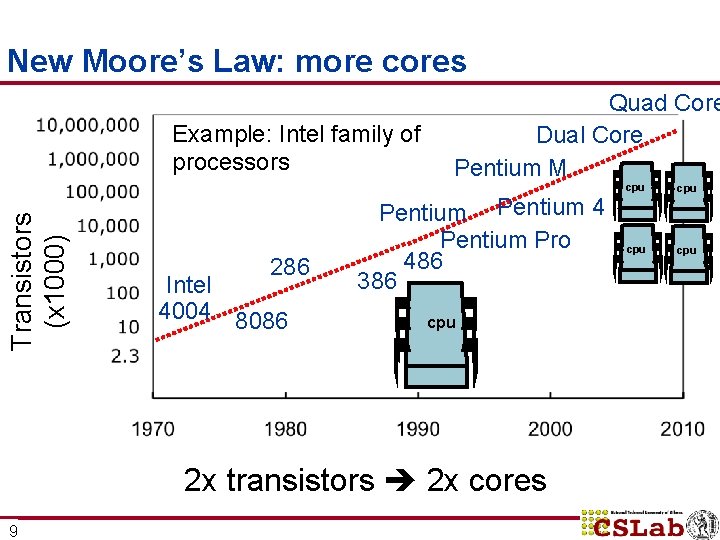

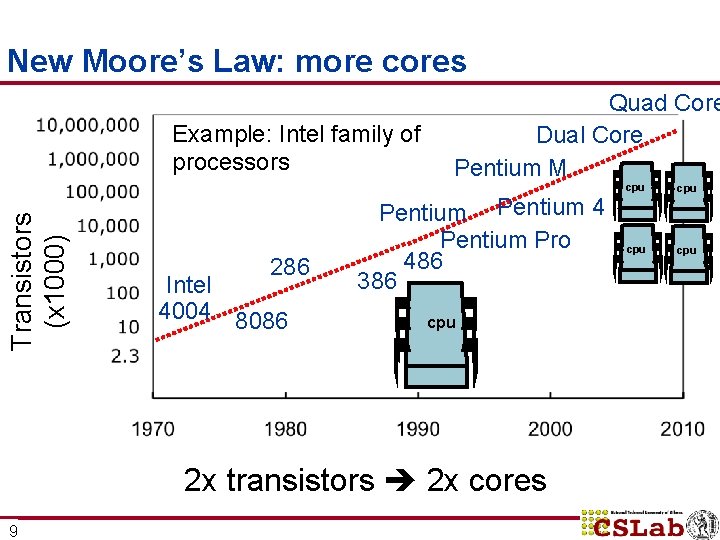

New Moore's Law: more cores

2x transistors, 2x cores

[Figure: transistors (x 1000) for the Intel family of processors: Intel 4004, 8086, 286, 386, 486, Pentium Pro, Pentium 4, Pentium M, Dual Core, Quad Core]

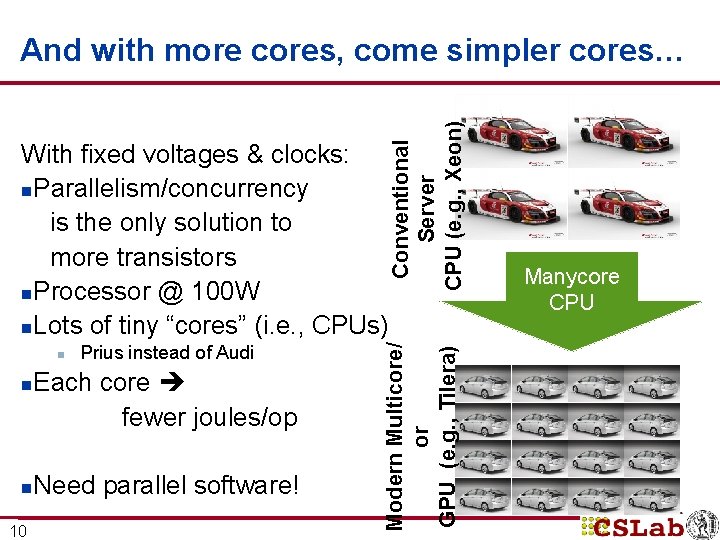

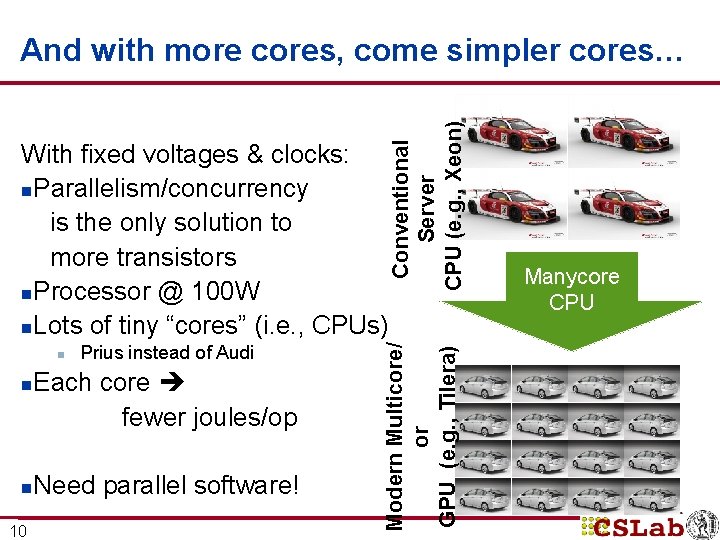

And with more cores, come simpler cores…

- Prius instead of Audi
- Each core fewer joules/op
- Need parallel software!

With fixed voltages & clocks:

- Parallelism/concurrency is the only solution to more transistors
- Processor @ 100 W
- Lots of tiny "cores" (i.e., CPUs)

[Figure: conventional server CPU (e.g., Xeon) next to a modern multicore or GPU / manycore CPU (e.g., Tilera)]

Terms of interest

- Multicores & Multiprocessors
- Cache Coherence
- Memory Ordering
- Synchronization
- Interconnects

Hardware Jargon

- Processor & core used interchangeably
- Cores run threads
- Each chip has multiple cores
- Cores may be heterogeneous
  - CPU cores vs. GPU cores vs. accelerators
- Each board can have multiple chips/sockets
- Each platform can have multiple boards
  - Mobile platforms usually a single chip/single board
  - Datacenters 10's to 100's of thousands of boards

Issues: Where are we going?

- How do we connect the cores together?
- How do we make sure they see one copy of memory (or something we can understand & program)?
- How do we implement synchronization?
- How do we build cores that run multiple operations with a single instruction?
- How do we build cores that run multiple threads?
- What is inside a GPU?

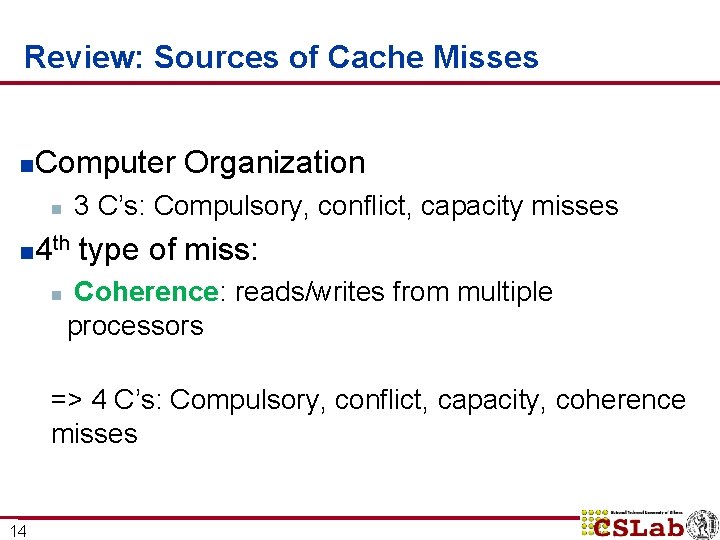

Review: Sources of Cache Misses

- Computer Organization 3 C's: compulsory, conflict, capacity misses
- 4th type of miss:
  - Coherence: reads/writes from multiple processors
- => 4 C's: compulsory, conflict, capacity, coherence misses

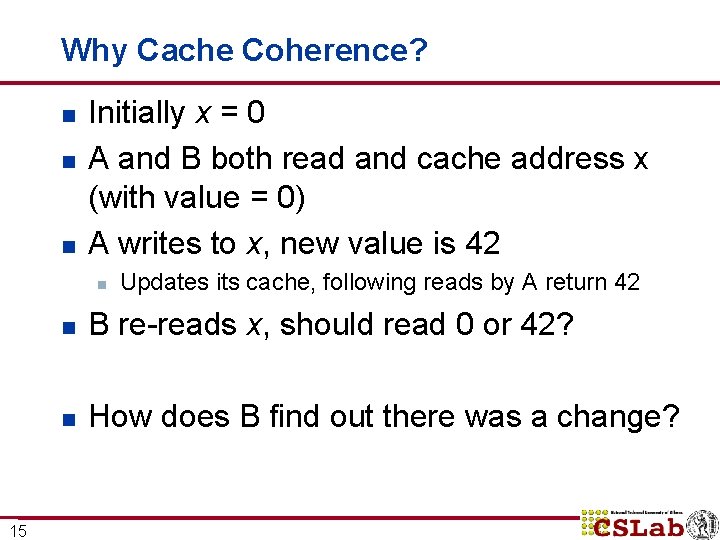

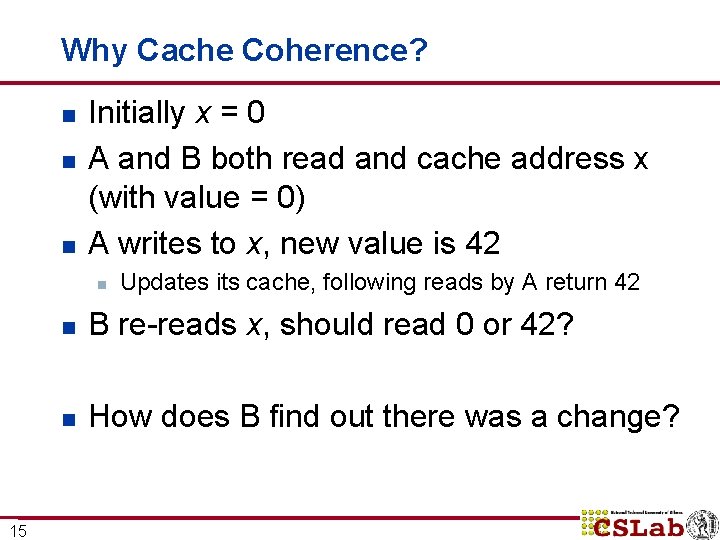

Why Cache Coherence?

- Initially x = 0
- A and B both read and cache address x (with value = 0)
- A writes to x, new value is 42
  - Updates its cache, following reads by A return 42
- B re-reads x, should read 0 or 42?
- How does B find out there was a change?

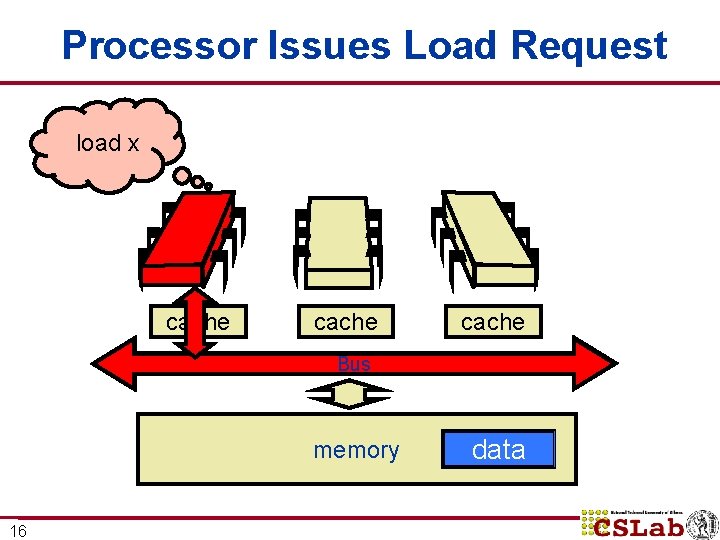

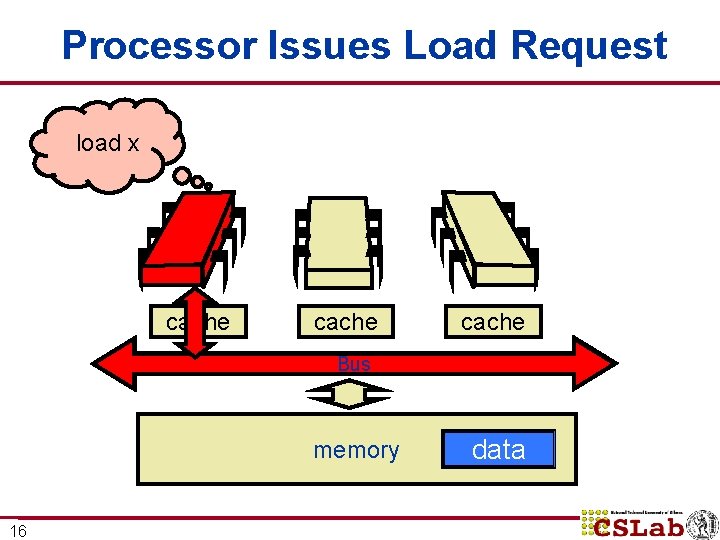

Processor Issues Load Request

[Figure: a processor issues "load x"; labels: cache, bus, memory (holding the data)]

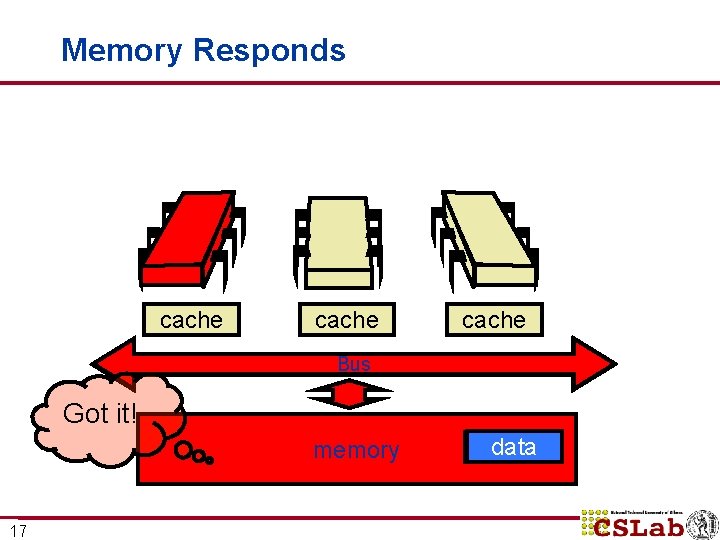

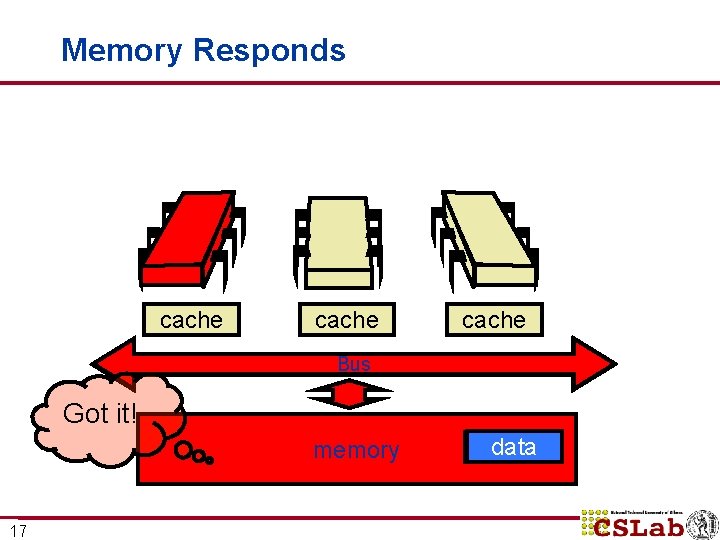

Memory Responds

[Figure: memory answers "Got it!" and supplies the data; labels: cache, bus, memory]

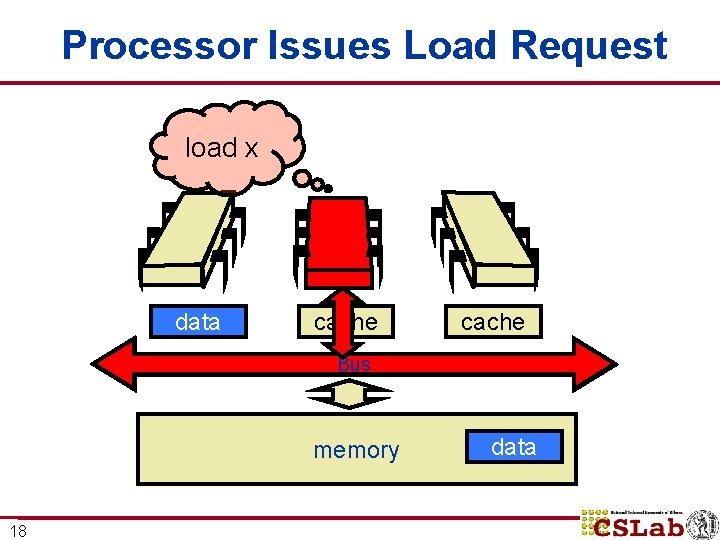

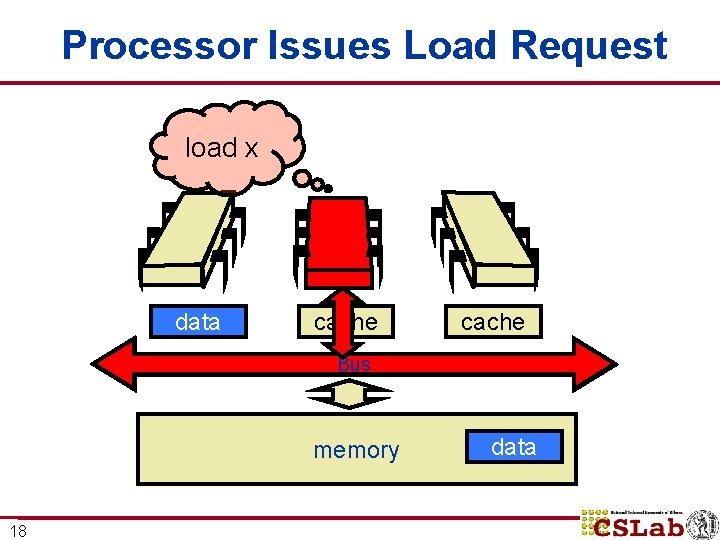

Processor Issues Load Request

[Figure: a processor issues "load x" while another cache already holds the data; labels: cache, bus, memory (holding the data)]

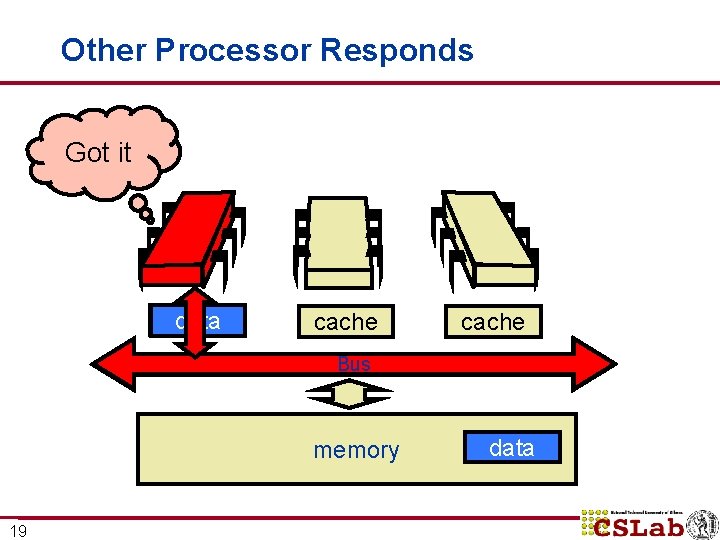

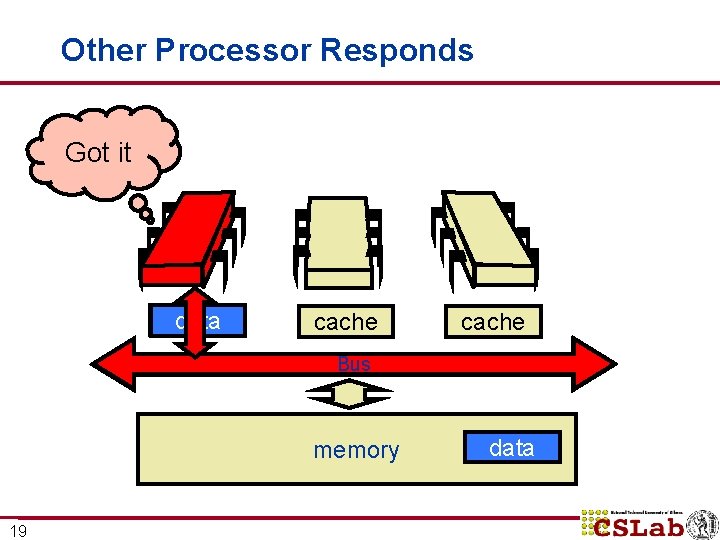

Other Processor Responds

[Figure: the cache that holds the data answers "Got it"; labels: cache, bus, memory (holding the data)]

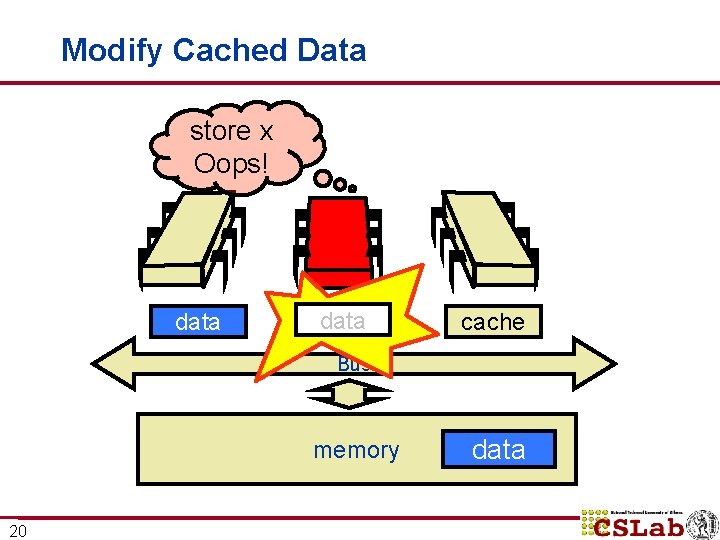

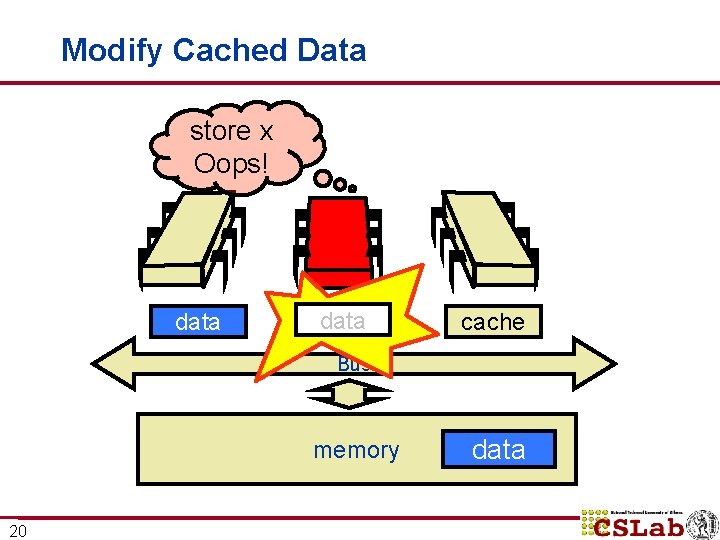

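Modify Cached Data

[Figure: a processor issues "store x" on its cached data. Oops! Other copies of the data remain in a cache and in memory; labels: cache, bus, memory]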

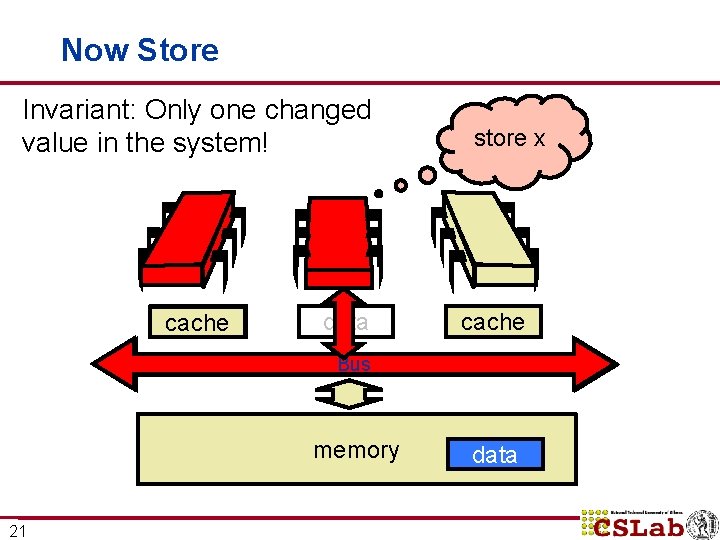

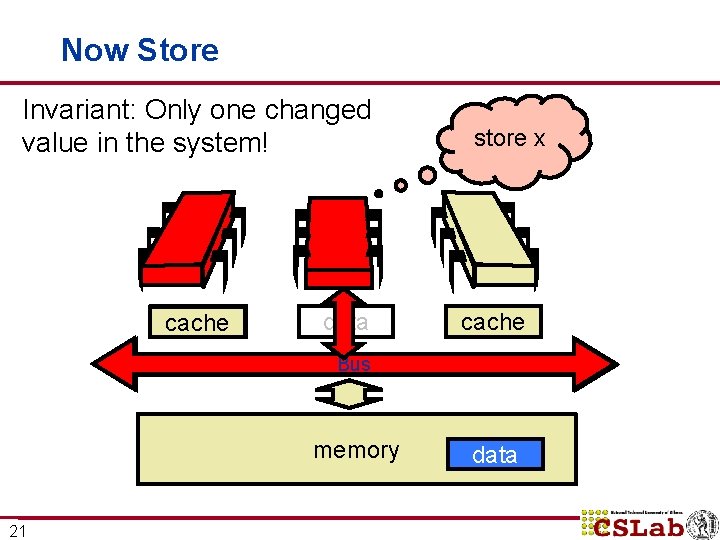

Now Store

Invariant: Only one changed value in the system!

[Figure: a processor issues "store x"; labels: two caches holding data, bus, memory (holding the data)]
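One common way to maintain the "only one changed value" invariant is write-invalidate snooping: before a cache modifies a line, every other copy is invalidated over the bus. The slides do not name a specific protocol, so the sketch below is an assumption: a minimal three-state (M/S/I) model with hypothetical `Bus` and `Cache` classes, replaying the A/B scenario with x = 0 and then x = 42.

```python
class Bus:
    """Shared broadcast medium: every attached cache sees every request."""

    def __init__(self, memory):
        self.memory = memory
        self.caches = []

    def attach(self, cache):
        self.caches.append(cache)

    def read(self, requester, addr):
        # A cache holding a modified copy writes it back and drops to shared.
        for c in self.caches:
            if c is not requester and c.state.get(addr) == "M":
                self.memory[addr] = c.data[addr]
                c.state[addr] = "S"
        return self.memory[addr]

    def invalidate(self, requester, addr):
        # Every other copy becomes invalid; a modified copy is written back first.
        for c in self.caches:
            if c is not requester and addr in c.state:
                if c.state[addr] == "M":
                    self.memory[addr] = c.data[addr]
                c.state[addr] = "I"


class Cache:
    """Per-processor cache; state is 'M' (modified), 'S' (shared) or 'I' (invalid)."""

    def __init__(self, bus):
        self.bus = bus
        self.state = {}
        self.data = {}
        bus.attach(self)

    def load(self, addr):
        if self.state.get(addr, "I") == "I":      # miss: fetch over the bus
            self.data[addr] = self.bus.read(self, addr)
            self.state[addr] = "S"
        return self.data[addr]

    def store(self, addr, value):
        if self.state.get(addr, "I") != "M":      # gain exclusive ownership first
            self.bus.invalidate(self, addr)
        self.data[addr] = value
        self.state[addr] = "M"


def coherence_demo():
    memory = {"x": 0}
    bus = Bus(memory)
    a, b = Cache(bus), Cache(bus)
    first_reads = (a.load("x"), b.load("x"))      # both cache x = 0
    a.store("x", 42)                              # invalidates B's copy
    b_state_after_store = b.state["x"]
    return first_reads, a.load("x"), b_state_after_store, b.load("x")
```

In this model B learns about the change through the invalidation, so its re-read misses and fetches 42 instead of returning the stale 0.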

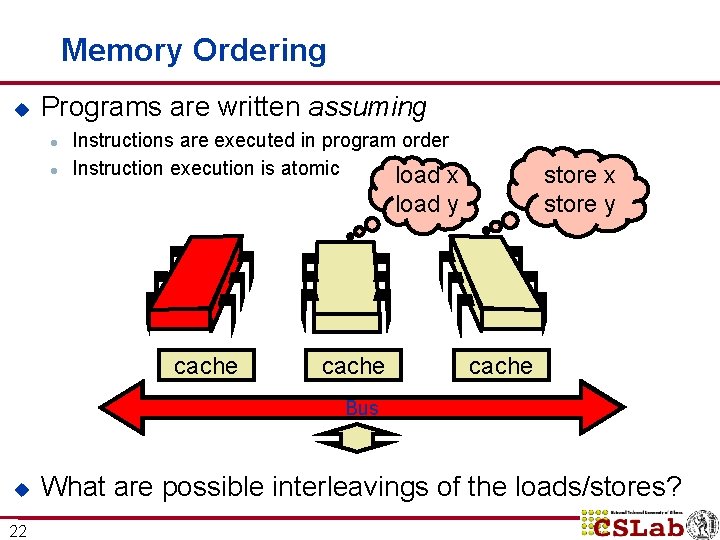

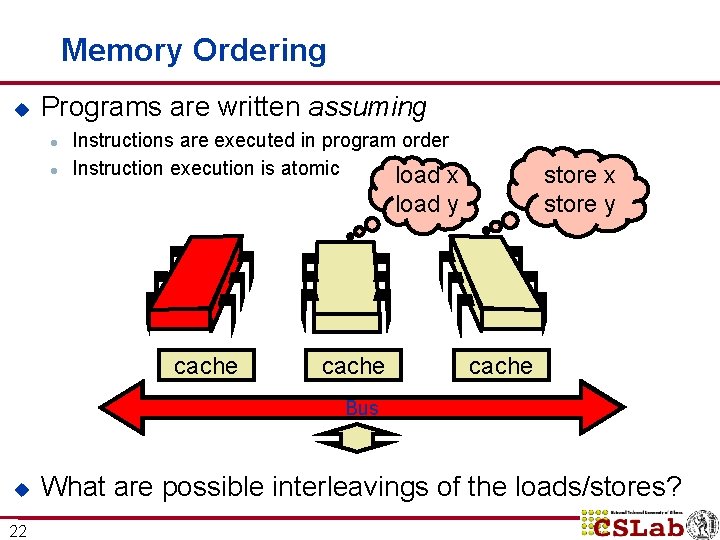

Memory Ordering

- Programs are written assuming
  - Instructions are executed in program order
  - Instruction execution is atomic
- What are possible interleavings of the loads/stores?

[Figure: processors issuing loads and stores (load x, store y, load y) through caches onto a bus]

Example: Memory Ordering

In the previous example:

- Assume x and y have the value 0 to begin with
- Both stores write the value 1 into x and y
- What are all possible state values read in various interleavings?
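The question can be answered by brute force when instructions are atomic and run in program order. The exact program on the slide is not recoverable from this transcript, so the sketch below assumes the classic two-thread test: thread 0 stores 1 to x and then loads y, thread 1 stores 1 to y and then loads x. All names are illustrative.

```python
from itertools import combinations

# Assumed program (not confirmed by the slides):
#   thread 0: store x = 1; r0 = load y
#   thread 1: store y = 1; r1 = load x
THREADS = [
    [("store", "x", 1), ("load", "y", "r0")],
    [("store", "y", 1), ("load", "x", "r1")],
]


def interleavings(a, b):
    """Yield every merge of a and b that keeps each thread's own order."""
    n = len(a) + len(b)
    for slots in combinations(range(n), len(a)):
        merged, ia, ib = [], 0, 0
        for i in range(n):
            if i in slots:
                merged.append(a[ia])
                ia += 1
            else:
                merged.append(b[ib])
                ib += 1
        yield merged


def run(schedule):
    """Execute one interleaving atomically, one instruction at a time."""
    memory = {"x": 0, "y": 0}
    regs = {}
    for op, addr, arg in schedule:
        if op == "store":
            memory[addr] = arg
        else:
            regs[arg] = memory[addr]
    return regs["r0"], regs["r1"]


def outcomes_in_program_order():
    return {run(s) for s in interleavings(*THREADS)}
```

Under these assumptions there are six interleavings and three distinct results for (r0, r1); the pair (0, 0) never appears, because at least one store always precedes both loads.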

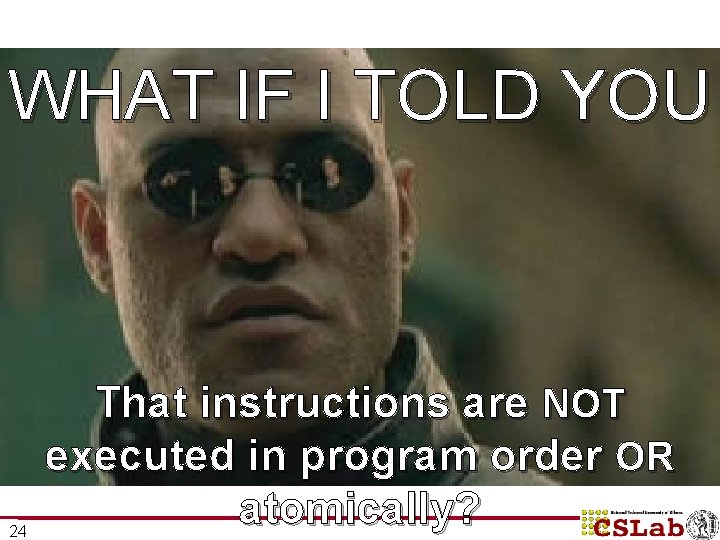

WHAT IF I TOLD YOU that instructions are NOT executed in program order OR atomically?

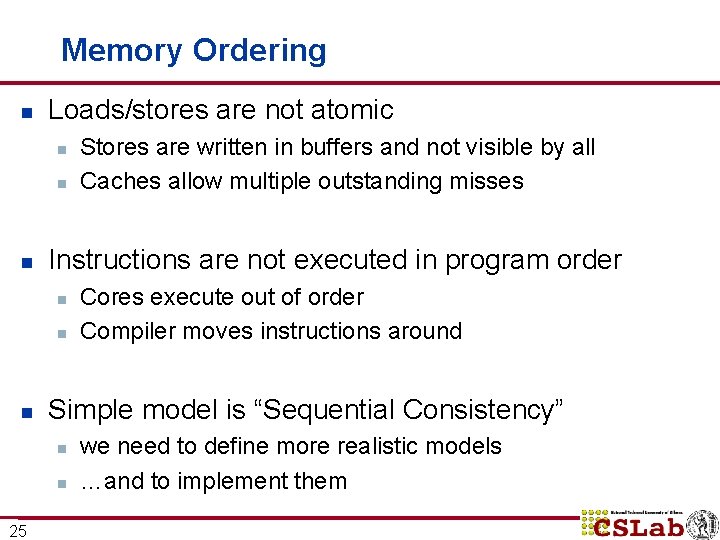

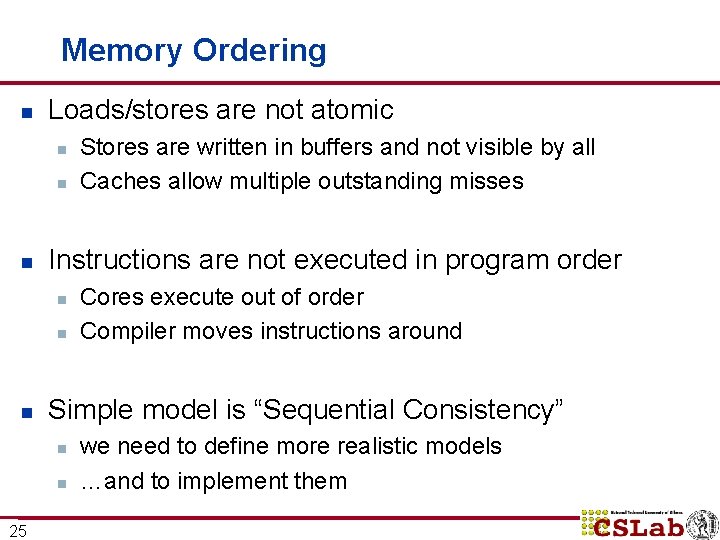

Memory Ordering

- Loads/stores are not atomic
  - Stores are written in buffers and not visible by all
  - Caches allow multiple outstanding misses
- Instructions are not executed in program order
  - Cores execute out of order
  - Compiler moves instructions around
- Simple model is "Sequential Consistency"
  - We need to define more realistic models
  - …and to implement them
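A store buffer alone is enough to produce a result that no in-order, atomic interleaving allows. The sketch below is a toy model, not a description of any real core: each hypothetical `Core` queues its stores privately and only later drains them to shared memory. The two-thread program (store x then load y, store y then load x) is an assumption.

```python
class Core:
    """Toy core whose stores sit in a private buffer until drained."""

    def __init__(self, memory):
        self.memory = memory
        self.store_buffer = []            # pending (addr, value) pairs, oldest first

    def store(self, addr, value):
        self.store_buffer.append((addr, value))   # not yet visible to other cores

    def load(self, addr):
        # A core sees its own pending stores, newest first; otherwise it reads memory.
        for a, v in reversed(self.store_buffer):
            if a == addr:
                return v
        return self.memory[addr]

    def drain(self):
        for a, v in self.store_buffer:
            self.memory[a] = v
        self.store_buffer.clear()


def store_buffer_demo():
    memory = {"x": 0, "y": 0}
    c0, c1 = Core(memory), Core(memory)
    c0.store("x", 1)          # buffered, invisible to c1
    c1.store("y", 1)          # buffered, invisible to c0
    r0 = c0.load("y")         # reads the old value from memory
    r1 = c1.load("x")         # reads the old value from memory
    c0.drain()
    c1.drain()
    return (r0, r1), dict(memory)
```

Both loads return 0 even though both stores eventually reach memory, which is the kind of behavior a more realistic memory model has to describe.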

Synchronization

- A spectrum of synchronization primitives is defined
  - Lock, compare and swap, etc
  - Barrier
  - Messages
- Higher level constructs: Transactional Memory (in Haswell, etc)
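Two of the primitives listed, the lock and the barrier, can be illustrated with Python's standard `threading` module. This is a software-level sketch, not the hardware mechanism the slides go on to discuss; the function name and thread counts are illustrative.

```python
import threading


def parallel_count(n_threads=4, increments=1000):
    """Each thread bumps a shared counter under a lock, then waits at a barrier."""
    counter = 0
    lock = threading.Lock()
    barrier = threading.Barrier(n_threads)
    seen_after_barrier = []

    def worker():
        nonlocal counter
        for _ in range(increments):
            with lock:                    # makes the read-modify-write mutually exclusive
                counter += 1
        barrier.wait()                    # returns only once every thread has arrived
        with lock:
            seen_after_barrier.append(counter)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter, seen_after_barrier
```

The lock keeps increments from being lost; the barrier guarantees that every thread observes the final total, since no thread passes it before all increments are done.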

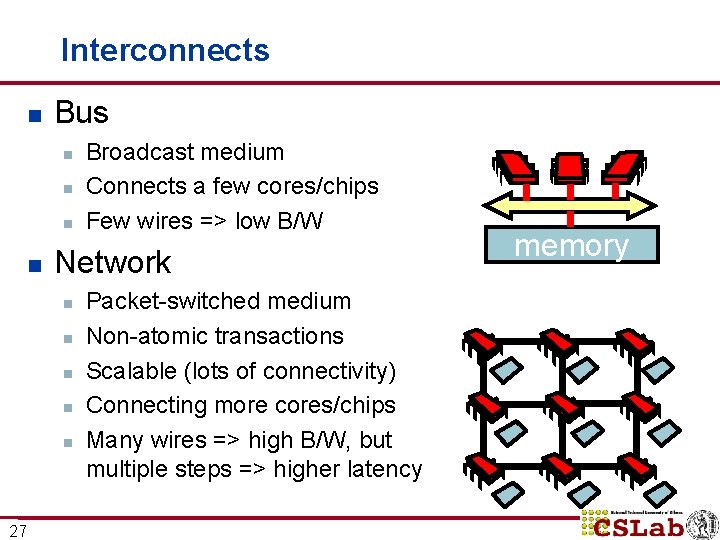

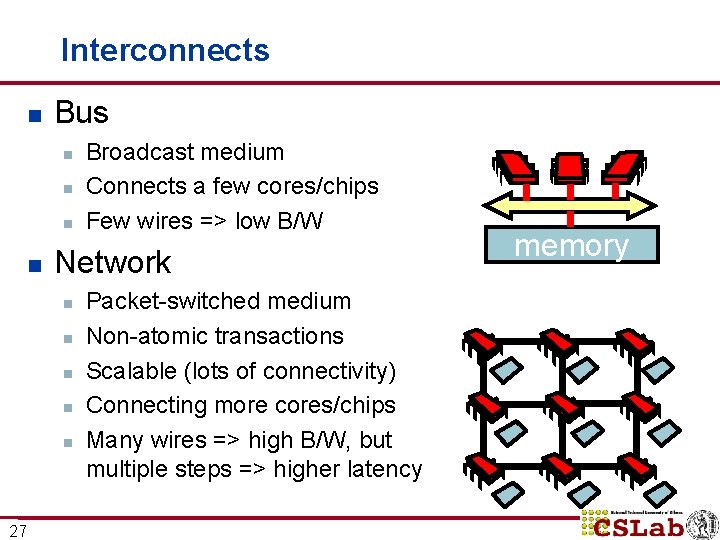

Interconnects

- Bus
  - Broadcast medium
  - Connects a few cores/chips
  - Few wires => low B/W
- Network
  - Packet-switched medium
  - Non-atomic transactions
  - Scalable (lots of connectivity)
  - Connecting more cores/chips
  - Many wires => high B/W, but multiple steps => higher latency

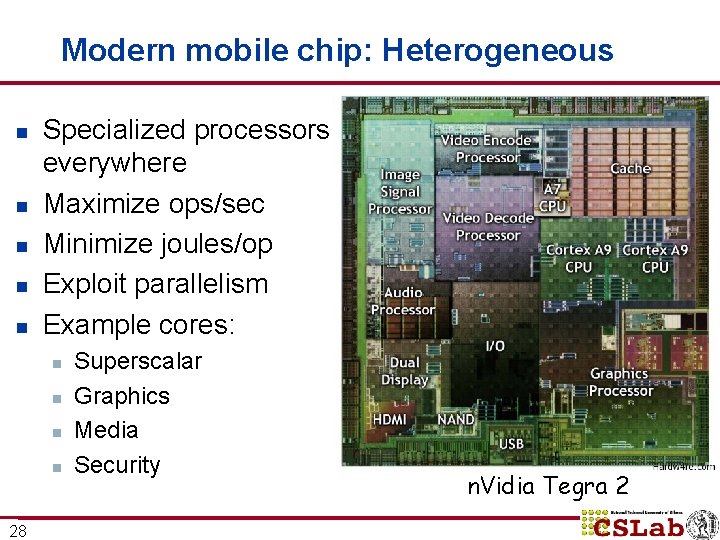

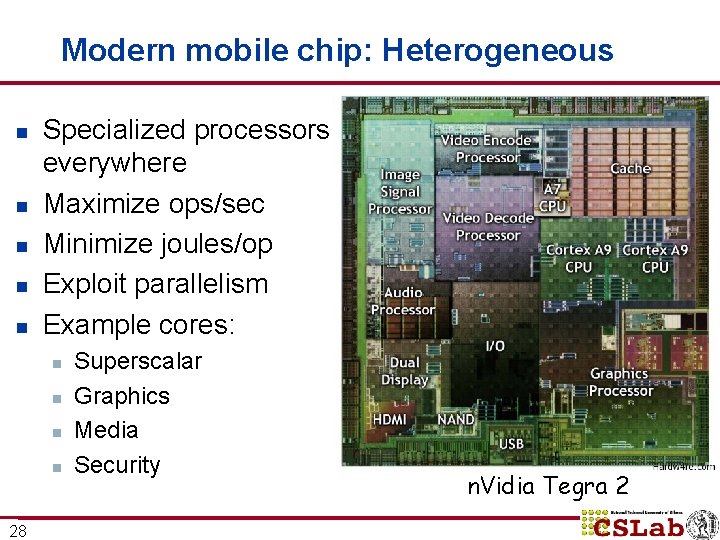

Modern mobile chip: Heterogeneous

- Specialized processors everywhere
- Maximize ops/sec
- Minimize joules/op
- Exploit parallelism
- Example cores:
  - Superscalar
  - Graphics
  - Media
  - Security

[Figure: nVidia Tegra 2]

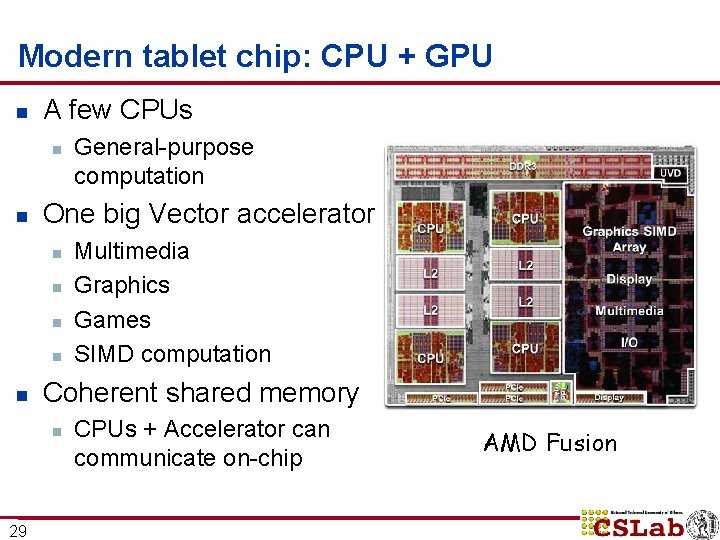

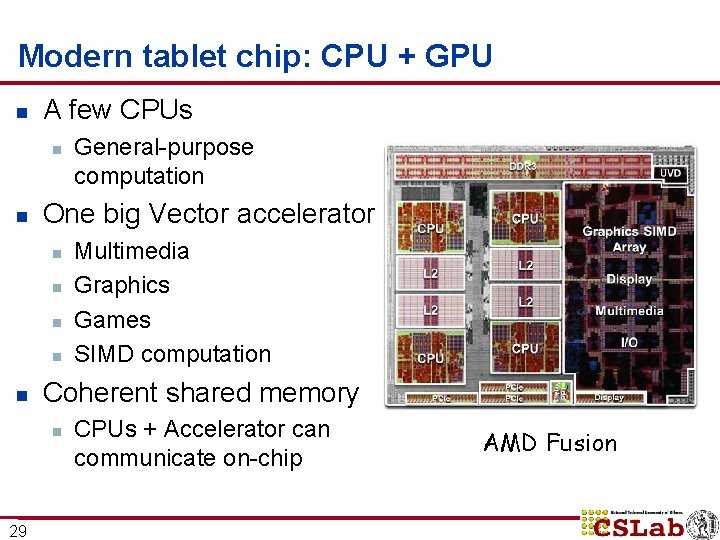

Modern tablet chip: CPU + GPU

- A few CPUs
  - General-purpose computation
- One big vector accelerator
  - Multimedia
  - Graphics
  - Games
  - SIMD computation
- Coherent shared memory
  - CPUs + accelerator can communicate on-chip

[Figure: AMD Fusion]

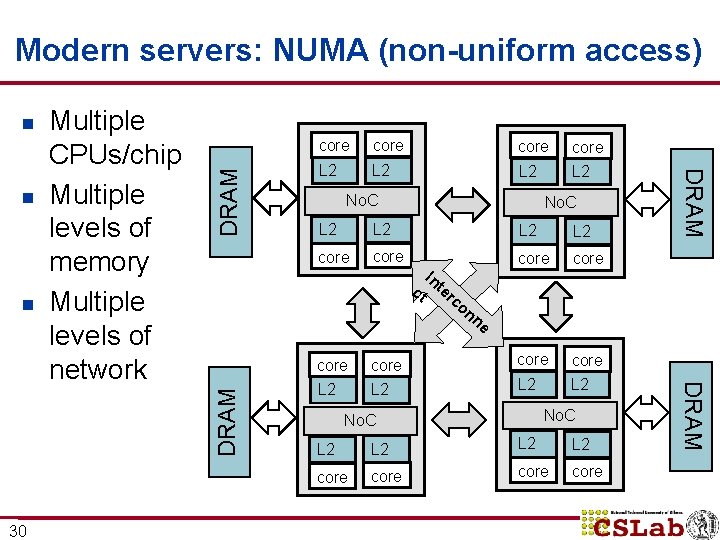

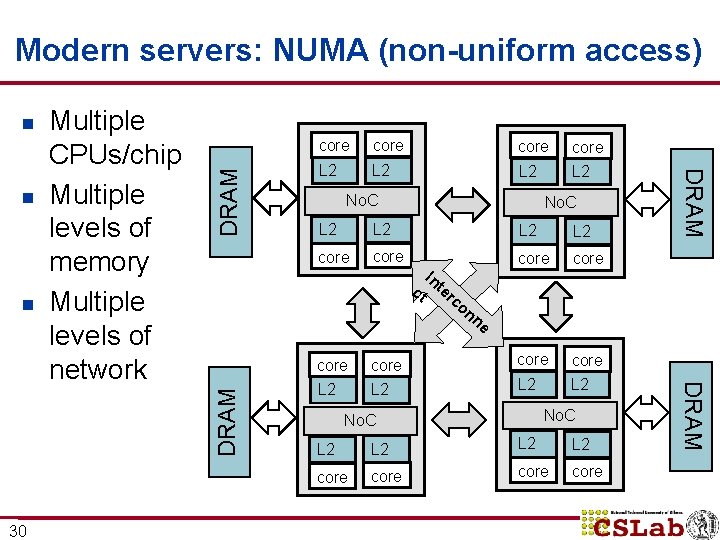

Modern servers: NUMA (non-uniform access)

- Multiple CPUs/chip
- Multiple levels of memory
- Multiple levels of network

[Figure: chips containing cores, L2 caches and a NoC, each attached to DRAM and joined by an interconnect]

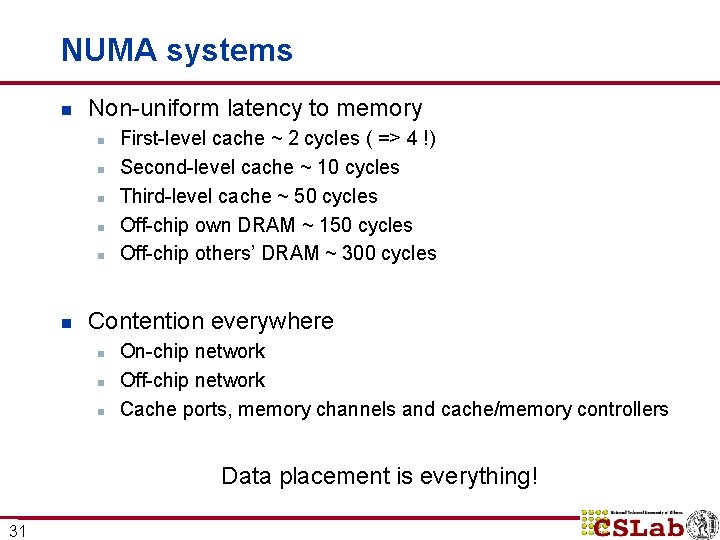

NUMA systems

- Non-uniform latency to memory
  - First-level cache ~ 2 cycles ( => 4 !)
  - Second-level cache ~ 10 cycles
  - Third-level cache ~ 50 cycles
  - Off-chip own DRAM ~ 150 cycles
  - Off-chip others' DRAM ~ 300 cycles
- Contention everywhere
  - On-chip network
  - Off-chip network
  - Cache ports, memory channels and cache/memory controllers
- Data placement is everything!
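A weighted average over these latencies shows why placement matters. The cycle counts below are the ones listed above; the access mix is a made-up assumption for illustration, not measured data, and the function name is hypothetical.

```python
# Latencies in cycles, taken from the list above.
LATENCY = {"L1": 2, "L2": 10, "L3": 50, "own_dram": 150, "remote_dram": 300}


def average_access_cycles(fractions):
    """Weighted mean latency; `fractions` maps a level to the share of accesses served there."""
    total = sum(fractions.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    return sum(LATENCY[level] * share for level, share in fractions.items())


# Hypothetical mix: 99% of accesses hit some cache level, 1% go to DRAM.
CACHE_MIX = {"L1": 0.90, "L2": 0.06, "L3": 0.03}
local = average_access_cycles({**CACHE_MIX, "own_dram": 0.01})
remote = average_access_cycles({**CACHE_MIX, "remote_dram": 0.01})
```

With this mix only 1% of accesses reach DRAM, yet serving them from another chip's DRAM raises the average from about 5.4 to about 6.9 cycles, before any contention is counted.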

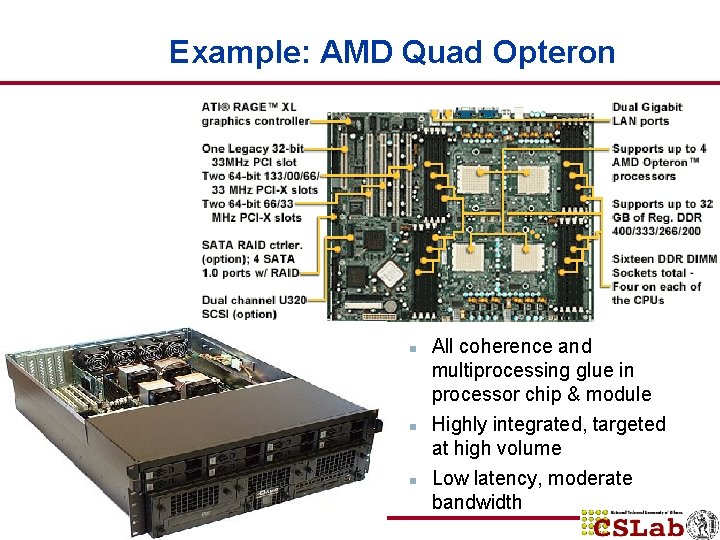

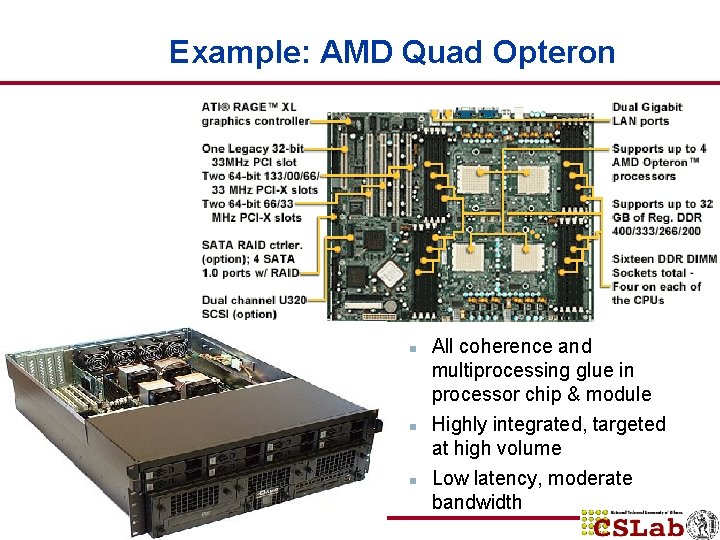

Example: AMD Quad Opteron

- All coherence and multiprocessing glue in processor chip & module
- Highly integrated, targeted at high volume
- Low latency, moderate bandwidth

Summary

- Multiprocessor architecture
  - Cache coherence
  - Memory ordering
  - Interconnects
  - Multithreading/Vector/GPU
- Lots of fundamental tradeoffs to consider in design
- Lots and lots of critical techniques to handle multiprocessor problems
- Lots of interesting examples of good/bad design choices