What's New in Condor? What's Coming? Condor Week


What’s new in Condor? What’s coming? Condor Week 2012 Condor Project Computer Sciences Department University of Wisconsin-Madison

13 Years of Condor Week Edition of “What’s New in Condor” www.condorproject.org

Hint: Example:

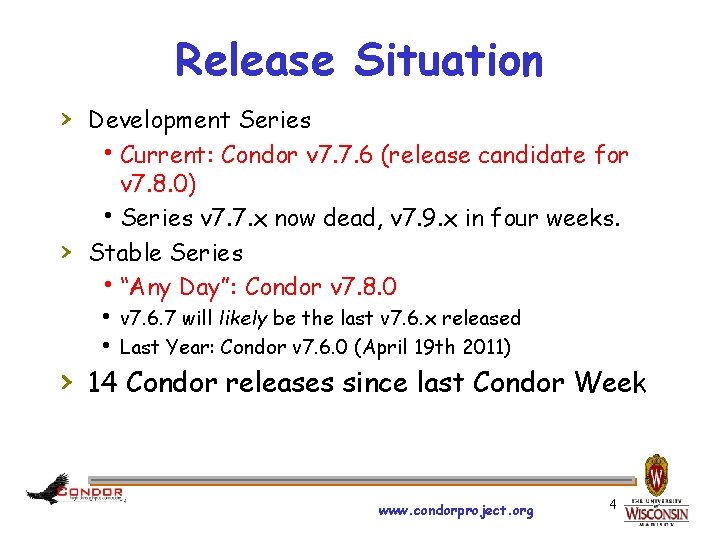

Release Situation

- Development Series
  - Current: Condor v7.7.6 (release candidate for v7.8.0)
  - Series v7.7.x now dead, v7.9.x in four weeks.
- Stable Series
  - “Any Day”: Condor v7.8.0
  - v7.6.7 will likely be the last v7.6.x released
  - Last Year: Condor v7.6.0 (April 19th 2011)
- 14 Condor releases since last Condor Week

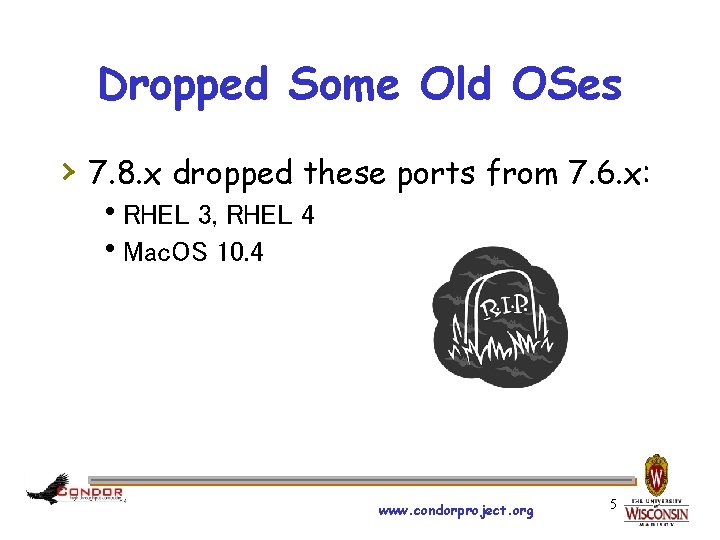

Dropped Some Old OSes

- 7.8.x dropped these ports from 7.6.x:
  - RHEL 3, RHEL 4
  - MacOS 10.4

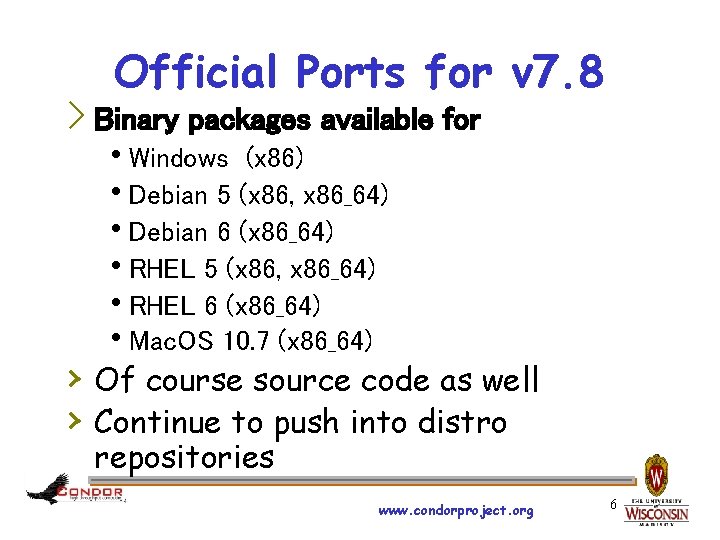

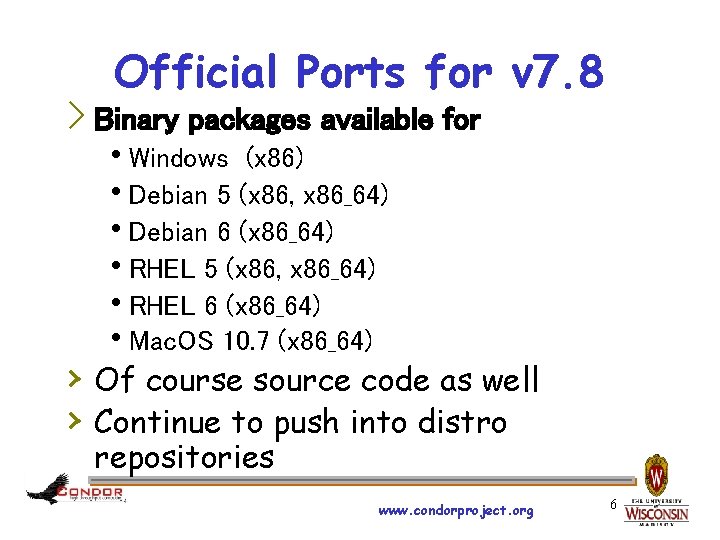

Official Ports for v7.8

- Binary packages available for
  - Windows (x86)
  - Debian 5 (x86, x86_64)
  - Debian 6 (x86_64)
  - RHEL 5 (x86, x86_64)
  - RHEL 6 (x86_64)
  - MacOS 10.7 (x86_64)
- Of course source code as well
- Continue to push into distro repositories

New goodies in v7.6

- Scalability enhancements (always…)
- File Transfer enhancements
- Grid/Cloud Universe enhancements
- Hierarchical Accounting Groups
- Keyboard detection on Vista/Win7
  - Just put “KBDD” in Daemon_List
- Sizeable amount of “Snow Leopard” work…

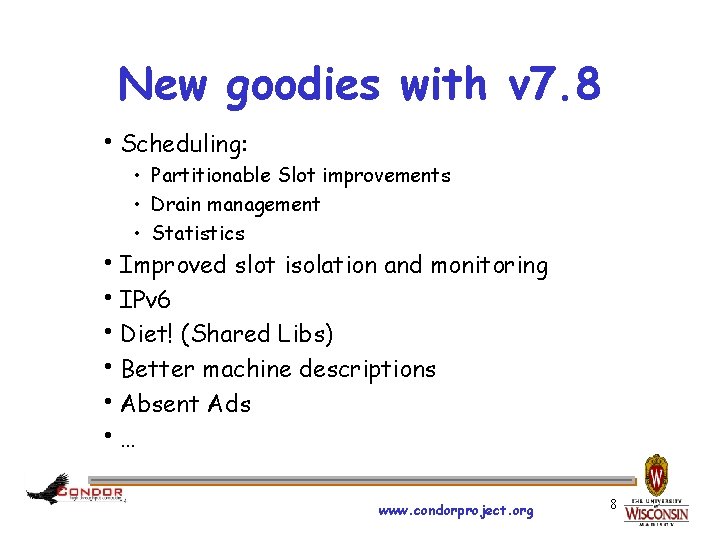

New goodies with v7.8

- Scheduling:
  - Partitionable Slot improvements
  - Drain management
  - Statistics
- Improved slot isolation and monitoring
- IPv6
- Diet! (Shared Libs)
- Better machine descriptions
- Absent Ads
- …

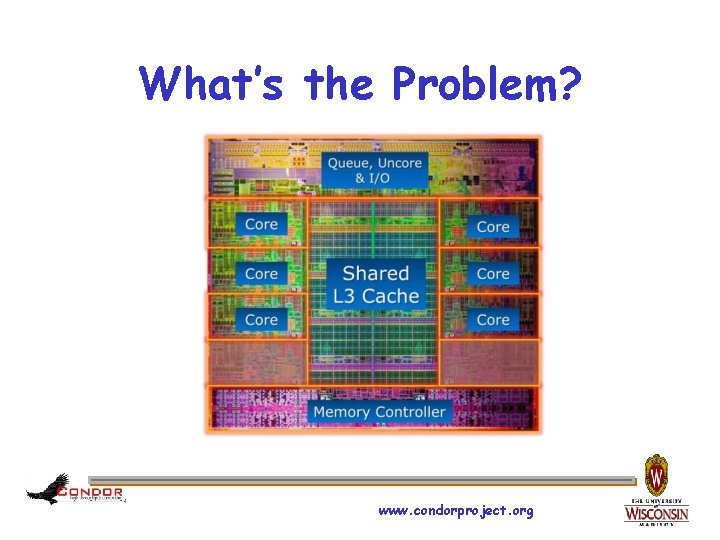

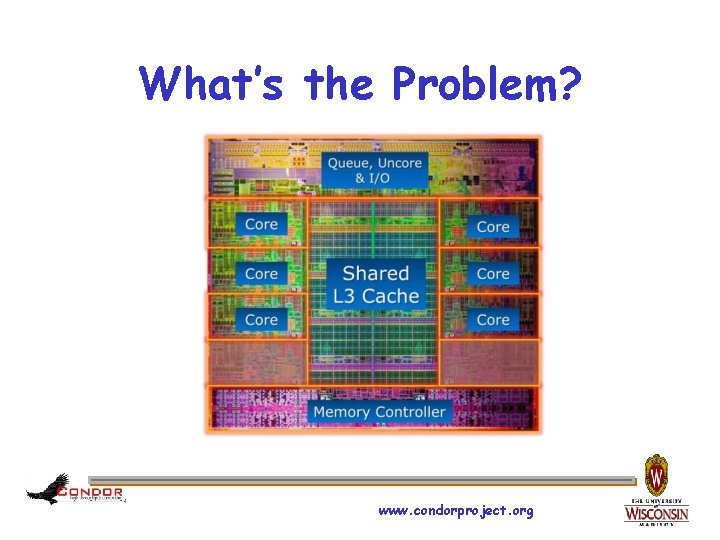

What’s the Problem?

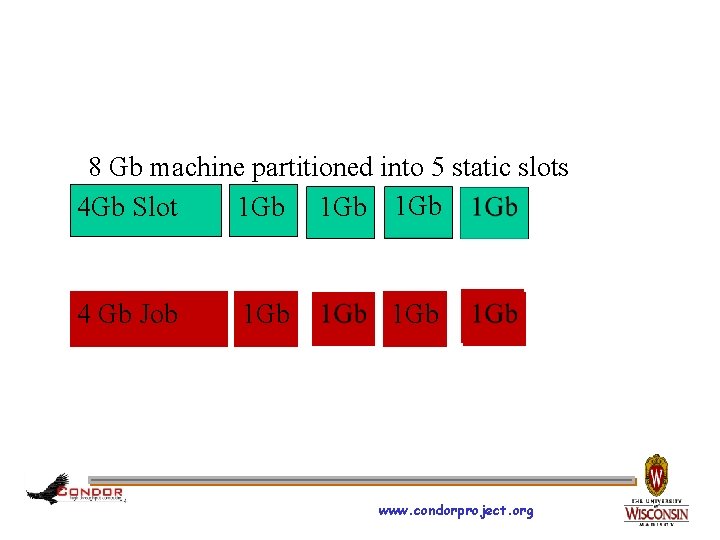

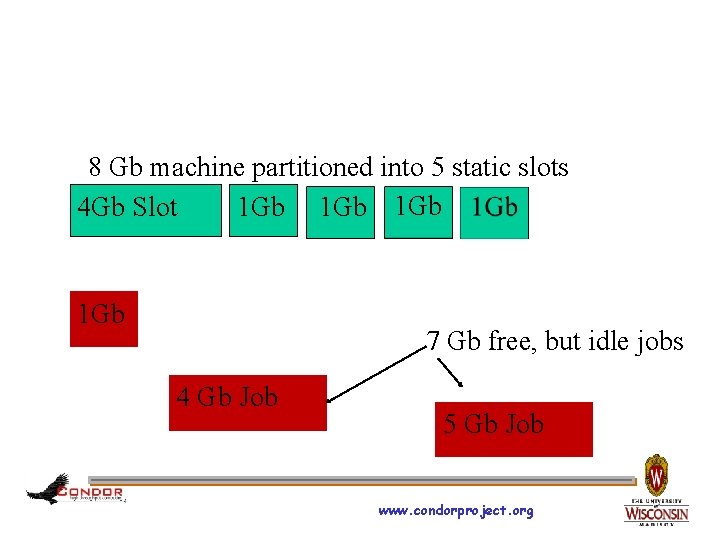

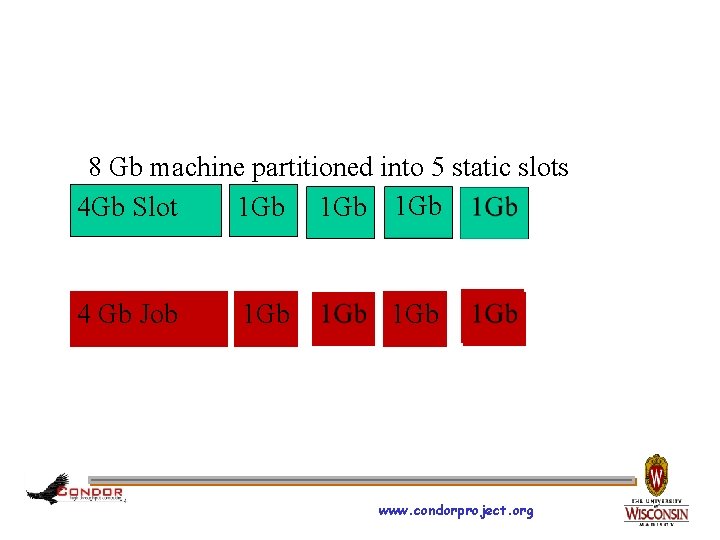

8 GB machine partitioned into 5 static slots (figure: one 4 GB slot and 1 GB slots; a 4 GB job)

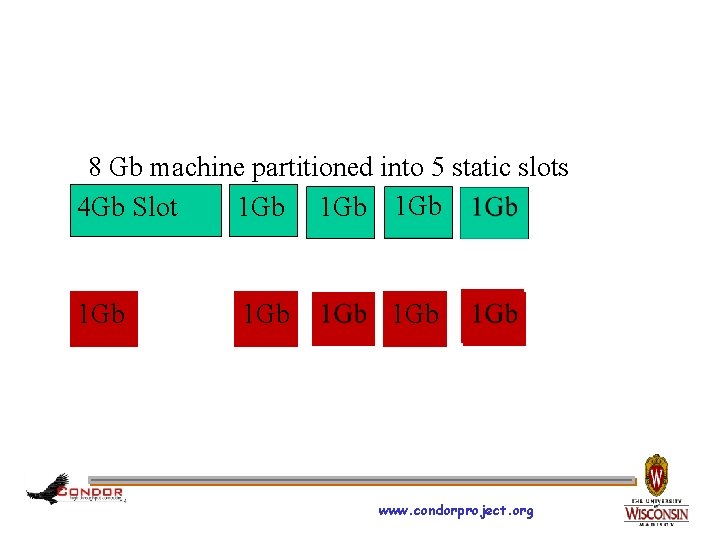

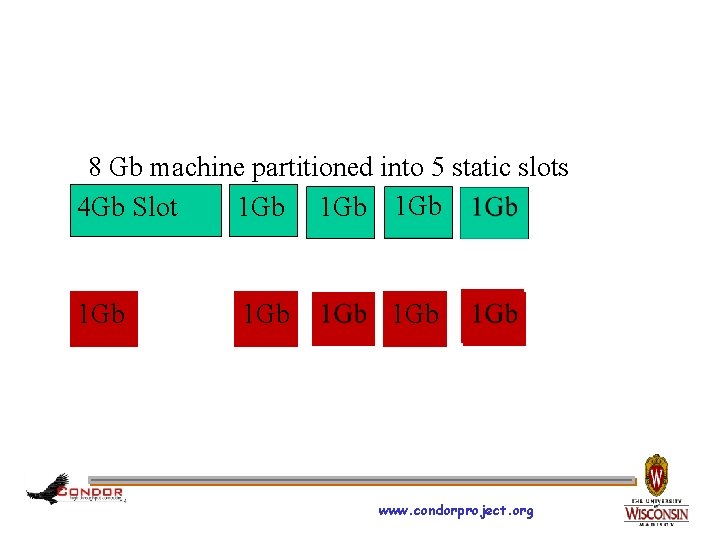

8 GB machine partitioned into 5 static slots (figure: one 4 GB slot and 1 GB slots)

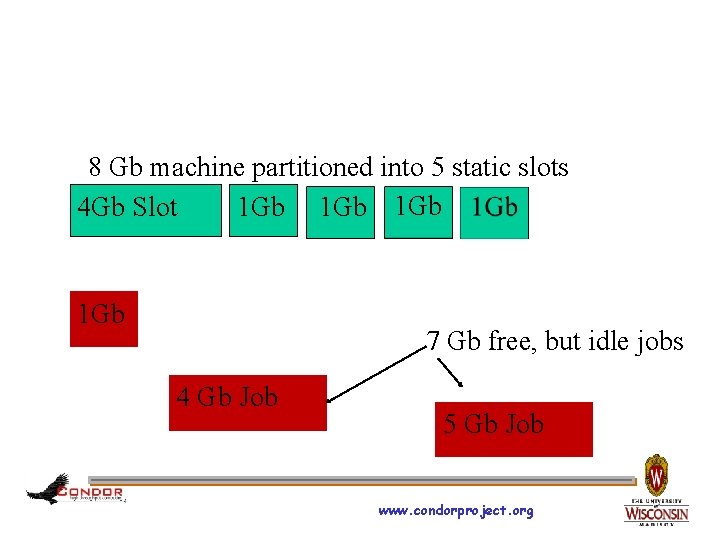

8 GB machine partitioned into 5 static slots (figure: one 4 GB slot and 1 GB slots). 7 GB free, but idle jobs: a 4 GB job and a 5 GB job.

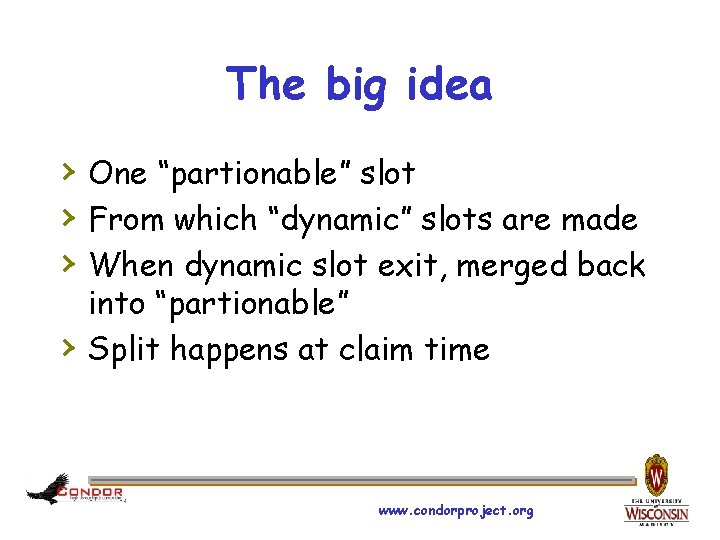

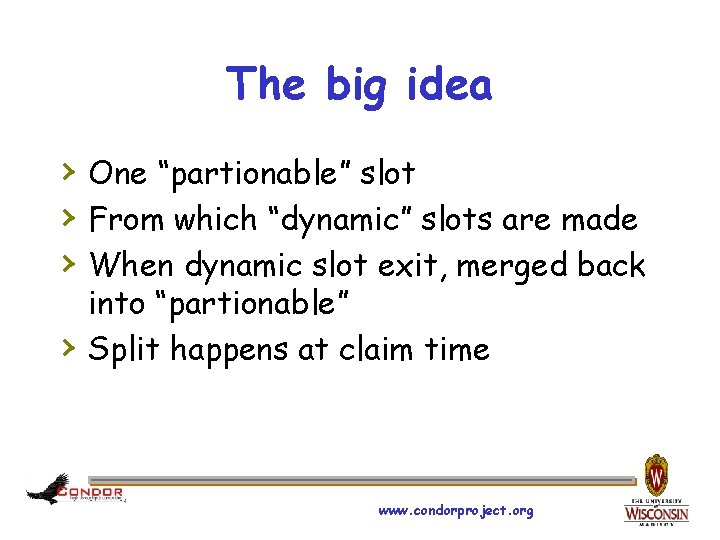

The big idea

- One “partitionable” slot
- From which “dynamic” slots are made
- When a dynamic slot exits, it is merged back into the “partitionable” slot
- Split happens at claim time
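The split-and-merge idea above can be illustrated with a toy model. This is only a sketch and not Condor's implementation: the class `PartitionableSlot`, its methods, and the job names are hypothetical, and memory is the only resource tracked.

```python
from dataclasses import dataclass, field


@dataclass
class PartitionableSlot:
    """Toy model of a partitionable slot (hypothetical, memory in MB only)."""
    total_mb: int
    dynamic: dict = field(default_factory=dict)  # job name -> MB carved off

    @property
    def free_mb(self) -> int:
        # Whatever has not been carved into dynamic slots is still free.
        return self.total_mb - sum(self.dynamic.values())

    def claim(self, job: str, request_mb: int) -> bool:
        """Split off a dynamic slot at claim time if enough memory is left."""
        if request_mb > self.free_mb:
            return False
        self.dynamic[job] = request_mb
        return True

    def release(self, job: str) -> None:
        """The dynamic slot exits and its memory merges back into the parent."""
        self.dynamic.pop(job, None)


pslot = PartitionableSlot(total_mb=8192)
first = pslot.claim("job_4gb", 4096)   # fits: 8192 MB free
second = pslot.claim("job_5gb", 5120)  # does not fit: only 4096 MB left
pslot.release("job_4gb")               # memory returns to the parent slot
third = pslot.claim("job_5gb", 5120)   # now fits
print(first, second, third, pslot.free_mb)
```

Unlike the static 4 GB + 1 GB layout, the 5 GB job can run here as soon as enough memory has been merged back.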

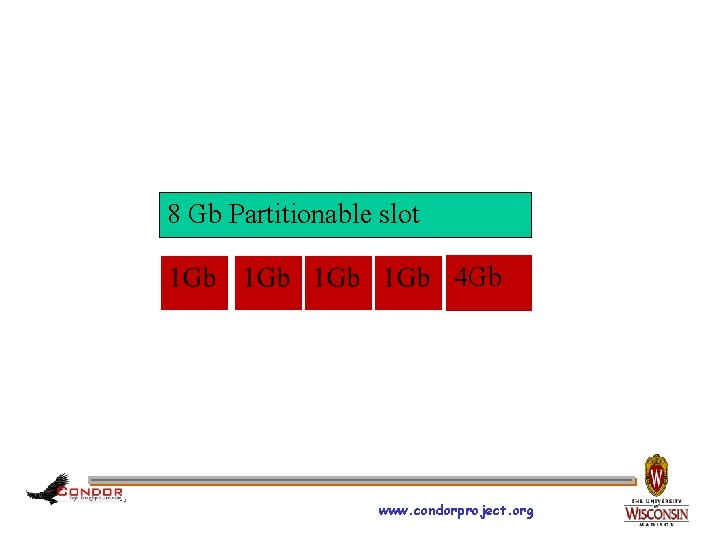

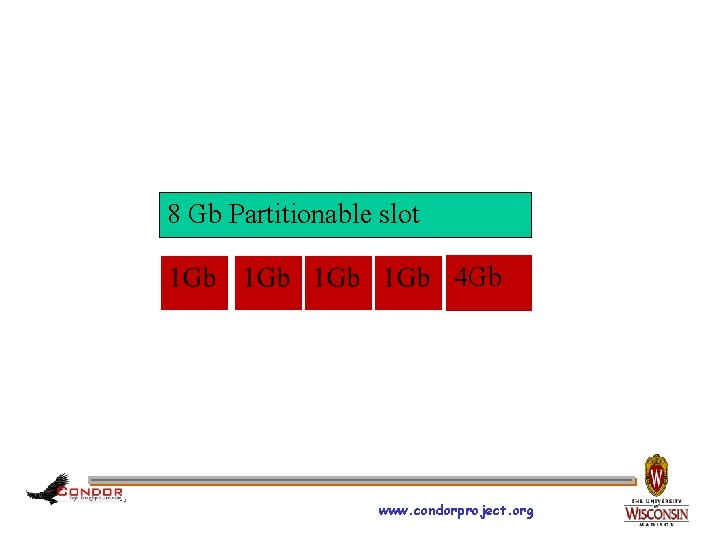

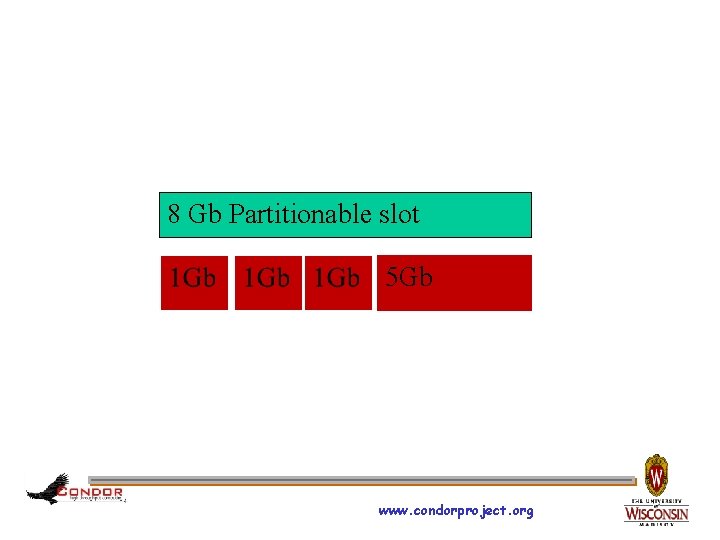

8 GB Partitionable slot (figure label: 4 GB)

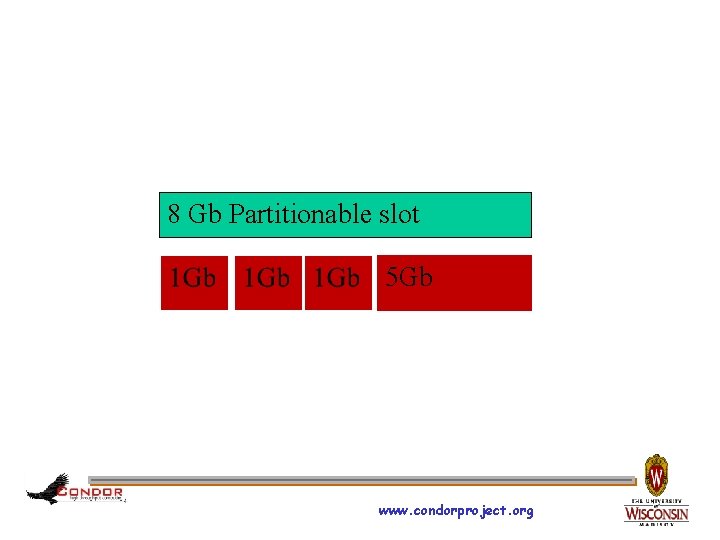

8 GB Partitionable slot (figure label: 5 GB)

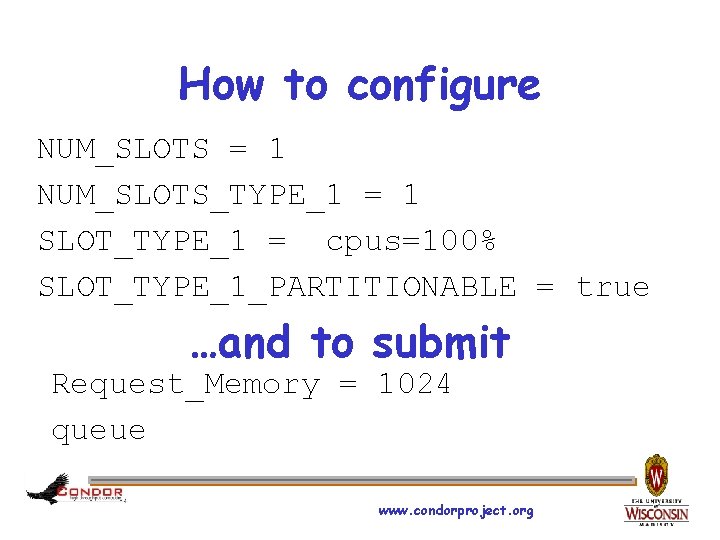

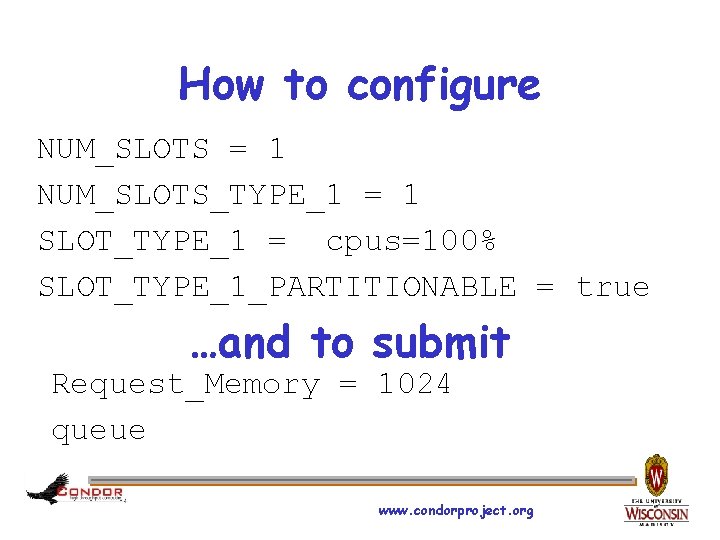

How to configure

    NUM_SLOTS = 1
    NUM_SLOTS_TYPE_1 = 1
    SLOT_TYPE_1 = cpus=100%
    SLOT_TYPE_1_PARTITIONABLE = true

…and to submit

    Request_Memory = 1024
    queue

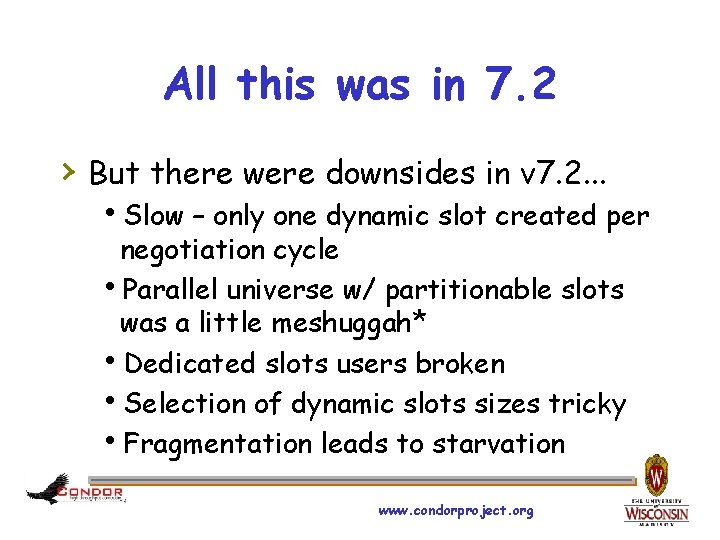

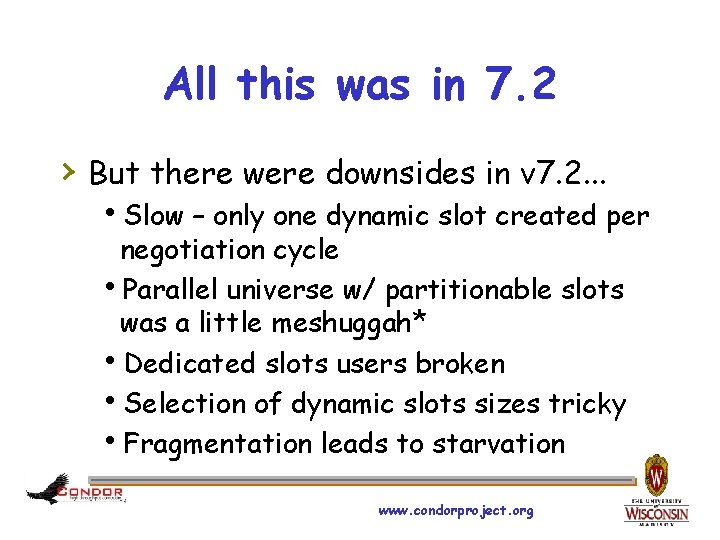

All this was in 7.2

- But there were downsides in v7.2...
  - Slow: only one dynamic slot created per negotiation cycle
  - Parallel universe w/ partitionable slots was a little meshuggah*
  - Dedicated slots users broken
  - Selection of dynamic slots sizes tricky
  - Fragmentation leads to starvation

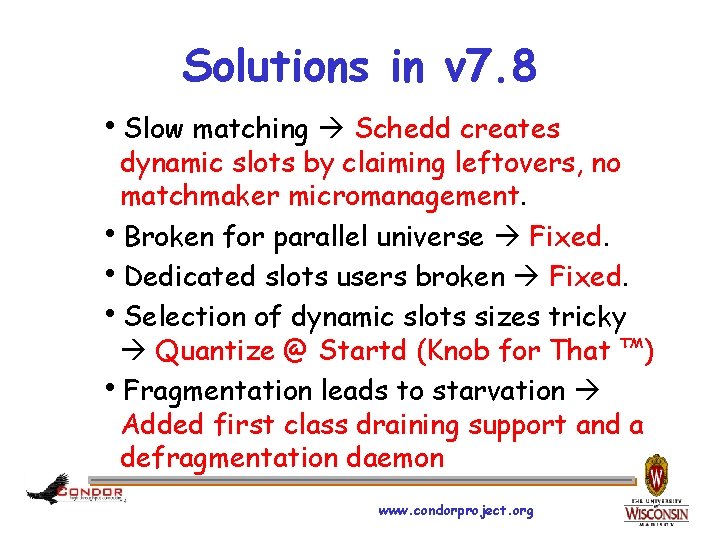

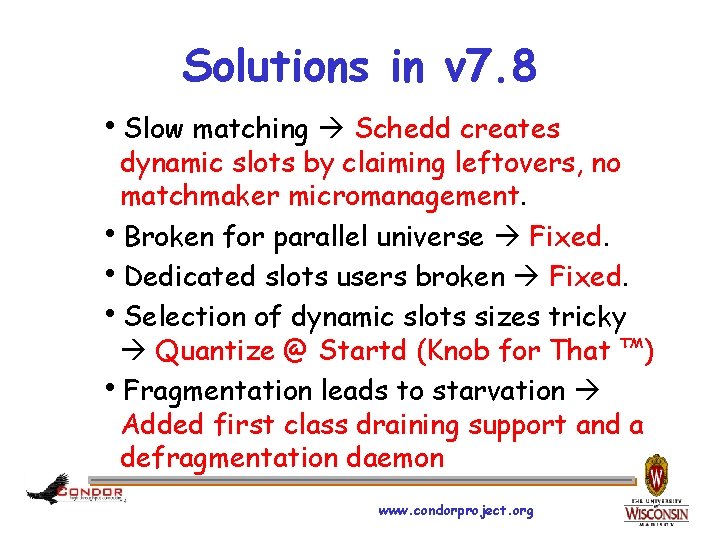

Solutions in v7.8

- Slow matching: Schedd creates dynamic slots by claiming leftovers, no matchmaker micromanagement.
- Broken for parallel universe: Fixed.
- Dedicated slots users broken: Fixed.
- Selection of dynamic slots sizes tricky: Quantize @ Startd (Knob for That ™)
- Fragmentation leads to starvation: Added first class draining support and a defragmentation daemon
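As a rough illustration of what quantizing slot sizes means, the sketch below rounds a memory request up to the next multiple of a fixed quantum. The Python helper `quantize` is a hypothetical stand-in written for this example, and the 1024 MB quantum is an assumed value; the slides do not give the actual knob name or its default.

```python
def quantize(value: int, quantum: int) -> int:
    """Round value up to the next integer multiple of quantum."""
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    # Ceiling division using integers only, then scale back up.
    return -(-value // quantum) * quantum


# Assumed quantum of 1024 MB, purely for illustration.
for request_mb in (1, 1024, 1500, 4097):
    print(request_mb, "->", quantize(request_mb, 1024))
```

Rounding requests to a few standard sizes means leftover pieces are more likely to be reusable by later jobs.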

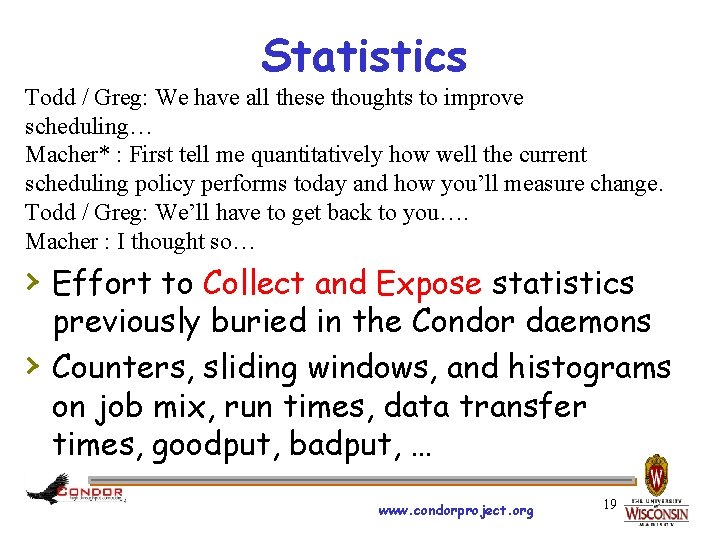

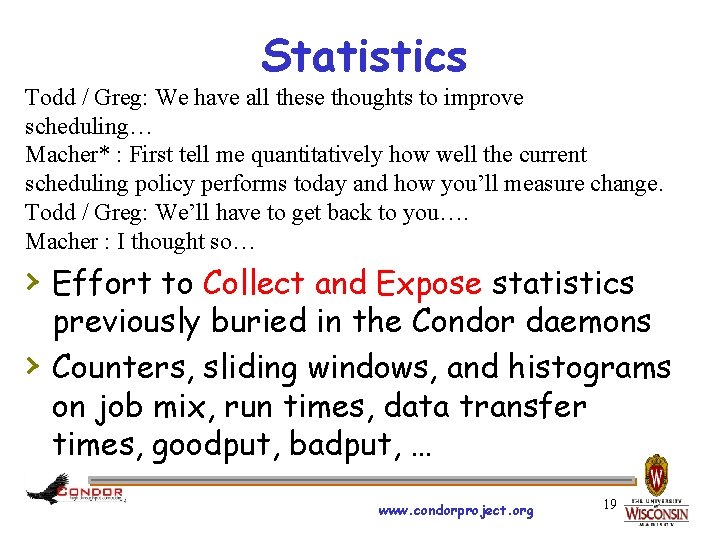

Statistics

Todd / Greg: We have all these thoughts to improve scheduling…

Macher*: First tell me quantitatively how well the current scheduling policy performs today and how you’ll measure change.

Todd / Greg: We’ll have to get back to you….

Macher: I thought so…

- Effort to collect and expose statistics previously buried in the Condor daemons
- Counters, sliding windows, and histograms on job mix, run times, data transfer times, goodput, badput, …
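To make "counters and sliding windows" concrete, here is a minimal sketch of a statistic that keeps both a lifetime total and a recent-window total. It is not how the Condor daemons implement their statistics; the class `SlidingWindowCounter` and the 60-second window are assumptions made for this example.

```python
from collections import deque


class SlidingWindowCounter:
    """Lifetime counter plus a total over the most recent window (hypothetical)."""

    def __init__(self, window_seconds: float):
        self.window = window_seconds
        self.events = deque()  # (timestamp, amount) pairs, oldest first
        self.total = 0         # lifetime counter, never expires

    def add(self, now: float, amount: int = 1) -> None:
        self.events.append((now, amount))
        self.total += amount
        self._expire(now)

    def recent(self, now: float) -> int:
        """Sum of amounts recorded within the last window_seconds."""
        self._expire(now)
        return sum(amount for _, amount in self.events)

    def _expire(self, now: float) -> None:
        # Drop events that have aged out of the window.
        while self.events and self.events[0][0] <= now - self.window:
            self.events.popleft()


jobs_completed = SlidingWindowCounter(window_seconds=60)
jobs_completed.add(now=0, amount=5)
jobs_completed.add(now=30, amount=2)
jobs_completed.add(now=70, amount=1)
print(jobs_completed.total, jobs_completed.recent(now=70))
```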

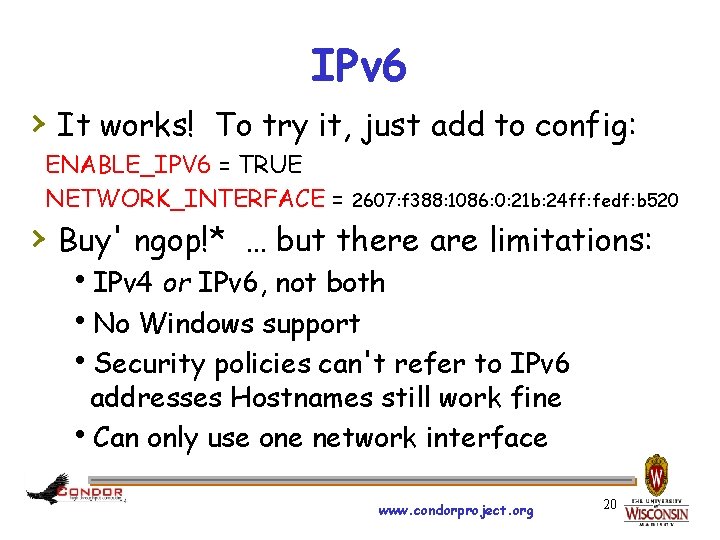

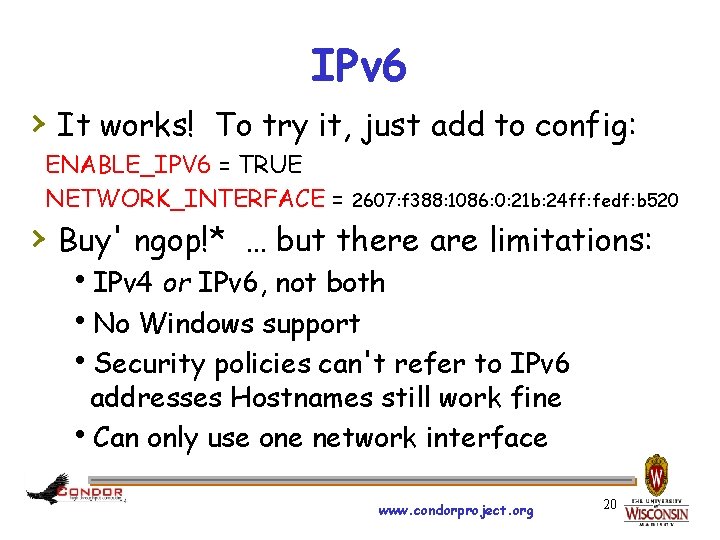

IPv6

- It works! To try it, just add to config:

      ENABLE_IPV6 = TRUE
      NETWORK_INTERFACE = 2607:f388:1086:0:21b:24ff:fedf:b520

- Buy' ngop!* … but there are limitations:
  - IPv4 or IPv6, not both
  - No Windows support
  - Security policies can't refer to IPv6 addresses (hostnames still work fine)
  - Can only use one network interface

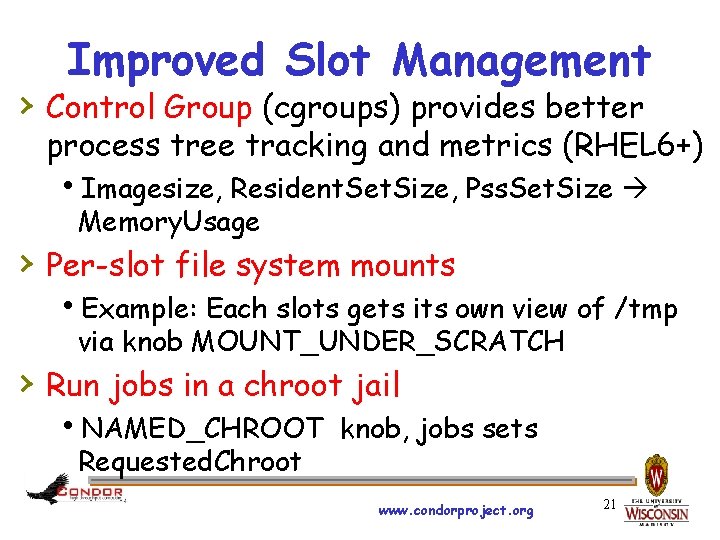

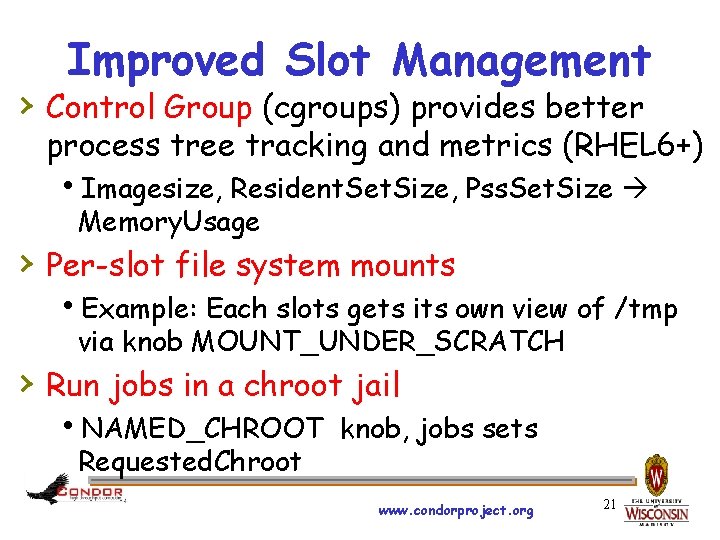

Improved Slot Management

- Control Group (cgroups) provides better process tree tracking and metrics (RHEL 6+)
  - Imagesize, ResidentSetSize, PssSetSize, MemoryUsage
- Per-slot file system mounts
  - Example: Each slot gets its own view of /tmp via knob MOUNT_UNDER_SCRATCH
- Run jobs in a chroot jail
  - NAMED_CHROOT knob, job sets RequestedChroot

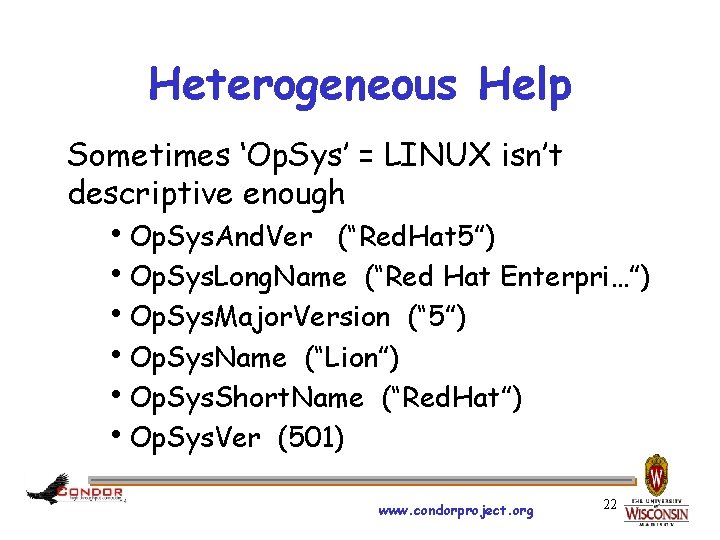

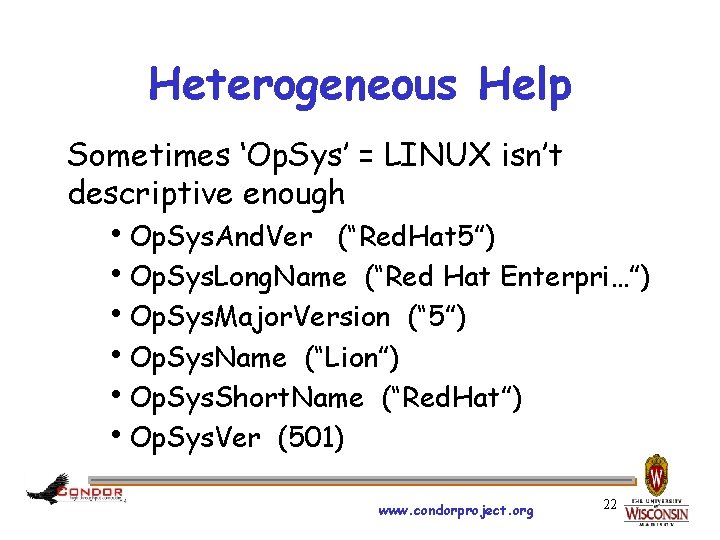

Heterogeneous Help

Sometimes ‘OpSys’ = LINUX isn’t descriptive enough

- OpSysAndVer (“RedHat5”)
- OpSysLongName (“Red Hat Enterpri…”)
- OpSysMajorVersion (“5”)
- OpSysName (“Lion”)
- OpSysShortName (“RedHat”)
- OpSysVer (501)

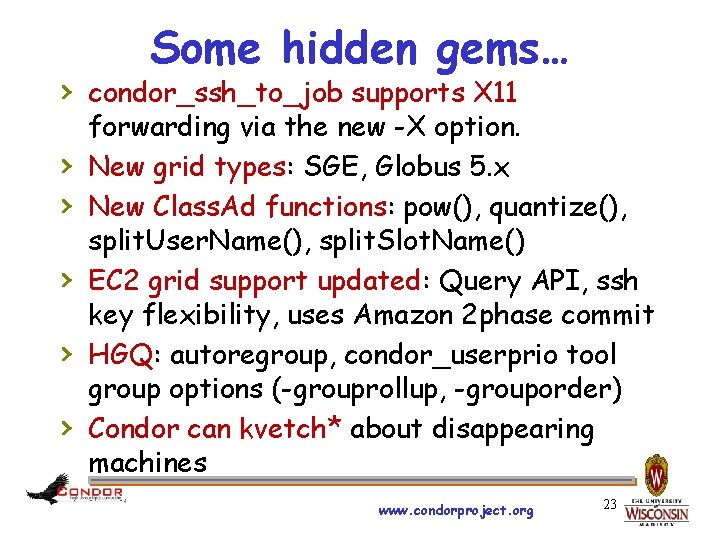

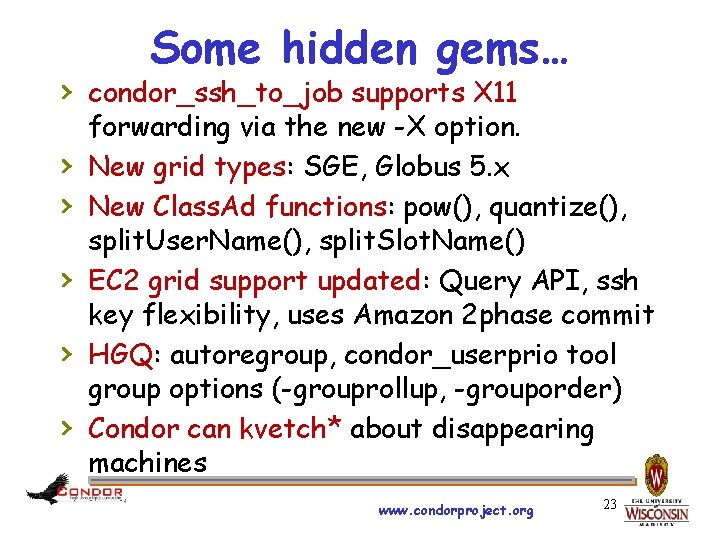

Some hidden gems…

- condor_ssh_to_job supports X11 forwarding via the new -X option.
- New grid types: SGE, Globus 5.x
- New ClassAd functions: pow(), quantize(), splitUserName(), splitSlotName()
- EC2 grid support updated: Query API, ssh key flexibility, uses Amazon 2 phase commit
- HGQ: autoregroup, condor_userprio tool group options (-grouprollup, -grouporder)
- Condor can kvetch* about disappearing machines

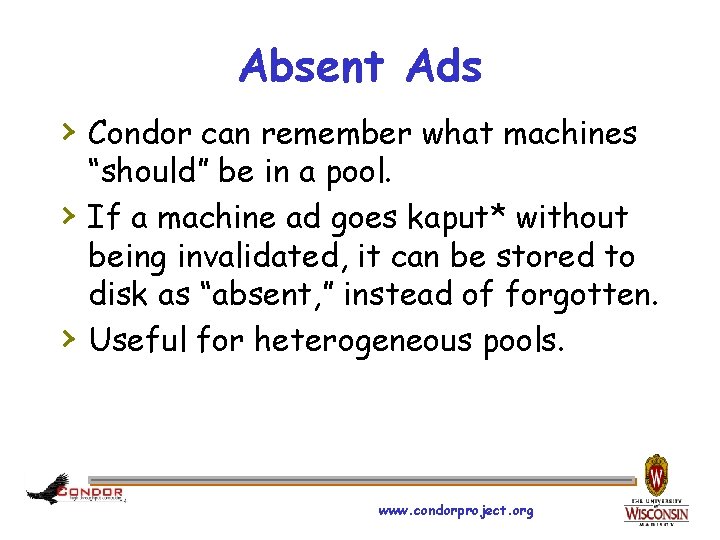

Absent Ads

- Condor can remember what machines “should” be in a pool.
- If a machine ad goes kaput* without being invalidated, it can be stored to disk as “absent,” instead of forgotten.
- Useful for heterogeneous pools.

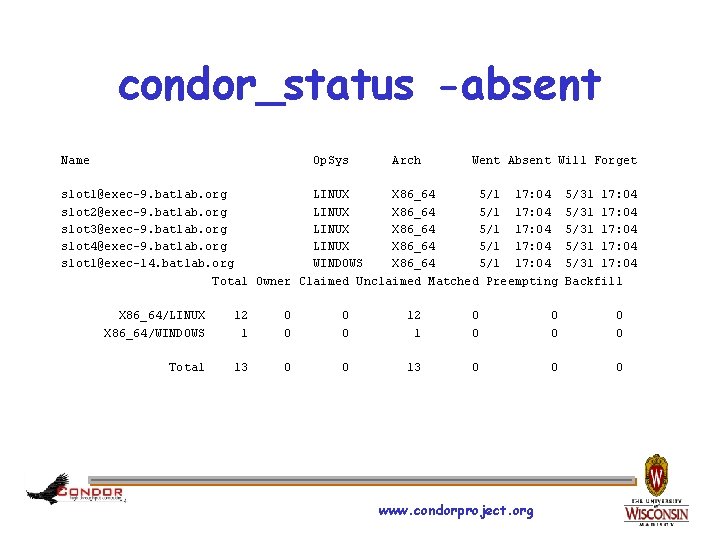

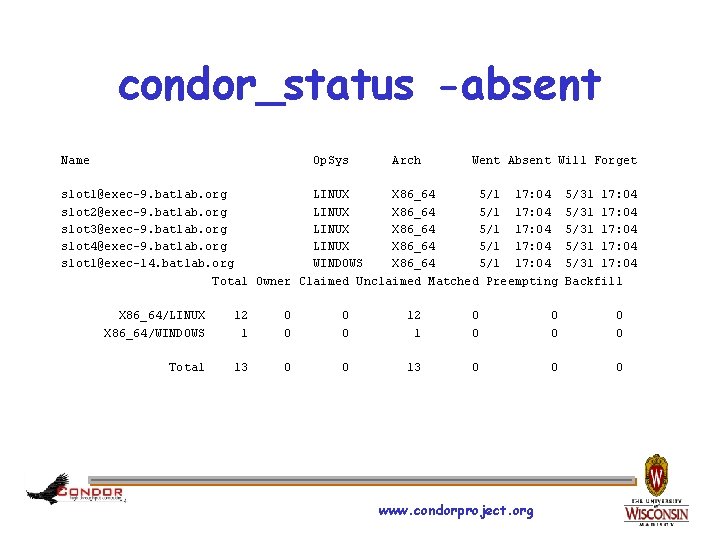

condor_status -absent

    Name                      OpSys    Arch    Went Absent  Will Forget
    slot1@exec-9.batlab.org   LINUX    X86_64  5/1 17:04    5/31 17:04
    slot2@exec-9.batlab.org   LINUX    X86_64  5/1 17:04    5/31 17:04
    slot3@exec-9.batlab.org   LINUX    X86_64  5/1 17:04    5/31 17:04
    slot4@exec-9.batlab.org   LINUX    X86_64  5/1 17:04    5/31 17:04
    slot1@exec-14.batlab.org  WINDOWS  X86_64  5/1 17:04    5/31 17:04

                    Total  Owner  Claimed  Unclaimed  Matched  Preempting  Backfill
    X86_64/LINUX       12
    X86_64/WINDOWS      1
    Total              13      0        0          0

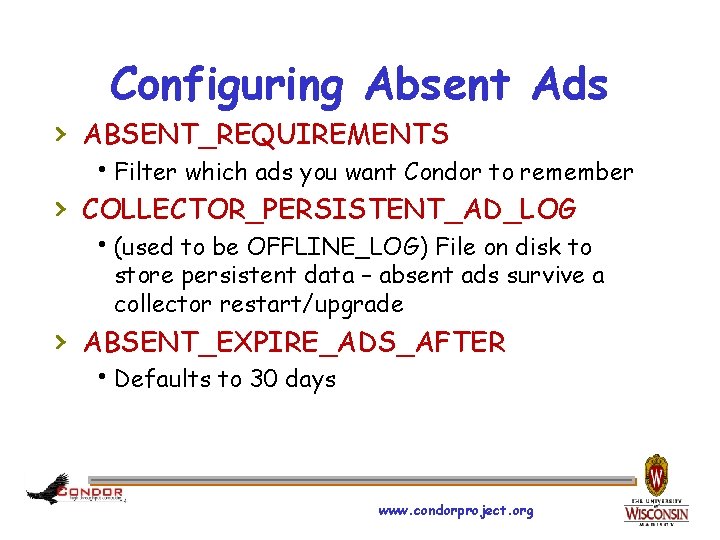

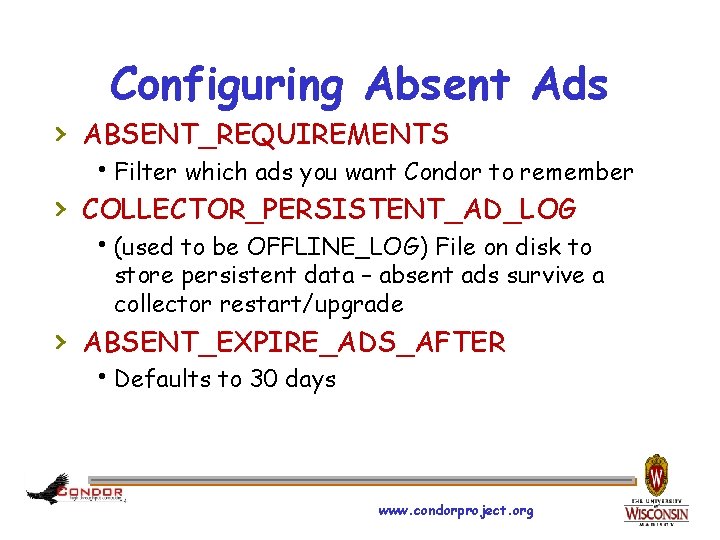

Configuring Absent Ads

- ABSENT_REQUIREMENTS
  - Filter which ads you want Condor to remember
- COLLECTOR_PERSISTENT_AD_LOG (used to be OFFLINE_LOG)
  - File on disk to store persistent data; absent ads survive a collector restart/upgrade
- ABSENT_EXPIRE_ADS_AFTER
  - Defaults to 30 days
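The 30-day default lines up with the sample `condor_status -absent` output, where an ad that went absent on 5/1 at 17:04 is forgotten on 5/31 at 17:04. The arithmetic can be checked with a short sketch; the function `will_forget` is hypothetical, and the year 2012 is an assumption, since the sample output shows only month and day.

```python
from datetime import datetime, timedelta

# Default expiry from the slide: 30 days.
ABSENT_EXPIRE_ADS_AFTER = timedelta(days=30)


def will_forget(went_absent: datetime) -> datetime:
    """Time at which an absent ad would be dropped (hypothetical helper)."""
    return went_absent + ABSENT_EXPIRE_ADS_AFTER


# Year is assumed; only "5/1 17:04" appears in the sample output.
went_absent = datetime(2012, 5, 1, 17, 4)
forget_at = will_forget(went_absent)
print(forget_at.month, forget_at.day, forget_at.strftime("%H:%M"))
```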

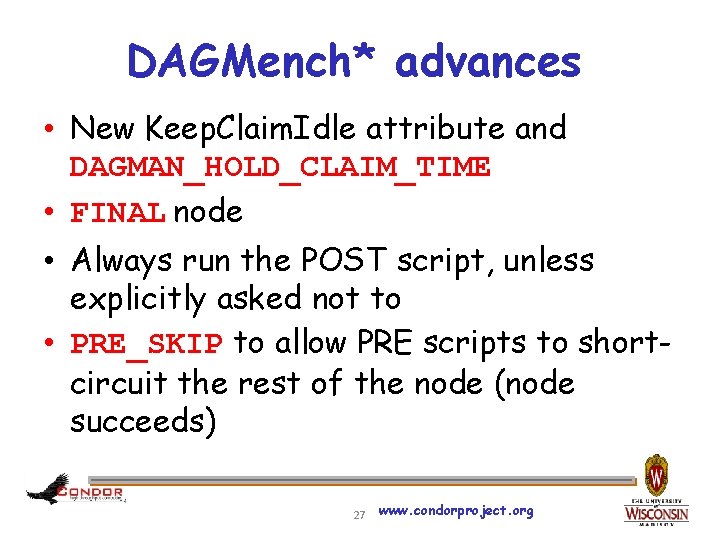

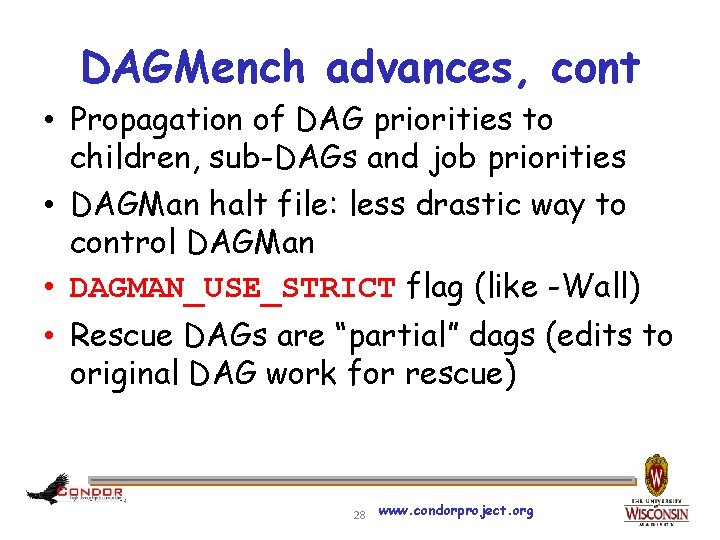

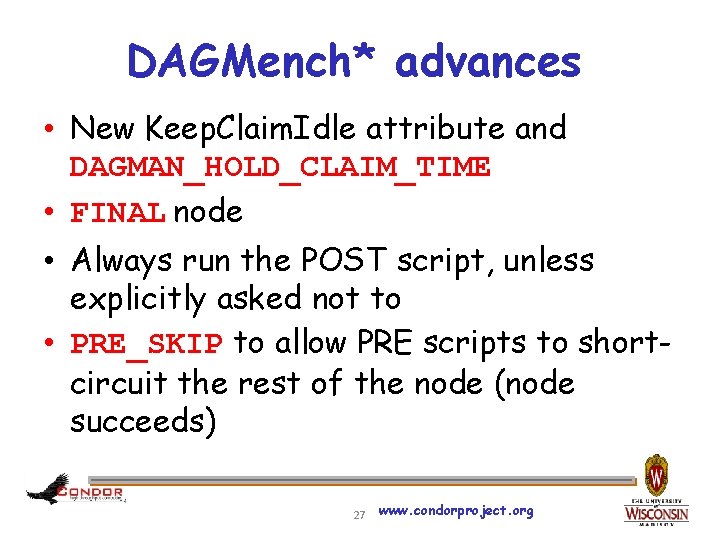

DAGMench* advances

- New KeepClaimIdle attribute and DAGMAN_HOLD_CLAIM_TIME
- FINAL node
- Always run the POST script, unless explicitly asked not to
- PRE_SKIP to allow PRE scripts to short-circuit the rest of the node (node succeeds)

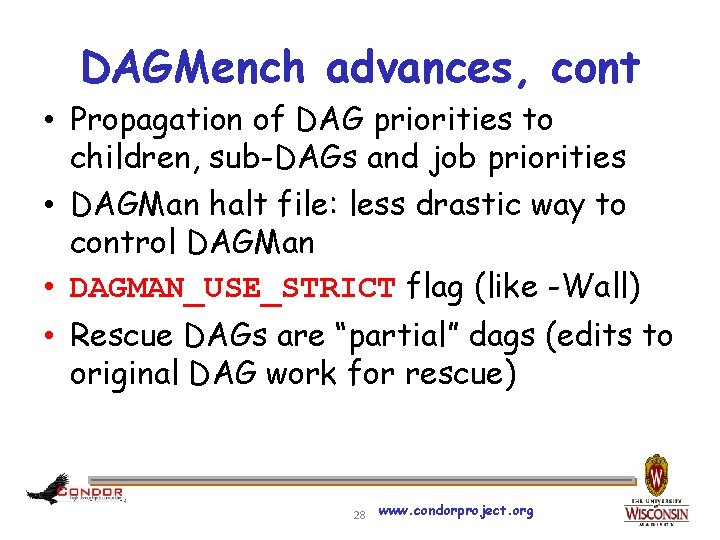

DAGMench advances, cont.

- Propagation of DAG priorities to children, sub-DAGs and job priorities
- DAGMan halt file: less drastic way to control DAGMan
- DAGMAN_USE_STRICT flag (like -Wall)
- Rescue DAGs are “partial” DAGs (edits to original DAG work for rescue)

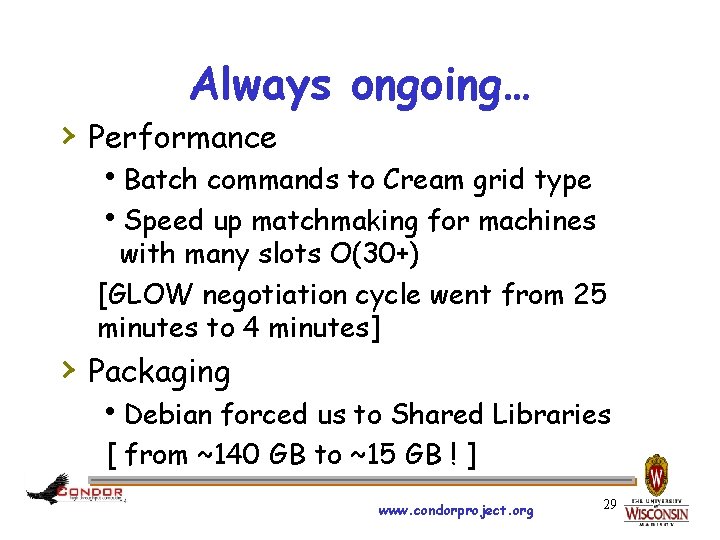

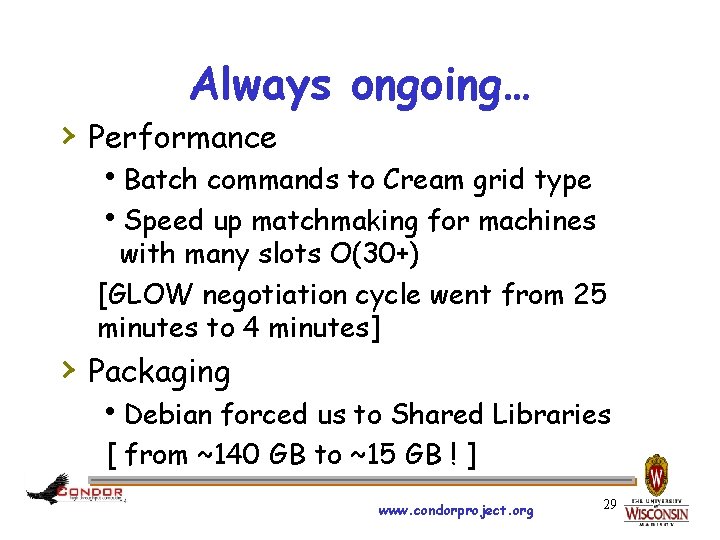

Always ongoing…

- Performance
  - Batch commands to Cream grid type
  - Speed up matchmaking for machines with many slots O(30+) [GLOW negotiation cycle went from 25 minutes to 4 minutes]
- Packaging
  - Debian forced us to Shared Libraries [from ~140 GB to ~15 GB!]


Terms of License

Any and all dates in these slides are relative from a date hereby unspecified in the event of a likely situation involving a frequent condition. Viewing, use, reproduction, display, modification and redistribution of these slides, with or without modification, in source and binary forms, is permitted only after a deposit by said user into PayPal accounts registered to Todd Tannenbaum ….

What to do, what to do…

- Talk to community
- Prioritize, categorize
- Plan (Design Document)
- Implement

https://condor-wiki.cs.wisc.edu/index.cgi/tktview?tn=2961

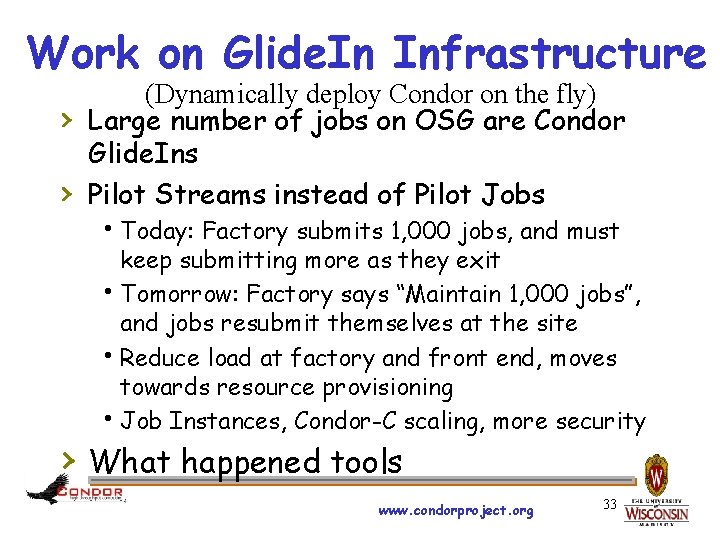

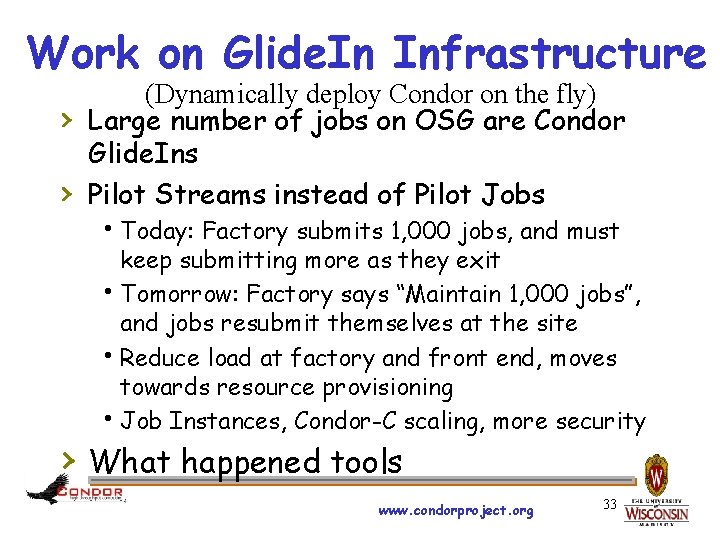

Work on GlideIn Infrastructure (Dynamically deploy Condor on the fly)

- Large number of jobs on OSG are Condor GlideIns
- Pilot Streams instead of Pilot Jobs
  - Today: Factory submits 1,000 jobs, and must keep submitting more as they exit
  - Tomorrow: Factory says “Maintain 1,000 jobs”, and jobs resubmit themselves at the site
  - Reduce load at factory and front end, moves towards resource provisioning
  - Job Instances, Condor-C scaling, more security
- What happened tools

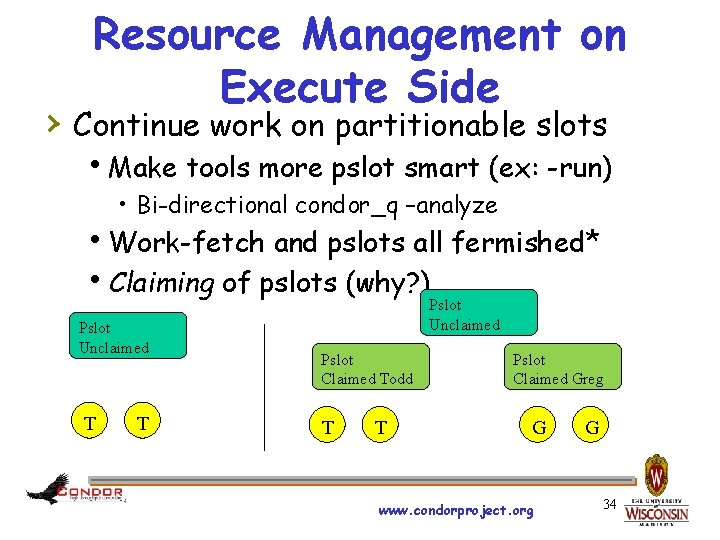

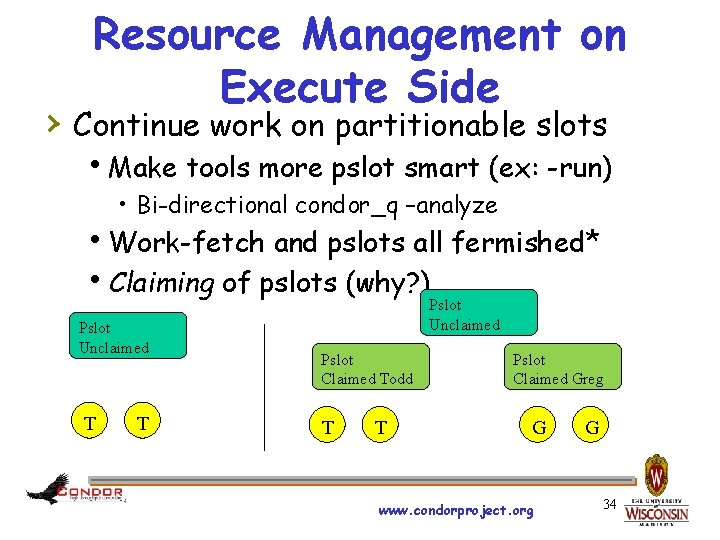

Resource Management on Execute Side

- Continue work on partitionable slots
  - Make tools more pslot smart (ex: -run)
    - Bi-directional condor_q -analyze
  - Work-fetch and pslots all fermished*
  - Claiming of pslots (why?)

(Figure: pslots shown as Unclaimed, Claimed by Todd, and Claimed by Greg, with slots labeled T and G.)

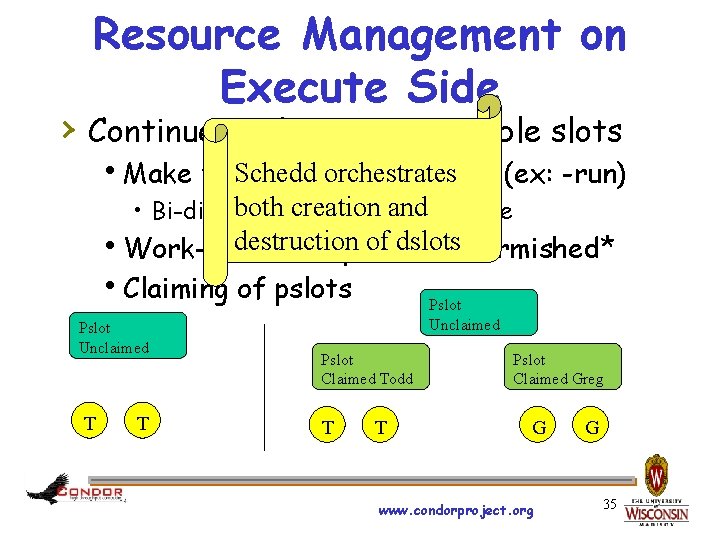

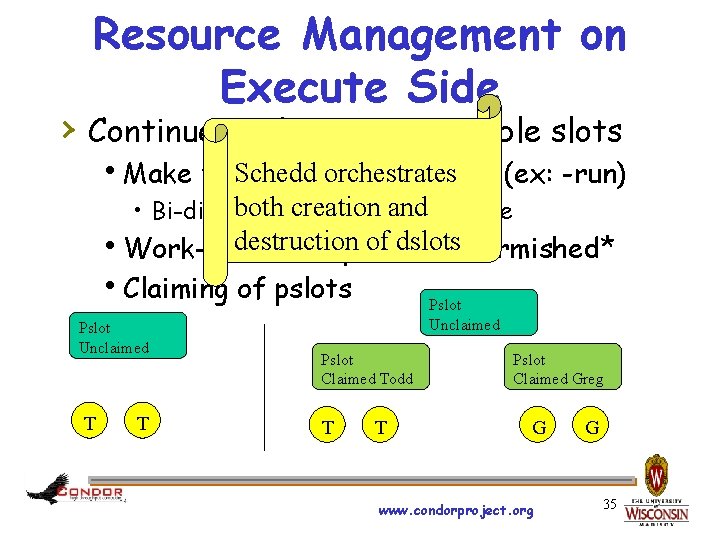

Resource Management on Execute Side

- Continue work on partitionable slots
  - Make tools more pslot smart (ex: -run)
    - Bi-directional condor_q -analyze
  - Work-fetch and pslots all fermished*
  - Claiming of pslots

Callout: Schedd orchestrates both creation and destruction of dslots

(Figure: pslots shown as Unclaimed, Claimed by Todd, and Claimed by Greg, with slots labeled T and G.)

Resource Management on Execute Side, cont.

- GPUs
  - Support “out of the box” for CUDA: discovery, monitor, provision, isolate, validate
- Continue Job Sandboxing work
  - On Linux: Continue to leverage cgroups, esp. RAM usage isolation, network isolation via network namespaces
  - Use JobObjects, IO manager on Windows

Resource Management on Submit Side

- Local Universe Jobs managed by a co-located Startd
- Sandbox Movement
  - Offload sandbox movement from the submit machine
  - Leverage HTTP caching in a more user-friendly manner
- Optimize Shadow usage

Oy vey!* Last but not least…

- ClassAd Scalability: (1) Memory, (2) Performance
- Heard earlier about: Bosco Work, UCS work
- Ckpt in Vanilla Universe
- Overlap transfer of sandbox results with launch of the next job

Challenge! “Overlap transfer of sandbox results with launch of the next job”

- Will it help your job mix? How much time is spent transferring back output?
- With Condor v7.7.2 or greater, schedd statistics can answer that!

Thank you! Keep the community chatter going on condor-users!

    Requirements = HonorMomAndDad == True && Steal =?= False && Murder =?= False…
    Rank = Kindness + Modesty …
