What should be done at the Low Level

What should be done at the Low Level? 16 -721: Learning-Based Methods in Vision A. Efros, CMU, Spring 2009

Class Introductions • • • Name: Research area / project / advisor What you want to learn in this class? When I am not working, I _______ Favorite fruit:

Analysis Projects / Presentations Wed: Varun note-taker: Dan Next Wed: Dan note-taker: Edward Dan and Edward need to meet with me ASAP Varun needs to meet second time

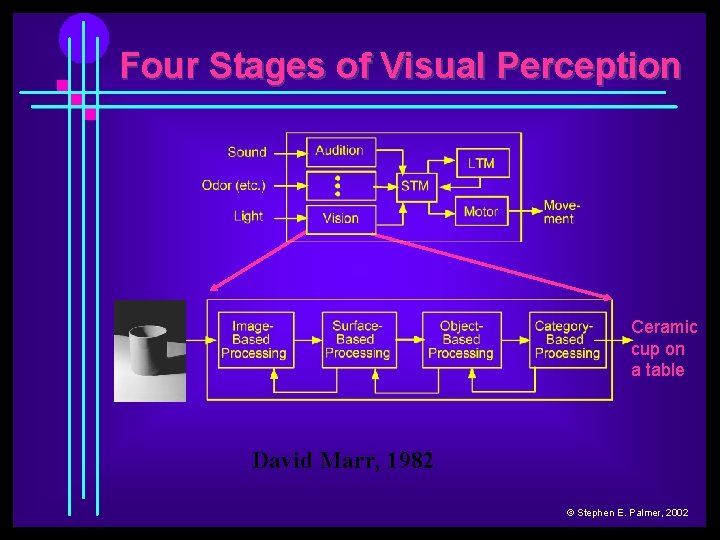

Four Stages of Visual Perception Ceramic cup on a table David Marr, 1982 © Stephen E. Palmer, 2002

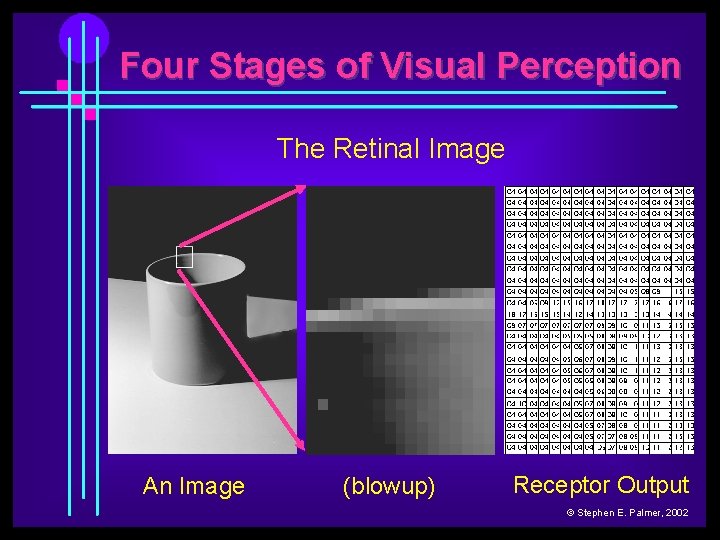

Four Stages of Visual Perception The Retinal Image An Image (blowup) Receptor Output © Stephen E. Palmer, 2002

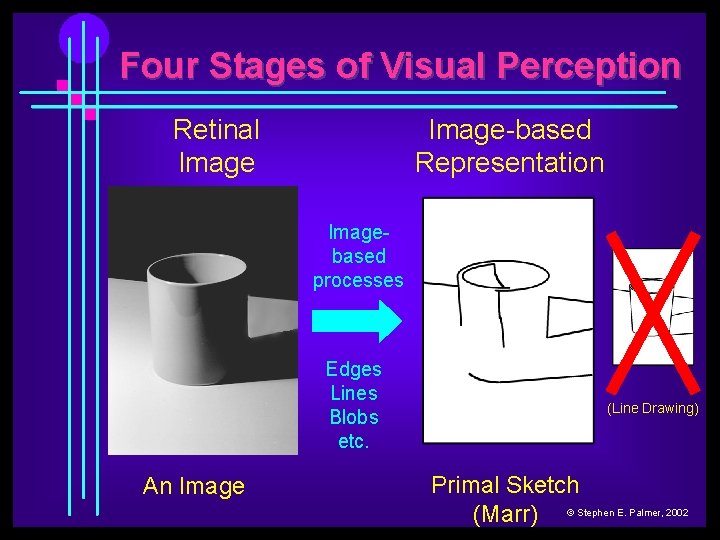

Four Stages of Visual Perception Retinal Image-based Representation Imagebased processes Edges Lines Blobs etc. An Image (Line Drawing) Primal Sketch (Marr) © Stephen E. Palmer, 2002

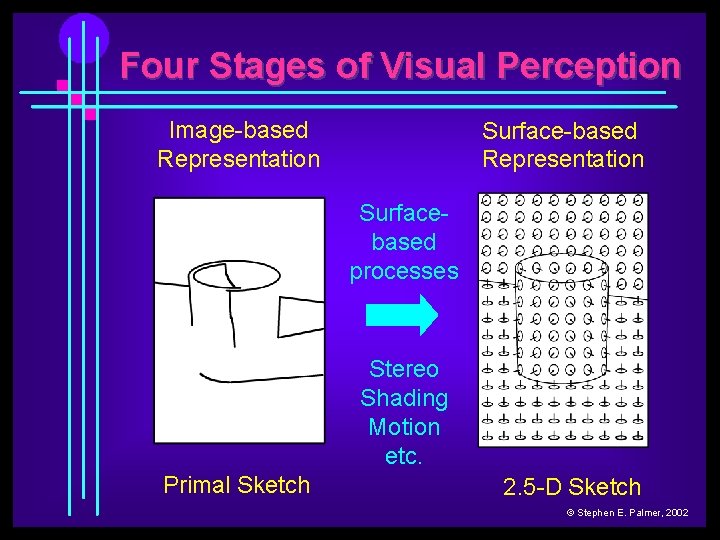

Four Stages of Visual Perception Image-based Representation Surfacebased processes Stereo Shading Motion etc. Primal Sketch 2. 5 -D Sketch © Stephen E. Palmer, 2002

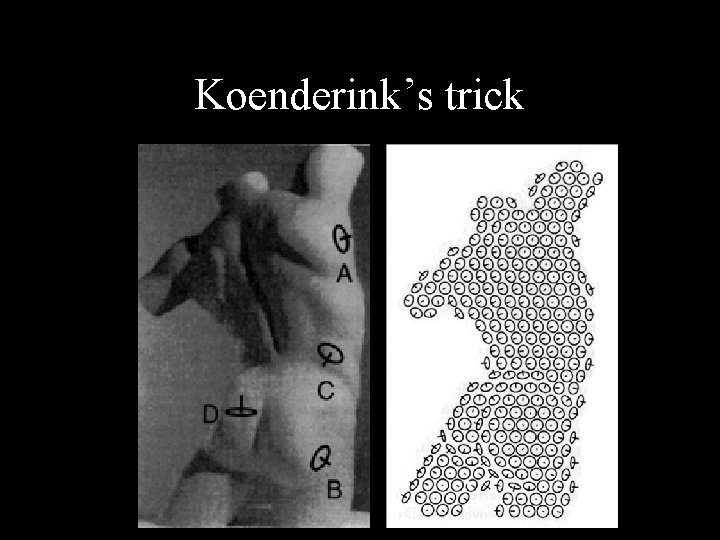

Koenderink’s trick

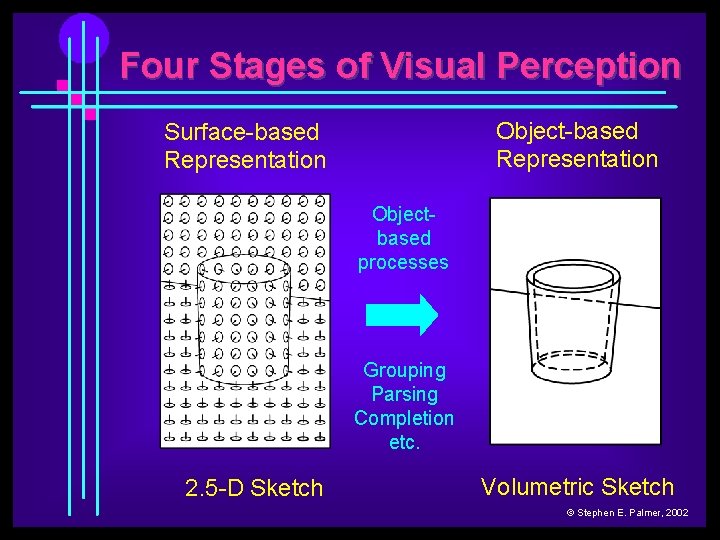

Four Stages of Visual Perception Object-based Representation Surface-based Representation Objectbased processes Grouping Parsing Completion etc. 2. 5 -D Sketch Volumetric Sketch © Stephen E. Palmer, 2002

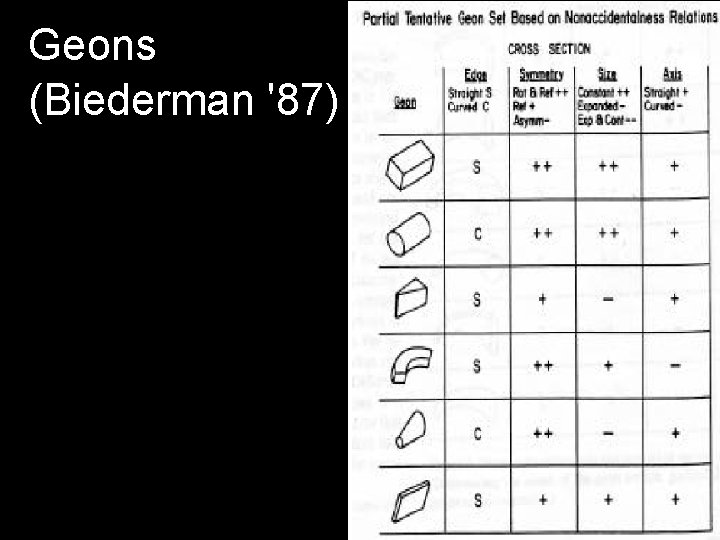

Geons (Biederman '87)

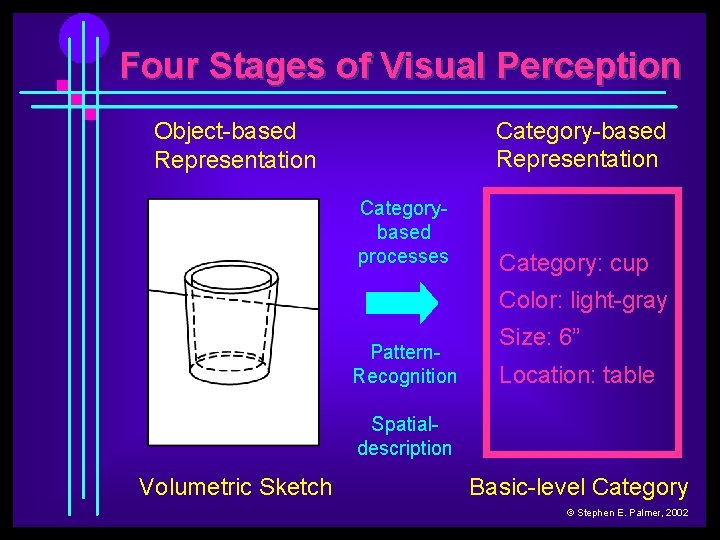

Four Stages of Visual Perception Category-based Representation Object-based Representation Categorybased processes Category: cup Color: light-gray Pattern. Recognition Size: 6” Location: table Spatialdescription Volumetric Sketch Basic-level Category © Stephen E. Palmer, 2002

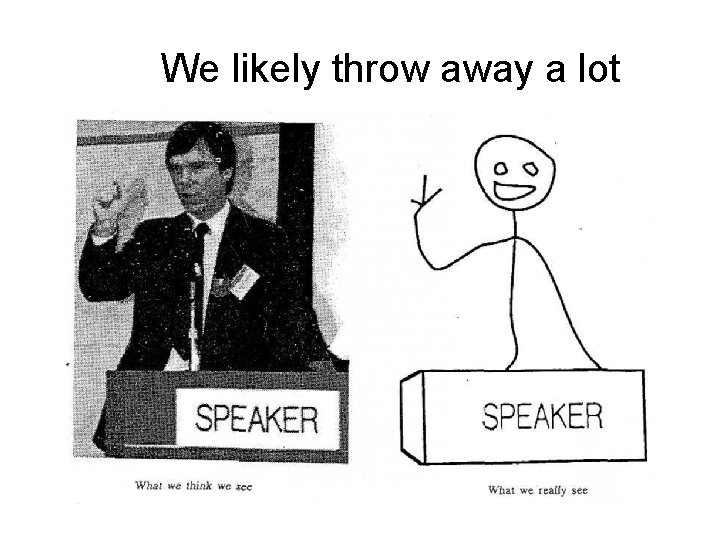

We likely throw away a lot

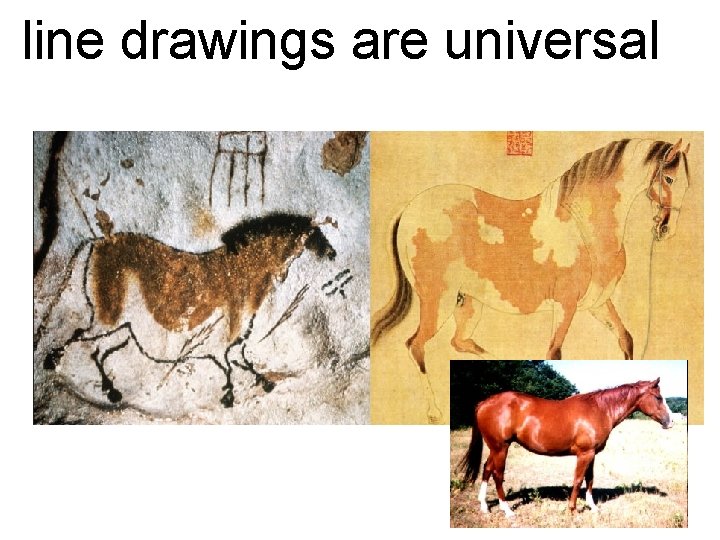

line drawings are universal

However, things are not so simple… ● Problems with feed-forward model of processing…

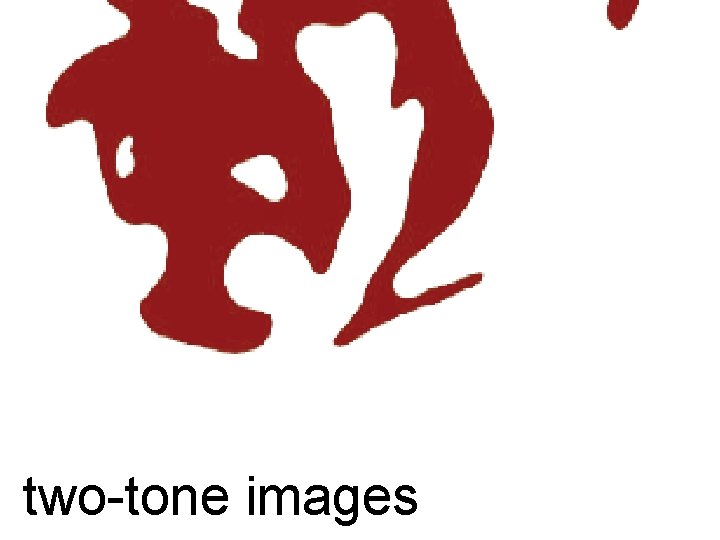

two-tone images

“attached shadow” contour hair (not shadow!) “cast shadow” contour inferred external contours

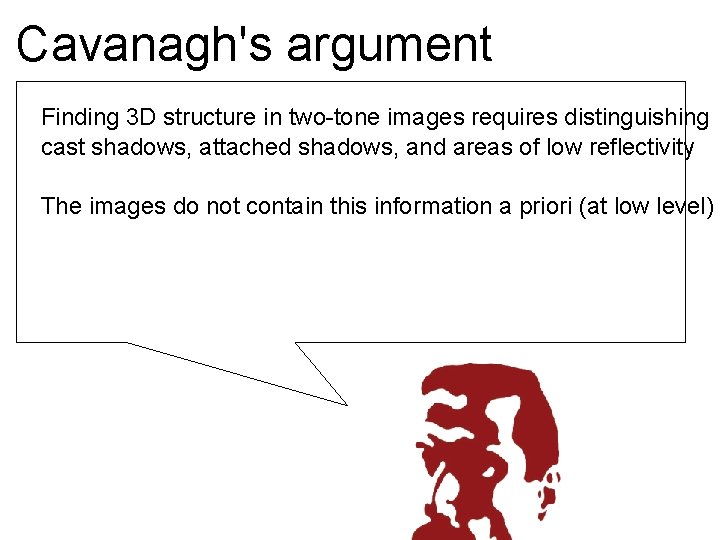

Cavanagh's argument Finding 3 D structure in two-tone images requires distinguishing cast shadows, attached shadows, and areas of low reflectivity The images do not contain this information a priori (at low level)

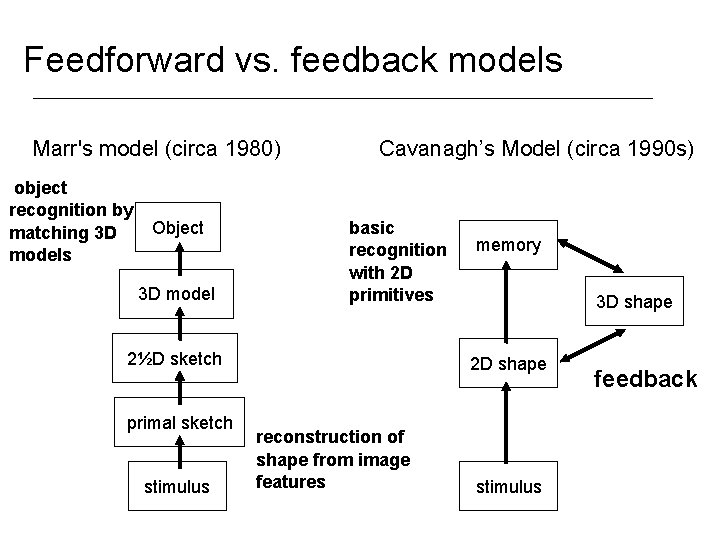

Feedforward vs. feedback models Marr's model (circa 1980) object recognition by matching 3 D models Object 3 D model Cavanagh’s Model (circa 1990 s) basic recognition with 2 D primitives 2½D sketch primal sketch stimulus memory 3 D shape 2 D shape reconstruction of shape from image features stimulus feedback

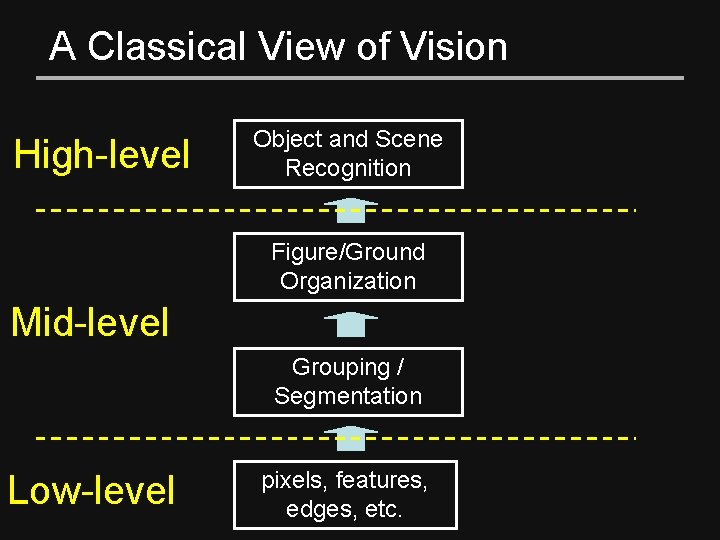

A Classical View of Vision High-level Object and Scene Recognition Figure/Ground Organization Mid-level Grouping / Segmentation Low-level pixels, features, edges, etc.

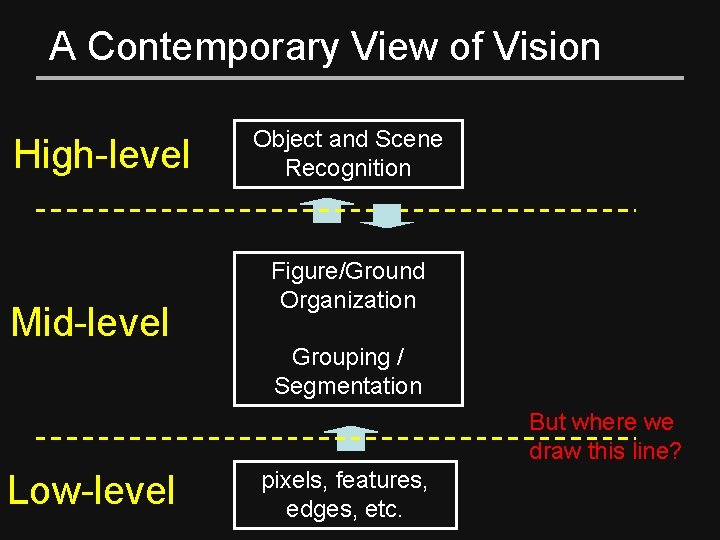

A Contemporary View of Vision High-level Mid-level Object and Scene Recognition Figure/Ground Organization Grouping / Segmentation But where we draw this line? Low-level pixels, features, edges, etc.

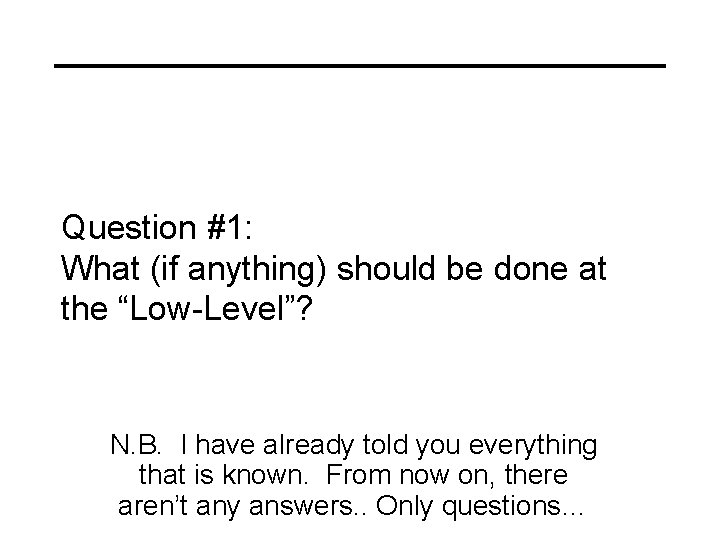

Question #1: What (if anything) should be done at the “Low-Level”? N. B. I have already told you everything that is known. From now on, there aren’t any answers. . Only questions…

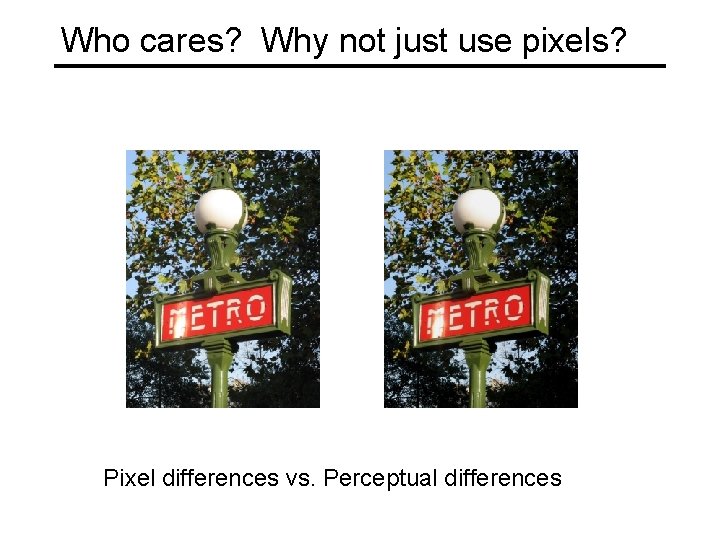

Who cares? Why not just use pixels? Pixel differences vs. Perceptual differences

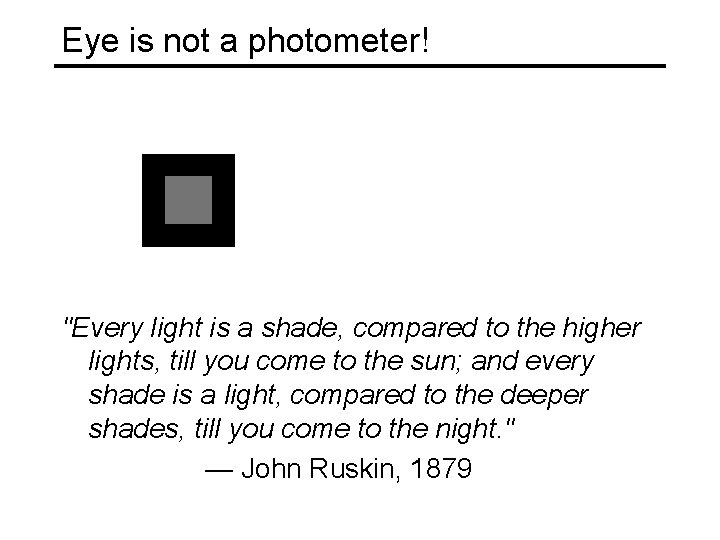

Eye is not a photometer! "Every light is a shade, compared to the higher lights, till you come to the sun; and every shade is a light, compared to the deeper shades, till you come to the night. " — John Ruskin, 1879

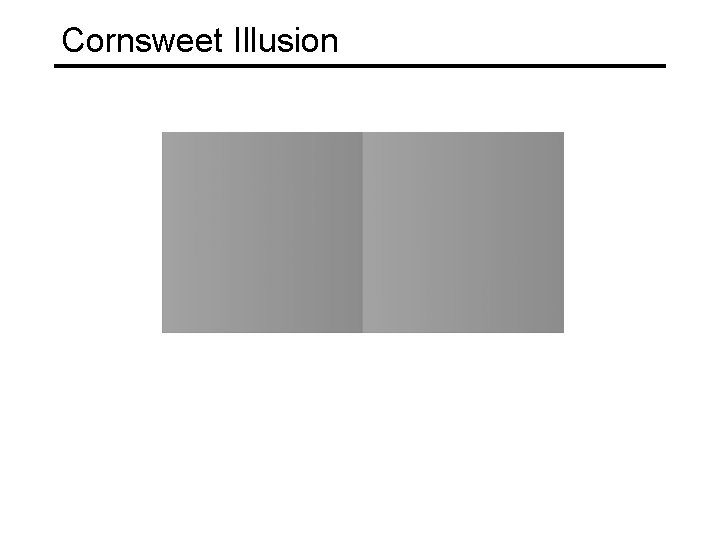

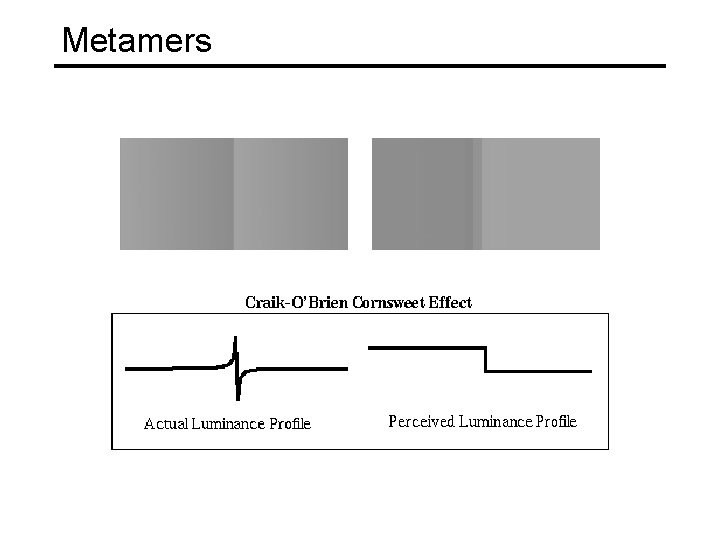

Cornsweet Illusion

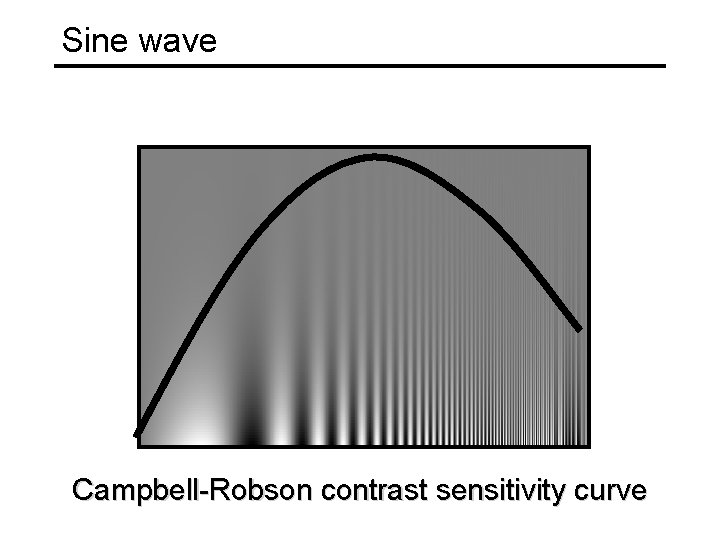

Sine wave Campbell-Robson contrast sensitivity curve

Metamers

Question #1: What (if anything) should be done at the “Low-Level”? i. e. What input stimulus should we be invariant to?

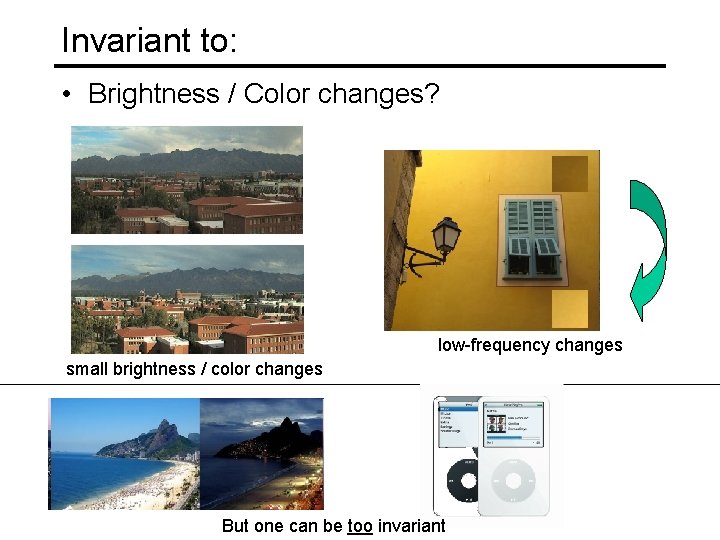

Invariant to: • Brightness / Color changes? low-frequency changes small brightness / color changes But one can be too invariant

Invariant to: • Edge contrast / reversal? I shouldn’t care what background I am on! but be careful of exaggerating noise

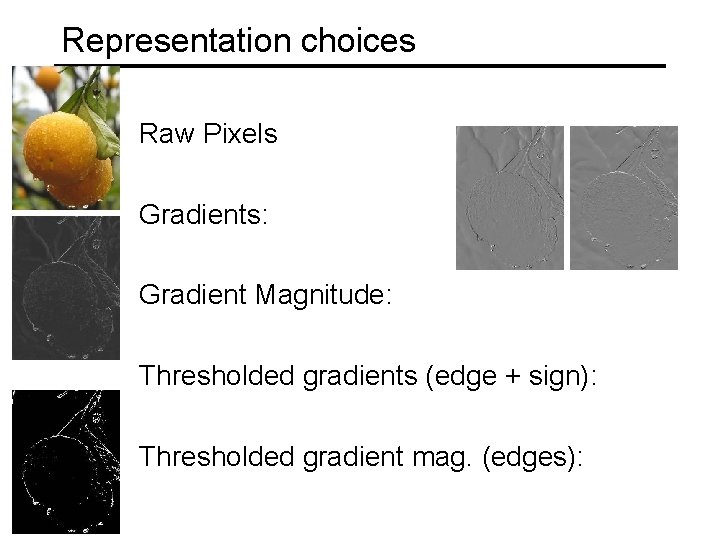

Representation choices Raw Pixels Gradients: Gradient Magnitude: Thresholded gradients (edge + sign): Thresholded gradient mag. (edges):

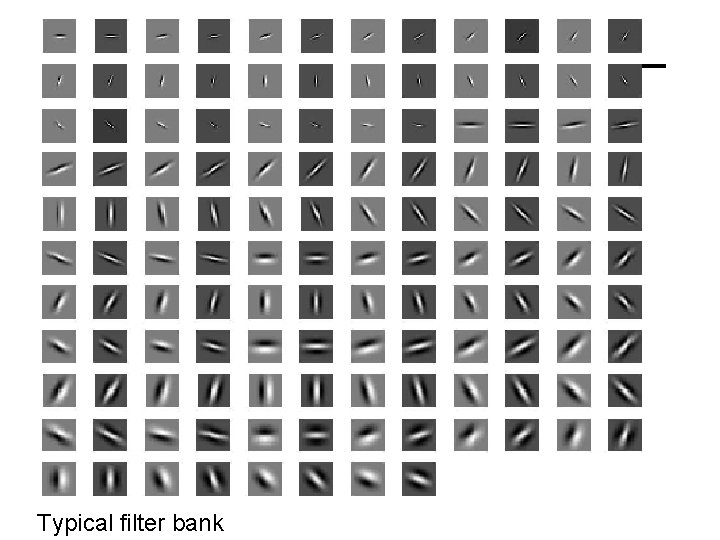

Typical filter bank

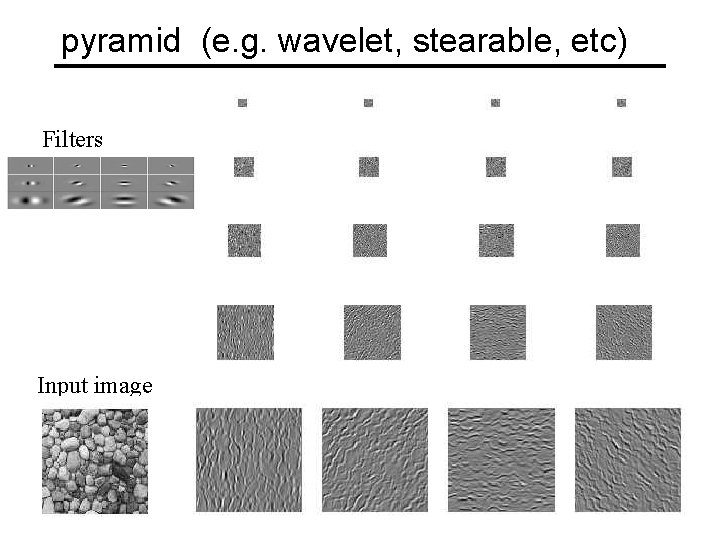

pyramid (e. g. wavelet, stearable, etc) Filters Input image

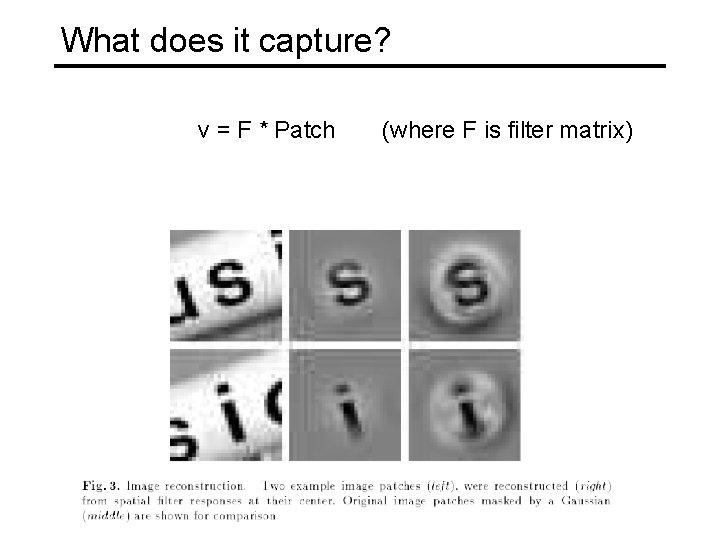

What does it capture? v = F * Patch (where F is filter matrix)

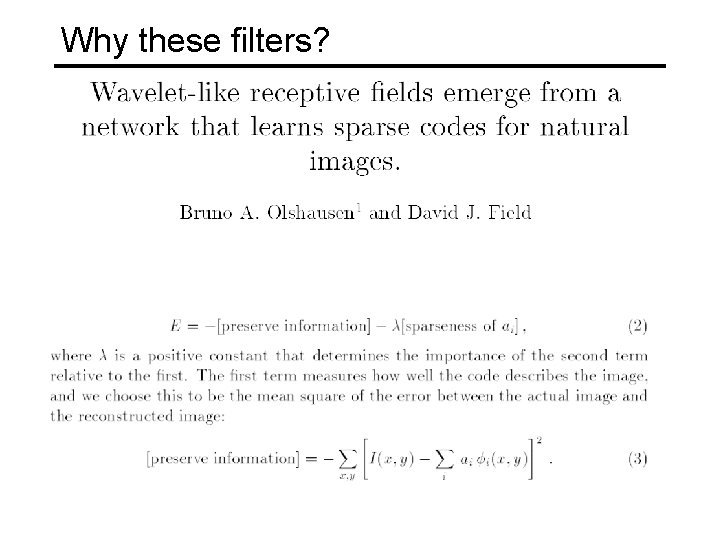

Why these filters?

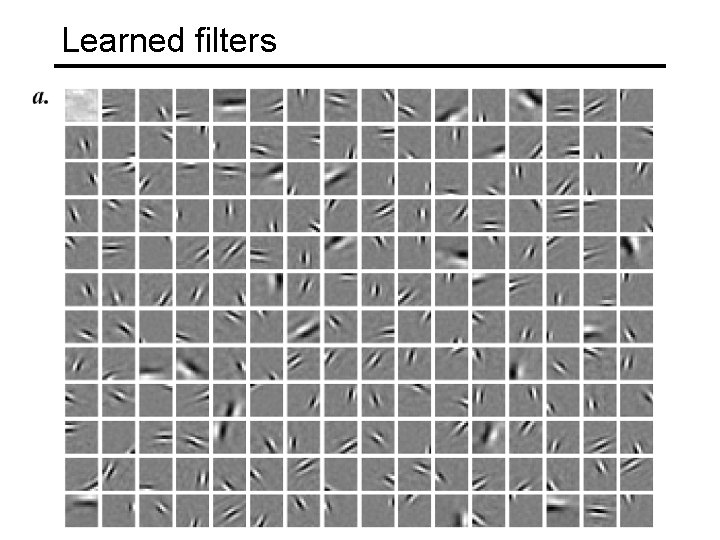

Learned filters

Spatial invariance • Rotation, Translation, Scale • Yes, but not too much… • In brain: complex cells – partial invariance • In Comp. Vision: histogram-binning methods (SIFT, GIST, Shape Context, etc) or, equivalently, blurring (e. g. Geometric Blur) -will discuss later

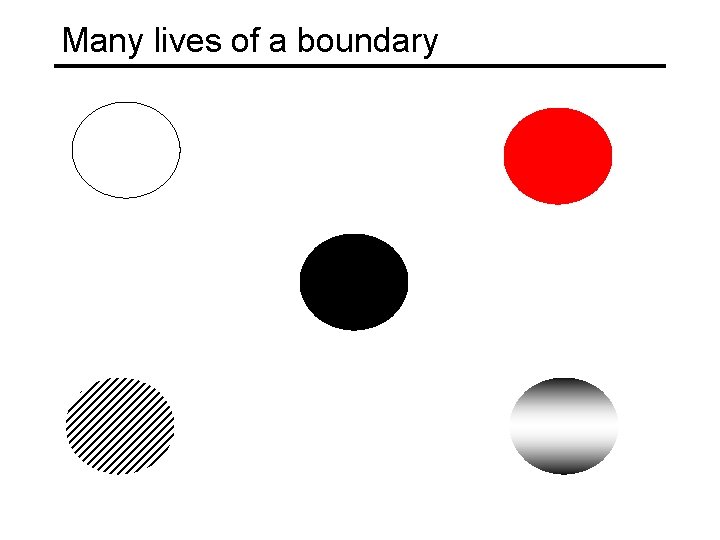

Many lives of a boundary

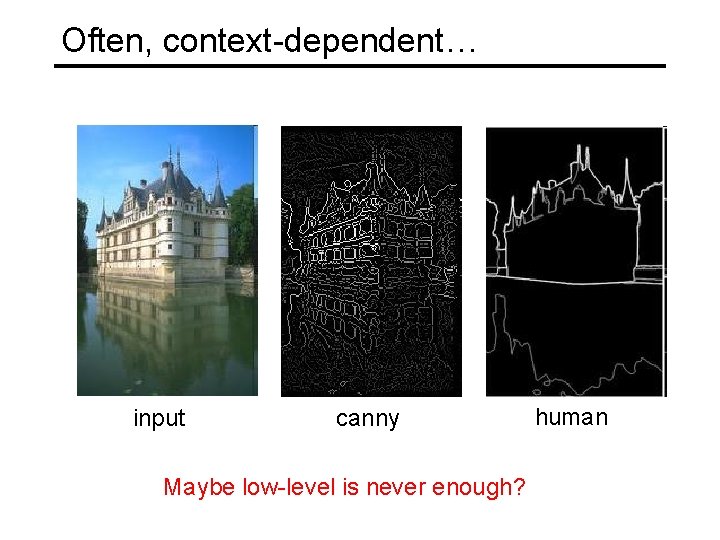

Often, context-dependent… input canny Maybe low-level is never enough? human

- Slides: 41