What Makes an Algorithm Great? Richard M. Karp

- Slides: 36

What Makes an Algorithm Great?
Richard M. Karp
FOCS 50 & ACO 20, October 24, 2009

Algorithm-Centric Definition of Computer Science
Computer science is the study of algorithms, their applications, and their realization in computing systems (computers, programs and networks).
“Algorithms are tiny but powerful” (Richard Lipton)

What Makes an Algorithm Great?
• Elegant
• Surprising
• Interesting analysis
• Asymptotically efficient
• Efficient in practice
• Key to an important application
• Historically important
• Broadly applicable
• Has implications for complexity theory
• Produces beautiful output

Ancient and Classical Algorithms
• Positional number representation (Alkarismi)
• Chinese remainder theorem
• Euclidean algorithm
• Gaussian elimination
• Fast Fourier Transform
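
To make two of the classical items concrete, here is a minimal Python sketch of the Euclidean algorithm, its extended form, and how the extended form solves a system of congruences via the Chinese remainder theorem. The function names are illustrative, and the moduli are assumed to be pairwise coprime.

```python
def gcd(a, b):
    """Euclidean algorithm: gcd(a, b) = gcd(b, a mod b) until the remainder is 0."""
    while b:
        a, b = b, a % b
    return abs(a)


def extended_gcd(a, b):
    """Return (g, x, y) with a*x + b*y == g == gcd(a, b)."""
    old_r, r = a, b
    old_x, x = 1, 0
    old_y, y = 0, 1
    while r:
        q = old_r // r
        old_r, r = r, old_r - q * r
        old_x, x = x, old_x - q * x
        old_y, y = y, old_y - q * y
    return old_r, old_x, old_y


def crt(residues, moduli):
    """Chinese remainder theorem for pairwise coprime moduli m_i: return the unique
    x in [0, M) with x = r_i (mod m_i) for all i, where M is the product of the m_i."""
    x, m = 0, 1
    for r_i, m_i in zip(residues, moduli):
        g, inv, _ = extended_gcd(m, m_i)  # inv * m = 1 (mod m_i) when g == 1
        if g != 1:
            raise ValueError("moduli must be pairwise coprime")
        t = ((r_i - x) * inv) % m_i  # choose t so that x + m*t = r_i (mod m_i)
        x += m * t
        m *= m_i
    return x % m
```

For example, `crt([2, 3, 2], [3, 5, 7])` returns 23, the smallest non-negative number leaving remainders 2, 3 and 2 on division by 3, 5 and 7.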

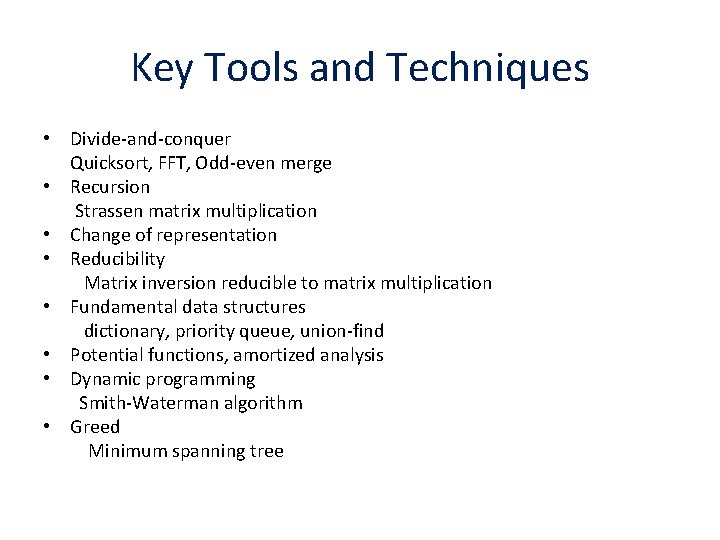

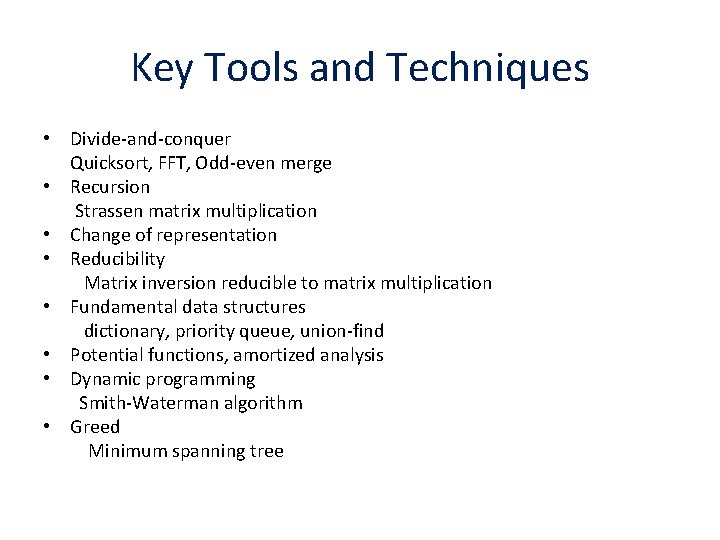

Key Tools and Techniques
• Divide-and-conquer: Quicksort, FFT, odd-even merge
• Recursion: Strassen matrix multiplication
• Change of representation
• Reducibility: matrix inversion reducible to matrix multiplication
• Fundamental data structures: dictionary, priority queue, union-find
• Potential functions, amortized analysis
• Dynamic programming: Smith-Waterman algorithm
• Greed: minimum spanning tree
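
As a small illustration of divide-and-conquer, here is a sketch of Quicksort in Python: split the keys around a pivot, sort each side recursively, and concatenate. This version is not in-place, and choosing the pivot at random is an assumption made for simplicity (it gives expected O(n log n) comparisons on every input).

```python
import random


def quicksort(keys):
    """Divide and conquer: partition around a random pivot, recurse on each side."""
    if len(keys) <= 1:
        return list(keys)
    pivot = random.choice(keys)
    smaller = [k for k in keys if k < pivot]
    equal = [k for k in keys if k == pivot]   # keeps duplicates out of the recursion
    larger = [k for k in keys if k > pivot]
    return quicksort(smaller) + equal + quicksort(larger)
```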

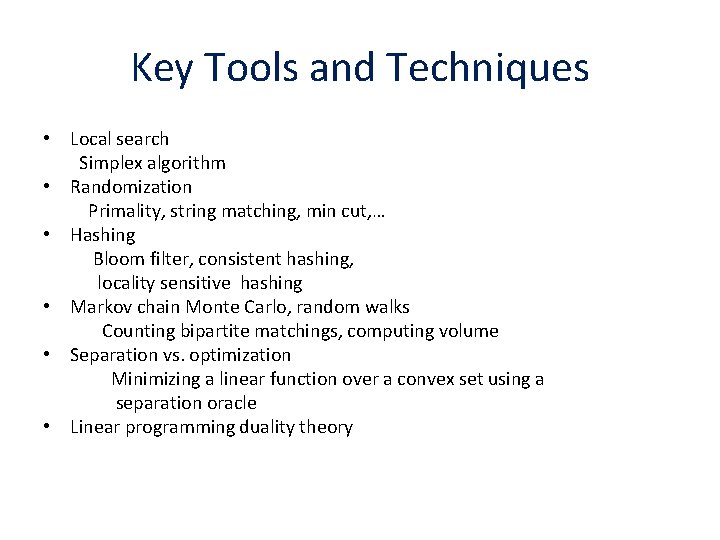

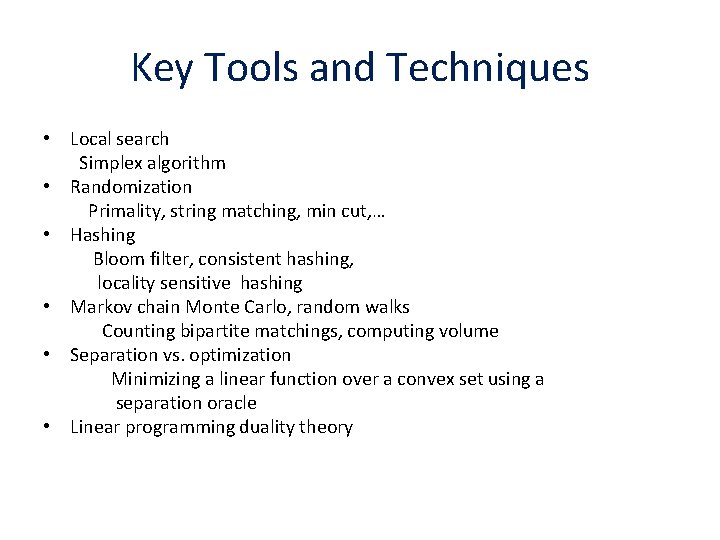

Key Tools and Techniques
• Local search: simplex algorithm
• Randomization: primality, string matching, min cut, …
• Hashing: Bloom filter, consistent hashing, locality-sensitive hashing
• Markov chain Monte Carlo, random walks: counting bipartite matchings, computing volume
• Separation vs. optimization: minimizing a linear function over a convex set using a separation oracle
• Linear programming duality theory
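
A Bloom filter, listed under hashing above, answers set-membership queries with no false negatives and a small, tunable false-positive rate. The sketch below is a minimal illustration in Python: the class name is hypothetical, and deriving the k hash functions by salting SHA-256 with the hash index is an assumption made for brevity, not a prescribed construction.

```python
import hashlib


class BloomFilter:
    """m-bit array with k hash functions. Lookups may return false positives,
    never false negatives."""

    def __init__(self, m, k):
        self.m, self.k = m, k
        self.bits = bytearray(m)  # one byte per bit, for clarity rather than space

    def _positions(self, item):
        # Illustrative choice: hash function i is SHA-256 of "i:item", reduced mod m.
        for i in range(self.k):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.m

    def add(self, item):
        for p in self._positions(item):
            self.bits[p] = 1

    def __contains__(self, item):
        # True only if every one of the k positions has been set.
        return all(self.bits[p] for p in self._positions(item))
```

Usage: after `bf = BloomFilter(1000, 5)` and `bf.add("heap")`, the test `"heap" in bf` is always true, while a word never added is reported present only with small probability.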

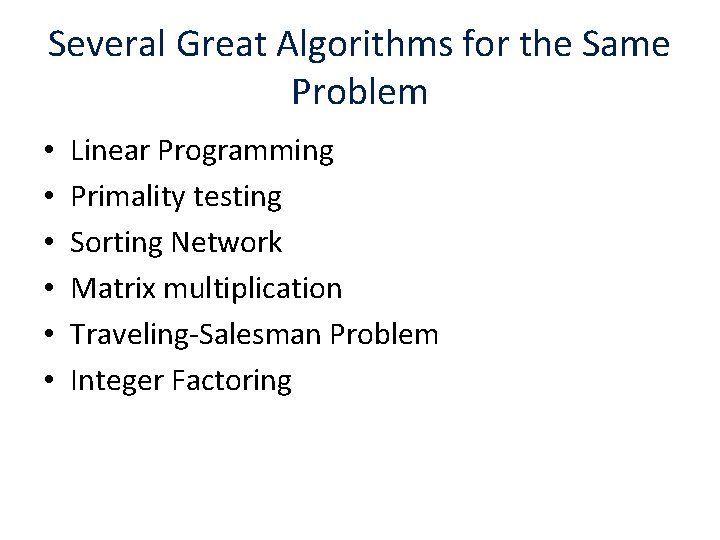

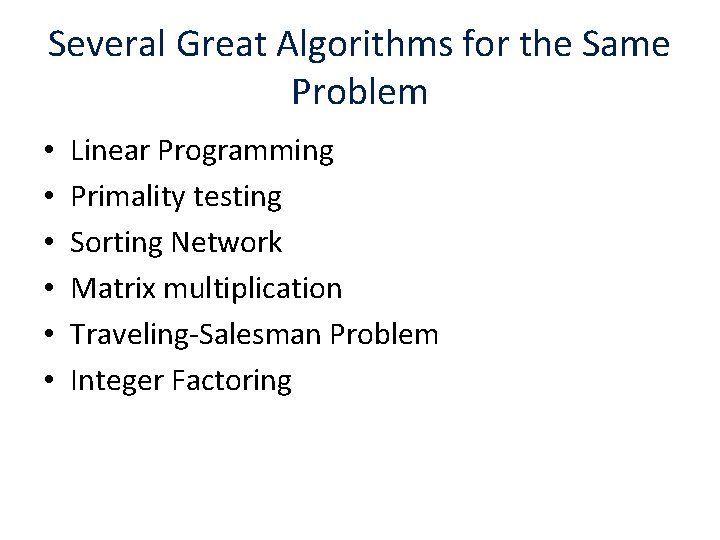

Several Great Algorithms for the Same Problem
• Linear Programming
• Primality testing
• Sorting Network
• Matrix multiplication
• Traveling-Salesman Problem
• Integer Factoring

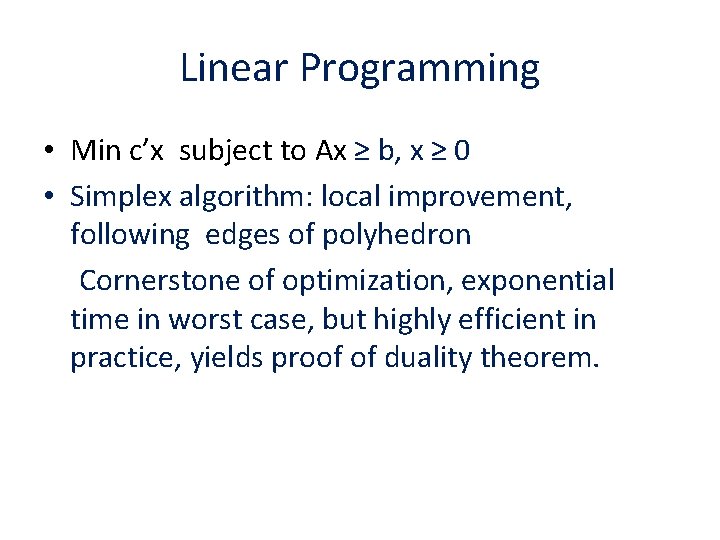

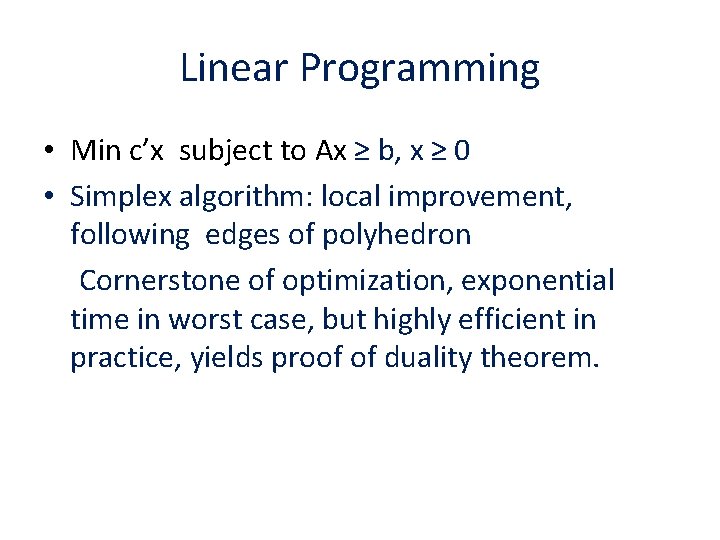

Linear Programming
• Minimize $c^{\top}x$ subject to $Ax \ge b$, $x \ge 0$.
• Simplex algorithm: local improvement, following edges of the polyhedron. Cornerstone of optimization; exponential time in the worst case, but highly efficient in practice; yields a proof of the duality theorem.

Linear Programming
• Ellipsoid algorithm: encloses the optimal point in a shrinking sequence of ellipsoids. Establishes that linear programming lies in P; polynomial time, but quite inefficient in practice. Yields the principle that separation is equivalent to optimization.
• Interior-point algorithms: follow the central path through the interior of the polyhedron. Polynomial time and efficient in practice; competitive with the simplex algorithm. Elegant in concept, but the analysis is complicated.

Primality Testing
• Miller-Rabin randomized algorithm: random trials for witnesses of compositeness. Low-degree polynomial time, negligible false-positive probability; highly efficient and widely used, essential for public-key cryptography.
• AKS algorithm: verifies the identity $(x-a)^n \equiv x^n - a \pmod{n,\ x^r - 1}$ for a polynomial-size range of $a$ and $r$. Establishes that primality lies in P, but not useful in practice despite improvements by Lenstra, Pomerance and others.
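
The Miller-Rabin test can be sketched in a few lines of Python. Write $n - 1 = 2^s d$ with $d$ odd; a random base $a$ is a witness of compositeness unless $a^d \equiv 1$ or $a^{2^j d} \equiv -1 \pmod n$ for some $0 \le j < s$. A composite $n$ survives one trial with probability at most 1/4. The trial-division prefilter and the default of 20 trials are illustrative choices, not part of the algorithm's definition.

```python
import random


def is_probable_prime(n, trials=20):
    """Miller-Rabin: False means n is certainly composite; True means n is prime
    except with probability at most 4**(-trials)."""
    if n < 2:
        return False
    for p in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29):  # cheap prefilter (illustrative)
        if n % p == 0:
            return n == p
    d, s = n - 1, 0          # write n - 1 = 2^s * d with d odd
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(trials):
        a = random.randrange(2, n - 1)
        x = pow(a, d, n)     # built-in modular exponentiation
        if x in (1, n - 1):
            continue         # a is not a witness
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break        # a is not a witness
        else:
            return False     # a witnesses that n is composite
    return True
```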

Sorting Network
• Sorts n keys using a fixed sequence of comparison-exchange operations.
• Batcher odd-even merge: $\mathrm{Merge}(n, n) = 2\,\mathrm{Merge}(n/2, n/2) + n$, so $\mathrm{Merge}(n, n) = O(n \log n)$ and $\mathrm{Sort}(n) = O(n \log^2 n)$. Best practical sorting network.
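
The recurrences above can be checked with a short Python sketch that generates Batcher's odd-even merge sort network as a list of comparator index pairs. The sequence depends only on n, which is the defining property of a sorting network. This sketch assumes n is a power of two, and the function names are hypothetical.

```python
def _merge(lo, hi, r):
    """Comparators merging the two sorted halves of positions lo, lo+r, ..., up to hi."""
    step = 2 * r
    if step < hi - lo:
        yield from _merge(lo, hi, step)        # merge the even-indexed subsequence
        yield from _merge(lo + r, hi, step)    # merge the odd-indexed subsequence
        for i in range(lo + r, hi - r, step):  # final layer of neighbor comparators
            yield (i, i + r)
    else:
        yield (lo, lo + r)


def _sort(lo, hi):
    """Comparators sorting positions lo..hi (inclusive)."""
    if hi - lo >= 1:
        mid = lo + (hi - lo) // 2
        yield from _sort(lo, mid)
        yield from _sort(mid + 1, hi)
        yield from _merge(lo, hi, 1)


def batcher_comparators(n):
    return list(_sort(0, n - 1))


def network_sort(keys):
    """Apply the fixed comparator sequence: each pair (i, j) with i < j is a
    compare-exchange that puts the smaller key at position i."""
    x = list(keys)
    n = len(x)
    assert n & (n - 1) == 0, "this sketch assumes n is a power of two"
    for i, j in batcher_comparators(n):
        if x[i] > x[j]:
            x[i], x[j] = x[j], x[i]
    return x
```

For n = 4 the network is (0,1), (2,3), (0,2), (1,3), (1,2): five comparators. For n = 8 it has 19.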

AKS Sorting Network
• Requires C n log n comparisons, for a large constant C. Asymptotically optimal up to a constant factor, but not useful in practice.
• Beautiful analysis: based on a uniform binary tree with n leaves; tries to assign each key to the leaf corresponding to its rank. Keys enter at the root and migrate down the tree. Error recovery: if a key goes in the wrong direction, it eventually backs up and returns to the correct path.

Matrix Multiplication/Matrix Inversion
• Gaussian elimination: $O(n^3)$
• Strassen algorithm (1969): $O(n^{2.81})$. Shocking breakthrough. Elegant recursive structure. Useful in practice.
• Coppersmith-Winograd (1990): $O(n^{2.38})$. Deep mathematical structure. Not useful in practice.
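
Strassen's recursive structure is visible in a direct Python sketch: each level replaces the 8 half-size products of the classical method with 7, giving $T(n) = 7T(n/2) + O(n^2) = O(n^{\log_2 7}) \approx O(n^{2.81})$. This sketch assumes square matrices whose size is a power of two and recurses all the way to 1×1. Practical implementations switch to the classical method below some cutoff size.

```python
def _add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def _sub(A, B):
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def _split(M):
    """Return the four h-by-h quadrants M11, M12, M21, M22."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])


def strassen(A, B):
    n = len(A)
    if n == 1:
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = _split(A)
    B11, B12, B21, B22 = _split(B)
    # Seven recursive products instead of eight.
    M1 = strassen(_add(A11, A22), _add(B11, B22))
    M2 = strassen(_add(A21, A22), B11)
    M3 = strassen(A11, _sub(B12, B22))
    M4 = strassen(A22, _sub(B21, B11))
    M5 = strassen(_add(A11, A12), B22)
    M6 = strassen(_sub(A21, A11), _add(B11, B12))
    M7 = strassen(_sub(A12, A22), _add(B21, B22))
    # Recombine the quadrants of C = A * B using only additions and subtractions.
    C11 = _add(_sub(_add(M1, M4), M5), M7)
    C12 = _add(M3, M5)
    C21 = _add(M2, M4)
    C22 = _add(_sub(_add(M1, M3), M2), M6)
    top = [r1 + r2 for r1, r2 in zip(C11, C12)]
    bottom = [r1 + r2 for r1, r2 in zip(C21, C22)]
    return top + bottom
```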

Traveling-Salesman Problem
• Greedy linear-time heuristics
• Christofides factor-1.5 approximation algorithm
• Lin-Kernighan local improvement heuristic
• Arora-Mitchell PTAS for Euclidean TSP
• Cutting-plane methods (Applegate, Bixby, Chvatal, Cook): exact solution of huge TSPs. Not practically useful, but a tour de force.

Integer Factoring
• Quadratic sieve
• Number field sieve: time $\exp\big(c\,(\log n)^{1/3} (\log \log n)^{2/3}\big)$
• Shor's polynomial-time quantum algorithm

Early Discoveries in Combinatorial Optimization
• Greedy algorithms for minimum spanning tree: Boruvka (1926), Prim, Kruskal
• Dijkstra/Dantzig shortest-path algorithms
• Max-flow/min-cut theorem and Ford-Fulkerson algorithm
• Hungarian algorithm for the assignment problem
• Gale-Shapley stable matching algorithm
• Edmonds "blossom" algorithm for maximum matching
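
Dijkstra's shortest-path algorithm from the list above can be sketched with Python's `heapq` module as the priority queue. It assumes nonnegative edge weights. The adjacency-dict input format and the function name are illustrative. Instead of a decrease-key operation, this version pushes duplicate heap entries and skips stale ones.

```python
import heapq


def dijkstra(graph, source):
    """graph: dict mapping node -> list of (neighbor, nonnegative weight).
    Returns a dict of shortest-path distances from source to every reachable node."""
    dist = {source: 0}
    heap = [(0, source)]
    done = set()
    while heap:
        d, u = heapq.heappop(heap)   # closest node not yet finalized
        if u in done:
            continue                 # stale entry left over from an earlier push
        done.add(u)
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist
```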

Stable Matching
• n men, n women. Each person ranks the members of the opposite sex according to desirability as a partner.
• A one-to-one matching is stable if no pair is motivated to abandon their partners and elope, i.e., no man and woman who are not matched to each other both prefer each other to their current partners.
• $O(n^2)$ algorithm to find a stable matching: in each round, an unmatched man proposes to the most desirable woman who has not rejected him; she tentatively accepts him if she is unmatched or if he is more desirable than her current partner, whom she then rejects.
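
The proposal procedure above translates directly into Python. Each man proposes at most n times, so there are at most $n^2$ proposals, each handled in constant time using a precomputed rank table. The dictionary-based input format and the function name are illustrative assumptions.

```python
def stable_matching(men_prefs, women_prefs):
    """men_prefs[m] and women_prefs[w] list the other side, most desirable first.
    Returns a dict woman -> man describing a stable matching."""
    # rank[w][m]: position of m in w's list (smaller means more desirable).
    rank = {w: {m: i for i, m in enumerate(p)} for w, p in women_prefs.items()}
    next_choice = {m: 0 for m in men_prefs}  # index of the next woman m proposes to
    partner = {}                             # current tentative partner of each woman
    free_men = list(men_prefs)
    while free_men:
        m = free_men.pop()                   # any unmatched man
        w = men_prefs[m][next_choice[m]]     # best woman who has not rejected him
        next_choice[m] += 1
        current = partner.get(w)
        if current is None:
            partner[w] = m                   # she was unmatched: tentatively accept
        elif rank[w][m] < rank[w][current]:
            partner[w] = m                   # she trades up ...
            free_men.append(current)         # ... and rejects her previous partner
        else:
            free_men.append(m)               # she rejects m
    return partner
```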

Paths, Trees and Flowers (1965)
• Edmonds' polynomial-time algorithm for non-bipartite matching: odd cycles (blossoms) encountered in the search for an augmenting path are shrunk, an augmenting path is found recursively in the contracted graph, and the blossoms are then unshrunk to recover an augmenting path in the original graph.
• Edmonds also discussed polynomial-time computation and verification, and gave informal definitions of P and NP.

Basic Data Structures
• Dictionary based on k-ary search trees, B-trees, splay trees
• Priority queue based on heaps
• Union-find data structures
Improvements based on these ideas, plus randomization and other algorithmic ideas, greatly improved the running times of all the basic combinatorial algorithms.
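
A union-find structure with union by rank and path compression can be sketched in Python as below. A sequence of m operations on n elements then takes $O(m\,\alpha(n))$ time, where $\alpha$ is the inverse Ackermann function. As a usage example, the hypothetical `kruskal` helper uses it to implement the greedy minimum-spanning-tree algorithm.

```python
class UnionFind:
    """Disjoint sets over 0..n-1 with union by rank and path compression."""

    def __init__(self, n):
        self.parent = list(range(n))
        self.rank = [0] * n

    def find(self, x):
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        while x != root:                 # path compression: point x's path at root
            nxt = self.parent[x]
            self.parent[x] = root
            x = nxt
        return root

    def union(self, x, y):
        """Merge the sets of x and y; return False if they were already together."""
        rx, ry = self.find(x), self.find(y)
        if rx == ry:
            return False
        if self.rank[rx] < self.rank[ry]:
            rx, ry = ry, rx              # attach the shallower tree under the deeper
        self.parent[ry] = rx
        if self.rank[rx] == self.rank[ry]:
            self.rank[rx] += 1
        return True


def kruskal(n, edges):
    """Greedy MST: scan (weight, u, v) edges by increasing weight and keep an
    edge whenever it joins two different components."""
    uf = UnionFind(n)
    tree = []
    for w, u, v in sorted(edges):
        if uf.union(u, v):
            tree.append((w, u, v))
    return tree
```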

Randomized Algorithms
• Primality testing (Solovay-Strassen, Miller-Rabin): raised the consciousness of the TCS community
• Volume of a convex body
• Counting bipartite matchings (Jerrum, Sinclair, Vigoda)
• Min-cut and min s-t cut (Karger, Benczúr)
• String matching
• Hashing: Bloom filter, universal hashing, consistent hashing, locality-sensitive hashing
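
For the min-cut item, here is a Python sketch of Karger's basic random contraction algorithm. It does not implement the later Karger-Stein or Benczúr-Karger refinements. Contracting edges in a uniformly random order while skipping self-loops is equivalent to repeatedly contracting a uniformly random remaining edge. One run finds a given minimum cut with probability at least $2/(n(n-1))$, so the default of roughly $n^2 \log n$ runs is an illustrative choice. The graph is assumed connected, given as an edge list over vertices 0..n-1, and parallel edges are allowed.

```python
import random


def _find(parent, x):
    """Return the super-vertex containing x, halving the path as we go."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def contract_once(n, edges, rng):
    """One contraction run: return the size of the cut it produces."""
    parent = list(range(n))
    components = n
    order = list(edges)
    rng.shuffle(order)
    for u, v in order:
        if components == 2:
            break
        ru, rv = _find(parent, u), _find(parent, v)
        if ru != rv:              # an edge inside one super-vertex is a self-loop
            parent[ru] = rv       # contract the edge
            components -= 1
    # Edges joining the two remaining super-vertices form the cut.
    return sum(1 for u, v in edges if _find(parent, u) != _find(parent, v))


def karger_min_cut(n, edges, trials=None, seed=None):
    rng = random.Random(seed)
    if trials is None:
        trials = n * n * max(1, n.bit_length())  # about n^2 log n independent runs
    return min(contract_once(n, edges, rng) for _ in range(trials))
```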

Estimating the Volume of a Convex Body
• No deterministic polynomial-time algorithm based on membership tests can approximate the volume to within an exponential factor.
• Approximating the volume is reducible to approximate uniform sampling.
• Different random walks (grid walk, ball walk, hit-and-run) within a convex body converge rapidly to a uniform distribution. Beginning with Dyer, Frieze and Kannan (1991), a series of successively improved algorithms approximate the volume of an n-dimensional convex body to within bounded relative error in time polynomial in n. Current champion: $O(n^4)$ [Lovasz, Vempala (2003)].

Probabilistic Analysis
• For many problems, simple algorithms work well with high probability on Erdos-Renyi random graphs and on instances drawn from other simple distributions. Rarely do algorithms perform well both in the worst case and on average.
• Two examples that do: the Hopcroft-Karp bipartite matching algorithm and the differencing heuristic for number partitioning.

Number Partitioning
• Partition a set of n integers into two subsets to minimize the difference of their sums.
• Differencing algorithm [Karmarkar, Karp]: repeat until only one number remains: replace the two largest numbers by their difference. If the final number is d, a partition with difference d can easily be reconstructed.
• If the numbers are drawn independently from a uniform [0, 1] or exponential distribution, the expected value of d is $n^{-\Theta(\log n)}$ [B. Yakir].
• The differencing algorithm and the standard LPT algorithm have the same worst-case performance.
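
The differencing algorithm can be sketched in Python with a max-heap (negated values in `heapq`). To make the reconstruction step explicit, each heap entry also carries a signed grouping (plus, minus) of original numbers whose difference of sums equals the entry's value. Replacing a and b by a - b keeps a's numbers on their sides and moves b's numbers to the opposite sides. The function name and return format are illustrative.

```python
import heapq


def karmarkar_karp(numbers):
    """Differencing heuristic. Returns (d, side_a, side_b) where side_a and side_b
    partition `numbers` and sum(side_a) - sum(side_b) == d >= 0."""
    if not numbers:
        return 0, [], []
    # Entries: (-value, tie-breaker id, plus-side numbers, minus-side numbers).
    heap = [(-x, i, [x], []) for i, x in enumerate(numbers)]
    heapq.heapify(heap)
    counter = len(numbers)  # fresh ids keep the lists from ever being compared
    while len(heap) > 1:
        neg_a, _, plus_a, minus_a = heapq.heappop(heap)  # largest value a
        neg_b, _, plus_b, minus_b = heapq.heappop(heap)  # second largest value b
        # a - b: b's numbers switch sides; -(a - b) == neg_a - neg_b.
        heapq.heappush(heap, (neg_a - neg_b, counter, plus_a + minus_b, minus_a + plus_b))
        counter += 1
    neg_d, _, side_a, side_b = heap[0]
    return -neg_d, side_a, side_b
```

On [8, 7, 6, 5, 4] the heuristic ends with d = 2 ({4, 5, 7} versus {6, 8}), although {7, 8} versus {4, 5, 6} achieves 0, which illustrates that it is a heuristic rather than an exact method.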

Lin-Kernighan Heuristic Algorithm
• Euclidean traveling-salesman problem: find the shortest tour that visits a set of n points.
• The Lin-Kernighan algorithm repeatedly improves the current tour by breaking a small number of links and reconnecting the resulting sub-tours. The key is an efficient algorithm for finding such an improvement if one exists.
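
The "break links and reconnect" idea can be illustrated with 2-opt, the simplest such move. Note that this is a simpler stand-in, not Lin-Kernighan itself, which builds variable-depth sequences of exchanges. Here two links (a, b) and (c, d) are removed and replaced by (a, c) and (b, d) whenever that shortens the tour, which amounts to reversing the segment between them. The sketch assumes points are (x, y) tuples, uses `math.dist` (Python 3.8+), and the function names are hypothetical.

```python
import math


def tour_length(points, tour):
    return sum(math.dist(points[tour[i]], points[tour[(i + 1) % len(tour)]])
               for i in range(len(tour)))


def two_opt(points, tour):
    """Local improvement: apply improving 2-opt moves until none remains."""
    tour = list(tour)
    n = len(tour)
    improved = True
    while improved:
        improved = False
        for i in range(n - 1):
            for j in range(i + 2, n):
                if i == 0 and j == n - 1:
                    continue  # these two links share tour[0], so there is no move
                a, b = points[tour[i]], points[tour[i + 1]]
                c, d = points[tour[j]], points[tour[(j + 1) % n]]
                delta = (math.dist(a, c) + math.dist(b, d)
                         - math.dist(a, b) - math.dist(c, d))
                if delta < -1e-12:
                    # Reverse tour[i+1..j]: links become (a, c) and (b, d).
                    tour[i + 1:j + 1] = reversed(tour[i + 1:j + 1])
                    improved = True
    return tour
```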

Shotgun Sequencing of Human Genome
• Problem: reconstruct the genetically active part of the human genome from millions of reads (short substrings) and paired reads (pairs of reads at approximately known distance).
• Data acquisition and reconstruction algorithms are based on detailed knowledge of the structure of repeated sequences within the human genome. The method is tailored to one important instance.

Simulated Annealing Metaheuristic
• Applies to a broad class of combinatorial optimization problems. Based on an analogy with controlled cooling in metallurgy.
• Requires a neighborhood structure on the set of feasible solutions.
• Repeat: given the current solution x, choose a random neighbor x'; move to x' with probability min(1, exp(-(cost(x') - cost(x))/T)).
• The temperature T is gradually reduced according to a cooling schedule, making cost-increasing moves increasingly unlikely to be accepted.
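
A generic simulated-annealing loop following the acceptance rule above might look like this in Python. The geometric cooling schedule $T \leftarrow \alpha T$, the parameter defaults, the small temperature floor, and all names are illustrative assumptions. The caller supplies the cost function and a random-neighbor function that encode the neighborhood structure.

```python
import math
import random


def simulated_annealing(x0, cost, random_neighbor, t0=1.0, alpha=0.995,
                        steps=10_000, seed=None):
    """Return the best solution seen and its cost."""
    rng = random.Random(seed)
    x, cx = x0, cost(x0)
    best, cbest = x, cx
    t = t0
    for _ in range(steps):
        y = random_neighbor(x, rng)
        cy = cost(y)
        # Accept with probability min(1, exp(-(cost(y) - cost(x)) / T)).
        if cy <= cx or rng.random() < math.exp(-(cy - cx) / t):
            x, cx = y, cy
            if cx < cbest:
                best, cbest = x, cx
        t = max(t * alpha, 1e-12)  # cool down; the floor avoids division by zero
    return best, cbest
```

Usage on a toy problem, minimizing $(x - 7)^2$ over the integers 0..20 with ±1 moves: `simulated_annealing(0, lambda x: (x - 7) ** 2, lambda x, rng: min(20, max(0, x + rng.choice((-1, 1)))), seed=1)`.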

Algorithmic Proofs in Complexity Theory
• A nondeterministic Turing machine accepts an input if there exists a computation on that input leading to an accepting state.
• NONDETERMINISTIC SPACE(s(n)) is closed under complement (Immerman, Szelepcsényi). The proof is based on an inductive algorithm for computing the number of Turing machine configurations reachable from a given initial configuration.
• Undirected connectivity is in LOGSPACE (Reingold).
• Some problems require more time than space (Hopcroft, Paul, Valiant). Proved by showing that an n-step straight-line algebraic algorithm can be simulated with o(n) working storage.

IP = PSPACE (Shamir)
• IP: the set of properties having interactive proofs, in which a prover convinces a verifier that the property holds.
• IP = PSPACE is proven by giving a generic interactive proof of the validity of a quantified Boolean formula, using arithmetization to convert the formula to an algebraic identity over a finite field.

Algorithms Enabling Important Applications
• Fast Fourier transform
• RSA public-key encryption
• Miller-Rabin primality testing
• Berlekamp and Cantor-Zassenhaus polynomial factoring (Reed-Solomon decoding)
• Lempel-Ziv text compression
• PageRank (Google)
• Consistent hashing (Akamai)
• Viterbi algorithm for hidden Markov models
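
PageRank can be sketched as the textbook power iteration. Each page keeps a share $(1-\delta)/n$ of rank and passes a share $\delta$ evenly to the pages it links to, where $\delta$ is the damping factor. This is an illustration, not Google's production system. The damping factor 0.85, the rule that pages without out-links spread their rank uniformly, the stopping tolerance, and the function name are all assumptions.

```python
def pagerank(links, damping=0.85, tol=1e-10, max_iter=1000):
    """links: dict page -> list of pages it links to. Returns dict page -> rank,
    with ranks summing to 1."""
    pages = set(links) | {q for targets in links.values() for q in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(max_iter):
        # Rank held by pages with no out-links is redistributed uniformly.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for p, targets in links.items():
            if targets:
                share = damping * rank[p] / len(targets)
                for q in targets:
                    new[q] += share
        if sum(abs(new[p] - rank[p]) for p in pages) < tol:
            return new
        rank = new
    return rank
```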

Algorithms Enabling Important Applications
• Smith-Waterman sequence alignment
• Whole-genome shotgun sequencing
• Spectral decomposition of matrices; approximation of matrices by low-rank or sparse matrices
• Model-checking algorithms using temporal logic and binary decision diagrams
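
Smith-Waterman local alignment is a dynamic program. $H[i][j]$ is the best score of an alignment ending at $s_i$ and $t_j$, and it is clamped at 0 so that an alignment may start anywhere. Below is a minimal Python sketch that returns only the best score (no traceback). The scores match = 2, mismatch = -1 and a linear gap penalty of -2 are illustrative assumptions; practical aligners commonly use substitution matrices and affine gap penalties.

```python
def smith_waterman(s, t, match=2, mismatch=-1, gap=-2):
    """Best local alignment score of strings s and t."""
    rows, cols = len(s) + 1, len(t) + 1
    H = [[0] * cols for _ in range(rows)]   # row 0 and column 0 stay 0
    best = 0
    for i in range(1, rows):
        for j in range(1, cols):
            diag = H[i - 1][j - 1] + (match if s[i - 1] == t[j - 1] else mismatch)
            H[i][j] = max(0,                    # start a new local alignment here
                          diag,                 # align s[i-1] with t[j-1]
                          H[i - 1][j] + gap,    # gap in t
                          H[i][j - 1] + gap)    # gap in s
            best = max(best, H[i][j])
    return best
```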

Randomized Algorithms for Information Transmission
• Digital Fountain erasure codes for content delivery
• Code Division Multiple Access (CDMA) (Qualcomm)
• Compressed sensing of images

LT Code (Luby)
• A file consists of n packets (source symbols).
• Goal: encode the file so that it can be decoded at many destinations, each of which may unpredictably receive a different set of transmitted code symbols.
• The LT code operates like a “digital fountain,” generating an unlimited number of random code symbols, each of which is the XOR of some set of source symbols. Reception of slightly more than n code symbols enables efficient decoding of the file (with high probability).
• To generate a code symbol, draw a positive integer d from a “magical” probability distribution, and transmit the XOR of a random set of d source symbols.
• The decoding algorithm is based on simple value propagation.
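
The encoder and the value-propagation (peeling) decoder can be sketched in Python with source symbols modeled as small integers. One assumption to flag: the sketch draws degrees from the ideal soliton distribution ($P(1) = 1/k$, $P(d) = 1/(d(d-1))$ for $d \ge 2$) for brevity. Luby's "magical" robust soliton distribution refines it so that decoding succeeds with only slightly more than n symbols. All function names are hypothetical.

```python
import random


def ideal_soliton_degree(k, rng):
    """Sample d in 1..k with P(1) = 1/k and P(d) = 1/(d(d-1)) for d >= 2."""
    u = rng.random()
    cumulative, d = 1.0 / k, 1
    while u >= cumulative and d < k:
        d += 1
        cumulative += 1.0 / (d * (d - 1))
    return d


def lt_fountain(source, seed=None):
    """Endless stream of code symbols: (set of source indices, XOR of those symbols)."""
    rng = random.Random(seed)
    k = len(source)
    while True:
        indices = rng.sample(range(k), ideal_soliton_degree(k, rng))
        value = 0
        for i in indices:
            value ^= source[i]
        yield set(indices), value


def lt_decode(stream, k):
    """Peeling decoder. Returns (recovered source symbols, code symbols consumed)."""
    recovered = [None] * k
    pending = []  # entries [unknown source indices, XOR of those unknown symbols]
    for received, (indices, value) in enumerate(stream, start=1):
        for i in [i for i in indices if recovered[i] is not None]:
            indices.discard(i)            # XOR out symbols that are already known
            value ^= recovered[i]
        pending.append([indices, value])
        progress = True
        while progress:                   # propagate until no degree-1 entry remains
            progress = False
            for entry in pending:
                if len(entry[0]) == 1:
                    i = entry[0].pop()
                    recovered[i] = entry[1]
                    progress = True
                    for other in pending:  # substitute the new value everywhere
                        if i in other[0]:
                            other[0].discard(i)
                            other[1] ^= recovered[i]
            pending = [e for e in pending if e[0]]
        if None not in recovered:
            return recovered, received
    raise RuntimeError("stream ended before decoding finished")
```

Usage: `lt_decode(lt_fountain(source, seed=3), len(source))` keeps drawing code symbols until every source symbol has been recovered.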

Code Division Multiple Access (Viterbi)
• Allows multiple users to send information simultaneously over a single communication channel. Adapts to asynchronous transmission and to the arrival and departure of users.
• Each sender-receiver pair is assigned a pseudorandom sequence v in $\{1, -1\}^k$. To send a binary sequence, each 1 is encoded as v and each 0 as -v.
• The encoded messages of the senders are summed and transmitted.
• To decode one bit, the decoder takes the inner product of a transmitted k-tuple with v. Values greater than 0 are interpreted as 1, values less than 0 as 0.
• The contributions of the other sender-receiver pairs nearly cancel and (with high probability) do not affect reception.
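
The encode-sum-correlate scheme above can be simulated in a few lines of Python. Unlike the asynchronous setting the slide mentions, this sketch assumes all senders are synchronized bit by bit and the channel is noiseless. The code length k = 1024 and the function name are illustrative. With k = 1024, the desired signal contributes ±1024 to the inner product, while each other sender contributes a sum of 1024 random ±1 terms (typically of size about 32), so errors are extremely unlikely.

```python
import random


def cdma_demo(messages, k=1024, seed=0):
    """messages: equal-length bit lists, one per sender. Returns each
    receiver's decoded bits."""
    rng = random.Random(seed)
    codes = [[rng.choice((1, -1)) for _ in range(k)] for _ in messages]
    decoded = [[] for _ in messages]
    for t in range(len(messages[0])):
        channel = [0] * k
        for code, bits in zip(codes, messages):
            sign = 1 if bits[t] else -1      # bit 1 -> v, bit 0 -> -v
            for i in range(k):
                channel[i] += sign * code[i]  # senders' signals add on the channel
        for r, code in enumerate(codes):
            inner = sum(c * x for c, x in zip(code, channel))
            decoded[r].append(1 if inner > 0 else 0)
    return decoded
```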

Compressed Sensing (Candes, Romberg, Tao, Donoho)
• A spectacular advance in compression of images and other signals.
• Principle: sparse signals can be approximated accurately by a small number of random projections.
• Problem: compress a vector $f \in \mathbb{R}^n$ without significant information loss.
• Assumption: there is an orthonormal basis $(v_1, v_2, \dots, v_n)$ for $\mathbb{R}^n$ (for example, the wavelet basis) in which f has a sparse representation; i.e., $f = \sum_j \langle f, v_j\rangle v_j$, in which most of the coefficients $\langle f, v_j\rangle$ are negligible.

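Compressed Sensing
• $f = \sum_j \langle f, v_j\rangle v_j$
• Choose a random set $(w_1, w_2, \dots, w_m)$ of m orthonormal vectors in $\mathbb{R}^n$, where $m \ll n$.
• Transmit $\langle f, w_k\rangle$, $k = 1, 2, \dots, m$.
• Using linear programming, approximately reconstruct f as $f^* = \sum_j \langle z, v_j\rangle v_j$, where $z \in \mathbb{R}^n$ minimizes the $L_1$ norm of its coefficients, $\sum_j |\langle z, v_j\rangle|$, subject to $\langle f^*, w_k\rangle = \langle f, w_k\rangle$, $k = 1, 2, \dots, m$.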

Et Cetera
• Parallel algorithms
• Geometric algorithms
• Approximation algorithms
• On-line algorithms
• Property testing
• Sublinear-time algorithms
• Streaming algorithms
• Quantum algorithms
• Cryptographic algorithms
and more …