What is TMVA? One framework for the most common MVA techniques

- Slides: 18

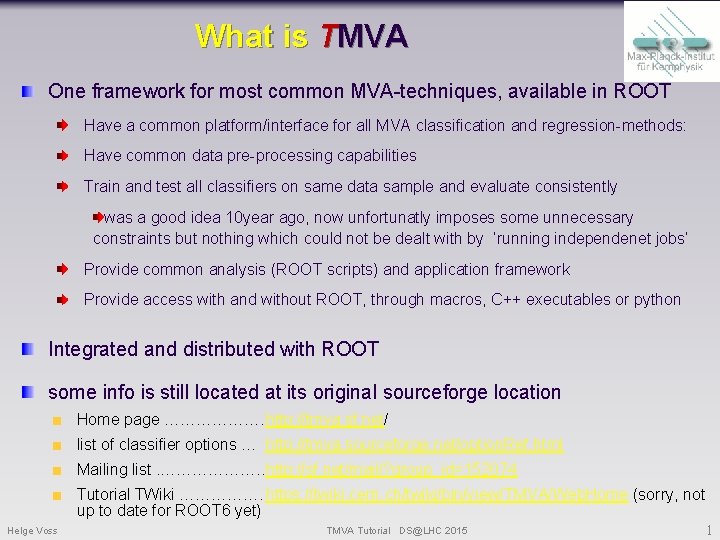

What is TMVA
- One framework for the most common MVA techniques, available in ROOT
- Have a common platform/interface for all MVA classification and regression methods:
  - Have common data pre-processing capabilities
  - Train and test all classifiers on the same data sample and evaluate them consistently (this was a good idea 10 years ago; it now unfortunately imposes some unnecessary constraints, but nothing that cannot be dealt with by running independent jobs)
- Provide a common analysis (ROOT scripts) and application framework
- Provide access with and without ROOT, through macros, C++ executables or Python
- Integrated and distributed with ROOT; some information is still located at its original SourceForge location:
  - Home page: http://tmva.sf.net/
  - List of classifier options: http://tmva.sourceforge.net/optionRef.html
  - Mailing list: http://sf.net/mail/?group_id=152074
  - Tutorial TWiki: https://twiki.cern.ch/twiki/bin/view/TMVA/WebHome (sorry, not yet up to date for ROOT 6)

Helge Voss, TMVA Tutorial, DS@LHC 2015

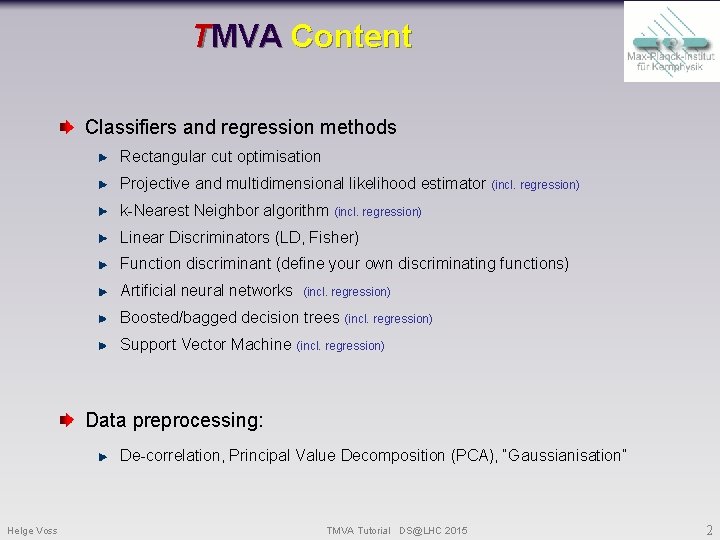

TMVA Content
- Classifiers and regression methods:
  - Rectangular cut optimisation
  - Projective and multidimensional likelihood estimator (incl. regression)
  - k-Nearest Neighbour algorithm (incl. regression)
  - Linear discriminators (LD, Fisher)
  - Function discriminant (define your own discriminating functions)
  - Artificial neural networks (incl. regression)
  - Boosted/bagged decision trees (incl. regression)
  - Support Vector Machine (incl. regression)
- Data preprocessing: decorrelation, Principal Component Analysis (PCA), "Gaussianisation"
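Linear decorrelation is the simplest of the preprocessing steps listed above to illustrate. The standalone C++ sketch below does not use ROOT. It decorrelates two variables by multiplying them with the inverse square root of their covariance matrix, C^{-1/2}, which it gets from an analytic 2x2 eigen decomposition. All names are hypothetical. Centring the variables first is a choice made here, and the code is not TMVA's own implementation. After the transformation the sample covariance is the identity matrix.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Point = std::array<double, 2>;
using Mat2 = std::array<std::array<double, 2>, 2>;

// Sample covariance matrix (normalised by N) of 2D points; also returns the mean.
Mat2 covariance(const std::vector<Point>& pts, Point& mean) {
    assert(!pts.empty());
    mean = {0.0, 0.0};
    for (const auto& p : pts) { mean[0] += p[0]; mean[1] += p[1]; }
    mean[0] /= pts.size();
    mean[1] /= pts.size();
    Mat2 c{};  // zero-initialised
    for (const auto& p : pts)
        for (int i = 0; i < 2; ++i)
            for (int j = 0; j < 2; ++j)
                c[i][j] += (p[i] - mean[i]) * (p[j] - mean[j]);
    for (auto& row : c)
        for (auto& v : row) v /= pts.size();
    return c;
}

// C^{-1/2} for a symmetric positive-definite 2x2 matrix C = V diag(l1, l2) V^T.
Mat2 inverseSqrt(const Mat2& c) {
    const double a = c[0][0], b = c[0][1], d = c[1][1];
    const double tr = a + d, det = a * d - b * b;
    const double disc = std::sqrt(tr * tr / 4.0 - det);
    const double l1 = tr / 2.0 + disc, l2 = tr / 2.0 - disc;
    assert(l2 > 0.0);  // positive definite: variables must not be degenerate
    // (cos t, sin t) is the eigenvector of l1; the l2 eigenvector is orthogonal to it.
    const double t = 0.5 * std::atan2(2.0 * b, a - d);
    const double cs = std::cos(t), sn = std::sin(t);
    const double s1 = 1.0 / std::sqrt(l1), s2 = 1.0 / std::sqrt(l2);
    return {{{cs * cs * s1 + sn * sn * s2, cs * sn * (s1 - s2)},
             {cs * sn * (s1 - s2), sn * sn * s1 + cs * cs * s2}}};
}

// Centre the variables and rotate/scale them with C^{-1/2}.
std::vector<Point> decorrelate(const std::vector<Point>& pts) {
    Point mean;
    const Mat2 w = inverseSqrt(covariance(pts, mean));
    std::vector<Point> out;
    out.reserve(pts.size());
    for (const auto& p : pts) {
        const double x = p[0] - mean[0], y = p[1] - mean[1];
        out.push_back({w[0][0] * x + w[0][1] * y, w[1][0] * x + w[1][1] * y});
    }
    return out;
}
```

Decorrelation by C^{-1/2} removes only linear correlations. Nonlinear dependencies between the variables survive this transformation.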

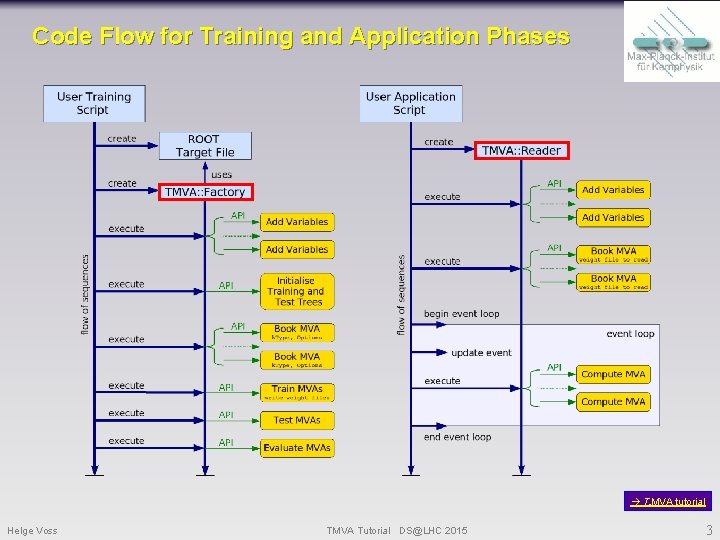

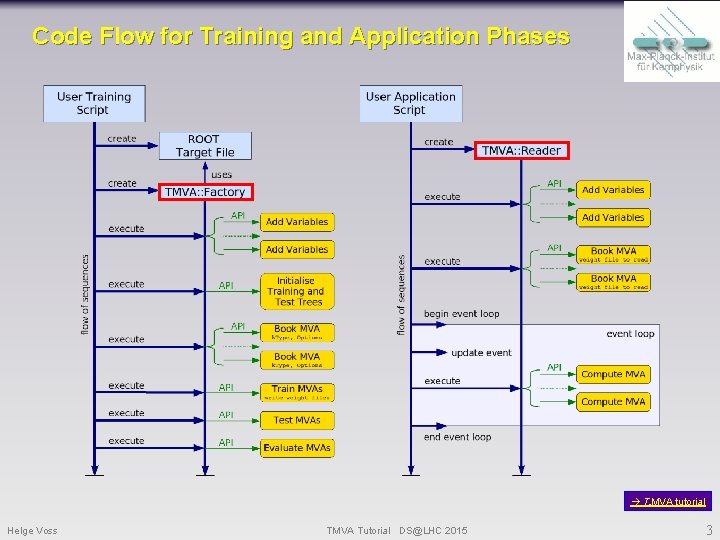

Code Flow for Training and Application Phases

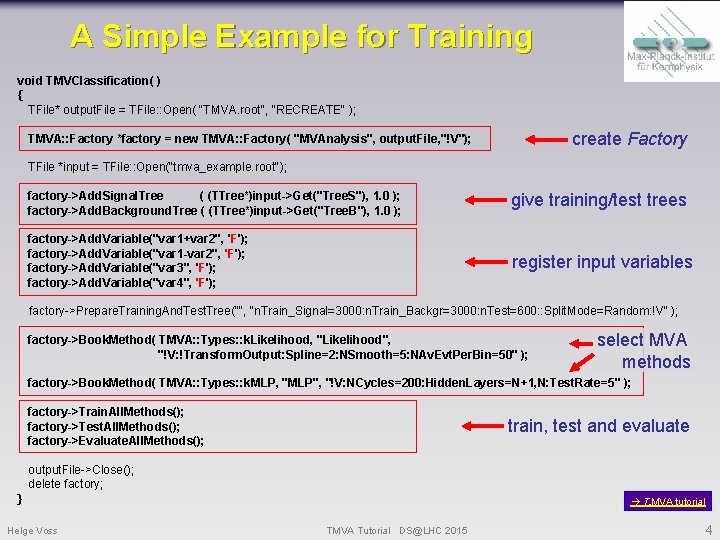

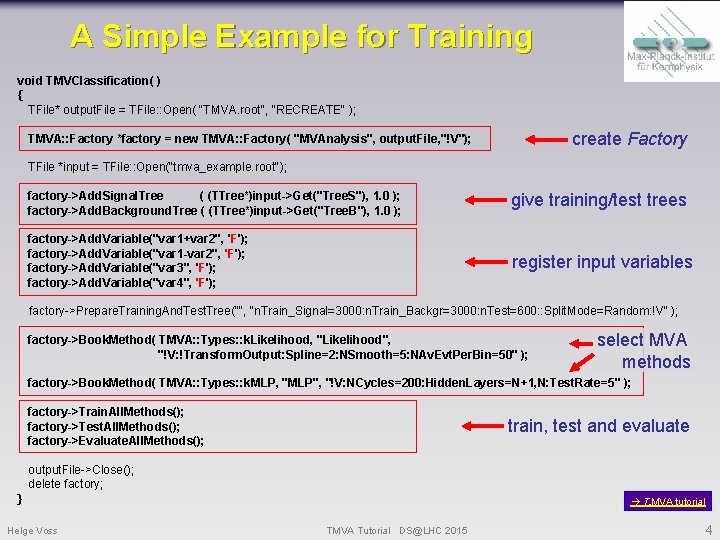

A Simple Example for Training

```cpp
#include "TFile.h"
#include "TTree.h"
#include "TMVA/Factory.h"
#include "TMVA/Types.h"

// Note: pre-ROOT 6.08 interface; newer ROOT versions register trees and
// variables through TMVA::DataLoader instead of the Factory.
void TMVAClassification()
{
   // create Factory
   TFile* outputFile = TFile::Open( "TMVA.root", "RECREATE" );
   TMVA::Factory *factory = new TMVA::Factory( "MVAnalysis", outputFile, "!V" );

   // give training/test trees
   TFile *input = TFile::Open( "tmva_example.root" );
   factory->AddSignalTree    ( (TTree*)input->Get("TreeS"), 1.0 );
   factory->AddBackgroundTree( (TTree*)input->Get("TreeB"), 1.0 );

   // register input variables
   factory->AddVariable( "var1+var2", 'F' );
   factory->AddVariable( "var1-var2", 'F' );
   factory->AddVariable( "var3", 'F' );
   factory->AddVariable( "var4", 'F' );

   factory->PrepareTrainingAndTestTree( "",
      "nTrain_Signal=3000:nTrain_Background=3000:nTest_Signal=600:nTest_Background=600:SplitMode=Random:!V" );

   // select MVA methods
   factory->BookMethod( TMVA::Types::kLikelihood, "Likelihood",
                        "!V:!TransformOutput:Spline=2:NSmooth=5:NAvEvtPerBin=50" );
   factory->BookMethod( TMVA::Types::kMLP, "MLP",
                        "!V:NCycles=200:HiddenLayers=N+1,N:TestRate=5" );

   // train, test and evaluate
   factory->TrainAllMethods();
   factory->TestAllMethods();
   factory->EvaluateAllMethods();

   outputFile->Close();
   delete factory;
}
```
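The `Likelihood` method booked above is a projective likelihood. It estimates signal and background PDFs for each variable separately and multiplies them, so correlations between variables are ignored. Below is a minimal sketch of the resulting likelihood ratio y_L = L_S / (L_S + L_B). It uses raw equal-width histograms as PDFs, whereas the booking options above (`Spline=2`, `NSmooth=5`) ask TMVA to smooth and spline-interpolate them. `HistPdf` and `likelihoodRatio` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical 1D histogram PDF over [lo, hi) with equal-width bins,
// normalised so that sum(density * binWidth) == 1.
struct HistPdf {
    double lo, hi;
    std::vector<double> density;

    HistPdf(const std::vector<double>& values, double lo_, double hi_, std::size_t nbins)
        : lo(lo_), hi(hi_), density(nbins, 0.0) {
        assert(nbins > 0 && hi > lo && !values.empty());
        for (double v : values) density[bin(v)] += 1.0;
        const double width = (hi - lo) / nbins;
        for (double& d : density) d /= values.size() * width;
    }

    // Bin index; out-of-range values are clamped into the first/last bin.
    std::size_t bin(double v) const {
        if (v <= lo) return 0;
        const auto i = static_cast<std::size_t>((v - lo) / (hi - lo) * density.size());
        return i < density.size() ? i : density.size() - 1;
    }

    double operator()(double v) const { return density[bin(v)]; }
};

// Projective likelihood ratio: L = product of the per-variable 1D PDFs.
double likelihoodRatio(const std::vector<HistPdf>& sigPdfs,
                       const std::vector<HistPdf>& bkgPdfs,
                       const std::vector<double>& event) {
    assert(sigPdfs.size() == event.size() && bkgPdfs.size() == event.size());
    double ls = 1.0, lb = 1.0;
    for (std::size_t k = 0; k < event.size(); ++k) {
        ls *= sigPdfs[k](event[k]);
        lb *= bkgPdfs[k](event[k]);
    }
    return (ls + lb > 0.0) ? ls / (ls + lb) : 0.5;  // 0.5 if both PDFs vanish
}
```

Empty histogram bins produce zero PDF values and therefore hard 0 or 1 outputs. Smoothing the reference PDFs is meant to avoid that effect.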

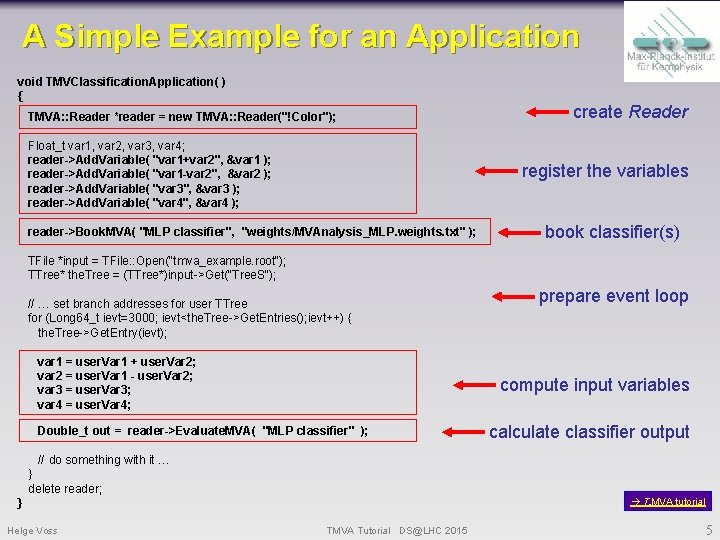

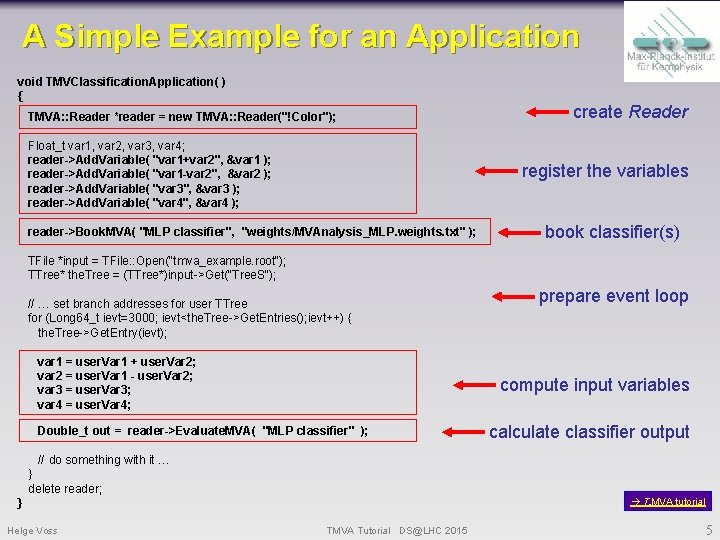

A Simple Example for an Application

```cpp
#include "TFile.h"
#include "TTree.h"
#include "TMVA/Reader.h"

void TMVAClassificationApplication()
{
   // create Reader
   TMVA::Reader *reader = new TMVA::Reader( "!Color" );

   // register the variables (same expressions and order as in the training)
   Float_t var1, var2, var3, var4;
   reader->AddVariable( "var1+var2", &var1 );
   reader->AddVariable( "var1-var2", &var2 );
   reader->AddVariable( "var3", &var3 );
   reader->AddVariable( "var4", &var4 );

   // book classifier(s)
   reader->BookMVA( "MLP classifier", "weights/MVAnalysis_MLP.weights.txt" );

   // prepare event loop
   TFile *input = TFile::Open( "tmva_example.root" );
   TTree* theTree = (TTree*)input->Get( "TreeS" );
   Float_t userVar1, userVar2, userVar3, userVar4;  // user variables read from the tree
   // … set branch addresses for user TTree
   for (Long64_t ievt = 3000; ievt < theTree->GetEntries(); ievt++) {
      theTree->GetEntry( ievt );

      // compute input variables
      var1 = userVar1 + userVar2;
      var2 = userVar1 - userVar2;
      var3 = userVar3;
      var4 = userVar4;

      // calculate classifier output
      Double_t out = reader->EvaluateMVA( "MLP classifier" );
      // do something with it …
   }
   delete reader;
}
```
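`EvaluateMVA("MLP classifier")` returns the output of the network booked with `HiddenLayers=N+1,N`: two hidden layers with N+1 and N nodes for N input variables. The sketch below expands such a specification into layer sizes and runs a forward pass through a fully connected network. The helper names, the weight layout, and the tanh hidden / linear output activations are assumptions for illustration, not TMVA's internals. For the four variables registered above, `layerSizes("N+1,N", 4)` yields {4, 5, 4, 1}.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Hypothetical helper: expand a spec such as "N+1,N" into layer sizes
// {inputs, hidden..., 1 output}. Only "N", "N+k", "N-k" and integers are handled.
std::vector<std::size_t> layerSizes(const std::string& spec, std::size_t nVars) {
    std::vector<std::size_t> sizes{nVars};  // input layer
    std::stringstream ss(spec);
    std::string tok;
    while (std::getline(ss, tok, ',')) {
        const long n = (!tok.empty() && tok[0] == 'N')
            ? static_cast<long>(nVars) + (tok.size() > 1 ? std::stol(tok.substr(1)) : 0L)
            : std::stol(tok);
        assert(n > 0);
        sizes.push_back(static_cast<std::size_t>(n));
    }
    sizes.push_back(1);  // one output node for two-class classification
    return sizes;
}

// Forward pass: weights[l][j] holds the weights from every node of layer l
// into node j of layer l+1, with the bias stored as the last entry.
// Activation (assumption): tanh in hidden layers, linear in the output layer.
double forward(const std::vector<std::vector<std::vector<double>>>& weights,
               std::vector<double> x) {
    for (std::size_t l = 0; l < weights.size(); ++l) {
        const bool outputLayer = (l + 1 == weights.size());
        std::vector<double> next;
        for (const auto& w : weights[l]) {
            assert(w.size() == x.size() + 1);
            double a = w.back();  // bias term
            for (std::size_t i = 0; i < x.size(); ++i) a += w[i] * x[i];
            next.push_back(outputLayer ? a : std::tanh(a));
        }
        x = std::move(next);
    }
    assert(x.size() == 1);
    return x[0];
}
```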

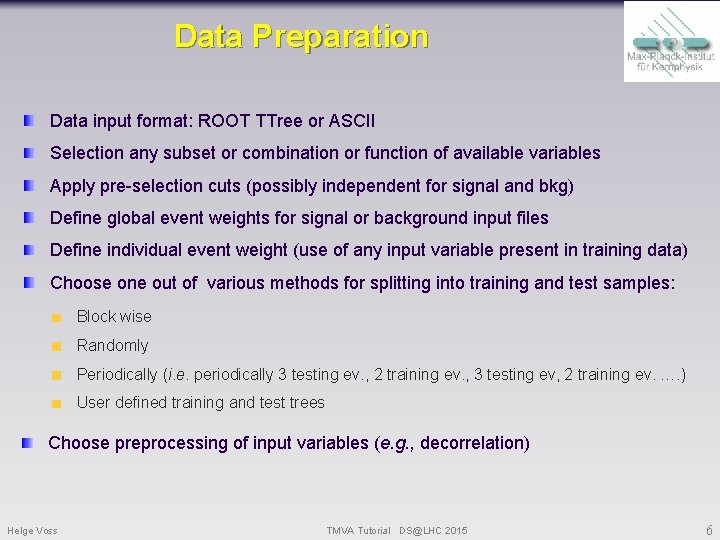

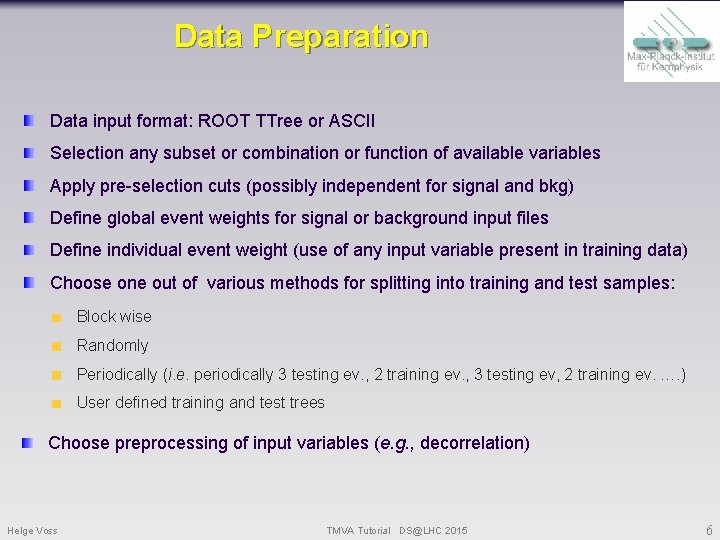

Data Preparation
- Data input format: ROOT TTree or ASCII
- Selection of any subset, combination or function of the available variables
- Apply pre-selection cuts (possibly independent for signal and background)
- Define global event weights for signal or background input files
- Define individual event weights (using any input variable present in the training data)
- Choose one of several methods for splitting into training and test samples:
  - Block-wise
  - Randomly
  - Periodically (i.e. 3 testing events, 2 training events, 3 testing events, 2 training events, …)
  - User-defined training and test trees
- Choose preprocessing of input variables (e.g., decorrelation)
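The three automatic splitting schemes listed above can be sketched as a function that flags each event as training or test. The function name and its parameters are hypothetical, and the default 3-test/2-train period is taken from the periodic example in the list. This illustrates the idea only and is not TMVA's `SplitMode` code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

enum class SplitMode { Block, Random, Periodic };

// For each of nEvents events: true = training sample, false = test sample.
// nTrain is used by Block and Random; nTestPer/nTrainPer by Periodic.
std::vector<bool> assignTraining(std::size_t nEvents, SplitMode mode, std::size_t nTrain,
                                 std::size_t nTestPer = 3, std::size_t nTrainPer = 2,
                                 unsigned seed = 0) {
    std::vector<bool> train(nEvents, false);
    switch (mode) {
    case SplitMode::Block:  // first nTrain events train, the rest test
        for (std::size_t i = 0; i < nEvents && i < nTrain; ++i) train[i] = true;
        break;
    case SplitMode::Random: {  // nTrain events drawn at random
        std::vector<std::size_t> idx(nEvents);
        for (std::size_t i = 0; i < nEvents; ++i) idx[i] = i;
        std::mt19937 rng(seed);
        std::shuffle(idx.begin(), idx.end(), rng);
        for (std::size_t i = 0; i < nEvents && i < nTrain; ++i) train[idx[i]] = true;
        break;
    }
    case SplitMode::Periodic: {  // repeating pattern: nTestPer test, then nTrainPer train
        const std::size_t period = nTestPer + nTrainPer;
        assert(period > 0);
        for (std::size_t i = 0; i < nEvents; ++i) train[i] = (i % period) >= nTestPer;
        break;
    }
    }
    return train;
}
```

For example, `assignTraining(10, SplitMode::Periodic, 0)` gives test, test, test, train, train, test, test, test, train, train.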

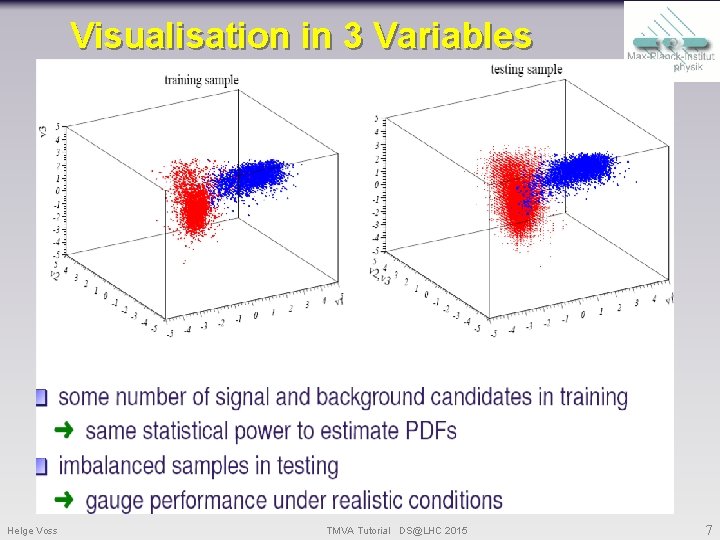

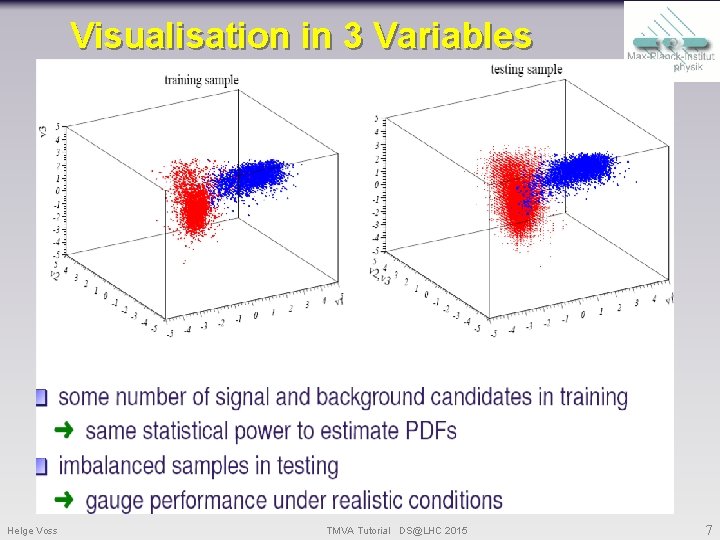

Visualisation in 3 Variables

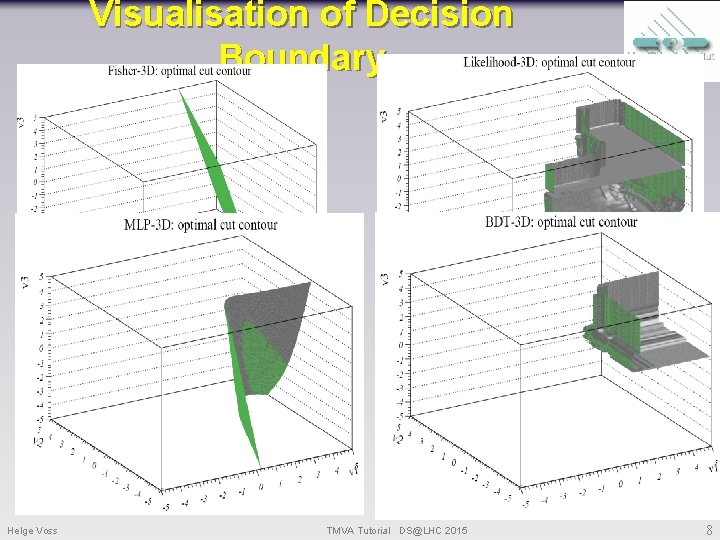

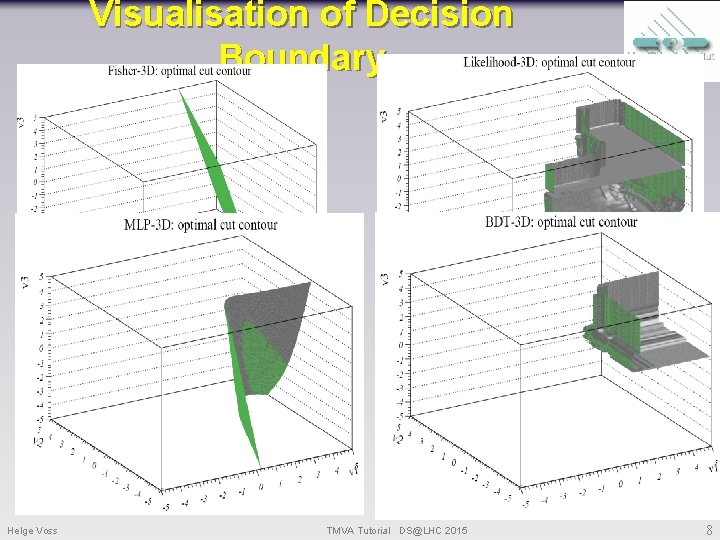

Visualisation of Decision Boundary

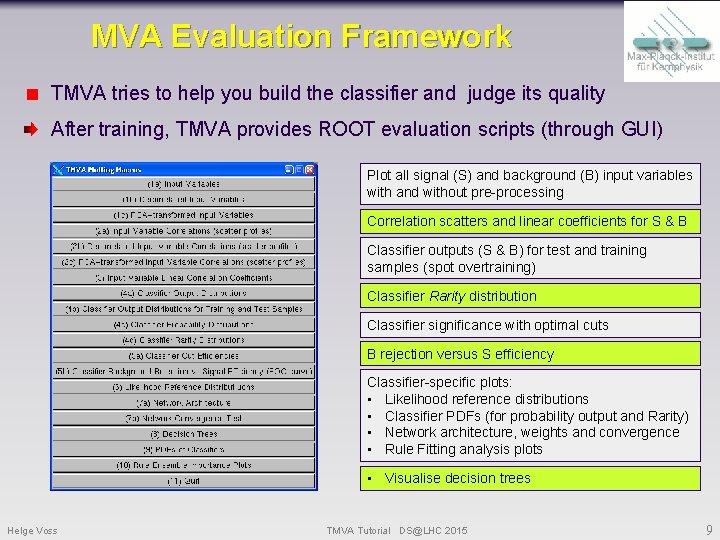

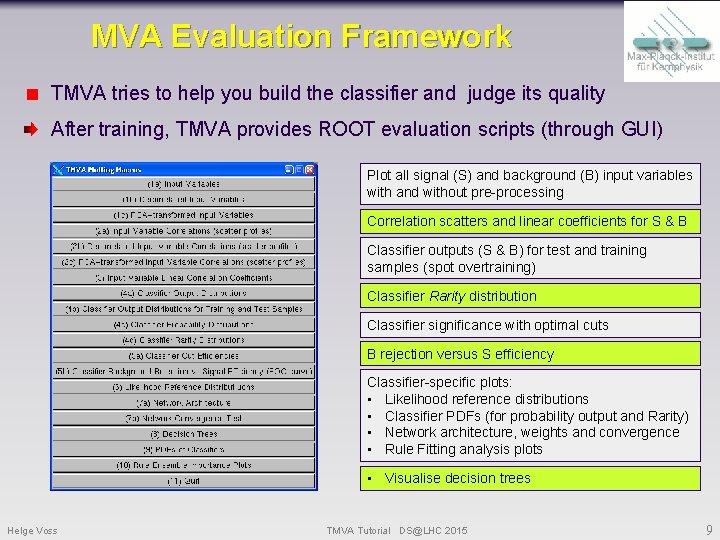

MVA Evaluation Framework
TMVA tries to help you build the classifier and judge its quality. After training, TMVA provides ROOT evaluation scripts (through a GUI):
- Plot all signal (S) and background (B) input variables with and without pre-processing
- Correlation scatter plots and linear coefficients for S & B
- Classifier outputs (S & B) for test and training samples (to spot overtraining)
- Classifier Rarity distribution
- Classifier significance with optimal cuts
- B rejection versus S efficiency
- Classifier-specific plots:
  - Likelihood reference distributions
  - Classifier PDFs (for probability output and Rarity)
  - Network architecture, weights and convergence
  - Rule Fitting analysis plots
  - Visualise decision trees
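The Rarity is, to our understanding of the TMVA Users Guide, the background cumulative distribution of the classifier output, R(y) = ∫_{-∞}^{y} ŷ_B(y') dy'. With this definition the background is uniform in [0, 1], and the signal peaks towards 1 for a classifier whose signal output lies above the background. TMVA derives R from the classifier PDFs. The sketch below instead uses the empirical CDF of the background test-sample outputs, and the class name is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// Rarity estimated as the empirical CDF of background classifier outputs.
class Rarity {
public:
    explicit Rarity(std::vector<double> bkgOutputs) : sorted_(std::move(bkgOutputs)) {
        assert(!sorted_.empty());
        std::sort(sorted_.begin(), sorted_.end());
    }

    // Fraction of background events with output <= y.
    double operator()(double y) const {
        const auto it = std::upper_bound(sorted_.begin(), sorted_.end(), y);
        return static_cast<double>(it - sorted_.begin()) / sorted_.size();
    }

private:
    std::vector<double> sorted_;
};
```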

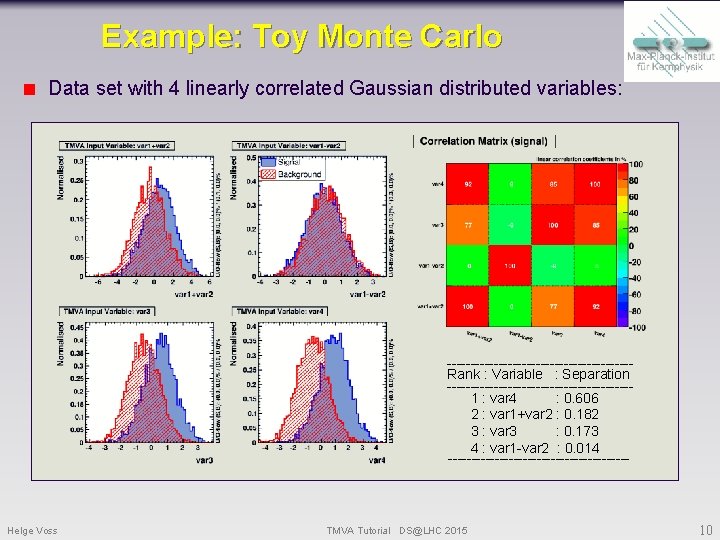

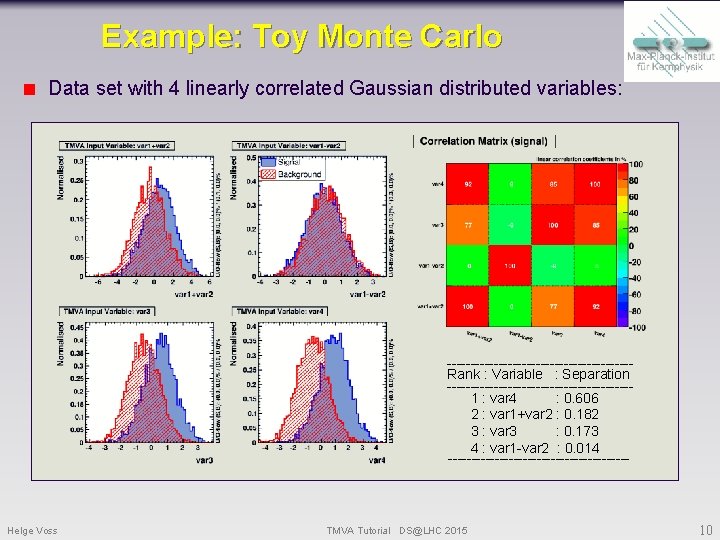

Example: Toy Monte Carlo
Data set with 4 linearly correlated, Gaussian-distributed variables:

| Rank | Variable  | Separation |
|------|-----------|------------|
| 1    | var4      | 0.606      |
| 2    | var1+var2 | 0.182      |
| 3    | var3      | 0.173      |
| 4    | var1-var2 | 0.014      |
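The "Separation" column ranks variables by how little their signal and background shapes overlap. The TMVA Users Guide defines it as ⟨S²⟩ = ½ ∫ (ŷ_S(y) − ŷ_B(y))² / (ŷ_S(y) + ŷ_B(y)) dy. This quantity is 0 for identical shapes and 1 for non-overlapping ones. The sketch evaluates a binned version of the integral from two histograms with the same binning, each normalised to unit sum. The function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Binned separation: 1/2 * sum_i (p_i - q_i)^2 / (p_i + q_i),
// with p and q the signal and background bin contents normalised to unit sum.
double separation(const std::vector<double>& sig, const std::vector<double>& bkg) {
    assert(sig.size() == bkg.size());
    double ns = 0.0, nb = 0.0;
    for (std::size_t i = 0; i < sig.size(); ++i) { ns += sig[i]; nb += bkg[i]; }
    assert(ns > 0.0 && nb > 0.0);
    double sep = 0.0;
    for (std::size_t i = 0; i < sig.size(); ++i) {
        const double p = sig[i] / ns, q = bkg[i] / nb;
        if (p + q > 0.0) sep += (p - q) * (p - q) / (p + q);  // skip empty bins
    }
    return 0.5 * sep;
}
```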

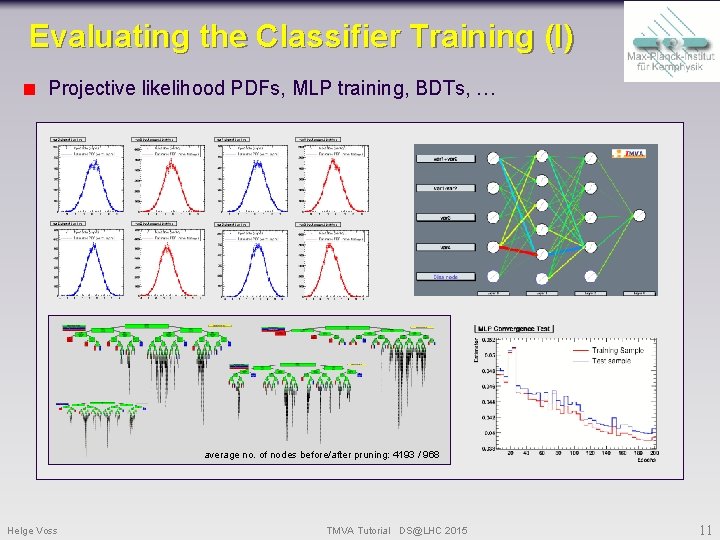

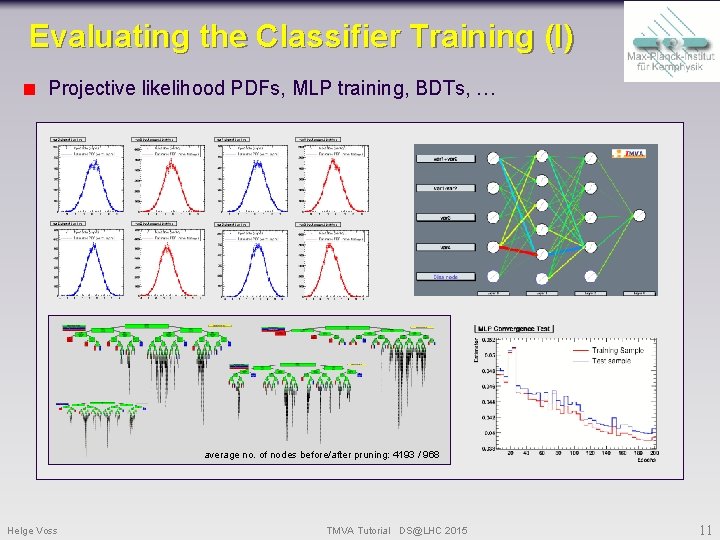

Evaluating the Classifier Training (I)
Projective likelihood PDFs, MLP training, BDTs, …
Average number of nodes before/after pruning: 4193 / 968

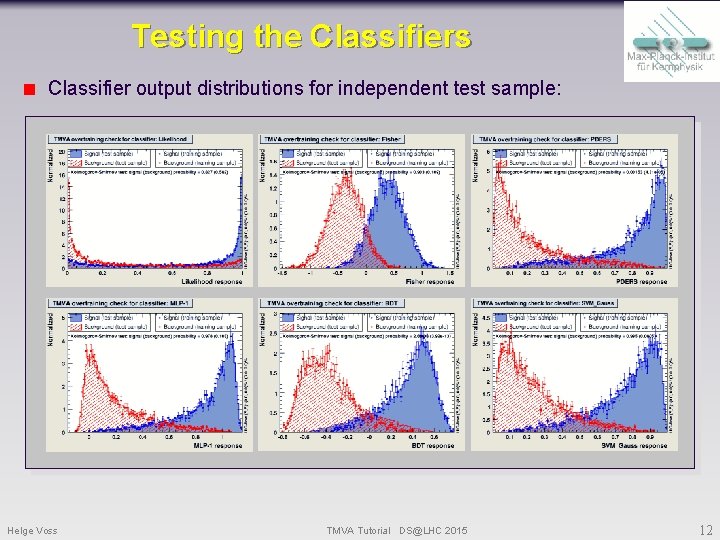

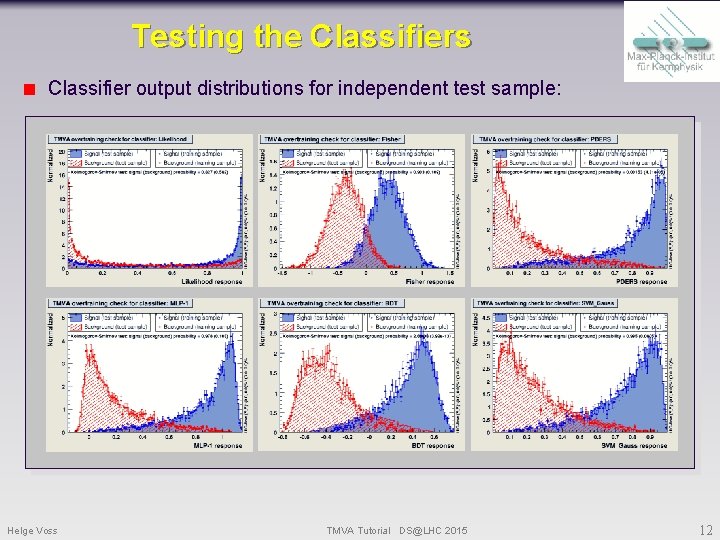

Testing the Classifiers
Classifier output distributions for independent test sample:

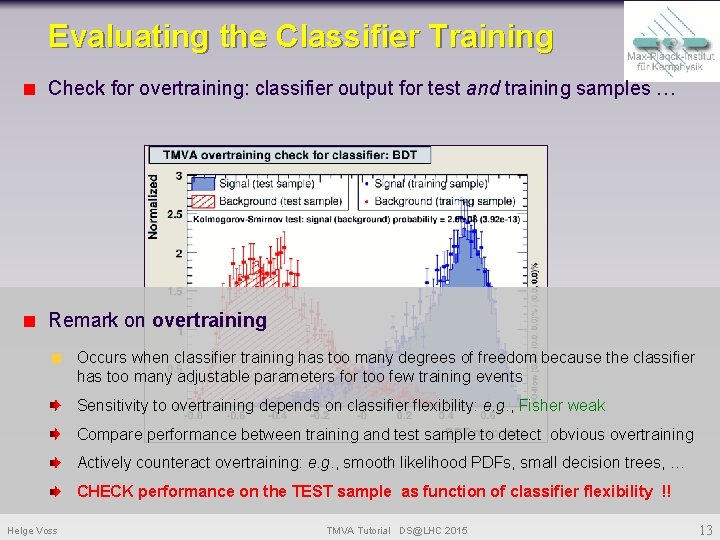

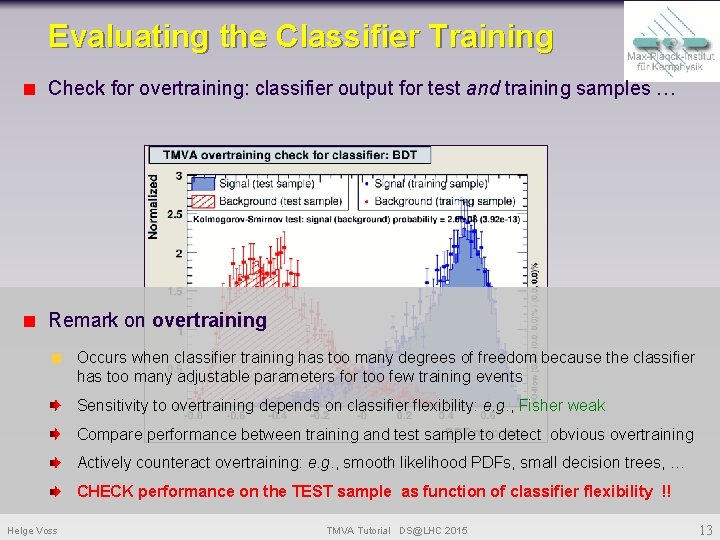

Evaluating the Classifier Training
Check for overtraining: classifier output for test and training samples …

Remark on overtraining:
- Occurs when classifier training has too many degrees of freedom, i.e. the classifier has too many adjustable parameters for too few training events
- Sensitivity to overtraining depends on classifier flexibility: e.g., Fisher is only weakly sensitive
- Compare performance between training and test sample to detect obvious overtraining
- Actively counteract overtraining: e.g., smooth likelihood PDFs, small decision trees, …
- CHECK performance on the TEST sample as a function of classifier flexibility!

Kolmogorov-Smirnov Test
- Tests whether two sample distributions are compatible with coming from the same parent distribution
- Statistical test: if they are indeed random samples of the same parent distribution, the KS test gives a uniformly distributed value between 0 and 1 (strictly so for continuous, unbinned distributions)
- Note: that means an average value of 0.5!
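Here is a sketch of the textbook two-sample KS test on unbinned samples, such as the classifier outputs of the training and test samples. The statistic D is the largest distance between the two empirical CDFs. The p-value uses the asymptotic Kolmogorov series with the effective sample size N_e = n₁n₂/(n₁+n₂) and the small-sample correction λ = (√N_e + 0.12 + 0.11/√N_e)·D, as given in Numerical Recipes. ROOT's own `TH1::KolmogorovTest` works on histograms and is not reproduced here. The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Two-sample KS statistic D = max |F_a(x) - F_b(x)| over the empirical CDFs.
double ksStatistic(std::vector<double> a, std::vector<double> b) {
    assert(!a.empty() && !b.empty());
    std::sort(a.begin(), a.end());
    std::sort(b.begin(), b.end());
    std::size_t i = 0, j = 0;
    double d = 0.0;
    while (i < a.size() && j < b.size()) {
        const double x = std::min(a[i], b[j]);
        while (i < a.size() && a[i] <= x) ++i;  // step past ties in both samples
        while (j < b.size() && b[j] <= x) ++j;
        const double fa = static_cast<double>(i) / a.size();
        const double fb = static_cast<double>(j) / b.size();
        d = std::max(d, std::fabs(fa - fb));
    }
    return d;
}

// Asymptotic p-value Q(lambda) = 2 * sum_{k>=1} (-1)^(k-1) exp(-2 k^2 lambda^2).
double ksProbability(double d, std::size_t n1, std::size_t n2) {
    const double ne = static_cast<double>(n1) * n2 / (n1 + n2);
    const double sq = std::sqrt(ne);
    const double lambda = (sq + 0.12 + 0.11 / sq) * d;
    if (lambda < 0.2) return 1.0;  // Q equals 1 to better than 1e-12 here
    double sum = 0.0, sign = 1.0;
    for (int k = 1; k <= 100; ++k) {
        const double term = sign * 2.0 * std::exp(-2.0 * k * k * lambda * lambda);
        sum += term;
        if (std::fabs(term) < 1e-12) break;
        sign = -sign;
    }
    return std::clamp(sum, 0.0, 1.0);
}
```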

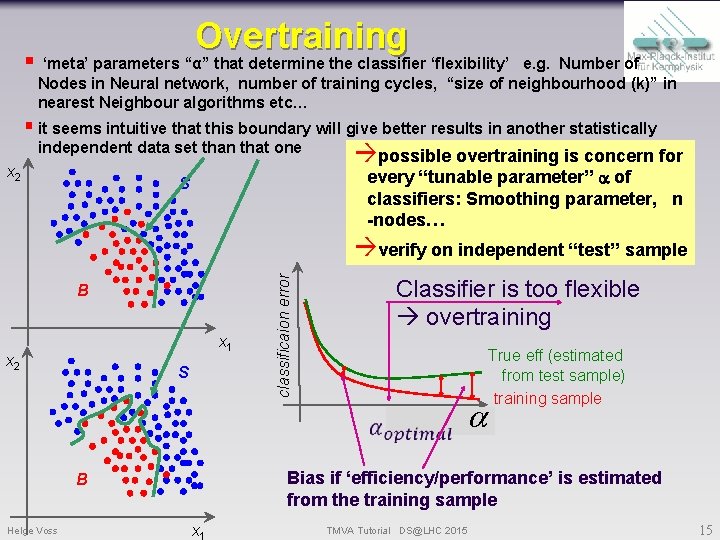

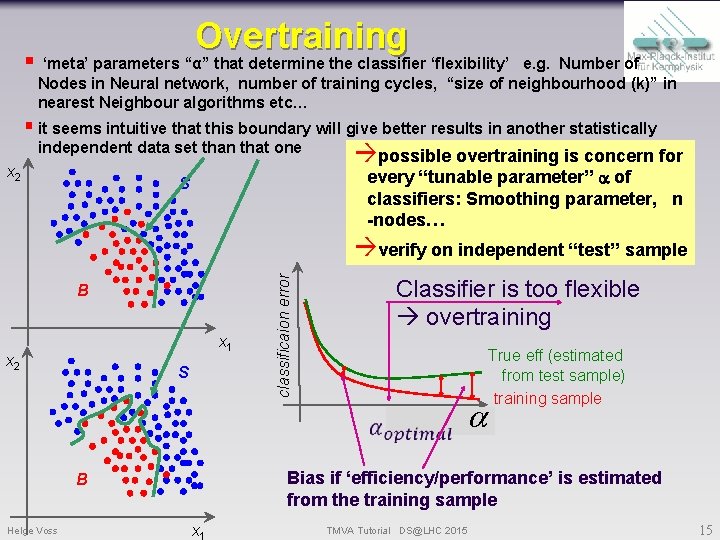

Overtraining
- Overtraining 'meta' parameters "α" determine the classifier 'flexibility', e.g. the number of nodes in a neural network, the number of training cycles, the "size of the neighbourhood (k)" in nearest-neighbour algorithms, etc.
- It seems intuitive that this boundary will give better results in another statistically independent data set than that one.
- Possible overtraining is a concern for every "tunable parameter" α of a classifier: smoothing parameter, number of nodes, …
- Estimating the 'efficiency/performance' from the training sample is biased: verify on an independent "test" sample.

[Figure: S and B events in the (x1, x2) plane with two alternative decision boundaries; classification error versus training cycles / α for the training sample and the true efficiency (estimated from the test sample), with the region where the classifier is too flexible labelled "overtraining".]
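The neighbourhood size k in nearest-neighbour algorithms is one such flexibility parameter. A minimal one-dimensional kNN sketch shows why performance must be verified on an independent sample. This is not TMVA's multidimensional kNN implementation, and `Event`, `knnClassify` and `errorRate` are hypothetical names. With k = 1 every training event is its own nearest neighbour, so the training-sample error is zero by construction, while the error on new events can be large.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Event { double x; int label; };  // label: 1 = signal, 0 = background

// Majority vote of the k nearest training events in one variable.
int knnClassify(std::vector<Event> train, double x, std::size_t k) {
    assert(k >= 1 && k <= train.size());
    std::partial_sort(train.begin(), train.begin() + static_cast<std::ptrdiff_t>(k),
                      train.end(), [x](const Event& a, const Event& b) {
                          return std::fabs(a.x - x) < std::fabs(b.x - x);
                      });
    std::size_t nSig = 0;
    for (std::size_t i = 0; i < k; ++i) nSig += train[i].label;
    return 2 * nSig > k ? 1 : 0;  // ties go to background
}

// Misclassification rate of a kNN classifier built on `train`, evaluated on `sample`.
double errorRate(const std::vector<Event>& train, const std::vector<Event>& sample,
                 std::size_t k) {
    assert(!sample.empty());
    std::size_t wrong = 0;
    for (const auto& e : sample) wrong += (knnClassify(train, e.x, k) != e.label);
    return static_cast<double>(wrong) / sample.size();
}
```

Take training events at x = 0.1, 0.2, 0.4, 0.8 (background) and 0.3, 0.6, 0.7, 0.9 (signal). `errorRate(train, train, 1)` is 0, yet test events at 0.29 (background) and 0.81 (signal) are both misclassified with k = 1 and both classified correctly with k = 3.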

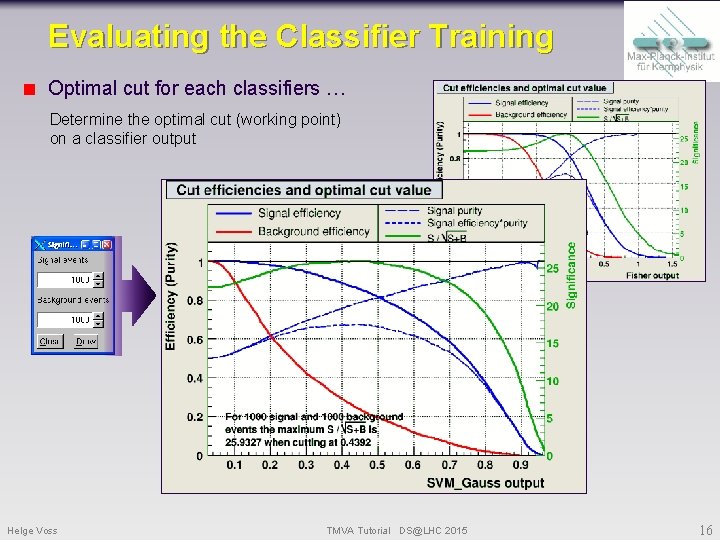

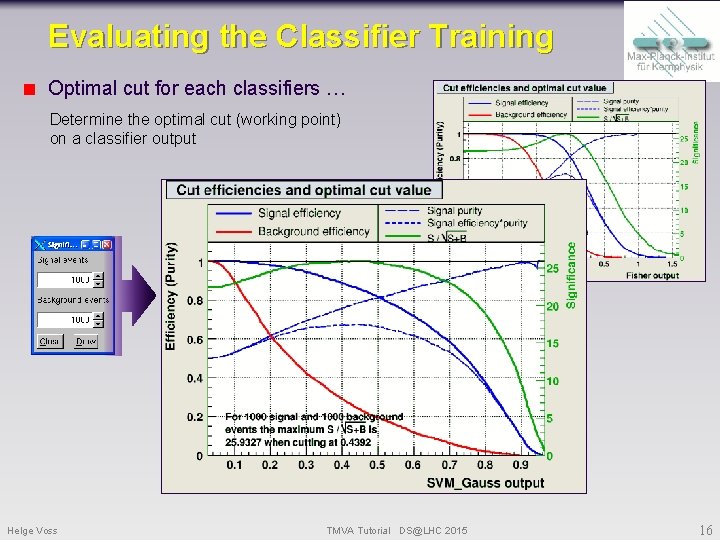

Evaluating the Classifier Training
Optimal cut for each classifier …
Determine the optimal cut (working point) on a classifier output
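One common way to choose a working point is to maximise a significance such as S/√(S+B) over the cut value. The sketch assumes the user supplies the expected numbers of signal and background events before the cut (`nSigExp`, `nBkgExp`), keeps events with output ≥ c, and scans every observed signal output as a candidate cut. Both the figure of merit and the names are illustrative choices, not necessarily what TMVA's evaluation scripts use.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct CutResult { double cut; double significance; };

// Fraction of outputs in v that pass the cut y >= c.
double fractionAbove(const std::vector<double>& v, double c) {
    return static_cast<double>(std::count_if(v.begin(), v.end(),
                                             [c](double y) { return y >= c; })) / v.size();
}

// Maximise S / sqrt(S + B) with S = nSigExp * effS(c) and B = nBkgExp * effB(c).
CutResult optimalCut(const std::vector<double>& sigOut, const std::vector<double>& bkgOut,
                     double nSigExp, double nBkgExp) {
    assert(!sigOut.empty() && !bkgOut.empty());
    CutResult best{0.0, -1.0};
    for (double c : sigOut) {
        const double s = nSigExp * fractionAbove(sigOut, c);
        const double b = nBkgExp * fractionAbove(bkgOut, c);
        const double z = (s + b > 0.0) ? s / std::sqrt(s + b) : 0.0;
        if (z > best.significance) best = {c, z};
    }
    return best;
}
```

With signal outputs {0.6, 0.7, 0.8, 0.9}, background outputs {0.1, 0.2, 0.3, 0.65} and four expected events of each, the best cut is 0.6. There S = 4, B = 1 and S/√(S+B) = 4/√5 ≈ 1.79.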

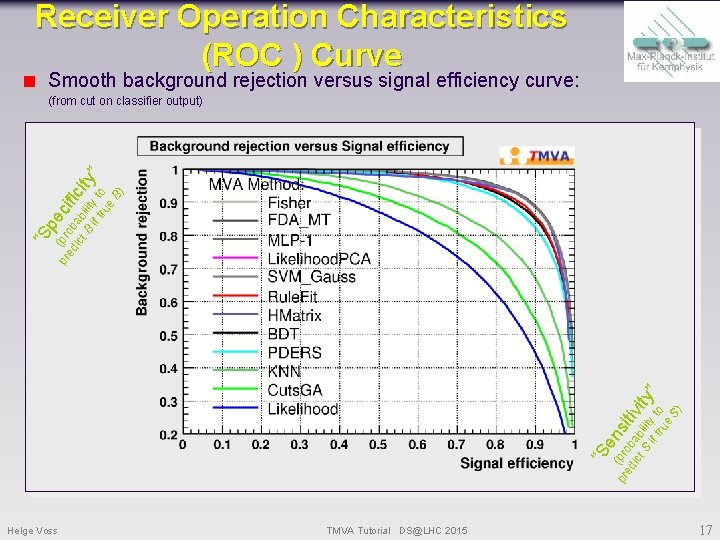

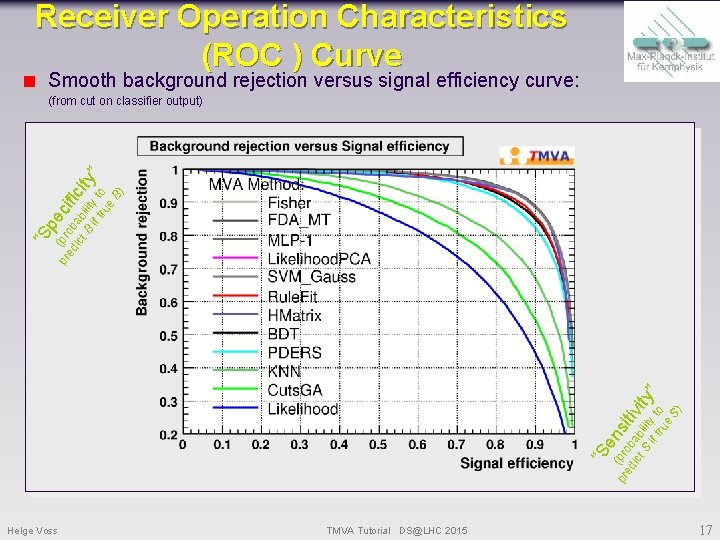

Receiver Operating Characteristic (ROC) Curve
Smooth background rejection versus signal efficiency curve (from a cut on the classifier output):
- Signal efficiency = "sensitivity" (probability to predict S if true S)
- Background rejection = "specificity" (probability to predict B if true B)
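The curve is obtained by sliding the cut on the classifier output. Each cut value gives one pair (signal efficiency, background rejection = 1 − background efficiency). The sketch below builds these points from signal and background test-sample outputs and integrates the curve with the trapezoid rule. An integral of 1 means perfect separation, and 0.5 corresponds to identical signal and background distributions. The names are hypothetical, and the cut is assumed to keep events with output ≥ c.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

struct RocPoint { double effS; double rejB; };  // rejB = 1 - background efficiency

// ROC points for cuts y >= c, with c running over all observed outputs from high
// to low. The curve starts at (0, 1); the lowest cut passes everything, giving (1, 0).
std::vector<RocPoint> rocCurve(const std::vector<double>& sigOut,
                               const std::vector<double>& bkgOut) {
    assert(!sigOut.empty() && !bkgOut.empty());
    std::vector<double> cuts(sigOut);
    cuts.insert(cuts.end(), bkgOut.begin(), bkgOut.end());
    std::sort(cuts.begin(), cuts.end(), std::greater<double>());
    cuts.erase(std::unique(cuts.begin(), cuts.end()), cuts.end());
    auto eff = [](const std::vector<double>& v, double c) {
        return static_cast<double>(std::count_if(v.begin(), v.end(),
                                                 [c](double y) { return y >= c; })) / v.size();
    };
    std::vector<RocPoint> roc{RocPoint{0.0, 1.0}};
    for (double c : cuts) roc.push_back({eff(sigOut, c), 1.0 - eff(bkgOut, c)});
    return roc;
}

// Area under background rejection versus signal efficiency (trapezoid rule).
double rocIntegral(const std::vector<RocPoint>& roc) {
    double area = 0.0;
    for (std::size_t i = 1; i < roc.size(); ++i)
        area += (roc[i].effS - roc[i - 1].effS) * 0.5 * (roc[i].rejB + roc[i - 1].rejB);
    return area;
}
```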

Hands-On Tutorial
- wget http://hvoss.home.cern.ch/hvoss/DSLHC2015TMVAExercises.pdf
- wget http://hvoss.home.cern.ch/hvoss/DSLHC2015TMVAExercises.tgz