What is System Research? Why does it Matter?

- Slides: 43

What is System Research? Why does it Matter?
Zheng Zhang, Research Manager, System Research Group, Microsoft Research Asia

Outline
- A perspective of computer system research
  - By Roy Levin (Managing Director of MSR-SVC)
- Overview
- Examples of MSRA/SRG activities and projects

What is Systems Research?
- What makes it research?
  - Something no one has done before.
  - Something that might not work.
- Universities are known for doing Systems Research, but Industry does it too:
  - VAX Clusters (DEC)
  - Cedar (Xerox)
  - System R (IBM)
  - NonStop (Tandem)
  - And, more recently, Microsoft

What is Systems Research?
- What specialties does it encompass?
  - Computer Architecture, Networks, Operating Systems, Protocols, Programming Languages, Databases, Distributed Applications, Measurement, Security, Simulation (and others...)
- Design + implementation + validation
  - (implementation includes simulation)

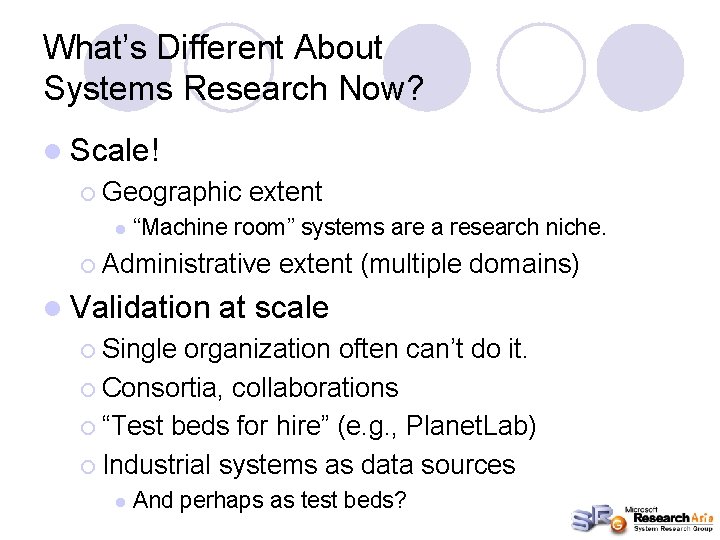

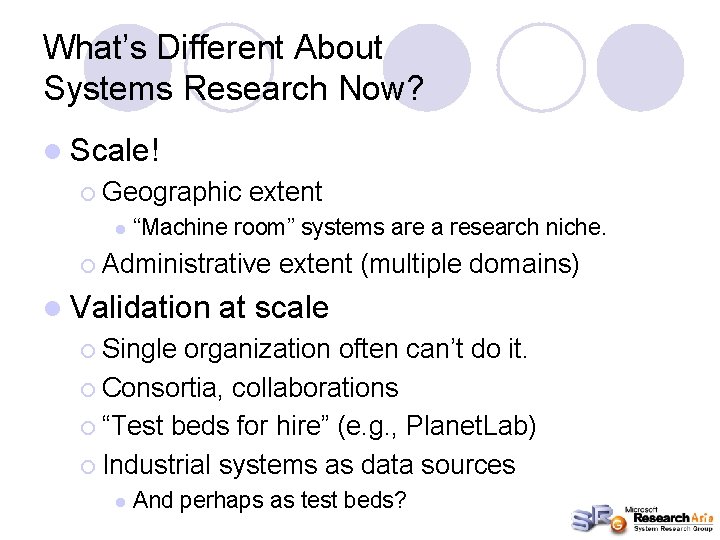

What’s Different About Systems Research Now?
- Scale!
  - Geographic extent
    - “Machine room” systems are a research niche.
  - Administrative extent (multiple domains)
- Validation at scale
  - Single organization often can’t do it.
  - Consortia, collaborations
  - “Test beds for hire” (e.g., PlanetLab)
  - Industrial systems as data sources
    - And perhaps as test beds?

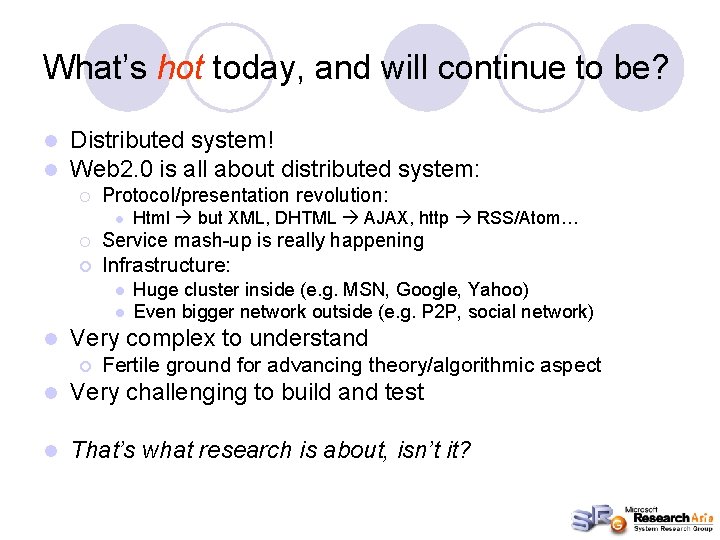

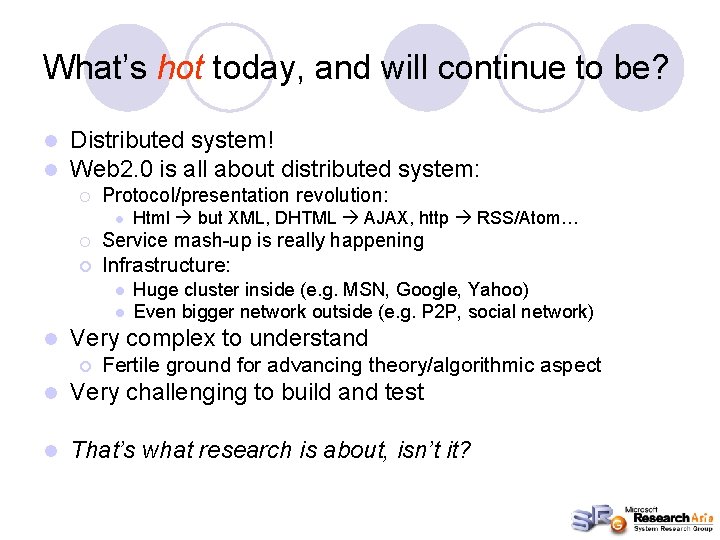

What’s hot today, and will continue to be?
- Distributed systems!
- Web 2.0 is all about distributed systems:
  - Protocol/presentation revolution:
    - HTML, but also XML and DHTML
    - AJAX, HTTP
    - RSS/Atom…
  - Service mash-up is really happening
  - Infrastructure:
    - Huge clusters inside (e.g., MSN, Google, Yahoo)
    - Even bigger networks outside (e.g., P2P, social networks)
- Very complex to understand
  - Fertile ground for advancing the theory/algorithmic aspects
- Very challenging to build and test
- That’s what research is about, isn’t it?

MSRA/SRG Activity Overview

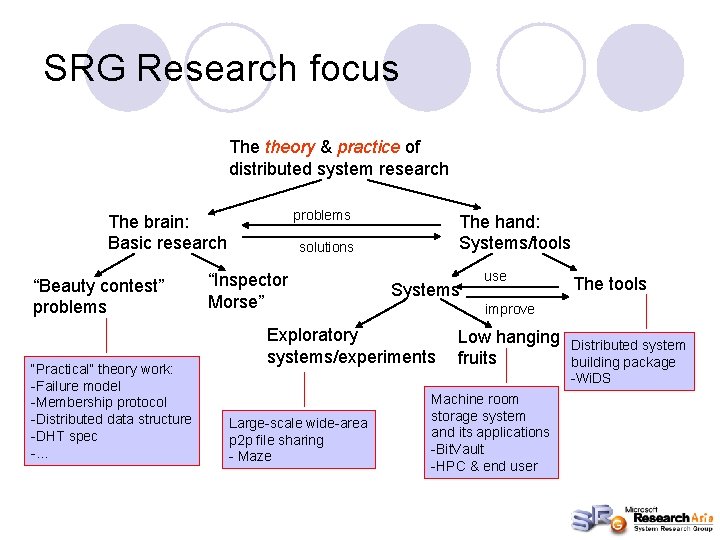

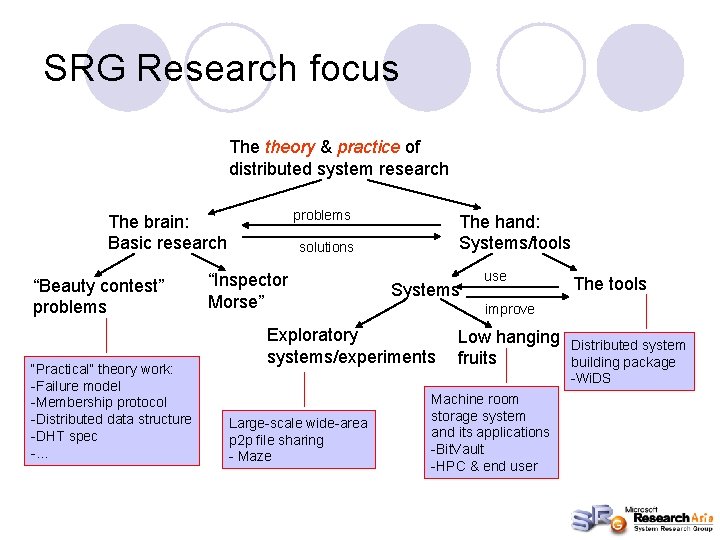

SRG Research focus: the theory & practice of distributed system research problems
- The brain: basic research
  - “Beauty contest” problems
  - “Practical” theory work:
    - Failure model
    - Membership protocol
    - Distributed data structure
    - DHT spec
    - …
- The hand: systems/tools solutions
  - “Inspector Morse” systems
  - Exploratory systems/experiments
    - Large-scale wide-area P2P file sharing: Maze
  - Low-hanging fruits
    - Machine room storage system and its applications: BitVault
    - HPC & end user
  - Distributed system building package: WiDS
    - The tools improve the systems; the systems use the tools

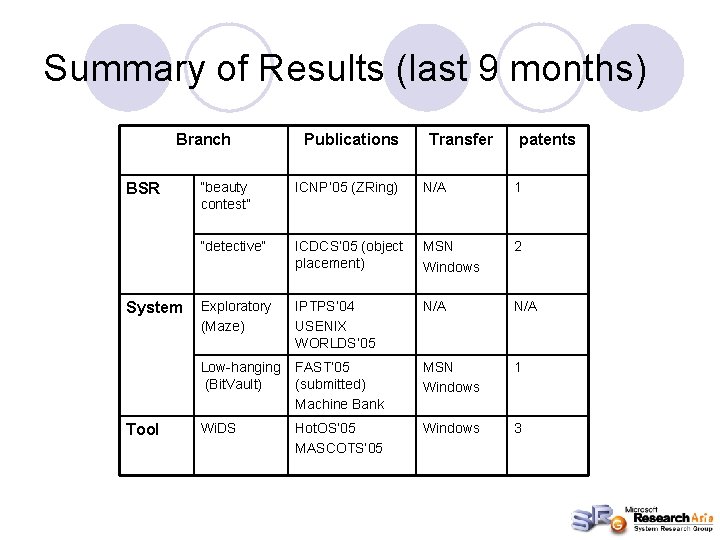

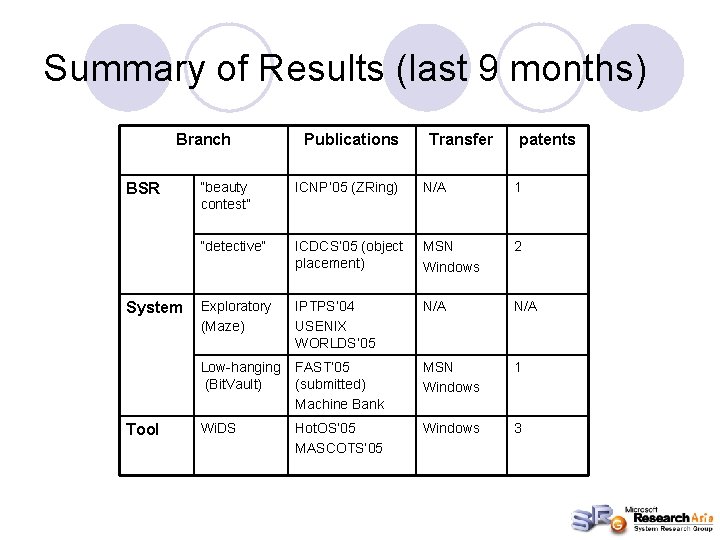

Summary of Results (last 9 months)

| Branch | Project | Publications | Transfer | Patents |
|---|---|---|---|---|
| BSR | “Beauty contest” | ICNP’05 (ZRing) | N/A | 1 |
| BSR | “Detective” | ICDCS’05 (object placement) | MSN, Windows | 2 |
| System | Exploratory (Maze) | IPTPS’04, USENIX WORLDS’05 | N/A | |
| System | Low-hanging (BitVault) | FAST’05 (submitted) | Machine Bank, MSN, Windows | 1 |
| Tool | WiDS | HotOS’05, MASCOTS’05 | Windows | 3 |

Some projects in SRG
- Building large-scale systems
  - BitVault, WiDS and BSR involvement
- Large-scale P2P system
  - A collaboration project with Beijing University
- My view on Grid and P2P computing
- Which one would you like to hear?

BitVault and WiDS (plus contributions from BSR)

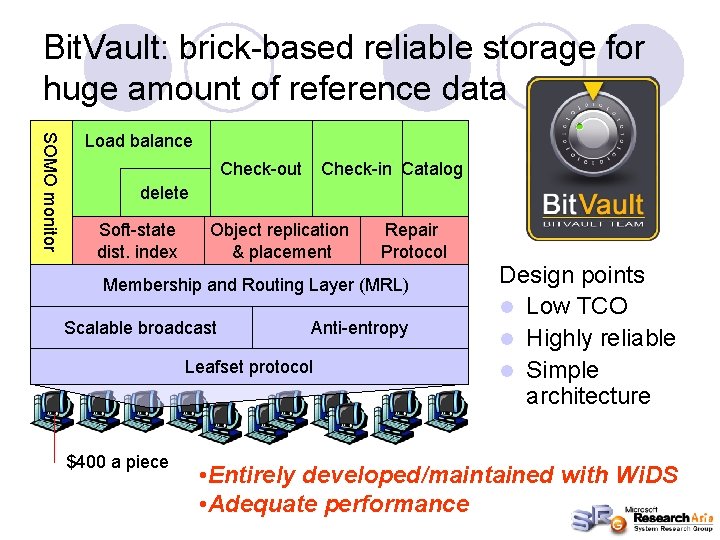

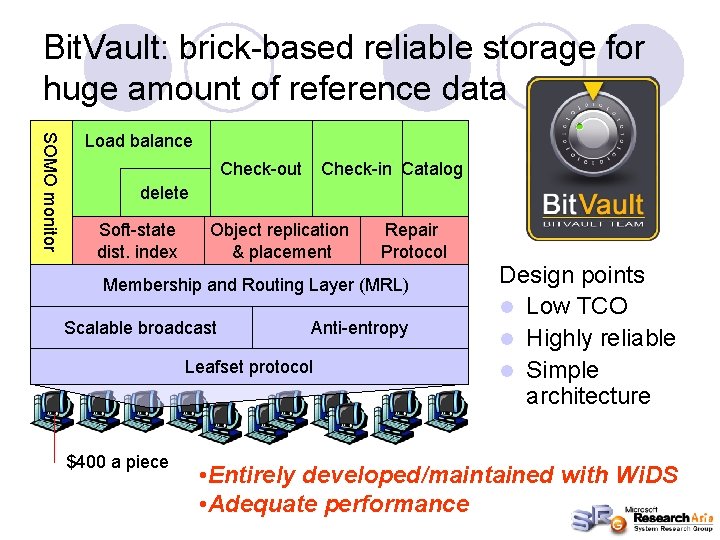

BitVault: brick-based reliable storage for huge amounts of reference data
- Components:
  - Check-in, check-out, delete, catalog
  - SOMO monitor, load balance
  - Soft-state distributed index
  - Object replication & placement, repair protocol
  - Membership and Routing Layer (MRL): scalable broadcast, anti-entropy, leafset protocol
- Bricks: $400 a piece
- Design points:
  - Low TCO
  - Highly reliable
  - Simple architecture
  - Entirely developed/maintained with WiDS
  - Adequate performance
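
BitVault lists a soft-state distributed index among its components. The sketch below illustrates the general soft-state idea only; it is not BitVault's code, and the class and method names are hypothetical. Index entries carry an expiry time and disappear unless their owner republishes them, so entries for a failed brick age out without anyone issuing an explicit delete.

```python
from dataclasses import dataclass, field


@dataclass
class SoftStateIndex:
    """Hypothetical soft-state index: object id -> bricks holding a replica.

    Each (object, brick) entry expires `ttl` time units after its last refresh.
    Live bricks periodically republish their entries; a crashed brick simply
    stops refreshing, and its entries age out on their own.
    """

    ttl: float
    # (object_id, brick_id) -> expiry time
    _expiry: dict = field(default_factory=dict)

    def publish(self, object_id: str, brick_id: str, now: float) -> None:
        # Insert or refresh an entry; refreshing just pushes the expiry forward.
        self._expiry[(object_id, brick_id)] = now + self.ttl

    def lookup(self, object_id: str, now: float) -> set:
        # Bricks whose entry for this object has not yet expired.
        return {b for (o, b), exp in self._expiry.items() if o == object_id and exp > now}

    def expire(self, now: float) -> int:
        # Garbage-collect stale entries; returns how many were dropped.
        stale = [k for k, exp in self._expiry.items() if exp <= now]
        for k in stale:
            del self._expiry[k]
        return len(stale)


if __name__ == "__main__":
    index = SoftStateIndex(ttl=10.0)
    index.publish("obj-1", "brick-A", now=0.0)
    index.publish("obj-1", "brick-B", now=0.0)
    # brick-A keeps refreshing; brick-B has crashed and never refreshes.
    index.publish("obj-1", "brick-A", now=8.0)
    print(sorted(index.lookup("obj-1", now=12.0)))
```

The design choice is that failure handling needs no extra protocol: the absence of refreshes is itself the failure signal, at the cost of periodic republishing traffic.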

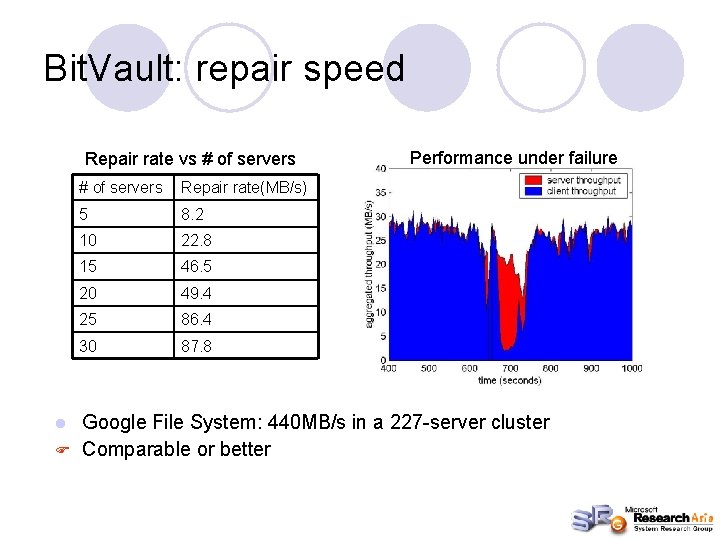

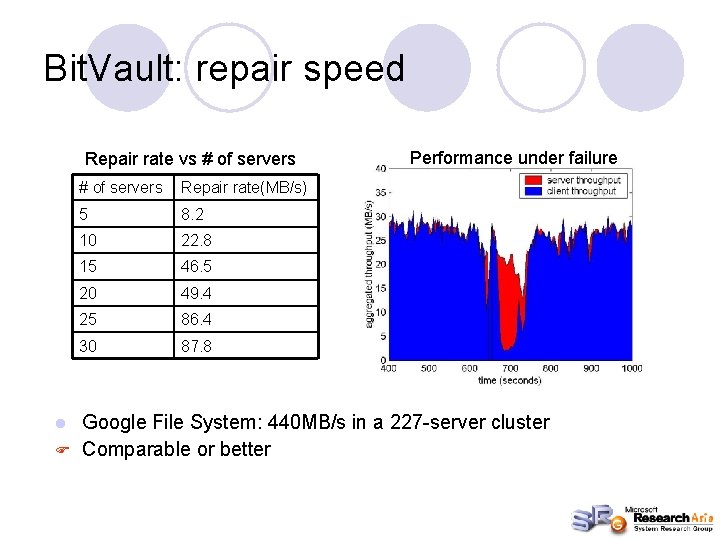

BitVault: repair speed

Repair rate vs. # of servers (performance under failure):

| # of servers | Repair rate (MB/s) |
|---|---|
| 5 | 8.2 |
| 10 | 22.8 |
| 15 | 46.5 |
| 20 | 49.4 |
| 25 | 86.4 |
| 30 | 87.8 |

- Google File System: 440 MB/s in a 227-server cluster
- BitVault: comparable or better
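
The comparison with GFS is easier to read per server. The short calculation below divides each aggregate repair rate from the slide by its server count. It assumes aggregate rates can be spread evenly across servers, which the table only partially supports, so treat it as a back-of-the-envelope check rather than a benchmark.

```python
# Repair rates from the slide: servers -> aggregate repair rate (MB/s).
BITVAULT_REPAIR = {5: 8.2, 10: 22.8, 15: 46.5, 20: 49.4, 25: 86.4, 30: 87.8}

# Google File System figure quoted on the slide: 440 MB/s with 227 servers.
GFS_RATE_MB_S, GFS_SERVERS = 440.0, 227


def per_server(rate_mb_s: float, servers: int) -> float:
    """Aggregate repair rate divided evenly across servers (MB/s per server)."""
    return rate_mb_s / servers


if __name__ == "__main__":
    for n, rate in BITVAULT_REPAIR.items():
        print(f"BitVault {n:>2} servers: {per_server(rate, n):.2f} MB/s per server")
    gfs = per_server(GFS_RATE_MB_S, GFS_SERVERS)
    print(f"GFS {GFS_SERVERS} servers: {gfs:.2f} MB/s per server")
```

At 30 servers BitVault repairs about 2.9 MB/s per server, versus roughly 1.9 MB/s per server for the GFS figure, which is consistent with "comparable or better".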

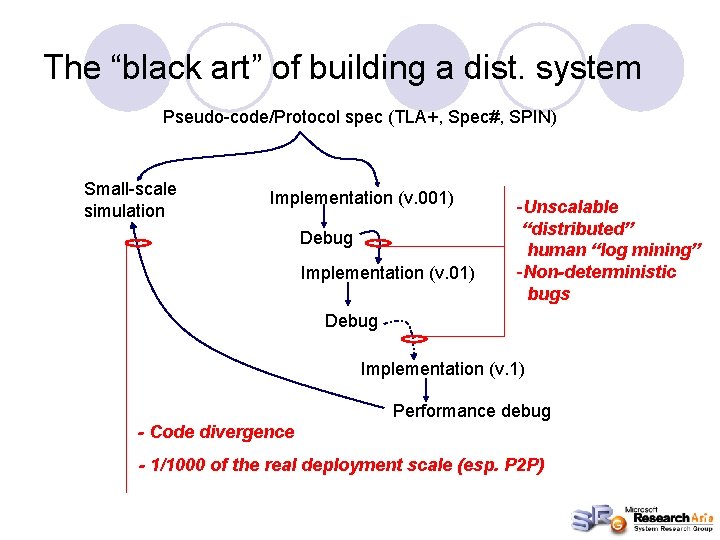

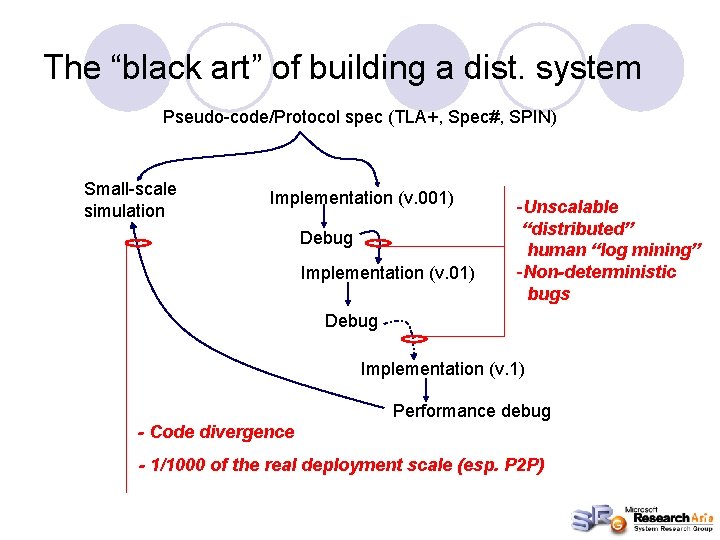

The “black art” of building a distributed system
- Pseudo-code/protocol spec (TLA+, Spec#, SPIN)
- Small-scale simulation
- Implementation (v.001)
- Debug → Implementation (v.01)
  - Unscalable “distributed” human “log mining”
  - Non-deterministic bugs
- Debug → Implementation (v.1)
- Performance debug
  - Code divergence
  - 1/1000 of the real deployment scale (esp. P2P)

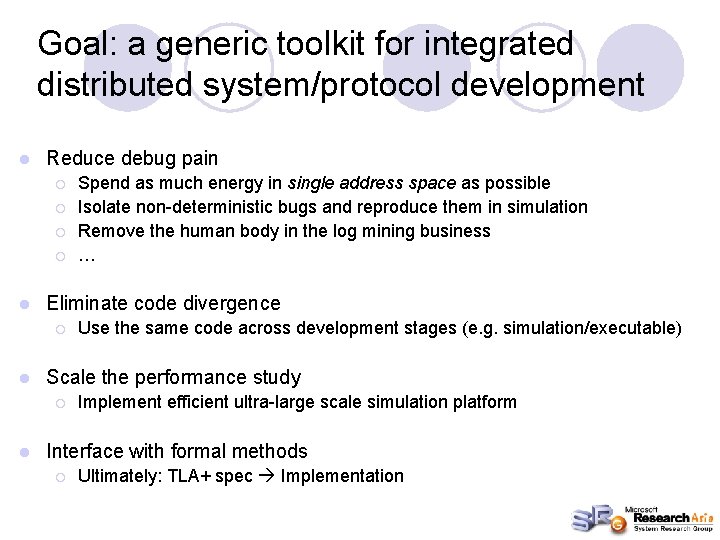

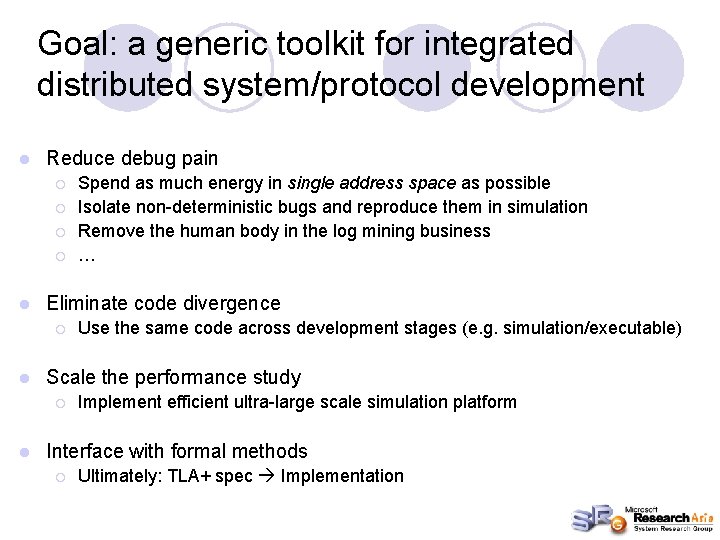

Goal: a generic toolkit for integrated distributed system/protocol development
- Reduce debug pain
  - Spend as much energy in a single address space as possible
  - Isolate non-deterministic bugs and reproduce them in simulation
  - Take the human out of the log-mining business…
- Eliminate code divergence
  - Use the same code across development stages (e.g., simulation/executable)
- Scale the performance study
  - Implement an efficient ultra-large-scale simulation platform
- Interface with formal methods
  - Ultimately: TLA+ spec → implementation
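
One common way to isolate a non-deterministic bug and reproduce it in simulation is to drive all nondeterminism (here, message delays) from a single seeded random generator, so that a failing run can be replayed exactly from its seed. The toy protocol, its invariant and the function names below are hypothetical illustrations, not WiDS code.

```python
import random


def run(seed: int, n_msgs: int = 3) -> list:
    """Simulate one sender writing values 1..n_msgs to a receiver.

    Each message gets a random network delay from a generator seeded by `seed`;
    the receiver applies writes in arrival order. Returns the values in the
    order they were applied.
    """
    rng = random.Random(seed)  # the only source of nondeterminism
    # (arrival_time, value): message i is sent at time i with delay in [0, 3).
    arrivals = [(i + rng.uniform(0.0, 3.0), i + 1) for i in range(n_msgs)]
    arrivals.sort()
    return [value for _, value in arrivals]


def violates_invariant(applied: list) -> bool:
    # Hypothetical invariant: the receiver should end with the last value sent.
    return applied[-1] != len(applied)


def find_failing_seed(max_seed: int = 1000):
    """Search seeds until one reproduces the reordering bug; None if none found."""
    for seed in range(max_seed):
        if violates_invariant(run(seed)):
            return seed
    return None


if __name__ == "__main__":
    seed = find_failing_seed()
    print("failing seed:", seed)
    if seed is not None:
        # Replaying with the same seed yields the identical (buggy) ordering.
        print("replayed order:", run(seed), run(seed))
```

Once the seed is logged, the bug is no longer "non-deterministic" from the debugger's point of view: every replay takes the same path through the code.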

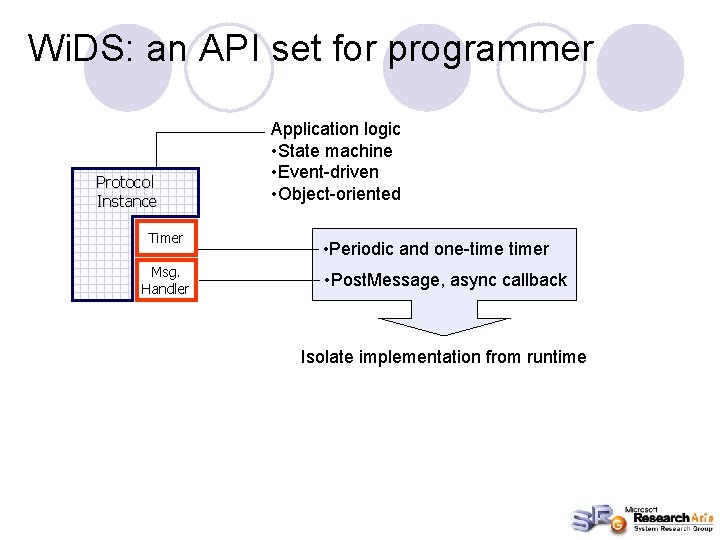

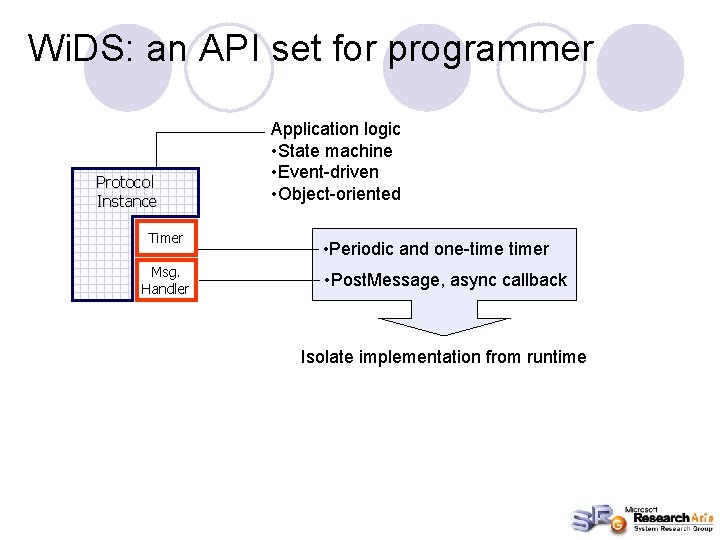

WiDS: an API set for programmers
- Protocol instance: application logic
  - State machine
  - Event-driven
  - Object-oriented
- Timer: periodic and one-time timers
- Msg. handler: PostMessage, async callback
- Isolates the implementation from the runtime
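
The programming style on this slide (an event-driven, object-oriented state machine that only touches timers and message posting) can be sketched as follows. The `Runtime` interface and all method names in it (`post_message`, `set_timer`, `on_message`, `on_timer`) are hypothetical stand-ins, not the actual WiDS API. Because the protocol sees only this interface, the same class could be handed to a simulator or to a real network layer.

```python
from abc import ABC, abstractmethod
from typing import Callable


class Runtime(ABC):
    """Hypothetical runtime interface: everything a protocol may ask of its host."""

    @abstractmethod
    def post_message(self, dest: str, msg: dict) -> None: ...

    @abstractmethod
    def set_timer(self, delay: float, callback: Callable[[], None], periodic: bool = False) -> None: ...


class HeartbeatProtocol:
    """Event-driven state machine: pings a peer periodically, counts replies."""

    def __init__(self, node_id: str, peer: str, runtime: Runtime):
        self.node_id, self.peer, self.rt = node_id, peer, runtime
        self.state = "IDLE"
        self.pongs = 0

    def start(self) -> None:
        self.state = "RUNNING"
        self.rt.set_timer(1.0, self.on_timer, periodic=True)  # periodic timer

    def on_timer(self) -> None:
        self.rt.post_message(self.peer, {"type": "PING", "src": self.node_id})

    def on_message(self, msg: dict) -> None:
        # Message handler: reply to pings, count pongs.
        if msg["type"] == "PING":
            self.rt.post_message(msg["src"], {"type": "PONG", "src": self.node_id})
        elif msg["type"] == "PONG":
            self.pongs += 1


class RecordingRuntime(Runtime):
    """Trivial runtime for unit tests: records calls instead of sending anything."""

    def __init__(self):
        self.sent, self.timers = [], []

    def post_message(self, dest, msg):
        self.sent.append((dest, msg))

    def set_timer(self, delay, callback, periodic=False):
        self.timers.append((delay, callback, periodic))


if __name__ == "__main__":
    rt = RecordingRuntime()
    node = HeartbeatProtocol("A", "B", rt)
    node.start()
    rt.timers[0][1]()  # fire the timer by hand
    node.on_message({"type": "PING", "src": "B"})
    print(rt.sent)
```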

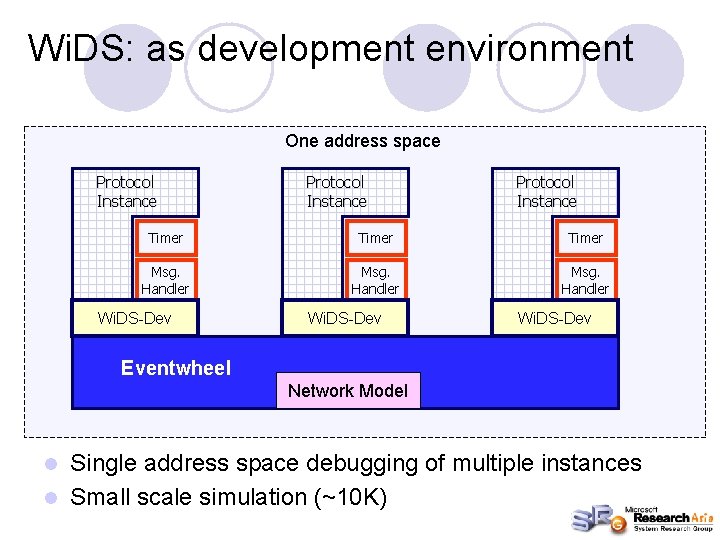

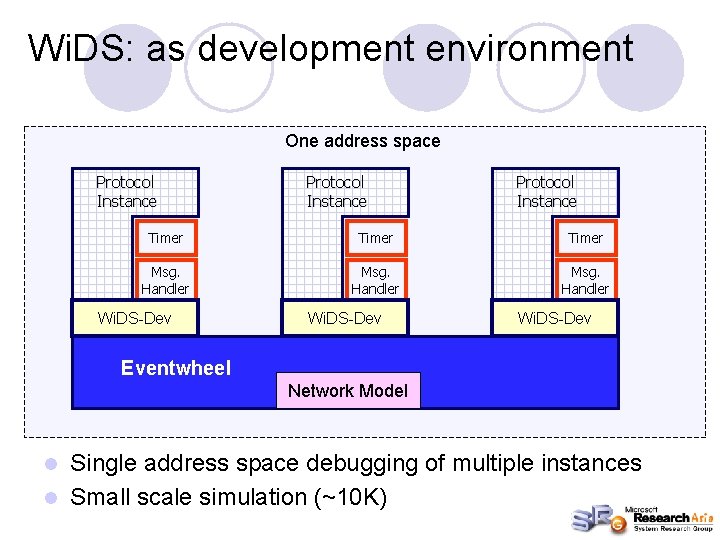

WiDS: as development environment
- One address space: protocol instances (timer, msg. handler) run on WiDS-Dev, which provides an event wheel and a network model
- Single-address-space debugging of multiple instances
- Small-scale simulation (~10K)
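
Running many protocol instances in one address space is a discrete-event simulation. The sketch below shows the general technique, with a priority queue standing in for the event wheel and a fixed one-way latency as the network model. The `Simulator` and `FloodNode` names and the flooding protocol are hypothetical illustrations, not WiDS internals.

```python
import heapq
import itertools


class Simulator:
    """Single-address-space discrete-event simulator (hypothetical sketch).

    Events are (time, seq, callback) tuples in a min-heap; `seq` breaks ties so
    runs are deterministic. The network model is a fixed one-way latency.
    """

    def __init__(self, latency: float = 0.05):
        self.now = 0.0
        self.latency = latency
        self._queue = []
        self._seq = itertools.count()
        self.nodes = {}

    def schedule(self, delay: float, callback) -> None:
        heapq.heappush(self._queue, (self.now + delay, next(self._seq), callback))

    def post_message(self, dest: int, msg) -> None:
        # Network model: deliver after a fixed latency to the destination instance.
        self.schedule(self.latency, lambda: self.nodes[dest].on_message(msg))

    def run(self) -> None:
        while self._queue:
            self.now, _, callback = heapq.heappop(self._queue)
            callback()


class FloodNode:
    """Toy protocol: forward a rumor once to both ring neighbors."""

    def __init__(self, node_id: int, n: int, sim: Simulator):
        self.id, self.n, self.sim = node_id, n, sim
        self.heard_at = None

    def on_message(self, msg) -> None:
        if self.heard_at is not None:
            return  # already seen this rumor
        self.heard_at = self.sim.now
        for nbr in ((self.id + 1) % self.n, (self.id - 1) % self.n):
            self.sim.post_message(nbr, msg)


if __name__ == "__main__":
    n = 10_000  # the slide quotes ~10K for small-scale simulation
    sim = Simulator()
    sim.nodes = {i: FloodNode(i, n, sim) for i in range(n)}
    sim.post_message(0, "rumor")
    sim.run()
    print("last node informed at t =", max(node.heard_at for node in sim.nodes.values()))
```

Because every instance lives in one process, a single debugger session can inspect all of them at any simulated instant.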

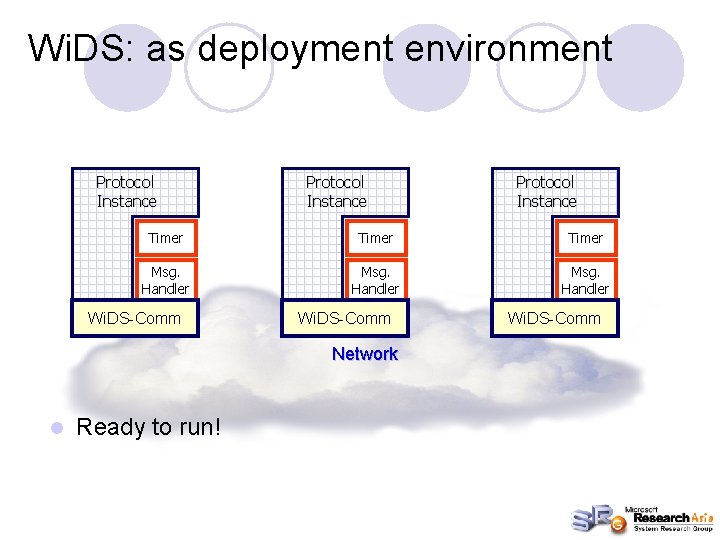

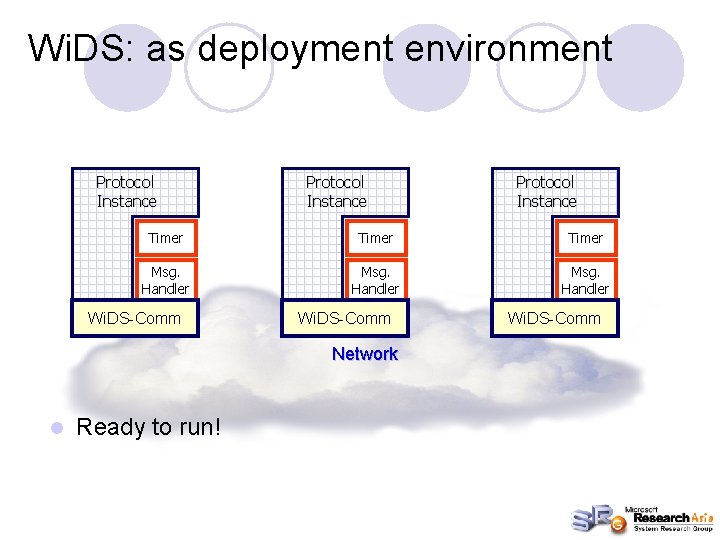

WiDS: as deployment environment
- Each protocol instance (timer, msg. handler) runs on top of WiDS-Comm, which talks to the real network
- Protocol instance: ready to run!

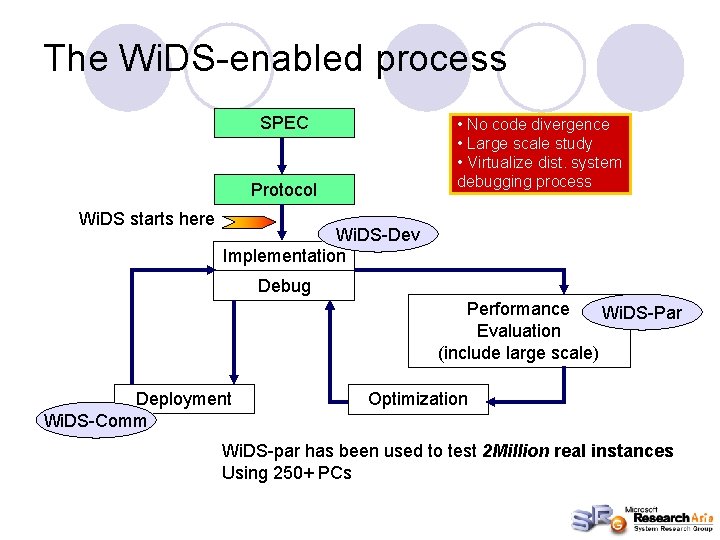

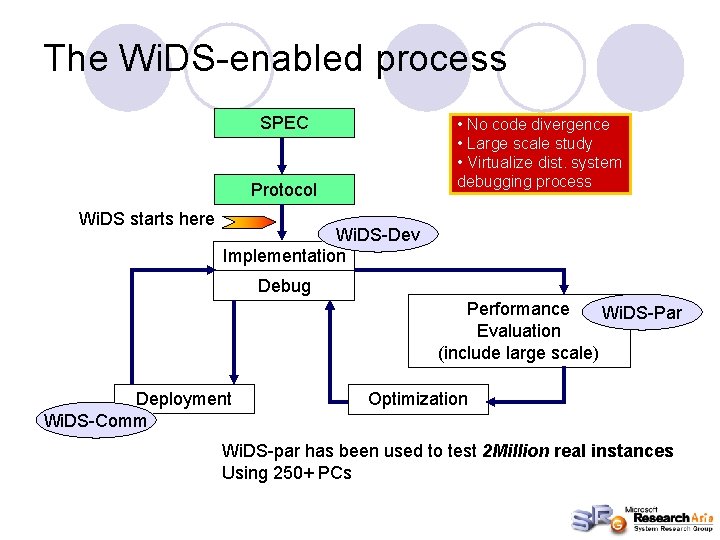

The WiDS-enabled process
- Spec → protocol (WiDS starts here)
- Implementation and debug: WiDS-Dev
- Performance evaluation (including large scale): WiDS-Par
- Deployment and optimization: WiDS-Comm
- Benefits:
  - No code divergence
  - Large-scale study
  - Virtualizes the distributed-system debugging process
- WiDS-Par has been used to test 2 million real instances using 250+ PCs

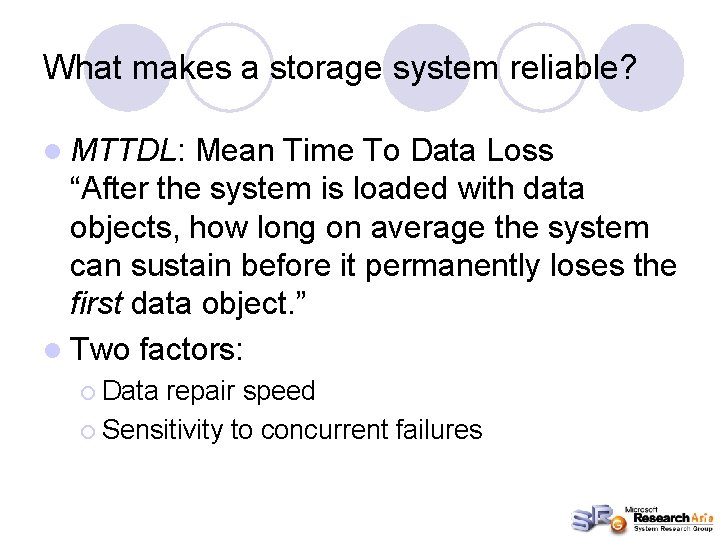

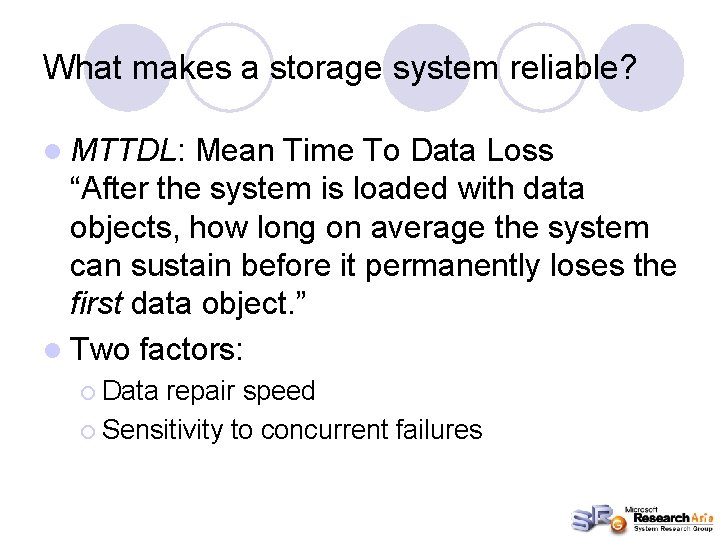

What makes a storage system reliable?
- MTTDL: Mean Time To Data Loss
  - “After the system is loaded with data objects, how long, on average, the system can sustain before it permanently loses the first data object.”
- Two factors:
  - Data repair speed
  - Sensitivity to concurrent failures
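
A textbook birth-death Markov model for a single group of k replicas shows why repair speed matters. It assumes independent, exponentially distributed failures at rate λ per replica (λ = 1/MTTF) and one repair in progress at a time at rate μ. These are simplifying assumptions, not the model used in the ICDCS'05 work. For k = 2 the model reproduces the classic mirrored-pair result MTTDL = (3λ + μ)/(2λ²), so MTTDL grows roughly linearly with repair speed.

```python
from fractions import Fraction


def mttdl(k: int, lam, mu):
    """Mean time to data loss for one replica group (birth-death Markov chain).

    State i = number of live replicas. From state i, a failure moves to i-1 at
    rate i*lam; if i < k, a repair moves to i+1 at rate mu. State 0 (no live
    replica) is absorbing. Returns the expected time to absorption from state k.

    Solved by forward substitution: T_i = a_i + b_i * T_{i+1}, with T_0 = 0.
    """
    a, b = 0, 0  # coefficients for T_0 = 0
    for i in range(1, k):
        rate_out = i * lam + mu
        denom = rate_out - i * lam * b
        a, b = (1 + i * lam * a) / denom, mu / denom
    # State k: only failures leave it (no repair beyond full replication).
    return (1 + k * lam * a) / (k * lam * (1 - b))


if __name__ == "__main__":
    lam = Fraction(1, 1000)  # MTTF = 1000 days, as on the later comparison slide
    for mu in (Fraction(1), Fraction(10)):  # repair takes 1 day vs. 0.1 day
        print(f"k=3, mu={mu}/day: MTTDL = {float(mttdl(3, lam, mu)):.3g} days")
```

Passing `Fraction` values keeps the arithmetic exact, which makes the k = 2 closed form easy to check.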

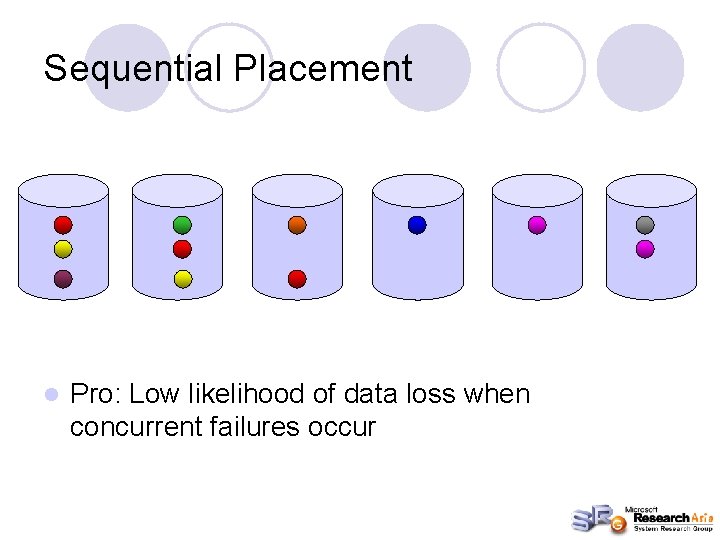

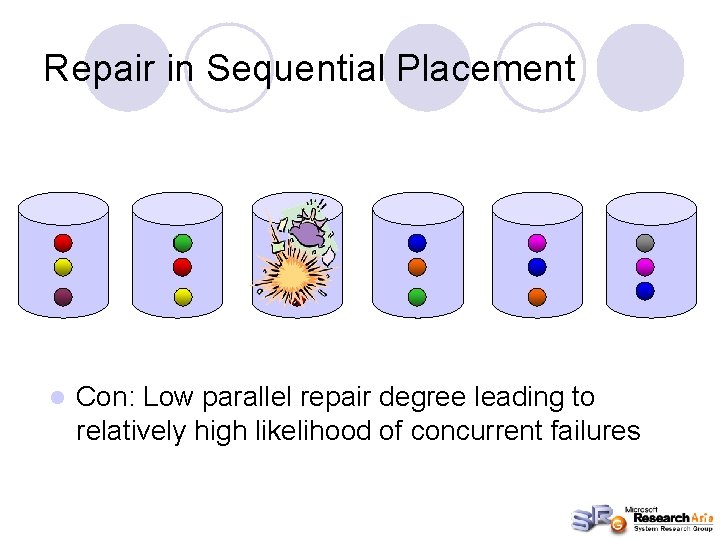

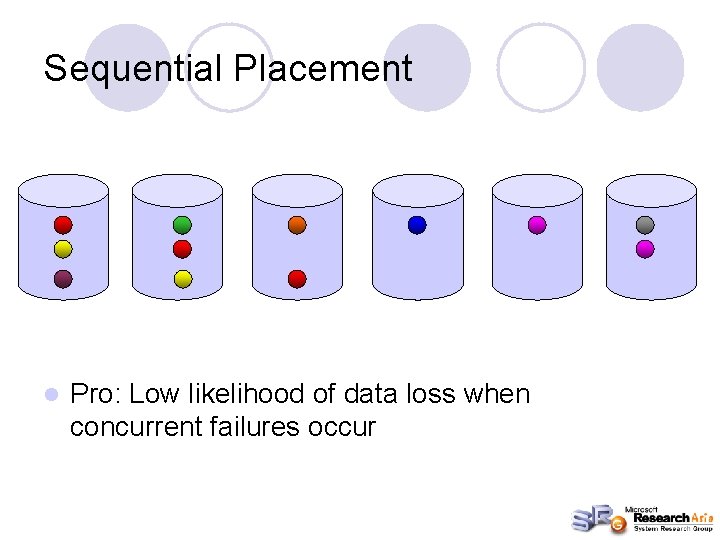

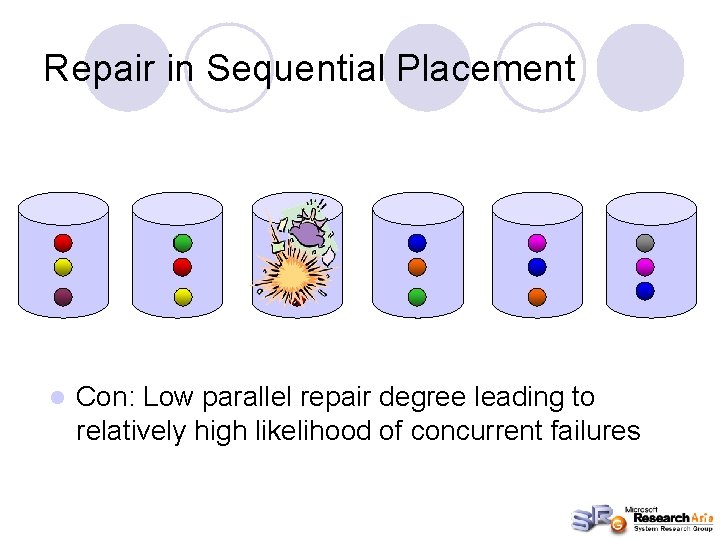

Sequential Placement
- Pro: low likelihood of data loss when concurrent failures occur

Repair in Sequential Placement
- Con: low parallel repair degree, so repair is slow and the likelihood of concurrent failures during repair is relatively high

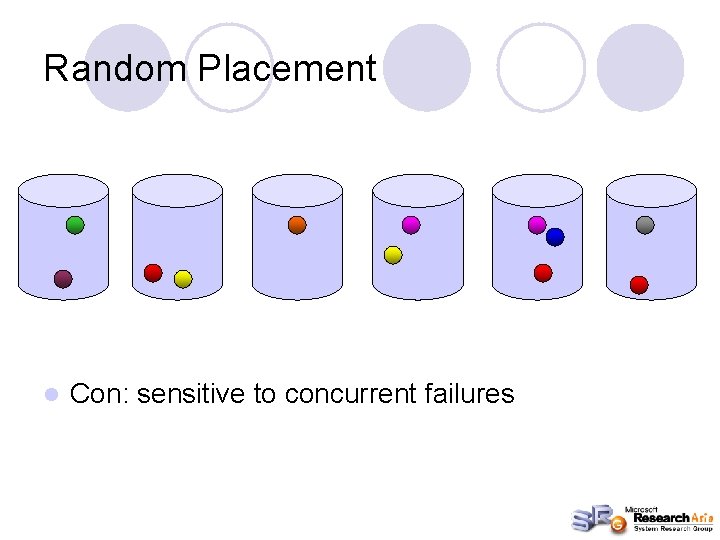

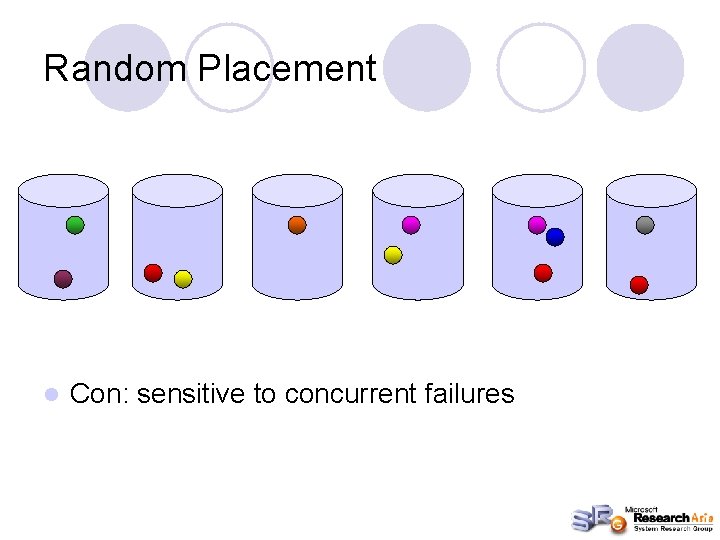

Random Placement
- Con: sensitive to concurrent failures

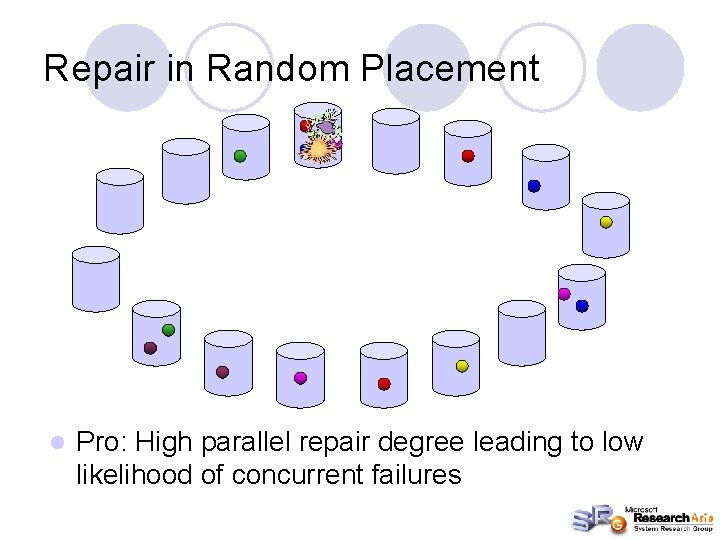

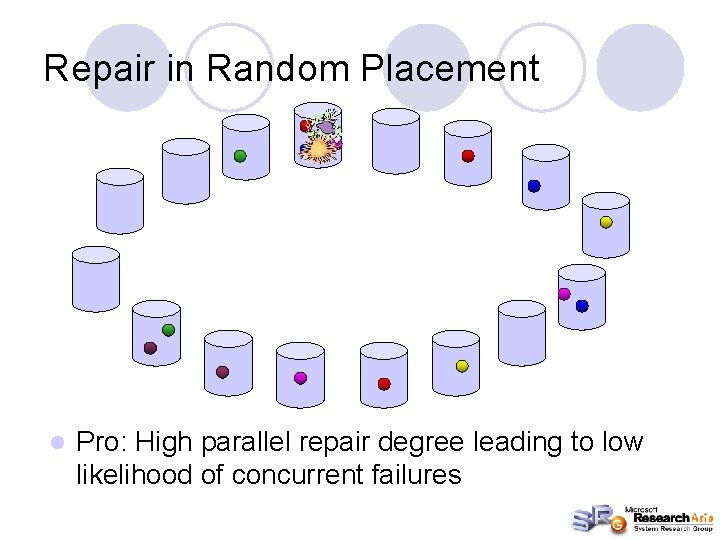

Repair in Random Placement
- Pro: high parallel repair degree, so repair is fast and the likelihood of concurrent failures during repair is low
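
The trade-off between the two placements can be made concrete by counting. The sketch below places each object's r replicas either on r consecutive nodes of a ring (sequential) or on r random distinct nodes (random). It then reports (1) how many distinct peers share data with a failed node, i.e. the parallel repair degree, and (2) how many distinct replica sets exist, each of which is an r-node failure pattern that would lose data. The ring layout, node count and object count are hypothetical parameters chosen for illustration.

```python
import random


def place(n_nodes: int, n_objects: int, r: int, policy: str, seed: int = 0):
    """Return a list of replica sets (frozensets of node ids), one per object."""
    rng = random.Random(seed)
    sets = []
    for obj in range(n_objects):
        if policy == "sequential":
            start = obj % n_nodes  # r consecutive nodes on a ring
            sets.append(frozenset((start + j) % n_nodes for j in range(r)))
        elif policy == "random":
            sets.append(frozenset(rng.sample(range(n_nodes), r)))
        else:
            raise ValueError(policy)
    return sets


def repair_degree(sets, failed: int) -> int:
    """Distinct surviving nodes that share objects with `failed` (repair sources)."""
    peers = set()
    for s in sets:
        if failed in s:
            peers |= s - {failed}
    return len(peers)


def loss_combinations(sets) -> int:
    """Distinct replica sets: each is an r-node failure pattern that loses data."""
    return len(set(sets))


if __name__ == "__main__":
    n, m, r = 100, 10_000, 3
    for policy in ("sequential", "random"):
        sets = place(n, m, r, policy)
        print(policy, "repair degree:", repair_degree(sets, failed=0),
              "loss combinations:", loss_combinations(sets))
```

With many small objects per node, random placement turns almost every r-node combination into a fatal one, while sequential placement keeps that count at n but leaves only 2(r - 1) repair sources per failed node.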

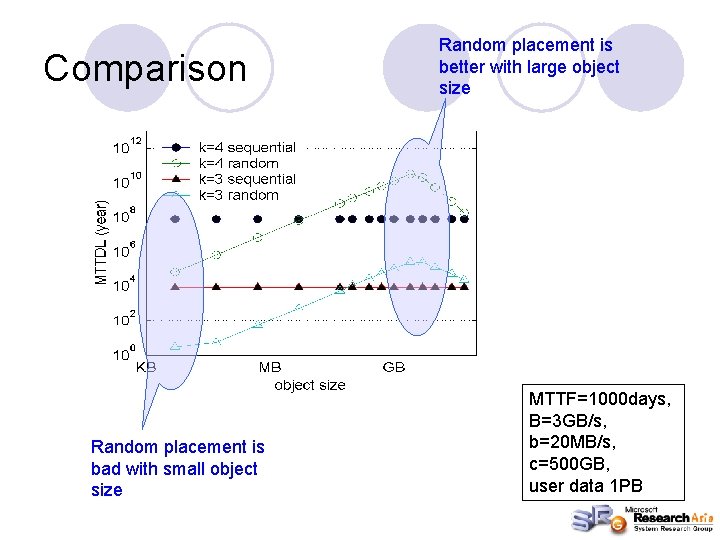

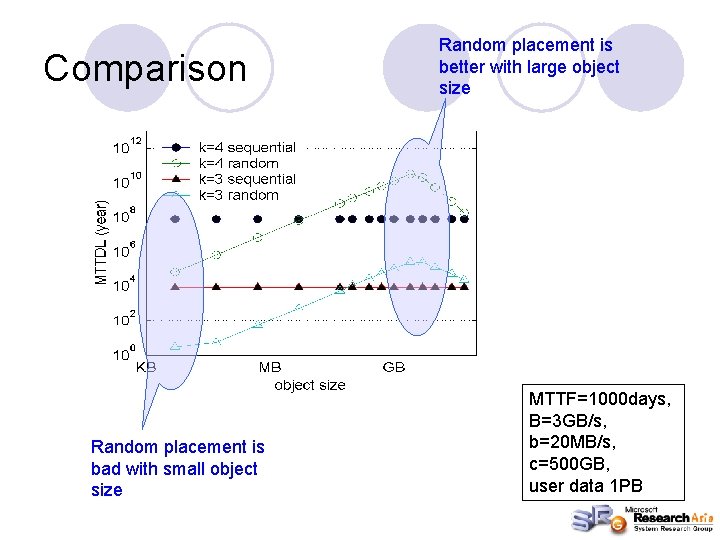

Comparison
- Random placement is bad with small object size
- Random placement is better with large object size
- Parameters: MTTF = 1000 days, B = 3 GB/s, b = 20 MB/s, c = 500 GB, user data = 1 PB

ICDCS’05 result summary
- Established the first framework of object placement’s impact on reliability
- Upshot:
  - Spread your replicas as widely as you can
  - Up to the point when BW is fully utilized for repair
    - More than that will hurt reliability
- Core algorithm adopted by many MSN large-scale storage projects/products
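
The "up to the point when BW is fully utilized" rule can be checked with the comparison slide's parameters. The sketch assumes B = 3 GB/s is the aggregate bandwidth the system can devote to repair, b = 20 MB/s is one node's repair bandwidth, and c = 500 GB is the data held by a failed node. It uses decimal units and models bandwidth only. Under those assumptions, spreading a node's replicas over more than B/b = 150 peers no longer shortens repair, while it keeps enlarging the set of concurrent-failure combinations that lose data.

```python
# Parameters from the comparison slide (decimal units, MB and seconds).
B_MB_S = 3_000.0   # B = 3 GB/s: assumed aggregate repair bandwidth
b_MB_S = 20.0      # b = 20 MB/s: assumed per-node repair bandwidth
C_MB = 500_000.0   # c = 500 GB: data held by one failed node


def repair_seconds(degree: int) -> float:
    """Time to re-replicate a failed node's data from `degree` source nodes.

    Sources work in parallel, but the total is capped by the aggregate bandwidth B.
    """
    return C_MB / min(degree * b_MB_S, B_MB_S)


def saturation_degree() -> int:
    """Smallest repair degree at which the aggregate bandwidth B is fully used."""
    return int(B_MB_S // b_MB_S)


if __name__ == "__main__":
    for d in (1, 10, 150, 600):
        print(f"degree {d:>3}: {repair_seconds(d) / 3600:.2f} h")
    print("saturation degree:", saturation_degree())
```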

Ongoing work:
- More on object placement:
  - We are looking at systems with extreme longevity
  - Heterogeneous capacity and other dynamics have not been factored in
- Improving WiDS further:
  - It is still so hard to debug!!
  - Idea:
    - Replay facility to take logs from deployment
    - Time-travel inside simulation
    - Use a model and an invariant checker to identify fault location and path
  - See SOSP’05 poster

Maze (with the Beijing Univ. Maze team)

Maze File Sharing System
- The largest in China
  - On CERNET, popular with college students
  - Population: 1.4 million registered accounts; 30,000+ online users
  - More than 200 million files
  - More than 13 TB (!) transferred every day
- Completely developed, operated and deployed by an academic team
  - Logs added since the collaboration with MSRA last year
  - Enable detailed study from all angles

A Rare System for Academic Studies
- WORLDS’04: system architecture
- IPTPS’05: the “free-rider” problem
- AEPP’05: statistics of shared objects and traffic patterns
- Incentives to promote sharing → collusion and cheating
- Trust and fairness
- Can we defeat collusion?

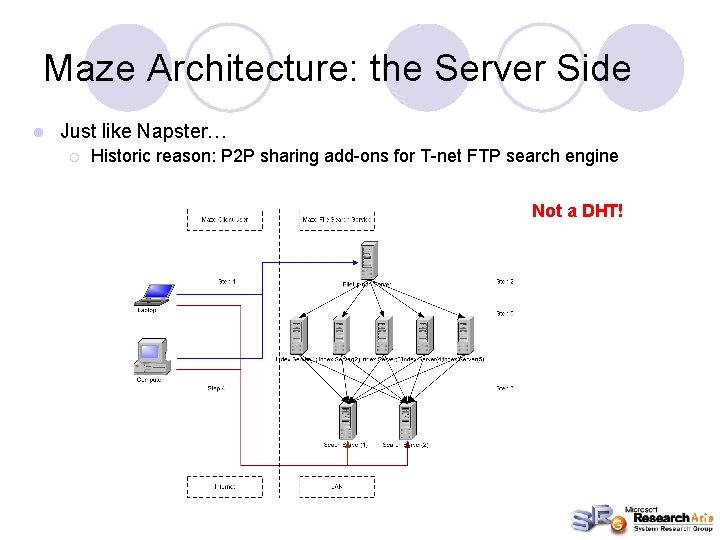

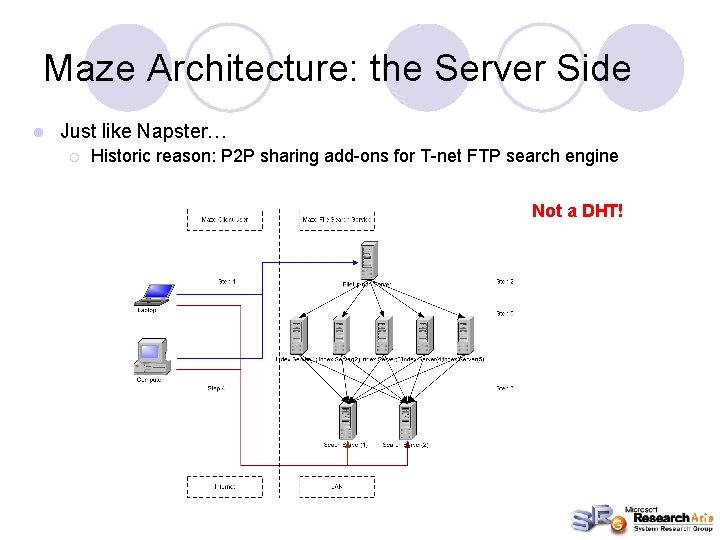

Maze Architecture: the Server Side
- Just like Napster…
  - Historic reason: P2P sharing add-ons for the T-net FTP search engine
- Not a DHT!

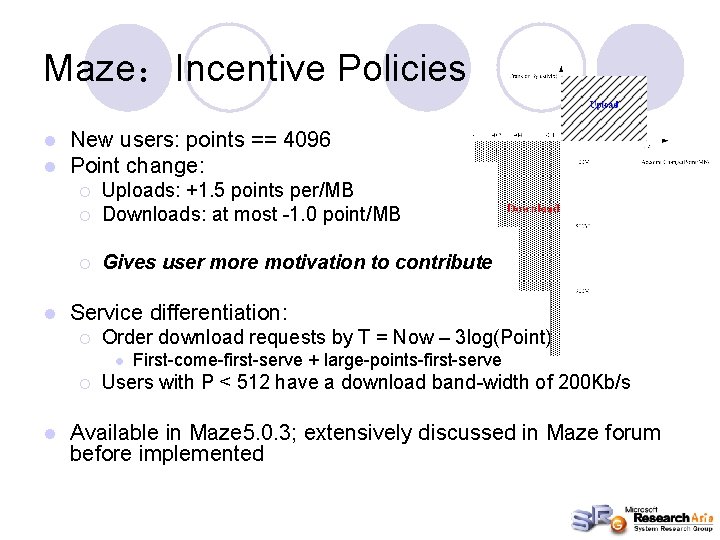

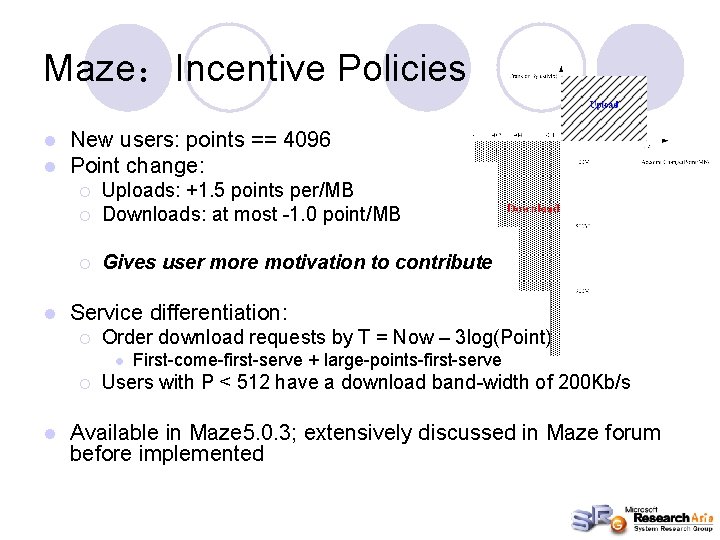

Maze: Incentive Policies
- New users: points = 4096
- Point change:
  - Uploads: +1.5 points per MB
  - Downloads: at most -1.0 point per MB
  - Gives users more motivation to contribute
- Service differentiation:
  - Order download requests by T = Now - 3 log(Point)
    - First-come-first-serve + large-points-first-serve
  - Users with P < 512 have a download bandwidth of 200 Kb/s
- Available in Maze 5.0.3; extensively discussed in the Maze forum before being implemented
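
The point rules and request ordering can be sketched as follows. The numbers (4096 initial points, +1.5/MB uploads, up to -1.0/MB downloads, T = Now - 3 log(Point), 200 Kb/s below 512 points) come from the slide. The class names, charging exactly the 1.0/MB maximum, using the natural logarithm, and clamping points to at least 1 before taking the log are all assumptions of this sketch, not Maze's actual code.

```python
import heapq
import math

INITIAL_POINTS = 4096
UPLOAD_REWARD_PER_MB = 1.5
DOWNLOAD_COST_PER_MB = 1.0       # slide says "at most"; we charge the maximum (assumption)
LOW_POINTS_THRESHOLD = 512
LOW_POINTS_BANDWIDTH_KBPS = 200  # "200 Kb/s" as written on the slide


class User:
    def __init__(self, name: str):
        self.name = name
        self.points = float(INITIAL_POINTS)

    def uploaded(self, mb: float) -> None:
        self.points += UPLOAD_REWARD_PER_MB * mb

    def downloaded(self, mb: float) -> None:
        self.points -= DOWNLOAD_COST_PER_MB * mb

    def bandwidth_cap_kbps(self):
        # Users below the threshold are throttled; None means no cap from this rule.
        return LOW_POINTS_BANDWIDTH_KBPS if self.points < LOW_POINTS_THRESHOLD else None


def priority(request_time: float, user: User) -> float:
    """T = Now - 3 log(Point); smaller T is served first.

    Natural log and the clamp to >= 1 point are assumptions of this sketch.
    """
    return request_time - 3 * math.log(max(user.points, 1.0))


class UploadQueue:
    """Serve pending download requests in increasing T order."""

    def __init__(self):
        self._heap = []

    def request(self, request_time: float, user: User) -> None:
        heapq.heappush(self._heap, (priority(request_time, user), request_time, user.name))

    def next_user(self) -> str:
        return heapq.heappop(self._heap)[2]


if __name__ == "__main__":
    alice, bob = User("alice"), User("bob")
    alice.uploaded(100)    # 4096 + 150 = 4246 points
    bob.downloaded(3700)   # 4096 - 3700 = 396 points, below the 512 threshold
    q = UploadQueue()
    q.request(10.0, bob)
    q.request(10.5, alice)  # arrives later, but has far more points
    print(q.next_user(), bob.bandwidth_cap_kbps())
```

With equal points, earlier requests still come first, so the rule blends first-come-first-serve with large-points-first-serve; under the natural-log assumption, each factor of e in points is worth 3 time units of waiting.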

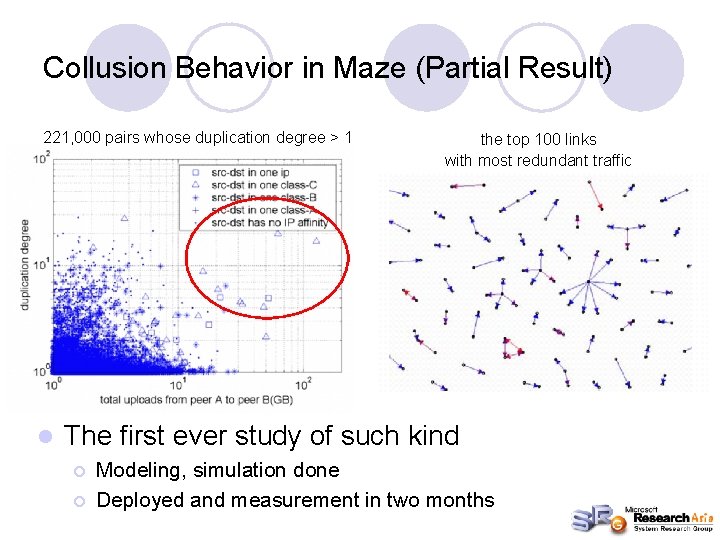

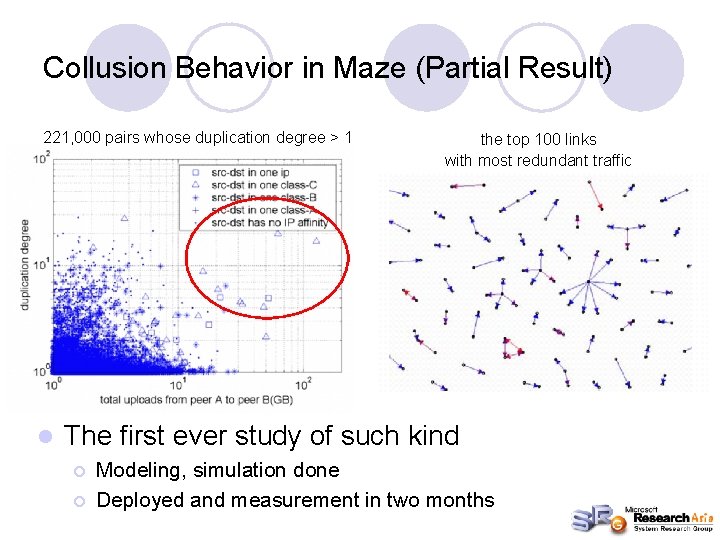

Collusion Behavior in Maze (Partial Result)
- 221,000 pairs whose duplication degree > 1
- The top 100 links with the most redundant traffic
- The first-ever study of its kind
  - Modeling and simulation done
  - Deployment and measurement in two months
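
The slide does not define "duplication degree". One plausible reading, used here purely as an assumption, is the number of times the same file moved over the same (uploader, downloader) pair, a pattern consistent with colluders re-downloading to farm upload points. The sketch counts that per pair from a hypothetical transfer-log format and ranks pairs by redundant traffic.

```python
from collections import Counter, defaultdict


def redundant_traffic(log):
    """log: iterable of (uploader, downloader, file_id, size_mb) records (hypothetical format).

    Returns {(uploader, downloader): (duplication_degree, redundant_mb)}, where
    duplication_degree is the max number of times any one file moved over that
    pair, and redundant_mb counts every repeat transfer beyond the first.
    """
    counts = Counter()
    sizes = {}
    for up, down, fid, mb in log:
        counts[(up, down, fid)] += 1
        sizes[(up, down, fid)] = mb
    per_pair = defaultdict(lambda: [0, 0.0])
    for (up, down, fid), n in counts.items():
        stats = per_pair[(up, down)]
        stats[0] = max(stats[0], n)
        stats[1] += (n - 1) * sizes[(up, down, fid)]
    return {pair: tuple(v) for pair, v in per_pair.items()}


def top_links(log, k: int = 100):
    """Pairs with duplication degree > 1, sorted by redundant MB (largest first)."""
    stats = redundant_traffic(log)
    suspects = [(pair, s) for pair, s in stats.items() if s[0] > 1]
    return sorted(suspects, key=lambda item: item[1][1], reverse=True)[:k]


if __name__ == "__main__":
    sample = [("a", "b", "f1", 100.0), ("a", "b", "f1", 100.0), ("c", "d", "f2", 50.0)]
    print(top_links(sample))
```

This only flags candidates: legitimate re-downloads also produce repeats, so a real study would need the modeling and measurement the slide mentions.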

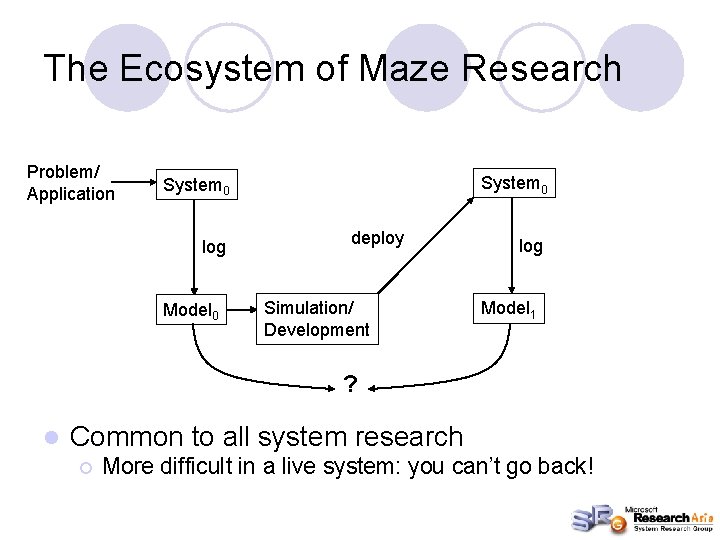

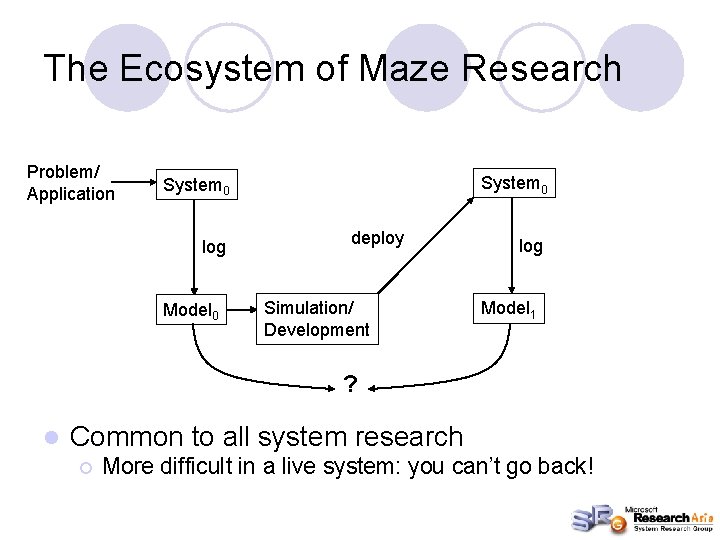

The Ecosystem of Maze Research
- [Figure: research cycle linking Problem/Application, System 0, logs, Model 0, Simulation/Development, deployment, Model 1, and “?”]
- Common to all system research
  - More difficult in a live system: you can’t go back!

Grid and P 2 P Computing

Knowing the Gap is Often More Important (Or you risk falling off the cliff!)
- The gap is often a manifestation of LAW (e.g., the speed of light)
- The gap between wide-area (Grid) and cluster/HPC can be just as wide as that between HPC and sensor networks
- Many impossibility theorems exist
  - Negative results are not a bad thing
  - The bad thing is that many are unaware of them!
  - Examples:
    - The impossibility of consensus in an asynchronous network with even one crash failure (FLP)
    - The impossibility of achieving consistency, availability and partition resilience simultaneously (CAP)

What is Grid? Or, the Problem that I see
- Historically associated with HPC
  - Dressed up when running short on gas
- Problematically borrowing concepts from an environment governed by a different law
  - Internet as a grand JVM: unlikely
  - Need to extract common system infrastructure after gaining enough application experience
- Sharing and collaboration are labels applied without careful investigation
  - Where/what is the 80-20 sweet spot?
- Likewise, adding the P2P spin should be done carefully

What is Grid? (cont.)
- Grid <= HPC + Web Service
  - HPC isn’t done yet; check Google
- Why?
  - You need HPC to run the apps, or store the data
  - Services have clear boundaries
  - Interoperable protocols bind the services

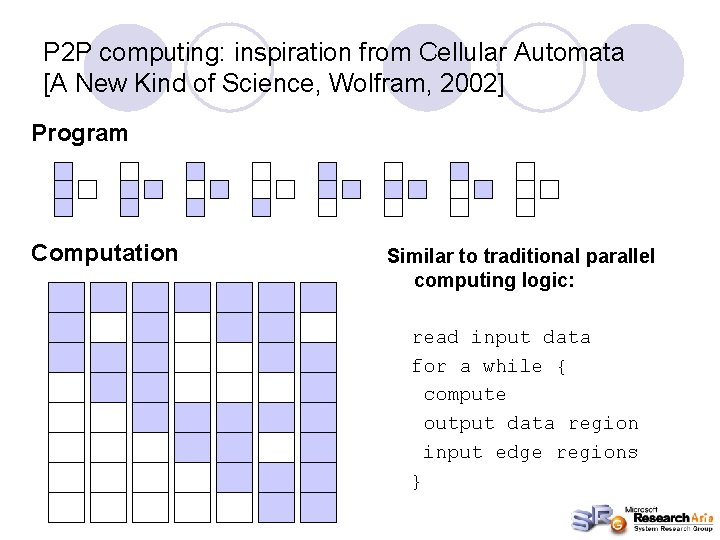

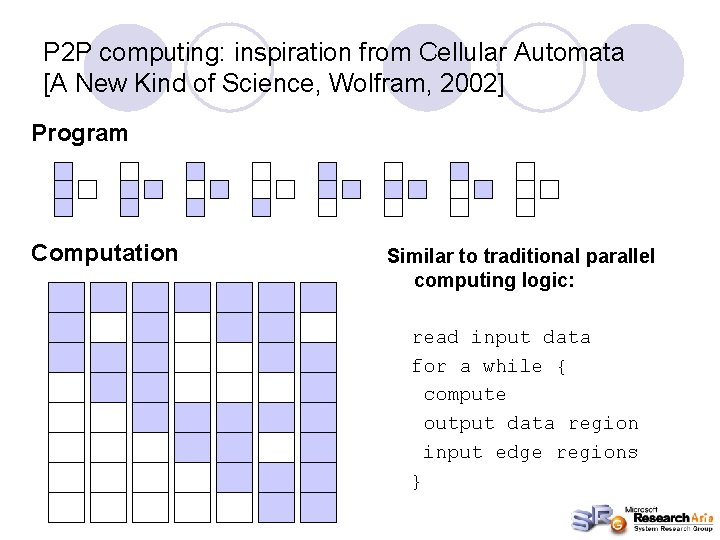

P2P computing: inspiration from Cellular Automata [A New Kind of Science, Wolfram, 2002]
- Program/computation similar to traditional parallel computing logic:

      read input data
      for a while {
          compute output data region
          input edge regions
      }
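
The "compute region, then exchange edge regions" loop can be made runnable with a concrete rule. The sketch below uses Conway's Game of Life, a 2D cellular automaton chosen here only as an example rule, on a torus. It splits the grid into horizontal strips as if each were a separate process and exchanges one-row halos every step; the partitioned run should match an unpartitioned run exactly.

```python
def life_step(grid):
    """One Game of Life step on a full torus (reference, unpartitioned)."""
    rows, cols = len(grid), len(grid[0])
    return [[_next_cell(grid, r, c, rows, cols) for c in range(cols)] for r in range(rows)]


def _next_cell(g, r, c, rows, cols):
    # Count the 8 neighbors with wrap-around, then apply the Life rule.
    n = sum(g[(r + dr) % rows][(c + dc) % cols]
            for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0))
    return 1 if n == 3 or (g[r][c] and n == 2) else 0


def partitioned_run(grid, n_parts, steps):
    """Run `steps` steps with the grid split into `n_parts` horizontal strips.

    Each strip acts as a "process": per step it receives its neighbors' edge
    rows (the halo), computes its own region, and keeps only its own rows.
    """
    rows, cols = len(grid), len(grid[0])
    bounds = [(i * rows // n_parts, (i + 1) * rows // n_parts) for i in range(n_parts)]
    strips = [[row[:] for row in grid[lo:hi]] for lo, hi in bounds]  # read input data
    for _ in range(steps):
        # Exchange edge regions: each strip gets the row above and the row below it.
        halos = [(strips[i - 1][-1], strips[(i + 1) % n_parts][0]) for i in range(n_parts)]
        new_strips = []
        for strip, (above, below) in zip(strips, halos):
            padded = [above] + strip + [below]  # local region plus one-row halo
            h = len(padded)
            new_strips.append([[_next_cell(padded, r, c, h, cols) for c in range(cols)]
                               for r in range(1, h - 1)])
        strips = new_strips
    return [row for strip in strips for row in strip]


if __name__ == "__main__":
    glider = [[0] * 8 for _ in range(8)]
    for r, c in ((0, 1), (1, 2), (2, 0), (2, 1), (2, 2)):
        glider[r][c] = 1
    reference = glider
    for _ in range(12):
        reference = life_step(reference)
    print(partitioned_run(glider, n_parts=4, steps=12) == reference)
```

Each strip communicates only two rows per step while computing its whole region, which is the property the feasibility argument relies on.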

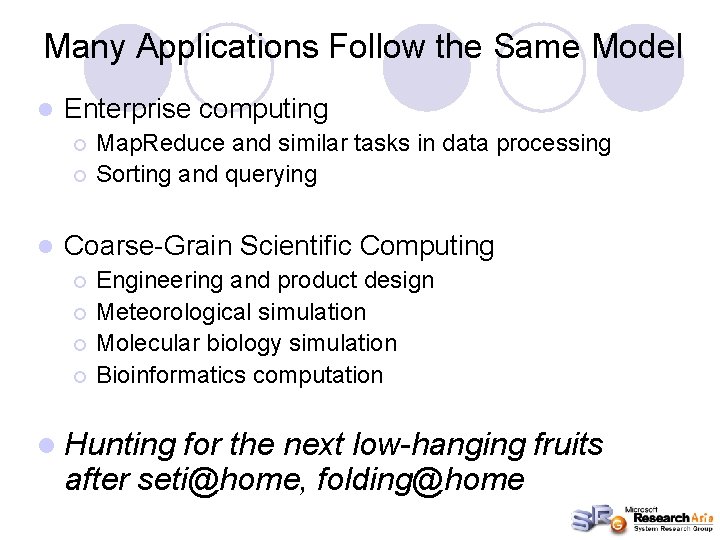

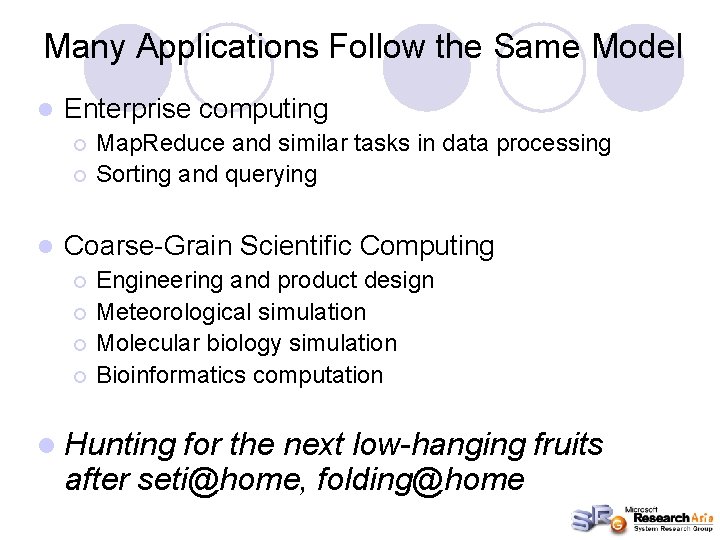

Many Applications Follow the Same Model
- Enterprise computing
  - MapReduce and similar tasks in data processing
  - Sorting and querying
- Coarse-grain scientific computing
  - Engineering and product design
  - Meteorological simulation
  - Molecular biology simulation
  - Bioinformatics computation
- Hunting for the next low-hanging fruits after seti@home, folding@home

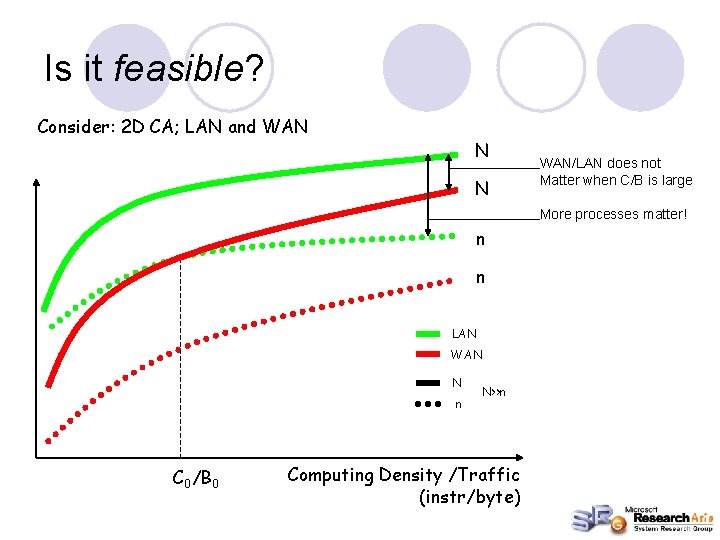

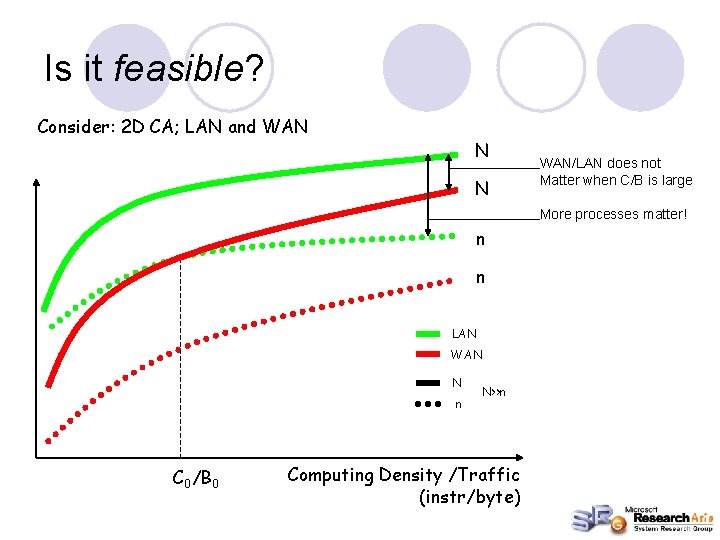

Is it feasible? Consider a 2D CA on a LAN and on a WAN
- [Figure: number of processes (N on the WAN, n on the LAN, N >> n) vs. computing density/traffic C/B (instr/byte), with a threshold at C0/B0]
- WAN/LAN does not matter when C/B is large
- Then more processes matter!
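
The feasibility argument is a ratio. For a 2D CA, a process owning an s×s region does work proportional to s² but sends only about 4s edge cells per step, so computing density (instructions per byte of traffic) grows with s. The sketch below estimates per-step efficiency on a LAN and on a WAN. All hardware numbers and the four-edge halo model are hypothetical assumptions chosen for illustration, not measurements from the talk, and communication is assumed not to overlap with computation.

```python
from dataclasses import dataclass


@dataclass
class Network:
    name: str
    bandwidth_bytes_s: float  # assumed per-process bandwidth
    latency_s: float          # assumed latency per halo exchange


def step_efficiency(side: int, net: Network, instr_per_cell: float = 1_000.0,
                    bytes_per_cell: float = 8.0, cpu_instr_s: float = 1e9) -> float:
    """Fraction of a step spent computing when a process owns a side x side region.

    Compute ~ side**2 cells; traffic ~ 4 * side edge cells (one halo row per side).
    Communication is assumed not to overlap with computation.
    """
    compute_s = side * side * instr_per_cell / cpu_instr_s
    comm_s = net.latency_s + 4 * side * bytes_per_cell / net.bandwidth_bytes_s
    return compute_s / (compute_s + comm_s)


def computing_density(side: int, instr_per_cell: float = 1_000.0,
                      bytes_per_cell: float = 8.0) -> float:
    """Instructions executed per byte of halo traffic (the slide's C/B axis)."""
    return side * side * instr_per_cell / (4 * side * bytes_per_cell)


if __name__ == "__main__":
    lan = Network("LAN", bandwidth_bytes_s=100e6, latency_s=0.0001)
    wan = Network("WAN", bandwidth_bytes_s=1e6, latency_s=0.1)
    for side in (100, 1_000, 10_000):
        print(f"side={side:>6}  C/B={computing_density(side):>9.0f} instr/byte  "
              f"LAN={step_efficiency(side, lan):.3f}  WAN={step_efficiency(side, wan):.3f}")
```

Once per-process efficiency is high on both networks, total throughput depends mainly on how many processes can be enlisted. That is the "more processes matter" point: a WAN can offer N >> n machines.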

What’s the point of Grid, or not-Grid?
- Copying ready-made concepts across contexts is easy
- But it often does not work
  - Context is governed by the laws of physics
- Need to start building and testing applications
  - Then we can define what “Grid OS” is truly about

Thanks