What do you know when you know the test results?

- Slides: 40

What do you know when you know the test results? The meanings of educational assessments
Annual Conference of the International Association for Educational Assessment, Cambridge, UK: September 10th, 2008.
www.dylanwiliam.net

Overview of presentation
- What have we learned about assessment?
- The importance of (un)reliability
- Evolving conceptions of validity
- (Mis)uses of assessments for educational accountability
- Some prospects for the future

Reliability vs. consistency
- Classical measures of reliability
  - are meaningful only for groups
  - are designed for continuous measures
- Scores versus grades
  - Scores suffer from spurious accuracy
  - Grades suffer from spurious precision
- Classification consistency
  - A more technically appropriate measure of the reliability of assessment
  - Closer to the intuitive meaning of reliability

Validity
- Traditional definition: a property of assessments
  - A test is valid to the extent that it assesses what it purports to assess
- Key properties (content validity)
  - Relevance
  - Representativeness
- “Trinitarian” views of validity
  - Content validity
  - Criterion-related validity
    - Concurrent validity
    - Predictive validity
  - Construct validity

Predictive validity ~0.4
- Probability of selecting best candidate with coin flip: 0.50
- Probability of selecting best candidate with predictor: 0.60
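The slide does not say how its 0.60 figure was derived. As a rough check, the sketch below assumes two candidates whose predictor and outcome scores are bivariate normal with correlation 0.4, and asks how often the candidate with the higher predictor score is also the better performer. The function names are illustrative, and the normality assumption is ours, not the slide's.

```python
import math
import random

def prob_pick_better(r: float) -> float:
    """Closed form for P(higher predictor score => higher outcome)
    when two candidates are drawn from a bivariate normal with correlation r."""
    return 0.5 + math.asin(r) / math.pi

def simulate(r: float, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo check of the closed form (assumed model, not the slide's data)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        pairs = []
        for _ in range(2):
            x = rng.gauss(0, 1)                                   # predictor score
            y = r * x + math.sqrt(1 - r * r) * rng.gauss(0, 1)    # outcome
            pairs.append((x, y))
        (x1, y1), (x2, y2) = pairs
        if (x1 > x2) == (y1 > y2):
            hits += 1
    return hits / trials

print(round(prob_pick_better(0.4), 3))  # 0.631
print(round(simulate(0.4), 3))
```

Under these assumptions the predictor picks the better of two candidates a little over 60% of the time, in the same region as the slide's figure and only modestly better than a coin flip.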

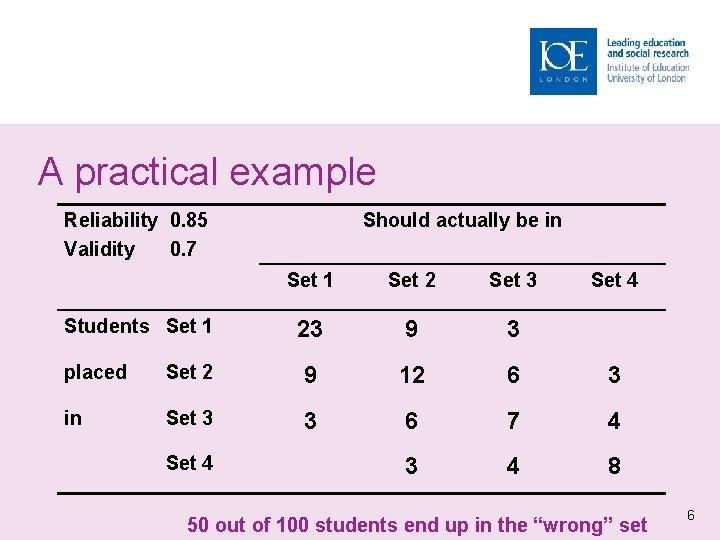

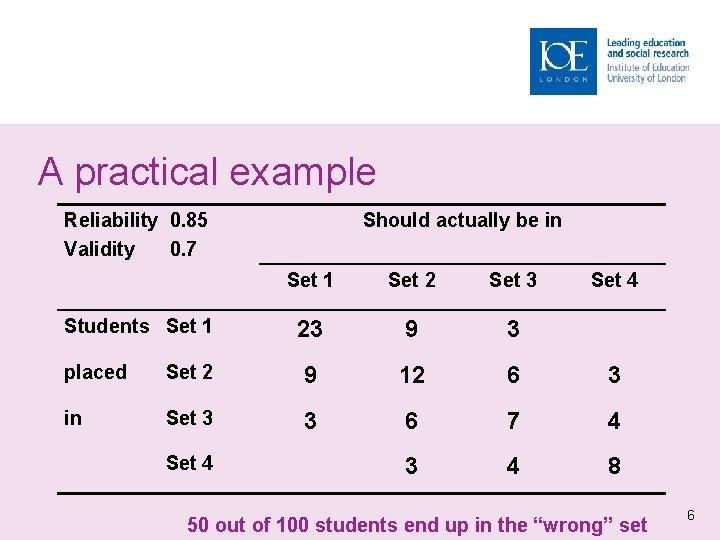

A practical example
Reliability: 0.85; validity: 0.7

| Students placed in | Should actually be in Set 1 | Set 2 | Set 3 | Set 4 |
|---|---|---|---|---|
| Set 1 | 23 | 9 | 3 | |
| Set 2 | 9 | 12 | 6 | 3 |
| Set 3 | 3 | 6 | 7 | 4 |
| Set 4 | | 3 | 4 | 8 |

50 out of 100 students end up in the “wrong” set
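A simulation gives a feel for where a figure like "50 out of 100" comes from. This sketch assumes that "validity 0.7" means a correlation of 0.7 between the test score and whatever quality defines the right set, that both are normally distributed, and that the sets have the sizes implied by the slide's counts (35, 30, 20, 15). All of these are our assumptions, and the function names are illustrative.

```python
import math
import random

SET_SIZES = [35, 30, 20, 15]  # per 100 students, read off the slide's counts

def assign_sets(scores, sizes):
    """Rank students from highest to lowest score and fill the sets in order."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    total = sum(sizes)
    bounds, running = [], 0
    for s in sizes:
        running += s
        bounds.append(round(running / total * len(scores)))  # cumulative cut points
    placed = [0] * len(scores)
    set_idx = 0
    for rank, student in enumerate(order):
        while rank >= bounds[set_idx]:
            set_idx += 1
        placed[student] = set_idx + 1
    return placed

def misplacement_rate(r, n=20_000, seed=1):
    """Share of students whose test-based set differs from their 'true' set."""
    rng = random.Random(seed)
    true = [rng.gauss(0, 1) for _ in range(n)]
    test = [r * t + math.sqrt(1 - r * r) * rng.gauss(0, 1) for t in true]
    right = assign_sets(true, SET_SIZES)
    actual = assign_sets(test, SET_SIZES)
    return sum(a != b for a, b in zip(right, actual)) / n

print(misplacement_rate(0.7))
```

Under these assumptions roughly half of the simulated students land in a different set from the one their true standing would give them.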

A made-up—but real—problem
Consider the following scenario:
- We wish to select candidates for a medical degree programme
- We have an assessment that predicts performance on the programme with a correlation of 0.71
- We have 1000 applicants for 100 places

The logical solution
- Choose the 100 students with the highest score on the predictor.

But what if…
Correlation = 0.71
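One way to probe the "logical solution" is to ask how many of the eventual top 100 performers it would actually admit. The sketch below assumes normally distributed predictor and performance scores with correlation 0.71. The model and the function name are our assumptions; the 1000 applicants and 100 places come from the scenario.

```python
import math
import random

def selection_overlap(r=0.71, applicants=1000, places=100, seed=2):
    """Count how many of the true top performers are among those selected
    by taking the highest predictor scores (simulated, assumed model)."""
    rng = random.Random(seed)
    performance = [rng.gauss(0, 1) for _ in range(applicants)]
    predictor = [r * p + math.sqrt(1 - r * r) * rng.gauss(0, 1) for p in performance]
    by_predictor = sorted(range(applicants), key=lambda i: predictor[i], reverse=True)
    by_performance = sorted(range(applicants), key=lambda i: performance[i], reverse=True)
    selected = set(by_predictor[:places])
    best = set(by_performance[:places])
    return len(selected & best)

print(selection_overlap())  # number of the true top 100 who were admitted
```

Even with a correlation this high, a substantial share of the applicants who would have performed best are not selected in the simulation.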

Effects of hyperselectivity
If there are equal numbers of males and females, and males and females have equal mean scores, but the standard deviation of the male scores is 20% greater than the standard deviation of the female scores:

| Percent of cohort selected | Percent of those selected who are female |
|---|---|
| 50 | 50 |
| 40 | 49 |
| 30 | 47 |
| 20 | 44 |
| 10 | 39 |
| 5 | 35 |
| 2 | 31 |
| 1 | 28 |
| 0.1 | 11 |
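The pattern can be reproduced approximately under an explicit model. This sketch assumes both groups' scores are normally distributed with the same mean and a male standard deviation 1.2 times the female one, finds the cut score that admits a given fraction of the whole cohort, and reports the female share of those above it. The slide does not state its distributional assumptions, so the output should be close to its figures but need not match them exactly.

```python
from statistics import NormalDist

def female_share(fraction_selected, sd_ratio=1.2):
    """Share of females among those above the cut score (assumed normal model)."""
    female = NormalDist(0, 1)
    male = NormalDist(0, sd_ratio)

    def selected(cut):
        # fraction of the whole (half female, half male) cohort above the cut
        return 0.5 * (1 - female.cdf(cut)) + 0.5 * (1 - male.cdf(cut))

    lo, hi = -10.0, 10.0
    for _ in range(100):              # bisection on the cut score
        mid = (lo + hi) / 2
        if selected(mid) > fraction_selected:
            lo = mid                  # too many selected: raise the cut
        else:
            hi = mid
    cut = (lo + hi) / 2
    f = 1 - female.cdf(cut)
    m = 1 - male.cdf(cut)
    return f / (f + m)

for pct in [50, 40, 30, 20, 10, 5, 2, 1, 0.1]:
    print(pct, round(100 * female_share(pct / 100)))
```

The more selective the cut, the more the group with the wider spread dominates, even though the two means are identical.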

Validity is a property of inferences, not of assessments
- “One validates, not a test, but an interpretation of data arising from a specified procedure” (Cronbach, 1971; emphasis in original)
- The phrase “A valid test” is therefore a category error (like “A happy rock”)
  - No such thing as a valid (or indeed invalid) assessment
  - No such thing as a biased assessment
- Reliability is a pre-requisite for validity
  - Talking about “reliability and validity” is like talking about “swallows and birds”
  - Validity includes reliability

Modern conceptions of validity
“Validity is an integrative evaluative judgment of the degree to which empirical evidence and theoretical rationales support the adequacy and appropriateness of inferences and actions based on test scores or other modes of assessment” (Messick, 1989, p. 13)
- Validity subsumes all aspects of assessment quality
  - Reliability
  - Representativeness (content coverage)
  - Relevance
  - Predictiveness
- But not impact (Popham: right concern, wrong concept)

Consequential validity? No such thing!

As has been stressed several times already, it is not that adverse social consequences of test use render the use invalid, but, rather, that adverse social consequences should not be attributable to any source of test invalidity such as construct-irrelevant variance. If the adverse social consequences are empirically traceable to sources of test invalidity, then the validity of the test use is jeopardized. If the social consequences cannot be so traced—or if the validation process can discount sources of test invalidity as the likely determinants, or at least render them less plausible—then the validity of the test use is not overturned. Adverse social consequences associated with valid test interpretation and use may implicate the attributes validly assessed, to be sure, as they function under the existing social conditions of the applied setting, but they are not in themselves indicative of invalidity. (Messick, 1989, pp. 88-89)

Threats to validity
- Inadequate reliability
- Construct-irrelevant variance
  - Differences in scores are caused, in part, by differences not relevant to the construct of interest
  - The assessment assesses things it shouldn’t
  - The assessment is “too big”
- Construct under-representation
  - Differences in the construct are not reflected in scores
  - The assessment doesn’t assess things it should
  - The assessment is “too small”

With clear construct definition all of these are technical—not value—issues
But they interact strongly…

Some practical applications

School effectiveness
- Do differences in student achievement outcomes support inferences about school quality?
- Key issues:
  - Construct-irrelevant variance
    - Differences in prior achievement
  - Construct under-representation
    - Systematic exclusion of important areas of achievement

Construct-irrelevant variance


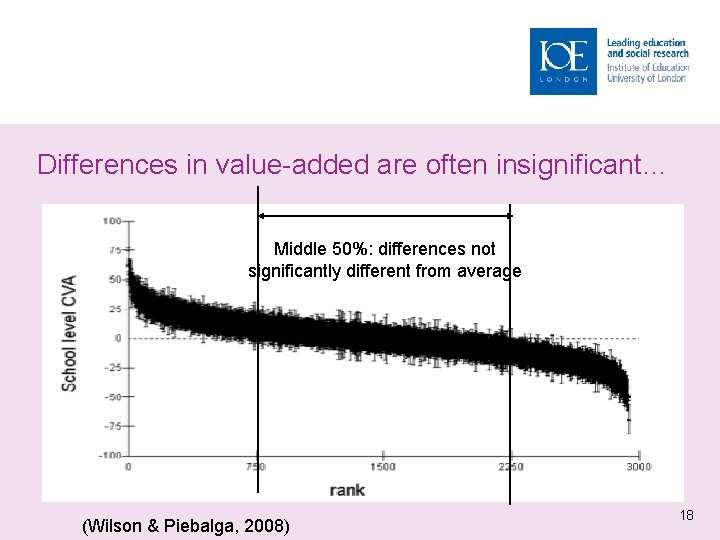

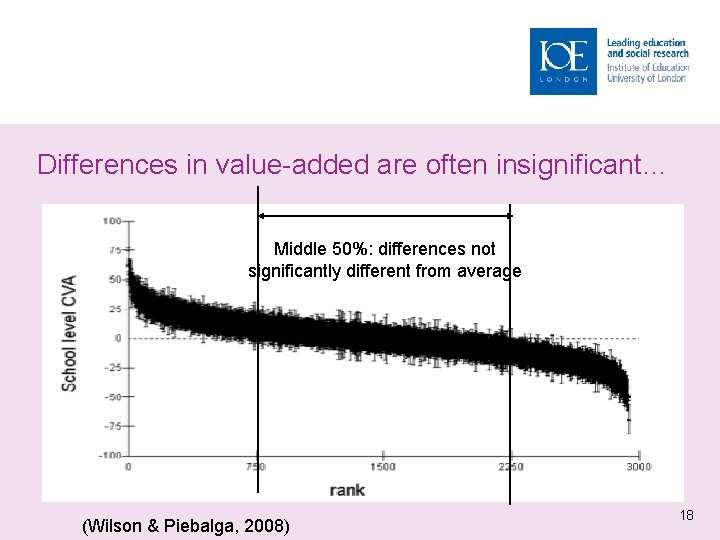

Differences in value-added are often insignificant…
Middle 50%: not significantly different from average (Wilson & Piebalga, 2008)

…are usually small…
In England:
- 7% of the variability in secondary school examination scores is attributable to the school
- 93% of the variability in secondary school examination scores has nothing to do with the school
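To put the 7% in more familiar units, the short calculation below treats "variability" as variance, which is our reading and not stated on the slide. It converts the school share into a standard deviation of school effects expressed in student-level standard deviations. The variable names are illustrative.

```python
import math

school_share = 0.07                      # share of score variance attributed to schools
school_sd = math.sqrt(school_share)      # SD of school effects, in student SD units

print(round(school_sd, 2))  # 0.26
```

On this reading, attending a school one standard deviation above average in effectiveness is associated with a shift of about a quarter of a standard deviation in a student's score.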

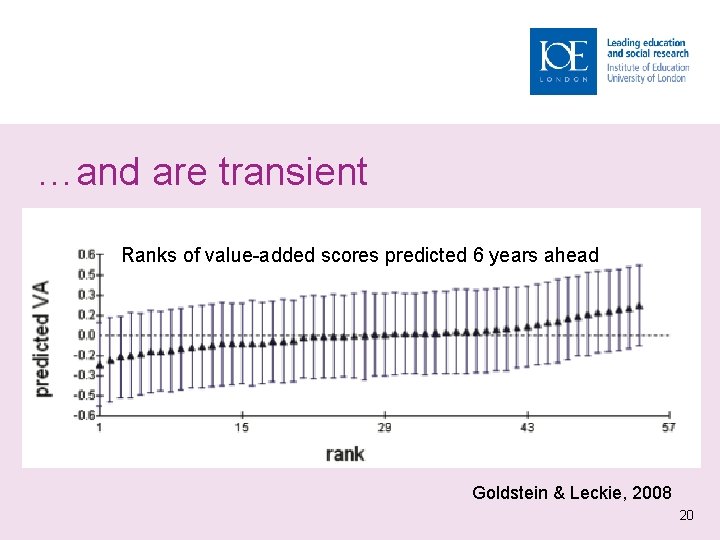

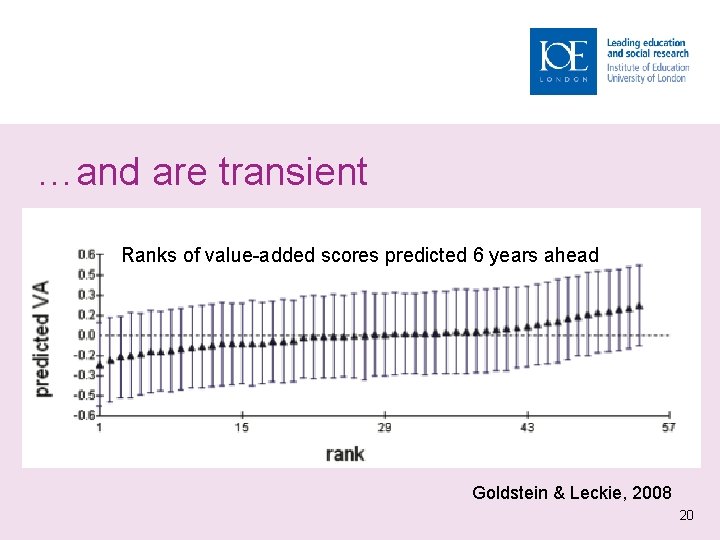

…and are transient
Ranks of value-added scores predicted 6 years ahead (Goldstein & Leckie, 2008)

Construct under-representation

Learning is slower than usually assumed
860 + 570 = ?
Source: Leverhulme Numeracy Research Programme

…so the overlap between cohorts is large
The spread of achievement within each cohort is greater than generally assumed

…but is made worse by the standard procedures of test design
Consider the fate of an item that all 7th grade students answer incorrectly and all 8th grade students answer correctly
- It will not be included in any 7th grade test because it fails to discriminate between 7th graders
- It will not be included in any 8th grade test because it fails to discriminate between 8th graders

Conclusion: any item that really tells you what students are learning will not be included in a standardized test
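The argument can be made concrete with a common item statistic. The sketch below uses an upper-lower discrimination index (proportion correct among the highest-scoring students minus proportion correct among the lowest-scoring), which is one conventional choice and not necessarily what any particular test developer uses. The student totals are hypothetical data made up for illustration.

```python
def discrimination_index(item_correct, total_scores, tail=0.27):
    """Proportion correct in the top-scoring group minus the bottom-scoring group."""
    n = max(1, int(tail * len(total_scores)))
    order = sorted(range(len(total_scores)), key=lambda i: total_scores[i])
    bottom, top = order[:n], order[-n:]
    p_top = sum(item_correct[i] for i in top) / n
    p_bottom = sum(item_correct[i] for i in bottom) / n
    return p_top - p_bottom

# Hypothetical total test scores for ten students in each grade
grade7_totals = list(range(10, 20))
grade8_totals = list(range(20, 30))

# The item from the slide: every 7th grader wrong, every 8th grader right
grade7_item = [0] * 10
grade8_item = [1] * 10

print(discrimination_index(grade7_item, grade7_totals))  # 0.0
print(discrimination_index(grade8_item, grade8_totals))  # 0.0
print(discrimination_index(grade7_item + grade8_item,
                           grade7_totals + grade8_totals))  # 1.0
```

Within either grade the item has zero discrimination and would be dropped, yet across the two grades it separates students perfectly.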

The social consequences of inadequate assessments

The Macnamara Fallacy
The first step is to measure whatever can be easily measured. This is OK as far as it goes. The second step is to disregard that which can’t easily be measured or to give it an arbitrary quantitative value. This is artificial and misleading. The third step is to presume that what can’t be measured easily really isn’t important. This is blindness. The fourth step is to say that what can’t be easily measured really doesn’t exist. This is suicide. (Handy, 1994, p. 219)

Goodhart’s law (Campbell’s law)
All performance indicators lose their meaning when adopted as policy targets:
- Inflation and money supply
- Railtrack’s performance targets
- National Health Service waiting lists
- National or provincial school achievement targets

The clearer you are about what you want, the more likely you are to get it, but the less likely it is to mean anything

Effects of narrow assessment
- Incentives to teach to the test
  - Focus on some subjects at the expense of others
  - Focus on some aspects of a subject at the expense of others
  - Focus on some students at the expense of others (“bubble” students)
- Consequences
  - Learning that is
    - Narrow
    - Shallow
    - Transient

Written examinations
… they have occasioned and made well nigh imperative the use of mechanical and rote methods of teaching; they have occasioned cramming and the most vicious habits of study; they have caused much of the overpressure charged upon schools, some of which is real; they have tempted both teachers and pupils to dishonesty; and last but not least, they have permitted a mechanical method of school supervision. (White, 1888, pp. 517-518)

The Lake Wobegon effect revisited
“All the women are strong, all the men are good-looking, and all the children are above average.” Garrison Keillor

Achievement of English 16-year-olds


What does it all mean?
- Reliability requires random sampling from the domain of interest
- Increasing reliability requires increasing the size of the sample
- Using teacher assessment in certification is attractive:
  - Increases reliability (increased test time)
  - Increases validity (addresses aspects of construct under-representation)
- But problematic
  - Lack of trust (“Fox guarding the hen house”)
  - Problems of biased inferences (construct-irrelevant variance)
  - Can introduce new kinds of construct under-representation (“banking” models of assessment)
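The link between reliability and the size of the sample can be sketched with the Spearman-Brown prophecy formula. The slide does not name this formula; it is a standard classical-test-theory result that assumes the added material is parallel to the existing test. The starting reliability of 0.85 is borrowed from the earlier setting example, and the function names are illustrative.

```python
def spearman_brown(reliability, length_factor):
    """Predicted reliability when a test is lengthened by length_factor
    with parallel material (Spearman-Brown prophecy formula)."""
    return length_factor * reliability / (1 + (length_factor - 1) * reliability)

def length_factor_needed(reliability, target):
    """How many times longer the test must be to reach the target reliability."""
    return target * (1 - reliability) / (reliability * (1 - target))

print(round(spearman_brown(0.85, 2), 3))           # 0.919
print(round(length_factor_needed(0.85, 0.95), 2))  # 3.35
```

Under these assumptions, doubling the assessment raises reliability from 0.85 to about 0.92, and reaching 0.95 needs more than three times as much assessment. That is the kind of increase in testing time that the slide suggests teacher assessment could supply.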

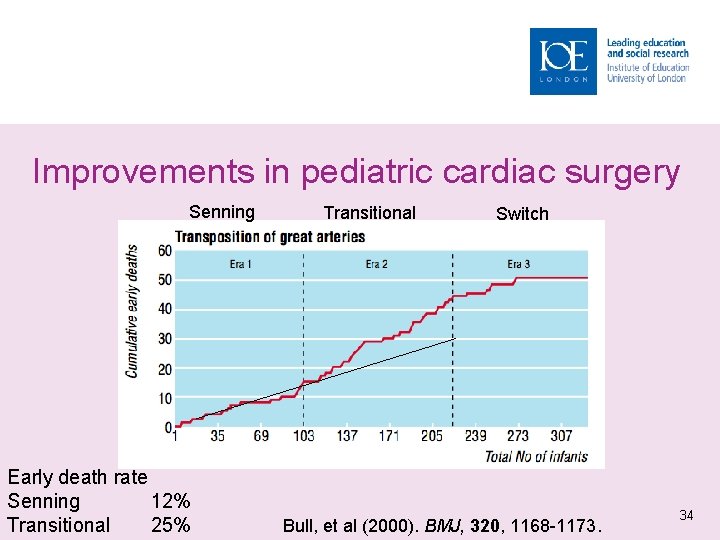

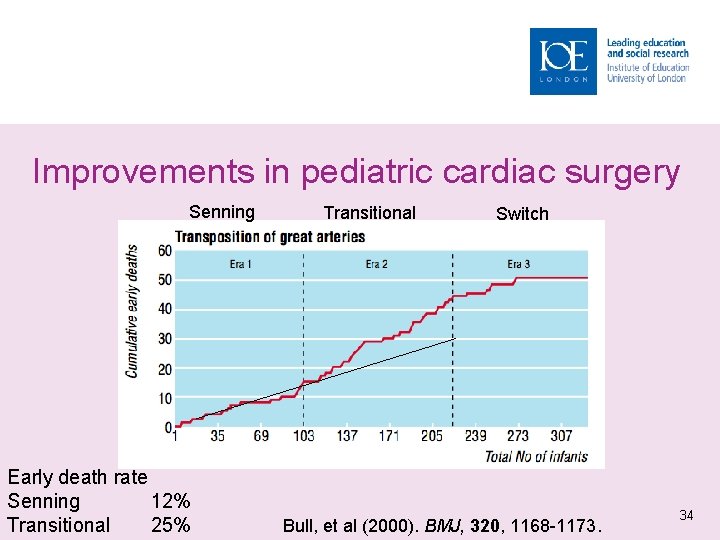

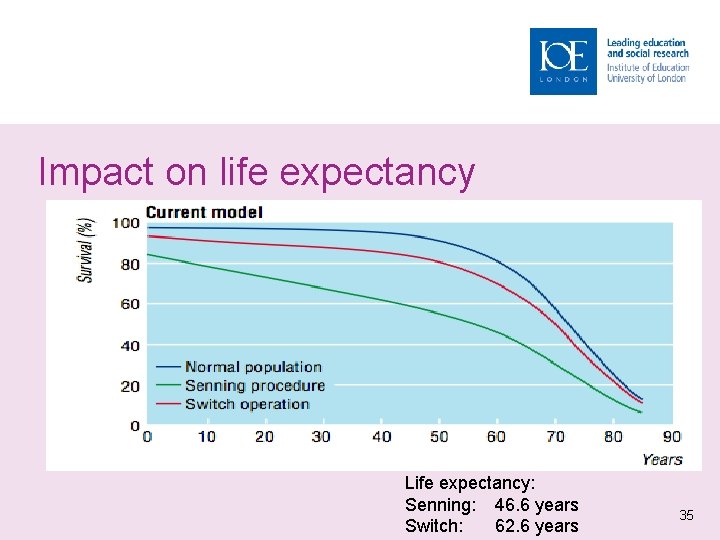

Improvements in pediatric cardiac surgery
Early death rate: Senning 12%; Transitional 25%
[Figure labels: Senning, Transitional, Switch]
Bull, et al. (2000). BMJ, 320, 1168-1173.

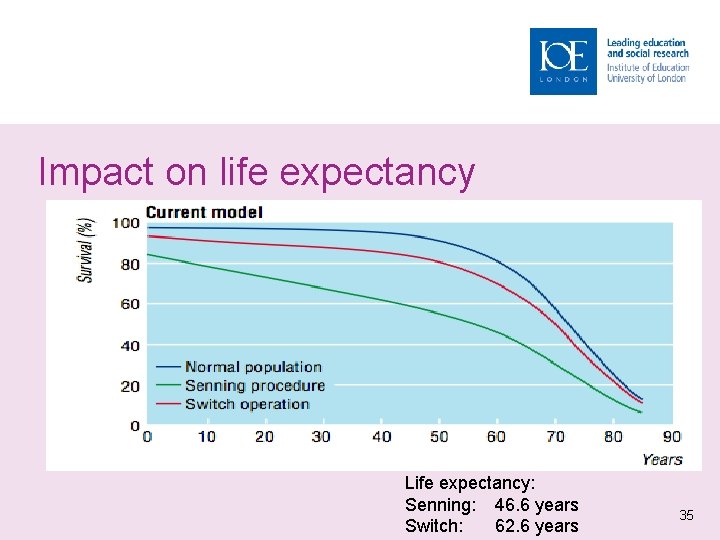

Impact on life expectancy
Life expectancy:
- Senning: 46.6 years
- Switch: 62.6 years

The challenge
To design an assessment system that is:
- Distributed: so that evidence collection is not undertaken entirely at the end
- Synoptic: so that learning has to accumulate
- Extensive: so that all important aspects are covered (breadth and depth)
- Manageable: so that costs are proportionate to benefits
- Trusted: so that stakeholders have faith in the outcomes

This is not rocket science. It’s much harder than that.

The effects of context

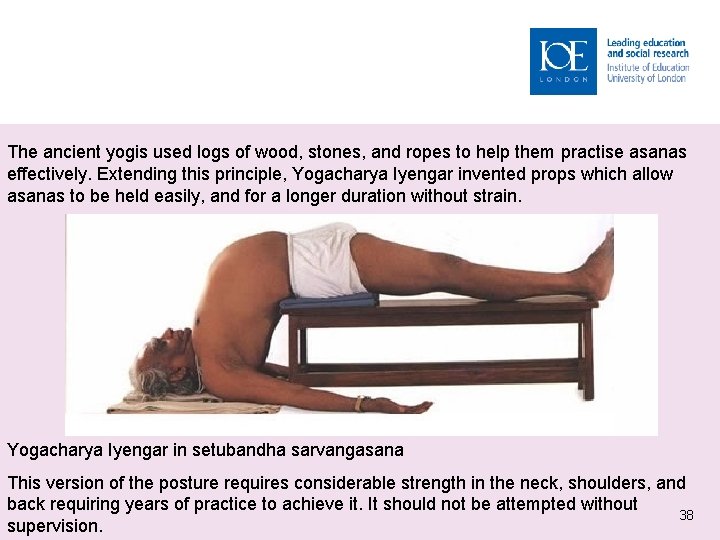

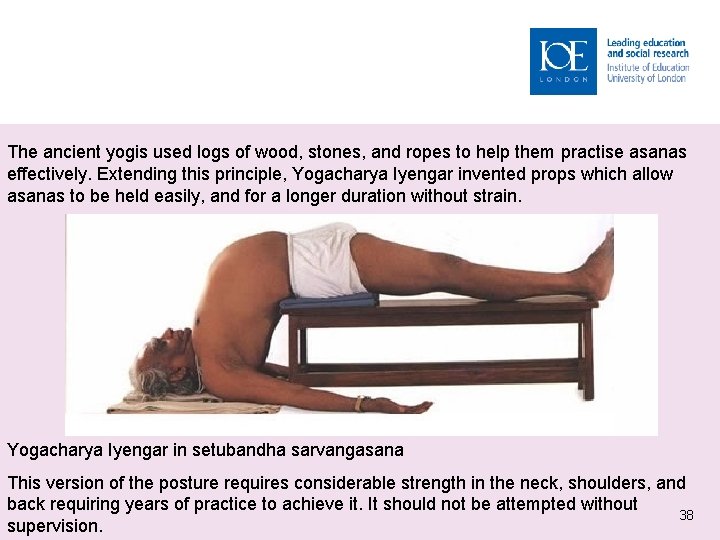

The ancient yogis used logs of wood, stones, and ropes to help them practise asanas effectively. Extending this principle, Yogacharya Iyengar invented props which allow asanas to be held easily, and for a longer duration without strain.
Yogacharya Iyengar in setubandha sarvangasana
This version of the posture requires considerable strength in the neck, shoulders, and back, requiring years of practice to achieve it. It should not be attempted without supervision.


The effects of context
Beliefs
- about what constitutes learning;
- in the value of competition between students;
- in the value of competition between schools;
- that test results measure school effectiveness;
- about the trustworthiness of numerical data, with bias towards a single number;
- that the key to schools’ effectiveness is strong top-down management;
- that teachers need to be told what to do, or conversely that they have all the answers

In the context of your own assessment system, which beliefs
- are most significant for education reform?
- can be changed?