Welcome: Condor Week 2006 (www.cs.wisc.edu/condor)

- Slides: 33

Welcome!!! Condor Week 2006 www.cs.wisc.edu/condor

1986-2006: Celebrating 20 years since we first installed Condor in our department

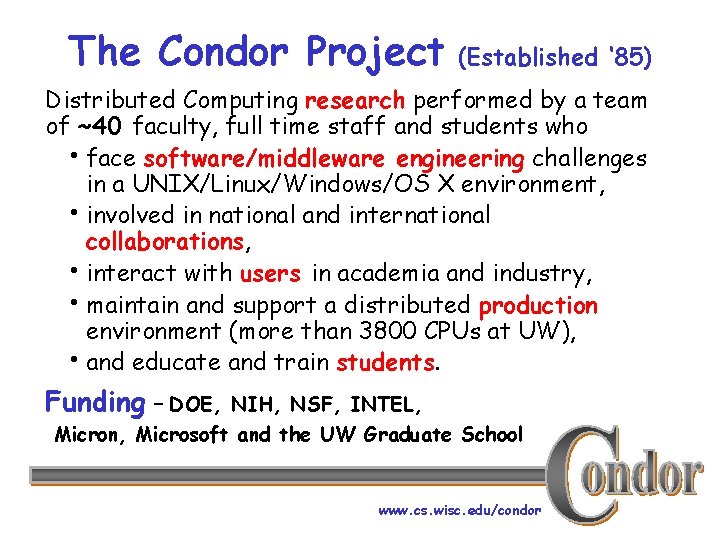

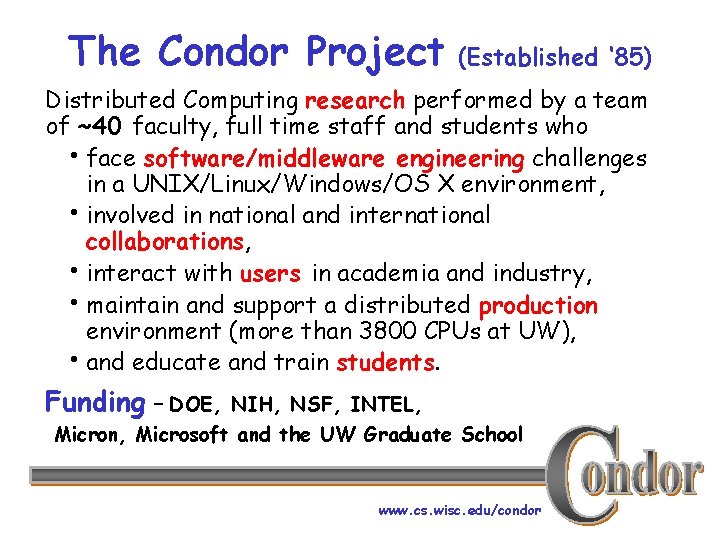

The Condor Project (Established ’85)

Distributed Computing research performed by a team of ~40 faculty, full-time staff and students who
- face software/middleware engineering challenges in a UNIX/Linux/Windows/OS X environment,
- are involved in national and international collaborations,
- interact with users in academia and industry,
- maintain and support a distributed production environment (more than 3800 CPUs at UW),
- and educate and train students.

Funding: DOE, NIH, NSF, Intel, Micron, Microsoft and the UW Graduate School

Diagram labels: Support, Software, Functionality, Research

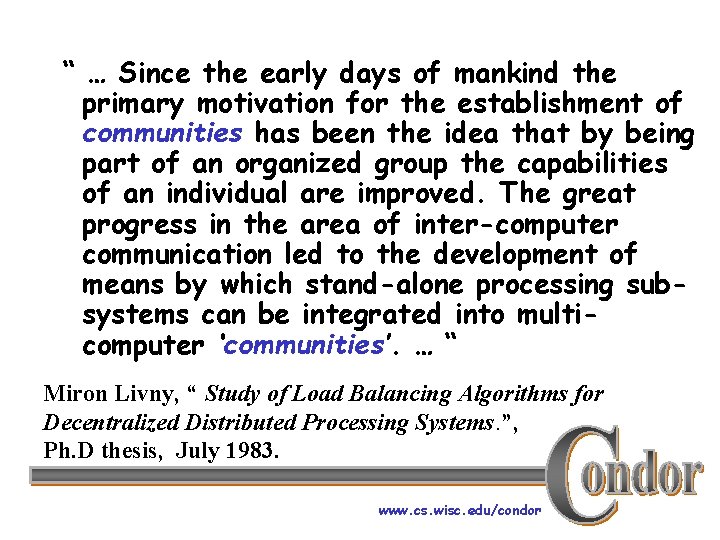

“… Since the early days of mankind the primary motivation for the establishment of communities has been the idea that by being part of an organized group the capabilities of an individual are improved. The great progress in the area of inter-computer communication led to the development of means by which stand-alone processing subsystems can be integrated into multicomputer ‘communities’. …”

Miron Livny, “Study of Load Balancing Algorithms for Decentralized Distributed Processing Systems”, Ph.D. thesis, July 1983.

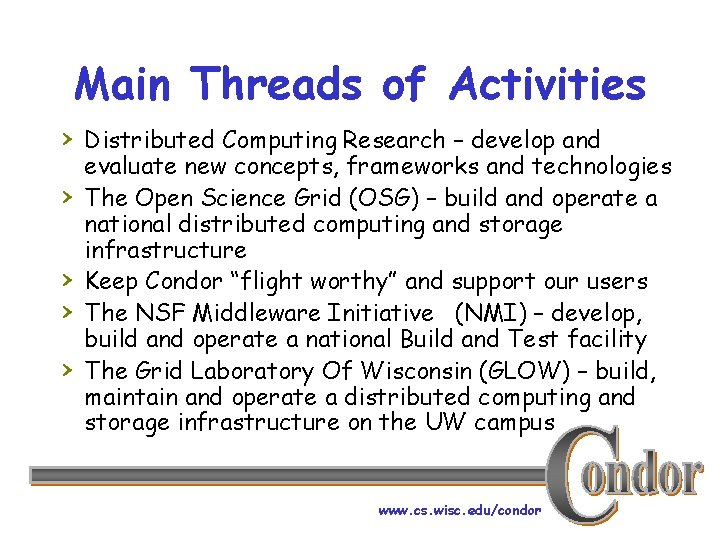

Main Threads of Activities
- Distributed Computing Research: develop and evaluate new concepts, frameworks and technologies
- The Open Science Grid (OSG): build and operate a national distributed computing and storage infrastructure
- Keep Condor “flight worthy” and support our users
- The NSF Middleware Initiative (NMI): develop, build and operate a national Build and Test facility
- The Grid Laboratory Of Wisconsin (GLOW): build, maintain and operate a distributed computing and storage infrastructure on the UW campus

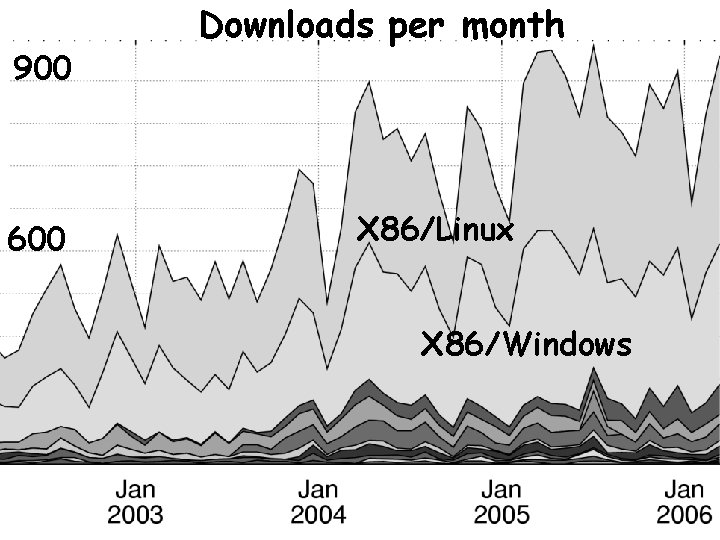

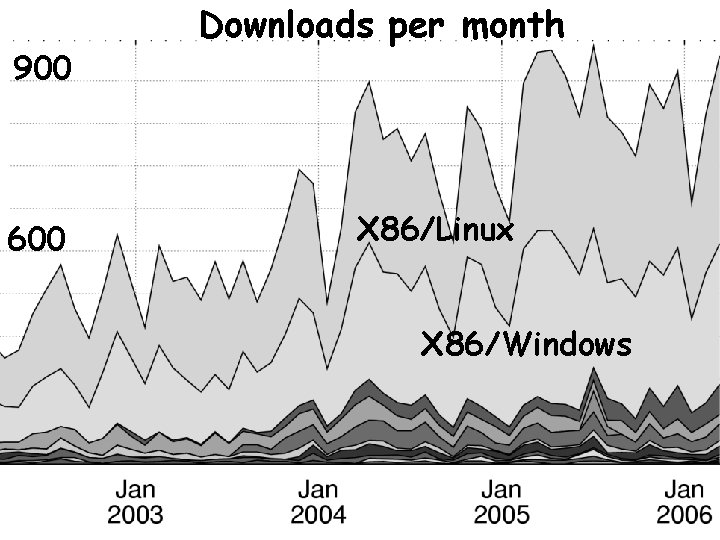

Downloads per month, x86/Linux and x86/Windows (chart; marked values: 900 and 600)

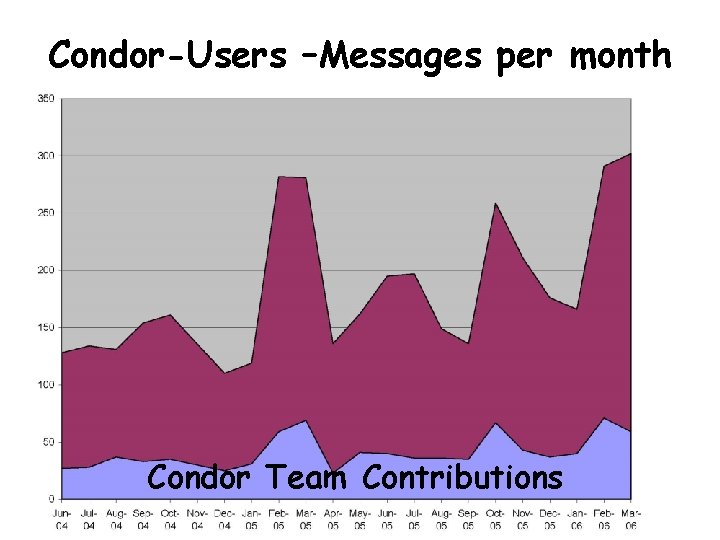

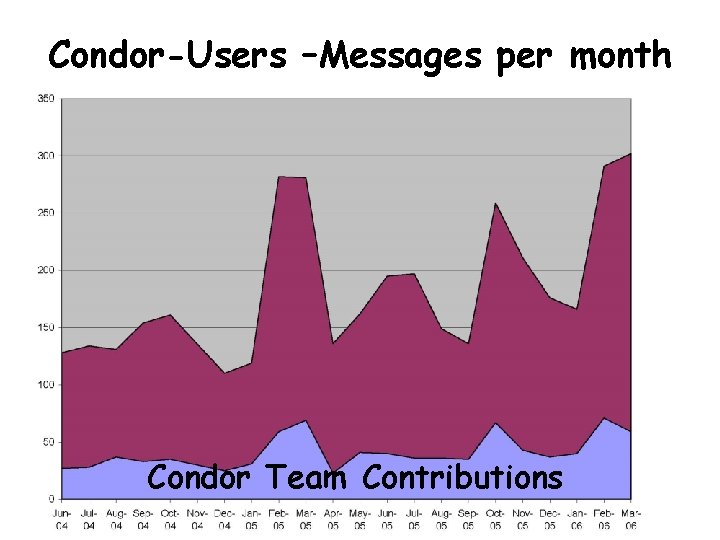

Condor-Users: messages per month, with Condor Team contributions (chart)

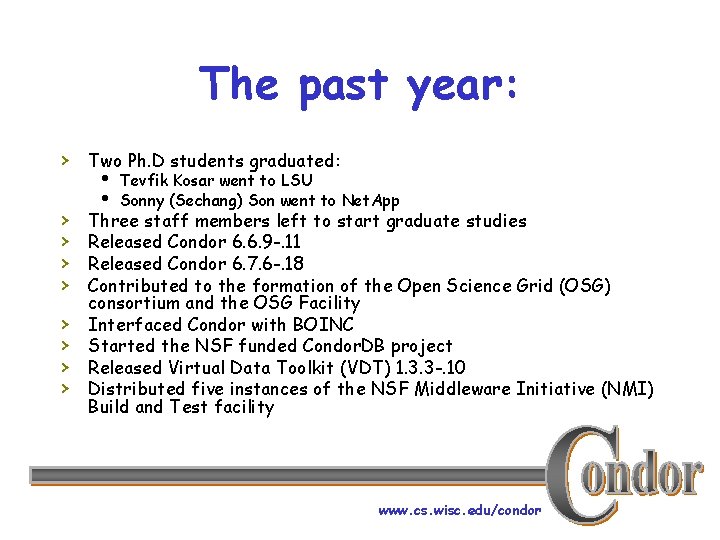

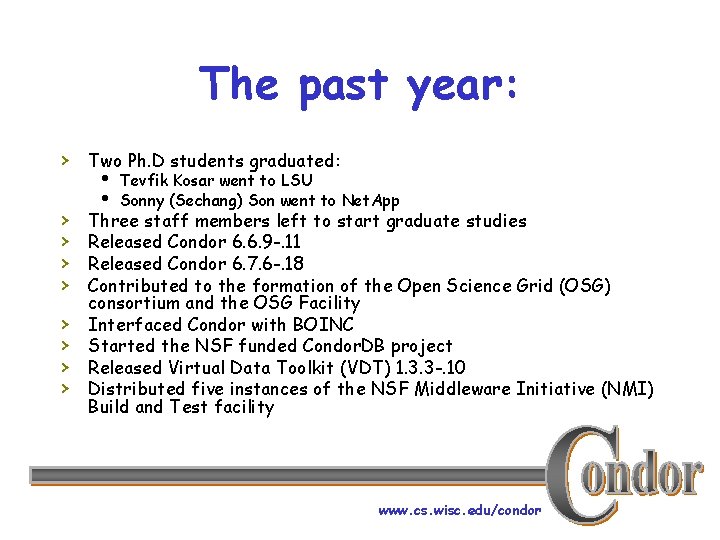

The past year:
- Two Ph.D. students graduated:
  - Tevfik Kosar went to LSU
  - Sonny (Sechang) Son went to NetApp
- Three staff members left to start graduate studies
- Released Condor 6.6.9 to 6.6.11
- Released Condor 6.7.6 to 6.7.18
- Contributed to the formation of the Open Science Grid (OSG) consortium and the OSG Facility
- Interfaced Condor with BOINC
- Started the NSF funded CondorDB project
- Released Virtual Data Toolkit (VDT) 1.3.3 to 1.3.10
- Distributed five instances of the NSF Middleware Initiative (NMI) Build and Test facility

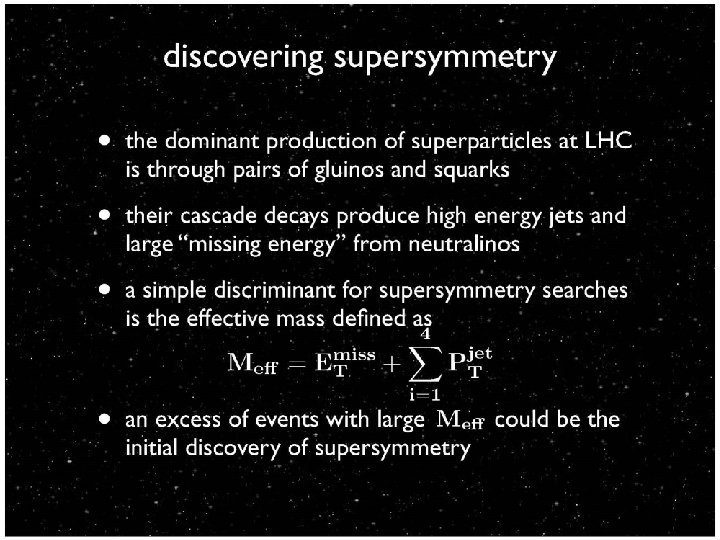

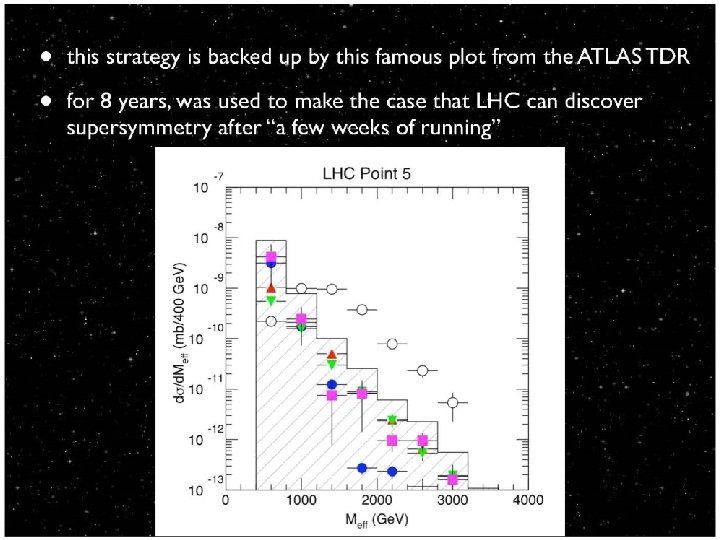

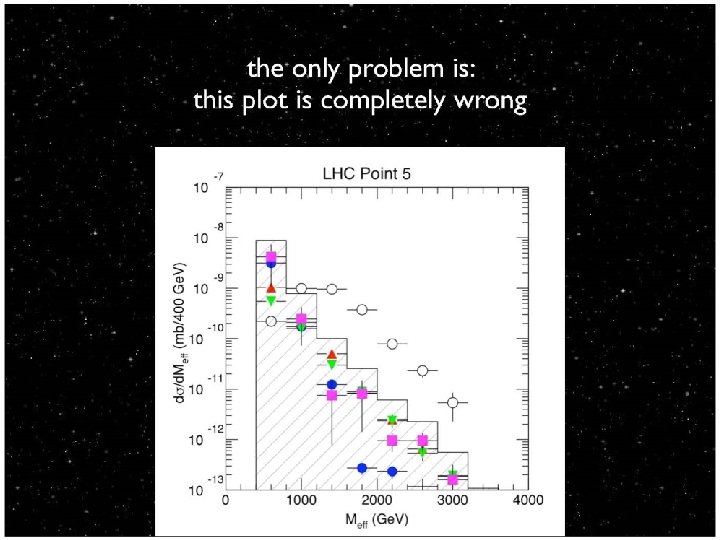

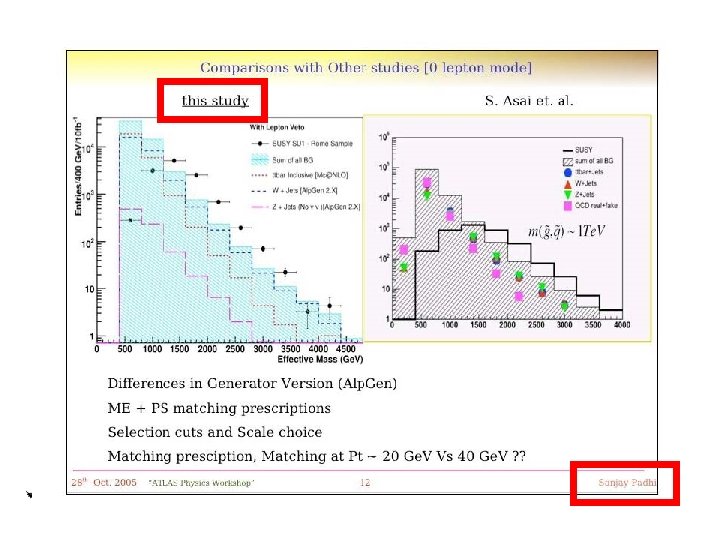

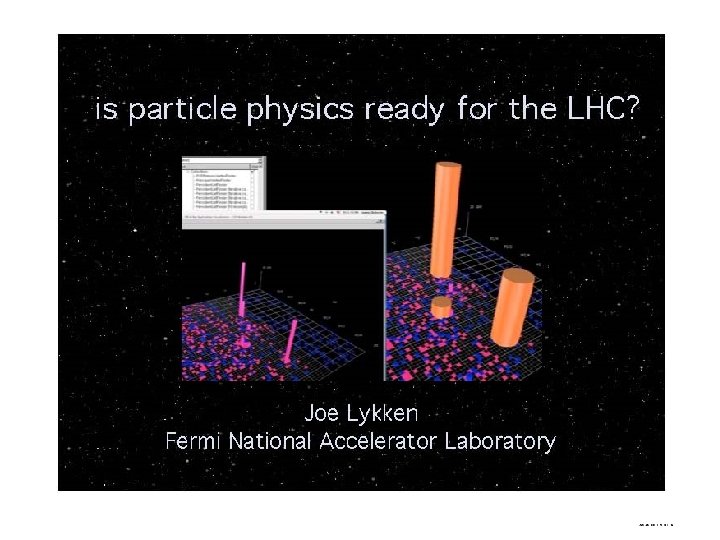

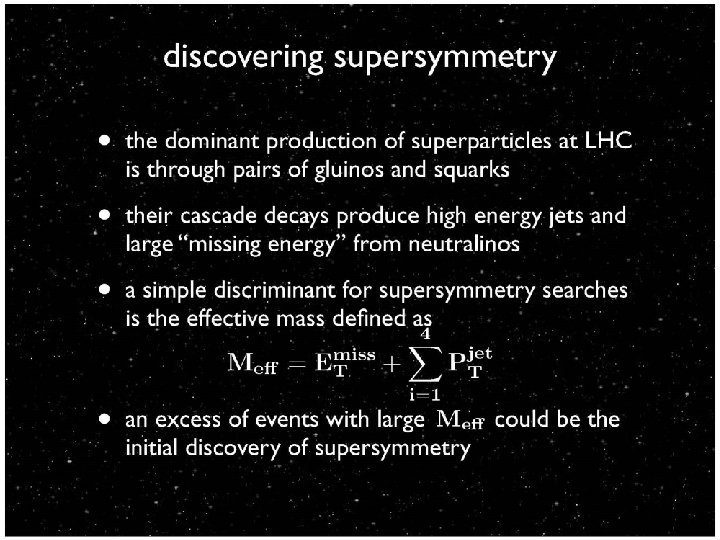

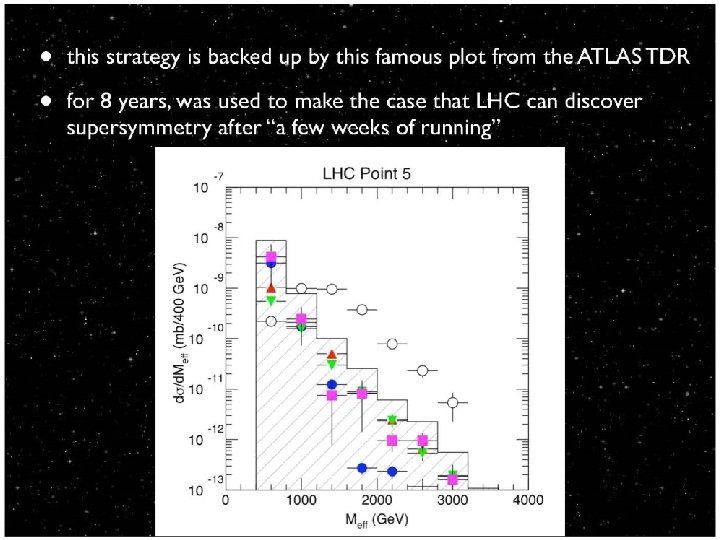

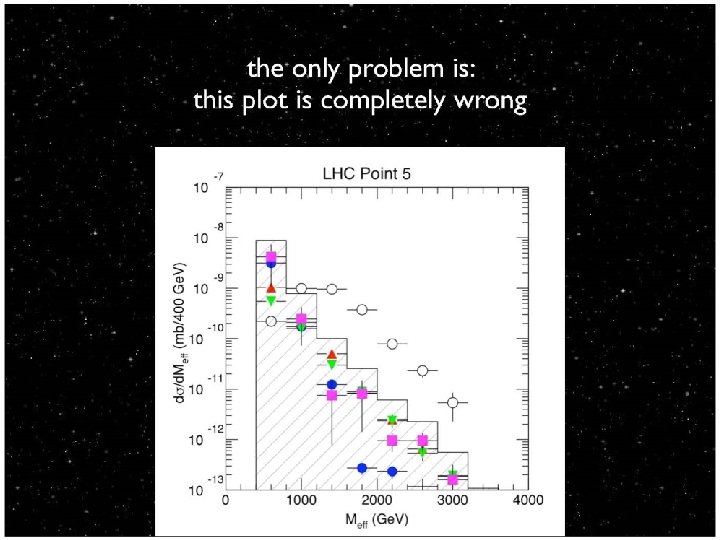

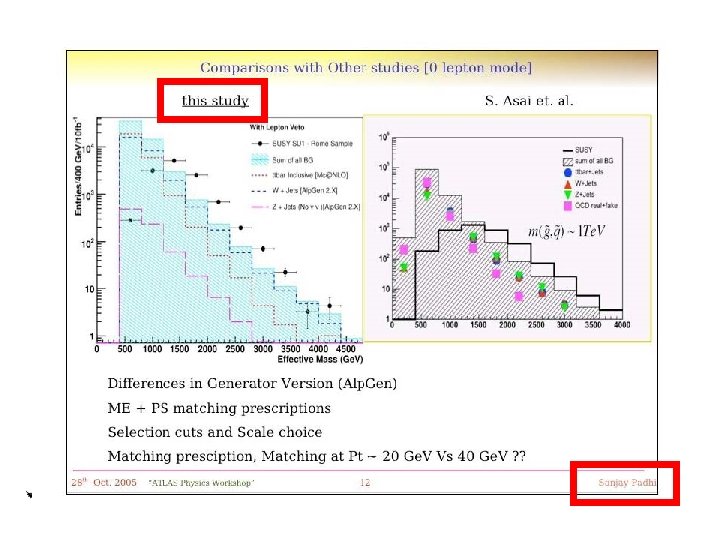

The search for SUSY
- Sanjay Padhi is a UW Chancellor Fellow who is working in the group of Prof. Sau Lan Wu at CERN
- Using Condor technologies he established a “grid access point” in his office at CERN
- Through this access point he managed to harness, in 3 months (12/05 to 2/06), more than 500 CPU years from the LHC Computing Grid (LCG), the Open Science Grid (OSG) and UW Condor resources

www.cs.wisc.edu/~miron

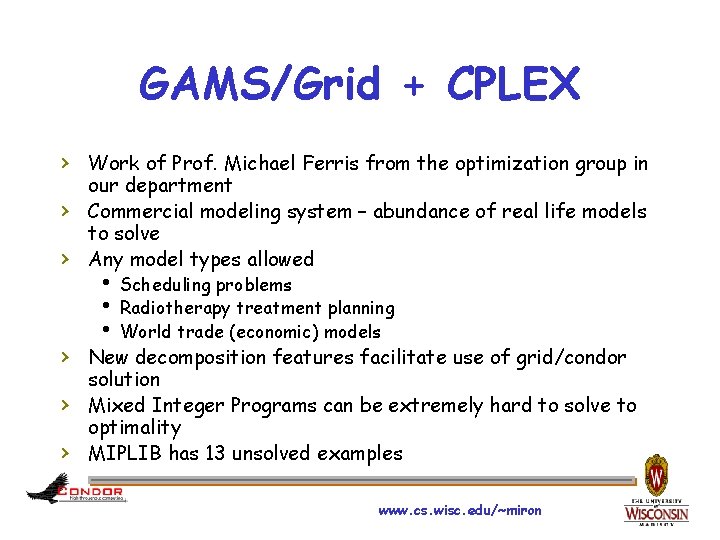

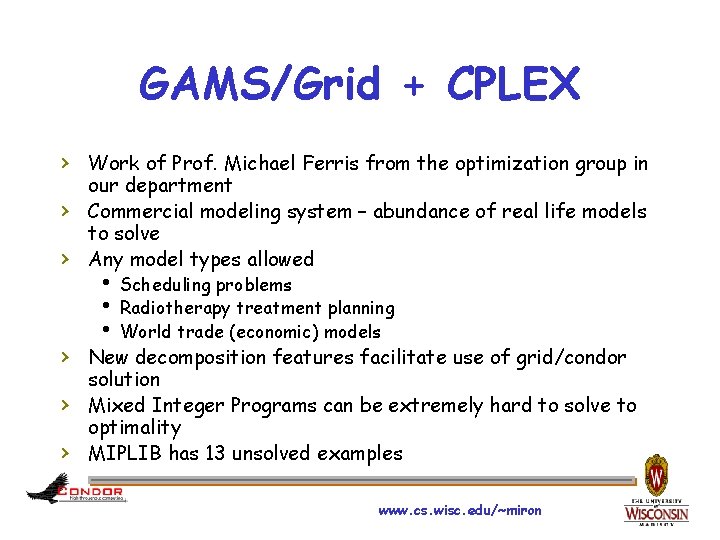

GAMS/Grid + CPLEX
- Work of Prof. Michael Ferris from the optimization group in our department
- Commercial modeling system: abundance of real-life models to solve
- Any model types allowed
  - Scheduling problems
  - Radiotherapy treatment planning
  - World trade (economic) models
- New decomposition features facilitate use of grid/condor solution
- Mixed Integer Programs can be extremely hard to solve to optimality
- MIPLIB has 13 unsolved examples

Tool and expertise combined
- Various decomposition schemes coupled with
  - Fastest commercial solver: CPLEX
  - Shared file system / condor_chirp for inter-process communication
  - Sophisticated problem domain branching and cuts
- Takes over 1 year of computation and goes nowhere, but knowledge gained!
- Adaptive refinement strategy
- Dedicated resources
- “Timtab2” and “a1c1s1” problems solved to optimality (using over 650 machines running tasks, each of which takes between 1 hour and 5 days)

Function Shipping, Data Shipping, or maybe simply Object Shipping?

Customer requests: Place y@S at L! System delivers.

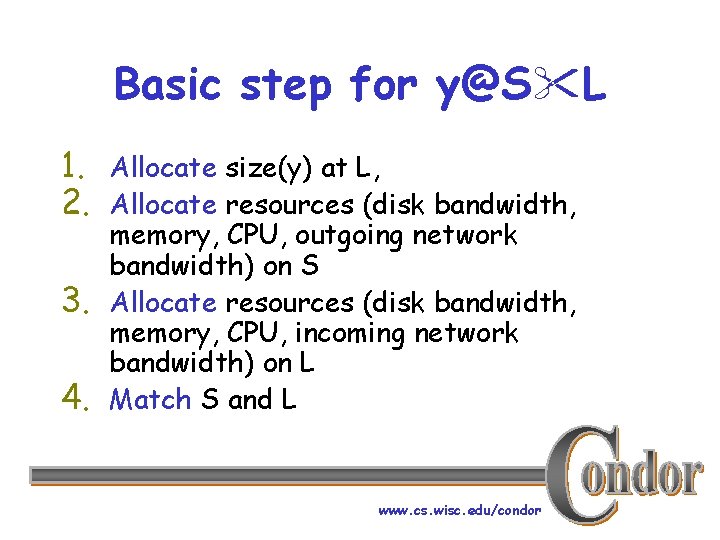

Basic step for y@S → L
1. Allocate size(y) at L
2. Allocate resources (disk bandwidth, memory, CPU, outgoing network bandwidth) on S
3. Allocate resources (disk bandwidth, memory, CPU, incoming network bandwidth) on L
4. Match S and L
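The four steps above can be read as a reserve-then-match sequence with rollback. The following is only a minimal sketch under stated assumptions: the `Site` class, its fields, and the `place` function are hypothetical names invented for illustration, and a single "transfer slot" counter stands in for the disk, memory, CPU and network resources named on the slide. It is not the interface of Condor, Stork, or NeST.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """Hypothetical model of an endpoint (the source S or the destination L)."""
    name: str
    free_space: int   # bytes available for new data
    free_slots: int   # stand-in for disk/memory/CPU/network capacity

    def reserve_space(self, size: int) -> bool:
        if size > self.free_space:
            return False
        self.free_space -= size
        return True

    def release_space(self, size: int) -> None:
        self.free_space += size

    def reserve_slot(self) -> bool:
        if self.free_slots < 1:
            return False
        self.free_slots -= 1
        return True

    def release_slot(self) -> None:
        self.free_slots += 1


def place(size_y: int, source: Site, dest: Site) -> bool:
    """Try to place an object of size_y held at `source` onto `dest`."""
    # Step 1: allocate size(y) at L.
    if not dest.reserve_space(size_y):
        return False
    # Step 2: allocate outgoing resources on S; undo step 1 on failure.
    if not source.reserve_slot():
        dest.release_space(size_y)
        return False
    # Step 3: allocate incoming resources on L; undo steps 1-2 on failure.
    if not dest.reserve_slot():
        source.release_slot()
        dest.release_space(size_y)
        return False
    # Step 4: both sides hold reservations, so S and L are matched.
    return True
```

The point of the rollback is that a failed placement leaves neither side holding a partial reservation, so both ends stay in a consistent state for the next request.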

Or in other words, it takes two (or more) to Tango (or to place data)!

When the “source” plays “nice” it “asks” for permission to place data at “destination” in advance

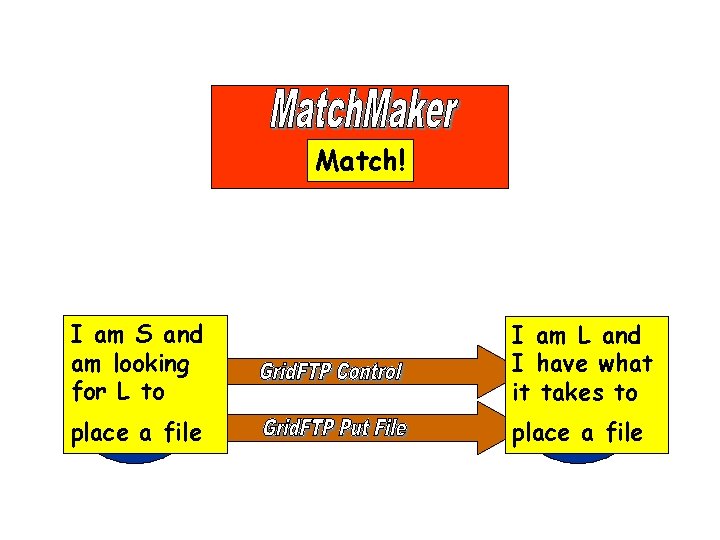

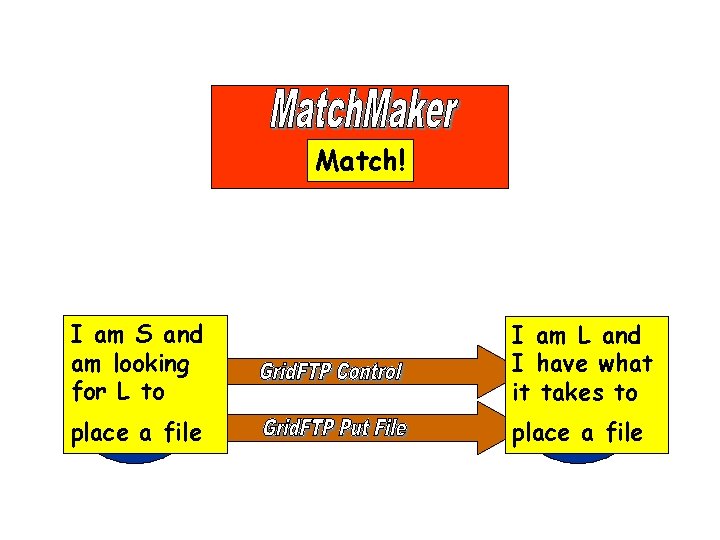

Match!
- S: “I am S and am looking for L to place a file”
- L: “I am L and I have what it takes to place a file”

The SC’05 effort: joint with the Globus GridFTP team

Stork controls the number of outgoing connections. Destination advertises incoming connections.

A Master Worker view of the same effort
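One way to picture the two limits working together is that the number of simultaneous transfers can be no larger than the smaller of the source's outgoing cap and the destination's advertised incoming capacity. The sketch below assumes exactly that and nothing more: `concurrent_transfers` and `schedule` are hypothetical helper names, and this is not Stork's actual scheduling algorithm or interface.

```python
from typing import List


def concurrent_transfers(max_outgoing: int, advertised_incoming: int) -> int:
    """Transfers that may run at once: bounded by both ends (assumption)."""
    return max(0, min(max_outgoing, advertised_incoming))


def schedule(files: List[str], max_outgoing: int,
             advertised_incoming: int) -> List[List[str]]:
    """Split the file list into waves no wider than the agreed limit."""
    width = concurrent_transfers(max_outgoing, advertised_incoming)
    if width == 0:
        return []  # no connection can be opened, so nothing is scheduled
    return [files[i:i + width] for i in range(0, len(files), width)]
```

For example, with an outgoing cap of 4 and an advertised incoming capacity of 2, five files would be sent in waves of at most two.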

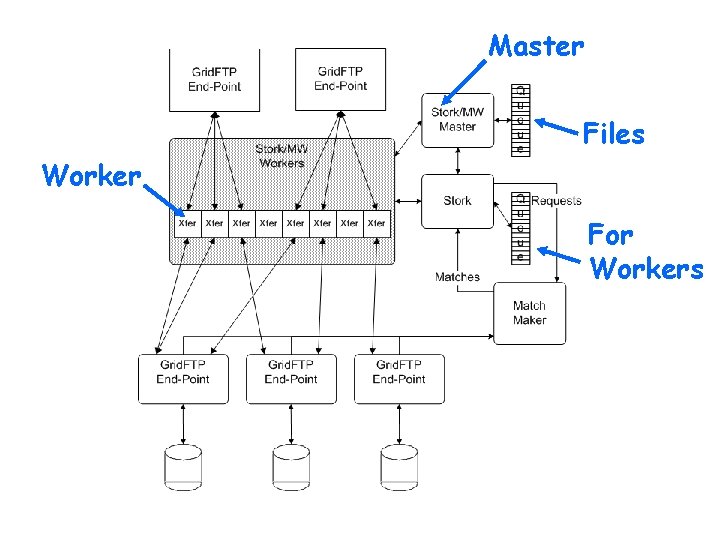

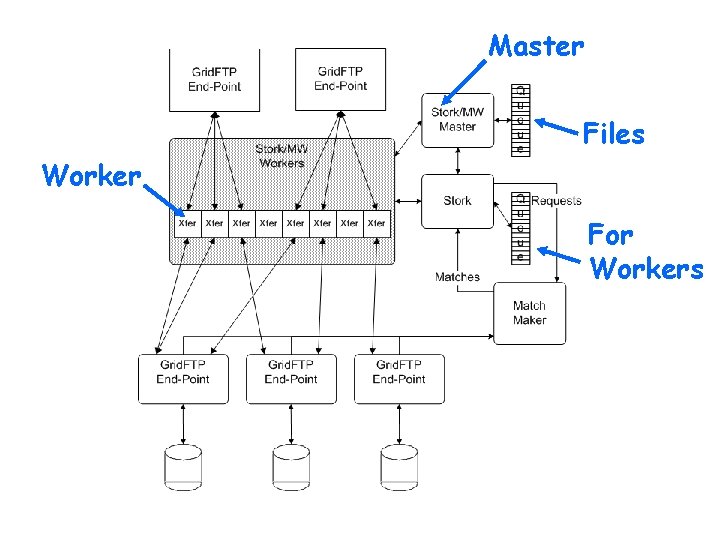

Diagram labels: Master, Worker, Files For Workers

When the “source” does not play “nice”, destination must protect itself

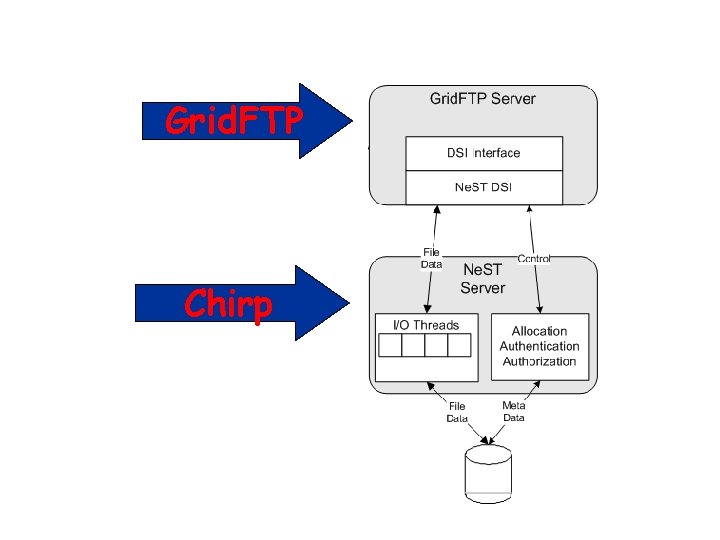

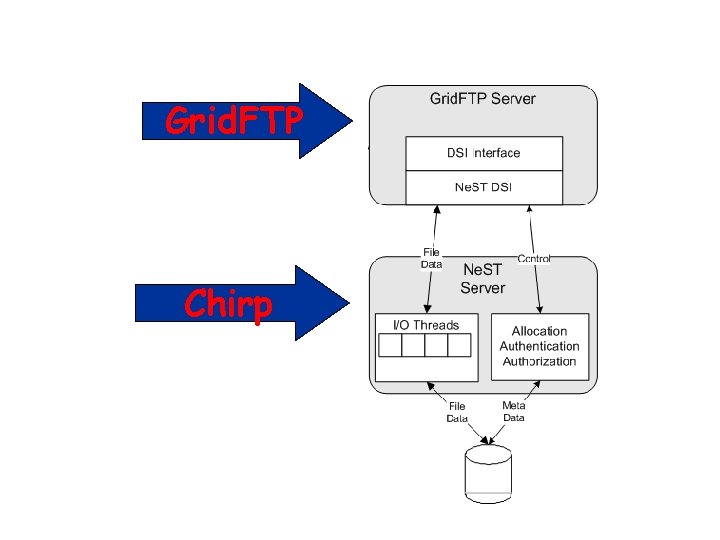

NeST

Manages storage space and connections for a GridFTP server with commands like:
- ADD_NEST_USER
- ADD_USER_TO_LOT
- ATTACH_LOT_TO_FILE
- TERMINATE_LOT

Diagram labels: GridFTP, Chirp

Thank you for building such a wonderful community
