Week 8 Presentation: Predictive Coding (Dr. Rawat), Tamique de Brito

- Slides: 8

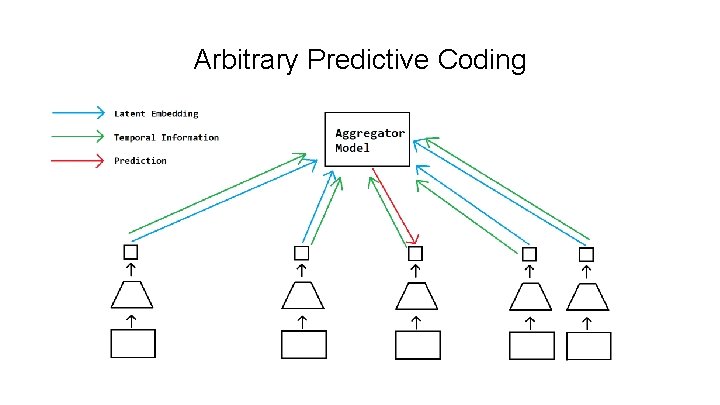

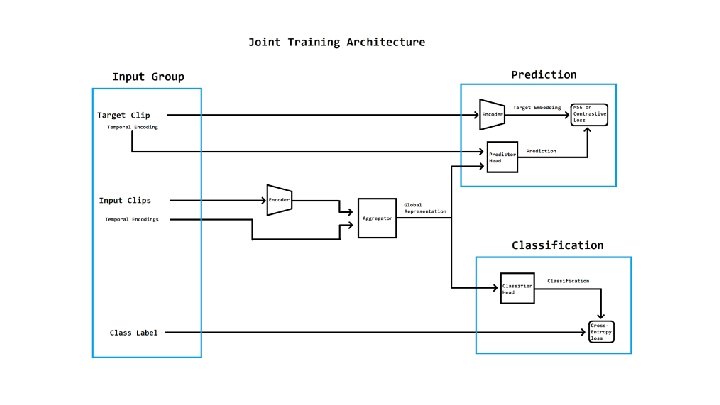

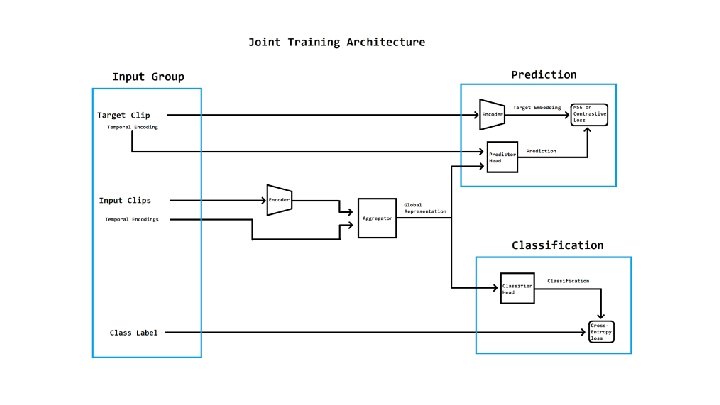

Summary of project concept

- Combining self-supervised arbitrary predictive coding with semi-supervised learning to learn improved video representations
  - Predictive coding has previously been used to predict future representations; we are instead experimenting with arbitrary predictions (targets not restricted to the future)
  - Using an action-recognition dataset as labeled data
- The approach is as follows:
  - Take a group of video clips from different times and encode them (the encoder is trainable)
  - Pair the clip encodings with temporal encodings and aggregate them into a global representation
  - Take a target clip and encode it
  - Use the global representation and the temporal encoding of the target to predict the target's representation
  - Can train jointly with classification on partially labeled data

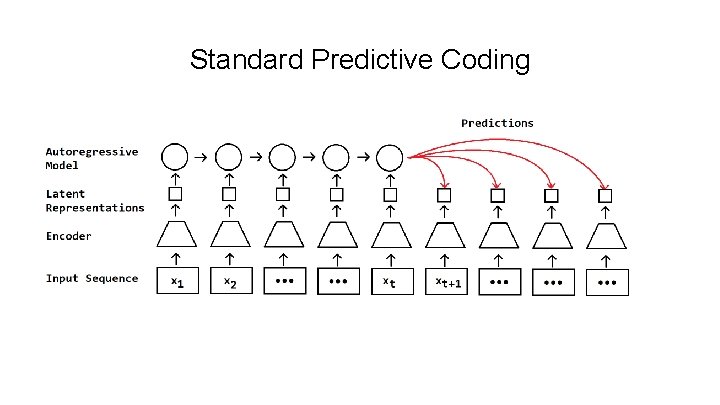

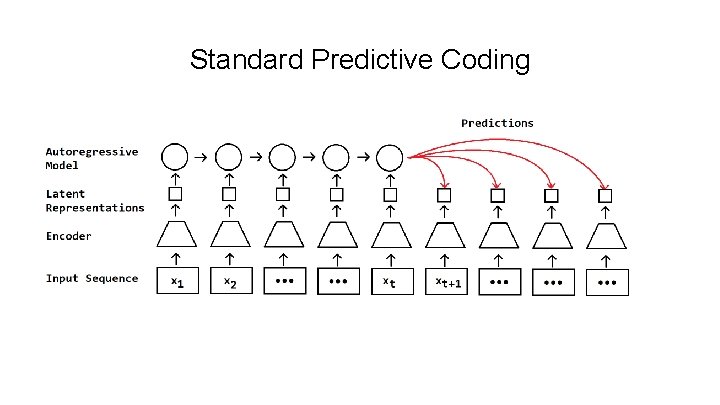
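A minimal forward-pass sketch of the approach above, written in pure Python so it runs without a deep learning framework. Everything in it is an assumption for illustration: the sinusoidal `temporal_encoding`, the random linear `encode` standing in for the trainable video encoder, pairing by addition, mean pooling as the aggregator (the slides suggest the real aggregator produces a sequence that is then averaged), the two-layer feedforward predictor, and the mean squared error loss. All class and function names are hypothetical.

```python
import math
import random

DIM = 8  # hypothetical embedding size; must be even for the sin/cos pairs


def temporal_encoding(t, dim=DIM):
    """Assumed sinusoidal encoding of a clip's timestamp t."""
    enc = []
    for i in range(dim // 2):
        freq = 1.0 / (10000 ** (2 * i / dim))
        enc.extend([math.sin(t * freq), math.cos(t * freq)])
    return enc


def matvec(m, v):
    """Matrix-vector product with m given as a list of rows."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class ArbitraryPredictiveCoder:
    """Hypothetical forward pass only; no training loop is shown."""

    def __init__(self, clip_features, dim=DIM, seed=0):
        rng = random.Random(seed)
        self.dim = dim
        self.encoder = rand_matrix(dim, clip_features, rng)  # stand-in for the trainable encoder
        self.w1 = rand_matrix(dim, 2 * dim, rng)             # predictor layer 1
        self.w2 = rand_matrix(dim, dim, rng)                 # predictor layer 2

    def encode(self, clip):
        return matvec(self.encoder, clip)

    def global_representation(self, clips, times):
        # Pair each clip encoding with its temporal encoding (by addition here),
        # then mean-pool across clips.
        paired = [
            [e + p for e, p in zip(self.encode(c), temporal_encoding(t, self.dim))]
            for c, t in zip(clips, times)
        ]
        return [sum(col) / len(paired) for col in zip(*paired)]

    def predict(self, global_rep, target_time):
        # Concatenate the global representation with the target's temporal
        # encoding and pass it through a two-layer feedforward network.
        x = global_rep + temporal_encoding(target_time, self.dim)
        hidden = [max(0.0, h) for h in matvec(self.w1, x)]
        return matvec(self.w2, hidden)

    def loss(self, clips, times, target_clip, target_time):
        # Mean squared error between the predicted and actual target encoding.
        pred = self.predict(self.global_representation(clips, times), target_time)
        target = self.encode(target_clip)
        return sum((p - y) ** 2 for p, y in zip(pred, target)) / len(target)


rng = random.Random(1)
clips = [[rng.random() for _ in range(16)] for _ in range(3)]
target = [rng.random() for _ in range(16)]
model = ArbitraryPredictiveCoder(clip_features=16)
# The target time (4.0) falls between the context times, not after them.
print(model.loss(clips, [0.0, 2.0, 6.0], target, target_time=4.0))
```

MSE is used here only for brevity. With a trainable target encoder, a pure regression loss admits a trivial constant solution, which is why predictive coding methods often use a contrastive loss instead.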

Standard Predictive Coding

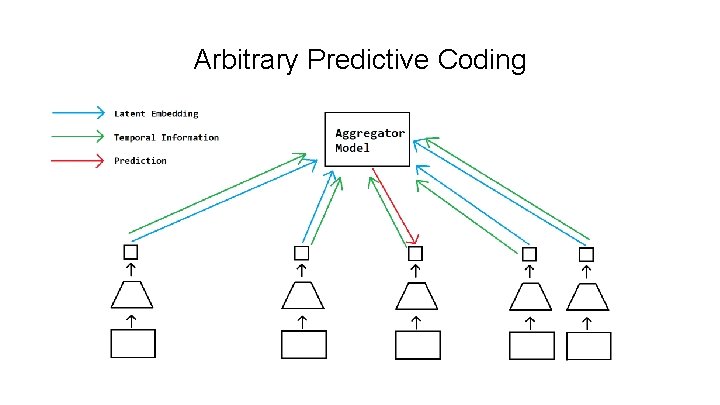

Arbitrary Predictive Coding

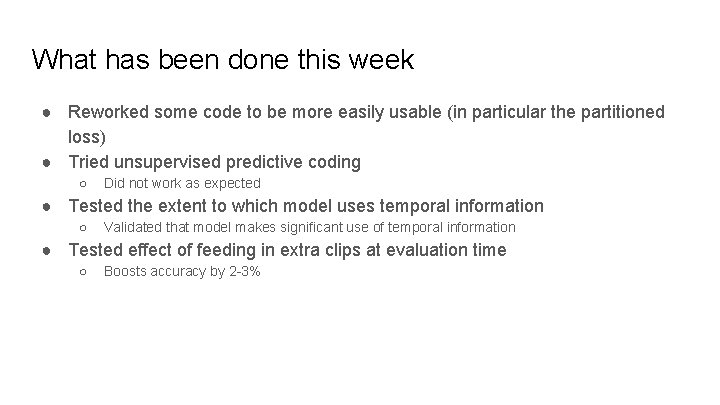

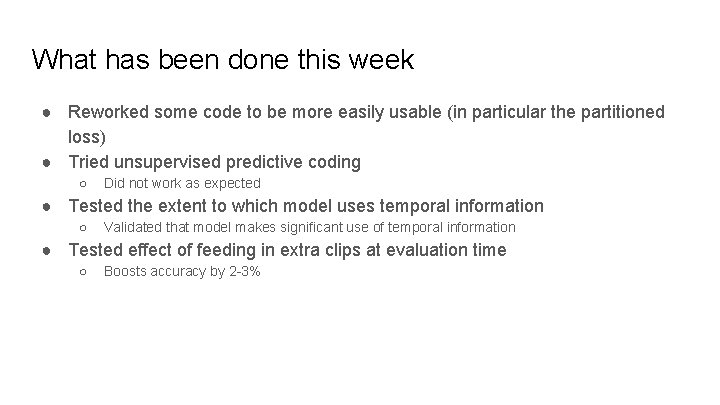
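The difference between the two diagrams can be illustrated by how context and target clip indices might be sampled from a video split into `num_clips` clips. This is a hypothetical sketch: the contiguous context window in the standard case and uniform sampling in the arbitrary case are assumptions, and the function names are made up.

```python
import random


def sample_standard(num_clips, num_context, rng):
    """Standard predictive coding (assumed form): a contiguous window of
    context clips, with the target being the clip right after it."""
    start = rng.randrange(num_clips - num_context)
    context = list(range(start, start + num_context))
    return context, start + num_context


def sample_arbitrary(num_clips, num_context, rng):
    """Arbitrary predictive coding (assumed form): context and target are
    distinct clips drawn from anywhere in the video, so the target can lie
    before, between, or after the context clips."""
    picks = rng.sample(range(num_clips), num_context + 1)
    target = picks.pop()
    return sorted(picks), target


rng = random.Random(0)
print(sample_standard(10, 3, rng))
print(sample_arbitrary(10, 3, rng))
```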

What has been done this week

- Refactored some code to make it easier to reuse (in particular, the partitioned loss)
- Tried unsupervised predictive coding
  - It did not work as expected
- Tested the extent to which the model uses temporal information
  - Confirmed that the model makes significant use of temporal information
- Tested the effect of feeding in extra clips at evaluation time
  - This boosts accuracy by 2-3%

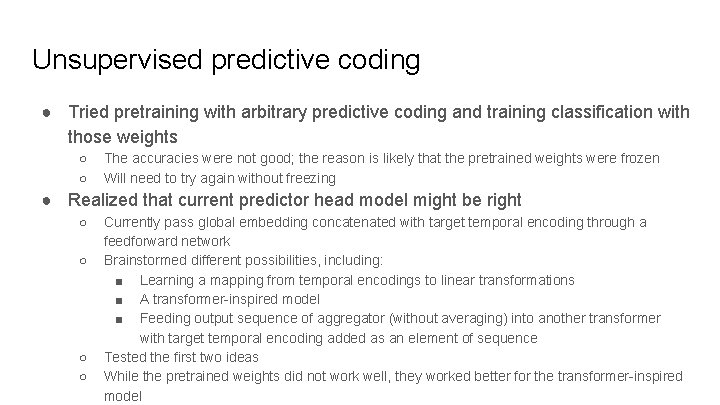

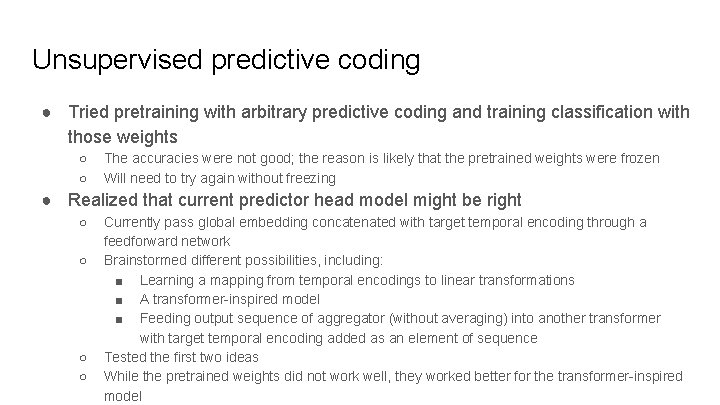
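The two evaluation experiments above could be run with a harness like the following. It is a hypothetical sketch, not the code behind these results: `evaluate`, `toy_model`, and the timestamp-shuffling ablation are assumptions about how such tests might be set up. Raising `num_clips` corresponds to feeding extra clips at evaluation time; `shuffle_times=True` breaks the pairing between clips and their timestamps, so a drop in accuracy suggests the model relies on temporal information.

```python
import random


def evaluate(model_fn, videos, num_clips, shuffle_times=False, seed=0):
    """Hypothetical evaluation harness.

    videos: list of (clips, times, label) tuples.
    model_fn(clips, times) -> predicted label.
    num_clips: number of clips sampled per video and fed to the model together.
    shuffle_times: if True, permute the timestamps so they no longer match
        their clips, which ablates the temporal information.
    """
    rng = random.Random(seed)
    correct = 0
    for clips, times, label in videos:
        idx = sorted(rng.sample(range(len(clips)), num_clips))
        sel_clips = [clips[i] for i in idx]
        sel_times = [times[i] for i in idx]
        if shuffle_times:
            rng.shuffle(sel_times)
        correct += int(model_fn(sel_clips, sel_times) == label)
    return correct / len(videos)


def toy_model(clips, times):
    """Toy stand-in: predicts 1 if clip values increase over time, else 0,
    so it can only be right when timestamps match their clips."""
    ordered = [c for _, c in sorted(zip(times, clips))]
    return int(ordered[-1] > ordered[0])


videos = [
    ([float(i) for i in range(8)], list(range(8)), 1),   # increasing over time
    ([float(-i) for i in range(8)], list(range(8)), 0),  # decreasing over time
]
for n in (2, 4):
    print(n, evaluate(toy_model, videos, n), evaluate(toy_model, videos, n, shuffle_times=True))
```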

Unsupervised predictive coding

- Tried pretraining with arbitrary predictive coding, then training classification on top of those weights
  - The accuracies were poor; the likely reason is that the pretrained weights were frozen
  - Will need to try again without freezing
- Realized that the current predictor head design might not be the right one
  - Currently, the global embedding concatenated with the target temporal encoding is passed through a feedforward network
  - Brainstormed alternatives, including:
    - Learning a mapping from temporal encodings to linear transformations
    - A transformer-inspired model
    - Feeding the aggregator's output sequence (without averaging) into another transformer, with the target temporal encoding added as an element of the sequence
  - Tested the first two ideas
  - The pretrained weights still did not work well, but they worked better with the transformer-inspired model

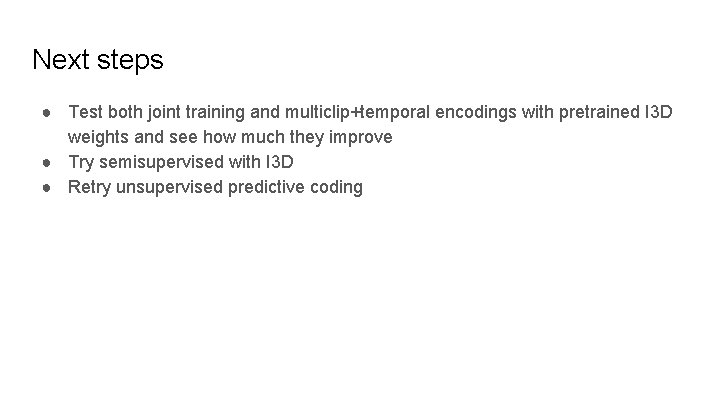
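To make the first predictor-head alternative concrete, here is a pure-Python sketch of the current concatenation head next to the temporal-encoding-to-linear-map variant. It assumes the temporal encoding `tau` has the same size as the global embedding `g`; the class names, the single ReLU hidden layer, and the linear map from `tau` to matrix entries are hypothetical choices, not the tested implementation.

```python
import random


def matvec(m, v):
    """Matrix-vector product with m given as a list of rows."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class ConcatFFNHead:
    """Current head: feedforward network over [global embedding ; target temporal encoding]."""

    def __init__(self, dim, hidden, rng):
        self.w1 = rand_matrix(hidden, 2 * dim, rng)
        self.w2 = rand_matrix(dim, hidden, rng)

    def __call__(self, g, tau):
        h = [max(0.0, x) for x in matvec(self.w1, g + tau)]  # g + tau concatenates lists
        return matvec(self.w2, h)


class TemporalLinearMapHead:
    """Alternative: map the target temporal encoding tau to a dim x dim matrix M(tau)
    and predict M(tau) @ g. Using a single linear layer for the mapping is an assumption."""

    def __init__(self, dim, rng):
        self.dim = dim
        self.hyper = rand_matrix(dim * dim, dim, rng)  # tau (length dim) -> dim*dim entries

    def __call__(self, g, tau):
        flat = matvec(self.hyper, tau)
        m = [flat[r * self.dim:(r + 1) * self.dim] for r in range(self.dim)]
        return matvec(m, g)


rng = random.Random(0)
dim = 4
g = [rng.random() for _ in range(dim)]
tau = [rng.random() for _ in range(dim)]
print(ConcatFFNHead(dim, 16, rng)(g, tau))
print(TemporalLinearMapHead(dim, rng)(g, tau))
```

In the second head the prediction is linear in `g`, with coefficients chosen by the target time, so the temporal encoding selects a transformation rather than entering as one more input feature. The third idea (a second transformer over the unaveraged aggregator outputs) is not sketched here.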

Next steps

- Test both joint training and multiclip + temporal encodings with pretrained I3D weights, and measure how much each improves accuracy
- Try semi-supervised training with I3D
- Retry unsupervised predictive coding without freezing the pretrained weights
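Joint and semi-supervised training both need a loss that applies the predictive-coding signal to every sample and the classification signal only where a label exists. A minimal sketch, assuming unlabeled samples are marked with `None`, the predictive loss is already computed per sample, and the two terms are combined with a hypothetical weight `cls_weight`; the partitioned loss mentioned earlier may be structured differently.

```python
import math


def cross_entropy(logits, label):
    """Numerically stable softmax cross-entropy for one sample."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def joint_loss(pred_losses, logits, labels, cls_weight=1.0):
    """Hypothetical joint objective for a partially labeled batch.

    pred_losses: per-sample predictive-coding losses (every sample contributes).
    logits: per-sample class scores.
    labels: per-sample class index, or None for unlabeled samples.
    """
    pred_term = sum(pred_losses) / len(pred_losses)
    labeled = [(z, y) for z, y in zip(logits, labels) if y is not None]
    if not labeled:
        return pred_term  # fully unlabeled batch: predictive coding only
    cls_term = sum(cross_entropy(z, y) for z, y in labeled) / len(labeled)
    return pred_term + cls_weight * cls_term


# Two samples, only the first one labeled.
print(joint_loss([1.0, 3.0], [[2.0, 0.5], [0.1, 0.2]], [0, None]))
```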