
Web Scraping Lecture 8 – Storing Data
Topics
§ Storing data
§ Downloading CSV, MySQL
Readings:
§ Chapters 5 and 4
February 2, 2017

Overview
Last Time: Lecture 6 slides 30-end; Lecture 7 slides 1-31
§ Crawling from Chapter 3: Lecture 6 slides 29-40
§ Getting code again: https://github.com/REMitchell/python-scraping
  • 3-crawlSite.py
  • 4-getExternalLinks.py
  • 5-getAllExternalLinks.py
§ Chapter 4
  • APIs
  • JSON
Today:
§ Iterators, generators and yield
§ Chapter 4
  • APIs
  • JSON
  • Javascript
References
§ Scrapy site/user manual
CSCE 590 Web Scraping Spring 2017

Regular Expressions – Lookahead patterns
(?=...) Matches if ... matches next, but doesn’t consume any of the string.
§ This is called a lookahead assertion.
§ For example, Isaac (?=Asimov) will match 'Isaac ' only if it’s followed by 'Asimov'.
(?!...) Matches if ... doesn’t match next.
§ This is a negative lookahead assertion.
§ For example, Isaac (?!Asimov) will match 'Isaac ' only if it’s not followed by 'Asimov'.
(?#...) A comment; the contents of the parentheses are simply ignored.
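The two lookahead forms can be tried directly with Python's `re` module. This is a small sketch: the sample strings are just the Asimov example from the slide, and the last pattern is the link filter that the wiki crawler later in these slides passes to `re.compile`.

```python
import re

# Positive lookahead: match 'Isaac ' only when 'Asimov' follows.
positive = re.compile(r"Isaac (?=Asimov)")
# Negative lookahead: match 'Isaac ' only when 'Asimov' does not follow.
negative = re.compile(r"Isaac (?!Asimov)")

followed = positive.search("Isaac Asimov")
not_followed = negative.search("Isaac Newton")

# The lookahead text is checked but not consumed, so only 'Isaac ' is matched.
print(repr(followed.group()))
print(positive.search("Isaac Newton"))
print(repr(not_followed.group()))
print(negative.search("Isaac Asimov"))

# The crawler's article filter: a /wiki/ path in which no character
# after the prefix is a colon (this skips namespaced pages).
article_link = re.compile(r"^(/wiki/)((?!:).)*$")
print(bool(article_link.match("/wiki/Kevin_Bacon")))
print(bool(article_link.match("/wiki/Category:Actors")))
```

Because the assertion consumes nothing, the matched text is the same `'Isaac '` in both the positive and the negative case; only the condition on what follows differs.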

Chapter 4: Using APIs
API - In computer programming, an application programming interface (API) is a set of subroutine definitions, protocols, and tools for building application software.
§ https://en.wikipedia.org/wiki/Application_programming_interface
A web API is an application programming interface (API) for either a web server or a web browser.
§ Program sends its request over HTTP
§ Response comes back in XML or JSON

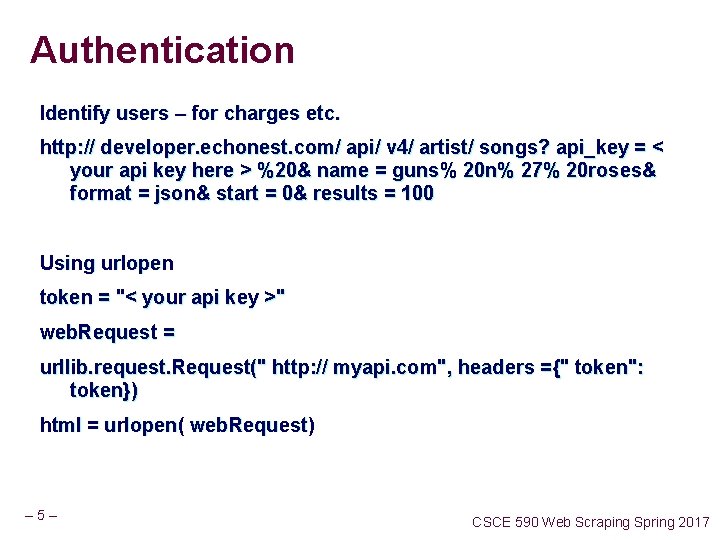

Authentication
Identify users – for charges etc.
http://developer.echonest.com/api/v4/artist/songs?api_key=<your api key here>%20&name=guns%20n%27%20roses&format=json&start=0&results=100
Using urlopen

    import urllib.request
    from urllib.request import urlopen

    token = "<your api key>"
    webRequest = urllib.request.Request("http://myapi.com", headers={"token": token})
    html = urlopen(webRequest)
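The slide shows two ways to pass an API key: in the query string and in a request header. The sketch below builds both without sending anything over the network. The key is a placeholder, and `http://myapi.com` is the slide's stand-in host rather than a real service. Note that `urlencode` writes spaces as `+` instead of the `%20` seen on the slide; both decode to a space in a query string.

```python
from urllib.parse import urlencode
from urllib.request import Request

API_KEY = "<your api key here>"  # placeholder, not a real key

# Style 1: the key travels in the query string.
params = {
    "api_key": API_KEY,
    "name": "guns n' roses",
    "format": "json",
    "start": 0,
    "results": 100,
}
query_url = "http://developer.echonest.com/api/v4/artist/songs?" + urlencode(params)

# Style 2: the key travels in a request header.
# urllib stores header names capitalized, so "token" becomes "Token".
header_request = Request("http://myapi.com", headers={"token": API_KEY})

print(query_url)
print(header_request.header_items())
```

Letting `urlencode` build the query string avoids hand-escaping characters such as the apostrophe in the artist name.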

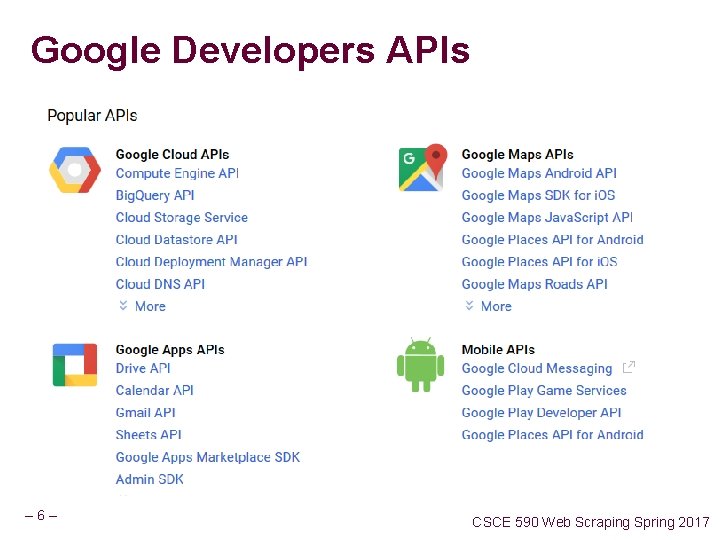

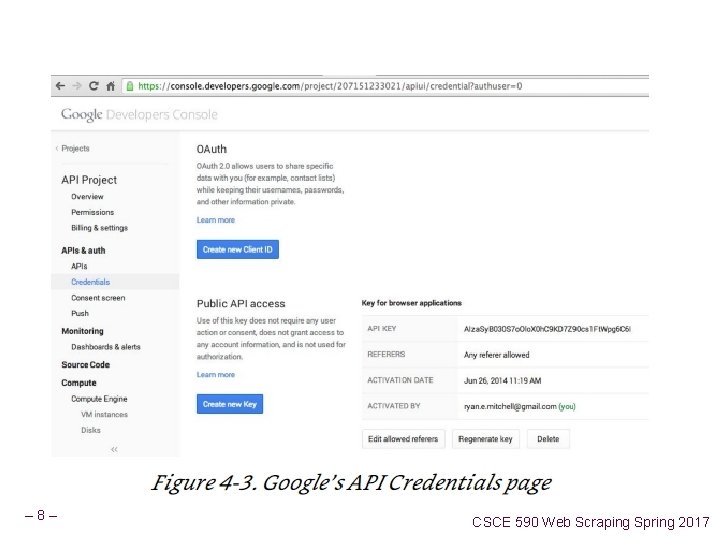

Google Developers APIs

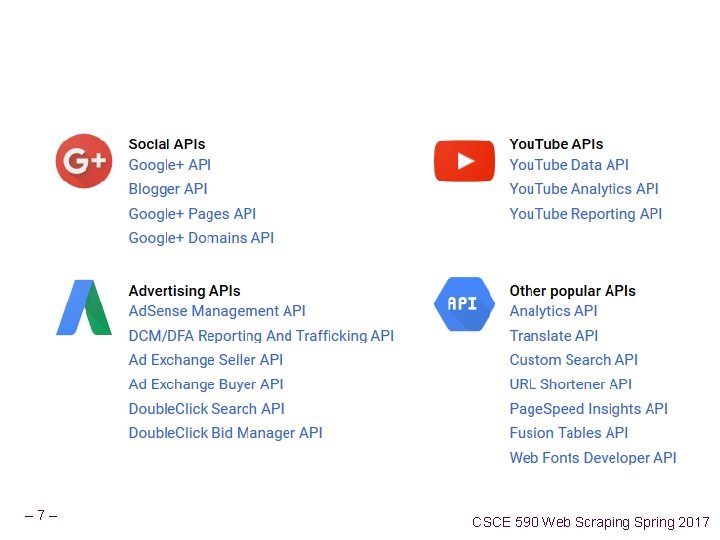


Mining the Social Web; so Twitter Later

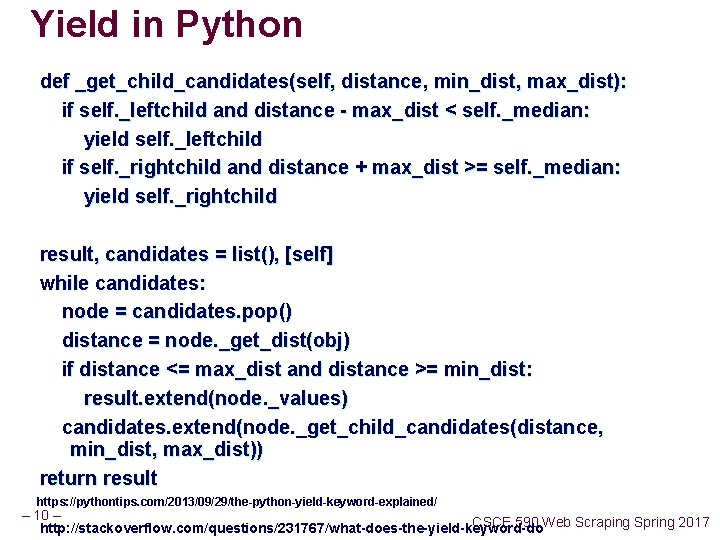

Yield in Python

    def _get_child_candidates(self, distance, min_dist, max_dist):
        if self._leftchild and distance - max_dist < self._median:
            yield self._leftchild
        if self._rightchild and distance + max_dist >= self._median:
            yield self._rightchild

    # Caller (the body of another method on the same class):
    result, candidates = list(), [self]
    while candidates:
        node = candidates.pop()
        distance = node._get_dist(obj)
        if distance <= max_dist and distance >= min_dist:
            result.extend(node._values)
        candidates.extend(node._get_child_candidates(distance, min_dist, max_dist))
    return result

https://pythontips.com/2013/09/29/the-python-yield-keyword-explained/
http://stackoverflow.com/questions/231767/what-does-the-yield-keyword-do

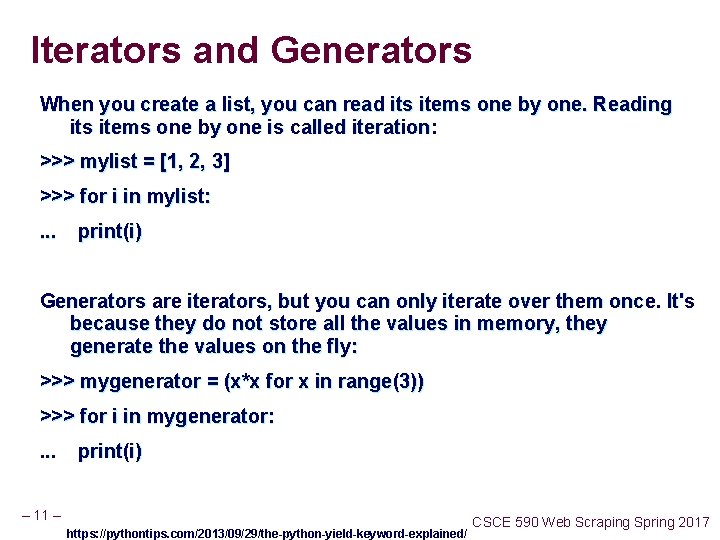

Iterators and Generators
When you create a list, you can read its items one by one. Reading its items one by one is called iteration:

    >>> mylist = [1, 2, 3]
    >>> for i in mylist:
    ...     print(i)

Generators are iterators, but you can only iterate over them once. That is because they do not store all the values in memory; they generate the values on the fly:

    >>> mygenerator = (x*x for x in range(3))
    >>> for i in mygenerator:
    ...     print(i)

https://pythontips.com/2013/09/29/the-python-yield-keyword-explained/
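The "only once" point is easiest to see by looping twice over a list and twice over a generator. A minimal sketch, with variable names chosen only for illustration:

```python
squares_list = [x * x for x in range(3)]   # all values stored in memory
squares_gen = (x * x for x in range(3))    # values produced on demand

list_first_pass = [value for value in squares_list]
list_second_pass = [value for value in squares_list]

gen_first_pass = [value for value in squares_gen]
gen_second_pass = [value for value in squares_gen]  # generator is already exhausted

print(list_first_pass, list_second_pass)
print(gen_first_pass, gen_second_pass)
```

The second pass over the generator yields nothing, because the values were never stored. To iterate again, create a new generator.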

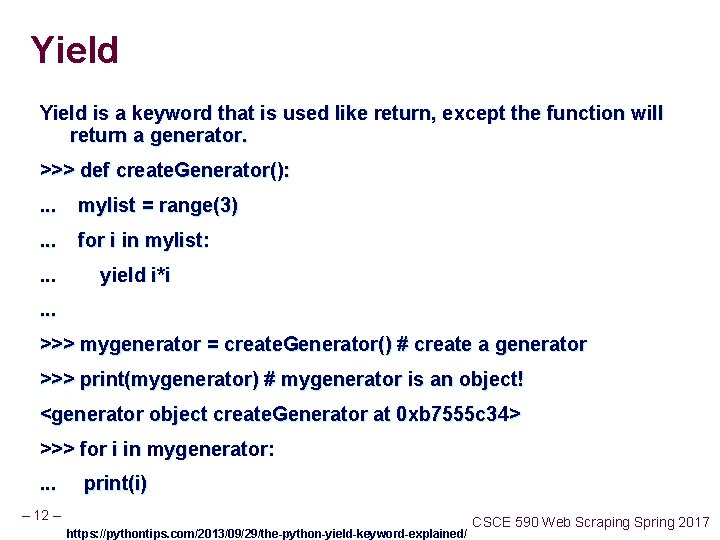

Yield is a keyword that is used like return, except the function will return a generator.

    >>> def createGenerator():
    ...     mylist = range(3)
    ...     for i in mylist:
    ...         yield i*i
    ...
    >>> mygenerator = createGenerator()  # create a generator
    >>> print(mygenerator)  # mygenerator is an object!
    <generator object createGenerator at 0xb7555c34>
    >>> for i in mygenerator:
    ...     print(i)

https://pythontips.com/2013/09/29/the-python-yield-keyword-explained/
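One detail that is easy to miss: calling a function that contains `yield` does not run its body. The body runs only as values are requested. The sketch below records when the body starts and finishes; the `events` list and the function name are illustrative, not from the slides.

```python
events = []

def create_generator():
    events.append("body started")
    for i in range(3):
        yield i * i
    events.append("body finished")

generator = create_generator()
events_after_call = list(events)    # nothing has run yet

first = next(generator)             # runs the body up to the first yield
events_after_first = list(events)

remaining = list(generator)         # resumes after the yield and runs to the end

print(events_after_call, first, events_after_first, remaining, events)
```

Each `next()` resumes the function where it last yielded, which is why a generator can produce a long sequence without holding all of it in memory.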

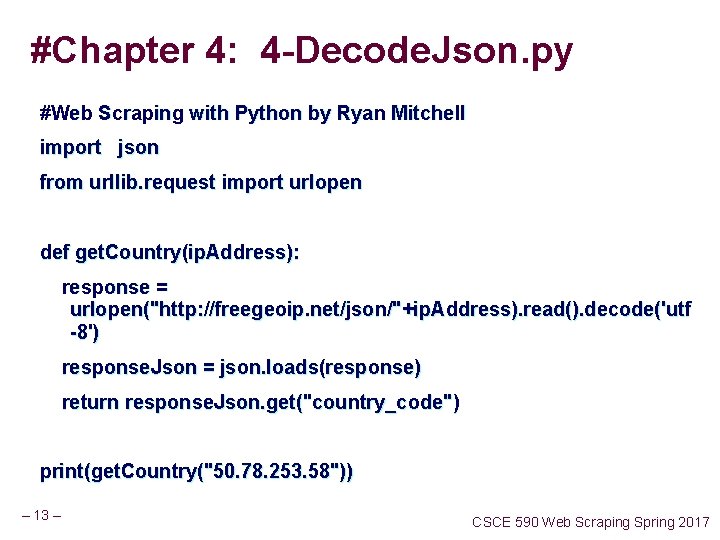

    #Chapter 4: 4-DecodeJson.py
    #Web Scraping with Python by Ryan Mitchell
    import json
    from urllib.request import urlopen

    def getCountry(ipAddress):
        response = urlopen("http://freegeoip.net/json/"+ipAddress).read().decode('utf-8')
        responseJson = json.loads(response)
        return responseJson.get("country_code")

    print(getCountry("50.78.253.58"))

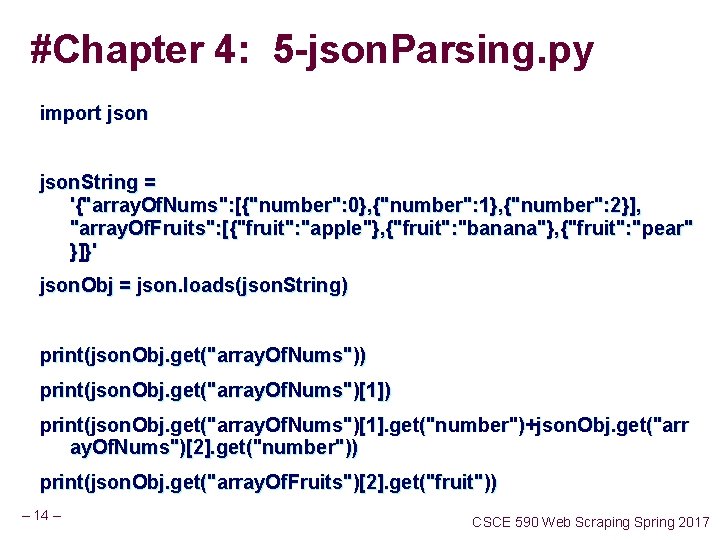

    #Chapter 4: 5-jsonParsing.py
    import json

    jsonString = '{"arrayOfNums": [{"number": 0}, {"number": 1}, {"number": 2}], "arrayOfFruits": [{"fruit": "apple"}, {"fruit": "banana"}, {"fruit": "pear"}]}'
    jsonObj = json.loads(jsonString)

    print(jsonObj.get("arrayOfNums")[1])
    print(jsonObj.get("arrayOfNums")[1].get("number")+jsonObj.get("arrayOfNums")[2].get("number"))
    print(jsonObj.get("arrayOfFruits")[2].get("fruit"))

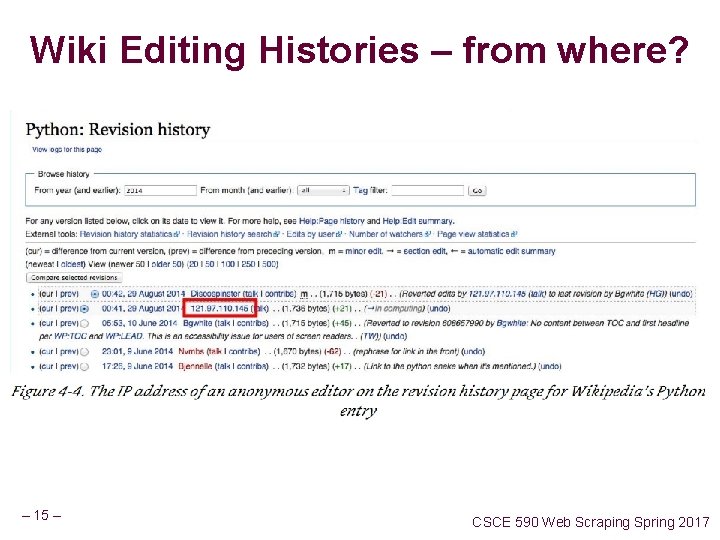

Wiki Editing Histories – from where?

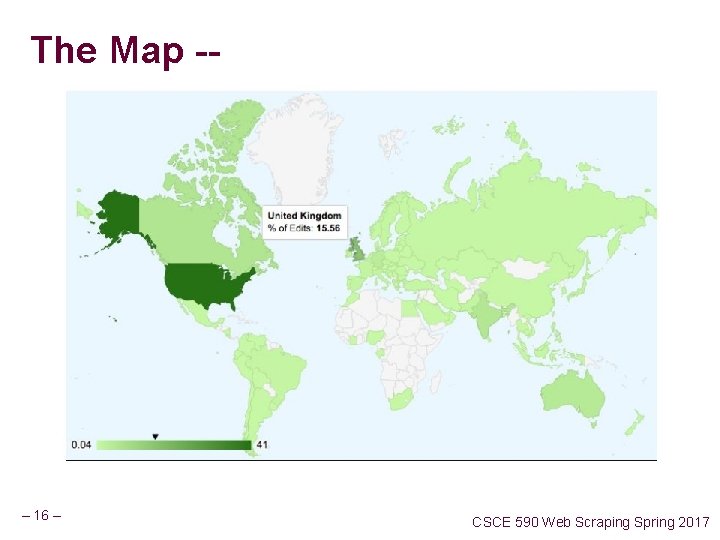

The Map

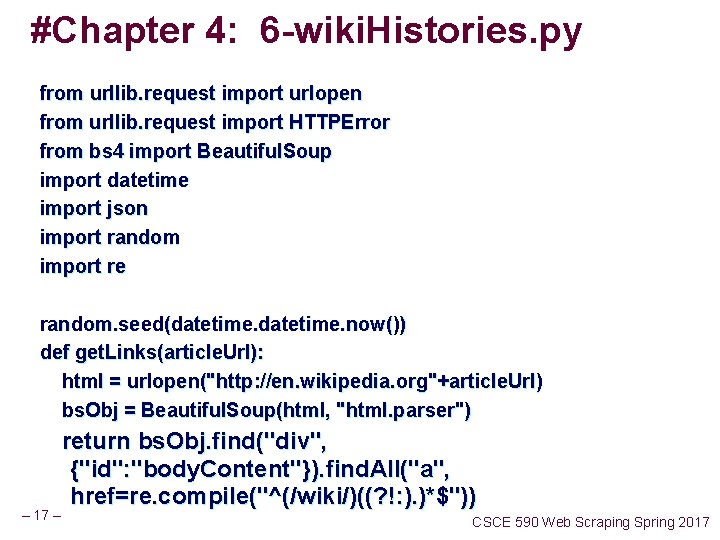

    #Chapter 4: 6-wikiHistories.py
    from urllib.request import urlopen
    from urllib.error import HTTPError
    from bs4 import BeautifulSoup
    import datetime
    import json
    import random
    import re

    # now() lives on datetime.datetime, and newer Python versions
    # reject a datetime object as a seed, so seed with a number.
    random.seed(datetime.datetime.now().timestamp())

    def getLinks(articleUrl):
        html = urlopen("http://en.wikipedia.org"+articleUrl)
        bsObj = BeautifulSoup(html, "html.parser")
        return bsObj.find("div", {"id": "bodyContent"}).findAll("a", href=re.compile("^(/wiki/)((?!:).)*$"))

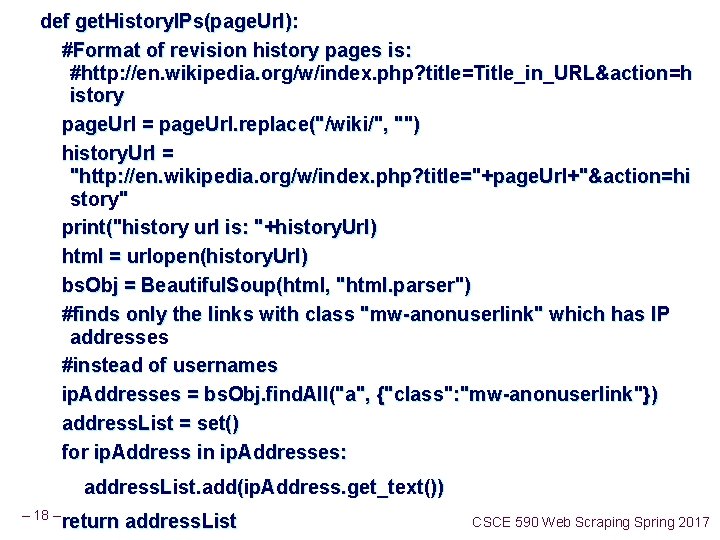

    def getHistoryIPs(pageUrl):
        #Format of revision history pages is:
        #http://en.wikipedia.org/w/index.php?title=Title_in_URL&action=history
        pageUrl = pageUrl.replace("/wiki/", "")
        historyUrl = "http://en.wikipedia.org/w/index.php?title="+pageUrl+"&action=history"
        print("history url is: "+historyUrl)
        html = urlopen(historyUrl)
        bsObj = BeautifulSoup(html, "html.parser")
        #finds only the links with class "mw-anonuserlink" which has IP addresses
        #instead of usernames
        ipAddresses = bsObj.findAll("a", {"class": "mw-anonuserlink"})
        addressList = set()
        for ipAddress in ipAddresses:
            addressList.add(ipAddress.get_text())
        return addressList

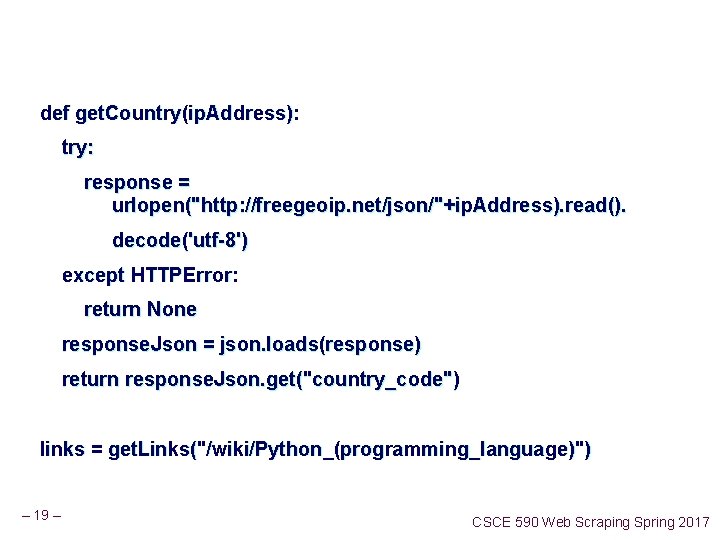

    def getCountry(ipAddress):
        try:
            response = urlopen("http://freegeoip.net/json/"+ipAddress).read().decode('utf-8')
        except HTTPError:
            return None
        responseJson = json.loads(response)
        return responseJson.get("country_code")

    links = getLinks("/wiki/Python_(programming_language)")

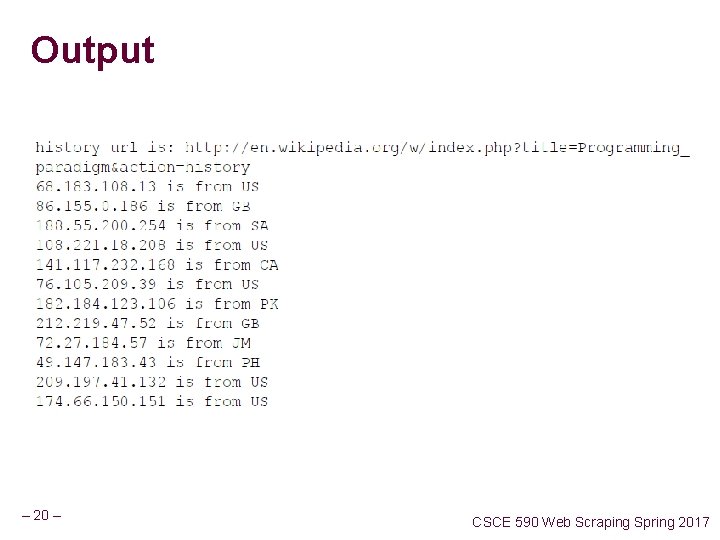

Output

Chapter 5 - Storing Data
§ Files
§ CSV files
§ JSON, XML

Downloading images: to copy or not
As you are scraping, do you download images or just store links?
Advantages to not copying?
§ Scrapers run much faster, and require much less bandwidth, when they don’t have to download files.
§ You save space on your own machine by storing only the URLs.
§ It is easier to write code that only stores URLs and doesn’t need to deal with additional file downloads.
§ You can lessen the load on the host server by avoiding large file downloads.

Disadvantages to not copying?
§ Embedding these URLs in your own website or application is known as hotlinking, and doing it is a very quick way to get you in hot water on the Internet.
§ You do not want to use someone else’s server cycles to host media for your own applications.
§ The file hosted at any particular URL is subject to change. This might lead to embarrassing effects if, say, you’re embedding a hotlinked image on a public blog.
§ If you’re storing the URLs with the intent to store the file later, for further research, it might eventually go missing or be changed to something completely irrelevant at a later date.
Mitchell, Ryan. Web Scraping with Python: Collecting Data from the Modern Web (Kindle Locations 1877-1882). O'Reilly Media. Kindle Edition.

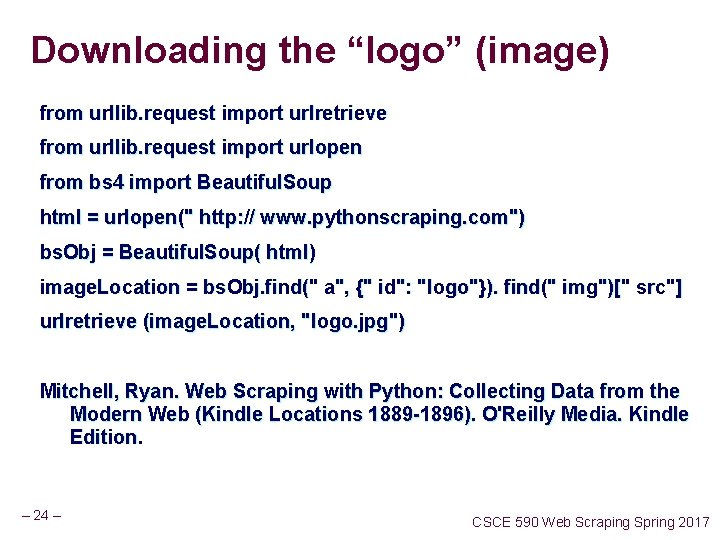

Downloading the “logo” (image)

    from urllib.request import urlretrieve
    from urllib.request import urlopen
    from bs4 import BeautifulSoup

    html = urlopen("http://www.pythonscraping.com")
    bsObj = BeautifulSoup(html)
    imageLocation = bsObj.find("a", {"id": "logo"}).find("img")["src"]
    urlretrieve(imageLocation, "logo.jpg")

Mitchell, Ryan. Web Scraping with Python: Collecting Data from the Modern Web (Kindle Locations 1889-1896). O'Reilly Media. Kindle Edition.

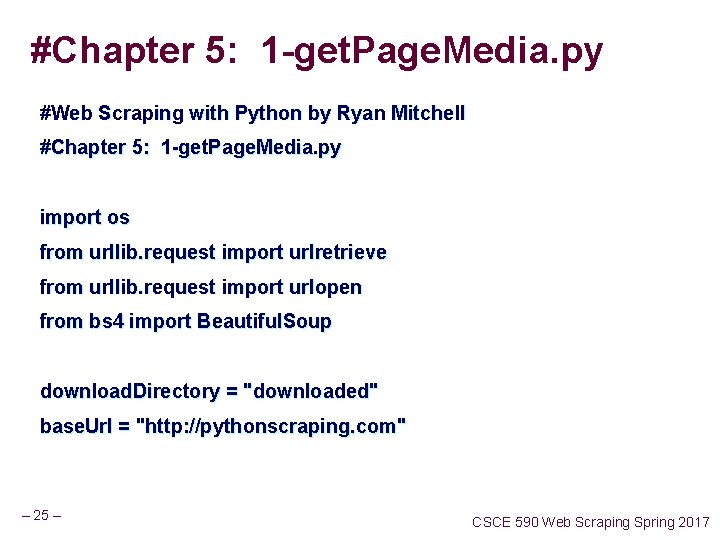

    #Chapter 5: 1-getPageMedia.py
    #Web Scraping with Python by Ryan Mitchell
    import os
    from urllib.request import urlretrieve
    from urllib.request import urlopen
    from bs4 import BeautifulSoup

    downloadDirectory = "downloaded"
    baseUrl = "http://pythonscraping.com"

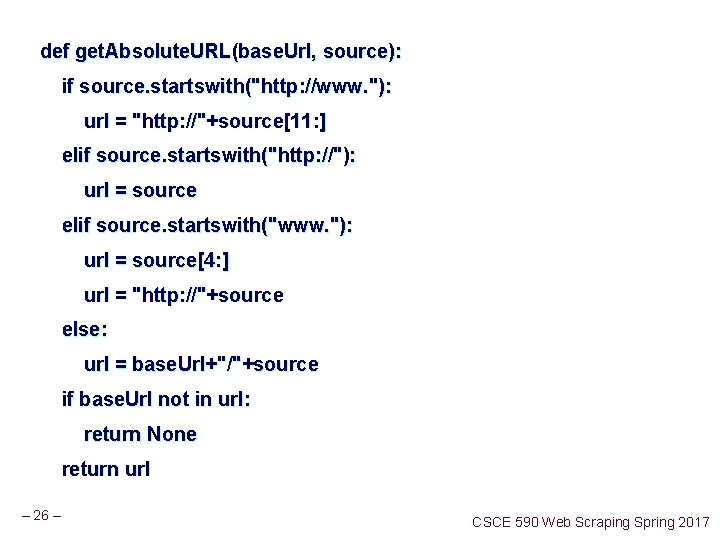

    def getAbsoluteURL(baseUrl, source):
        if source.startswith("http://www."):
            url = "http://"+source[11:]
        elif source.startswith("http://"):
            url = source
        elif source.startswith("www."):
            # strip the "www." prefix so the baseUrl check below can match
            url = "http://"+source[4:]
        else:
            url = baseUrl+"/"+source
        if baseUrl not in url:
            return None
        return url
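The prefix checks in `getAbsoluteURL` can also be expressed with the standard library's URL tools. The sketch below is an alternative, not the book's method: `absolute_url_same_site` and `strip_www` are hypothetical names, and treating `www.` and bare hosts as the same site is an assumption carried over from the slide's code.

```python
from urllib.parse import urljoin, urlparse

def strip_www(host):
    """Treat 'www.example.com' and 'example.com' as the same host."""
    return host[4:] if host.startswith("www.") else host

def absolute_url_same_site(base_url, source):
    """Resolve source against base_url; return None for off-site files."""
    absolute = urljoin(base_url + "/", source)
    base_host = strip_www(urlparse(base_url).netloc)
    file_host = strip_www(urlparse(absolute).netloc)
    if file_host != base_host:
        return None
    return absolute

base = "http://pythonscraping.com"
print(absolute_url_same_site(base, "img/logo.jpg"))
print(absolute_url_same_site(base, "/img/logo.jpg"))
print(absolute_url_same_site(base, "http://www.pythonscraping.com/a.png"))
print(absolute_url_same_site(base, "http://example.com/x.js"))
```

Unlike the string-prefix version, `urljoin` also handles root-relative paths such as `/img/logo.jpg` and protocol-relative sources such as `//cdn.example.com/x.js`.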

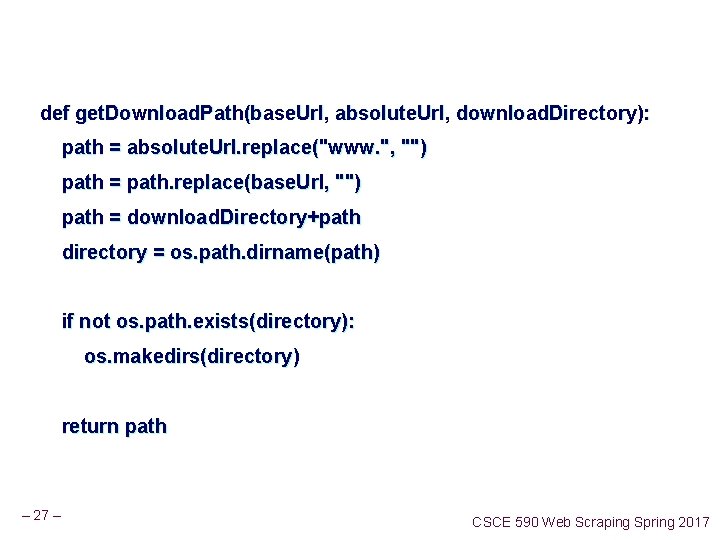

    def getDownloadPath(baseUrl, absoluteUrl, downloadDirectory):
        path = absoluteUrl.replace("www.", "")
        path = path.replace(baseUrl, "")
        path = downloadDirectory+path
        directory = os.path.dirname(path)
        if not os.path.exists(directory):
            os.makedirs(directory)
        return path

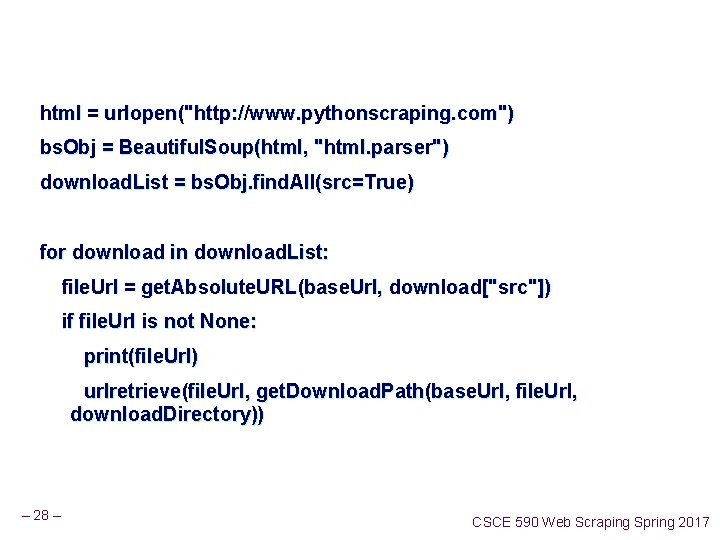

    html = urlopen("http://www.pythonscraping.com")
    bsObj = BeautifulSoup(html, "html.parser")
    downloadList = bsObj.findAll(src=True)
    for download in downloadList:
        fileUrl = getAbsoluteURL(baseUrl, download["src"])
        if fileUrl is not None:
            print(fileUrl)
            urlretrieve(fileUrl, getDownloadPath(baseUrl, fileUrl, downloadDirectory))

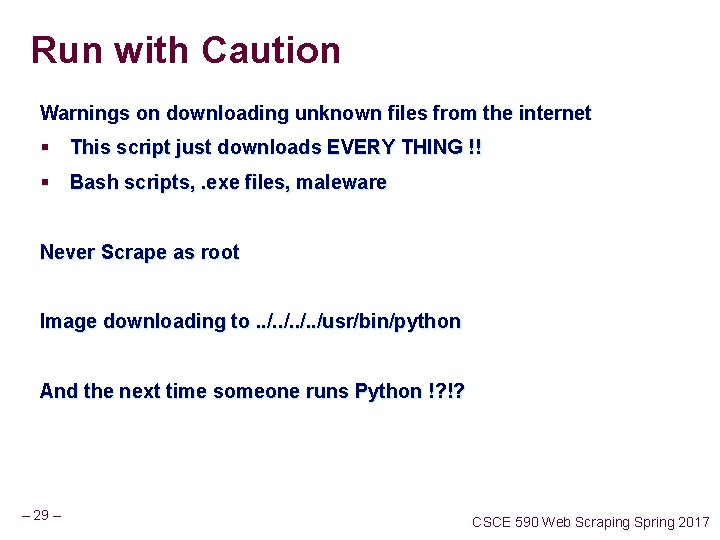

Run with Caution
Warnings on downloading unknown files from the internet
§ This script just downloads EVERYTHING!!
§ Bash scripts, .exe files, malware
Never scrape as root
§ Image downloading to ../usr/bin/python
§ And the next time someone runs Python!?!?
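Two cheap guards address the warnings above: only download file types you expect, and refuse any path that would land outside the download directory. This is a sketch under assumptions, not part of the book's script. The allow-list and both function names are hypothetical, and an extension check alone does not prove that a file is harmless.

```python
import os
import posixpath
from urllib.parse import urlparse

ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif"}  # assumed allow-list

def has_allowed_extension(file_url):
    """Check the extension of the URL path, ignoring any query string."""
    extension = posixpath.splitext(urlparse(file_url).path)[1].lower()
    return extension in ALLOWED_EXTENSIONS

def resolve_inside(download_directory, relative_path):
    """Return the target path, or None if it escapes download_directory."""
    root = os.path.realpath(download_directory)
    target = os.path.realpath(os.path.join(root, relative_path))
    if os.path.commonpath([root, target]) != root:
        return None
    return target

print(has_allowed_extension("http://pythonscraping.com/img/logo.JPG"))
print(has_allowed_extension("http://pythonscraping.com/setup.exe"))
print(resolve_inside("downloaded", "img/logo.jpg"))
print(resolve_inside("downloaded", "../usr/bin/python"))
```

A scraper could call both checks before `urlretrieve` and skip any file that fails either one. Running the scraper as an unprivileged user remains the more important safeguard.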

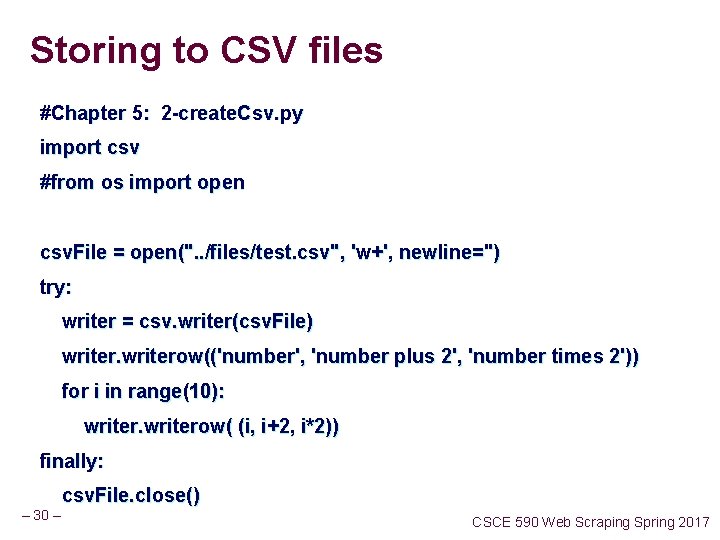

Storing to CSV files

    #Chapter 5: 2-createCsv.py
    import csv
    #from os import open

    csvFile = open("../files/test.csv", 'w+', newline='')
    try:
        writer = csv.writer(csvFile)
        writer.writerow(('number', 'number plus 2', 'number times 2'))
        for i in range(10):
            writer.writerow((i, i+2, i*2))
    finally:
        csvFile.close()
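The same table can be written with a `with` block, which closes the file even if a row fails, so the `try`/`finally` is no longer needed. The sketch below writes to a temporary directory instead of `../files/` (an assumption made so that it runs anywhere) and reads the file back to show what was stored.

```python
import csv
import os
import tempfile

with tempfile.TemporaryDirectory() as directory:
    csv_path = os.path.join(directory, "test.csv")

    # newline='' lets the csv module control line endings itself.
    with open(csv_path, "w", newline="") as csv_file:
        writer = csv.writer(csv_file)
        writer.writerow(("number", "number plus 2", "number times 2"))
        for i in range(10):
            writer.writerow((i, i + 2, i * 2))

    # Read the file back; every cell comes back as a string.
    with open(csv_path, newline="") as csv_file:
        rows = list(csv.reader(csv_file))

print(rows[:3])
```

Note that the numbers are read back as strings. CSV stores no type information, so convert them explicitly when needed.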

Retrieving HTML tables
§ Doing it once: use Excel and save as CSV
§ Doing it 50 times: write a Python script

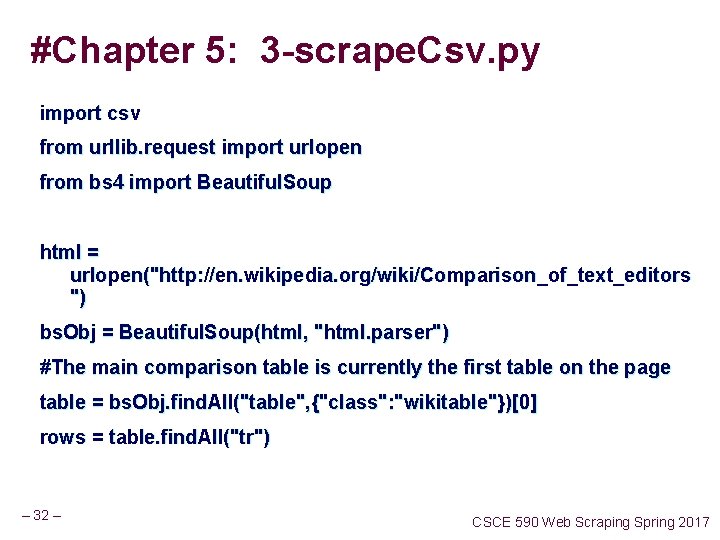

    #Chapter 5: 3-scrapeCsv.py
    import csv
    from urllib.request import urlopen
    from bs4 import BeautifulSoup

    html = urlopen("http://en.wikipedia.org/wiki/Comparison_of_text_editors")
    bsObj = BeautifulSoup(html, "html.parser")
    #The main comparison table is currently the first table on the page
    table = bsObj.findAll("table", {"class": "wikitable"})[0]
    rows = table.findAll("tr")

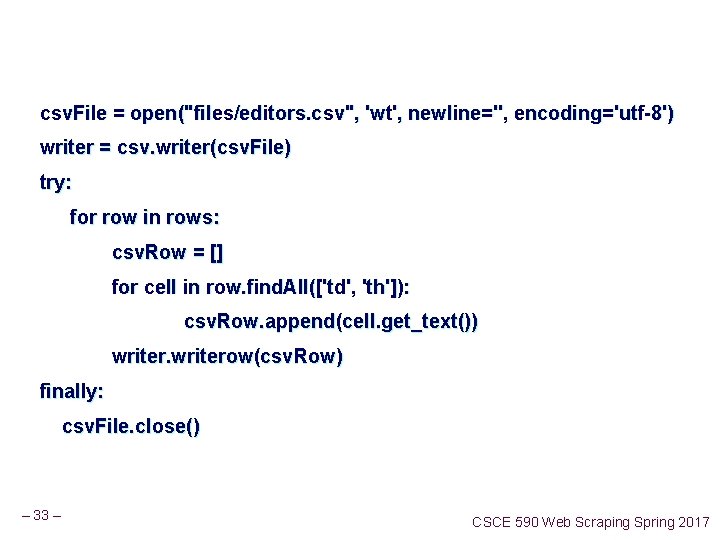

    csvFile = open("files/editors.csv", 'wt', newline='', encoding='utf-8')
    writer = csv.writer(csvFile)
    try:
        for row in rows:
            csvRow = []
            for cell in row.findAll(['td', 'th']):
                csvRow.append(cell.get_text())
            writer.writerow(csvRow)
    finally:
        csvFile.close()

Storing in Databases
§ MySQL
§ Microsoft’s SQL Server
§ Oracle’s DBMS
Why use MySQL?
§ Free
§ Used by the big boys: YouTube, Twitter, Facebook
§ So ubiquity, price, “out of the box usability”

Relational Databases

SQL – Structured Query Language?

    SELECT * FROM users WHERE firstname = "Ryan"

Installing

    $ sudo apt-get install mysql-server

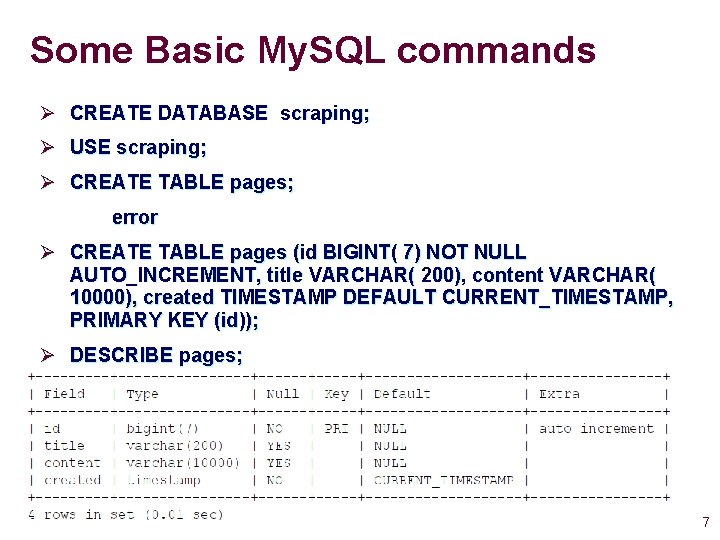

Some Basic MySQL commands

    > CREATE DATABASE scraping;
    > USE scraping;
    > CREATE TABLE pages;    -- error: a table must have at least one column
    > CREATE TABLE pages (id BIGINT(7) NOT NULL AUTO_INCREMENT, title VARCHAR(200), content VARCHAR(10000), created TIMESTAMP DEFAULT CURRENT_TIMESTAMP, PRIMARY KEY (id));
    > DESCRIBE pages;

    > INSERT INTO pages (title, content) VALUES ("Test page title", "This is some test page content. It can be up to 10,000 characters long.");

Of course, we can override these defaults (the auto-incremented id and the created timestamp):

    > INSERT INTO pages (id, title, content, created) VALUES (3, "Test page title", "This is some test page content. It can be up to 10,000 characters long.", "2014-09-21 10:25:32");

Mitchell, Ryan. Web Scraping with Python: Collecting Data from the Modern Web (Kindle Locations 2097-2101). O'Reilly Media. Kindle Edition.

    > SELECT * FROM pages WHERE id = 2;
    > SELECT * FROM pages WHERE title LIKE "%test%";
    > SELECT id, title FROM pages WHERE content LIKE "%page content%";

Mitchell, Ryan. Web Scraping with Python: Collecting Data from the Modern Web (Kindle Locations 2106-2115). O'Reilly Media. Kindle Edition.
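The INSERT and SELECT statements above can be tried without installing a server. The sketch below swaps in Python's built-in `sqlite3` with an in-memory database, so it is a stand-in for MySQL and not the lecture's setup. The `id` column uses SQLite's `INTEGER PRIMARY KEY AUTOINCREMENT` in place of `BIGINT(7) ... AUTO_INCREMENT`, and values are passed as `?` parameters rather than written into the SQL text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # assumption: SQLite stand-in, not MySQL
cur = conn.cursor()
cur.execute(
    "CREATE TABLE pages ("
    "id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "title VARCHAR(200), "
    "content VARCHAR(10000), "
    "created TIMESTAMP DEFAULT CURRENT_TIMESTAMP)"
)

# id and created are filled in by their defaults.
cur.execute(
    "INSERT INTO pages (title, content) VALUES (?, ?)",
    ("Test page title", "This is some test page content."),
)
# Both defaults are overridden explicitly.
cur.execute(
    "INSERT INTO pages (id, title, content, created) VALUES (?, ?, ?, ?)",
    (3, "Test page title", "This is some test page content.", "2014-09-21 10:25:32"),
)
conn.commit()

cur.execute(
    "SELECT id, title FROM pages WHERE content LIKE ? ORDER BY id",
    ("%page content%",),
)
matches = cur.fetchall()

cur.execute("SELECT created FROM pages WHERE id = ?", (3,))
overridden = cur.fetchone()

print(matches, overridden)
cur.close()
conn.close()
```

Passing values as parameters keeps scraped text, which may contain quotes, from breaking the statement. PyMySQL supports the same idea with `%s` placeholders.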

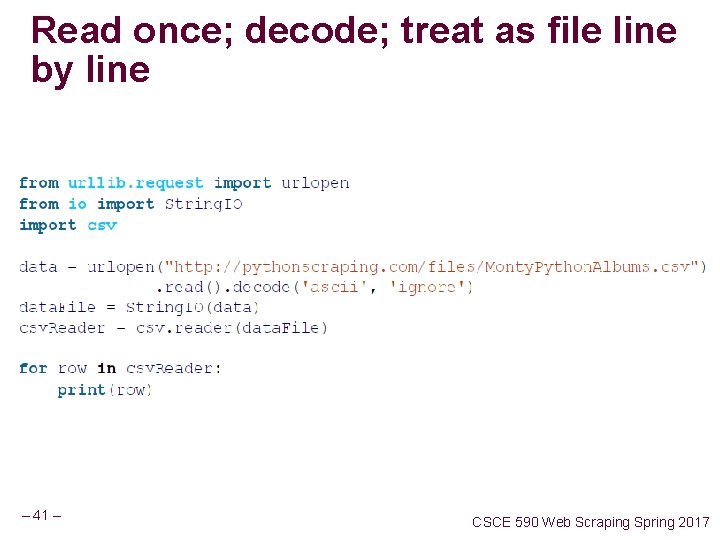

Read once; decode; treat as file line by line
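The three steps on this slide can be sketched as follows, assuming it refers to reading a remote CSV file without saving it to disk. The `raw_bytes` value is a made-up stand-in for what `urlopen(...).read()` would return, so that the example runs offline.

```python
import csv
from io import StringIO

# Step 1: read once. Hypothetical stand-in for urlopen(url).read().
raw_bytes = b"Name,Year\nMonty Python's Flying Circus,1970\n"

# Step 2: decode the bytes to text.
text = raw_bytes.decode("ascii", "ignore")

# Step 3: wrap the text so it behaves like an open file.
data_file = StringIO(text)
rows = [row for row in csv.reader(data_file)]

print(rows)
```

`StringIO` gives `csv.reader` the file-like object it expects, so the data never has to touch the disk.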

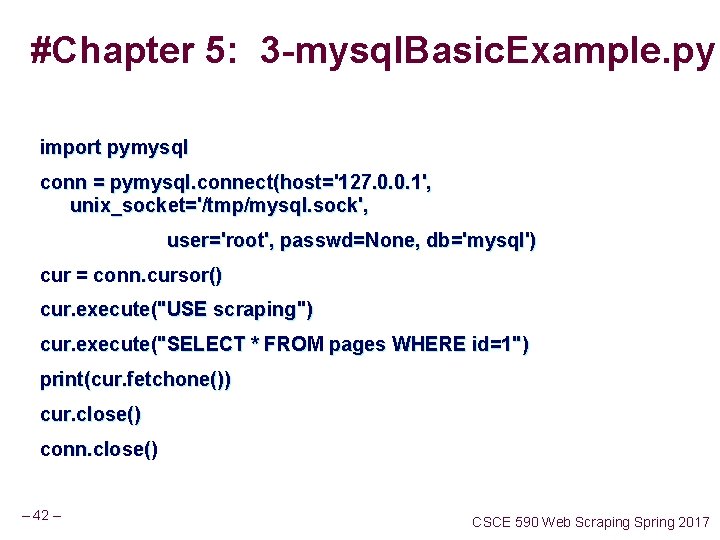

    #Chapter 5: 3-mysqlBasicExample.py
    import pymysql

    conn = pymysql.connect(host='127.0.0.1', unix_socket='/tmp/mysql.sock', user='root', passwd=None, db='mysql')
    cur = conn.cursor()
    cur.execute("USE scraping")
    cur.execute("SELECT * FROM pages WHERE id=1")
    print(cur.fetchone())
    cur.close()
    conn.close()

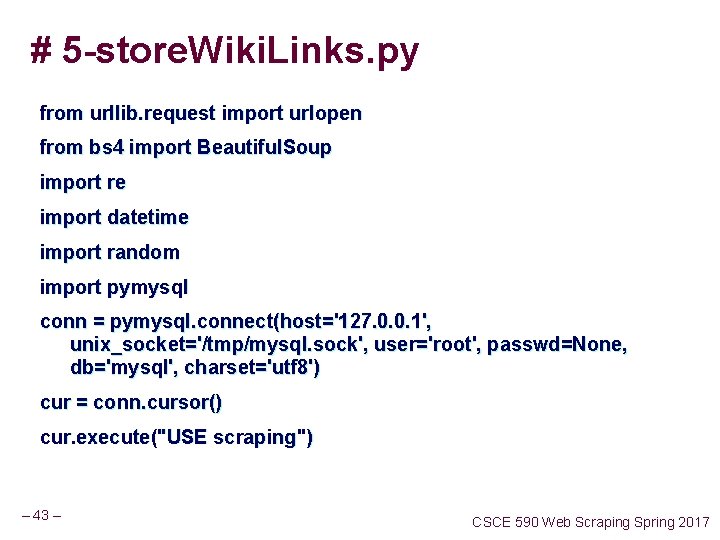

    # 5-storeWikiLinks.py
    from urllib.request import urlopen
    from bs4 import BeautifulSoup
    import re
    import datetime
    import random
    import pymysql

    conn = pymysql.connect(host='127.0.0.1', unix_socket='/tmp/mysql.sock', user='root', passwd=None, db='mysql', charset='utf8')
    cur = conn.cursor()
    cur.execute("USE scraping")

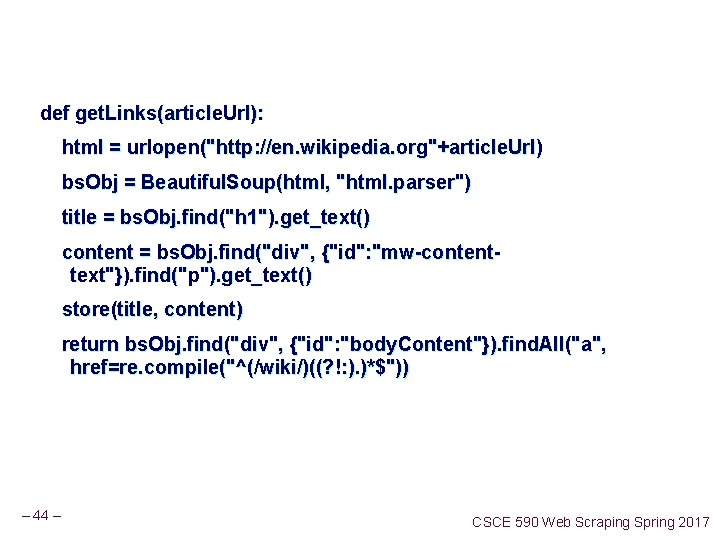

    def getLinks(articleUrl):
        html = urlopen("http://en.wikipedia.org"+articleUrl)
        bsObj = BeautifulSoup(html, "html.parser")
        title = bsObj.find("h1").get_text()
        content = bsObj.find("div", {"id": "mw-content-text"}).find("p").get_text()
        # store() writes the title and content to the pages table;
        # its definition is not shown on these slides
        store(title, content)
        return bsObj.find("div", {"id": "bodyContent"}).findAll("a", href=re.compile("^(/wiki/)((?!:).)*$"))

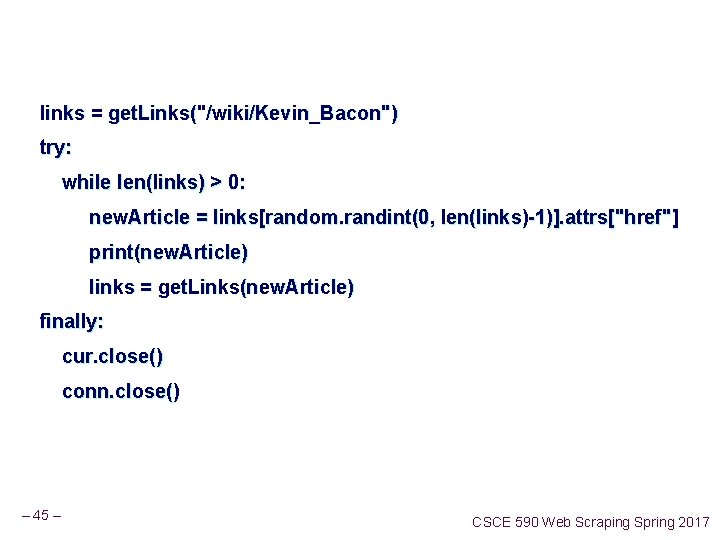

    links = getLinks("/wiki/Kevin_Bacon")
    try:
        while len(links) > 0:
            newArticle = links[random.randint(0, len(links)-1)].attrs["href"]
            print(newArticle)
            links = getLinks(newArticle)
    finally:
        cur.close()
        conn.close()

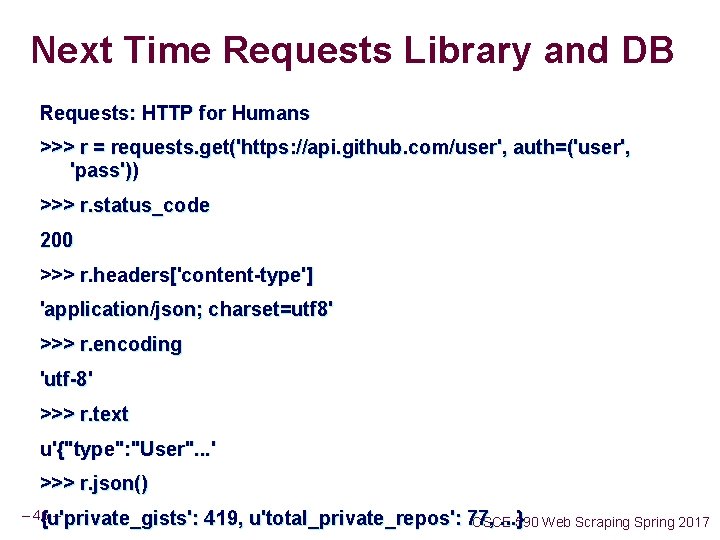

Next Time: Requests Library and DB
Requests: HTTP for Humans

    >>> r = requests.get('https://api.github.com/user', auth=('user', 'pass'))
    >>> r.status_code
    200
    >>> r.headers['content-type']
    'application/json; charset=utf8'
    >>> r.encoding
    'utf-8'
    >>> r.text
    u'{"type":"User"...'
    >>> r.json()
    {u'private_gists': 419, u'total_private_repos': 77, ...}

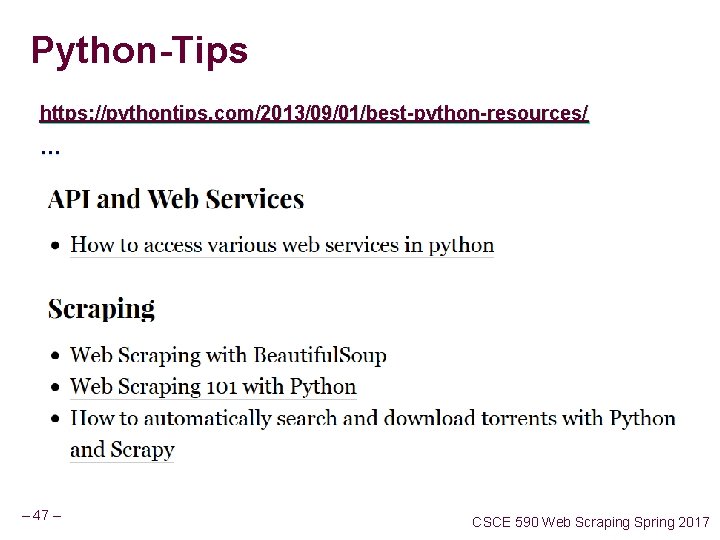

Python-Tips
https://pythontips.com/2013/09/01/best-python-resources/ …
