
Web Image Retrieval Re-Ranking with Relevance Model
Wei-Hao Lin, Rong Jin, Alexander Hauptmann
Language Technologies Institute, School of Computer Science, Carnegie Mellon University
International Conference on Web Intelligence (WIC ’03)
Presented by Chu Huei-Ming, 2005/02/24

Reference
• Relevance Models in Information Retrieval
– Victor Lavrenko and W. Bruce Croft
– Center for Intelligent Information Retrieval, Department of Computer Science, University of Massachusetts (UMass)
– pp. 11-56, 2003 Kluwer Academic Publishers. Printed in the Netherlands

Outline
• Introduction
• Relevance Model
• Web image retrieval re-ranking
• Estimating a Relevance Model
– Estimation from a set of examples
– Estimation without examples
• Ranking Criterion
• Experiment
• Conclusion

Introduction (1/2)
• Most current large-scale web image search engines exploit text and link structure to “understand” the content of web images.
• This paper proposes a re-ranking method that improves web image retrieval by reordering the images retrieved from an image search engine.
• The re-ranking process is based on a relevance model.

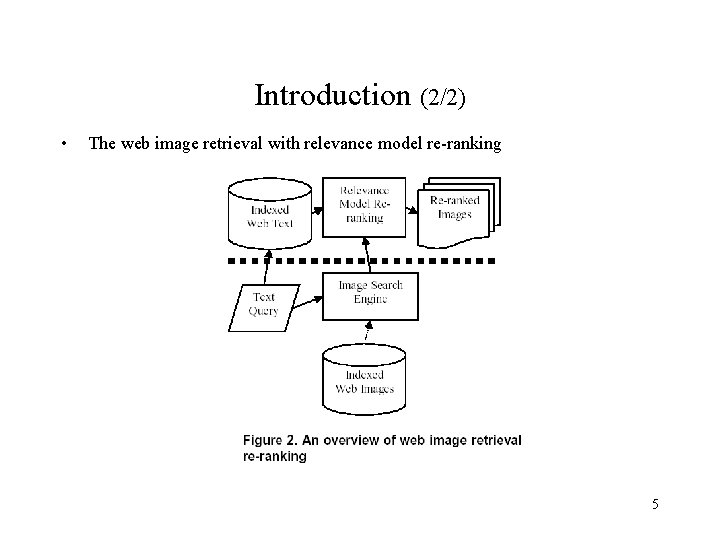

Introduction (2/2)
• Web image retrieval with relevance model re-ranking

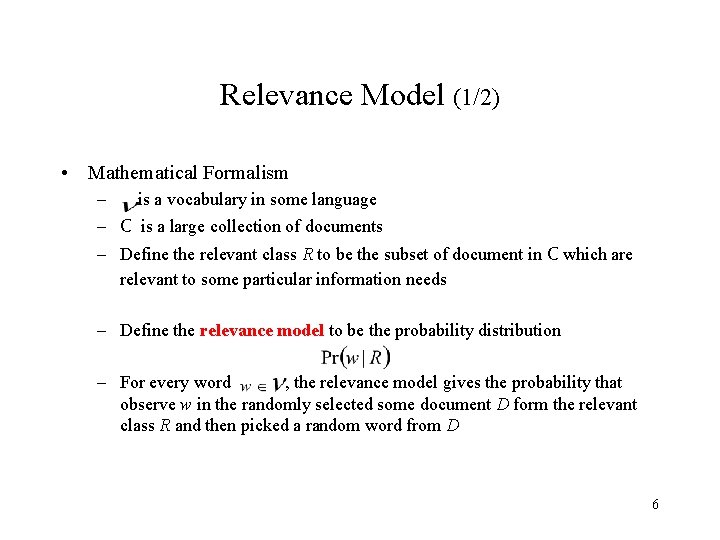

Relevance Model (1/2)
• Mathematical formalism
– V is a vocabulary in some language
– C is a large collection of documents
– Define the relevant class R to be the subset of documents in C that are relevant to some particular information need
– Define the relevance model to be the probability distribution P(w|R)
– For every word w in V, the relevance model gives the probability of observing w when we randomly select a document D from the relevant class R and then pick a random word from D

Relevance Model (2/2)
• An important issue in IR is capturing the topic discussed in a sample of text, and to that end unigram models fare quite well.
• The choice of estimation technique has a particularly strong influence on the quality of relevance models.

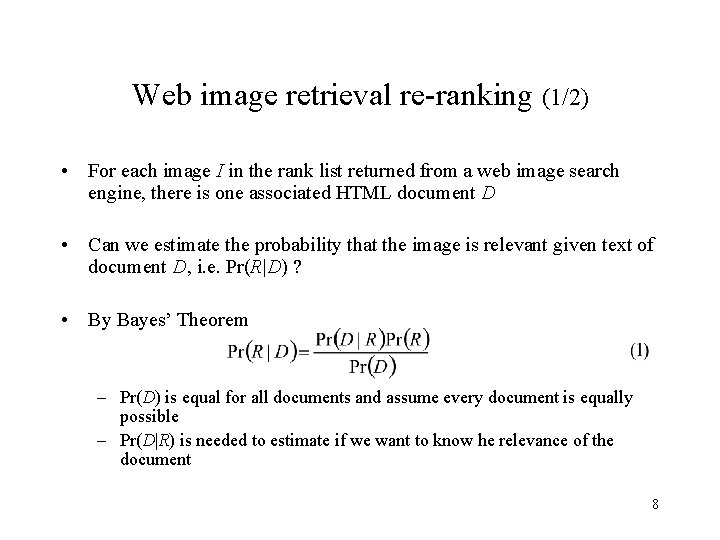

Web image retrieval re-ranking (1/2)
• For each image I in the ranked list returned by a web image search engine, there is one associated HTML document D
• Can we estimate the probability that the image is relevant given the text of document D, i.e. Pr(R|D)?
• By Bayes’ Theorem: Pr(R|D) = Pr(D|R) Pr(R) / Pr(D)
– Pr(D) is the same for all documents if we assume every document is equally likely
– Pr(D|R) must therefore be estimated if we want to know the relevance of the document

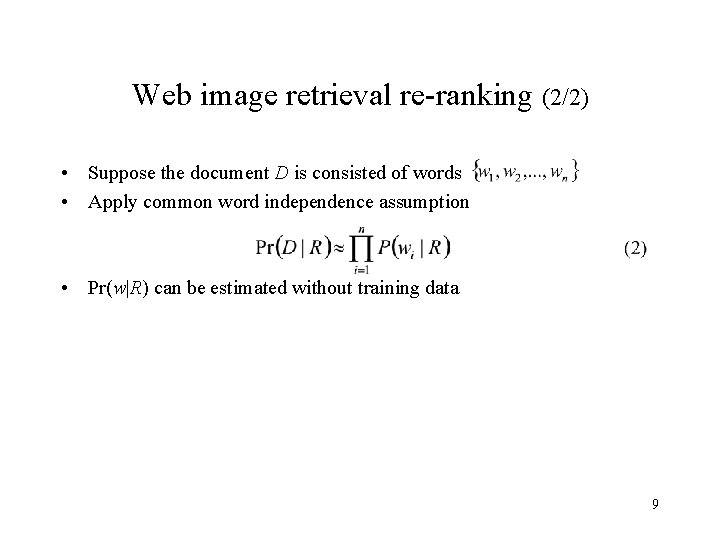

Web image retrieval re-ranking (2/2)
• Suppose the document D consists of the words w1, w2, ..., wn
• Apply the common word independence assumption: Pr(D|R) ≈ Pr(w1|R) Pr(w2|R) ... Pr(wn|R)
• Pr(w|R) can be estimated without training data
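The word independence assumption on this slide can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function name, the toy relevance model, and the `floor` value for unseen words are all assumptions made for the example. Log-probabilities are used so that long products do not underflow.

```python
import math

def log_prob_doc_given_relevance(words, relevance_model, floor=1e-12):
    """Return log Pr(D|R) = sum_i log Pr(w_i|R) under word independence.

    `floor` is an assumed stand-in probability for words missing from the
    model, used only to avoid log(0) in this sketch.
    """
    return sum(math.log(relevance_model.get(w, floor)) for w in words)

# Hypothetical relevance model over a three-word vocabulary.
relevance_model = {"tiger": 0.5, "zoo": 0.3, "car": 0.2}

short_doc = ["tiger", "zoo"]
long_doc = ["tiger", "zoo", "tiger", "zoo"]  # same word mix, twice as long

short_score = log_prob_doc_given_relevance(short_doc, relevance_model)
long_score = log_prob_doc_given_relevance(long_doc, relevance_model)
print(short_score, long_score)
```

Both toy documents have the same mix of words, yet the longer one scores lower, because every additional factor Pr(w|R) is below 1.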

Estimating a Relevance Model
• Estimation from a set of examples
– We have full information about the set R of relevant documents
• Estimation without examples
– We have no examples from which P(w|R) could be estimated directly

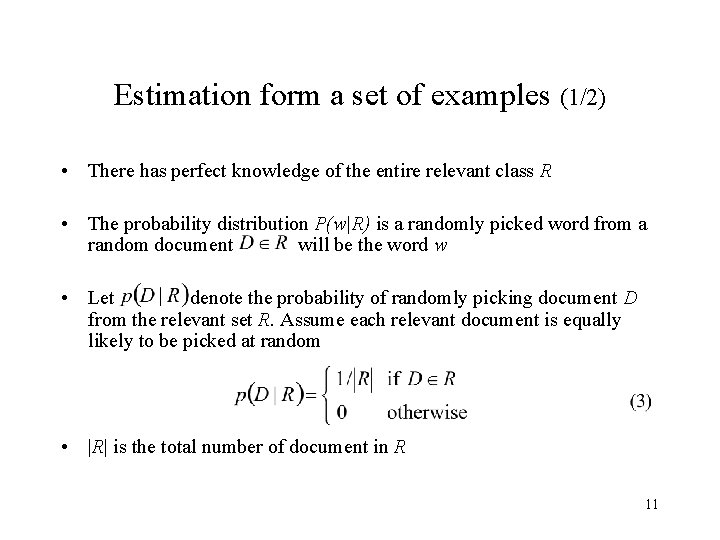

Estimation from a set of examples (1/2)
• We have perfect knowledge of the entire relevant class R
• P(w|R) is the probability that a word picked at random from a random relevant document will be the word w
• Let P(D|R) denote the probability of randomly picking document D from the relevant set R. Assume each relevant document is equally likely to be picked at random: P(D|R) = 1/|R| if D is in R, and 0 otherwise
• |R| is the total number of documents in R

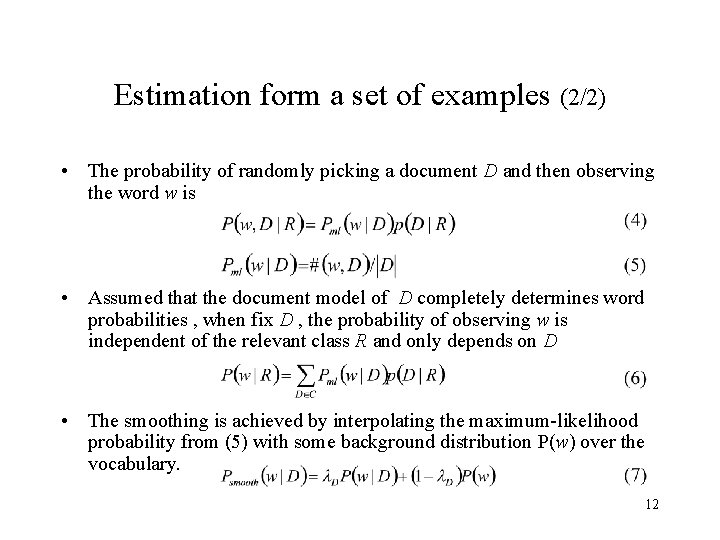

Estimation from a set of examples (2/2)
• The probability of randomly picking a document D and then observing the word w is P(w, D|R) = P(w|D, R) P(D|R)
• Assume that the document model of D completely determines word probabilities: once D is fixed, the probability of observing w is independent of the relevant class R and depends only on D, so P(w|D, R) = P(w|D)
• Smoothing is achieved by interpolating the maximum-likelihood probability from (5) with some background distribution P(w) over the vocabulary
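The two slides above can be put together as a small sketch: smooth each relevant document's maximum-likelihood model against a background distribution, then average the document models with uniform weight 1/|R|. The function names, the toy documents, and the convention that the smoothing weight `lam` multiplies the maximum-likelihood term are assumptions made for illustration.

```python
from collections import Counter

def background_model(collection):
    """Background P(w): relative word frequency over the whole collection."""
    counts = Counter(w for doc in collection for w in doc)
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

def smoothed_doc_model(doc, background, lam):
    """P(w|D) = lam * count(w, D) / |D| + (1 - lam) * P(w).

    Assumption: lam weights the maximum-likelihood term.
    """
    counts = Counter(doc)
    n = len(doc)
    return {w: lam * counts[w] / n + (1 - lam) * p_bg
            for w, p_bg in background.items()}

def relevance_model_from_examples(relevant_docs, background, lam):
    """P(w|R) = sum over D in R of P(w|D) * P(D|R), with P(D|R) = 1/|R|."""
    p_d = 1.0 / len(relevant_docs)
    model = {w: 0.0 for w in background}
    for doc in relevant_docs:
        for w, p in smoothed_doc_model(doc, background, lam).items():
            model[w] += p * p_d
    return model

# Hypothetical toy data: two relevant documents plus one other document.
relevant_docs = [["tiger", "zoo"], ["tiger", "cat"]]
collection = relevant_docs + [["car", "road"]]

background = background_model(collection)
model = relevance_model_from_examples(relevant_docs, background, lam=0.6)
print(model)
```

Because of smoothing, words that never occur in a relevant document (here "car" and "road") still receive a small non-zero probability.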

Estimation without examples (1/6)
• In ad-hoc information retrieval there is only a short 2-3 word query indicative of the user’s information need, and there are no examples of relevant documents

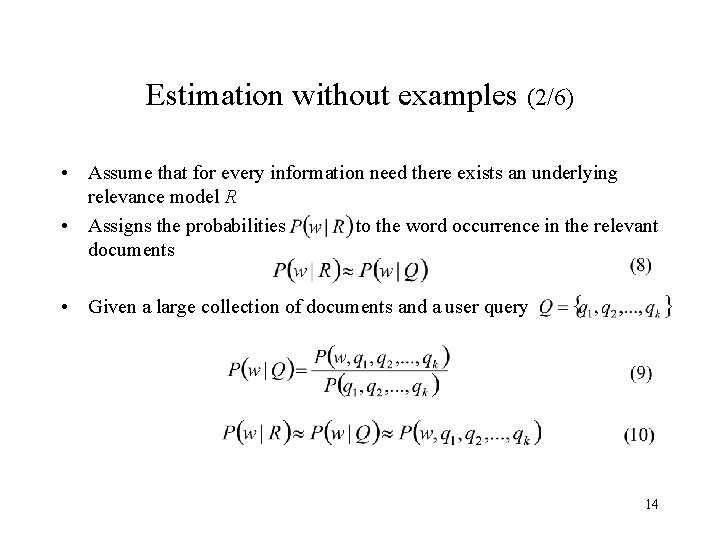

Estimation without examples (2/6)
• Assume that for every information need there exists an underlying relevance model R
• The relevance model assigns the probabilities P(w|R) to word occurrences in the relevant documents
• Given: a large collection of documents and a user query q1 ... qk

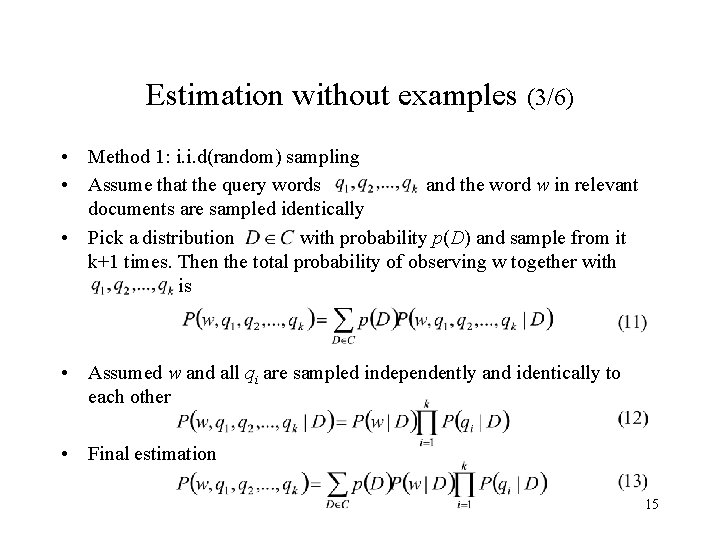

Estimation without examples (3/6)
• Method 1: i.i.d. (random) sampling
• Assume that the query words q1 ... qk and the words w in relevant documents are sampled identically
• Pick a distribution D with probability P(D) and sample from it k+1 times. The total probability of observing w together with q1 ... qk is then P(w, q1 ... qk) = Σ_{D in C} P(D) P(w, q1 ... qk | D)
• Assume that w and all qi are sampled independently and identically, so P(w, q1 ... qk | D) = P(w|D) Π_{i=1..k} P(qi|D)
• Final estimate: P(w, q1 ... qk) = Σ_{D in C} P(D) P(w|D) Π_{i=1..k} P(qi|D)
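A minimal sketch of Method 1, assuming a uniform P(D) and already-smoothed document models stored as dictionaries. The function names and toy numbers are hypothetical. The last function divides the joint probability by its sum over the vocabulary to obtain a distribution over w; that normalization step is not spelled out on the slide and is added here for illustration.

```python
def joint_iid(w, query, doc_models):
    """P(w, q_1..q_k) = sum_D P(D) * P(w|D) * prod_i P(q_i|D), uniform P(D)."""
    p_d = 1.0 / len(doc_models)
    total = 0.0
    for model in doc_models:
        p = p_d * model.get(w, 0.0)
        for q in query:
            p *= model.get(q, 0.0)
        total += p
    return total

def relevance_model_iid(vocabulary, query, doc_models):
    """Normalize the joint over the vocabulary to get P(w | q_1..q_k)."""
    joint = {w: joint_iid(w, query, doc_models) for w in vocabulary}
    norm = sum(joint.values())
    return {w: p / norm for w, p in joint.items()}

# Hypothetical smoothed unigram models for two documents.
doc_models = [
    {"tiger": 0.6, "zoo": 0.3, "car": 0.1},
    {"tiger": 0.1, "zoo": 0.1, "car": 0.8},
]
query = ["tiger"]
vocabulary = ["tiger", "zoo", "car"]

rm = relevance_model_iid(vocabulary, query, doc_models)
print(rm)
```

With the query "tiger", the word "zoo" ends up more probable than "car" even though "car" is more frequent overall, because "zoo" co-occurs with the query word in the same document model.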

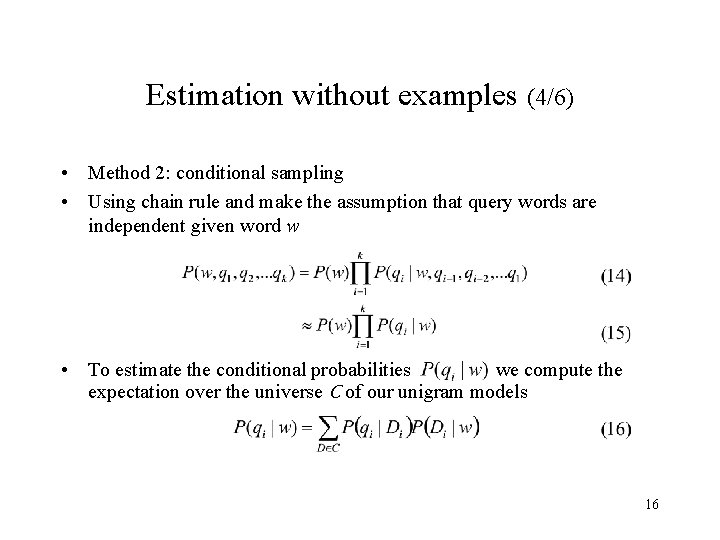

Estimation without examples (4/6)
• Method 2: conditional sampling
• Use the chain rule and assume that the query words are independent given the word w: P(w, q1 ... qk) = P(w) Π_{i=1..k} P(qi|w)
• To estimate the conditional probabilities P(qi|w), compute the expectation over the universe C of our unigram models

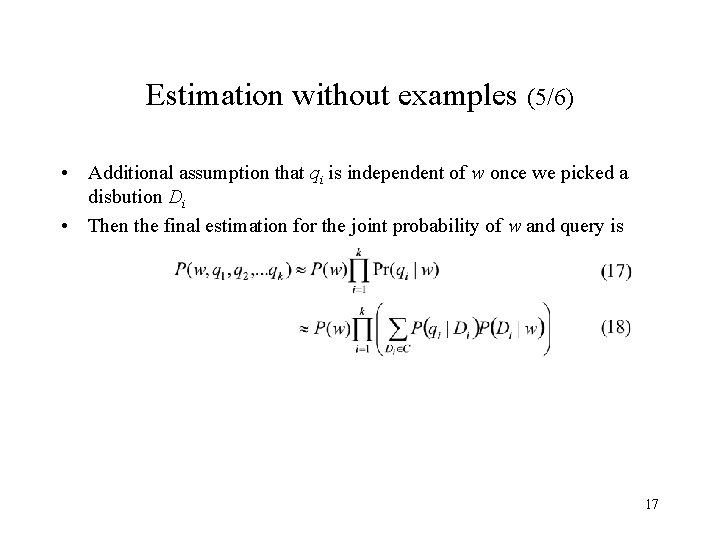

Estimation without examples (5/6)
• Make the additional assumption that qi is independent of w once a distribution Di has been picked
• The final estimate for the joint probability of w and the query is then P(w, q1 ... qk) = P(w) Π_{i=1..k} Σ_{Di in C} P(Di|w) P(qi|Di)

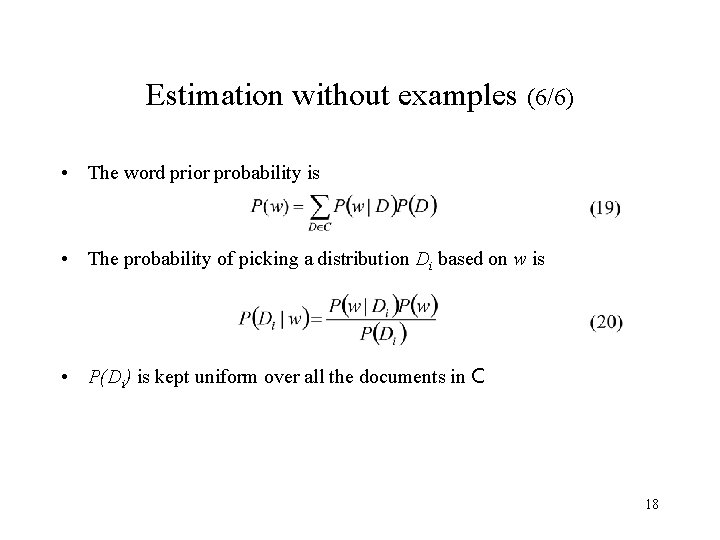

Estimation without examples (6/6)
• The word prior probability is P(w) = Σ_{D in C} P(w|D) P(D)
• The probability of picking a distribution Di based on w is P(Di|w) = P(w|Di) P(Di) / P(w)
• P(Di) is kept uniform over all the documents in C
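The three slides on Method 2 can be sketched as follows, assuming the uniform P(Di) stated above and already-smoothed document models. The function name and the toy numbers are hypothetical.

```python
def joint_conditional(w, query, doc_models):
    """P(w, q_1..q_k) = P(w) * prod_i sum_{D_i} P(D_i|w) * P(q_i|D_i).

    P(w) = sum_D P(w|D) P(D) and P(D_i|w) = P(w|D_i) P(D_i) / P(w),
    with P(D) uniform over the collection.
    """
    p_d = 1.0 / len(doc_models)
    p_w = sum(model.get(w, 0.0) * p_d for model in doc_models)
    if p_w == 0.0:
        return 0.0
    joint = p_w
    for q in query:
        joint *= sum(
            model.get(q, 0.0) * (model.get(w, 0.0) * p_d / p_w)
            for model in doc_models
        )
    return joint

# Hypothetical smoothed unigram models for two documents.
doc_models = [
    {"tiger": 0.6, "zoo": 0.3, "car": 0.1},
    {"tiger": 0.1, "zoo": 0.1, "car": 0.8},
]

one_word = joint_conditional("zoo", ["tiger"], doc_models)
two_words = joint_conditional("zoo", ["tiger", "zoo"], doc_models)
print(one_word, two_words)
```

For a single-word query the two methods coincide, since P(w) cancels against the denominator of P(Di|w). They differ for longer queries, where Method 2 lets each query word come from a different document model.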

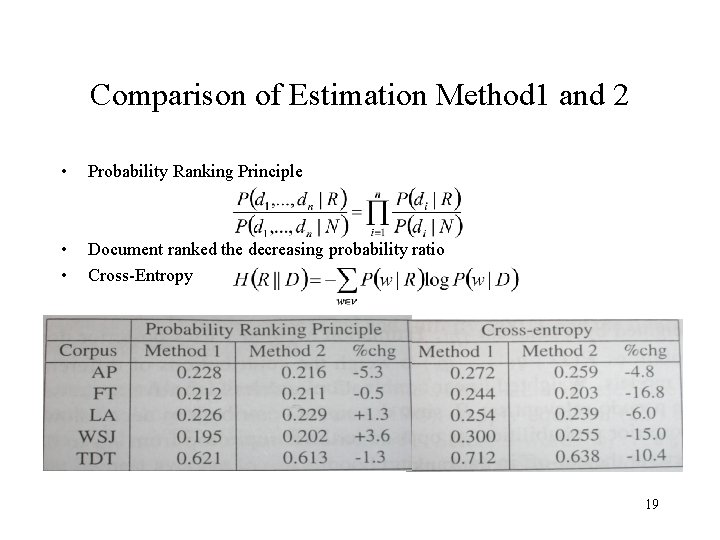

Comparison of Estimation Method 1 and 2
• Probability Ranking Principle
– Documents are ranked by decreasing probability ratio
• Cross-Entropy

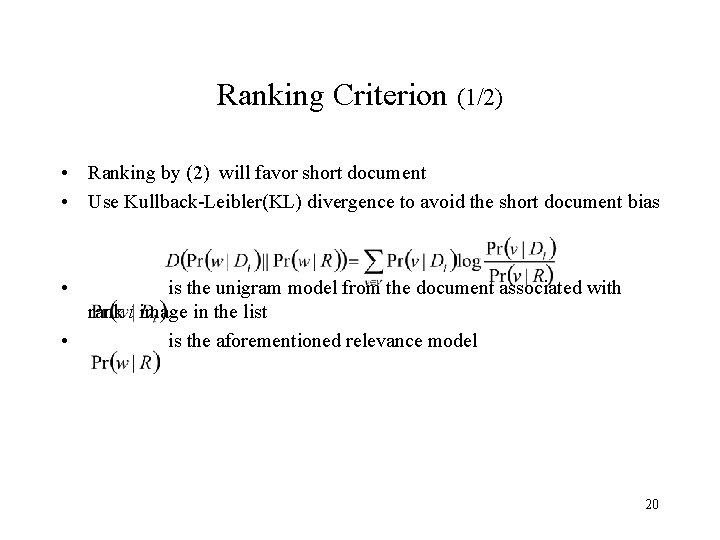

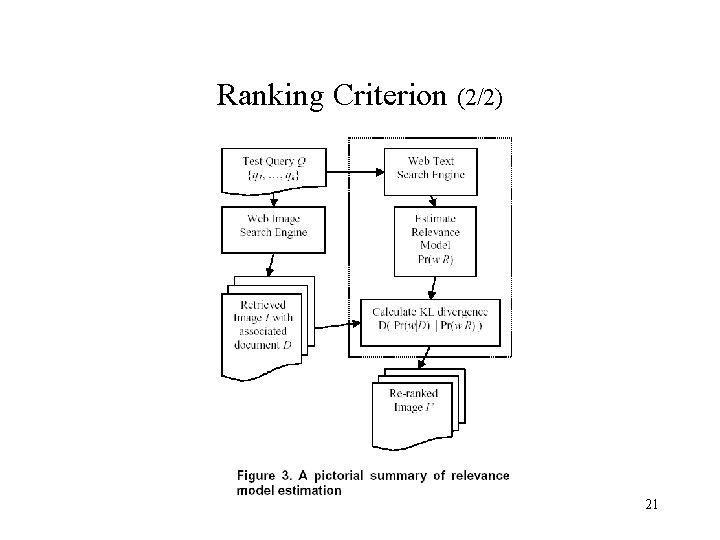

Ranking Criterion (1/2)
• Ranking by (2), a product of word probabilities, will favor short documents
• Use Kullback-Leibler (KL) divergence to avoid the short-document bias
• Pr(w|Di) is the unigram model from the document associated with the rank-i image in the list
• Pr(w|R) is the aforementioned relevance model
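A minimal sketch of KL-based re-ranking follows. It assumes the divergence is taken from the document model to the relevance model and that a smaller divergence means a higher rank; the function names, the `eps` guard against division by zero, and the toy models are assumptions made for illustration.

```python
import math

def kl_divergence(doc_model, relevance_model, eps=1e-12):
    """KL(P(.|D_i) || P(.|R)) = sum_w P(w|D_i) * log(P(w|D_i) / P(w|R))."""
    total = 0.0
    for w, p in doc_model.items():
        if p > 0.0:
            # eps is an assumed guard for words missing from the relevance model.
            total += p * math.log(p / max(relevance_model.get(w, 0.0), eps))
    return total

def rerank(doc_models, relevance_model):
    """Return original list positions ordered by increasing KL divergence."""
    return sorted(
        range(len(doc_models)),
        key=lambda i: kl_divergence(doc_models[i], relevance_model),
    )

# Hypothetical relevance model and document models in original rank order.
relevance_model = {"tiger": 0.6, "zoo": 0.3, "car": 0.1}
doc_models = [
    {"tiger": 0.1, "zoo": 0.1, "car": 0.8},  # original rank 1
    {"tiger": 0.6, "zoo": 0.3, "car": 0.1},  # original rank 2
]

new_order = rerank(doc_models, relevance_model)
print(new_order)
```

Because both arguments are normalized distributions, the score compares word proportions and does not depend on document length.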

Ranking Criterion (2/2)

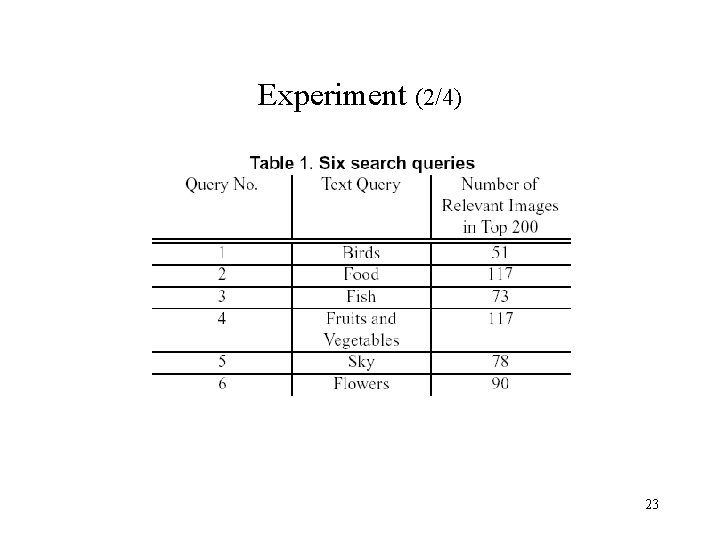

Experiment (1/4)
• The re-ranking idea is tested on six text queries submitted to a large-scale web image search engine, Google Image Search, which has been online since July 2001 and had indexed 425 million images as of March 2003
• The six queries are chosen from image categories in the Corel Image Database
• Each text query is typed into Google Image Search and the top 200 entries are saved for evaluation
• The 1200 images for the six queries are fetched and manually labeled into three categories: relevant, ambiguous, irrelevant

Experiment (2/4)

Experiment (3/4)
• For each query, send the same keywords to Google Web Search and obtain a list of relevant documents via the Google Web APIs
• For the top-ranked 200 web documents, remove all HTML tags, filter out words appearing in the INQUERY stop-word list, and stem the remaining words using the Porter algorithm
• The smoothing parameter is 0.6
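The text preprocessing step can be sketched with the Python standard library. This is only a partial illustration: it strips HTML tags and filters stop words, but it uses a tiny hypothetical stop list rather than the INQUERY list, and it leaves out Porter stemming, which would need an external stemmer.

```python
import re
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect the text between tags and discard the tags themselves."""

    def __init__(self):
        super().__init__()
        self.chunks = []

    def handle_data(self, data):
        self.chunks.append(data)

# Hypothetical stand-in for the INQUERY stop-word list.
STOP_WORDS = {"a", "the", "of", "in", "and", "is"}

def preprocess(html, stop_words=STOP_WORDS):
    """Strip tags, lowercase, tokenize on letters, and drop stop words."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    tokens = re.findall(r"[a-z]+", text)
    return [t for t in tokens if t not in stop_words]

page = "<html><body><h1>The Tiger</h1><p>A tiger in the zoo.</p></body></html>"
tokens = preprocess(page)
print(tokens)  # ['tiger', 'tiger', 'zoo']
```

The resulting token lists are the kind of input from which per-document unigram models would be counted.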

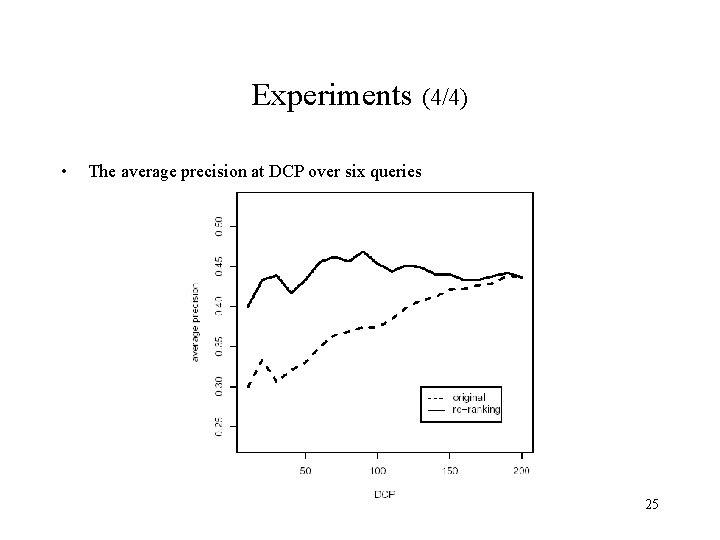

Experiment (4/4)
• The average precision at each document cut-off point (DCP) over the six queries
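Precision at a document cut-off point, averaged over queries, can be computed as in the sketch below. The function names and the toy labels are hypothetical, and counting "ambiguous" images as not relevant is an assumption made for this example.

```python
def precision_at(labels, cutoff):
    """Fraction of the top `cutoff` results that are labeled 'relevant'.

    Assumption: 'ambiguous' is counted as not relevant.
    """
    if cutoff <= 0:
        raise ValueError("cutoff must be positive")
    hits = sum(1 for label in labels[:cutoff] if label == "relevant")
    return hits / cutoff

def mean_precision_at(labels_per_query, cutoff):
    """Average precision-at-cutoff over all queries."""
    values = [precision_at(labels, cutoff) for labels in labels_per_query]
    return sum(values) / len(values)

# Hypothetical manual labels for two queries, in ranked order.
ranked_labels = [
    ["relevant", "irrelevant", "relevant", "ambiguous"],
    ["irrelevant", "relevant", "relevant", "relevant"],
]

p_at_2 = mean_precision_at(ranked_labels, 2)
p_at_4 = mean_precision_at(ranked_labels, 4)
print(p_at_2, p_at_4)
```

Running the same computation on the original and the re-ranked lists at each cut-off gives the two curves being compared.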

Conclusion
• Re-ranking improves the average precision at the top 50 documents from the original 30-35% to 45%
• Internet users usually have limited time and patience, so high precision among the top-ranked documents saves them a lot of effort and helps them find relevant images more easily and quickly
