Web Classification: Ontology and Taxonomy

References
- Using Ontologies to Discover Domain-Level Web Usage Profiles. {hdai, mobasher}@cs.depaul.edu
- Learning to Construct Knowledge Bases from the World Wide Web. M. Craven, D. DiPasquo, A. Mitchell, K. Nigam, S. Slattery (Carnegie Mellon University, Pittsburgh, USA); D. Freitag, A. McCallum (Just Research, Pittsburgh, USA)

Definitions
- Ontology: an explicit formal specification of how to represent the objects, concepts, and other entities that are assumed to exist in some area of interest, and the relationships that hold among them.
- Taxonomy: a classification of organisms into groups based on similarities of structure, origin, etc.

Goal
- Capture and model behavioral patterns and profiles of users interacting with a web site.
- Why?
  - Collaborative filtering
  - Personalization systems
  - Improve the organization and structure of the site
  - Provide dynamic recommendations (www.recommendme.com)

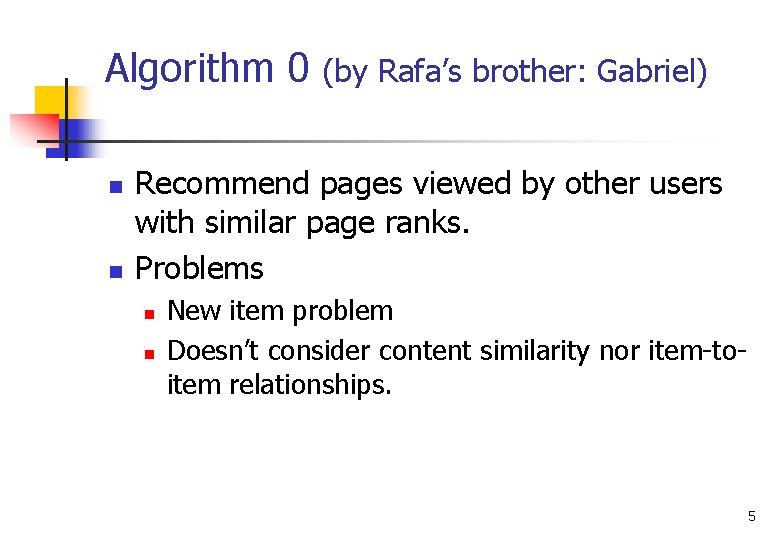

Algorithm 0 (by Rafa's brother: Gabriel)
- Recommend pages viewed by other users with similar page ranks.
- Problems:
  - New item problem
  - Doesn't consider content similarity or item-to-item relationships
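
A minimal Python sketch of Algorithm 0, assuming each user is a sparse map from page to score and that "similar" means cosine similarity between those maps (the slide does not name a similarity measure). The function names and toy data are hypothetical.

```python
import math

def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity between two sparse page -> score vectors."""
    dot = sum(u[p] * v[p] for p in set(u) & set(v))
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def recommend(target: str, users: dict[str, dict[str, float]], k: int = 1) -> list[str]:
    """Recommend pages seen by the k most similar users but not yet by target."""
    me = users[target]
    neighbors = sorted(
        (u for u in users if u != target),
        key=lambda u: cosine(me, users[u]),
        reverse=True,
    )[:k]
    scores: dict[str, float] = {}
    for u in neighbors:
        sim = cosine(me, users[u])
        for page, score in users[u].items():
            if page not in me:
                # Weight the neighbor's score by how similar the neighbor is.
                scores[page] = scores.get(page, 0.0) + sim * score
    return sorted(scores, key=scores.get, reverse=True)
```

A page that no user has viewed never appears in any neighbor's map, so it can never be recommended: this is the new item problem listed for Algorithm 0.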

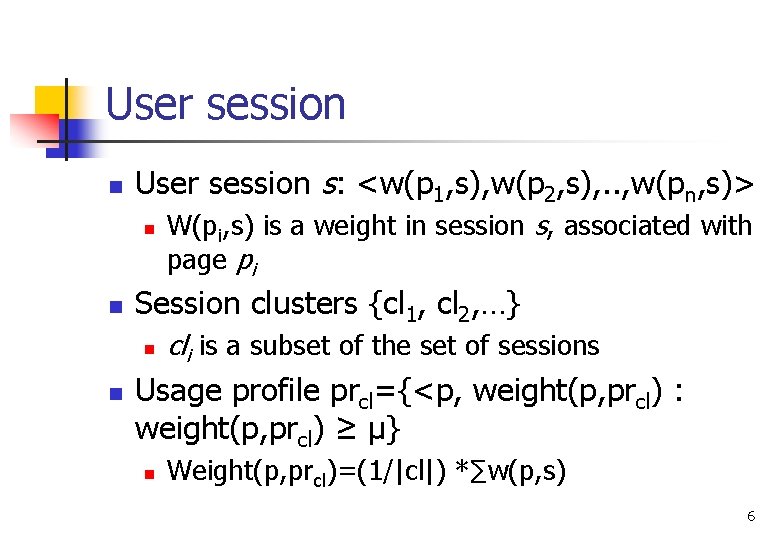

User session
- User session $s = \langle w(p_1, s), w(p_2, s), \ldots, w(p_n, s) \rangle$, where $w(p_i, s)$ is the weight associated with page $p_i$ in session $s$
- Session clusters $\{cl_1, cl_2, \ldots\}$, where each $cl_i$ is a subset of the set of sessions
- Usage profile $pr_{cl} = \{\langle p, \mathrm{weight}(p, pr_{cl}) \rangle : \mathrm{weight}(p, pr_{cl}) \ge \mu\}$, with
$$\mathrm{weight}(p, pr_{cl}) = \frac{1}{|cl|} \sum_{s \in cl} w(p, s)$$
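
The usage-profile formula translates directly into Python. This sketch assumes sessions are sparse maps from page to $w(p, s)$, so a page missing from a session contributes 0; the name `usage_profile` is hypothetical.

```python
def usage_profile(cluster: list[dict[str, float]], mu: float) -> dict[str, float]:
    """Return {page: weight(p, pr_cl)} for pages whose weight is >= mu."""
    if not cluster:
        return {}
    totals: dict[str, float] = {}
    for session in cluster:
        for page, w in session.items():
            totals[page] = totals.get(page, 0.0) + w
    n = len(cluster)
    # weight(p, pr_cl) = (1/|cl|) * sum over sessions s in cl of w(p, s)
    profile = {page: total / n for page, total in totals.items()}
    return {page: w for page, w in profile.items() if w >= mu}
```

For example, with two sessions `{"home": 1, "cart": 1}` and `{"home": 1, "faq": 1}`, a threshold of 0.6 keeps only `home` (weight 1.0), while 0.5 also keeps `cart` and `faq` (weight 0.5 each).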

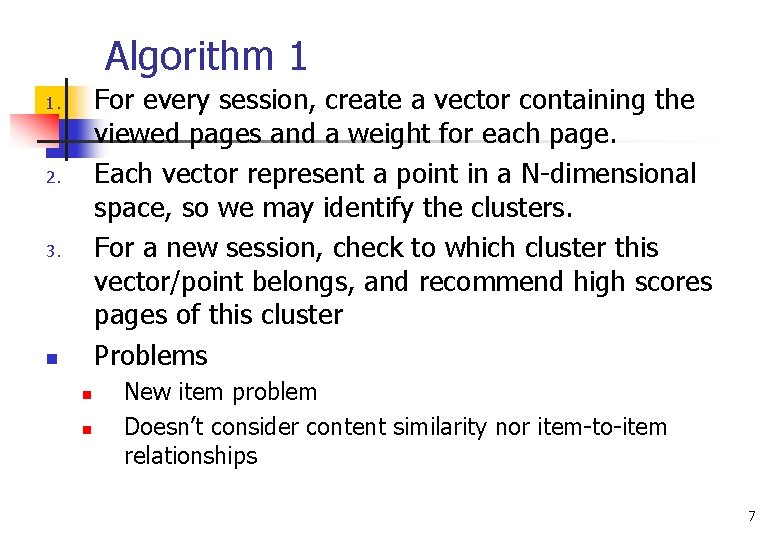

Algorithm 1
1. For every session, create a vector containing the viewed pages and a weight for each page.
2. Each vector represents a point in an N-dimensional space, so we can identify the clusters.
3. For a new session, check which cluster its vector/point belongs to, and recommend the high-scoring pages of that cluster.
- Problems:
  - New item problem
  - Doesn't consider content similarity or item-to-item relationships
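
Step 3 can be sketched in Python, assuming the clusters were already found (for example by k-means, not shown) and each one is summarized by a centroid mapping page to weight. Euclidean distance and the names `distance` and `recommend_from_clusters` are assumptions, not part of the slide.

```python
import math

def distance(a: dict[str, float], b: dict[str, float]) -> float:
    """Euclidean distance between two sparse page -> weight vectors."""
    pages = set(a) | set(b)
    return math.sqrt(sum((a.get(p, 0.0) - b.get(p, 0.0)) ** 2 for p in pages))

def recommend_from_clusters(session: dict[str, float],
                            centroids: list[dict[str, float]],
                            top_n: int = 2) -> list[str]:
    """Assign the session to its nearest centroid, then return that cluster's
    highest-weighted pages that the session has not visited yet."""
    nearest = min(centroids, key=lambda c: distance(session, c))
    unseen = {p: w for p, w in nearest.items() if p not in session}
    return sorted(unseen, key=unseen.get, reverse=True)[:top_n]
```

Only pages that already carry weight in some cluster can be returned, which is why the new item problem persists.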

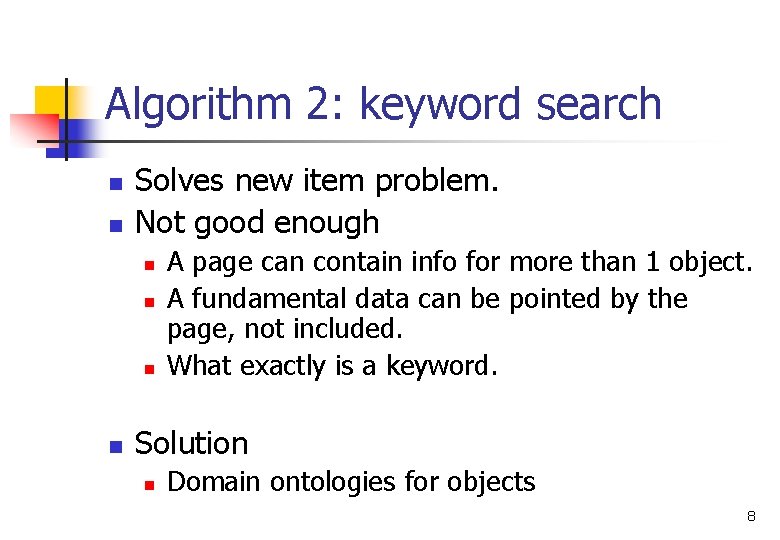

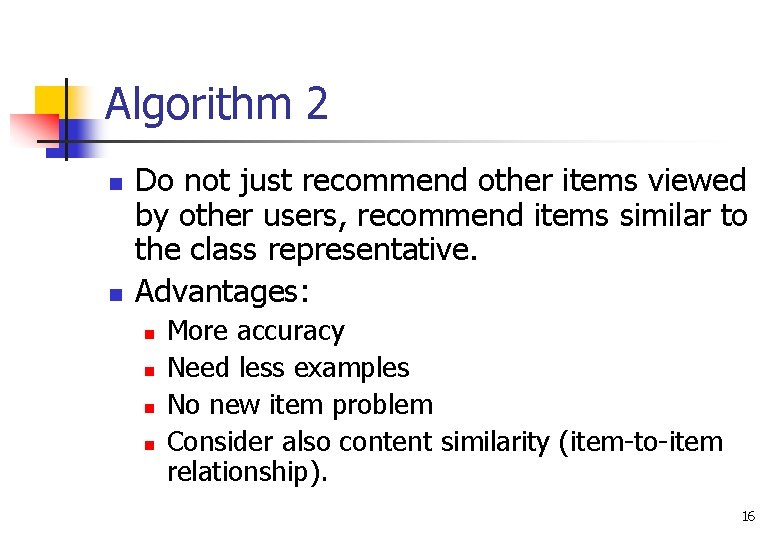

Algorithm 2: keyword search
- Solves the new item problem.
- Not good enough:
  - A page can contain information about more than one object.
  - A fundamental piece of data may be pointed to by the page rather than included in it.
  - What exactly is a keyword?
- Solution: domain ontologies for objects

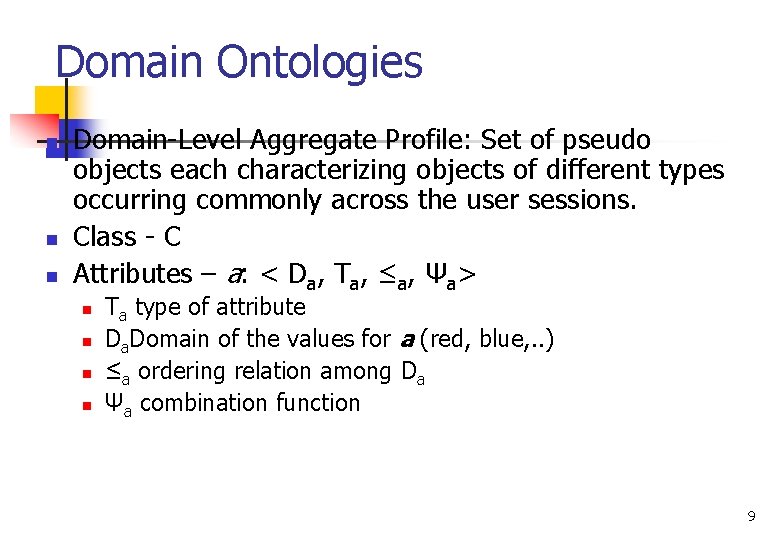

Domain Ontologies
- Domain-level aggregate profile: a set of pseudo-objects, each characterizing objects of a different type that occur commonly across the user sessions.
- Class: $C$
- Attributes: $a = \langle D_a, T_a, \le_a, \Psi_a \rangle$, where
  - $T_a$: type of the attribute
  - $D_a$: domain of the values for $a$ (red, blue, ...)
  - $\le_a$: ordering relation among $D_a$
  - $\Psi_a$: combination function

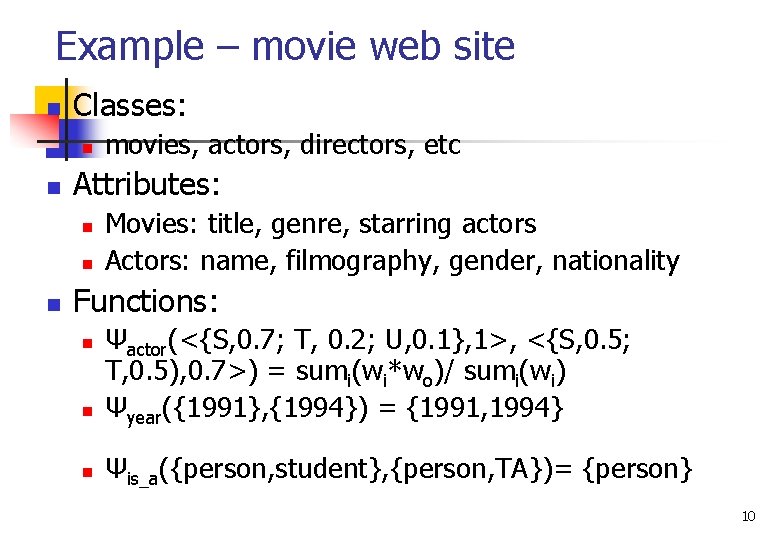

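Example: movie web site
- Classes: movies, actors, directors, etc.
- Attributes:
  - Movies: title, genre, starring actors
  - Actors: name, filmography, gender, nationality
- Functions:
  - $\Psi_{actor}(\langle\{S{:}\,0.7;\ T{:}\,0.2;\ U{:}\,0.1\}, 1\rangle, \langle\{S{:}\,0.5;\ T{:}\,0.5\}, 0.7\rangle)$: each actor's combined weight is $\frac{\sum_i w_i \, w_o}{\sum_i w_i}$, where $w_i$ is the weight of the $i$-th object and $w_o$ is the actor's weight within it
  - $\Psi_{year}(\{1991\}, \{1994\}) = \{1991, 1994\}$
  - $\Psi_{is\_a}(\{person, student\}, \{person, TA\}) = \{person\}$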
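
A sketch of the three combination functions, read off the examples on this slide: a weighted average for weighted-set attributes such as actors (one reading of the $\Psi_{actor}$ formula), union for year, and intersection for is_a. The names `psi_weighted`, `psi_union`, and `psi_intersection` are hypothetical.

```python
def psi_weighted(objects: list[tuple[dict[str, float], float]]) -> dict[str, float]:
    """Weighted-average combination for weighted-set attributes (e.g. actors).

    objects: list of (value -> weight map, object weight) pairs.
    Each value's combined weight is sum(obj_weight * value_weight) / sum(obj_weight).
    """
    total_w = sum(w_obj for _, w_obj in objects)
    combined: dict[str, float] = {}
    for values, w_obj in objects:
        for value, w_val in values.items():
            combined[value] = combined.get(value, 0.0) + w_obj * w_val
    return {value: s / total_w for value, s in combined.items()}

def psi_union(*sets):
    """Union combination, as in the year example."""
    return set().union(*sets)

def psi_intersection(*sets):
    """Intersection combination, as in the is_a example."""
    return set.intersection(*map(set, sets))
```

With the slide's two actor inputs this gives S ≈ 0.618, T ≈ 0.324, and U ≈ 0.059, and the combined weights still sum to 1.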

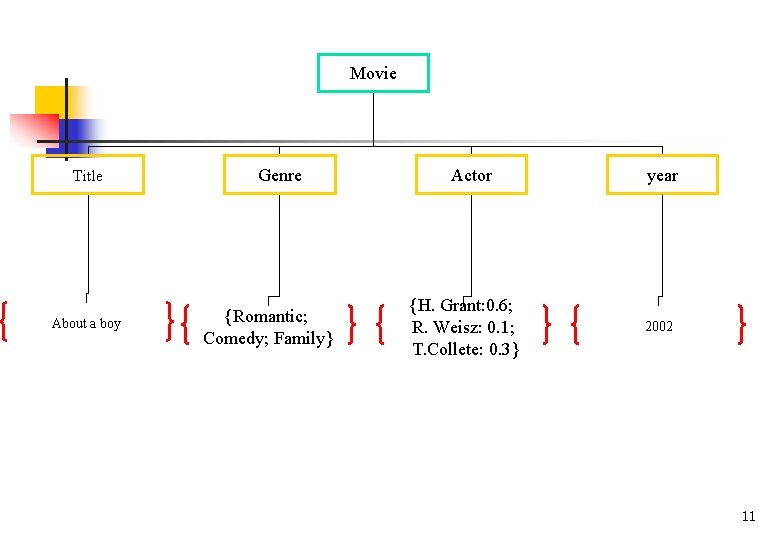

Movie
- Title: About a Boy
- Genre: {Romantic; Comedy; Family}
- Actor: {H. Grant: 0.6; R. Weisz: 0.1; T. Collette: 0.3}
- Year: 2002

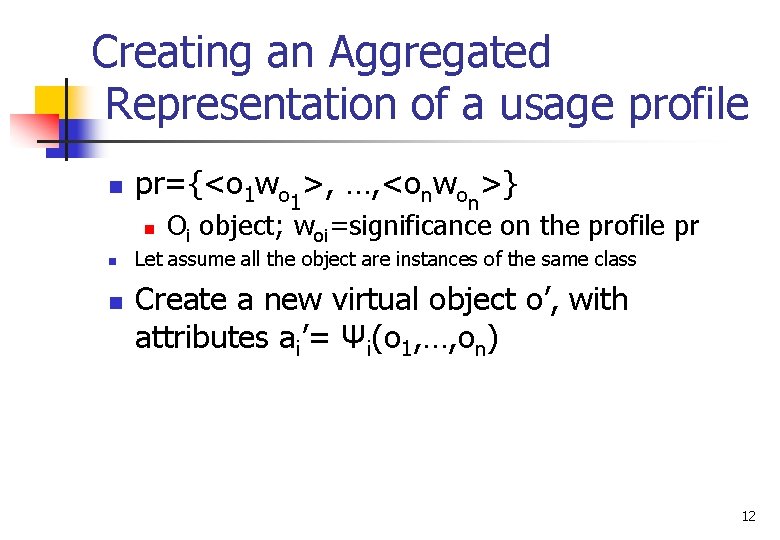

Creating an Aggregated Representation of a Usage Profile
- $pr = \{\langle o_1, w_{o_1} \rangle, \ldots, \langle o_n, w_{o_n} \rangle\}$, where $o_i$ is an object and $w_{o_i}$ is its significance in the profile $pr$
- Assume all the objects are instances of the same class.
- Create a new virtual object $o'$ with attributes $a_i' = \Psi_i(o_1, \ldots, o_n)$.

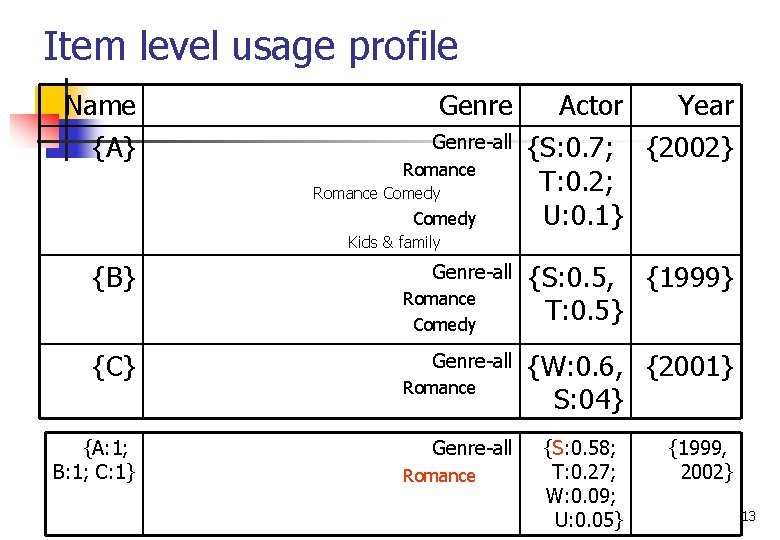

Item-level usage profile

| Name | Genre | Actor | Year |
|---|---|---|---|
| {A} | Genre-all, Romance, Comedy, Kids & family | {S: 0.7; T: 0.2; U: 0.1} | {2002} |
| {B} | Genre-all, Romance, Comedy | {S: 0.5; T: 0.5} | {1999} |
| {C} | Genre-all, Romance | {W: 0.6; S: 0.4} | {2001} |
| {A: 1; B: 1; C: 1} | Genre-all, Romance | {S: 0.58; T: 0.27; W: 0.09; U: 0.05} | {1999, 2002} |

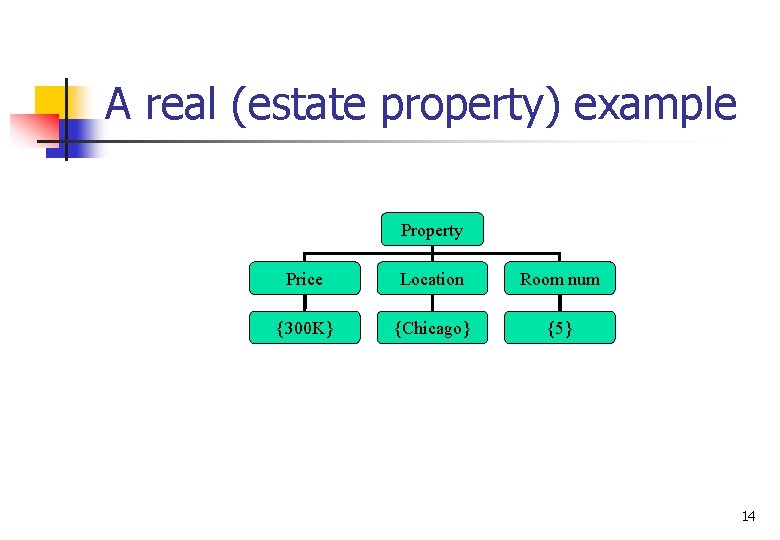

A real (estate property) example
- Property: Price {300K}, Location {Chicago}, Room num {5}

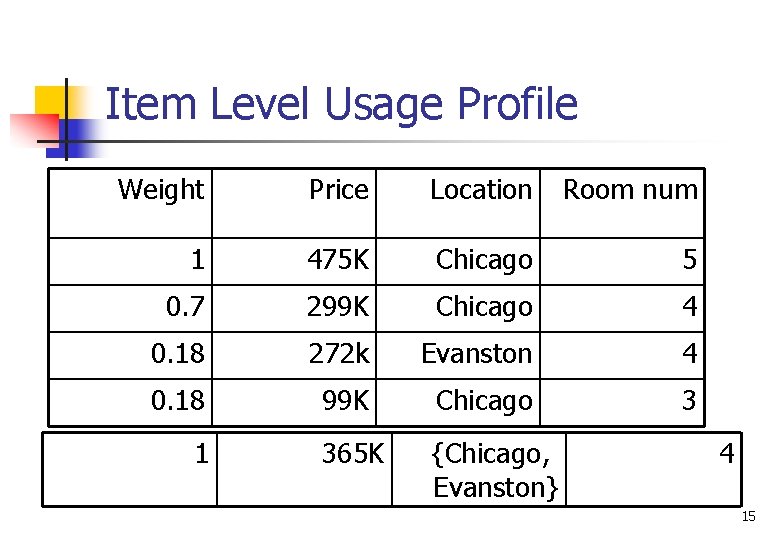

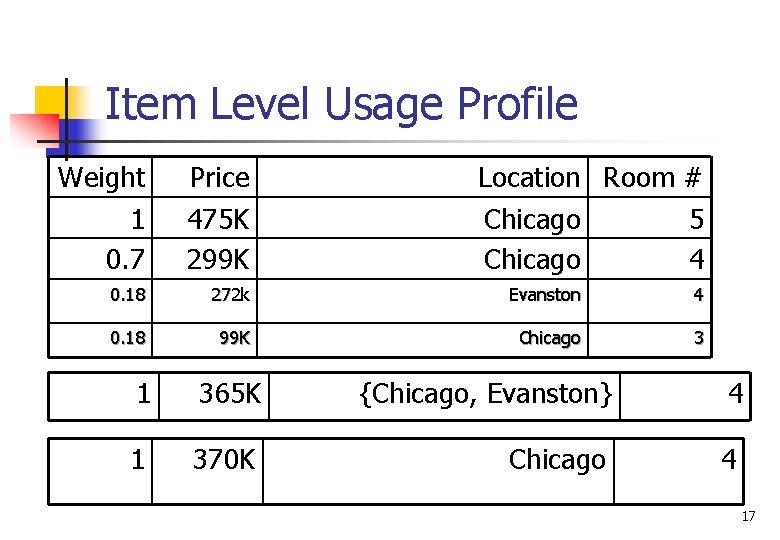

Item-level usage profile

| Weight | Price | Location | Room num |
|---|---|---|---|
| 1 | 475K | Chicago | 5 |
| 0.7 | 299K | Chicago | 4 |
| 0.18 | 272K | Evanston | 4 |
| 0.18 | 99K | Chicago | 3 |
| 1 | 365K | {Chicago, Evanston} | 4 |
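
The slide does not say which combination functions produced the last row. Assuming a weighted mean (by profile weight) for price and rooms and a union for location, a short Python sketch reproduces it: 751.08 / 2.06 ≈ 364.6, i.e. about 365K, and 9.06 / 2.06 ≈ 4.4 rooms, which rounds to 4. The function name `aggregate` is hypothetical.

```python
def aggregate(items: list[tuple[float, float, str, int]]):
    """Build a pseudo-object from (weight, price_in_thousands, location, rooms) rows."""
    total = sum(w for w, _, _, _ in items)
    # Assumed Psi for numeric attributes: mean weighted by the profile weight.
    price = sum(w * p for w, p, _, _ in items) / total
    rooms = round(sum(w * r for w, _, _, r in items) / total)
    # Assumed Psi for location: union of the observed values.
    locations = {loc for _, _, loc, _ in items}
    return price, locations, rooms
```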

Algorithm 2
- Do not just recommend items viewed by other users; recommend items similar to the class representative.
- Advantages:
  - More accuracy
  - Needs fewer examples
  - No new item problem
  - Also considers content similarity (item-to-item relationships)

Item-level usage profile

| Weight | Price | Location | Room # |
|---|---|---|---|
| 1 | 475K | Chicago | 5 |
| 0.7 | 299K | Chicago | 4 |
| 0.18 | 272K | Evanston | 4 |
| 0.18 | 99K | Chicago | 3 |
| 1 | 365K | {Chicago, Evanston} | 4 |
| 1 | 370K | Chicago | 4 |
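
Recommending "items similar to the class representative" needs some item-to-pseudo-object similarity, which the slides do not define. The Python sketch below uses a hypothetical attribute-wise similarity (relative difference for price and rooms, membership for location, averaged) and assumes positive prices and room counts; `similarity`, `rank_candidates`, and the listing ids are illustrative only.

```python
def similarity(rep: tuple[float, set[str], int], item: tuple[float, str, int]) -> float:
    """Hypothetical similarity in [0, 1] between a pseudo-object and an item."""
    rep_price, rep_locs, rep_rooms = rep
    price, loc, rooms = item
    s_price = 1 - abs(rep_price - price) / max(rep_price, price)
    s_loc = 1.0 if loc in rep_locs else 0.0
    s_rooms = 1 - abs(rep_rooms - rooms) / max(rep_rooms, rooms)
    return (s_price + s_loc + s_rooms) / 3

def rank_candidates(rep, candidates: dict[str, tuple[float, str, int]]) -> list[str]:
    """Order candidate item ids from most to least similar to the pseudo-object."""
    return sorted(candidates, key=lambda i: similarity(rep, candidates[i]), reverse=True)
```

Because candidates are compared by content rather than by co-visits, a listing no one has viewed yet (such as 370K in Chicago with 4 rooms) can still rank first.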

Final Algorithm
Given a web site:
1. Classify its contents into classes and attributes.
2. Merge the objects of each user profile and create a pseudo-object.
3. Recommend according to this pseudo-object.

Problems
- A per-topic solution.
- Found patterns can be incomplete.
- User patterns may change with time (for movies): the "I loved ET" problem.
- Needs cookies or other methods to identify users.
- How is the weight calculated?
- May need many examples: the "I loved American Beauty" problem.
- How can the web pages be grouped automatically?

Break?

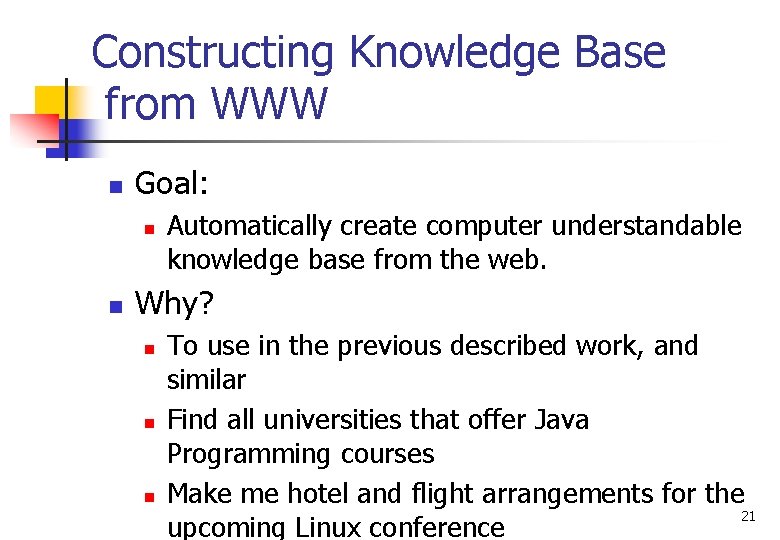

Constructing a Knowledge Base from the WWW
- Goal: automatically create a computer-understandable knowledge base from the web.
- Why?
  - To use in the previously described work, and similar work
  - Find all universities that offer Java programming courses
  - Make me hotel and flight arrangements for the upcoming Linux conference

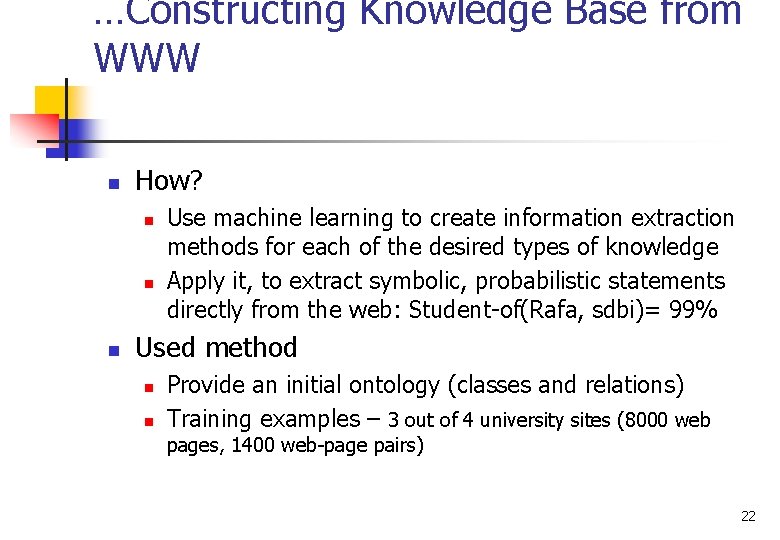

…Constructing a Knowledge Base from the WWW
- How?
  - Use machine learning to create information extraction methods for each of the desired types of knowledge.
  - Apply them to extract symbolic, probabilistic statements directly from the web: Student-of(Rafa, sdbi) = 99%
- Method used:
  - Provide an initial ontology (classes and relations)
  - Training examples: 3 out of 4 university sites (8000 web pages, 1400 web-page pairs)

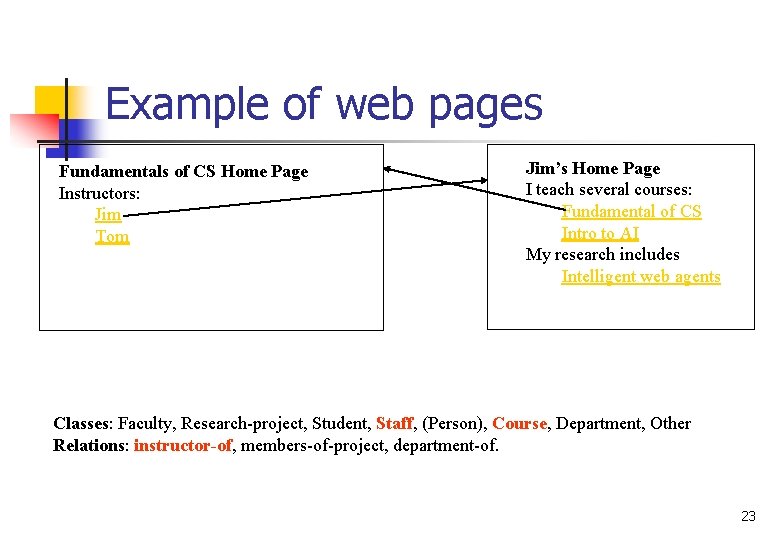

Example of web pages
- "Fundamentals of CS Home Page": Instructors: Jim, Tom
- "Jim's Home Page": I teach several courses: Fundamentals of CS, Intro to AI. My research includes intelligent web agents.
- Classes: Faculty, Research-project, Student, Staff, (Person), Course, Department, Other
- Relations: instructor-of, members-of-project, department-of

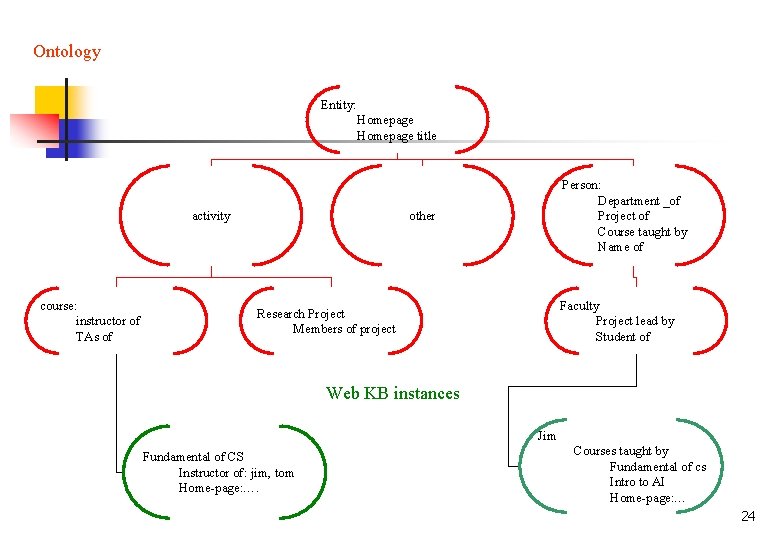

Ontology
- Entity: home page, title
- Activity
- Course: instructors of, TAs of
- Person: department of, project of, courses taught by, name of
- Other
- Faculty: projects led by, students of
- Research project: members of project

WebKB instances
- Jim: courses taught by: Fundamentals of CS, Intro to AI; home page: …
- Fundamentals of CS: instructors of: Jim, Tom; home page: …

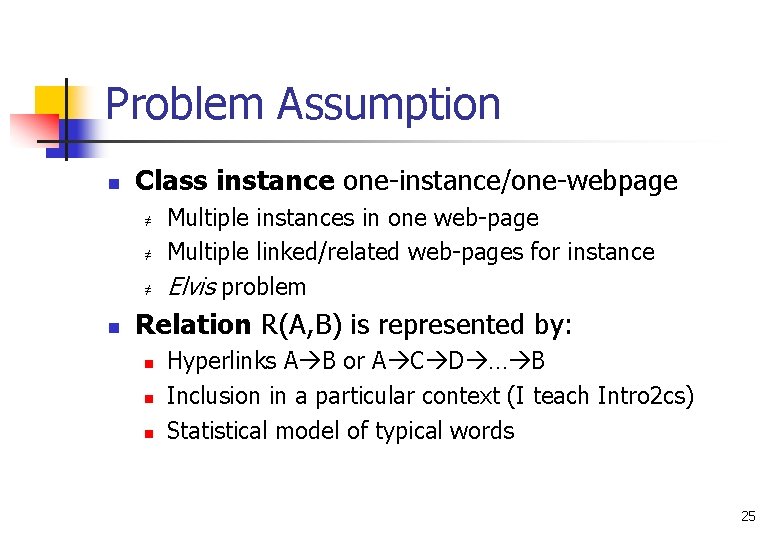

Problem assumptions
- Class instance ≠ one instance per web page:
  - Multiple instances in one web page
  - Multiple linked/related web pages for one instance
  - The Elvis problem
- A relation R(A, B) is represented by:
  - Hyperlinks: A → B, or A → C → D → … → B
  - Inclusion in a particular context ("I teach Intro to CS")
  - A statistical model of typical words

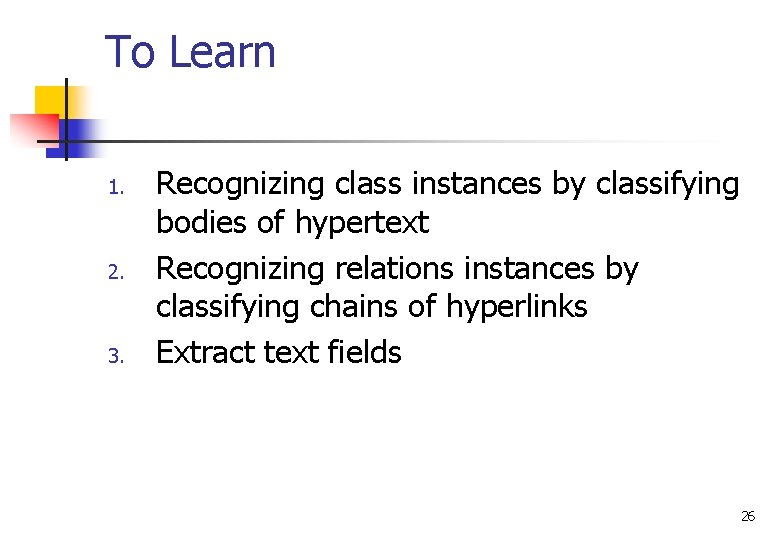

To Learn
1. Recognizing class instances by classifying bodies of hypertext
2. Recognizing relation instances by classifying chains of hyperlinks
3. Extracting text fields

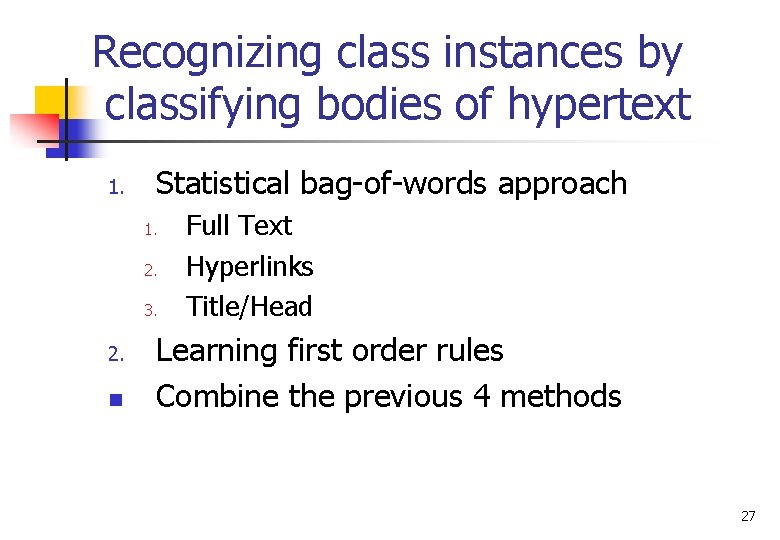

Recognizing class instances by classifying bodies of hypertext
1. Statistical bag-of-words approach, using:
   1. Full text
   2. Hyperlinks
   3. Title/head
2. Learning first-order rules
- Combine the previous 4 methods

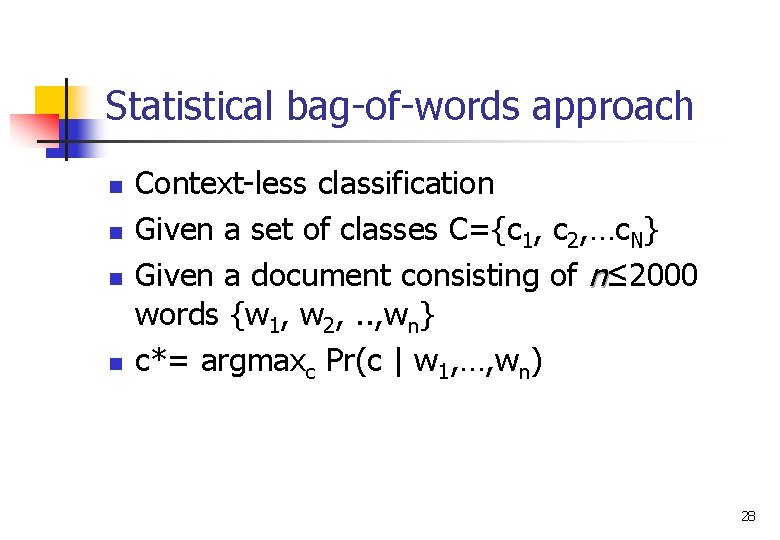

Statistical bag-of-words approach
- Context-less classification
- Given a set of classes $C = \{c_1, c_2, \ldots, c_N\}$
- Given a document consisting of $n \le 2000$ words $\{w_1, w_2, \ldots, w_n\}$, choose
$$c^* = \arg\max_{c} \Pr(c \mid w_1, \ldots, w_n)$$
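
A minimal Python sketch of this objective as multinomial naive Bayes. Under the naive independence assumption $\Pr(c \mid w_1, \ldots, w_n) \propto \Pr(c) \prod_i \Pr(w_i \mid c)$, so the argmax can be taken over log-probabilities. The add-one (Laplace) smoothing, the function names, and the toy documents are assumptions; the slide gives only the argmax.

```python
import math
from collections import Counter

def train(docs: list[tuple[str, list[str]]]):
    """docs: list of (class_label, words). Returns class counts, word counts, vocabulary."""
    class_counts = Counter(label for label, _ in docs)
    word_counts = {label: Counter() for label in class_counts}
    for label, words in docs:
        word_counts[label].update(words)
    vocab = {w for _, words in docs for w in words}
    return class_counts, word_counts, vocab

def classify(model, words: list[str]) -> str:
    """c* = argmax_c [log Pr(c) + sum_i log Pr(w_i | c)] with add-one smoothing."""
    class_counts, word_counts, vocab = model
    n_docs = sum(class_counts.values())
    best, best_score = None, -math.inf
    for label, n_label in class_counts.items():
        total = sum(word_counts[label].values())
        score = math.log(n_label / n_docs)
        for w in words:
            if w in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][w] + 1) / (total + len(vocab)))
        if score > best_score:
            best, best_score = label, score
    return best
```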

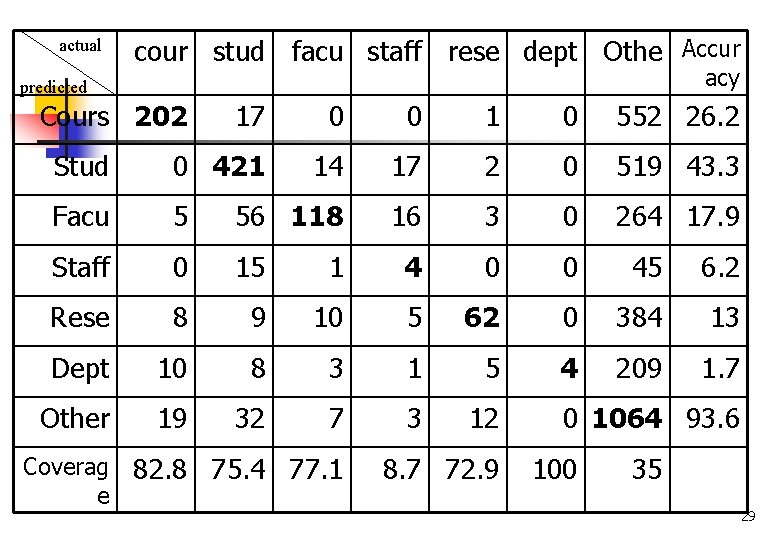

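| Predicted \ Actual | course | student | faculty | staff | research project | department | other | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| course | 202 | 17 | 0 | 0 | 1 | 0 | 552 | 26.2 |
| student | 0 | 421 | 14 | 17 | 2 | 0 | 519 | 43.3 |
| faculty | 5 | 56 | 118 | 16 | 3 | 0 | 264 | 17.9 |
| staff | 0 | 15 | 1 | 4 | 0 | 0 | 45 | 6.2 |
| research project | 8 | 9 | 10 | 5 | 62 | 0 | 384 | 13.0 |
| department | 10 | 8 | 3 | 1 | 5 | 4 | 209 | 1.7 |
| other | 19 | 32 | 7 | 3 | 12 | 0 | 1064 | 93.6 |
| Coverage (%) | 82.8 | 75.4 | 77.1 | 8.7 | 72.9 | 100 | 35.0 | |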
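
The accuracy and coverage figures in this confusion matrix can be recomputed in a few lines of Python: accuracy is the diagonal count divided by its row (predicted) total, and coverage is the diagonal count divided by its column (actual) total. The variable and function names are hypothetical; the counts are copied from the slide.

```python
CLASSES = ["course", "student", "faculty", "staff", "research project", "department", "other"]

# Rows: predicted class; columns: actual class (counts from the slide).
MATRIX = [
    [202, 17, 0, 0, 1, 0, 552],
    [0, 421, 14, 17, 2, 0, 519],
    [5, 56, 118, 16, 3, 0, 264],
    [0, 15, 1, 4, 0, 0, 45],
    [8, 9, 10, 5, 62, 0, 384],
    [10, 8, 3, 1, 5, 4, 209],
    [19, 32, 7, 3, 12, 0, 1064],
]

def accuracy_and_coverage(matrix: list[list[int]]) -> tuple[list[float], list[float]]:
    """Per-class accuracy (diagonal / row sum) and coverage (diagonal / column sum)."""
    n = len(matrix)
    accuracy = [matrix[i][i] / sum(matrix[i]) for i in range(n)]
    coverage = [matrix[j][j] / sum(matrix[i][j] for i in range(n)) for j in range(n)]
    return accuracy, coverage
```

Recomputing from the counts reproduces every accuracy and coverage figure on the slide except faculty accuracy, where the counts give 118 / 462 ≈ 25.5% rather than 17.9%.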

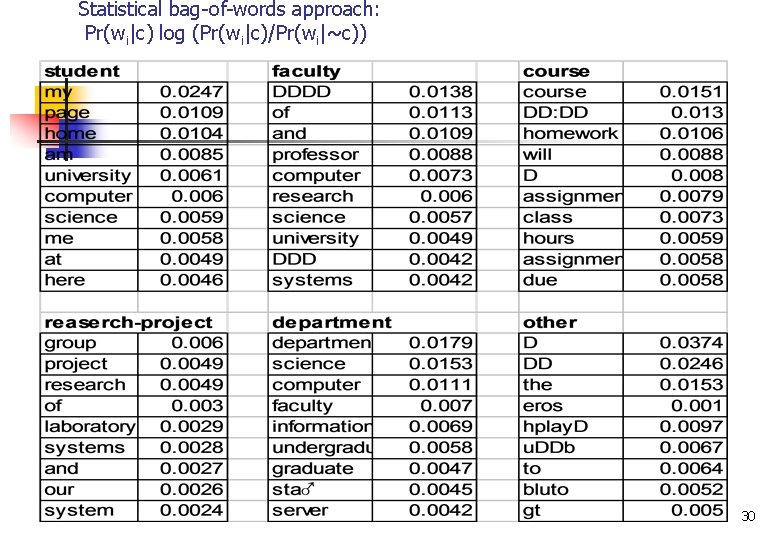

Statistical bag-of-words approach: words ranked by $\Pr(w_i \mid c) \log \frac{\Pr(w_i \mid c)}{\Pr(w_i \mid \neg c)}$
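
This score favors words that are both frequent in class $c$ and much more likely inside $c$ than outside it. A Python sketch, assuming add-one smoothing for both probabilities (the slide does not specify an estimator); `word_scores` and the toy documents are hypothetical.

```python
import math
from collections import Counter

def word_scores(docs: list[tuple[str, list[str]]], target: str) -> dict[str, float]:
    """Score each word w by Pr(w|c) * log(Pr(w|c) / Pr(w|~c)) for c = target."""
    in_c, out_c = Counter(), Counter()
    for label, words in docs:
        (in_c if label == target else out_c).update(words)
    vocab = set(in_c) | set(out_c)
    n_in, n_out, v = sum(in_c.values()), sum(out_c.values()), len(vocab)
    scores = {}
    for w in vocab:
        p_in = (in_c[w] + 1) / (n_in + v)     # smoothed Pr(w | c)
        p_out = (out_c[w] + 1) / (n_out + v)  # smoothed Pr(w | ~c)
        scores[w] = p_in * math.log(p_in / p_out)
    return scores
```

Words that are more common outside the class get negative scores, so sorting by score puts the most class-indicative words first.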

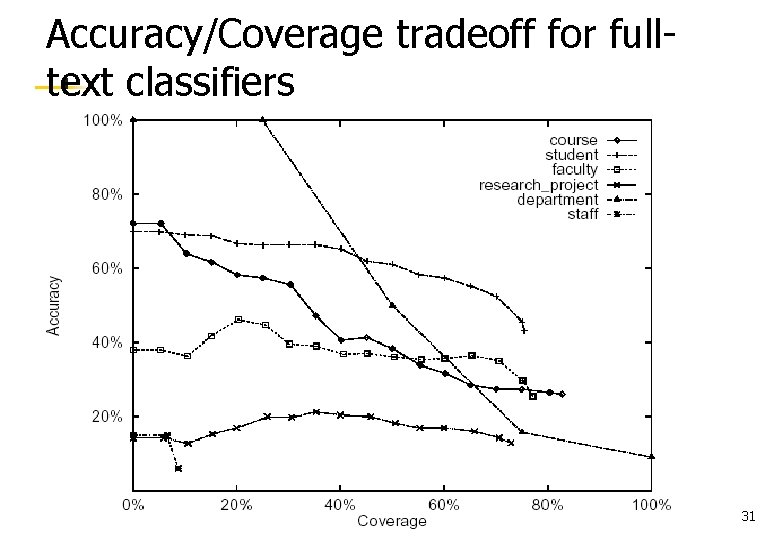

Accuracy/coverage tradeoff for full-text classifiers

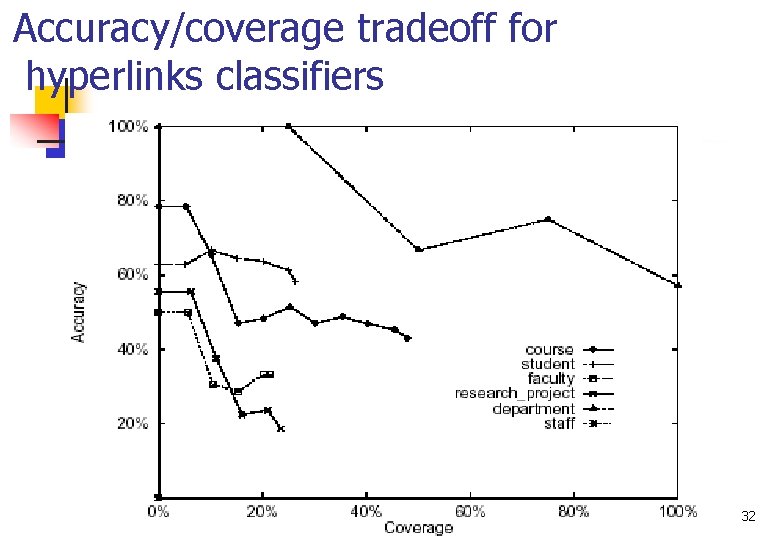

Accuracy/coverage tradeoff for hyperlink classifiers

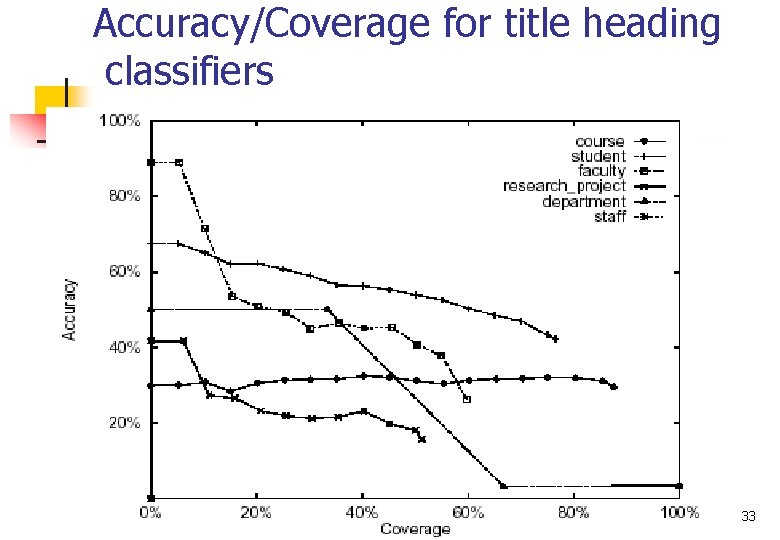

Accuracy/coverage for title/heading classifiers

Learning first-order rules
- The previous method doesn't consider relations between pages.
- Example: a page is a course home page if it contains the words "textbook" and "TA" and points to a page containing the word "assignment".
- FOIL is a learning system that constructs Horn clause programs from examples.
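
The example rule above can be written as a Python predicate over a toy site, which shows how a first-order rule looks "outward" through a hyperlink. This hand-codes the rule rather than learning it (FOIL's search is not shown); the pages, links, and lower-cased word sets are hypothetical.

```python
# Hypothetical toy site: page -> set of (lower-cased) words on the page.
PAGES = {
    "cs101": {"textbook", "ta", "lecture"},
    "cs101/hw": {"assignment", "due"},
    "jim": {"research", "ta"},
}
# Directed hyperlinks (from_page, to_page).
LINKS = {("cs101", "cs101/hw"), ("jim", "cs101")}

def has_word(page: str, word: str) -> bool:
    return word in PAGES[page]

def link_to(a: str, b: str) -> bool:
    return (a, b) in LINKS

def course(a: str) -> bool:
    """course(A) :- has_textbook(A), has_ta(A), link_to(A, B), has_assignment(B)."""
    return (
        has_word(a, "textbook")
        and has_word(a, "ta")
        # B is existentially quantified: some page that A links to.
        and any(link_to(a, b) and has_word(b, "assignment") for b in PAGES)
    )
```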

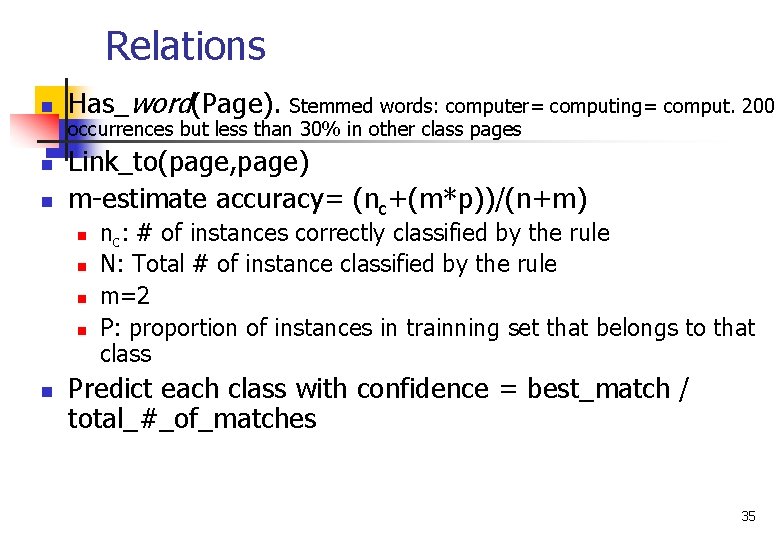

Relations
- Has_word(Page): stemmed words (computer = computing = comput); selected words have 200 occurrences, but less than 30% of them in pages of other classes
- Link_to(Page, Page)
- m-estimate accuracy $= \frac{n_c + m p}{n + m}$, where
  - $n_c$: number of instances correctly classified by the rule
  - $n$: total number of instances classified by the rule
  - $m = 2$
  - $p$: proportion of instances in the training set that belong to that class
- Predict each class with confidence = best_match / total_#_of_matches
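
The m-estimate is a one-line function; the default `m = 2` follows the slide, and the name `m_estimate` is hypothetical.

```python
def m_estimate(n_c: int, n: int, p: float, m: float = 2.0) -> float:
    """m-estimate of a rule's accuracy: (n_c + m*p) / (n + m).

    n_c: instances the rule classifies correctly; n: instances it classifies;
    p: proportion of the class in the training set; m: weight given to p.
    """
    return (n_c + m * p) / (n + m)
```

With $p = 0.25$, a rule that covers a single training page correctly scores (1 + 0.5) / 3 = 0.5 rather than 1.0, and a rule covering nothing scores exactly $p$, so $m$ pulls low-coverage rules toward the class prior.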

New learned rules
- `student(A) :- not(has_data(A)), not(has_comment(A)), link_to(B, A), has_jame(B), has_paul(B), not(has_mail(B)).`
- `faculty(A) :- has_professor(A), has_ph(A), link_to(B, A), has_faculti(B).`
- `course(A) :- has_instructor(A), not(has_good(A)), link_to(A, B), not(link_to(B, _1)), has_assign(B).`

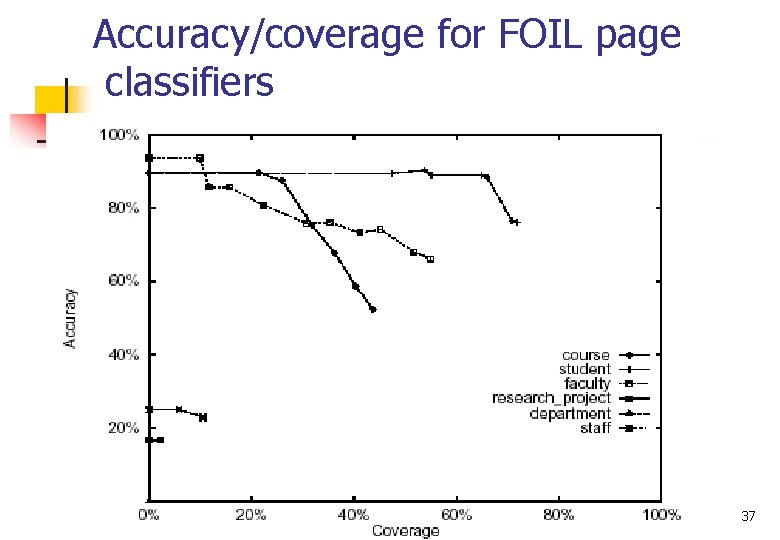

Accuracy/coverage for FOIL page classifiers

Boosting
- Which classifier gives the best prediction depends on the class.
- Combine the predictions using the confidence measure.

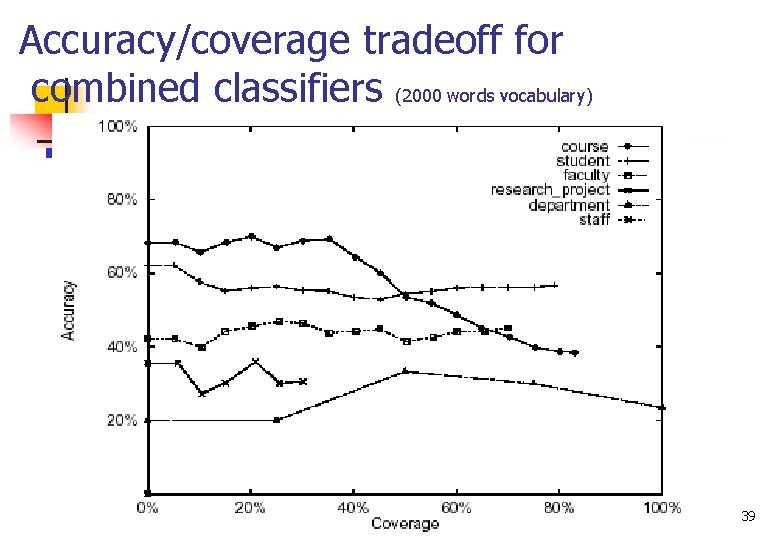

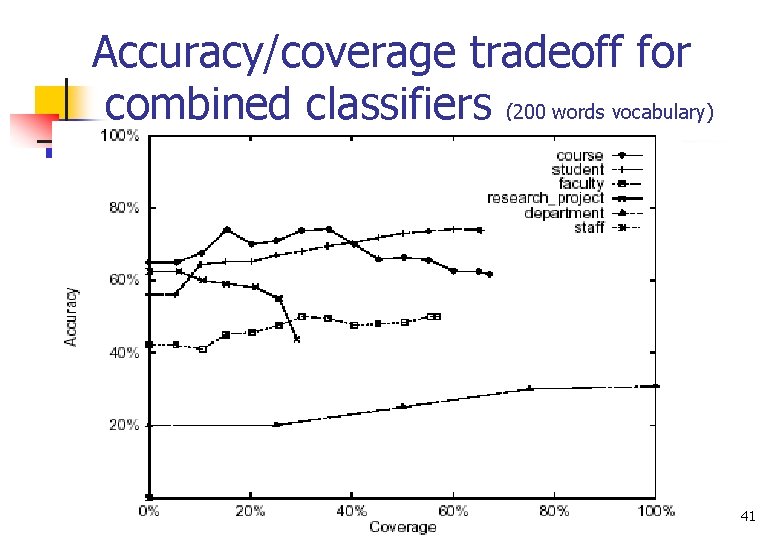

Accuracy/coverage tradeoff for combined classifiers (2000-word vocabulary)

Boosting
- Disappointing: somehow it is not uniformly better.
- Possible solutions:
  - Using reduced-size dictionaries (next)
  - Using other methods for combining predictions (voting instead of best_match / total_#_of_matches)
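
The two combination strategies can be contrasted in a short Python sketch: taking the single most confident prediction versus a majority vote that ignores confidences. The `(label, confidence)` inputs and function names are hypothetical.

```python
from collections import Counter

def combine_by_confidence(predictions: list[tuple[str, float]]) -> str:
    """Return the label predicted with the highest confidence by any classifier."""
    label, _ = max(predictions, key=lambda pred: pred[1])
    return label

def combine_by_vote(predictions: list[tuple[str, float]]) -> str:
    """Majority vote over labels; ties go to the label encountered first."""
    votes = Counter(label for label, _ in predictions)
    return votes.most_common(1)[0][0]
```

For three classifiers predicting `course` (0.9), `student` (0.6), and `student` (0.55), the confidence rule answers `course` while voting answers `student`, so the choice of combiner can change the outcome.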

Accuracy/coverage tradeoff for combined classifiers (200-word vocabulary)

Multi-page segments
- The group is the longest prefix (indicated in parentheses):
  - `(@/{user, faculty, people, home, projects}/*)/*.{html, htm}`
  - `(@/{cs???, www/, *})/` …
- A primary page is any page whose URL matches:
  - `@/index.{html, htm}`
  - `@/home.{html, htm}`
  - `@/%1/%1.{html, htm}`
  - …
- If no page in the group matches one of these patterns, then the page with the highest score for any non-other class is a primary page.
- Any non-primary page is tagged as Other.
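
A Python sketch of the primary-page rule for one group. It rests on assumptions not stated on the slide: `@` is read as the group's URL prefix, `%1` as a path segment that must repeat (as in `/jim/jim.html`), and only the three listed patterns are implemented (the elided ones are not guessed). The names and example URLs are hypothetical.

```python
import re

# Assumed reading: "@" = group prefix, "%1" = repeated path segment,
# "{html, htm}" = optional trailing "l".
PRIMARY_PATTERNS = [
    r"/index\.html?",
    r"/home\.html?",
    r"/([^/]+)/\1\.html?",
]

def is_primary(group: str, url: str) -> bool:
    """True if url lies under group and matches one of the primary patterns."""
    if not url.startswith(group):
        return False
    rest = url[len(group):]
    return any(re.fullmatch(p, rest) for p in PRIMARY_PATTERNS)

def primary_pages(group: str, scores: dict[str, float]) -> list[str]:
    """scores: url -> highest score for any non-other class.
    Pattern matches are primary; otherwise fall back to the top-scoring page.
    Every other page in the group would be tagged Other."""
    matches = [url for url in scores if is_primary(group, url)]
    if matches:
        return matches
    return [max(scores, key=scores.get)]
```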

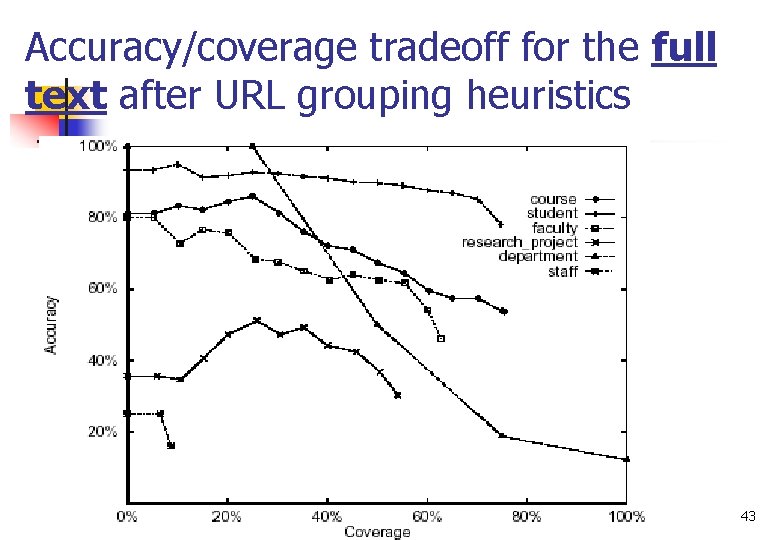

Accuracy/coverage tradeoff for full-text classifiers after URL grouping heuristics

Conclusion: Recognizing Classes
- Hypertext provides redundant information.
- We can classify using several methods:
  - Full text
  - Heading/title
  - Hyperlinks
  - Text in neighboring pages
  - Grouping pages
- No method alone is good enough.
- Combining predictions (classification methods) gives better results.

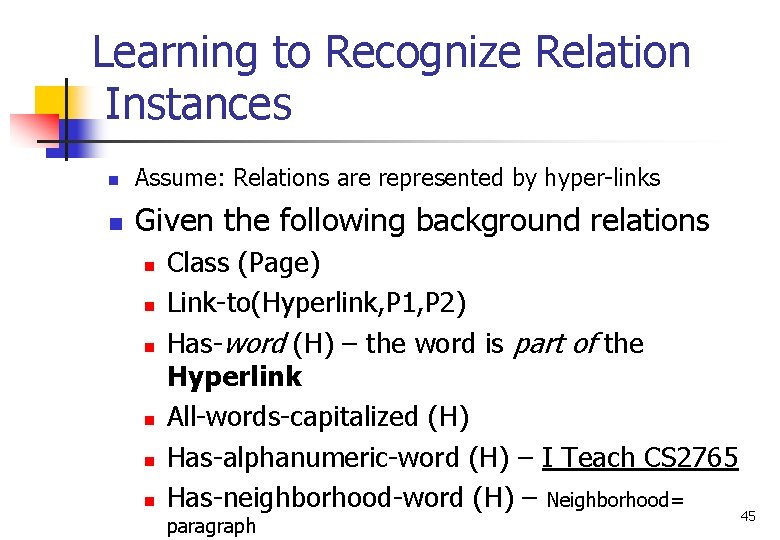

Learning to Recognize Relation Instances
- Assume: relations are represented by hyperlinks.
- Given the following background relations:
  - Class(Page)
  - Link-to(Hyperlink, P1, P2)
  - Has-word(H): the word is part of the hyperlink
  - All-words-capitalized(H)
  - Has-alphanumeric-word(H): e.g., "I teach CS 2765"
  - Has-neighborhood-word(H): neighborhood = paragraph

Learning to Recognize Relation Instances (cont.)
- Try to learn the following:
  - Members-of-project(P1, P2)
  - Instructors_of_course(P1, P2)
  - Department_of_person(P1, P2)

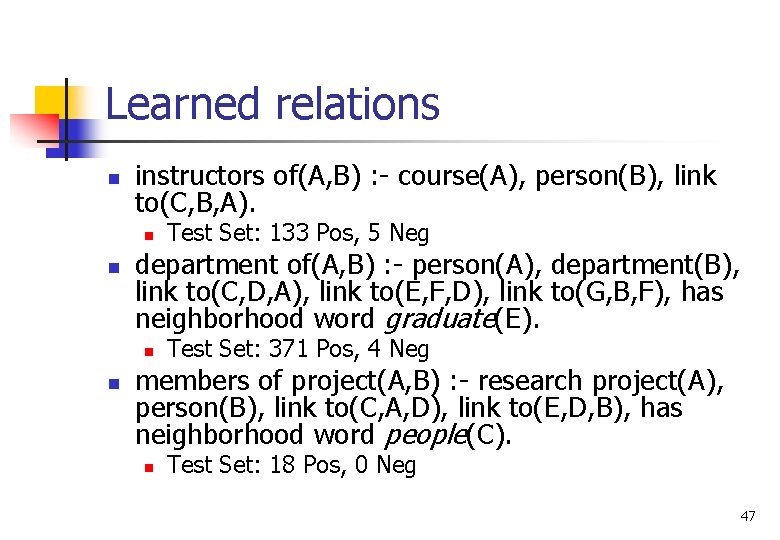

Learned relations
- `instructors_of(A, B) :- course(A), person(B), link_to(C, B, A).` Test set: 133 Pos, 5 Neg
- `department_of(A, B) :- person(A), department(B), link_to(C, D, A), link_to(E, F, D), link_to(G, B, F), has_neighborhood_word_graduate(E).` Test set: 371 Pos, 4 Neg
- `members_of_project(A, B) :- research_project(A), person(B), link_to(C, A, D), link_to(E, D, B), has_neighborhood_word_people(C).` Test set: 18 Pos, 0 Neg
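
Applying the learned `instructors_of` rule amounts to a join over classified pages and `link_to(Hyperlink, From, To)` facts, with the argument order taken from the background relation Link-to(Hyperlink, P1, P2). A Python sketch over a hypothetical page graph:

```python
# Hypothetical page classes, as produced by the page classifiers.
CLASS = {"cs101": "course", "ai": "course", "jim": "person", "tom": "person"}
# link_to(Hyperlink, From, To) facts: hyperlink id, source page, target page.
LINK_TO = [
    ("h1", "jim", "cs101"),
    ("h2", "tom", "cs101"),
    ("h3", "cs101", "jim"),
    ("h4", "jim", "ai"),
]

def instructors_of() -> set[tuple[str, str]]:
    """instructors_of(A, B) :- course(A), person(B), link_to(C, B, A).
    That is, person page B contains a hyperlink C pointing to course page A."""
    return {
        (a, b)
        for _c, b, a in LINK_TO
        if CLASS.get(a) == "course" and CLASS.get(b) == "person"
    }
```

The link `h3` from the course page to Jim's page does not fire the rule, because the rule requires the hyperlink to go from the person's page to the course page.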

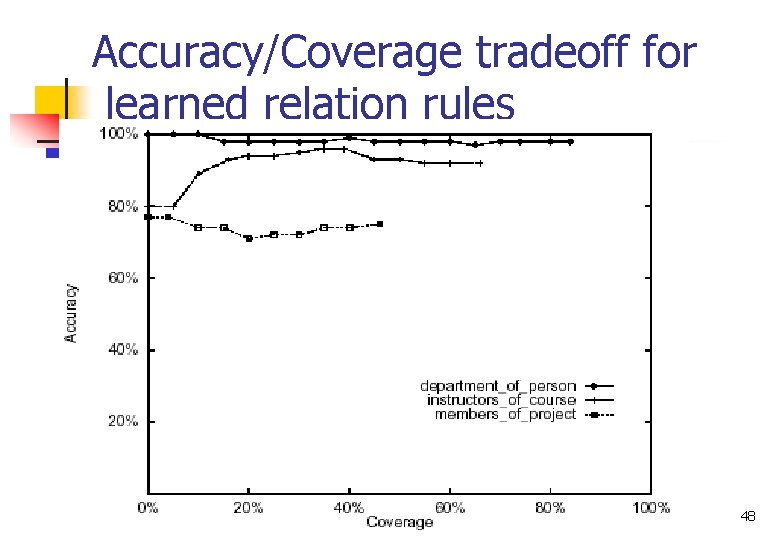

Accuracy/coverage tradeoff for learned relation rules

Learning to Extract Text Fields
- Sometimes we want a small fragment of text, not the whole web page or class instance (like Jon, Peter, etc.).
- Example: "Make me hotel and flight arrangements for the upcoming Linux conference."

Predefined predicates
- Let $F = w_1, w_2, \ldots, w_j$ be a fragment of text.
- length({<, >, =, …}, N)
- some(Var, Path, Feat, Value), e.g. some(A, [next_token, next_token], numeric, true)
- position(Var, From, Relop, N)
- relpos(Var1, Var2, Relop, N)
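
A token-level Python reading of `some` and `length`. It assumes a fragment is a half-open index range `[start, end)` over the page's tokens, that paths step with `next_token`/`prev_token`, and implements only two example features (`numeric`, and `word` compared lower-cased); features such as `in_title` are not modeled. All names besides the predicate names are hypothetical.

```python
import operator

STEP = {"next_token": 1, "prev_token": -1}
RELOP = {"<": operator.lt, ">": operator.gt, "=": operator.eq}

def feature(token: str, feat: str):
    """Two example token features; others are not implemented in this sketch."""
    if feat == "numeric":
        return token.isdigit()
    if feat == "word":
        return token.lower()
    raise ValueError(f"unsupported feature: {feat}")

def some(tokens: list[str], start: int, end: int, path: list[str], feat: str, value) -> bool:
    """some(Var, Path, Feat, Value) on fragment tokens[start:end]: true if, for some
    token in the fragment, the token reached by following path has feat == value."""
    for i in range(start, end):
        j = i + sum(STEP[move] for move in path)
        if 0 <= j < len(tokens) and feature(tokens[j], feat) == value:
            return True
    return False

def length(start: int, end: int, relop: str, n: int) -> bool:
    """length(Relop, N): compare the fragment's token count with N."""
    return RELOP[relop](end - start, n)
```

On the tokens of the example page below, the fragment "Bruce Randall" satisfies both `some(A, [prev_token], word, "gmt")` and `length(<, 3)`, which illustrates how such a rule can fire on the wrong name occurrence.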


A wrong example
- `ownername(Fragment) :- some(A, [prev_token], word, "gmt"), some(A, [], in_title, true), some(A, [], word, unknown), some(A, [], quadrupletonp, false), length(<, 3).`
- Page text: `Last-Modified: Wednesday, 26-Jun-96 01:37:46 GMT <title> Bruce Randall Donald </title> <h1> <img src="ftp://ftp.cs.cornell.edu/pub/brd/images/brd.gif"> <p> Bruce Randall Donald Associate Professor`

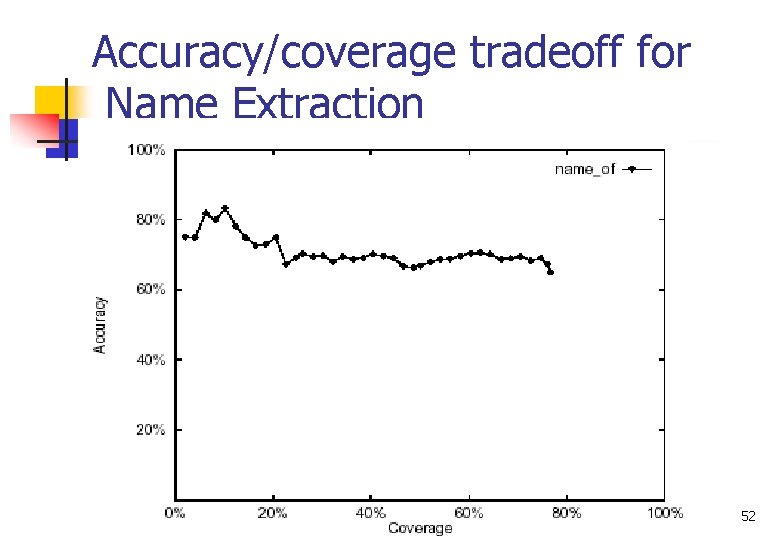

Accuracy/coverage tradeoff for name extraction

Conclusions
- Used machine learning algorithms to create information extraction methods for each desired type of knowledge.
- WebKB achieves 70% accuracy at 30% coverage.
- Bag-of-words (hyperlinks, web pages, and full text) and first-order learning can be used to boost the confidence.
- First-order learning can be used to look outward from the page and consider its neighbors.

Problems
- Not as accurate as we want:
  - You can get more accuracy at the cost of coverage.
  - Use linguistic features (verbs).
  - Add new methods to the booster (e.g., predict the department of a professor based on the department of his student advisees).
- A per-topic, per-language, per-… method.
- Needs hand-made labeling to learn.
  - Learners with high accuracy can be used to teach learners with low accuracy.
