VQ for ASR

Jyh-Shing Roger Jang (張智星)
jang@cs.nthu.edu.tw
http://www.cs.nthu.edu.tw/~jang
Multimedia Information Retrieval Laboratory
Department of Computer Science, National Tsing Hua University

Construct VQ-based Classifiers

- Design phase
  - Use the k-means or generalized Lloyd algorithm to design a codebook (a set of code words, also called centroids or prototypical vectors) for each class.
- Application phase
  - For a given utterance, compute the average distortion against each class's codebook and assign the utterance to the class with the minimum score.
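The two phases above can be sketched roughly as follows. This is a minimal illustration, not the lecture's implementation: it assumes squared Euclidean distortion, plain k-means with a fixed number of iterations, and toy 2-D Gaussian "frames" in place of real acoustic features. The names `train_codebook`, `average_distortion`, and `classify` are hypothetical.

```python
import numpy as np

def train_codebook(frames, k, n_iter=20, seed=0):
    """Design phase: plain k-means on an (n_frames, dim) array of one class."""
    rng = np.random.default_rng(seed)
    # Initialize code words from k distinct training frames.
    codebook = frames[rng.choice(len(frames), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Squared Euclidean distance from every frame to every code word.
        d = ((frames[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
        labels = d.argmin(axis=1)
        for j in range(k):
            members = frames[labels == j]
            if len(members):  # keep the old code word if its cell is empty
                codebook[j] = members.mean(axis=0)
    return codebook

def average_distortion(frames, codebook):
    """Mean distance from each frame to its nearest code word."""
    d = ((frames[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    return d.min(axis=1).mean()

def classify(frames, codebooks):
    """Application phase: pick the class whose codebook gives the lowest score."""
    scores = {label: average_distortion(frames, cb)
              for label, cb in codebooks.items()}
    return min(scores, key=scores.get)

# Toy data (assumption): two well-separated classes of 2-D frames.
rng = np.random.default_rng(0)
train = {"zero": rng.normal(0.0, 1.0, size=(200, 2)),
         "one": rng.normal(5.0, 1.0, size=(200, 2))}
codebooks = {label: train_codebook(x, k=4) for label, x in train.items()}

test_utterance = rng.normal(5.0, 1.0, size=(30, 2))
predicted = classify(test_utterance, codebooks)
```

Note that the score ignores the order of the frames, which is why this kind of classifier works without time alignment.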

Vector Quantization (VQ)

- Advantages of VQ for classifier design:
  - Reduced storage
  - Reduced computation
  - Efficient representation that improves generalization capability

ASR without Time Alignment

- Good for simple vocabularies of highly distinct words, such as English digits
- Bad for vocabularies
  - With words that can only be distinguished by their temporal sequential characteristics, such as "car" and "rack", "we" and "you"
  - With complex speech classes that encompass long utterances with rich phonetic content

Centroid Computation in VQ

- The codebook for a class should be designed to minimize the average distortion. Different distortions lead to different codebook designs.
  - L2 distance → mean vector
  - Mahalanobis distance → mean vector
  - L1 distance → median vector
- Other distortions and the corresponding centroid computations can be found in Section 5.2.2 of "Fundamentals of Speech Recognition" by L. Rabiner and B.-H. Juang.
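A quick numerical check of the three distortion/centroid pairings listed above is sketched below. It rests on stated assumptions: the L2 and Mahalanobis distortions are taken in their squared form (the form for which the mean is the minimizer), the median is computed coordinate-wise, and the data is a toy Gaussian cell. All function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
cell = rng.normal(size=(101, 3))      # toy vectors assigned to one codebook cell

mean_vec = cell.mean(axis=0)
median_vec = np.median(cell, axis=0)  # coordinate-wise median
inv_cov = np.linalg.inv(np.cov(cell, rowvar=False))

def total_l2(c):
    """Sum of squared Euclidean distances from the cell to candidate c."""
    return ((cell - c) ** 2).sum()

def total_mahalanobis(c):
    """Sum of squared Mahalanobis distances from the cell to candidate c."""
    diff = cell - c
    return np.einsum("ij,jk,ik->", diff, inv_cov, diff)

def total_l1(c):
    """Sum of L1 (city-block) distances from the cell to candidate c."""
    return np.abs(cell - c).sum()

def beats_perturbations(total, centroid, trials=200):
    """True if no randomly perturbed candidate has lower total distortion."""
    best = total(centroid)
    return all(best <= total(centroid + rng.normal(scale=0.1, size=3))
               for _ in range(trials))

mean_is_best_l2 = beats_perturbations(total_l2, mean_vec)
mean_is_best_mahalanobis = beats_perturbations(total_mahalanobis, mean_vec)
median_is_best_l1 = beats_perturbations(total_l1, median_vec)
```

The perturbation test is only a sanity check, not a proof. The underlying reason is that each total is convex in the candidate and its minimizer is the mean (squared L2, squared Mahalanobis) or the per-coordinate median (L1).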

Other Uses of VQ in ASR

- VQ can be used to speed up DTW-based comparison:
  - VQ-based classifiers can be used as a preprocessor for DTW-based classifiers.
  - VQ can be used for efficient computation of (approximate) DTW distance.
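One common way to realize the approximate DTW distance mentioned above is to quantize every frame to a code word index and look up local distances in a precomputed code-word-to-code-word table, so no frame-level distance is computed during alignment. The sketch below assumes that scheme, a Euclidean local distance, a basic three-step DTW recursion, and a tiny hand-made codebook; the slides do not specify these details, and the function names are hypothetical.

```python
import numpy as np

def quantize(frames, codebook):
    """Map each frame to the index of its nearest code word."""
    d = ((frames[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)

def dtw_from_cost(cost):
    """Basic DTW over a local-cost matrix; returns the accumulated cost."""
    n, m = cost.shape
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i, j] = cost[i - 1, j - 1] + min(
                acc[i - 1, j], acc[i, j - 1], acc[i - 1, j - 1])
    return acc[n, m]

def exact_dtw(a, b):
    """DTW with frame-to-frame Euclidean distances computed directly."""
    cost = np.sqrt(((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=2))
    return dtw_from_cost(cost)

def approx_dtw(idx_a, idx_b, table):
    """DTW where local costs are table lookups between code word indices."""
    return dtw_from_cost(table[np.ix_(idx_a, idx_b)])

# Toy codebook (assumption) and its pairwise distance table, computed once.
codebook = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
table = np.sqrt(((codebook[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2))

rng = np.random.default_rng(0)
a = codebook[[0, 1, 1, 3]] + 0.05 * rng.normal(size=(4, 2))
b = codebook[[0, 2, 3, 3, 3]] + 0.05 * rng.normal(size=(5, 2))

d_exact = exact_dtw(a, b)
d_approx = approx_dtw(quantize(a, codebook), quantize(b, codebook), table)
```

With a codebook of K code words the table has K × K entries, so the saving grows with the feature dimension and the number of comparisons; the price is the quantization error between `d_exact` and `d_approx`.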

DTW for Speaker-independent Tasks

- Basic procedures to adapt DTW for speaker-independent tasks:
  - Massive recordings
  - Use MFCC with vocal tract length normalization
  - Use modified k-means to find cluster centers (or representative utterances) for each class

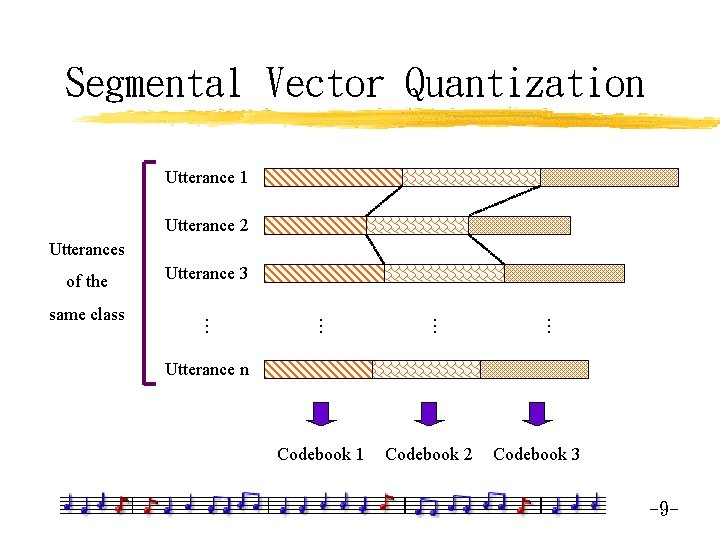

Modified K-means

- Goal: Find representative objects in a set whose elements lie in a non-Euclidean space
- Steps:
  1. Select initial centers
  2. Assign each utterance to a cluster
  3. Revise cluster centers:
     - Minimax centers
     - Pseudoaverage centers
     - Segmental version of the above two (segmental VQ)
  4. Go back to step 2 until convergence
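The loop above can be sketched on a precomputed pairwise distance matrix, which is all that is available when the objects are utterances compared by DTW. This sketch covers only the minimax update, taken here to mean the cluster member whose largest distance to any other member is smallest; the pseudoaverage and segmental variants are not shown. The toy 1-D points stand in for utterances, and the function names are hypothetical.

```python
import numpy as np

def minimax_center(dist, members):
    """Member whose maximum distance to the other members is smallest."""
    sub = dist[np.ix_(members, members)]
    return int(members[sub.max(axis=1).argmin()])

def modified_kmeans(dist, init_centers, max_iter=50):
    """Cluster objects given only an (n, n) distance matrix.

    Centers are always indices of actual objects, so no averaging in the
    feature space is needed.
    """
    centers = list(init_centers)
    for _ in range(max_iter):
        # Step 2: assign every object to its nearest center.
        labels = dist[:, centers].argmin(axis=1)
        # Step 3: revise each center using the minimax rule.
        new_centers = []
        for j, old in enumerate(centers):
            members = np.flatnonzero(labels == j)
            new_centers.append(minimax_center(dist, members) if len(members) else old)
        # Step 4: stop once the centers no longer change.
        if new_centers == centers:
            break
        centers = new_centers
    labels = dist[:, centers].argmin(axis=1)
    return centers, labels

# Toy stand-in for DTW distances (assumption): absolute differences of 1-D points.
points = np.array([0.0, 1.0, 2.0, 10.0, 11.0, 12.0])
dist = np.abs(points[:, None] - points[None, :])

centers, labels = modified_kmeans(dist, init_centers=[0, 1])
```

In a real system `dist[i, j]` would hold the DTW distance between utterances `i` and `j` of the same word class, and the resulting centers would serve as the representative utterances for that class.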

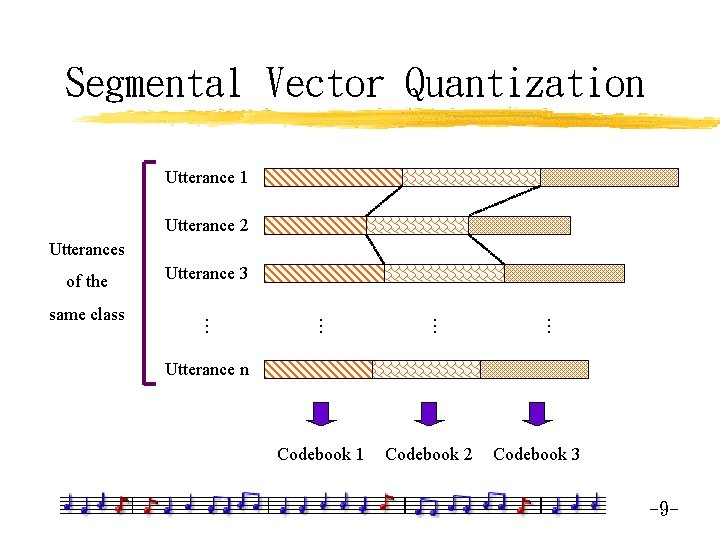

Segmental Vector Quantization

[Diagram labels: Utterance 1, Utterance 2, Utterance 3, ..., Utterance n (utterances of the same class); Codebook 1, Codebook 2, Codebook 3]