VMAD-SG2-10-02 Documentation requirements for Simulation

- Slides: 24

VMAD-SG2-10-02: Documentation requirements for Simulation. NL/RDW presentation, Espedito Rusciano

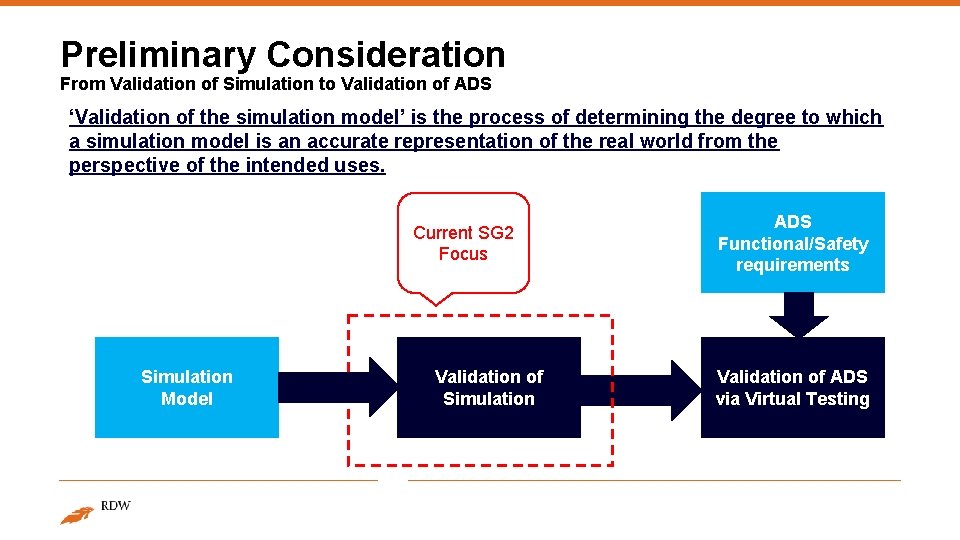

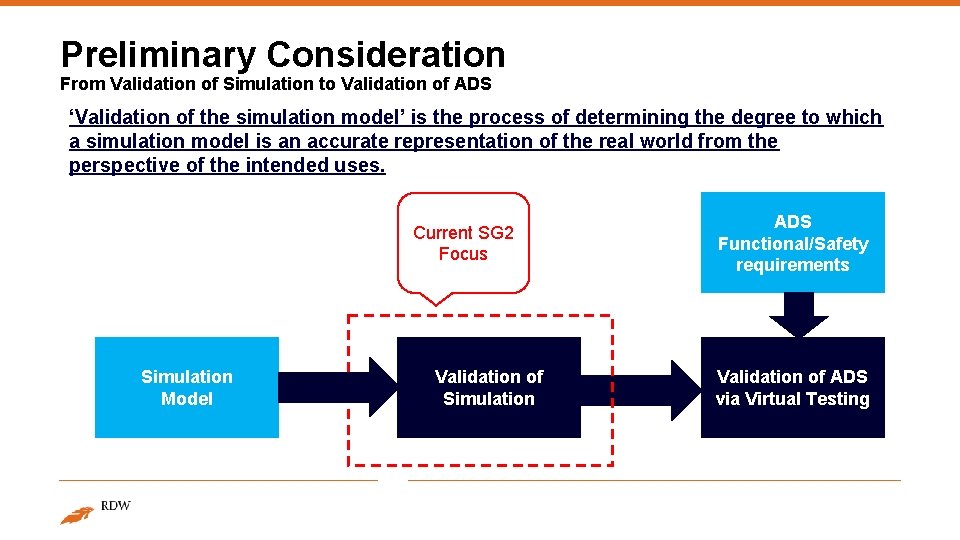

Preliminary Consideration: From Validation of Simulation to Validation of ADS

"Validation of the simulation model" is the process of determining the degree to which a simulation model is an accurate representation of the real world from the perspective of the intended uses.

Diagram labels: Current SG 2 Focus; Simulation Model; Validation of Simulation; ADS Functional/Safety requirements; Validation of ADS via Virtual Testing.

Beyond the Validation of the Simulation Model

Validation has limitations:
- Limited scope of validation tests
  - Validation test data cannot cover all possible scenarios
  - Validating the simulation model over the whole domain (all corner cases) may not be feasible
- Validation data are difficult to obtain
  - Obtaining sufficient validation test data can be costly
  - The "real world" always contains factors not accounted for in the simulation

Documentation should therefore not only provide evidence of the validation of the simulation model; it should also give sufficient information on the simulation toolchain processes and products, with the aim of establishing the overall credibility of the simulation toolchain.

Documentation Requirements for Credibility Assessment

Scope
- To establish the relevant aspects of the simulation model to be documented
- To establish a minimum set of documentation to produce and maintain in order to consider a simulation model and its associated data suitable for use for a specific purpose
- To define, determine, generate and document the information needed to assess the credibility of the simulation

Purpose
- To assure that the virtual testing toolchain and its associated data can be considered suitable for ADS development and validation
- To enable assessors/authorities to understand the steps and decisions taken in the simulation modelling process
- To assess the validity of the analytical results of virtual testing

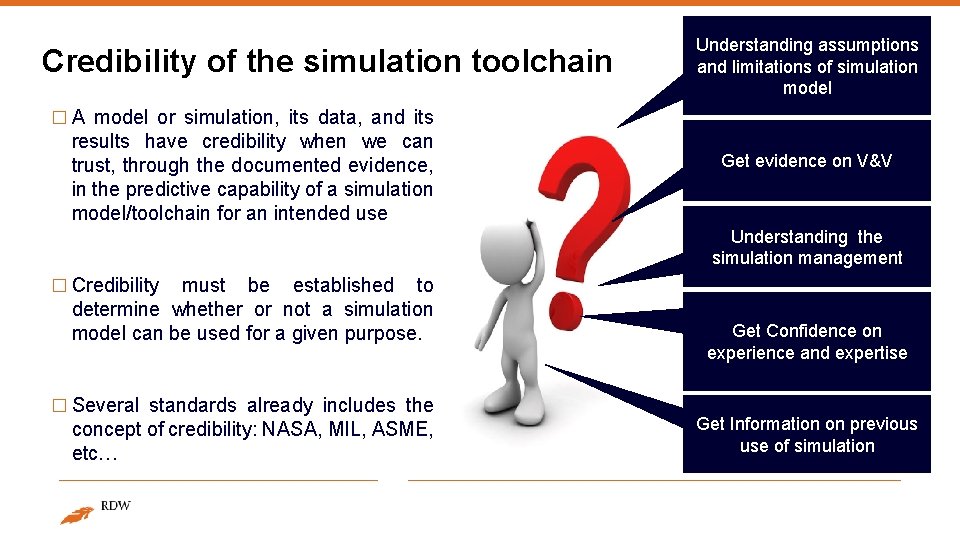

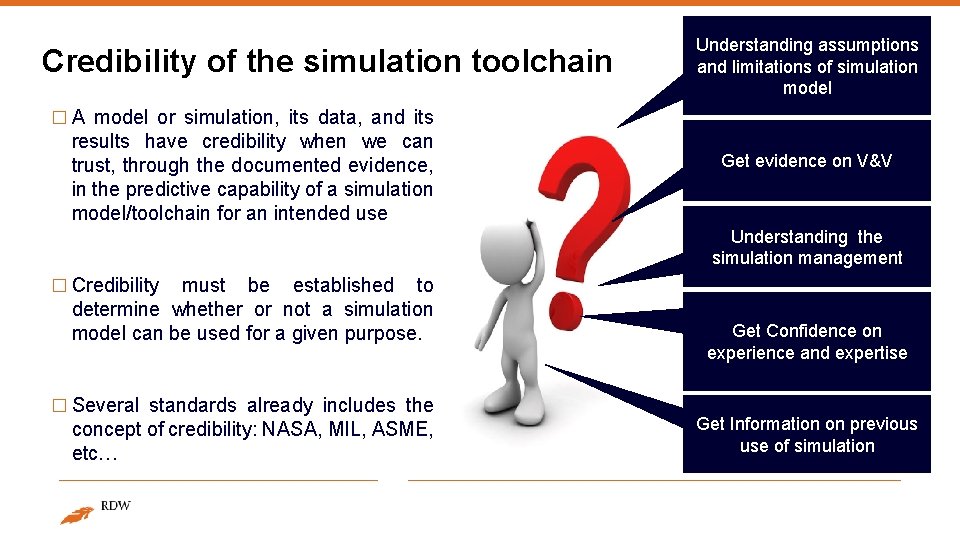

Credibility of the Simulation Toolchain
- A model or simulation, its data, and its results have credibility when the documented evidence allows us to trust the predictive capability of the simulation model/toolchain for an intended use.
- Credibility must be established to determine whether or not a simulation model can be used for a given purpose.
- Several standards already include the concept of credibility (e.g. NASA, MIL, ASME).

Diagram labels: Understanding assumptions and limitations of the simulation model; Get evidence on V&V; Understanding the simulation management; Get confidence in experience and expertise; Get information on previous use of the simulation.

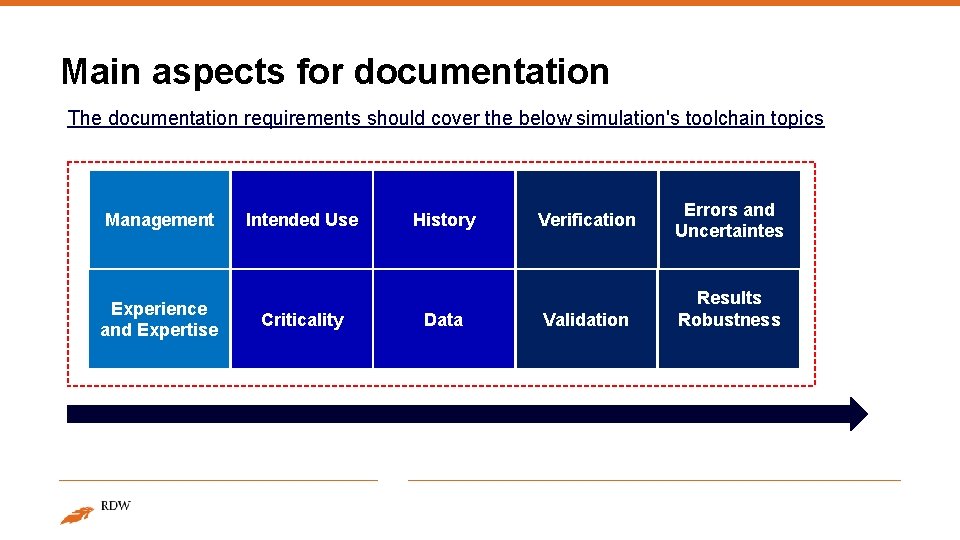

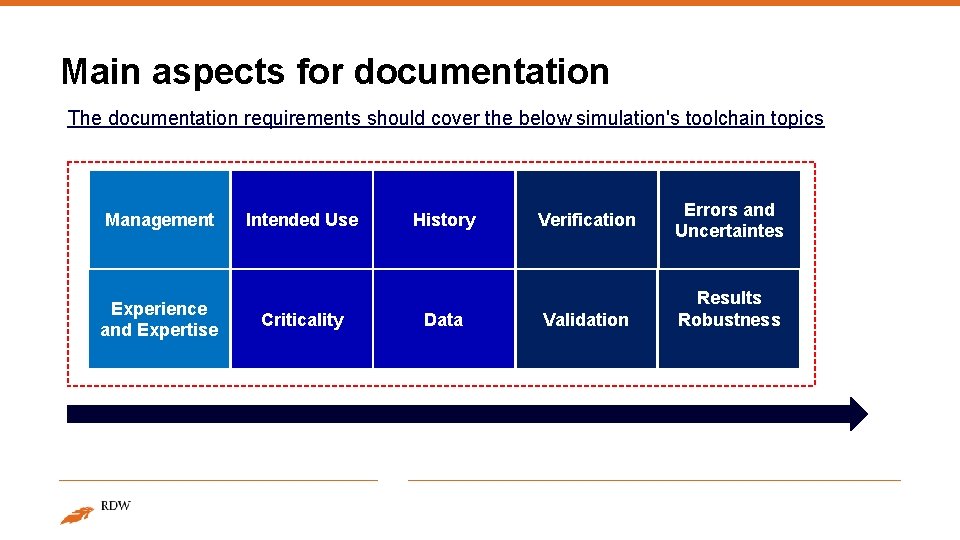

Main Aspects for Documentation

The documentation requirements should cover the following topics of the simulation toolchain:
- Management
- Experience and Expertise
- Intended Use
- Criticality
- History
- Data
- Verification
- Validation
- Errors and Uncertainties
- Result Robustness

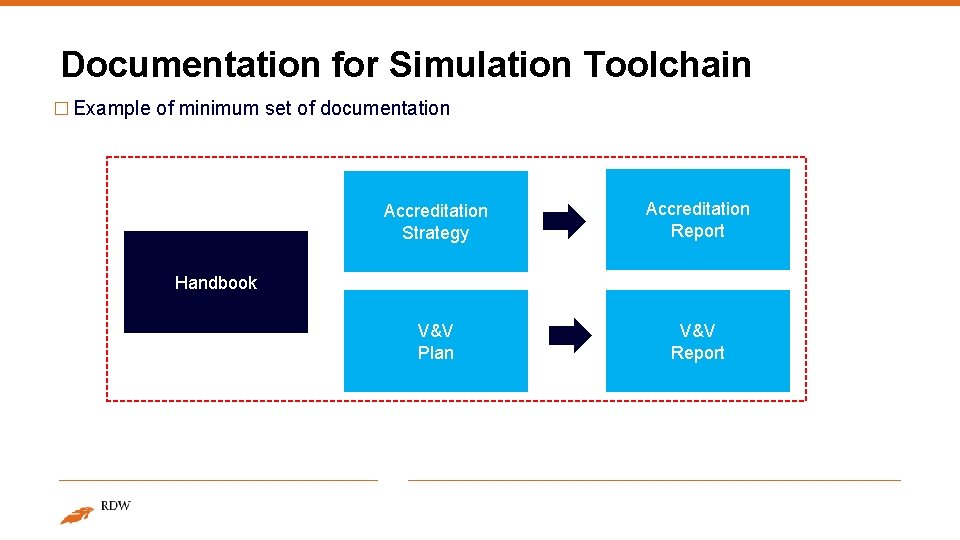

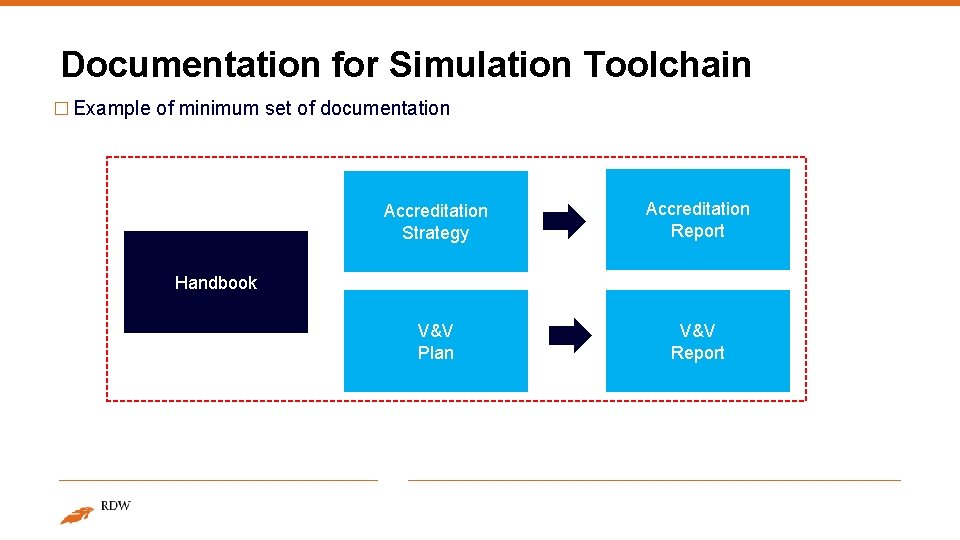

Documentation for Simulation Toolchain

Example of a minimum set of documentation:
- Accreditation Strategy
- Accreditation Report
- V&V Plan
- V&V Report
- Handbook

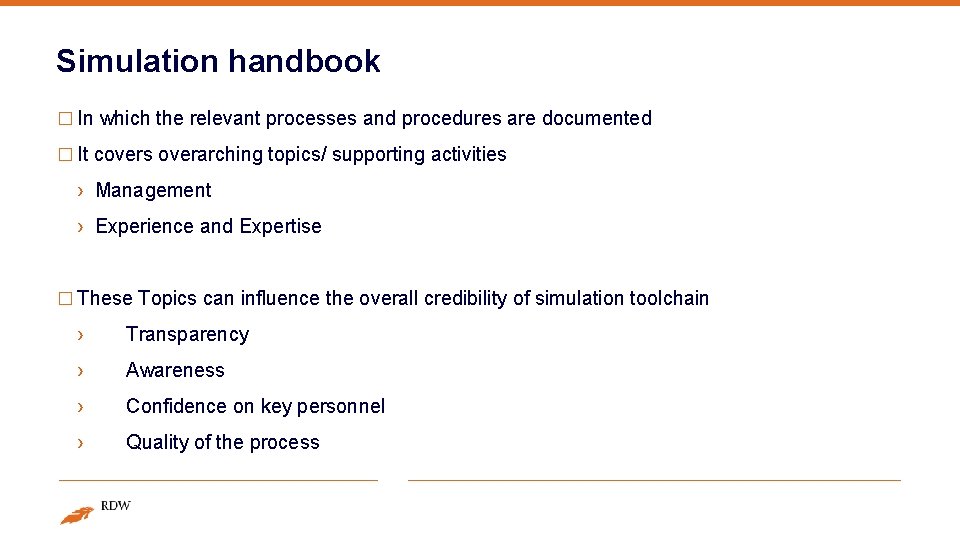

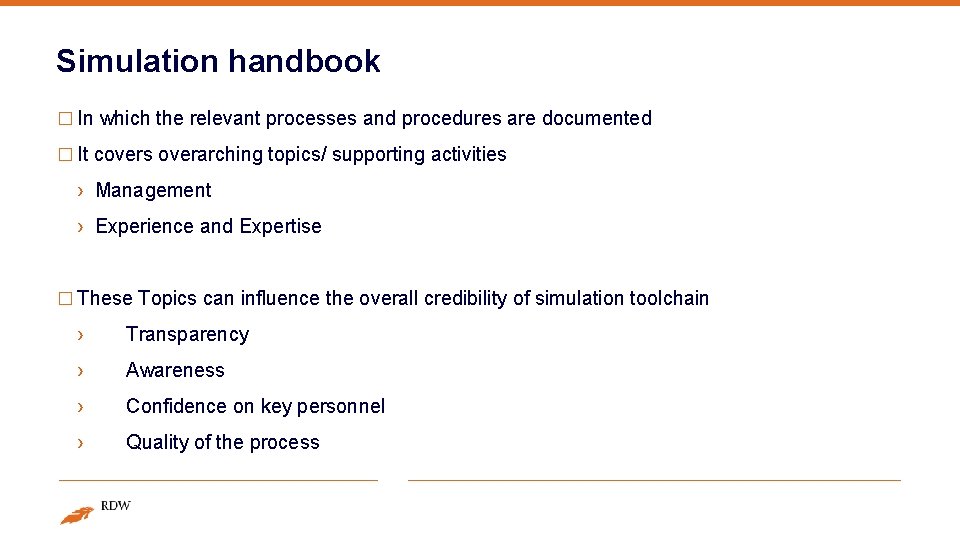

Simulation Handbook
- Documents the relevant processes and procedures
- Covers overarching topics/supporting activities:
  - Management
  - Experience and Expertise
- These topics can influence the overall credibility of the simulation toolchain in terms of:
  - Transparency
  - Awareness
  - Confidence in key personnel
  - Quality of the process

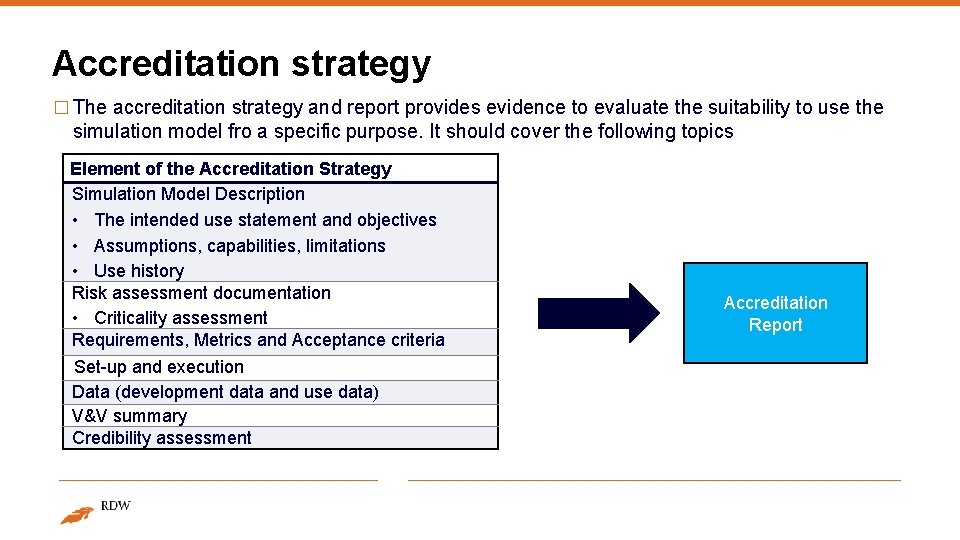

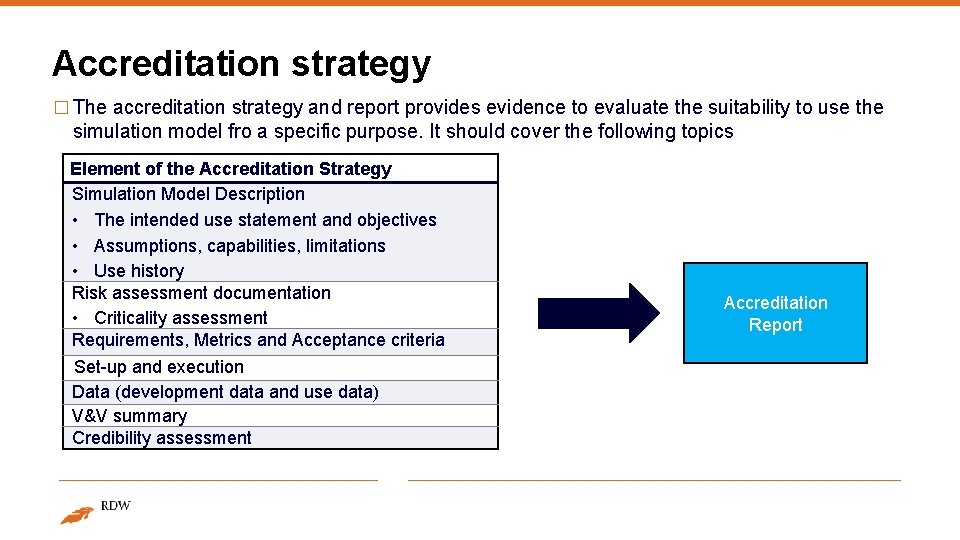

Accreditation Strategy
- The accreditation strategy and report provide evidence to evaluate whether the simulation model is suitable for a specific purpose. They should cover the following topics.

Elements of the Accreditation Strategy:
- Simulation Model Description
  - The intended use statement and objectives
  - Assumptions, capabilities, limitations
  - Use history
- Risk assessment documentation
  - Criticality assessment
- Requirements, Metrics and Acceptance criteria
- Set-up and execution
- Data (development data and use data)
- V&V summary
- Credibility assessment

Diagram label: Accreditation Report

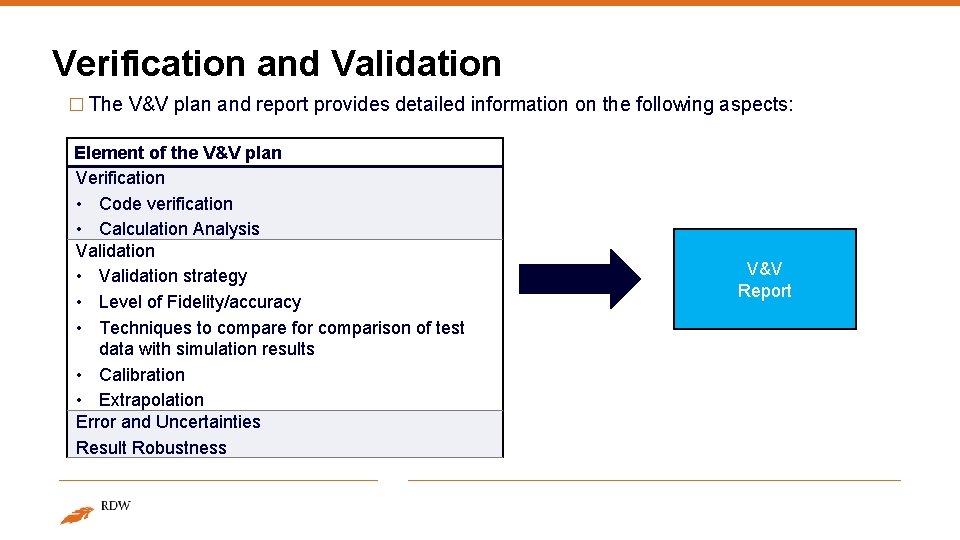

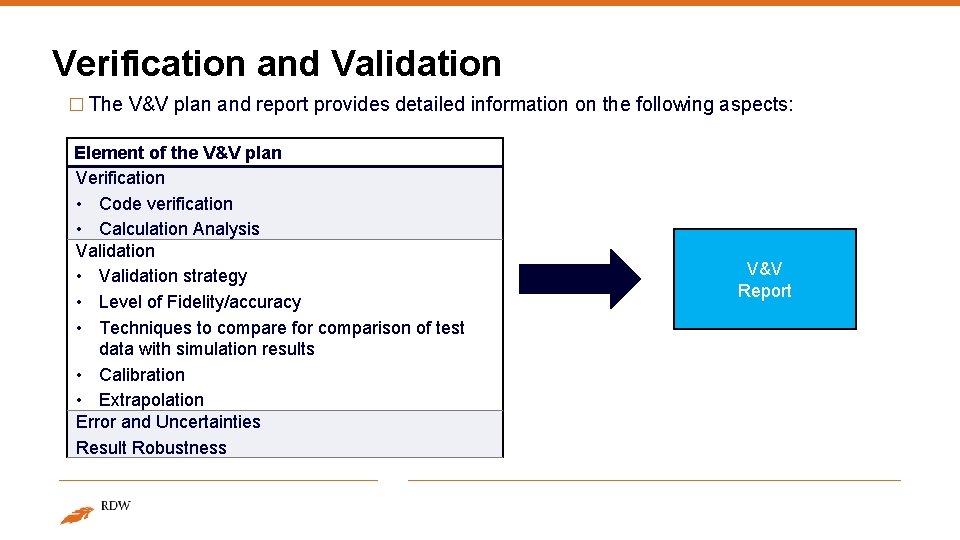

Verification and Validation
- The V&V plan and report provide detailed information on the following aspects.

Elements of the V&V Plan:
- Verification
  - Code verification
  - Calculation analysis
- Validation
  - Validation strategy
  - Level of fidelity/accuracy
  - Techniques for comparing test data with simulation results
  - Calibration
  - Extrapolation
- Errors and Uncertainties
- Result Robustness

Diagram label: V&V Report

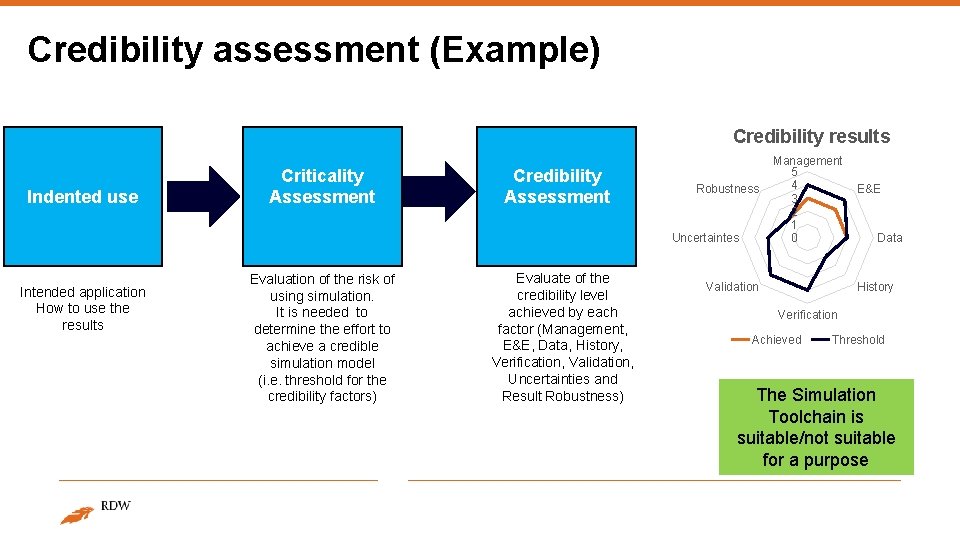

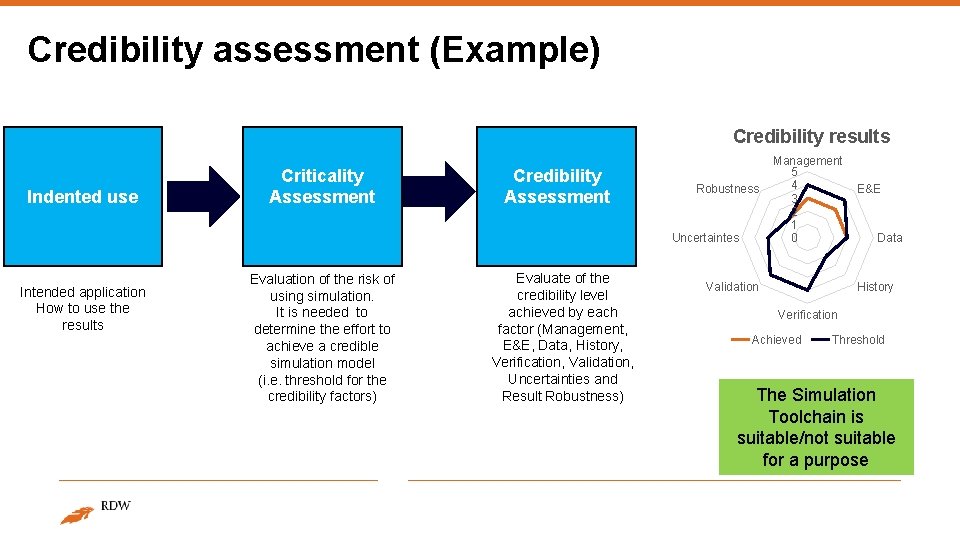

Credibility Assessment (Example)
- Intended use: the intended application and how the results will be used.
- Criticality assessment: evaluation of the risk of using simulation. It is needed to determine the effort required to achieve a credible simulation model (i.e. the threshold for each credibility factor).
- Credibility assessment: evaluation of the credibility level achieved by each factor (Management, E&E, Data, History, Verification, Validation, Uncertainties and Result Robustness).
- Credibility results: the simulation toolchain is suitable/not suitable for a purpose.

Figure: radar chart comparing the achieved and threshold credibility levels (scale 0 to 5) for Management, E&E, Data, History, Verification and Validation.
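The achieved-versus-threshold comparison on this slide can be sketched in Python. This is a minimal sketch, assuming a 0 to 5 scale per factor (as on the chart) and assuming the toolchain counts as suitable only if every factor reaches its threshold; the names `FactorScore` and `assess` and the example scores are hypothetical.

```python
from dataclasses import dataclass

# Credibility factors listed on the slide; each is scored on a 0-5 scale.
FACTORS = ("Management", "E&E", "Data", "History", "Verification",
           "Validation", "Uncertainties", "Result Robustness")


@dataclass
class FactorScore:
    achieved: int   # level supported by the documented evidence
    threshold: int  # level required, derived from the criticality assessment


def assess(scores: dict[str, FactorScore]) -> tuple[bool, list[str]]:
    """Return (suitable, shortfalls).

    Assumed decision rule: suitable only if every factor meets its threshold.
    """
    missing = [f for f in FACTORS if f not in scores]
    if missing:
        raise ValueError(f"no score documented for: {missing}")
    shortfalls = [f for f in FACTORS if scores[f].achieved < scores[f].threshold]
    return (not shortfalls, shortfalls)


# Hypothetical example: every factor at 3/3 except Validation at 2/4.
example = {f: FactorScore(achieved=3, threshold=3) for f in FACTORS}
example["Validation"] = FactorScore(achieved=2, threshold=4)
print(assess(example))  # (False, ['Validation'])
```

Returning the list of shortfalls, not just a yes/no verdict, keeps the result traceable: the accreditation report can state which factors need more evidence.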

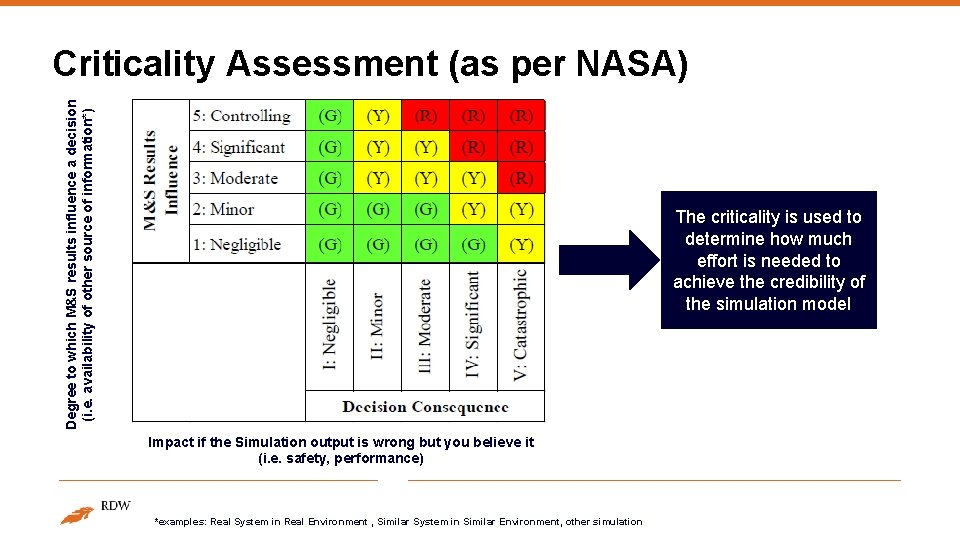

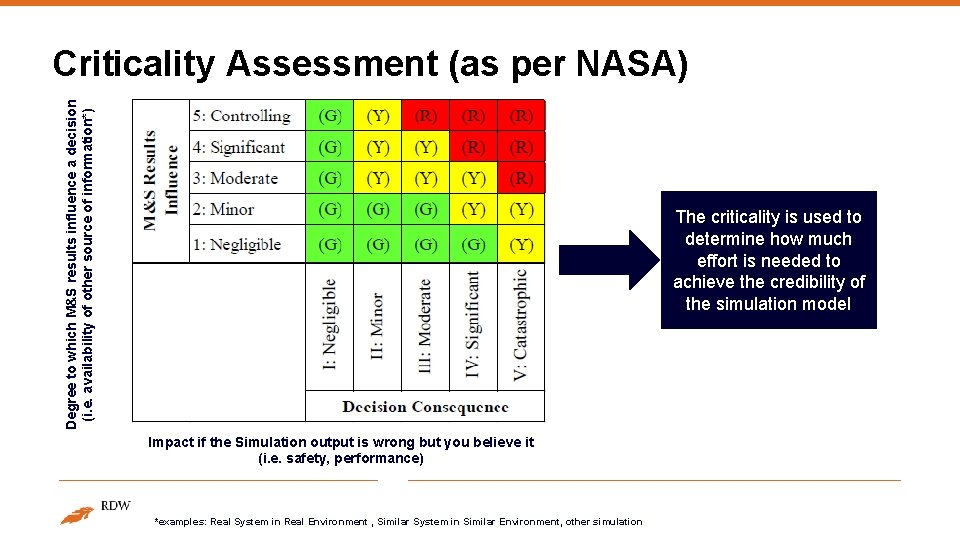

Criticality Assessment (as per NASA)
- The criticality is used to determine how much effort is needed to achieve the credibility of the simulation model. It is assessed from:
  - The degree to which M&S results influence a decision (i.e. the availability of other sources of information*)
  - The impact if the simulation output is wrong but you believe it (e.g. on safety, performance)

*Examples: real system in real environment, similar system in similar environment, other simulation.
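One way to make the criticality assessment operational is to map its two inputs to a criticality class and then to a credibility threshold. The sketch below is illustrative only: the four-level scales, the product-based banding and the `THRESHOLD` values are hypothetical and are not taken from the NASA standard; a programme would substitute its own matrix.

```python
from enum import IntEnum


class Influence(IntEnum):
    """Degree to which M&S results influence the decision (hypothetical scale)."""
    SUPPLEMENTARY = 1  # other sources of information dominate the decision
    SIGNIFICANT = 2
    MAJOR = 3
    CONTROLLING = 4    # no other source of information is available


class Consequence(IntEnum):
    """Impact if the output is wrong but believed (hypothetical scale)."""
    NEGLIGIBLE = 1
    MODERATE = 2
    SERIOUS = 3
    CATASTROPHIC = 4   # e.g. safety-critical


def criticality(influence: Influence, consequence: Consequence) -> str:
    # Hypothetical banding of the influence x consequence product;
    # a real programme would define each matrix cell explicitly.
    score = influence * consequence
    if score >= 9:
        return "high"
    if score >= 4:
        return "medium"
    return "low"


# Hypothetical mapping from criticality to the minimum credibility level
# (0-5 scale) that each factor must reach.
THRESHOLD = {"low": 2, "medium": 3, "high": 4}

level = criticality(Influence.CONTROLLING, Consequence.CATASTROPHIC)
print(level, THRESHOLD[level])  # high 4
```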

Focus on the different credibility factors for Documentation

Supporting activities - Management
- Management describes the extent to which a simulation toolchain exhibits the characteristics of work product management:
  - Process definition; process measurement; process control; process change; continuous improvement; maintenance.
- For example, management should include:
  - Configuration management of models
  - Management of the software and hardware used (i.e. the version of the simulation software, the operating system, and the computer hardware platform used)
  - Procedures for peer review and quality checks
  - Procedures for sharing and documenting lessons learned
  - Procedures for record keeping until the product is no longer in service
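Recording the software and hardware context of each run is one concrete part of configuration management. A minimal sketch using Python's standard `platform`, `sys` and `hashlib` modules follows; the record layout, the `environment_record` name and the `sim_version` argument are assumptions, since the source does not prescribe a format.

```python
import hashlib
import json
import platform
import sys
from datetime import datetime, timezone


def environment_record(model_bytes: bytes, sim_version: str) -> dict:
    """Capture the software/hardware context of one simulation run.

    `sim_version` is supplied by the caller (hypothetical); the toolchain's
    own version query, if any, is not specified in the source.
    """
    return {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "simulation_software_version": sim_version,
        # Content hash ties the run to the exact model file under configuration control.
        "model_sha256": hashlib.sha256(model_bytes).hexdigest(),
        "os": f"{platform.system()} {platform.release()}",
        "machine": platform.machine(),
        "processor": platform.processor(),  # may be empty on some platforms
        "python": sys.version.split()[0],
    }


record = environment_record(b"<model file contents>",
                            sim_version="toolchain 1.0 (hypothetical)")
print(json.dumps(record, indent=2))
```

Storing such a record next to every result file supports the record-keeping procedure: any archived result can later be traced back to the model version and platform that produced it.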

Supporting activities - Experience and Expertise
- Although current tools are easier to use, nothing replaces experience in assessing the process and its results.
- Experience and expertise can play an important role, especially for more complex simulations. It is relatively easy for inexperienced analysts to use modern simulation models to generate seemingly correct results, but it requires training and experience to come to credible results that can withstand the required level of scrutiny. (EASA)
- E&E includes:
  - Qualification (education)
  - Skills and competences (understanding of processes, procedures, roles and responsibilities)
  - Training (initial and recurrent)
- Ultimately, E&E documentation provides evidence to convince the auditor of the ability to perform the relevant simulation activities.

Verification

Why not just validate? Why is verification needed as well? Verification helps to provide assurance that a simulation will not exhibit unrealistic or unstable behavior in those areas that are not or cannot be tested, contributing to the overall credibility of the simulation. (ref. DoD)
- Code verification
  - Establishing confidence, through the collection of evidence, that the computational models are working correctly
- Calculation (or solution) verification
  - Identifying the presence of any numerical and logical errors in the model and assessing their impact on the accuracy of the results
- Other aspects: (a) rigor of the verification processes, and (b) establishment of processes for the development of simulation models
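A common code-verification technique is an order-of-accuracy test against a problem with a known exact solution. As an illustration (not a method prescribed by the source), the sketch integrates dy/dt = -y with forward Euler, whose formal order is 1, and checks that halving the step size roughly halves the error; the function names are hypothetical.

```python
import math


def euler_decay(h: float, t_end: float = 1.0) -> float:
    """Forward-Euler solution of dy/dt = -y, y(0) = 1, evaluated at t_end."""
    n = round(t_end / h)
    y = 1.0
    for _ in range(n):
        y += h * (-y)
    return y


def observed_order(h: float) -> float:
    """Observed convergence order from step sizes h and h/2 (exact: exp(-1))."""
    exact = math.exp(-1.0)
    e_coarse = abs(euler_decay(h) - exact)
    e_fine = abs(euler_decay(h / 2) - exact)
    return math.log(e_coarse / e_fine) / math.log(2.0)


p = observed_order(0.01)
print(f"observed order = {p:.3f}")  # expected to be close to 1
assert abs(p - 1.0) < 0.05, "observed order disagrees with forward Euler's formal order"
```

If the observed order drifted away from the formal order, that would point to a programming error, which is exactly the kind of unacknowledged error code verification is meant to catch.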

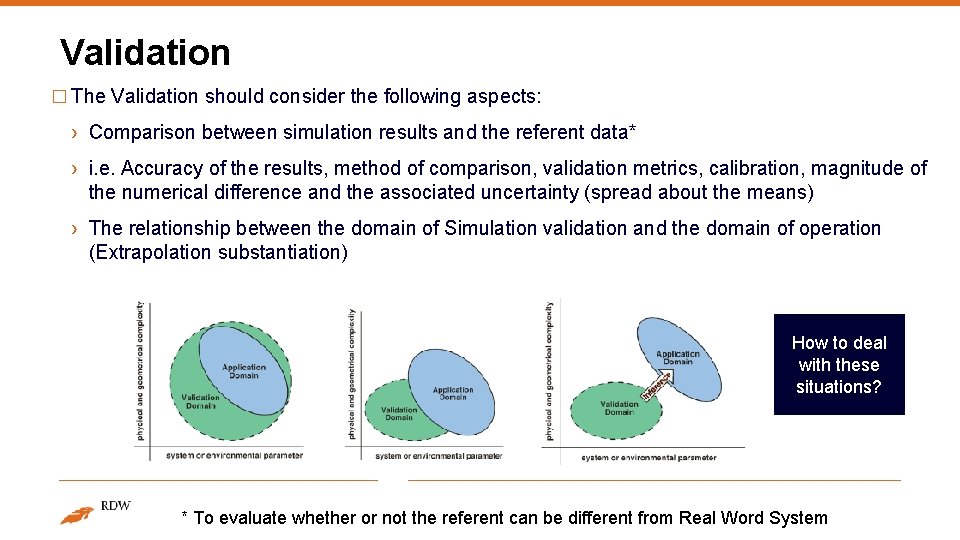

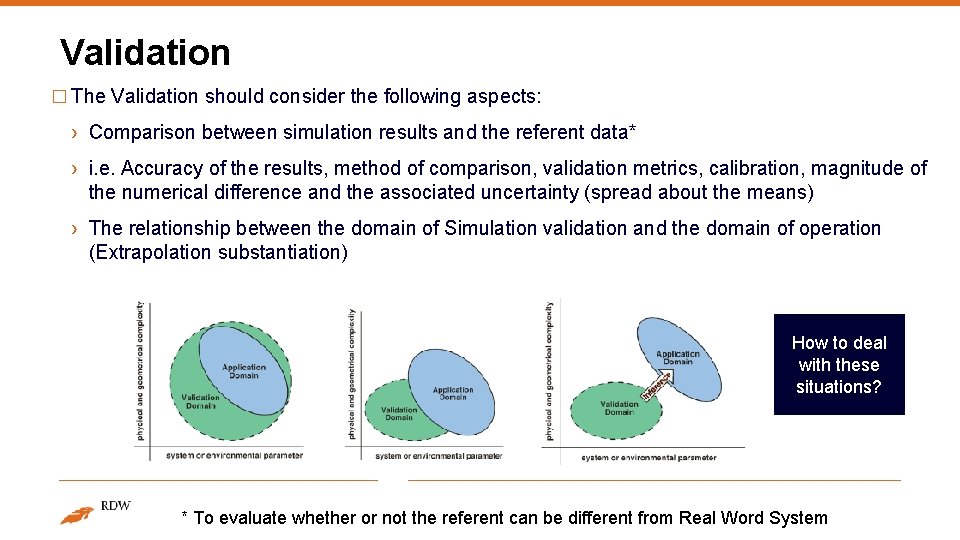

Validation
- Validation should consider the following aspects:
  - Comparison between simulation results and the referent data*
    - e.g. accuracy of the results, method of comparison, validation metrics, calibration, magnitude of the numerical difference and the associated uncertainty (spread about the means)
  - The relationship between the domain of simulation validation and the domain of operation (extrapolation substantiation)
- How to deal with these situations?

*To evaluate whether or not the referent can differ from the real-world system.
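The comparison between simulation results and referent data can be expressed through simple validation metrics. This sketch assumes both series are sampled at the same instants; the metric selection, the RMSE-based acceptance rule and the braking-deceleration numbers are hypothetical.

```python
import math
from statistics import mean, stdev


def validation_metrics(simulated: list[float], referent: list[float]) -> dict[str, float]:
    """Point-wise comparison of simulation output against referent data.

    Both series are assumed to be sampled at the same instants.
    """
    if len(simulated) != len(referent) or len(simulated) < 2:
        raise ValueError("need two equally long series with at least 2 samples")
    diffs = [s - r for s, r in zip(simulated, referent)]
    return {
        "mean_error": mean(diffs),      # bias of the simulation
        "std_error": stdev(diffs),      # spread about the mean difference
        "rmse": math.sqrt(mean(d * d for d in diffs)),
        "max_abs_error": max(abs(d) for d in diffs),
    }


def accepted(metrics: dict[str, float], rmse_tolerance: float) -> bool:
    # Hypothetical acceptance criterion: the RMSE must stay within a
    # tolerance fixed beforehand in the V&V plan.
    return metrics["rmse"] <= rmse_tolerance


# Hypothetical example: simulated vs measured braking deceleration (m/s^2).
sim = [6.1, 6.4, 6.8, 7.0, 7.1]
ref = [6.0, 6.5, 6.7, 7.2, 7.0]
m = validation_metrics(sim, ref)
print(m, accepted(m, rmse_tolerance=0.2))
```

Reporting bias and spread separately, rather than a single score, helps distinguish a systematic offset (a calibration issue) from random scatter.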

Data
- The quality of the data used both for developing and for running the simulation is an important aspect of the overall credibility and validation of the simulation models.
- The quality of the data should consider:
  - Source data (real world or other source)
  - Accuracy, precision, and uncertainty
  - Traceability of data from its source

Key questions:
- Is the quality of the data used to develop the model adequate or acceptable?
- Is the quality of the data used to set up and run the model adequate or acceptable?

Errors and Uncertainties & Result Robustness
- Identification of errors and quantification of uncertainty are fundamental to establishing the credibility of the M&S process:
  - Identification and classification of sources of uncertainty (e.g. aleatory vs. epistemic) in the input variables and parameters
  - The numerical errors in model implementation mechanisms (e.g. computational/math models)
  - Propagation of the uncertainty to simulation outputs (e.g. probabilistic analysis, evidence theory, fuzzy logic), including comprehensive sampling (e.g. Monte Carlo sampling)
- Documenting the extent of sensitivity analyses ensures an understanding of how thoroughly the sensitivities were investigated and of the stability of the simulation results to input perturbations.
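Monte Carlo propagation of input uncertainty can be sketched with the Python standard library alone. The stopping-distance model, the input distributions and the sample size are hypothetical, and for simplicity every input is treated as aleatory (a random variable); epistemic uncertainty would need separate treatment, e.g. intervals or nested sampling.

```python
import random
import statistics


def stopping_distance(v: float, decel: float, reaction: float) -> float:
    """Hypothetical model: reaction distance plus braking distance (m)."""
    return v * reaction + v * v / (2.0 * decel)


def monte_carlo(n: int = 10_000, seed: int = 1) -> list[float]:
    """Sample the uncertain inputs and return n output samples."""
    rng = random.Random(seed)  # fixed seed so the study is reproducible
    out = []
    for _ in range(n):
        v = rng.gauss(20.0, 0.5)         # speed, m/s (hypothetical spread)
        decel = rng.uniform(6.0, 8.0)    # deceleration, m/s^2 (hypothetical range)
        reaction = rng.gauss(0.5, 0.05)  # reaction time, s (hypothetical spread)
        out.append(stopping_distance(v, decel, reaction))
    return out


samples = monte_carlo()
q = statistics.quantiles(samples, n=100)  # 99 cut points; q[94] is the 95th percentile
print(f"mean={statistics.mean(samples):.1f} m, "
      f"stdev={statistics.stdev(samples):.1f} m, "
      f"95th pct={q[94]:.1f} m")
```

The output distribution, not a single run, is what would be compared against acceptance criteria; the seed and sample size belong in the V&V documentation so the study can be reproduced.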

Backup slides

Supporting activities - Experience and Expertise
- Organizational level:
  - Processes, procedures and standards in place to identify, obtain, maintain and retain the necessary level of skills, knowledge and experience to be able to perform M&S activities in a sufficiently credible manner.
- Individual level:
  - Minimum criteria, such as the education received, the number of years of experience (both with M&S techniques in general and in relation to specific applications), the number and complexity of projects and applications involved in, the level of involvement and responsibilities in these projects, etc.

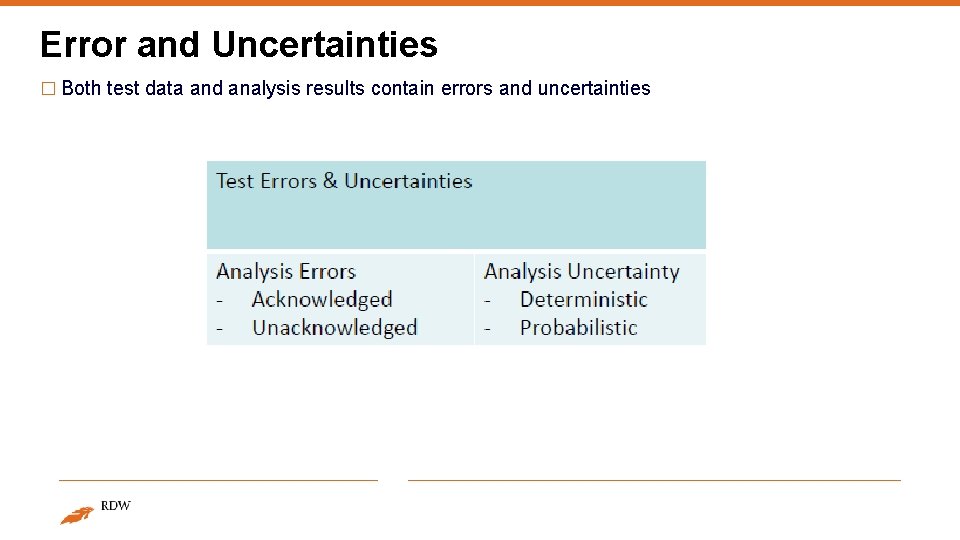

Errors and Uncertainties
- Both test data and analysis results contain errors and uncertainties.

Example of Analysis Errors

Acknowledged or unacknowledged errors?
- Acknowledged errors
  - Physical approximation error: check assumptions and simplifications
    - Physical modelling error
    - Geometry modelling error
  - Computer round-off error: usually known and typically small
  - Iterative convergence error: usually known and typically small
  - Discretization error
    - Spatial discretization error: check convergence through mesh refinement (Grid Convergence Index)
    - Temporal discretization error: check convergence through smaller time steps
- Unacknowledged errors
  - Computer programming error: addressed by code verification
  - Usage error: addressed by calculation (or solution) verification
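For the spatial and temporal discretization error, the Grid Convergence Index (GCI) check can be sketched as below, following Roache's three-grid procedure with a constant refinement ratio r. The inputs are synthetic values generated from f = 1 + 0.1 h² with h = 1, 2, 4, so the observed order should come out at 2 and the extrapolated value at 1; for time-step studies, h is simply the step size. The function name is hypothetical.

```python
import math


def grid_convergence(f1: float, f2: float, f3: float, r: float, fs: float = 1.25):
    """Observed order, Richardson-extrapolated value and fine-grid GCI.

    f1, f2, f3: a scalar result on the fine, medium and coarse grid (or time
    step), with a constant refinement ratio r > 1 between successive levels.
    fs: factor of safety; 1.25 is the value commonly used with three grids.
    """
    e32, e21 = f3 - f2, f2 - f1
    if e32 == 0 or e21 == 0 or e32 / e21 <= 0:
        raise ValueError("solutions are not converging monotonically")
    p = math.log(e32 / e21) / math.log(r)                # observed order of accuracy
    f_exact = f1 + (f1 - f2) / (r ** p - 1)              # Richardson extrapolation
    gci_fine = fs * abs((f2 - f1) / f1) / (r ** p - 1)   # relative error band (fraction)
    return p, f_exact, gci_fine


# Synthetic results from f = 1 + 0.1 h^2 on grids h = 1 (fine), 2, 4 (coarse).
p, f_exact, gci = grid_convergence(1.1, 1.4, 2.6, r=2.0)
print(f"p = {p:.2f}, extrapolated = {f_exact:.3f}, GCI = {100 * gci:.1f} %")  # p ≈ 2, extrapolated ≈ 1
```

Requiring monotonic convergence before computing p avoids reporting a meaningless order when the three solutions oscillate.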

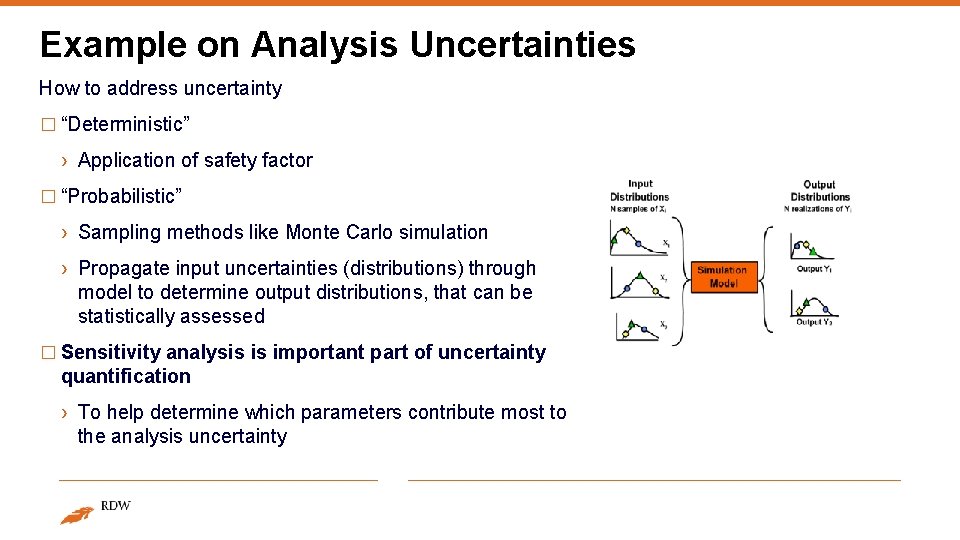

Example of Analysis Uncertainties

How to address uncertainty:
- "Deterministic"
  - Application of safety factors
- "Probabilistic"
  - Sampling methods such as Monte Carlo simulation
  - Propagate input uncertainties (distributions) through the model to determine output distributions that can be assessed statistically
- Sensitivity analysis is an important part of uncertainty quantification
  - It helps determine which parameters contribute most to the analysis uncertainty
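A one-at-a-time (OAT) sensitivity analysis is one simple way to see which parameters contribute most to the output. The sketch uses a hypothetical stopping-distance model; the ±10 % perturbation, the normalised central-difference coefficient and the function name `oat_sensitivity` are assumptions, and OAT ignores parameter interactions that variance-based methods would capture.

```python
def stopping_distance(v: float, decel: float, reaction: float) -> float:
    """Hypothetical model used only for illustration (m)."""
    return v * reaction + v * v / (2.0 * decel)


def oat_sensitivity(model, nominal: dict[str, float],
                    rel_step: float = 0.10) -> list[tuple[str, float]]:
    """One-at-a-time sensitivity: relative output change per relative input change.

    Each parameter is perturbed by +/- rel_step around its nominal value while
    the others stay fixed; a central difference gives a normalised
    (dimensionless) coefficient. Results are sorted by magnitude, largest first.
    """
    y0 = model(**nominal)
    coeffs = []
    for name, x0 in nominal.items():
        up = dict(nominal, **{name: x0 * (1 + rel_step)})
        down = dict(nominal, **{name: x0 * (1 - rel_step)})
        dy = model(**up) - model(**down)
        coeffs.append((name, (dy / y0) / (2 * rel_step)))
    return sorted(coeffs, key=lambda item: abs(item[1]), reverse=True)


nominal = {"v": 20.0, "decel": 7.0, "reaction": 0.5}
for name, s in oat_sensitivity(stopping_distance, nominal):
    print(f"{name:9s} {s:+.2f}")
```

The sign of each coefficient shows the direction of the effect (here a higher deceleration shortens the distance), and the ranking indicates where better input data would reduce the analysis uncertainty most.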